目录

LangChain Experssion Language简介

Querying a SQL DB:根据用户的问题写SQL检索数据库

LangChain Experssion Language简介

LangChain Experssion Language 简称LCEL,感觉就是为了节省代码量,让程序猿们更好地搭建基于大语言模型的应用,而在LangChain框架中整了新的语法来搭建prompt+LLM的chain。来,大家直接看官网链接:LangChain Expression Language (LCEL) | 🦜️🔗 Langchain。

本文的例子主要来自官网给出的Cookbook(Cookbook | 🦜️🔗 Langchain)的示例。所谓Cookbook,那当然是不会厨艺的人每次做菜之前的必读物,我觉得这个官网的Cookbook不仅仅是关于如何使用LCEL来做大语言模型的应用了,就是给大家枚举了一下Langchain本身该怎么的几大使用方法。本人自己理解看了一遍代码,如果有问题的话欢迎来评论。为了让大家看着不累,这个CookBook的示例我分了两篇,分别是《LangChain Experssion Language之CookBook(一/二)》,快来学习吧。

另外,如果你运行代码发现报错是需要api key那就是代码里模型定义和加载的时候请加上api key的参数,记得申请key哦。

✅ gpt系列,需要参数openai_api_key,申请地址:https://platform.openai.com/api-keys

✅ anthropic也就是前几天发的Claude系列,需要参数anthropic_api_key,申请地址:App unavailable \ Anthropic

记得Create new secret key以后需要把你的key在别的地方存一下,因为不会再能展示给你看了。

CookBook示例大赏

Prompt + LLM:正经本分事儿

最最最基础案例,那当然是怎么把Prompt和LLM串起来了啦,Langchain给出了他们的框架流程大致如下:

下面直接上代码,第一个PromptTemplate + LLM:

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

prompt = ChatPromptTemplate.from_template("tell me a joke about {foo}")

# 这里一般是需要你有个openai的api key哦,需要申请一下。

model = ChatOpenAI()

# 定义好chain

chain = prompt | model

# invoke你的chain吧那就

chain.invoke({"foo": "bears"})

# 返回的结果如下:

# AIMessage(content="Why don't bears wear shoes?\n\nBecause they have bear feet!", additional_kwargs={}, example=False)

# 一些可以进行的骚操作

利用停用词,截取LLM返回的结果,第一个stop词之前的内容

chain = prompt | model.bind(stop=["\n"])

chain.invoke({"foo": "bears"})

# AIMessage(content='Why did the bear never wear shoes?', additional_kwargs={}, example=False)另外,你还可以通过functions加入函数调用的附加信息:

functions = [

{

"name": "joke",

"description": "A joke",

"parameters": {

"type": "object",

"properties": {

"setup": {"type": "string", "description": "The setup for the joke"},

"punchline": {

"type": "string",

"description": "The punchline for the joke",

},

},

"required": ["setup", "punchline"],

},

}

]

chain = prompt | model.bind(function_call={"name": "joke"}, functions=functions)

chain.invoke({"foo": "bears"}, config={})

# 返回结果

# AIMessage(content='', additional_kwargs={'function_call': {'name': 'joke', 'arguments': '{\n "setup": "Why don\'t bears wear shoes?",\n "punchline": "Because they have bear feet!"\n}'}}, example=False)第二个例子加上了output parser:

from langchain_core.output_parsers import StrOutputParser

chain = prompt | model | StrOutputParser()

chain.invoke({"foo": "bears"})

# 返回结果直接是字符串信息

# "Why don't bears wear shoes?\n\nBecause they have bear feet!"

# 如果限定返回的内容且利用jsonoutputfunctionsparser

from langchain.output_parsers.openai_functions import JsonOutputFunctionsParser

chain = (

prompt

| model.bind(function_call={"name": "joke"}, functions=functions)

| JsonOutputFunctionsParser()

)

chain.invoke({"foo": "bears"})

# 结果直接返回了

# {'setup': "Why don't bears like fast food?",

# 'punchline': "Because they can't catch it!"}

# 还可以在JsonOutputFunctionsParser()中限定要返回的key

from langchain.output_parsers.openai_functions import JsonKeyOutputFunctionsParser

chain = (

prompt

| model.bind(function_call={"name": "joke"}, functions=functions)

| JsonKeyOutputFunctionsParser(key_name="setup")

)

chain.invoke({"foo": "bears"})

# 返回结果

# "Why don't bears wear shoes?"进一步简化输入的形式:

from langchain_core.runnables import RunnableParallel, RunnablePassthrough

map_ = RunnableParallel(foo=RunnablePassthrough())

chain = (

map_

| prompt

| model.bind(function_call={"name": "joke"}, functions=functions)

| JsonKeyOutputFunctionsParser(key_name="setup")

)

chain.invoke("bears")

# 返回

# "Why don't bears wear shoes?"

# 或者直接在chain中传参数

chain = (

{"foo": RunnablePassthrough()}

| prompt

| model.bind(function_call={"name": "joke"}, functions=functions)

| JsonKeyOutputFunctionsParser(key_name="setup")

)

chain.invoke("bears")

# 返回

# "Why don't bears like fast food?"RAG:检索的时候用上用户自己的数据吧

RAG的全称是:retrieval-augmented generation,就是在构建chain的时候,第一环用的是检索用户自己数据后得到的结果来作为LLM的context输入。那首先用户的数据呢,是要存起来的,示例代码里用的是OpenAI的embedding,结合Facebook的FAISS来进行相似内容的检索,请看:

# 在这里进行用户数据embedding的存储

vectorstore = FAISS.from_texts(

["harrison worked at kensho"], embedding=OpenAIEmbeddings()

)

# 初始化一个存好embedding的检索器

retriever = vectorstore.as_retriever()

template = """Answer the question based only on the following context:

{context}

Question: {question}

"""

prompt = ChatPromptTemplate.from_template(template)

model = ChatOpenAI()

# 作为chain的第一环,input环节

chain = (

{"context": retriever, "question": RunnablePassthrough()}

| prompt

| model

| StrOutputParser()

)

chain.invoke("where did harrison work?")

# 返回

# 'Harrison worked at Kensho.'

# 或者你还可以把retriever自己加入到chainchain里面

template = """Answer the question based only on the following context:

{context}

Question: {question}

Answer in the following language: {language}

"""

prompt = ChatPromptTemplate.from_template(template)

chain = (

{

"context": itemgetter("question") | retriever,

"question": itemgetter("question"),

"language": itemgetter("language"),

}

| prompt

| model

| StrOutputParser()

)

chain.invoke({"question": "where did harrison work", "language": "italian"})

# 返回

# 'Harrison ha lavorato a Kensho.'另外,检索也可能发生在跟用户的历史对话记录中,以下示例比较长,逐一分析看看,首先咱们的chain是

conversational_qa_chain = _inputs | _context | ANSWER_PROMPT | ChatOpenAI()

这里的_inputs是一个chain的结果,即利用CONDENSE_QUESTION_PROMPT,根据聊天记录和随之的问题,将问题改写成一个独立的问题。

这里的_context 是另一个chain的结果,在_combine_documents里检索standalone_question得到结果

最后用ANSWER_PROMPT,来得到问题的最终答案。

from langchain.prompts.prompt import PromptTemplate

_template = """Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question, in its original language.

Chat History:

{chat_history}

Follow Up Input: {question}

Standalone question:"""

CONDENSE_QUESTION_PROMPT = PromptTemplate.from_template(_template)

template = """Answer the question based only on the following context:

{context}

Question: {question}

"""

ANSWER_PROMPT = ChatPromptTemplate.from_template(template)

DEFAULT_DOCUMENT_PROMPT = PromptTemplate.from_template(template="{page_content}")

def _combine_documents(

docs, document_prompt=DEFAULT_DOCUMENT_PROMPT, document_separator="\n\n"

):

doc_strings = [format_document(doc, document_prompt) for doc in docs]

return document_separator.join(doc_strings)

# chain的输入

_inputs = RunnableParallel(

standalone_question=RunnablePassthrough.assign(

chat_history=lambda x: get_buffer_string(x["chat_history"])

)

| CONDENSE_QUESTION_PROMPT

| ChatOpenAI(temperature=0)

| StrOutputParser(),

)

_context = {

"context": itemgetter("standalone_question") | retriever | _combine_documents,

"question": lambda x: x["standalone_question"],

}

conversational_qa_chain = _inputs | _context | ANSWER_PROMPT | ChatOpenAI()

conversational_qa_chain.invoke(

{

"question": "where did harrison work?",

"chat_history": [],

}

)

# 返回

# AIMessage(content='Harrison was employed at Kensho.')

# 加载human message和ai message后

conversational_qa_chain.invoke(

{

"question": "where did he work?",

"chat_history": [

HumanMessage(content="Who wrote this notebook?"),

AIMessage(content="Harrison"),

],

}

)

# 返回

# AIMessage(content='Harrison worked at Kensho.')然后吧,你还可以把咱们之前的问题和答案存起来作为memory的样子进行加载:

memory = ConversationBufferMemory(

return_messages=True, output_key="answer", input_key="question"

)

# First we add a step to load memory

# This adds a "memory" key to the input object

loaded_memory = RunnablePassthrough.assign(

chat_history=RunnableLambda(memory.load_memory_variables) | itemgetter("history"),

)

# Now we calculate the standalone question

standalone_question = {

"standalone_question": {

"question": lambda x: x["question"],

"chat_history": lambda x: get_buffer_string(x["chat_history"]),

}

| CONDENSE_QUESTION_PROMPT

| ChatOpenAI(temperature=0)

| StrOutputParser(),

}

# Now we retrieve the documents

retrieved_documents = {

"docs": itemgetter("standalone_question") | retriever,

"question": lambda x: x["standalone_question"],

}

# Now we construct the inputs for the final prompt

final_inputs = {

"context": lambda x: _combine_documents(x["docs"]),

"question": itemgetter("question"),

}

# And finally, we do the part that returns the answers

answer = {

"answer": final_inputs | ANSWER_PROMPT | ChatOpenAI(),

"docs": itemgetter("docs"),

}

# And now we put it all together!

final_chain = loaded_memory | standalone_question | retrieved_documents | answer

# 然后我们来看一下

inputs = {"question": "where did harrison work?"}

result = final_chain.invoke(inputs)

# result打印结果如下

# {'answer': AIMessage(content='Harrison was employed at Kensho.'),

# 'docs': [Document(page_content='harrison worked at kensho')]}

#另外, memory需要手动存储一下

# Note that the memory does not save automatically

# This will be improved in the future

# For now you need to save it yourself

memory.save_context(inputs, {"answer": result["answer"].content})

memory.load_memory_variables({})

# 看看load了啥

# {'history': [HumanMessage(content='where did harrison work?'),

# AIMessage(content='Harrison was employed at Kensho.')]}

# inputs = {"question": "but where did he really work?"}

result = final_chain.invoke(inputs)

# result中返回了docs对应的内容

# {'answer': AIMessage(content='Harrison actually worked at Kensho.'),

# 'docs': [Document(page_content='harrison worked at kensho')]}Multiple chains:玩转chain的叠加合并

LCEL得优越性在这样的例子里好像就展现出来了,用一行简单的代码就可以在一个chain里加入另一个chain,比如下面这个示例:

from operator import itemgetter

from langchain_core.output_parsers import StrOutputParser

from langchain_core.prompts import ChatPromptTemplate

from langchain_openai import ChatOpenAI

prompt1 = ChatPromptTemplate.from_template("what is the city {person} is from?")

prompt2 = ChatPromptTemplate.from_template(

"what country is the city {city} in? respond in {language}"

)

model = ChatOpenAI()

chain1 = prompt1 | model | StrOutputParser()

# 可以看到chain2的第一个环节是chain1给的输入

chain2 = (

{"city": chain1, "language": itemgetter("language")}

| prompt2

| model

| StrOutputParser()

)

chain2.invoke({"person": "obama", "language": "spanish"})然后我们最后再来看一个复杂的chain套chain,在这个案例里,每个chain有点像是一个agent,几条chain组成了一个辩论活动。有正方arguments_for,也有反方arguments_against,有出题的人planner,还有集成各个想法的final_responder。下面来看下具体代码:

planner = (

ChatPromptTemplate.from_template("Generate an argument about: {input}")

| ChatOpenAI()

| StrOutputParser()

| {"base_response": RunnablePassthrough()}

)

arguments_for = (

ChatPromptTemplate.from_template(

"List the pros or positive aspects of {base_response}"

)

| ChatOpenAI()

| StrOutputParser()

)

arguments_against = (

ChatPromptTemplate.from_template(

"List the cons or negative aspects of {base_response}"

)

| ChatOpenAI()

| StrOutputParser()

)

final_responder = (

ChatPromptTemplate.from_messages(

[

("ai", "{original_response}"),

("human", "Pros:\n{results_1}\n\nCons:\n{results_2}"),

("system", "Generate a final response given the critique"),

]

)

| ChatOpenAI()

| StrOutputParser()

)

chain = (

planner

| {

"results_1": arguments_for,

"results_2": arguments_against,

"original_response": itemgetter("base_response"),

}

| final_responder

)是不是有那味儿了。

Querying a SQL DB:根据用户的问题写SQL检索数据库

虽然之前一直知道现在的大语言模型可以写代码写SQL这种了,但是第一次发现,所以是可以直接去检索数据库的吗?瞅一眼Langchain官网的示例:

from langchain_core.prompts import ChatPromptTemplate

# 首先,这是定义了一个prompt,基于数据库表的schema来根据用户问题写一个sql查询

template = """Based on the table schema below, write a SQL query that would answer the user's question:

{schema}

Question: {question}

SQL Query:"""

prompt = ChatPromptTemplate.from_template(template)

# 定义要查询的数据库

db = SQLDatabase.from_uri("sqlite:///./Chinook.db")

# 取数据库的表信息

def get_schema(_):

return db.get_table_info()

# 运行用户查询

def run_query(query):

return db.run(query)

from langchain_core.output_parsers import StrOutputParser

from langchain_core.runnables import RunnablePassthrough

from langchain_openai import ChatOpenAI

model = ChatOpenAI()

# 定义chain,分别是查询表结构信息,然后放到prompt里,取出LLM出来的SQLResult部分,解析结果

sql_response = (

RunnablePassthrough.assign(schema=get_schema)

| prompt

| model.bind(stop=["\nSQLResult:"])

| StrOutputParser()

)

sql_response.invoke({"question": "How many employees are there?"})

# 这一步得到了咱们的查询语句

# 'SELECT COUNT(*) FROM Employee'

# 这个template,则是需要在基于数据库表的schema来根据用户问题写好sql查询

# 并且查询完结果后,回答用户的问题

template = """Based on the table schema below, question, sql query, and sql response, write a natural language response:

{schema}

Question: {question}

SQL Query: {query}

SQL Response: {response}"""

prompt_response = ChatPromptTemplate.from_template(template)

full_chain = (

RunnablePassthrough.assign(query=sql_response).assign(

schema=get_schema,

response=lambda x: db.run(x["query"]),

)

| prompt_response

| model

)

full_chain.invoke({"question": "How many employees are there?"})

# 返回结果直接就是语句化的答案了:

# AIMessage(content='There are 8 employees.', additional_kwargs={}, example=False)是不是有,一些工业问答场景下,比如查询库存数据库或者其他的数据库,可以拿来用用的感觉?

Agents:终于看到万众瞩目的Agent例子了

看了一遍代码,哦,原来Agent是咱们Langchain里一个个需要定义的tool。本节的例子是做一个询问天气的agent,代码如下:

from langchain import hub

from langchain.agents import AgentExecutor, tool

from langchain.agents.output_parsers import XMLAgentOutputParser

from langchain_community.chat_models import ChatAnthropic

# 首先当然是定义好咱们的model啦

model = ChatAnthropic(model="claude-2")

# 这里咱们的tool叫做search,自定义,示例代码直接返回了“32degree”

@tool

def search(query: str) -> str:

"""Search things about current events."""

return "32 degrees"

tool_list = [search]

# Get the prompt to use - you can modify this!

prompt = hub.pull("hwchase17/xml-agent-convo")

#这里将中间步骤的action.tool和action.tool_input,以及observation结果截取出来返回给了模型

# Logic for going from intermediate steps to a string to pass into model

# This is pretty tied to the prompt

def convert_intermediate_steps(intermediate_steps):

log = ""

for action, observation in intermediate_steps:

log += (

f"<tool>{action.tool}</tool><tool_input>{action.tool_input}"

f"</tool_input><observation>{observation}</observation>"

)

return log

# Logic for converting tools to string to go in prompt

def convert_tools(tools):

return "\n".join([f"{tool.name}: {tool.description}" for tool in tools])

agent = (

{

"input": lambda x: x["input"],

"agent_scratchpad": lambda x: convert_intermediate_steps(

x["intermediate_steps"]

),

}

| prompt.partial(tools=convert_tools(tool_list))

| model.bind(stop=["</tool_input>", "</final_answer>"])

| XMLAgentOutputParser()

)

agent_executor = AgentExecutor(agent=agent, tools=tool_list, verbose=True)

agent_executor.invoke({"input": "whats the weather in New york?"})

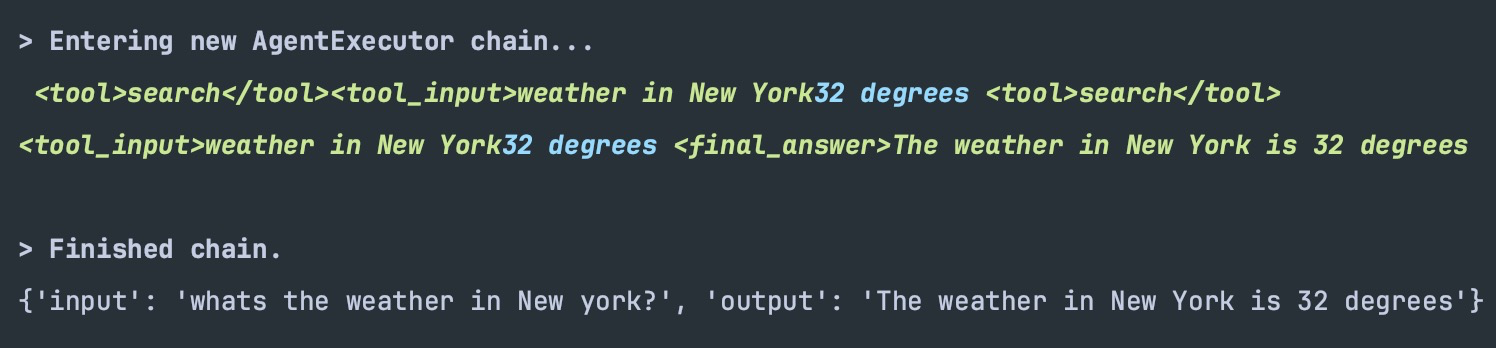

执行后的结果是:

这里我们先来看看prompt长得什么样子,直接打印一下prompt,print(agent.get_prompts()),得到:

[ChatPromptTemplate(input_variables=['agent_scratchpad', 'input'], partial_variables={'chat_history': '', 'tools': 'search: search(query: str) -> str - Search things about current events.'}, messages=[HumanMessagePromptTemplate(prompt=PromptTemplate(input_variables=['agent_scratchpad', 'chat_history', 'input', 'tools'], template="You are a helpful assistant. Help the user answer any questions.\n\nYou have access to the following tools:\n\n{tools}\n\nIn order to use a tool, you can use <tool></tool> and <tool_input></tool_input> tags. You will then get back a response in the form <observation></observation>\nFor example, if you have a tool called 'search' that could run a google search, in order to search for the weather in SF you would respond:\n\n<tool>search</tool><tool_input>weather in SF</tool_input>\n<observation>64 degrees</observation>\n\nWhen you are done, respond with a final answer between <final_answer></final_answer>. For example:\n\n<final_answer>The weather in SF is 64 degrees</final_answer>\n\nBegin!\n\nPrevious Conversation:\n{chat_history}\n\nQuestion: {input}\n{agent_scratchpad}"))])]

# prompt template长下面这样子

"""You are a helpful assistant. Help the user answer any questions.

You have access to the following tools:

{tools}

In order to use a tool, you can use <tool></tool> and <tool_input></tool_input> tags. You will then get back a response in the form <observation></observation>

For example, if you have a tool called 'search' that could run a google search, in order to search for the weather in SF you would respond:

<tool>search</tool><tool_input>weather in SF</tool_input>

<observation>64 degrees</observation>

When you are done, respond with a final answer between <final_answer></final_answer>. For example:

<final_answer>The weather in SF is 64 degrees</final_answer>

Begin!

Previous Conversation:

{chat_history}

Question: {input}

{agent_scratchpad}"""然后回头看了一眼intermediate_steps里的action和observation。

action 打印出来:

tool='search' tool_input='weather in New york' log=' <tool>search</tool><tool_input>weather in New york'

observation打印出来:

32 degrees有没有那种感觉,通过tool得到了结果,然后把里面的重点信息组织组织得到最后的答案。不过这里只用了一个Tool,后面我多定义了一个tool并且把其加入了tool_list里,不过返回的结果没变。目测可能是因为在prompt里已经说明了search工具,且可以用search来查询SF的天气:

For example, if you have a tool called 'search' that could run a google search, in order to search for the weather in SF you would respond:

<tool>search</tool><tool_input>weather in SF</tool_input>

<observation>64 degrees</observation>回头看到Agents的例子再更新!

856

856

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?