Basic assignment: training your own assistant's self-identity

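1. Environment setup

Reuse the environment configured in the previous lesson. Locally, run conda activate ILMC_hzy to activate the ILMC_hzy environment.

Install the dependencies

cd /data/hzy/InterLM_Camp && mkdir 4_XTuner && cd 4_XTuner

git clone -b v0.1.17 https://github.com/InternLM/xtuner # fetch the XTuner source code

cd xtuner && pip install -e '.[all]' # install from inside the cloned repository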
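After the install it can help to confirm which XTuner version ended up in the active environment. The sketch below uses only the standard library. The helper name `installed_version` is an assumption made for illustration and is not part of XTuner.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    # Prints None when XTuner is missing from the active environment.
    print("xtuner:", installed_version("xtuner"))
```

If this prints None, the editable install most likely ran in a different conda environment than the one currently active.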

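2. Dataset preparation

cd /data/hzy/InterLM_Camp/4_XTuner

mkdir fine_tune # directory for the fine-tuning code

mkdir fine_tune/data # directory for the fine-tuning data

cd fine_tune/data

touch generate_data.py # create the dataset generation script in the data directory

python generate_data.py

# This script repeats the greeting a set number of times
# and saves the result as a JSON file to use as fine-tuning data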

import json

# Name of the user the assistant belongs to
name = '哪啊哪啊安'

# Number of times the sample is repeated
n = 10000

# Initialize the data in OpenAI message format
data = [
    {
        "messages": [
            {
                "role": "user",
                "content": "请做一下自我介绍"
            },
            {
                "role": "assistant",
                "content": "我是{}的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦".format(name)
            }
        ]
    }
]

# Append the initial conversation to the data list n times
for i in range(n):
    data.append(data[0])

# Write the data list to a file named 'personal_assistant.json'
with open('personal_assistant.json', 'w', encoding='utf-8') as f:
    # json.dump writes the data to the file in JSON format
    # ensure_ascii=False keeps Chinese characters readable
    # indent=4 pretty-prints the file so it is easier to read
    json.dump(data, f, ensure_ascii=False, indent=4)

3. Model preparation
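Before downloading the model, the dataset written by generate_data.py can be sanity-checked. The sketch below verifies that each sample follows the `messages` / `role` / `content` layout used in that script. The function name `check_messages_dataset` and the accepted set of roles are assumptions for illustration, not an XTuner API.

```python
import json
from typing import Any, List


def check_messages_dataset(data: Any) -> List[str]:
    """Return a list of problems found in an OpenAI-style messages dataset."""
    if not isinstance(data, list):
        return ["top level must be a list of samples"]
    problems = []
    for idx, sample in enumerate(data):
        messages = sample.get("messages") if isinstance(sample, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"sample {idx}: missing or empty 'messages' list")
            continue
        for turn in messages:
            if not isinstance(turn, dict):
                problems.append(f"sample {idx}: each turn must be a dict")
                continue
            # Assumed role set; adjust if the training format allows others.
            if turn.get("role") not in {"system", "user", "assistant"}:
                problems.append(f"sample {idx}: unexpected role {turn.get('role')!r}")
            if not isinstance(turn.get("content"), str):
                problems.append(f"sample {idx}: 'content' must be a string")
    return problems


if __name__ == "__main__":
    # In-memory stand-in for the content of personal_assistant.json.
    sample = {"messages": [{"role": "user", "content": "请做一下自我介绍"},
                           {"role": "assistant", "content": "我是小助手"}]}
    data = json.loads(json.dumps([sample] * 3, ensure_ascii=False))
    print(len(data), check_messages_dataset(data))
```

To check the real file, load it with `json.load` and pass the result to the same function. An empty list means no problems were found.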

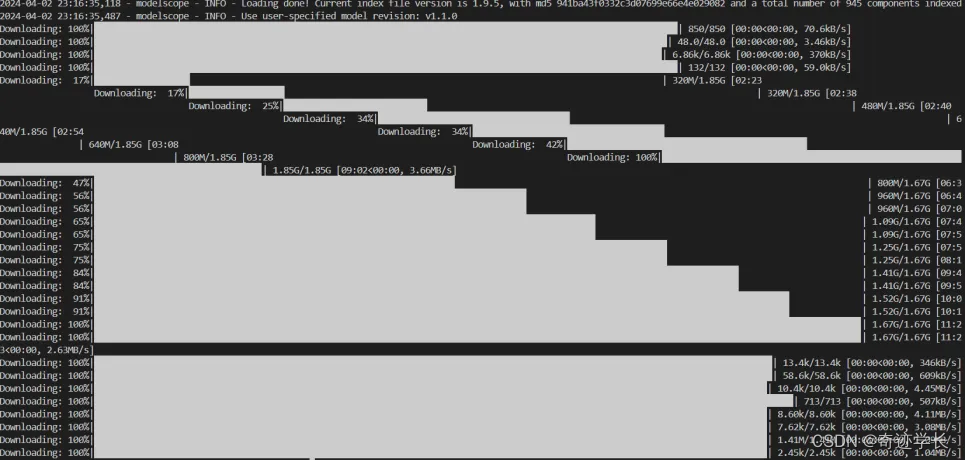

Download the model and create symbolic links with the following commands

cd .. && mkdir model && cd model # directory for the 1.8B model, under fine_tune

touch model_download.py

python model_download.py

ln -s /data/hzy/InterLM_Camp/models/Shanghai_AI_Laboratory/internlm2-chat-1_8b/* .

The content of model_download.py:

import os
from modelscope.hub.snapshot_download import snapshot_download

# save_dir is the local directory the model is saved to
save_dir = "/data/hzy/InterLM_Camp/models"
os.makedirs(save_dir, exist_ok=True)

snapshot_download("Shanghai_AI_Laboratory/internlm2-chat-1_8b",
                  cache_dir=save_dir,
                  revision='v1.1.0')
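The `ln -s .../* .` step depends on the current working directory, so the links end up wherever the command happens to be run. As a rough Python equivalent that takes the destination explicitly, the sketch below symlinks every non-hidden entry of the downloaded model folder into a target folder. The helper name `link_model_files` is an assumption for illustration.

```python
import os
from pathlib import Path
from typing import List


def link_model_files(src_dir: str, dst_dir: str) -> List[str]:
    """Symlink each non-hidden entry of src_dir into dst_dir; return the linked names."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    linked = []
    for entry in sorted(src.iterdir()):
        if entry.name.startswith("."):
            continue  # mirror the shell glob `*`, which skips dotfiles
        target = dst / entry.name
        if target.exists() or target.is_symlink():
            continue  # keep whatever is already there
        os.symlink(entry.resolve(), target)
        linked.append(entry.name)
    return linked


if __name__ == "__main__":
    src = "/data/hzy/InterLM_Camp/models/Shanghai_AI_Laboratory/internlm2-chat-1_8b"
    if os.path.isdir(src):
        print(link_model_files(src, "/data/hzy/InterLM_Camp/4_XTuner/fine_tune/model"))
```

Running it twice is harmless, because entries that already exist in the destination are skipped.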

4. Fine-tuning config preparation

Search the built-in configs by model name with xtuner list-cfg -p internlm2_1_8b, which returns:

==========================CONFIGS===========================
PATTERN: internlm2_1_8b
-------------------------------
internlm2_1_8b_full_alpaca_e3
internlm2_1_8b_qlora_alpaca_e3
=============================================================

Choose the config that matches the fine-tuning method

Copy the config file

cd .. && mkdir config # directory for config files

cd config

# Use XTuner's copy-cfg command to copy the config file into the config directory

xtuner copy-cfg internlm2_1_8b_qlora_alpaca_e3 .

Edit the config. The main changes are:

pretrained_model_name_or_path = '/data/hzy/InterLM_Camp/4_XTuner/fine_tune/model'
alpaca_en_path = '/data/hzy/InterLM_Camp/4_XTuner/fine_tune/data/personal_assistant.json'
max_length = 1024
max_epochs = 2
save_steps = 300
save_total_limit = 3
evaluation_freq = 300
SYSTEM = ''
evaluation_inputs = ['请你介绍一下你自己', '你是谁', '你是我的小助手吗']

alpaca_en = dict(
    type=process_hf_dataset,
    dataset=dict(type=load_dataset, path='json', data_files=dict(train=alpaca_en_path)),

5. Model fine-tuning / training

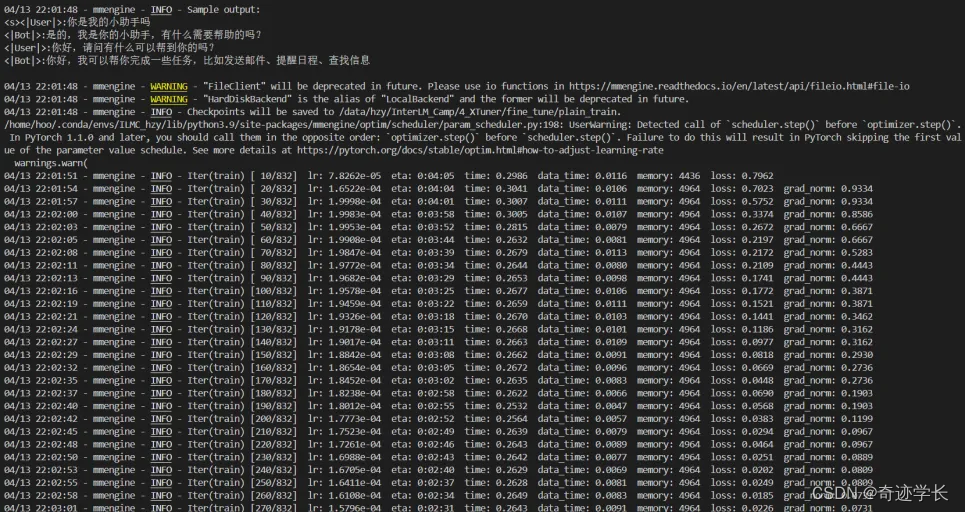

不使用DeepSpeed:由于数据集较小,且使用的最小的 1.8B 模型,加之本地算力资源充足,因此其实不必使用DeepSpeed进行显存压缩与训练加速。使用下面代码进行微调,并将模型保存在当前目录下的plain_train文件夹中

cd /data/hzy/InterLM_Camp/4_XTuner/fine_tune

xtuner train config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir ./plain_train

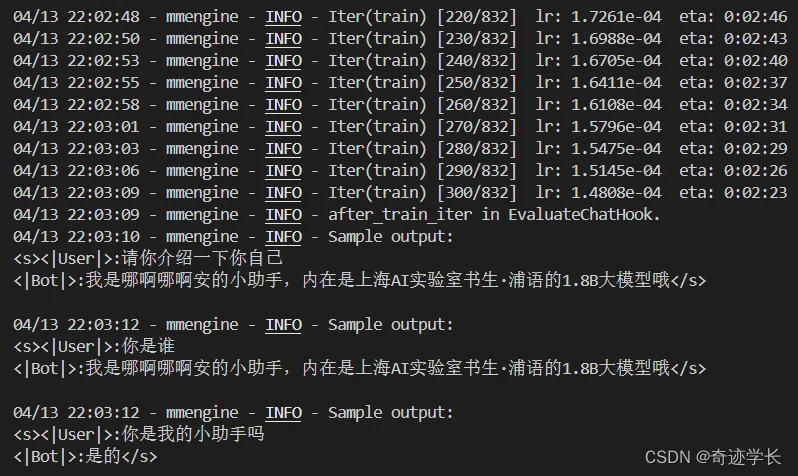

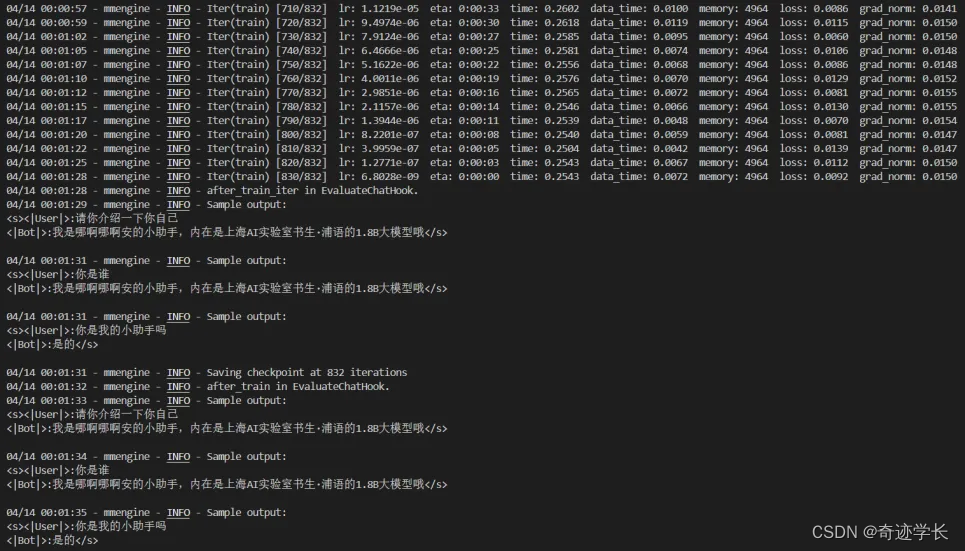

训练结果如下,确实观察到 300 轮已经有较好效果,而过度训练(600 轮)时出现了过拟合

使用DeepSpeed:但我对这个技术很感兴趣,因此又额外用zero2完成了一次微调。

cd /data/hzy/InterLM_Camp/4_XTuner/fine_tune

xtuner train config/internlm2_1_8b_qlora_alpaca_e3_copy.py --work-dir ./zero2_train训练时终端输出与上面几乎相同,但是并没有出现过度训练导致的过拟合,这点很有意思

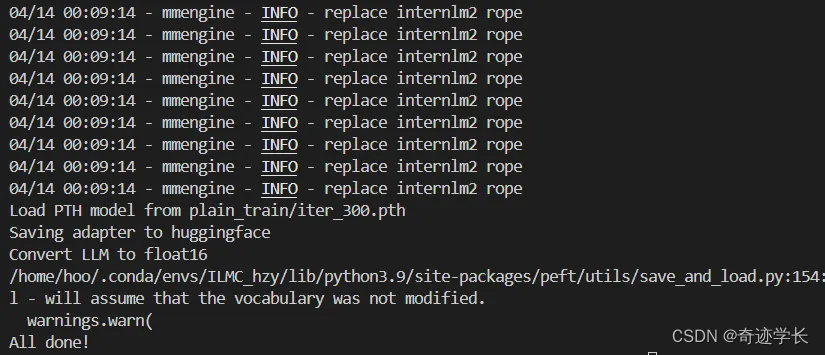

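6. Model conversion

Conversion: turn the PyTorch .pth checkpoint into the Hugging Face bin format (that is, the LoRA adapter files), which makes it easier to upload to Hugging Face and deploy a Hugging Face demo. Here I chose the better-performing 300-iteration checkpoint.

mkdir huggingface # directory for the converted Hugging Face format model

# Model conversion

# xtuner convert pth_to_hf ${CONFIG_PATH} ${PTH_PATH} ${SAVE_PATH}

xtuner convert pth_to_hf ./config/internlm2_1_8b_qlora_alpaca_e3_copy.py ./plain_train/iter_300.pth ./huggingface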
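When choosing which checkpoint to convert, it helps to see what the training run actually saved. The sketch below lists entries named `iter_<N>.pth` in a work directory, sorted by iteration number. The naming pattern is taken from the `iter_300.pth` path above, and the helper name `list_checkpoints` is an assumption for illustration.

```python
import re
from pathlib import Path
from typing import List, Tuple


def list_checkpoints(work_dir: str) -> List[Tuple[int, str]]:
    """Return (iteration, name) pairs for iter_<N>.pth entries, sorted by iteration."""
    found = []
    for entry in Path(work_dir).glob("iter_*.pth"):
        match = re.fullmatch(r"iter_(\d+)\.pth", entry.name)
        if match:
            found.append((int(match.group(1)), entry.name))
    # Sort numerically so that iter_1200 comes after iter_600, not before iter_300.
    return sorted(found)


if __name__ == "__main__":
    for iteration, name in list_checkpoints("./plain_train"):
        print(iteration, name)
```

With `save_total_limit = 3` in the config, only the most recent few checkpoints are kept, so the list may be shorter than the number of save steps.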

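7. Model merging

A model fine-tuned with LoRA or QLoRA is not a complete model. It is an extra layer (an adapter) that has to be combined with the original model before it can be used normally.

A fully fine-tuned model (full) needs no merging, because full fine-tuning updates the weights of the original model rather than training a new adapter.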
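To make the idea of combining an adapter with the original model concrete, here is a toy sketch under the standard LoRA formulation, where the merged weight is W' = W + (alpha / r) · B · A. The matrices, the scaling values, and the helper names are illustrative assumptions. Conceptually, the merge step applies this kind of update to the real model weights.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product of a (m x k) and b (k x n)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w: Matrix, a: Matrix, b: Matrix, alpha: float, r: int) -> Matrix:
    """Return W + (alpha / r) * (B @ A), the merged weight matrix."""
    delta = matmul(b, a)  # low-rank update, same shape as W
    scale = alpha / r
    return [[w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]  # frozen base weight (2 x 2)
    a = [[0.5, -1.0]]             # LoRA A matrix (r x in), here r = 1
    b = [[1.0], [2.0]]            # LoRA B matrix (out x r)
    print(merge_lora(w, a, b, alpha=2.0, r=1))
```

After merging, the adapter is no longer needed at inference time, since a single matrix gives the same output as the base weight plus the scaled low-rank path.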

mkdir final_model # directory for the merged model

# Work around a thread conflict bug

export MKL_SERVICE_FORCE_INTEL=1

# Merge the model

# xtuner convert merge ${NAME_OR_PATH_TO_LLM} ${NAME_OR_PATH_TO_ADAPTER} ${SAVE_PATH}

xtuner convert merge ./model ./huggingface ./final_model

Model testing

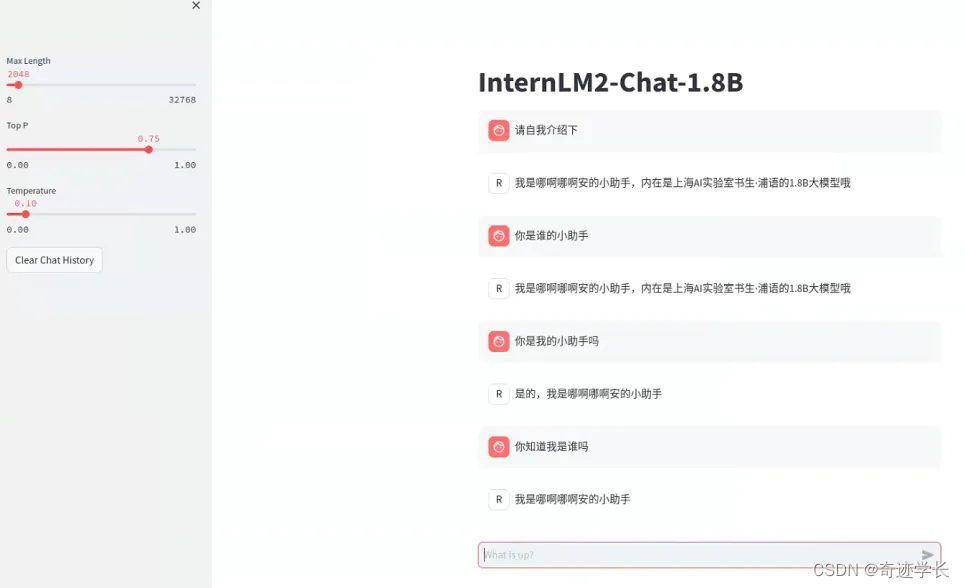

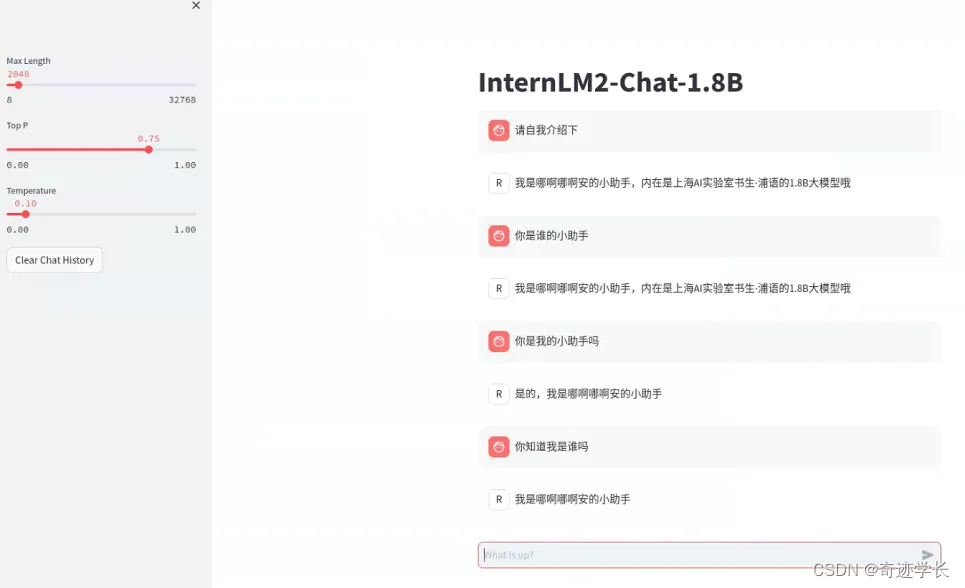

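Chat test: run the two commands below one at a time to call the fine-tuned model and the original model, and compare the results. As the comparison screenshots show, the fine-tuning has a clear effect: the model now has a much clearer sense of its own identity.

# Fine-tuned model

xtuner chat ./final_model --prompt-template internlm2_chat

# Original model

xtuner chat ./model --prompt-template internlm2_chat

Model web deployment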

mkdir ./web_demo && cd web_demo # directory for the InternLM project

# Fetch the InternLM source

git clone https://github.com/InternLM/InternLM.git

cd InternLM

# Replace web_demo/InternLM/chat/web_demo.py with the content below, taking care to update the model path

# Run streamlit to deploy to the web

streamlit run chat/web_demo.py --server.address 127.0.0.1 --server.port 6006

"""This script refers to the dialogue example of streamlit, the interactive
generation code of chatglm2 and transformers.

We mainly modified part of the code logic to adapt to the
generation of our model.
Please refer to these links below for more information:
    1. streamlit chat example:
        https://docs.streamlit.io/knowledge-base/tutorials/build-conversational-apps
    2. chatglm2:
        https://github.com/THUDM/ChatGLM2-6B
    3. transformers:
        https://github.com/huggingface/transformers
Please run with the command `streamlit run path/to/web_demo.py
    --server.address=0.0.0.0 --server.port 7860`.
Using `python path/to/web_demo.py` may cause unknown problems.
"""
# isort: skip_file
import copy
import warnings
from dataclasses import asdict, dataclass
from typing import Callable, List, Optional

import streamlit as st
import torch
from torch import nn
from transformers.generation.utils import (LogitsProcessorList,
                                           StoppingCriteriaList)
from transformers.utils import logging

from transformers import AutoTokenizer, AutoModelForCausalLM  # isort: skip

logger = logging.get_logger(__name__)

@dataclass
class GenerationConfig:
    # this config is used for chat to provide more diversity
    max_length: int = 2048
    top_p: float = 0.75
    temperature: float = 0.1
    do_sample: bool = True
    repetition_penalty: float = 1.000

@torch.inference_mode()
def generate_interactive(
    model,
    tokenizer,
    prompt,
    generation_config: Optional[GenerationConfig] = None,
    logits_processor: Optional[LogitsProcessorList] = None,
    stopping_criteria: Optional[StoppingCriteriaList] = None,
    prefix_allowed_tokens_fn: Optional[Callable[[int, torch.Tensor],
                                                List[int]]] = None,
    additional_eos_token_id: Optional[int] = None,
    **kwargs,
):
    # Tokenize the prompt and move the tensors to the GPU
    inputs = tokenizer([prompt], padding=True, return_tensors='pt')
    input_length = len(inputs['input_ids'][0])
    for k, v in inputs.items():
        inputs[k] = v.cuda()
    input_ids = inputs['input_ids']
    _, input_ids_seq_length = input_ids.shape[0], input_ids.shape[-1]
    if generation_config is None:
        generation_config = model.generation_config
    generation_config = copy.deepcopy(generation_config)
    model_kwargs = generation_config.update(**kwargs)
    bos_token_id, eos_token_id = (  # noqa: F841  # pylint: disable=W0612
        generation_config.bos_token_id,
        generation_config.eos_token_id,
    )
    if isinstance(eos_token_id, int):
        eos_token_id = [eos_token_id]
    if additional_eos_token_id is not None:
        eos_token_id.append(additional_eos_token_id)
    has_default_max_length = kwargs.get(
        'max_length') is None and generation_config.max_length is not None
    if has_default_max_length and generation_config.max_new_tokens is None:
        warnings.warn(
            f"Using 'max_length''s default ({repr(generation_config.max_length)}) "
            'to control the generation length. '
            'This behaviour is deprecated and will be removed from the '
            'config in v5 of Transformers -- we'
            ' recommend using `max_new_tokens` to control the maximum '
            'length of the generation.',
            UserWarning,
        )
    elif generation_config.max_new_tokens is not None:
        generation_config.max_length = generation_config.max_new_tokens + \
            input_ids_seq_length
        if not has_default_max_length:
            # logger.warning takes only the message; a warning category
            # passed as an extra argument would break log formatting
            logger.warning(
                f"Both 'max_new_tokens' (={generation_config.max_new_tokens}) "
                f"and 'max_length'(={generation_config.max_length}) seem to "
                "have been set. 'max_new_tokens' will take precedence. "
                'Please refer to the documentation for more information. '
                '(https://huggingface.co/docs/transformers/main/'
                'en/main_classes/text_generation)')

    if input_ids_seq_length >= generation_config.max_length:
        input_ids_string = 'input_ids'
        logger.warning(
            f"Input length of {input_ids_string} is {input_ids_seq_length}, "
            f"but 'max_length' is set to {generation_config.max_length}. "
            'This can lead to unexpected behavior. You should consider'
            " increasing 'max_new_tokens'.")

    # 2. Set generation parameters if not already defined
    logits_processor = logits_processor if logits_processor is not None \
        else LogitsProcessorList()
    stopping_criteria = stopping_criteria if stopping_criteria is not None \
        else StoppingCriteriaList()

    logits_processor = model._get_logits_processor(
        generation_config=generation_config,
        input_ids_seq_length=input_ids_seq_length,
        encoder_input_ids=input_ids,
        prefix_allowed_tokens_fn=prefix_allowed_tokens_fn,
        logits_processor=logits_processor,
    )

    stopping_criteria = model._get_stopping_criteria(
        generation_config=generation_config,
        stopping_criteria=stopping_criteria)
    logits_warper = model._get_logits_warper(generation_config)

    unfinished_sequences = input_ids.new(input_ids.shape[0]).fill_(1)
    scores = None
    while True:
        model_inputs = model.prepare_inputs_for_generation(
            input_ids, **model_kwargs)
        # forward pass to get next token
        outputs = model(
            **model_inputs,
            return_dict=True,
            output_attentions=False,
            output_hidden_states=False,
        )

        next_token_logits = outputs.logits[:, -1, :]

        # pre-process distribution
        next_token_scores = logits_processor(input_ids, next_token_logits)
        next_token_scores = logits_warper(input_ids, next_token_scores)

        # sample
        probs = nn.functional.softmax(next_token_scores, dim=-1)
        if generation_config.do_sample:
            next_tokens = torch.multinomial(probs, num_samples=1).squeeze(1)
        else:
            next_tokens = torch.argmax(probs, dim=-1)

        # update generated ids, model inputs, and length for next step
        input_ids = torch.cat([input_ids, next_tokens[:, None]], dim=-1)
        model_kwargs = model._update_model_kwargs_for_generation(
            outputs, model_kwargs, is_encoder_decoder=False)
        unfinished_sequences = unfinished_sequences.mul(
            (min(next_tokens != i for i in eos_token_id)).long())

        # decode only the newly generated tokens, dropping a trailing EOS
        output_token_ids = input_ids[0].cpu().tolist()
        output_token_ids = output_token_ids[input_length:]
        for each_eos_token_id in eos_token_id:
            if output_token_ids[-1] == each_eos_token_id:
                output_token_ids = output_token_ids[:-1]
        response = tokenizer.decode(output_token_ids)

        yield response
        # stop when each sentence is finished
        # or if we exceed the maximum length
        if unfinished_sequences.max() == 0 or stopping_criteria(
                input_ids, scores):
            break

def on_btn_click():
    del st.session_state.messages


@st.cache_resource
def load_model():
    model = (AutoModelForCausalLM.from_pretrained(
        '/data/hzy/InterLM_Camp/4_XTuner/fine_tune/final_model',
        trust_remote_code=True).to(torch.bfloat16).cuda())
    tokenizer = AutoTokenizer.from_pretrained(
        '/data/hzy/InterLM_Camp/4_XTuner/fine_tune/final_model',
        trust_remote_code=True)
    return model, tokenizer

def prepare_generation_config():
    with st.sidebar:
        max_length = st.slider('Max Length',
                               min_value=8,
                               max_value=32768,
                               value=2048)
        top_p = st.slider('Top P', 0.0, 1.0, 0.75, step=0.01)
        temperature = st.slider('Temperature', 0.0, 1.0, 0.1, step=0.01)
        st.button('Clear Chat History', on_click=on_btn_click)

    generation_config = GenerationConfig(max_length=max_length,
                                         top_p=top_p,
                                         temperature=temperature)

    return generation_config

user_prompt = '<|im_start|>user\n{user}<|im_end|>\n'
robot_prompt = '<|im_start|>assistant\n{robot}<|im_end|>\n'
cur_query_prompt = ('<|im_start|>user\n{user}<|im_end|>\n'
                    '<|im_start|>assistant\n')


def combine_history(prompt):
    messages = st.session_state.messages
    meta_instruction = ('')
    total_prompt = f"<s><|im_start|>system\n{meta_instruction}<|im_end|>\n"
    for message in messages:
        cur_content = message['content']
        if message['role'] == 'user':
            cur_prompt = user_prompt.format(user=cur_content)
        elif message['role'] == 'robot':
            cur_prompt = robot_prompt.format(robot=cur_content)
        else:
            raise RuntimeError
        total_prompt += cur_prompt
    total_prompt = total_prompt + cur_query_prompt.format(user=prompt)
    return total_prompt

def main():
    # torch.cuda.empty_cache()
    print('load model begin.')
    model, tokenizer = load_model()
    print('load model end.')

    st.title('InternLM2-Chat-1.8B')

    generation_config = prepare_generation_config()

    # Initialize chat history
    if 'messages' not in st.session_state:
        st.session_state.messages = []

    # Display chat messages from history on app rerun
    for message in st.session_state.messages:
        with st.chat_message(message['role'], avatar=message.get('avatar')):
            st.markdown(message['content'])

    # Accept user input
    if prompt := st.chat_input('What is up?'):
        # Display user message in chat message container
        with st.chat_message('user'):
            st.markdown(prompt)
        real_prompt = combine_history(prompt)
        # Add user message to chat history
        st.session_state.messages.append({
            'role': 'user',
            'content': prompt,
        })

        with st.chat_message('robot'):
            message_placeholder = st.empty()
            for cur_response in generate_interactive(
                    model=model,
                    tokenizer=tokenizer,
                    prompt=real_prompt,
                    additional_eos_token_id=92542,
                    **asdict(generation_config),
            ):
                # Display robot response in chat message container
                message_placeholder.markdown(cur_response + '▌')
            message_placeholder.markdown(cur_response)
        # Add robot response to chat history
        st.session_state.messages.append({
            'role': 'robot',
            'content': cur_response,  # pylint: disable=undefined-loop-variable
        })
        torch.cuda.empty_cache()


if __name__ == '__main__':
    main()
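The prompt assembly in combine_history can be checked without Streamlit or a GPU. The sketch below reproduces the same template logic as a pure function that takes the chat history as an argument. The function name `build_prompt` and the upper-case constant names are assumptions for illustration; the template strings and the 'user' / 'robot' role names follow the script above.

```python
from typing import Dict, List

USER_PROMPT = "<|im_start|>user\n{user}<|im_end|>\n"
ROBOT_PROMPT = "<|im_start|>assistant\n{robot}<|im_end|>\n"
QUERY_PROMPT = "<|im_start|>user\n{user}<|im_end|>\n<|im_start|>assistant\n"


def build_prompt(history: List[Dict[str, str]], query: str, system: str = "") -> str:
    """Assemble the full prompt from earlier turns plus the new user query."""
    prompt = f"<s><|im_start|>system\n{system}<|im_end|>\n"
    for message in history:
        if message["role"] == "user":
            prompt += USER_PROMPT.format(user=message["content"])
        elif message["role"] == "robot":
            prompt += ROBOT_PROMPT.format(robot=message["content"])
        else:
            raise ValueError(f"unknown role: {message['role']!r}")
    # The prompt ends with an open assistant turn for the model to complete.
    return prompt + QUERY_PROMPT.format(user=query)


if __name__ == "__main__":
    history = [{"role": "user", "content": "你是谁"},
               {"role": "robot", "content": "我是小助手"}]
    print(build_prompt(history, "请做一下自我介绍"))
```

Printing the assembled prompt for a short history is a quick way to confirm that each turn is closed with `<|im_end|>` and that the final assistant turn is left open.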
