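Recommended reading first:

Pytorch | yolov3 principles and code explained in detail (Part 1)

https://blog.csdn.net/qq_24739717/article/details/92399359

Pytorch | yolov3 principles and code explained in detail (Part 2)

https://blog.csdn.net/qq_24739717/article/details/96705055

Continued from "Pytorch | yolov3 principles and code explained in detail (Part 2)".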

2.3 Viewing training metrics and evaluating (train.py, part 3)

The complete code for this section is as follows:

# Excerpt from train.py; model, dataloader, optimizer, logger, metrics, class_names,
# valid_path, device and opt are set up earlier in the script.
import datetime
import time

import torch
from torch.autograd import Variable
from terminaltables import AsciiTable

from test import evaluate

for epoch in range(opt.epochs):
    model.train()
    start_time = time.time()
    # print("len(dataloader):\n", len(dataloader))
    for batch_i, (_, imgs, targets) in enumerate(dataloader):
        batches_done = len(dataloader) * epoch + batch_i

        imgs = Variable(imgs.to(device))
        targets = Variable(targets.to(device), requires_grad=False)
        # print("targets.shape:\n", targets.shape)  # debug output

        loss, outputs = model(imgs, targets)
        loss.backward()

        # Accumulate gradients; step only every opt.gradient_accumulations batches
        if batches_done % opt.gradient_accumulations == 0:
            optimizer.step()
            optimizer.zero_grad()

        # ----------------
        #   Log progress
        # ----------------

        log_str = "\n---- [Epoch %d/%d, Batch %d/%d] ----\n" % (epoch, opt.epochs, batch_i, len(dataloader))
        metric_table = [["Metrics", *[f"YOLO Layer {i}" for i in range(len(model.yolo_layers))]]]

        # Log metrics at each YOLO layer (one format string per metric)
        formats = {m: "%.6f" for m in metrics}
        formats["grid_size"] = "%2d"
        formats["cls_acc"] = "%.2f%%"
        for metric in metrics:
            row_metrics = [formats[metric] % yolo.metrics.get(metric, 0) for yolo in model.yolo_layers]
            metric_table += [[metric, *row_metrics]]

        # Tensorboard logging
        tensorboard_log = []
        for j, yolo in enumerate(model.yolo_layers):
            for name, value in yolo.metrics.items():
                if name != "grid_size":
                    tensorboard_log += [(f"{name}_{j+1}", value)]
        tensorboard_log += [("loss", loss.item())]
        logger.list_of_scalars_summary(tensorboard_log, batches_done)

        log_str += AsciiTable(metric_table).table
        log_str += f"\nTotal loss {loss.item()}"

        # Determine approximate time left for epoch
        epoch_batches_left = len(dataloader) - (batch_i + 1)
        time_left = datetime.timedelta(seconds=epoch_batches_left * (time.time() - start_time) / (batch_i + 1))
        log_str += f"\n---- ETA {time_left}"

        print(log_str)

        model.seen += imgs.size(0)

    if epoch % opt.evaluation_interval == 0:
        print("\n---- Evaluating Model ----")
        # Evaluate the model on the validation set
        precision, recall, AP, f1, ap_class = evaluate(
            model,
            path=valid_path,
            iou_thres=0.5,
            conf_thres=0.5,
            nms_thres=0.5,
            img_size=opt.img_size,
            batch_size=8,
        )
        evaluation_metrics = [
            ("val_precision", precision.mean()),
            ("val_recall", recall.mean()),
            ("val_mAP", AP.mean()),
            ("val_f1", f1.mean()),
        ]
        logger.list_of_scalars_summary(evaluation_metrics, epoch)

        # Print class APs and mAP
        ap_table = [["Index", "Class name", "AP"]]
        for i, c in enumerate(ap_class):
            ap_table += [[c, class_names[c], "%.5f" % AP[i]]]
        print(AsciiTable(ap_table).table)
        print(f"---- mAP {AP.mean()}")

    if epoch % opt.checkpoint_interval == 0:
        torch.save(model.state_dict(), f"checkpoints/yolov3_ckpt_{epoch}.pth")

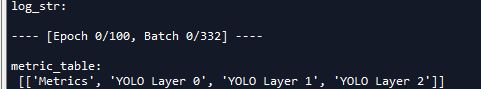
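A bare `if batches_done % opt.gradient_accumulations:` (without `== 0`) is true whenever the remainder is non-zero, so the optimizer would step on almost every batch and skip exactly the multiples of the accumulation count, which is the opposite of gradient accumulation. The pure-Python sketch below (the helper `step_batches` is hypothetical, not part of the repository) only lists the batch indices at which `optimizer.step()` would run under each form of the condition.

```python
def step_batches(num_batches, accumulations, fixed=True):
    """Batch indices at which optimizer.step() would run.

    fixed=True:  `if batches_done % accumulations == 0:`
    fixed=False: `if batches_done % accumulations:` (the bare-modulo form)
    """
    steps = []
    for batches_done in range(num_batches):
        remainder = batches_done % accumulations
        should_step = remainder == 0 if fixed else remainder != 0
        if should_step:
            steps.append(batches_done)
    return steps


print(step_batches(8, 4, fixed=True))   # [0, 4]
print(step_batches(8, 4, fixed=False))  # [1, 2, 3, 5, 6, 7]
```

With an accumulation count of 4, the `== 0` form steps once every 4 batches, while the bare modulo steps on 6 of the first 8 batches.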

2.3.1 Displaying training progress

log_str = "\n---- [Epoch %d/%d, Batch %d/%d] ----\n" % (epoch, opt.epochs, batch_i, len(dataloader))

metric_table = [["Metrics", *[f"YOLO Layer {i}" for i in range(len(model.yolo_layers))]]]

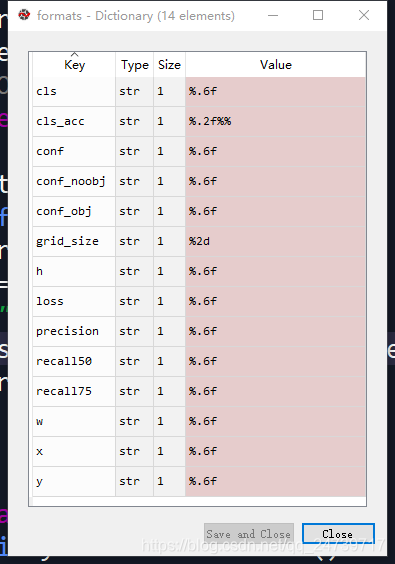

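2.3.2 Getting the metrics

Each metric type is taken from metrics, and its print format string is stored in formats.

A for loop then collects each metric of the 3 YOLO layers, such as grid_size, loss and the box coordinates, and stores them in the metric_table list.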

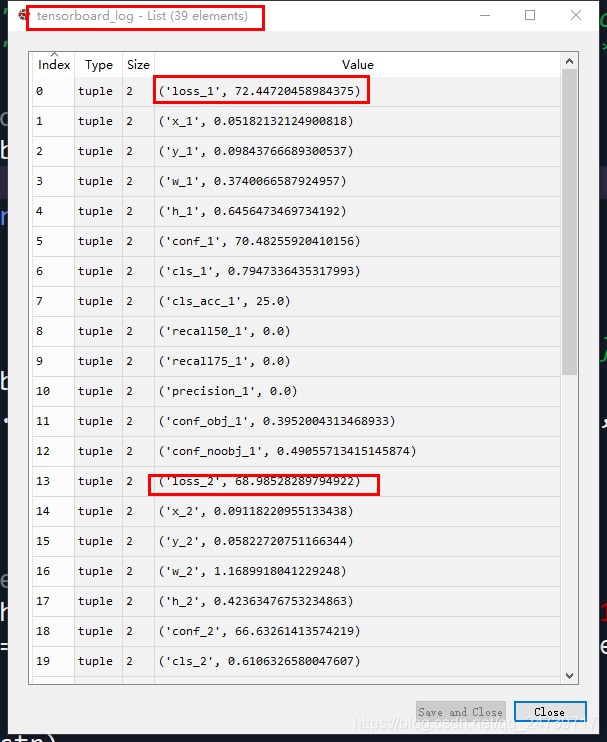

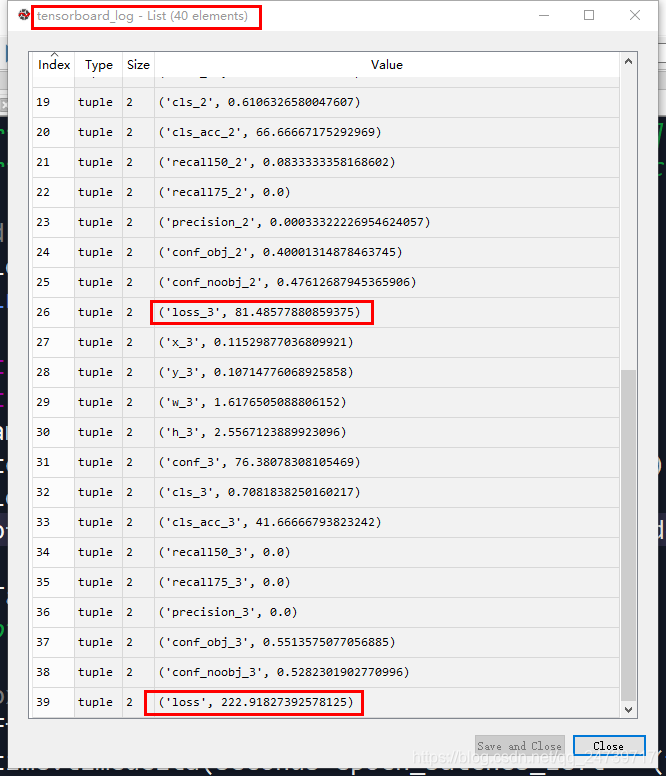
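To see what the formatting step produces, here is a minimal sketch that builds `metric_table` the same way, assuming plain dicts stand in for `yolo.metrics` of the three YOLO layers. The metric names and values are hypothetical, and the table is printed with simple string joins instead of `AsciiTable`.

```python
# Hypothetical stand-ins for yolo.metrics of the three YOLO layers
layer_metrics = [
    {"grid_size": 13, "loss": 1.234567, "cls_acc": 87.5},
    {"grid_size": 26, "loss": 0.5, "cls_acc": 90.0},
    {"grid_size": 52, "loss": 0.25},  # missing key -> .get(..., 0)
]
metrics = ["grid_size", "loss", "cls_acc"]  # shortened, hypothetical list

# One format string per metric, with the same overrides as train.py
formats = {m: "%.6f" for m in metrics}
formats["grid_size"] = "%2d"
formats["cls_acc"] = "%.2f%%"

metric_table = [["Metrics", *[f"YOLO Layer {i}" for i in range(len(layer_metrics))]]]
for metric in metrics:
    row = [formats[metric] % layer.get(metric, 0) for layer in layer_metrics]
    metric_table.append([metric, *row])

for row in metric_table:
    print(" | ".join(f"{cell:>12}" for cell in row))
```

Every cell ends up as a preformatted string, which is why a missing metric simply shows as `0.00%` or `0.000000` rather than raising an error.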

The following code then parses the parameters of each YOLO layer and puts them into the tensorboard_log list.

tensorboard_log = []
for j, yolo in enumerate(model.yolo_layers):
    for name, metric in yolo.metrics.items():
        if name != "grid_size":
            tensorboard_log += [(f"{name}_{j+1}", metric)]
tensorboard_log += [("loss", loss.item())]
logger.list_of_scalars_summary(tensorboard_log, batches_done)
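The flattening loop can be isolated as a small function. This is a sketch with a hypothetical name (`flatten_layer_metrics`) and made-up values; it produces the same kind of `(tag, value)` pairs that are passed to `logger.list_of_scalars_summary`, with YOLO layers numbered from 1 and `grid_size` skipped.

```python
def flatten_layer_metrics(layer_metrics, total_loss):
    """Turn per-layer metric dicts into (tag, value) pairs for logging."""
    log = []
    for j, layer in enumerate(layer_metrics):
        for name, value in layer.items():
            if name != "grid_size":  # grid size is not a training signal
                log.append((f"{name}_{j + 1}", value))  # layers numbered from 1
    log.append(("loss", total_loss))  # overall loss goes in untagged
    return log


pairs = flatten_layer_metrics([{"grid_size": 13, "loss": 1.0}, {"grid_size": 26, "loss": 0.5}], 1.5)
print(pairs)  # [('loss_1', 1.0), ('loss_2', 0.5), ('loss', 1.5)]
```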

Finally, log_str is printed, showing the metric table, the total loss and the estimated time left for the epoch.
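The ETA line in `log_str` is a simple extrapolation: the average time per finished batch multiplied by the number of batches left in the epoch. A minimal sketch of that arithmetic, with a hypothetical function name `estimate_eta`:

```python
import datetime


def estimate_eta(num_batches, batch_i, elapsed_seconds):
    """Average time per finished batch times batches left, as in train.py."""
    batches_left = num_batches - (batch_i + 1)
    return datetime.timedelta(seconds=batches_left * elapsed_seconds / (batch_i + 1))


# 100 batches, batch index 9 finished after 20 s -> 2 s per batch, 90 batches left
print(estimate_eta(100, 9, 20.0))  # 0:03:00
```

Because the average is recomputed every batch, the estimate is noisy during the first few batches and stabilizes as the epoch progresses.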

2.3.3 Evaluating training

precision, recall, AP, f1, ap_class = evaluate(
    model,
    path=valid_path,
    iou_thres=0.5,
    conf_thres=0.5,
    nms_thres=0.5,
    img_size=opt.img_size,
    batch_size=8,
)

The evaluate function computes all of these metrics. Its complete code is as follows:

# From test.py; ListDataset, xywh2xyxy, non_max_suppression, get_batch_statistics
# and ap_per_class are helpers from the repository's utils modules.
import numpy as np
import torch
import tqdm
from torch.autograd import Variable


def evaluate(model, path, iou_thres, conf_thres, nms_thres, img_size, batch_size):
    model.eval()

    # Get dataloader
    dataset = ListDataset(path, img_size=img_size, augment=False, multiscale=False)
    dataloader = torch.utils.data.DataLoader(
        dataset, batch_size=batch_size, shuffle=False, num_workers=1, collate_fn=dataset.collate_fn
    )

    Tensor = torch.cuda.FloatTensor if torch.cuda.is_available() else torch.FloatTensor

    labels = []
    sample_metrics = []  # List of tuples (TP, confs, pred)
    for batch_i, (_, imgs, targets) in enumerate(tqdm.tqdm(dataloader, desc="Detecting objects")):

        # Extract labels
        labels += targets[:, 1].tolist()
        # Rescale target
        targets[:, 2:] = xywh2xyxy(targets[:, 2:])
        targets[:, 2:] *= img_size

        imgs = Variable(imgs.type(Tensor), requires_grad=False)

        with torch.no_grad():
            outputs = model(imgs)
            outputs = non_max_suppression(outputs, conf_thres=conf_thres, nms_thres=nms_thres)

        sample_metrics += get_batch_statistics(outputs, targets, iou_threshold=iou_thres)

    # Concatenate sample statistics
    true_positives, pred_scores, pred_labels = [np.concatenate(x, 0) for x in list(zip(*sample_metrics))]
    precision, recall, AP, f1, ap_class = ap_per_class(true_positives, pred_scores, pred_labels, labels)

    return precision, recall, AP, f1, ap_class

The logic is straightforward: it loads the data and labels, uses the xywh2xyxy function to convert the target coordinates and rescales them to pixels, runs the model prediction inside with torch.no_grad(): to avoid wasting resources, and filters the outputs with non-maximum suppression.
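The target rescaling in evaluate can be checked by hand on a single box. The sketch below is a hypothetical plain-list re-implementation for one box (the repository's xywh2xyxy operates on tensors), assuming the box is normalized to 0~1 and img_size is 416:

```python
def xywh2xyxy_box(box):
    """Convert (x_center, y_center, w, h) to (x1, y1, x2, y2) for one box."""
    x, y, w, h = box
    return [x - w / 2, y - h / 2, x + w / 2, y + h / 2]


def rescale(box, img_size):
    """Scale normalized coordinates to pixels, like `targets[:, 2:] *= img_size`."""
    return [v * img_size for v in box]


box = rescale(xywh2xyxy_box([0.5, 0.5, 0.25, 0.5]), 416)
print(box)  # [156.0, 104.0, 260.0, 312.0]
```

The order matters only for readability here: converting first and scaling second gives the same result as the other way round, because both steps are linear.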

For basic PyTorch usage, see http://blog.sina.com.cn/s/blog_a99f842a0102y1e4.html

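"with torch.no_grad():"

To stop autograd from tracking history (and using memory for it), you can wrap a block of code in "with torch.no_grad():".

This is especially useful when evaluating a model: the model may have trainable parameters (with requires_grad=True), but none of their gradients are needed during evaluation.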
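A minimal check of this behaviour, assuming PyTorch is installed; a single `torch.nn.Linear` layer stands in for the model:

```python
import torch

layer = torch.nn.Linear(4, 2)  # its weight and bias have requires_grad=True
x = torch.randn(3, 4)

y_train = layer(x)
print(y_train.requires_grad)  # True: autograd records the graph

with torch.no_grad():
    y_eval = layer(x)
print(y_eval.requires_grad)  # False: no graph is built, so less memory is used
```

torch.no_grad() only disables gradient tracking. Switching layers such as BatchNorm and Dropout to inference behaviour is the job of model.eval(), which evaluate also calls at its start.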

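The core line of the code above is: sample_metrics += get_batch_statistics(outputs, targets, iou_threshold=iou_thres).

Evaluation mainly needs two things: 1. the ground-truth annotations of the samples, and 2. the model outputs.

1. Ground-truth annotations. A quick recap: voclabel.py generates the annotation files and saves them as xxxx.txt files. To avoid confusion, we call the content of each .txt file boxes; each row is stored as [class id, x, y, w, h]. The __getitem__ method of the ListDataset class reads these boxes, converts x, y, w, h (already normalized to 0~1) so that they are relative to the padded image (still normalized), and stores them as targets (targets = torch.zeros((len(boxes), 6)); targets[:, 1:] = boxes). During evaluation, to make the IoU easy to compute, the target coordinates are converted from x, y, w, h to xmin, ymin, xmax, ymax and multiplied by img_size to obtain pixel coordinates.

2. Model outputs. The model output has shape [batch_size, 10647, 5 + number of classes]. After non-maximum suppression, outputs becomes a list; according to the NMS docstring, it "Returns detections with shape: (x1, y1, x2, y2, object_conf, class_score, class_pred)". The list has length batch_size (8 here, as set in the evaluate call). The tensors in the list have different shapes because each image keeps a different number of boxes after NMS: tensor.shape[0] differs from image to image, but tensor.shape[1] is always 7, corresponding to (x1, y1, x2, y2, object_conf, class_score, class_pred). An image with no remaining detections gets None instead of a tensor, which is why get_batch_statistics skips None entries.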
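The number 10647 comes from the three detection scales. Assuming the standard YOLOv3 setup (416x416 input, strides 32, 16 and 8, three anchors per grid cell), the count can be reproduced as follows; each of these predictions carries 4 box values, 1 objectness score and one score per class, hence the last dimension 5 + number of classes. The function name is hypothetical.

```python
def num_predictions(img_size=416, strides=(32, 16, 8), anchors_per_scale=3):
    """Count YOLOv3 predictions: anchors_per_scale boxes per cell on each grid."""
    total = 0
    for stride in strides:
        grid = img_size // stride  # 13, 26, 52 for a 416x416 input
        total += anchors_per_scale * grid * grid
    return total


print(num_predictions(416))  # 10647 = 3 * (13*13 + 26*26 + 52*52)
```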


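The get_batch_statistics function computes the per-sample statistics of the test images (true positives, predicted scores and predicted labels). Its complete code is below and is easy to follow: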

# From the repository's utils; bbox_iou is another helper in the same module.
import numpy as np


def get_batch_statistics(outputs, targets, iou_threshold):
    """ Compute true positives, predicted scores and predicted labels per sample """
    batch_metrics = []
    for sample_i in range(len(outputs)):

        if outputs[sample_i] is None:
            continue

        output = outputs[sample_i]
        pred_boxes = output[:, :4]
        pred_scores = output[:, 4]
        pred_labels = output[:, -1]

        true_positives = np.zeros(pred_boxes.shape[0])

        annotations = targets[targets[:, 0] == sample_i][:, 1:]
        target_labels = annotations[:, 0] if len(annotations) else []
        if len(annotations):
            detected_boxes = []
            target_boxes = annotations[:, 1:]

            for pred_i, (pred_box, pred_label) in enumerate(zip(pred_boxes, pred_labels)):

                # If targets are found break
                if len(detected_boxes) == len(annotations):
                    break

                # Ignore if label is not one of the target labels
                if pred_label not in target_labels:
                    continue

                iou, box_index = bbox_iou(pred_box.unsqueeze(0), target_boxes).max(0)
                if iou >= iou_threshold and box_index not in detected_boxes:
                    true_positives[pred_i] = 1
                    detected_boxes += [box_index]
        batch_metrics.append([true_positives, pred_scores, pred_labels])
    return batch_metrics
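The matching logic can be traced on a toy example. This pure-Python sketch (the helpers `iou` and `true_positives_for_image` are hypothetical) follows the same rules for a single image: predictions are visited in the order they come out of NMS, a prediction whose label is not among the targets is skipped, each ground-truth box can be matched only once, and, as in the original code, the IoU is computed against all ground-truth boxes of the image rather than only those of the same class. Boxes are assumed to be (x1, y1, x2, y2) in pixels.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter + 1e-16)


def true_positives_for_image(preds, annotations, iou_threshold=0.5):
    """preds: [(x1, y1, x2, y2, label)]; annotations: [(label, x1, y1, x2, y2)]."""
    target_labels = [a[0] for a in annotations]
    tp = [0] * len(preds)
    detected = []  # indices of ground-truth boxes already matched
    for pred_i, pred in enumerate(preds):
        if len(detected) == len(annotations):
            break  # every target is matched; the rest stay false positives
        if pred[4] not in target_labels:
            continue  # label not present in this image
        ious = [iou(pred[:4], a[1:]) for a in annotations]
        best = max(range(len(ious)), key=ious.__getitem__)
        if ious[best] >= iou_threshold and best not in detected:
            tp[pred_i] = 1
            detected.append(best)
    return tp


annotations = [(1, 0, 0, 10, 10), (1, 20, 20, 30, 30)]
preds = [(0, 0, 10, 10, 1), (1, 1, 10, 10, 1), (20, 20, 30, 30, 1)]
print(true_positives_for_image(preds, annotations))  # [1, 0, 1]
```

The second prediction overlaps the first ground-truth box with IoU 0.81, but that box is already matched, so it counts as a false positive (a duplicate detection).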

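annotations = targets[targets[:, 0] == sample_i][:, 1:] selects the targets that belong to the current image. As explained in "Pytorch | yolov3 principles and code explained in detail (Part 2)", the collate_fn function gives each target the ID (index within the batch) of the image it belongs to.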
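The sample-index trick can be sketched without tensors. Assuming each image's targets are rows [0, class, x, y, w, h] as built in ListDataset, the hypothetical helpers below mimic what collate_fn does (write the image index into column 0 and stack all rows) and what the filter `targets[targets[:, 0] == sample_i][:, 1:]` returns for one image:

```python
def collate_targets(per_image_targets):
    """Write each image's index in the batch into column 0 and stack all rows."""
    batch = []
    for sample_i, rows in enumerate(per_image_targets):
        for row in rows:
            batch.append([float(sample_i), *row[1:]])
    return batch


def annotations_for(targets, sample_i):
    """Rows of one image with the index column dropped: [class, x, y, w, h]."""
    return [row[1:] for row in targets if row[0] == sample_i]


img0 = [[0, 14, 0.5, 0.5, 0.2, 0.4], [0, 8, 0.1, 0.2, 0.05, 0.1]]
img1 = [[0, 11, 0.3, 0.6, 0.3, 0.3]]
targets = collate_targets([img0, img1])
print([row[:2] for row in targets])  # [[0.0, 14], [0.0, 8], [1.0, 11]]
print(annotations_for(targets, 1))   # [[11, 0.3, 0.6, 0.3, 0.3]]
```

Stacking all boxes of a batch into one 2-D array keeps a single tensor even though images have different numbers of objects; column 0 is what lets each row be traced back to its image.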

precision, recall, AP, f1 and ap_class are computed by the ap_per_class function. Its complete code is as follows:

# From the repository's utils; compute_ap is another helper in the same module.
import numpy as np
import tqdm


def ap_per_class(tp, conf, pred_cls, target_cls):
    """ Compute the average precision, given the recall and precision curves.
    Source: https://github.com/rafaelpadilla/Object-Detection-Metrics.
    # Arguments
        tp:    True positives (list).
        conf:  Objectness value from 0-1 (list).
        pred_cls: Predicted object classes (list).
        target_cls: True object classes (list).
    # Returns
        The average precision as computed in py-faster-rcnn.
    """

    # Sort by objectness
    i = np.argsort(-conf)
    tp, conf, pred_cls = tp[i], conf[i], pred_cls[i]

    # Find unique classes
    unique_classes = np.unique(target_cls)

    # Create Precision-Recall curve and compute AP for each class
    ap, p, r = [], [], []
    for c in tqdm.tqdm(unique_classes, desc="Computing AP"):
        i = pred_cls == c
        n_gt = (target_cls == c).sum()  # Number of ground truth objects
        n_p = i.sum()  # Number of predicted objects

        if n_p == 0 and n_gt == 0:
            continue
        elif n_p == 0 or n_gt == 0:
            ap.append(0)
            r.append(0)
            p.append(0)
        else:
            # Accumulate FPs and TPs
            fpc = (1 - tp[i]).cumsum()
            tpc = (tp[i]).cumsum()

            # Recall
            recall_curve = tpc / (n_gt + 1e-16)
            r.append(recall_curve[-1])

            # Precision
            precision_curve = tpc / (tpc + fpc)
            p.append(precision_curve[-1])

            # AP from recall-precision curve
            ap.append(compute_ap(recall_curve, precision_curve))

    # Compute F1 score (harmonic mean of precision and recall)
    p, r, ap = np.array(p), np.array(r), np.array(ap)
    f1 = 2 * p * r / (p + r + 1e-16)

    return p, r, ap, f1, unique_classes.astype("int32")
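compute_ap itself is not listed in this post. Assuming it uses the all-point interpolation of the VOC evaluation code in py-faster-rcnn (which the docstring above points to), the per-class computation can be traced in pure Python. The helper names and the TP sequence below are hypothetical:

```python
def pr_curve(tp_sorted, n_gt):
    """Cumulative precision/recall for detections already sorted by confidence."""
    tpc = fpc = 0
    precision, recall = [], []
    for t in tp_sorted:
        tpc += t
        fpc += 1 - t
        recall.append(tpc / n_gt)
        precision.append(tpc / (tpc + fpc))
    return precision, recall


def compute_ap(recall, precision):
    """All-point interpolated AP (assumed VOC / py-faster-rcnn style)."""
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]
    # Precision envelope: make precision non-increasing from right to left
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum the rectangles wherever recall changes
    ap = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap


# 5 detections of one class, sorted by confidence; 4 ground-truth objects
precision, recall = pr_curve([1, 0, 1, 1, 0], n_gt=4)
p, r = precision[-1], recall[-1]
f1 = 2 * p * r / (p + r + 1e-16)
print(round(p, 4), round(r, 4), compute_ap(recall, precision), round(f1, 4))
# 0.6 0.75 0.625 0.6667
```

p and r are the values at the last detection, just as ap_per_class appends precision_curve[-1] and recall_curve[-1]; the unreached recall range from 0.75 to 1.0 contributes nothing to AP.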

Every opt.checkpoint_interval epochs, the model is saved:

if epoch % opt.checkpoint_interval == 0:
    torch.save(model.state_dict(), f"checkpoints/yolov3_ckpt_{epoch}.pth")

With that, the analysis of train.py is essentially complete.

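There is also a test.py script, but train.py already uses its evaluate function, so it is not analyzed separately. The remaining summary and the overall program flowchart are in the next part:

Pytorch | yolov3 principles and code explained in detail (Part 4)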
