import numpy as np

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(log_dir='./log')

flag = 0

if flag :

for x in range(100):

# 把x*2的数据加入标签y=2x的曲线

writer.add_scalar(tag='y=2x',scalar_value=x*2,global_step=x)

# 把2**x的数据加入标签y=pow(2,x)的曲线

writer.add_scalar(tag='y=pow(2,x)',scalar_value=2**x,global_step=x)

# 把x*sin(x)和x*cos(x)的数据加入data/scalar_group的标签组中,即

# 两个曲线绘制在一张图中

writer.add_scalars(tag='data/scalar_group',{'xsinx': x*np.sin(x),

'xcosx':x*np.cos(x)}, x)

示例代码:

import numpy as np

from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(log_dir='./log')

flag = 0

if flag :

for x in range(10):

data_1 = np.arange(1000)

data_2 = np.random.normal(size=1000)

writer.add_histogram("data1",data_1,x)

writer.add_histogram('data2',data_2,x)

from torchvision import datasets

import torchvision.transforms as transforms

from torch.utils.data.sampler import SubsetRandomSampler

# number of subprocesses to use for data loading

num_workers = 0

# 每批加载16张图片

batch_size = 16

# percentage of training set to use as validation

valid_size = 0.2

# 将数据转换为torch.FloatTensor,并标准化。

transform = transforms.Compose([

transforms.ToTensor(),

transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))

])

# 选择训练集与测试集的数据

train_data = datasets.CIFAR10('data', train=True,

download=True, transform=transform)

test_data = datasets.CIFAR10('data', train=False,

download=True, transform=transform)

# obtain training indices that will be used for validation

num_train = len(train_data)

indices = list(range(num_train))

np.random.shuffle(indices)

split = int(np.floor(valid_size * num_train))

train_idx, valid_idx = indices[split:], indices[:split]

# define samplers for obtaining training and validation batches

train_sampler = SubsetRandomSampler(train_idx)

valid_sampler = SubsetRandomSampler(valid_idx)

# prepare data loaders (combine dataset and sampler)

train_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size,sampler=train_sampler, num_workers=num_workers)

valid_loader = torch.utils.data.DataLoader(train_data, batch_size=batch_size, sampler=valid_sampler, num_workers=num_workers)

test_loader = torch.utils.data.DataLoader(test_data, batch_size=batch_size, num_workers=num_workers)

# 对训练输入数据进行可视化

b_img,b_label=iter(train_data).next()

iter = 1

for img in b_img:

# 乘以偏差

img = img.mul(torch.Tensor(np.array([0.5, 0.5, 0.5]).reshape(-1,1,1)))

# 加上均值

img = img.add(torch.Tensor(np.array([0.5, 0.5, 0.5]).reshape(-1,1,1)))

# 加入图像数据

writer.add_image('input',img, iter)

iter += 1

可以拖动图片上方的红线,就可以看到不同step的图像了

对于多张图片的可以使用torchvision.utils.make_grid API把多张图片拼到一张图中方便查看。(make_grid详细参数参考make_grid帮助文档)

修改以上代码:

#导入make_grid

from torchvision.utils import make_grid

# 对训练输入数据进行可视化

b_img,b_label=iter(train_data).next()

# b_img:batch image,4:把图像分成4行 ,normalize=True图像进行了标准化

gimg=make_grid(b_img,4,normalize=True)

# 加入图像数据

writer.add_image("data_input",gimg,1)

效果图:

使用图像可视化对模型输出特征图进行可视化

这里我们需要用到pytorch的hook函数机制,通过注册hook函数获取特征图并进行可视化。

示例代码(使用cifar10分类卷积网络训练代码):

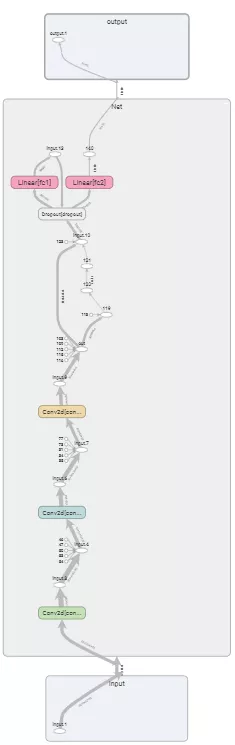

class Net(nn.Module):

def __init__(self):

super(Net, self).__init__()

# 卷积层 (32x32x3的图像)

self.conv1 = nn.Conv2d(3, 16, 3, padding=1)

# 卷积层(16x16x16)

self.conv2 = nn.Conv2d(16, 32, 3, padding=1)

# 卷积层(8x8x32)

self.conv3 = nn.Conv2d(32, 64, 3, padding=1)

# 最大池化层

self.pool = nn.MaxPool2d(2, 2)

# linear layer (64 * 4 * 4 -> 500)

self.fc1 = nn.Linear(64 * 4 * 4, 500)

# linear layer (500 -> 10)

self.fc2 = nn.Linear(500, 10)

# dropout层 (p=0.3)

self.dropout = nn.Dropout(0.3)

def forward(self, x):

# add sequence of convolutional and max pooling layers

x = self.pool(F.relu(self.conv1(x)))

x = self.pool(F.relu(self.conv2(x)))

x = self.pool(F.relu(self.conv3(x)))

# flatten image input

x = x.view(-1, 64 * 4 * 4)

# add dropout layer

x = self.dropout(x)

# add 1st hidden layer, with relu activation function

x = F.relu(self.fc1(x))

# add dropout layer

x = self.dropout(x)

# add 2nd hidden layer, with relu activation function

x = self.fc2(x)

return x

# create a complete CNN

model = Net()

print(model)

# 定义hook函数

conv_fmap_ls = []

def conv1_fmap_hook(model,input,output):

conv_fmap_ls.append(output)

# 注册hook函数,作为示例只对conv1的输出进行记录

model.conv1.register_forward_hook(conv1_fmap_hook)

# 使用GPU

if train_on_gpu:

model.cuda()

import torch.optim as optim

# 使用交叉熵损失函数

criterion = nn.CrossEntropyLoss()

# 使用随机梯度下降,学习率lr=0.01

optimizer = optim.SGD(model.parameters(), lr=0.01)

# 训练模型的次数

n_epochs = 30

valid_loss_min = np.Inf # track change in validation loss

iter = 0

for epoch in range(1, n_epochs + 1):

# keep track of training and validation loss

train_loss = 0.0

valid_loss = 0.0

###################

# 训练集的模型 #

###################

model.train()

for data, target in train_loader:

iter += 1

# move tensors to GPU if CUDA is available

if train_on_gpu:

data, target = data.cuda(), target.cuda()

# clear the gradients of all optimized variables

optimizer.zero_grad()

# forward pass: compute predicted outputs by passing inputs to the model

output = model(data)

# calculate the batch loss

loss = criterion(output, target)

# backward pass: compute gradient of the loss with respect to model parameters

loss.backward()

# perform a single optimization step (parameter update)

optimizer.step()

# update training loss

train_loss += loss.item() * data.size(0)

#记录feature map

if len(conv_fmap_ls)>0:

# 取出conv1的输出

fm = conv_fmap_ls[0]

#增维,(batch_num,output_channel,width,height)->(batch_num,output_channel,1,width,height)

fm = fm.unsqueeze(2)

b,output_c,c,w,h = fm.size()

#改变形状

fm = fm.view(-1,c,w,h)

# 拼图

gm = make_grid(fm, nrow=16, normalize=True)

# 添加图像记录

writer.add_image("conv1_feature_map", gm, iter)

conv_fmap_ls.clear()

3609

3609

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?