以Titanic乘客生存预测任务为例,进一步熟悉Ray Tune调参工具。

titanic数据集的目标是根据乘客信息预测他们在Titanic号撞击冰山沉没后能否生存。

本示例的基础代码参考了下面两篇文章:

- [1-1,结构化数据建模流程范例(

一个不错的PyTorch教程

)](/)

也可以看一下上一篇文章:

PyTorch + Ray Tune 调参

教程中的原始代码如下:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import torch

from torch import nn

from torch.utils.data import Dataset,DataLoader,TensorDataset

from sklearn.metrics import accuracy_score

import datetime

dftrain_raw = pd.read_csv('train.csv')

dftest_raw = pd.read_csv('test.csv')

dftrain_raw.head(10)

def preprocessing(dfdata):

dfresult= pd.DataFrame()

#Pclass

dfPclass = pd.get_dummies(dfdata['Pclass'])

dfPclass.columns = ['Pclass_' +str(x) for x in dfPclass.columns ]

dfresult = pd.concat([dfresult,dfPclass],axis = 1)

#Sex

dfSex = pd.get_dummies(dfdata['Sex'])

dfresult = pd.concat([dfresult,dfSex],axis = 1)

#Age

dfresult['Age'] = dfdata['Age'].fillna(0)

dfresult['Age_null'] = pd.isna(dfdata['Age']).astype('int32')

#SibSp,Parch,Fare

dfresult['SibSp'] = dfdata['SibSp']

dfresult['Parch'] = dfdata['Parch']

dfresult['Fare'] = dfdata['Fare']

#Carbin

dfresult['Cabin_null'] = pd.isna(dfdata['Cabin']).astype('int32')

#Embarked

dfEmbarked = pd.get_dummies(dfdata['Embarked'],dummy_na=True)

dfEmbarked.columns = ['Embarked_' + str(x) for x in dfEmbarked.columns]

dfresult = pd.concat([dfresult,dfEmbarked],axis = 1)

return(dfresult)

x_train = preprocessing(dftrain_raw).values

y_train = dftrain_raw[['Survived']].values

x_test = preprocessing(dftest_raw).values

y_test = dftest_raw[['Survived']].values

print("x_train.shape =", x_train.shape )

print("x_test.shape =", x_test.shape )

print("y_train.shape =", y_train.shape )

print("y_test.shape =", y_test.shape )

dl_train = DataLoader(TensorDataset(torch.tensor(x_train).float(),torch.tensor(y_train).float()),

shuffle = True, batch_size = 8)

dl_valid = DataLoader(TensorDataset(torch.tensor(x_test).float(),torch.tensor(y_test).float()),

shuffle = False, batch_size = 8)

def create_net():

net = nn.Sequential()

net.add_module("linear1", nn.Linear(15, 20))

net.add_module("relu1", nn.ReLU())

net.add_module("linear2", nn.Linear(20, 15))

net.add_module("relu2", nn.ReLU())

net.add_module("linear3", nn.Linear(15, 1))

net.add_module("sigmoid", nn.Sigmoid())

return net

net = create_net()

print(net)

loss_func = nn.BCELoss()

optimizer = torch.optim.Adam(params=net.parameters(),lr = 0.01)

metric_func = lambda y_pred,y_true: accuracy_score(y_true.data.numpy(),y_pred.data.numpy()>0.5)

metric_name = "accuracy"

epochs = 10

log_step_freq = 30

dfhistory = pd.DataFrame(columns = ["epoch","loss",metric_name,"val_loss","val_"+metric_name])

print("Start Training...")

nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')

print("=========="*8 + "%s"%nowtime)

for epoch in range(1, epochs + 1):

# 1,训练循环-------------------------------------------------

net.train()

loss_sum = 0.0

metric_sum = 0.0

step = 1

for step, (features, labels) in enumerate(dl_train, 1):

# 梯度清零

optimizer.zero_grad()

# 正向传播求损失

predictions = net(features)

loss = loss_func(predictions, labels)

metric = metric_func(predictions, labels)

# 反向传播求梯度

loss.backward()

optimizer.step()

# 打印batch级别日志

loss_sum += loss.item()

metric_sum += metric.item()

if step % log_step_freq == 0:

print(("[step = %d] loss: %.3f, " + metric_name + ": %.3f") %

(step, loss_sum / step, metric_sum / step))

# 2,验证循环-------------------------------------------------

net.eval()

val_loss_sum = 0.0

val_metric_sum = 0.0

val_step = 1

for val_step, (features, labels) in enumerate(dl_valid, 1):

# 关闭梯度计算

with torch.no_grad():

predictions = net(features)

val_loss = loss_func(predictions, labels)

val_metric = metric_func(predictions, labels)

val_loss_sum += val_loss.item()

val_metric_sum += val_metric.item()

# 3,记录日志-------------------------------------------------

info = (epoch, loss_sum / step, metric_sum / step,

val_loss_sum / val_step, val_metric_sum / val_step)

dfhistory.loc[epoch - 1] = info

# 打印epoch级别日志

print(("\nEPOCH = %d, loss = %.3f," + metric_name + \

" = %.3f, val_loss = %.3f, " + "val_" + metric_name + " = %.3f")

% info)

nowtime = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S')

print("\n" + "==========" * 8 + "%s" % nowtime)

print('Finished Training...')

运行效果如下:

x_train.shape = (712, 15)

x_test.shape = (179, 15)

y_train.shape = (712, 1)

y_test.shape = (179, 1)

Sequential(

(linear1): Linear(in_features=15, out_features=20, bias=True)

(relu1): ReLU()

(linear2): Linear(in_features=20, out_features=15, bias=True)

(relu2): ReLU()

(linear3): Linear(in_features=15, out_features=1, bias=True)

(sigmoid): Sigmoid()

)

Start Training...

================================================================================2021-01-07 10:57:41

[step = 30] loss: 0.706, accuracy: 0.613

[step = 60] loss: 0.641, accuracy: 0.673

EPOCH = 1, loss = 0.653,accuracy = 0.660, val_loss = 0.595, val_accuracy = 0.745

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.593, accuracy: 0.700

[step = 60] loss: 0.591, accuracy: 0.700

EPOCH = 2, loss = 0.574,accuracy = 0.719, val_loss = 0.472, val_accuracy = 0.772

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.574, accuracy: 0.767

[step = 60] loss: 0.537, accuracy: 0.769

EPOCH = 3, loss = 0.525,accuracy = 0.772, val_loss = 0.454, val_accuracy = 0.804

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.523, accuracy: 0.783

[step = 60] loss: 0.503, accuracy: 0.794

EPOCH = 4, loss = 0.506,accuracy = 0.792, val_loss = 0.473, val_accuracy = 0.804

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.492, accuracy: 0.796

[step = 60] loss: 0.482, accuracy: 0.802

EPOCH = 5, loss = 0.485,accuracy = 0.796, val_loss = 0.429, val_accuracy = 0.799

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.461, accuracy: 0.808

[step = 60] loss: 0.461, accuracy: 0.798

EPOCH = 6, loss = 0.471,accuracy = 0.792, val_loss = 0.454, val_accuracy = 0.799

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.467, accuracy: 0.779

[step = 60] loss: 0.490, accuracy: 0.762

EPOCH = 7, loss = 0.464,accuracy = 0.787, val_loss = 0.401, val_accuracy = 0.810

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.444, accuracy: 0.804

[step = 60] loss: 0.444, accuracy: 0.808

EPOCH = 8, loss = 0.442,accuracy = 0.808, val_loss = 0.446, val_accuracy = 0.777

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.460, accuracy: 0.775

[step = 60] loss: 0.458, accuracy: 0.792

EPOCH = 9, loss = 0.440,accuracy = 0.802, val_loss = 0.429, val_accuracy = 0.810

================================================================================2021-01-07 10:57:42

[step = 30] loss: 0.436, accuracy: 0.808

[step = 60] loss: 0.438, accuracy: 0.806

EPOCH = 10, loss = 0.439,accuracy = 0.803, val_loss = 0.418, val_accuracy = 0.810

================================================================================2021-01-07 10:57:43

Finished Training...

回顾上一篇博客,要使用Ray Tune进行调参,我们要做以下几件事:

- 将数据加载和训练过程封装到函数中;

- 使一些网络参数可配置;

- 增加检查点(可选);

- 定义模型参数搜索空间。

下面我们看一下按照上面的要求怎么修改代码:

import os

import pandas as pd

import torch

from torch import nn

from functools import partial

from torch.utils.data import random_split

from torch.utils.data import DataLoader, TensorDataset

from sklearn.metrics import accuracy_score

from ray import tune

from ray.tune import CLIReporter

from ray.tune.schedulers import ASHAScheduler

# 执行数据预处理功能

def preprocessing(dfdata):

dfresult = pd.DataFrame()

# Pclass

dfPclass = pd.get_dummies(dfdata['Pclass'])

dfPclass.columns = ['Pclass_' + str(x) for x in dfPclass.columns]

dfresult = pd.concat([dfresult, dfPclass], axis=1)

# Sex

dfSex = pd.get_dummies(dfdata['Sex'])

dfresult = pd.concat([dfresult, dfSex], axis=1)

# Age

dfresult['Age'] = dfdata['Age'].fillna(0)

dfresult['Age_null'] = pd.isna(dfdata['Age']).astype('int32')

# SibSp,Parch,Fare

dfresult['SibSp'] = dfdata['SibSp']

dfresult['Parch'] = dfdata['Parch']

dfresult['Fare'] = dfdata['Fare']

# Carbin

dfresult['Cabin_null'] = pd.isna(dfdata['Cabin']).astype('int32')

# Embarked

dfEmbarked = pd.get_dummies(dfdata['Embarked'], dummy_na=True)

dfEmbarked.columns = ['Embarked_' + str(x) for x in dfEmbarked.columns]

dfresult = pd.concat([dfresult, dfEmbarked], axis=1)

return (dfresult)

# 执行数据加载功能的函数

def load_data(train_dir='F:/Data_Analysis/Visualization/7_ray_tune/train.csv',

test_dir='F:/Data_Analysis/Visualization/7_ray_tune/test.csv'):

dftrain_raw = pd.read_csv(train_dir)

dftest_raw = pd.read_csv(test_dir)

x_train = preprocessing(dftrain_raw).values

y_train = dftrain_raw[['Survived']].values

x_test = preprocessing(dftest_raw).values

y_test = dftest_raw[['Survived']].values

trainset = TensorDataset(torch.tensor(x_train).float(), torch.tensor(y_train).float())

testset = TensorDataset(torch.tensor(x_test).float(), torch.tensor(y_test).float())

return trainset, testset

# 定义神经网络模型

class Net(nn.Module):

def __init__(self, l1=20, l2=15):

super(Net, self).__init__()

self.net = nn.Sequential(

nn.Linear(15, l1),

nn.ReLU(),

nn.Linear(l1, l2),

nn.ReLU(),

nn.Linear(l2, 1),

nn.Sigmoid()

)

def forward(self, x):

x = self.net(x)

return x

metric_func = lambda y_pred,y_true: accuracy_score(y_true.data.numpy(),y_pred.data.numpy()>0.5)

# 执行模型训练过程的函数

def train_titanic(config, checkpoint_dir=None, train_dir=None, test_dir=None):

net = Net(config["l1"], config["l2"]) # 可配置参数

device = "cpu"

net.to(device)

loss_func = nn.BCELoss()

optimizer = torch.optim.Adam(params=net.parameters(), lr=config["lr"]) # 可配置参数

if checkpoint_dir:

# 模型的状态、优化器的状态

model_state, optimizer_state = torch.load(

os.path.join(checkpoint_dir, "checkpoint"))

net.load_state_dict(model_state)

optimizer.load_state_dict(optimizer_state)

# numpy.ndarray

trainset, testset = load_data(train_dir, test_dir)

train_abs = int(len(trainset) * 0.8)

train_subset, val_subset = random_split(trainset, [train_abs, len(trainset) - train_abs])

trainloader = torch.utils.data.DataLoader(

train_subset,

batch_size=int(config["batch_size"]), # 可配置参数

shuffle=True,

num_workers=4)

valloader = torch.utils.data.DataLoader(

val_subset,

batch_size=int(config["batch_size"]), # 可配置参数

shuffle=True,

num_workers=4)

for epoch in range(1, 11):

net.train()

loss_sum = 0.0

metric_sum = 0.0

step = 1

for step, (features, labels) in enumerate(trainloader, 1):

# 梯度清零

optimizer.zero_grad()

# 正向传播求损失

predictions = net(features)

loss = loss_func(predictions, labels)

metric = metric_func(predictions, labels)

# 反向传播求梯度

loss.backward()

optimizer.step()

net.eval()

val_loss_sum = 0.0

val_metric_sum = 0.0

val_step = 1

for val_step, (features, labels) in enumerate(valloader, 1):

# 关闭梯度计算

with torch.no_grad():

predictions = net(features)

val_loss = loss_func(predictions, labels)

val_metric = metric_func(predictions, labels)

val_loss_sum += val_loss.item()

val_metric_sum += val_metric.item()

with tune.checkpoint_dir(epoch) as checkpoint_dir:

path = os.path.join(checkpoint_dir, "checkpoint")

torch.save((net.state_dict(), optimizer.state_dict()), path)

# 打印平均损失和平均精度

tune.report(loss=(val_loss_sum / val_step), accuracy=(val_metric_sum / val_step))

print("Finished Training")

# 执行测试功能的函数

def test_accuracy(net, device="cpu"):

trainset, testset = load_data()

testloader = DataLoader(testset, shuffle = False, batch_size = 4, num_workers=6)

loss_func = nn.BCELoss()

net.eval()

test_loss_sum = 0.0

test_metric_sum = 0.0

test_step = 1

for test_step, (features, labels) in enumerate(testloader, 1):

# 关闭梯度计算

with torch.no_grad():

predictions = net(features)

test_loss = loss_func(predictions, labels)

test_metric = metric_func(predictions, labels)

test_loss_sum += test_loss.item()

test_metric_sum += test_metric.item()

return test_loss_sum / test_step, test_metric_sum / test_step

# 主程序

def main(num_samples=10, max_num_epochs=10):

checkpoint_dir = 'F:/Data_Analysis/Visualization/7_ray_tune/checkpoints/'

train_dir = 'F:/Data_Analysis/Visualization/7_ray_tune/train.csv'

test_dir = 'F:/Data_Analysis/Visualization/7_ray_tune/test.csv'

load_data(train_dir, test_dir)

# 定义模型参数搜索空间

config = {

"l1": tune.choice([8, 16, 32, 64]),

"l2": tune.choice([8, 16, 32, 64]),

"lr": tune.choice([0.1, 0.01, 0.001, 0.0001]),

"batch_size": tune.choice([2, 4, 8, 16])

}

# ASHAScheduler会根据指定标准提前中止坏实验

scheduler = ASHAScheduler(

metric="loss",

mode="min",

max_t=max_num_epochs,

grace_period=1,

reduction_factor=2)

# 在命令行打印实验报告

reporter = CLIReporter(

# parameter_columns=["l1", "l2", "lr", "batch_size"],

metric_columns=["loss", "accuracy", "training_iteration"])

# 执行训练过程

result = tune.run(

partial(train_titanic, checkpoint_dir=checkpoint_dir, train_dir=train_dir, test_dir=test_dir),

# 指定训练资源

resources_per_trial={"cpu": 6},

config=config,

num_samples=num_samples,

scheduler=scheduler,

progress_reporter=reporter)

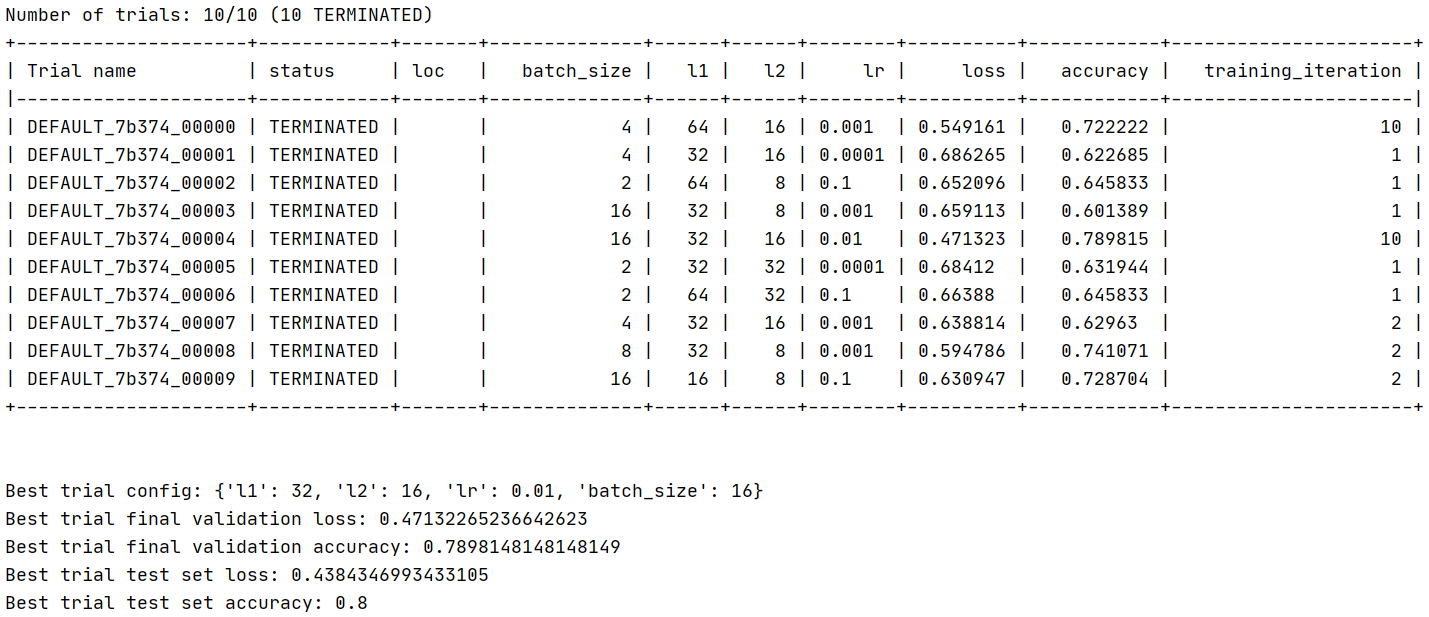

# 找出最佳实验

best_trial = result.get_best_trial("loss", "min", "last")

# 打印最佳实验的参数配置

print("Best trial config: {}".format(best_trial.config))

print("Best trial final validation loss: {}".format(

best_trial.last_result["loss"]))

print("Best trial final validation accuracy: {}".format(

best_trial.last_result["accuracy"]))

# 打印最优超参数组合对应的模型在测试集上的性能

best_trained_model = Net(best_trial.config["l1"], best_trial.config["l2"])

device = "cpu"

best_trained_model.to(device)

best_checkpoint_dir = best_trial.checkpoint.value

model_state, optimizer_state = torch.load(os.path.join(

best_checkpoint_dir, "checkpoint"))

best_trained_model.load_state_dict(model_state)

test_loss, test_acc = test_accuracy(best_trained_model, device)

print("Best trial test set loss: {}".format(test_loss))

print("Best trial test set accuracy: {}".format(test_acc))

if __name__ == "__main__":

# You can change the number of GPUs per trial here:

main(num_samples=10, max_num_epochs=10)

效果如下:

351

351

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?