Albumentations方法目录

- 前言

- 基础调用

- Pixel-level transforms

- AdvancedBlur(使用随机选择参数的广义正态滤波器)

- Blur(模糊)

- CLAHE(限制对比度自适应直方图均衡化)

- ChannelDropout(随机drop一个或多个通道)

- ChannelShuffle(通道打乱)

- ColorJitter(色彩抖动【亮度、对比度、饱和度】)

- Defocus(虚焦)

- Downscale(降质)

- Emboss(浮雕效果)

- Equalize(直方图均衡)

- FDA(Fourier-Domain-Adaptation,实现简单的风格迁移)

- FancyPCA(RGB图像色彩增强)

- FromFloat(乘最大值变整型,与ToFloat相反)

- GaussNoise(高斯噪声)

- GaussianBlur(高斯模糊)

- GlassBlur(玻璃模糊)

- HistogramMatching(直方图匹配,会引起色调变化)

- HueSaturationValue(色调、饱和度、亮度)

- ISONoise(传感器噪声)

- ImageCompression(图像压缩)

- InvertImg(255-img)

- MedianBlur(中值滤波)

- MotionBlur(运动模糊)

- MultiplicativeNoise(乘性噪声)

- Normalize(归一化)

- PixelDistributionAdaptation

- Posterize(色调分层)

- RGBShift(RGB每个通道上值偏移)

- RandomBrightnessContrast(亮度、对比度)

- RandomFog(雾效果)

- RandomGamma(gamma变换)

- RandomRain(下雨效果)

- RandomShadow

- RandomSnow

- RandomSunFlare(太阳耀斑效果)

- RandomToneCurve

- RingingOvershoot

- Sharpen(锐化)

- Solarize(大于阈值反转)

- Spatter(镜头雨点泥点飞溅效果)

- Superpixels(超像素)

- TemplateTransform

- ToFloat(除最大值归一化,与FromFloat相反)

- ToGray(转灰度(三通道))

- ToRGB(灰度转三通道RGB)

- ToSepia(加棕褐色滤镜)

- UnsharpMask(锐化)

- ZoomBlur(变焦模糊)

- Spatial-level transforms

- Affine

- BBoxSafeRandomCrop(包含所有bboxes的裁剪)

- CenterCrop(裁剪中心区域)

- CoarseDropout(矩形区域cutout)

- Crop(裁切)

- CropAndPad(裁剪或填充图像边缘)

- CropNonEmptyMaskIfExists(裁剪+缩放,可以忽略mask部分区域)

- ElasticTransform(弹性变换)

- Flip(翻转)

- GridDistortion(网格畸变)

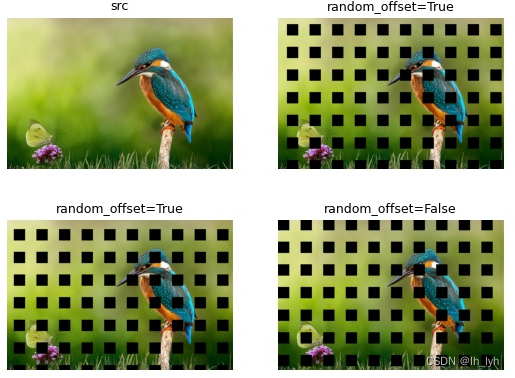

- GridDropout(网格状cutout)

- HorizontalFlip(水平翻转)

- Lambda

- LongestMaxSize(长边等比例缩放至指定size)

- MaskDropout(随机抹除目标实例)

- NoOp(无操作)

- OpticalDistortion(光学畸变(桶形、枕形))

- PadIfNeeded(边界填充)

- Perspective(透视变换)

- PiecewiseAffine(局部仿射变换,效果类似ElasticTransform,但速度很慢)

- PixelDropout(随机丢弃像素值)

- RandomCrop(随机裁剪)

- RandomCropFromBorders(图像边缘裁剪,会改变尺寸)

- RandomCropNearBBox(指定rect附近裁剪)

- RandomGridShuffle(分块打乱)

- RandomResizedCrop(裁剪+缩放,裁剪区域宽高比随机)

- RandomRotate90(随机旋转90度n次,即0°,90°,180°,270°随机旋转)

前言

本文旨在详解albumentations 增强方法使用,结合源码了解参数含义和有效值范围,结合可视化结果直观了解各个增强方法的功能以及参数取值不同如何影响增强图像。

参照官网将所有增强方法划分为两个大类别介绍:Pixel-level transforms和Spatial-level transforms,两者区别在于该增强方法是否会引起图像附加属性变化(如masks, bounding boxes, keypoints)。Pixel-level不会,Spatial-level会,Spatial-level transforms有个总览表格记录每个增强方法会引起哪些附加属性变化。每个类别的增强方法按字母顺序排序,方便检索。

版本说明

本文初期编辑时版本是Albumentations version : 1.3.0,v1.3相比以前版本有较大变化(变换方法新增,级目录重构等),建议更新至1.3.0及以上版本,否则有些变换调用不到或者路径不对。文中个别变换方法在1.3.0以上版本。如果某些函数调用不到,可以更新一下。

更新albumentations:pip install -U albumentations

文中代码默认import albumentations as A,若出现A.transformxx,等同于albumentations.transformxx

如有错误,可在评论区指出。

拓展阅读

官方code网站:https://github.com/albumentations-team/albumentations

官方文档:https://albumentations.readthedocs.io/

部分增强可视化:Albumentations数据增强方法(文中VerticalFlip和HorizontalFlip结果反了)

道路场景图像增强:https://github.com/UjjwalSaxena/Automold–Road-Augmentation-Library

Albumentations已包含其中一些实现:RandomRain,RandomFog,RandomSunFlare,RandomShadow,RandomSnow。

基础调用

Notes

- 调用时注意默认参数p,大多都是p=0.5,偶尔有些是p=1。

该参数表示应用该变换的概率,后续参数说明时不再额外列出。

查看base初始化参数:get_base_init_args()

查看transform初始化参数 :get_transform_init_args() - 许多参数接受单个数字或者两个数字区间形式输入。两个数字区间形式一般都是在这范围中随机采样,若是单个数字,有些转化为默认区间(如ColorJitter参数,解释得很详细),有些直接用该值(如Spatter参数)。需注意区分。

- 各变换的

apply方法是核心,init方法中会对输入参数先进行些预处理工作,如单个数字转化为区间参数、检查参数是否在有效区间内等。 - get_params()方法不可单独调用来追溯结果图对应的参数,因为单独调用get_params()方法时,又再一次随机采样了。

想要固定参数的话,可以将输入参数的区间边界值均设为相同值,这样random采样之后只能是其本身。 - bounding box指的是归一化(x/width, y/height)后的坐标,float型,非整型绝对值。

- 有很多方法涉及图像边界补充,参数border_mode可视化:

OpenCV滤波之copyMakeBorder和borderInterpolate

OpenCV图像处理|1.16 卷积边界处理

OpenCV-扩充图像的边界

Demo Code

调用增强方法的demo code,以Sharpen方法为例:

```python

import cv2

import albumentations as A

if __name__ == "__main__":

filename = 'src'

src_img = cv2.imread(f'imgs/{filename}.jpg')

dst_path = f'imgs/{filename}_aug.jpg'

transform = A.Sharpen(alpha=(0.2, 0.5), lightness=(0.5, 1.0), p=0.5)

img_aug = transform(image=src_img)['image']

cv2.imwrite(dst_path, img_aug)

```

to_tuple( )

单个输入参数转化为区间参数时经常用到这个功能函数。

注意 low 参数表示另一边界的填补值。

举例:

self.blur_limit = to_tuple(1, 3) # self.blur_limit = (1, 3)

self.blur_limit = to_tuple(5, 3) # self.blur_limit = (3, 5)

# source code

def to_tuple(param, low=None, bias=None):

"""Convert input argument to min-max tuple

Args:

param (scalar, tuple or list of 2+ elements): Input value.

If value is scalar, return value would be (offset - value, offset + value).

If value is tuple, return value would be value + offset (broadcasted).

low: Second element of tuple can be passed as optional argument

bias: An offset factor added to each element

"""

if low is not None and bias is not None:

raise ValueError("Arguments low and bias are mutually exclusive")

if param is None:

return param

if isinstance(param, (int, float)):

if low is None:

param = -param, +param

else:

param = (low, param) if low < param else (param, low)

elif isinstance(param, Sequence):

if len(param) != 2:

raise ValueError("to_tuple expects 1 or 2 values")

param = tuple(param)

else:

raise ValueError("Argument param must be either scalar (int, float) or tuple")

if bias is not None:

return tuple(bias + x for x in param)

return tuple(param)

获取初始化的默认base参数

method:get_base_init_args()

包含"always_apply"和"p"两个参数

# source code

def get_base_init_args(self) -> Dict[str, Any]:

return {"always_apply": self.always_apply, "p": self.p}

# demo code

transform1 = A.Emboss()

print(transform1.get_base_init_args())

# output

# {'always_apply': False, 'p': 0.5}

transform1 = A.Emboss(p=1)

print(transform1.get_base_init_args())

# output

# {'always_apply': False, 'p': 1}

获取初始化的默认transform参数

method:get_transform_init_args()

除基础参数always_apply、p以外的变换参数

注意:调用此函数前需先实现get_transform_init_args_names()方法指定需要获取的transform参数,因为BasicTransform类未实现该方法。

# source code from class Emboss(ImageOnlyTransform)

def get_transform_init_args_names(self): # 若变换的该方法未实现,需先实现

return ("alpha", "strength")

def get_transform_init_args(self) -> Dict[str, Any]:

return {k: getattr(self, k) for k in self.get_transform_init_args_names()}

# demo code

transform1 = A.Emboss()

print(transform1.get_transform_init_args())

# output

# {'alpha': (0.2, 0.5), 'strength': (0.2, 0.7)}

transform1 = A.Emboss(alpha=(0.1, 0.5))

print(transform1.get_transform_init_args())

# output

# {'alpha': (0.1, 0.5), 'strength': (0.2, 0.7)}

获取随机参数

method:get_params_dependent_on_targets()

此方法BasicTransform未实现,可以参考如下ChannelShuffle()的实现,返回想要查看的参数。

注意:不能单独调用此函数查看结果图对应的参数是什么,单独调用查看时随机数已改变。

# ChannelShuffle.get_params_dependent_on_targets

def get_params_dependent_on_targets(self, params):

img = params["image"]

ch_arr = list(range(img.shape[2]))

random.shuffle(ch_arr)

return {"channels_shuffled": ch_arr}

# demo code

# 查看ChannelShuffle变换随机生成的channels_shuffled参数

param = A.ChannelShuffle().get_params_dependent_on_targets(

dict(image=src_img))['channels_shuffled']

Pixel-level transforms

像素级变换将仅更改输入图像,对应的其他targets例如mask、bounding boxes和keypoints将保持不变。

Pixel-level transforms will change just an input image and will leave any additional targets such as masks, bounding boxes, and keypoints unchanged.

像素级变换列举如下:

功能:Blur the input image using a Generalized Normal filter with a randomly selected parameters.

参数说明:

ScaleFloatType = Union[float, Tuple[float, float]]

ScaleIntType = Union[int, Tuple[int, int]]

以下参数只有 blur_limit和rotate_limit是ScaleIntType,其余为ScaleFloatType,都是可以输入一个整数或者一个范围。整数输入会根据内部逻辑自动转为区间。最后变换应用参数由在区间内随机采样获取。

- blur_limit: 图像模糊的最大高斯核。可以为0或者正奇数。默认值:(3, 7)。

若为0,会根据sigma参数自动计算:round(sigma * (3 if img.dtype == np.uint8 else 4) * 2 + 1) + 1 - sigmaX_limit: X方向上的高斯核标准差. 可以为0或者正数。默认值:0

若为0,会根据ksize参数自动计算:sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8

若为正数,会转化为区间范围(0, sigma_limit),在该范围内随机取值。 - sigmaY_limit: Y方向上的高斯核标准差.

- rotate_limit: 旋转高斯核的参数。若输入是一个整型,将转换为

(-rotate_limit, rotate_limit)。默认值: (-90, 90). - beta_limit: 控制分布形状的参数。 1为正态分布。默认值: (0.5, 8.0).

- noise_limit:控制噪声强度的乘性因子。必须为正数,最好在1.0附近。若为单个数字,会转化为区间 (0, noise_limit)。默认值: (0.75, 1.25).

注意:blur_limit与sigmaX_limit(sigmaY_limit)有计算依赖关系,两者不可同时为0!!!

# source code

class AdvancedBlur(ImageOnlyTransform):

"""Blur the input image using a Generalized Normal filter with a randomly selected parameters.

This transform also adds multiplicative noise to generated kernel before convolution.

Args:

blur_limit: maximum Gaussian kernel size for blurring the input image.

Must be zero or odd and in range [0, inf). If set to 0 it will be computed from sigma

as `round(sigma * (3 if img.dtype == np.uint8 else 4) * 2 + 1) + 1`.

If set single value `blur_limit` will be in range (0, blur_limit).

Default: (3, 7).

sigmaX_limit: Gaussian kernel standard deviation. Must be in range [0, inf).

If set single value `sigmaX_limit` will be in range (0, sigma_limit).

If set to 0 sigma will be computed as `sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8`. Default: 0.

sigmaY_limit: Same as `sigmaY_limit` for another dimension.

rotate_limit: Range from which a random angle used to rotate Gaussian kernel is picked.

If limit is a single int an angle is picked from (-rotate_limit, rotate_limit). Default: (-90, 90).

beta_limit: Distribution shape parameter, 1 is the normal distribution. Values below 1.0 make distribution

tails heavier than normal, values above 1.0 make it lighter than normal. Default: (0.5, 8.0).

noise_limit: Multiplicative factor that control strength of kernel noise. Must be positive and preferably

centered around 1.0. If set single value `noise_limit` will be in range (0, noise_limit).

Default: (0.75, 1.25).

p (float): probability of applying the transform. Default: 0.5.

Reference:

https://arxiv.org/abs/2107.10833

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

blur_limit: ScaleIntType = (3, 7),

sigmaX_limit: ScaleFloatType = (0.2, 1.0),

sigmaY_limit: ScaleFloatType = (0.2, 1.0),

rotate_limit: ScaleIntType = 90,

beta_limit: ScaleFloatType = (0.5, 8.0),

noise_limit: ScaleFloatType = (0.9, 1.1),

always_apply: bool = False,

p: float = 0.5,

):

super().__init__(always_apply, p)

self.blur_limit = to_tuple(blur_limit, 3)

self.sigmaX_limit = self.__check_values(to_tuple(sigmaX_limit, 0.0), name="sigmaX_limit")

self.sigmaY_limit = self.__check_values(to_tuple(sigmaY_limit, 0.0), name="sigmaY_limit")

self.rotate_limit = to_tuple(rotate_limit)

self.beta_limit = to_tuple(beta_limit, low=0.0)

self.noise_limit = self.__check_values(to_tuple(noise_limit, 0.0), name="noise_limit")

if (self.blur_limit[0] != 0 and self.blur_limit[0] % 2 != 1) or (

self.blur_limit[1] != 0 and self.blur_limit[1] % 2 != 1

):

raise ValueError("AdvancedBlur supports only odd blur limits.")

if self.sigmaX_limit[0] == 0 and self.sigmaY_limit[0] == 0:

raise ValueError("sigmaX_limit and sigmaY_limit minimum value can not be both equal to 0.")

if not (self.beta_limit[0] < 1.0 < self.beta_limit[1]):

raise ValueError("Beta limit is expected to include 1.0")

@staticmethod

def __check_values(

value: Sequence[float], name: str, bounds: Tuple[float, float] = (0, float("inf"))

) -> Sequence[float]:

if not bounds[0] <= value[0] <= value[1] <= bounds[1]:

raise ValueError(f"{name} values should be between {bounds}")

return value

def apply(self, img: np.ndarray, kernel: np.ndarray = None, **params) -> np.ndarray:

return FMain.convolve(img, kernel=kernel)

def get_params(self) -> Dict[str, np.ndarray]:

ksize = random.randrange(self.blur_limit[0], self.blur_limit[1] + 1, 2)

sigmaX = random.uniform(*self.sigmaX_limit)

sigmaY = random.uniform(*self.sigmaY_limit)

angle = np.deg2rad(random.uniform(*self.rotate_limit))

# Split into 2 cases to avoid selection of narrow kernels (beta > 1) too often.

if random.random() < 0.5:

beta = random.uniform(self.beta_limit[0], 1)

else:

beta = random.uniform(1, self.beta_limit[1])

noise_matrix = random_utils.uniform(self.noise_limit[0], self.noise_limit[1], size=[ksize, ksize])

# Generate mesh grid centered at zero.

ax = np.arange(-ksize // 2 + 1.0, ksize // 2 + 1.0)

# Shape (ksize, ksize, 2)

grid = np.stack(np.meshgrid(ax, ax), axis=-1)

# Calculate rotated sigma matrix

d_matrix = np.array([[sigmaX**2, 0], [0, sigmaY**2]])

u_matrix = np.array([[np.cos(angle), -np.sin(angle)], [np.sin(angle), np.cos(angle)]])

sigma_matrix = np.dot(u_matrix, np.dot(d_matrix, u_matrix.T))

inverse_sigma = np.linalg.inv(sigma_matrix)

# Described in "Parameter Estimation For Multivariate Generalized Gaussian Distributions"

kernel = np.exp(-0.5 * np.power(np.sum(np.dot(grid, inverse_sigma) * grid, 2), beta))

# Add noise

kernel = kernel * noise_matrix

# Normalize kernel

kernel = kernel.astype(np.float32) / np.sum(kernel)

return {"kernel": kernel}

def get_transform_init_args_names(self) -> Tuple[str, str, str, str, str, str]:

return (

"blur_limit",

"sigmaX_limit",

"sigmaY_limit",

"rotate_limit",

"beta_limit",

"noise_limit",

)

默认参数随机生成的三张结果图。可视化图像并排显示的时候压缩了,肉眼感受变化不明显。

功能:图像模糊

参数说明: blur_limit (int, (int, int)):模糊图像的最大kernel size. 有效值范围[3, inf),默认值:(3, 7).

# source code

class Blur(ImageOnlyTransform):

"""Blur the input image using a random-sized kernel.

Args:

blur_limit (int, (int, int)): maximum kernel size for blurring the input image.

Should be in range [3, inf). Default: (3, 7).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(self, blur_limit: ScaleIntType = 7, always_apply: bool = False, p: float = 0.5):

super().__init__(always_apply, p)

self.blur_limit = to_tuple(blur_limit, 3)

def apply(self, img: np.ndarray, ksize: int = 3, **params) -> np.ndarray:

return F.blur(img, ksize)

def get_params(self) -> Dict[str, Any]:

return {"ksize": int(random.choice(np.arange(self.blur_limit[0], self.blur_limit[1] + 1, 2)))}

def get_transform_init_args_names(self) -> Tuple[str, ...]:

return ("blur_limit",)

功能:对输入图像应用限制对比度自适应直方图均衡化(Contrast Limited Adaptive Histogram Equalization)

扩展阅读:

Image Enhancement - CLAHE

CLAHE算法学习

# source code

class CLAHE(ImageOnlyTransform):

"""Apply Contrast Limited Adaptive Histogram Equalization to the input image.

Args:

clip_limit (float or (float, float)): upper threshold value for contrast limiting.

If clip_limit is a single float value, the range will be (1, clip_limit). Default: (1, 4).

tile_grid_size ((int, int)): size of grid for histogram equalization. Default: (8, 8).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8

"""

def __init__(self, clip_limit=4.0, tile_grid_size=(8, 8), always_apply=False, p=0.5):

super(CLAHE, self).__init__(always_apply, p)

self.clip_limit = to_tuple(clip_limit, 1)

self.tile_grid_size = tuple(tile_grid_size)

def apply(self, img, clip_limit=2, **params):

if not is_rgb_image(img) and not is_grayscale_image(img):

raise TypeError("CLAHE transformation expects 1-channel or 3-channel images.")

return F.clahe(img, clip_limit, self.tile_grid_size)

def get_params(self):

return {"clip_limit": random.uniform(self.clip_limit[0], self.clip_limit[1])}

def get_transform_init_args_names(self):

return ("clip_limit", "tile_grid_size")

功能:随机drop一些通道,用固定值填充

参数说明:

channel_drop_range (int, int): [min_dropout_channel_num, max_dropout_channel_num](闭区间),表示在channel_drop_range范围内随机选一个数,作为drop的通道数量。具体drop的通道id随机choice产生。

其中min_dropout_channel_num > 0 (单通道图像不支持),max_dropout_channel_num < image_channels(不可全通道drop),min_dropout_channel_num可以等于max_dropout_channel_num,默认(1,1),即随机drop一个通道。

fill_value (int, float): 用来填充dropped channel的像素值,默认0。

drop机制详解:

-

确定drop的通道数量

num_drop_channels = random.randint(channel_drop_range[0], channel_drop_range[1]) -

在图像通道中随机选择num_drop_channels个通道drop,选中的通道用fill_value填充

channels_to_drop = random.sample(range(num_channels), k=num_drop_channels) -

对选中的 channels_to_drop 通道进行fill_value填充

def channel_dropout(img, channels_to_drop, fill_value=0): if len(img.shape) == 2 or img.shape[2] == 1: raise NotImplementedError("Only one channel. ChannelDropout is not defined.") img = img.copy() img[..., channels_to_drop] = fill_value return img

ChannelDropout源码如下:

# source code

class ChannelDropout(ImageOnlyTransform):

"""Randomly Drop Channels in the input Image.

Args:

channel_drop_range (int, int): range from which we choose the number of channels to drop.

fill_value (int, float): pixel value for the dropped channel.

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, uint16, unit32, float32

"""

def __init__(self, channel_drop_range=(1, 1), fill_value=0, always_apply=False, p=0.5):

super(ChannelDropout, self).__init__(always_apply, p)

self.channel_drop_range = channel_drop_range

self.min_channels = channel_drop_range[0]

self.max_channels = channel_drop_range[1]

if not 1 <= self.min_channels <= self.max_channels:

raise ValueError("Invalid channel_drop_range. Got: {}".format(channel_drop_range))

self.fill_value = fill_value

def apply(self, img, channels_to_drop=(0,), **params):

return F.channel_dropout(img, channels_to_drop, self.fill_value)

def get_params_dependent_on_targets(self, params):

img = params["image"]

num_channels = img.shape[-1]

if len(img.shape) == 2 or num_channels == 1:

raise NotImplementedError("Images has one channel. ChannelDropout is not defined.")

if self.max_channels >= num_channels:

raise ValueError("Can not drop all channels in ChannelDropout.")

num_drop_channels = random.randint(self.min_channels, self.max_channels)

channels_to_drop = random.sample(range(num_channels), k=num_drop_channels)

return {"channels_to_drop": channels_to_drop}

def get_transform_init_args_names(self):

return ("channel_drop_range", "fill_value")

@property

def targets_as_params(self):

return ["image"]

opencv读图是BGR格式,channels_to_drop=[1]时,drop G通道,用0填充,所以右上图像绿色部分变为黑色。

channels_to_drop=[0]时,drop B通道,用0填充,所以左下图像蓝色部分变为黑色。

channels_to_drop=[1,2]时,drop G,R通道,用0填充,所以右下图像绿色、红色部分变为黑色,白底部分有RGB三个通道,RG通道置为0,只剩B通道为255,所以背景变为蓝色。

功能:输入图像通道重排(rearrange channels)

# source code

class ChannelShuffle(ImageOnlyTransform):

"""Randomly rearrange channels of the input RGB image.

Args:

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

@property

def targets_as_params(self):

return ["image"]

def apply(self, img, channels_shuffled=(0, 1, 2), **params):

return F.channel_shuffle(img, channels_shuffled)

def get_params_dependent_on_targets(self, params):

img = params["image"]

ch_arr = list(range(img.shape[2]))

random.shuffle(ch_arr) # 生成随机通道列表

return {"channels_shuffled": ch_arr}

def get_transform_init_args_names(self):

return ()

####################### F.channel_shuffle

def channel_shuffle(img, channels_shuffled):

img = img[..., channels_shuffled]

return img

右上:opencv读图是BGR格式,channels_shuffled=[0,2,1],表示G通道和R通道交换,所以图中绿色和红色互换

右下:channels_shuffled=[1,0,2],表示B通道和G通道交换,所以图中蓝色和绿色互换

功能:随机改变图像的亮度、对比度、饱和度(参数均表示抖动幅度)

Randomly changes the brightness, contrast, and saturation of an image. Compared to ColorJitter from torchvision,

this transform gives a little bit different results because Pillow (used in torchvision) and OpenCV (used in

Albumentations) transform an image to HSV format by different formulas. Another difference - Pillow uses uint8

overflow, but we use value saturation.

参数(详见下方source code中的__check_values函数):

- 参数初始化:

brightness、contrast 、saturation 、hue 输入形式:一个数或者一个区间(float or tuple(list) of float (min, max))。

区间参数(输入为数字的话将内部转换为区间)需要满足各参数的有效区间(有效区间和数字转区间规则见下)。

所以各参数输入要求为:

brightness,contrast ,saturation: f l o a t ∈ [ 0 , + ∞ ) , t u p l e ( l i s t ) ∈ [ 0 , + ∞ ) float \in [0 , +\infty), tuple(list)\in [0 , +\infty) float∈[0,+∞),tuple(list)∈[0,+∞)

hue: f l o a t ∈ [ 0 , 0.5 ] , t u p l e ( l i s t ) ∈ [ − 0.5 , 0.5 ] float \in [0 , 0.5], tuple(list)\in [-0.5, 0.5] float∈[0,0.5],tuple(list)∈[−0.5,0.5]- 有效区间

brightness,contrast ,saturation有效区间为:[0, +inf]

hue有效区间为:[-0.5, 0.5] - 数字转区间内部逻辑

brightness,contrast ,saturation:[ max(0, 1 - input_value), 1 + input_value]

hue:[ - input_value, + input_value]

- 有效区间

Apply(详见下方source code中的get_params函数):

- 各变换factor确定:random.uniform(处理后的参数区间)

- 各变换apply顺序随机

# source code

class ColorJitter(ImageOnlyTransform):

"""Randomly changes the brightness, contrast, and saturation of an image. Compared to ColorJitter from torchvision,

this transform gives a little bit different results because Pillow (used in torchvision) and OpenCV (used in

Albumentations) transform an image to HSV format by different formulas. Another difference - Pillow uses uint8

overflow, but we use value saturation.

Args:

brightness (float or tuple of float (min, max)): How much to jitter brightness.

brightness_factor is chosen uniformly from [max(0, 1 - brightness), 1 + brightness]

or the given [min, max]. Should be non negative numbers.

contrast (float or tuple of float (min, max)): How much to jitter contrast.

contrast_factor is chosen uniformly from [max(0, 1 - contrast), 1 + contrast]

or the given [min, max]. Should be non negative numbers.

saturation (float or tuple of float (min, max)): How much to jitter saturation.

saturation_factor is chosen uniformly from [max(0, 1 - saturation), 1 + saturation]

or the given [min, max]. Should be non negative numbers.

hue (float or tuple of float (min, max)): How much to jitter hue.

hue_factor is chosen uniformly from [-hue, hue] or the given [min, max].

Should have 0 <= hue <= 0.5 or -0.5 <= min <= max <= 0.5.

"""

def __init__(

self,

brightness=0.2,

contrast=0.2,

saturation=0.2,

hue=0.2,

always_apply=False,

p=0.5,

):

super(ColorJitter, self).__init__(always_apply=always_apply, p=p)

self.brightness = self.__check_values(brightness, "brightness")

self.contrast = self.__check_values(contrast, "contrast")

self.saturation = self.__check_values(saturation, "saturation")

# hue参数初始化的offset和bounds均不同于上,

self.hue = self.__check_values(hue, "hue", offset=0, bounds=[-0.5, 0.5], clip=False)

@staticmethod

# 输入参数处理,需符合各参数有效区间

def __check_values(value, name, offset=1, bounds=(0, float("inf")), clip=True):

if isinstance(value, numbers.Number): # 数字转区间内部逻辑

if value < 0: # 单个数字输入不可为负数

raise ValueError("If {} is a single number, it must be non negative.".format(name))

value = [offset - value, offset + value]

if clip: # hue是不进行clip的,其他三个参数进行clip操作

value[0] = max(value[0], 0)

elif isinstance(value, (tuple, list)) and len(value) == 2:

if not bounds[0] <= value[0] <= value[1] <= bounds[1]: # 若是区间输入,需满足各自的有效区间

raise ValueError("{} values should be between {}".format(name, bounds))

else:

raise TypeError("{} should be a single number or a list/tuple with length 2.".format(name))

return value

def get_params(self):

brightness = random.uniform(self.brightness[0], self.brightness[1])

contrast = random.uniform(self.contrast[0], self.contrast[1])

saturation = random.uniform(self.saturation[0], self.saturation[1])

hue = random.uniform(self.hue[0], self.hue[1])

transforms = [

lambda x: F.adjust_brightness_torchvision(x, brightness),

lambda x: F.adjust_contrast_torchvision(x, contrast),

lambda x: F.adjust_saturation_torchvision(x, saturation),

lambda x: F.adjust_hue_torchvision(x, hue),

]

random.shuffle(transforms) # 各变换顺序随机

return {"transforms": transforms}

def apply(self, img, transforms=(), **params):

if not F.is_rgb_image(img) and not F.is_grayscale_image(img): # 仅支持单通道和三通道图像输入

raise TypeError("ColorJitter transformation expects 1-channel or 3-channel images.")

for transform in transforms:

img = transform(img)

return img

def get_transform_init_args_names(self):

return ("brightness", "contrast", "saturation", "hue")

注意以下结果图上显示的各参数因子是调用各自变化函数传入的参数,并非是ColorJitter的参数,对应关系见上述参数部分描述!

brightness变化:

参数影响:factor越大图像越亮,反之越暗

逻辑:clip(img_value*factor)

# F.adjust_brightness_torchvision函数内容

def _adjust_brightness_torchvision_uint8(img, factor):

lut = np.arange(0, 256) * factor

lut = np.clip(lut, 0, 255).astype(np.uint8)

return cv2.LUT(img, lut)

@preserve_shape

def adjust_brightness_torchvision(img, factor):

if factor == 0:

return np.zeros_like(img)

elif factor == 1:

return img

if img.dtype == np.uint8:

return _adjust_brightness_torchvision_uint8(img, factor)

return clip(img * factor, img.dtype, MAX_VALUES_BY_DTYPE[img.dtype])

contrast变化:

参数影响:factor越小,图像明暗对比越小,factor越大,图像明暗对比越大。

逻辑:clip(img_value * factor + mean * (1 - factor))

# F.adjust_contrast_torchvision函数内容

def _adjust_contrast_torchvision_uint8(img, factor, mean):

lut = np.arange(0, 256) * factor

lut = lut + mean * (1 - factor)

lut = clip(lut, img.dtype, 255)

return cv2.LUT(img, lut)

@preserve_shape

def adjust_contrast_torchvision(img, factor):

if factor == 1:

return img

if is_grayscale_image(img):

mean = img.mean()

else:

mean = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY).mean()

if factor == 0:

return np.full_like(img, int(mean + 0.5), dtype=img.dtype)

if img.dtype == np.uint8:

return _adjust_contrast_torchvision_uint8(img, factor, mean)

return clip(

img.astype(np.float32) * factor + mean * (1 - factor),

img.dtype,

MAX_VALUES_BY_DTYPE[img.dtype],

)

saturation变化:

参数影响:factor越小,图像越偏灰度,factor越大,图像色彩越鲜艳。

逻辑:clip(img * factor + gray * (1 - factor)),原图和灰度图加权融合

# F.adjust_saturation_torchvision函数内容

@preserve_shape

def adjust_saturation_torchvision(img, factor, gamma=0):

if factor == 1:

return img

if is_grayscale_image(img):

gray = img

return gray

else:

gray = cv2.cvtColor(img, cv2.COLOR_RGB2GRAY)

gray = cv2.cvtColor(gray, cv2.COLOR_GRAY2RGB) # 三通道的值一致,方便后面与原图加权

if factor == 0:

return gray

# cv2.addWeighted:两个图像加权融合

# result = img * factor + gray * (1 - factor)+ gamma

result = cv2.addWeighted(img, factor, gray, 1 - factor, gamma=gamma)

if img.dtype == np.uint8:

return result

# OpenCV does not clip values for float dtype

return clip(result, img.dtype, MAX_VALUES_BY_DTYPE[img.dtype])

hue变化:

参数影响:factor越大,色调偏移越严重。factor=0,色调不变。

逻辑:图像转HSV颜色空间,np.mod(hue_value + factor * 180, 180) ,再转回RGB颜色空间

# F.adjust_hue_torchvision函数内容

def _adjust_hue_torchvision_uint8(img, factor):

img = cv2.cvtColor(img, cv2.COLOR_RGB2HSV)

lut = np.arange(0, 256, dtype=np.int16)

lut = np.mod(lut + 180 * factor, 180).astype(np.uint8)

img[..., 0] = cv2.LUT(img[..., 0], lut)

return cv2.cvtColor(img, cv2.COLOR_HSV2RGB)

def adjust_hue_torchvision(img, factor):

if is_grayscale_image(img):

return img

if factor == 0:

return img

if img.dtype == np.uint8:

return _adjust_hue_torchvision_uint8(img, factor)

img = cv2.cvtColor(img, cv2.COLOR_RGB2HSV)

img[..., 0] = np.mod(img[..., 0] + factor * 360, 360)

return cv2.cvtColor(img, cv2.COLOR_HSV2RGB)

补充阅读

对比度和饱和度有什么区别

对比度指的是最高亮度和最低亮度的比值。当图像对比度越高时,说明图像明暗差异越明显;饱和度指的是色彩的纯正程度,越纯正饱和度越高。如纯蓝、纯红、纯绿属于高饱和度,而灰蓝、玫红、草绿属于低饱和度,因此图像的饱和度越高说明图像色彩越鲜艳。对比度与饱和度在主体、特点与作用上都有不小的区别,下面就详细说明一下:

一、主体区别

1、对比度:指的是最高亮度和最低亮度的比值。当图像对比度越高时,那么图像明暗差异越明显。

2、饱和度:指的是色彩的纯正程度。当图像的饱和度越高时,那么图像色彩越鲜艳。二、特点区别

1、对比度:图像色彩差异范围越大代表对比度越大,反之则代表对比度越小。当对比度达到120:1时,就可容易地显示生动、丰富的色彩;而当对比度高达300:1时,就可以可支持各阶的颜色。

2、饱和度:饱和度取决于该色中含色成分和消色成分的比例。含色成分越大,饱和度越大;消色成分越大,饱和度越小。三、作用区别

1、对比度:对比度越大,图像越清晰醒目,色彩也越鲜明艳丽;反之,则会让整个画面都灰蒙蒙的。高对比度对于图像的清晰度、细节表现、灰度层次表现都有很大帮助。

2、饱和度:色度由光度线强弱和在不同波长的强度分布有关。最高的色度一般由单波长的强光达到,在波长分布不变的情况下,光强度越弱则色度越低。

功能:图像虚焦

参数:radius > 0,虚焦半径。若为单个数字,则默认转换为[1, radius_input_value] 。默认区间[3, 10]

alias_blur >= 0,高斯模糊的sigma参数。若为单个数字,则默认转换为[0, alias_blur input_value]。默认区间[0.1, 0.5]

参数影响:radius 参数越大,虚焦程度越高。alias_blur 参数变化,肉眼感受到的变化很小。

# source code

class Defocus(ImageOnlyTransform):

"""

Apply defocus transform. See https://arxiv.org/abs/1903.12261.

Args:

radius ((int, int) or int): range for radius of defocusing.

If limit is a single int, the range will be [1, limit]. Default: (3, 10).

alias_blur ((float, float) or float): range for alias_blur of defocusing (sigma of gaussian blur).

If limit is a single float, the range will be (0, limit). Default: (0.1, 0.5).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

Any

"""

def __init__(

self,

radius: ScaleIntType = (3, 10),

alias_blur: ScaleFloatType = (0.1, 0.5),

always_apply: bool = False,

p: float = 0.5,

):

super().__init__(always_apply, p)

self.radius = to_tuple(radius, low=1)

self.alias_blur = to_tuple(alias_blur, low=0)

if self.radius[0] <= 0:

raise ValueError("Parameter radius must be positive")

if self.alias_blur[0] < 0:

raise ValueError("Parameter alias_blur must be non-negative")

def apply(self, img: np.ndarray, radius: int = 3, alias_blur: float = 0.5, **params) -> np.ndarray:

return F.defocus(img, radius, alias_blur)

def get_params(self) -> Dict[str, Any]:

return {

"radius": random_utils.randint(self.radius[0], self.radius[1] + 1),

"alias_blur": random_utils.uniform(self.alias_blur[0], self.alias_blur[1]),

}

def get_transform_init_args_names(self) -> Tuple[str, str]:

return ("radius", "alias_blur")

radius参数变化:

alias_blur参数变化:

功能:通过先降采样再上采样来降低图像质量 。变换前后不改变图像尺寸。

参数:0 < scale_min <= scale_max < 1,表示图像缩放的倍率。等同于resize函数中的scale参数。

interpolation 可以指定缩放方法,默认最近邻方法:cv2.INTER_NEAREST。有三种指定方式,见下方source code中args说明。

# interpolation 参数举例:

# 方法一:表示下采样和上采样均使用NEAREST方法

interpolation = cv2.INTER_NEAREST

# 方法二:表示下采样使用最近邻差值,上采样使用双线性差值

interpolation = dict(downscale=cv2.INTER_NEAREST, upscale=cv2.INTER_LINEAR)

# 方法三:下采样使用AREA方法,上采样使用CUBIC方法

interpolation = Downscale.Interpolation(downscale=cv2.INTER_AREA, upscale=cv2.INTER_CUBIC)

interpolation 选项:

INTER_NEAREST最近邻插值

INTER_LINEAR双线性插值(默认设置)

INTER_AREA使用像素区域关系进行重采样。 它可能是图像下采样的首选方法,因为它会产生无云纹理的结果。

但是当图像上采样时,它类似于INTER_NEAREST方法。

INTER_CUBIC4x4像素邻域的双三次插值

INTER_LANCZOS48x8像素邻域的Lanczos插值

# source code

class Downscale(ImageOnlyTransform):

"""Decreases image quality by downscaling and upscaling back.

Args:

scale_min (float): lower bound on the image scale. Should be < 1.

scale_max (float): lower bound on the image scale. Should be .

interpolation: cv2 interpolation method. Could be:

- single cv2 interpolation flag - selected method will be used for downscale and upscale.

- dict(downscale=flag, upscale=flag)

- Downscale.Interpolation(downscale=flag, upscale=flag) -

Default: Interpolation(downscale=cv2.INTER_NEAREST, upscale=cv2.INTER_NEAREST)

Targets:

image

Image types:

uint8, float32

"""

class Interpolation:

def __init__(self, *, downscale: int = cv2.INTER_NEAREST, upscale: int = cv2.INTER_NEAREST):

self.downscale = downscale

self.upscale = upscale

def __init__(

self,

scale_min: float = 0.25,

scale_max: float = 0.25,

interpolation: Optional[Union[int, Interpolation, Dict[str, int]]] = None,

always_apply: bool = False,

p: float = 0.5,

):

super(Downscale, self).__init__(always_apply, p)

if interpolation is None:

self.interpolation = self.Interpolation(downscale=cv2.INTER_NEAREST, upscale=cv2.INTER_NEAREST)

warnings.warn(

"Using default interpolation INTER_NEAREST, which is sub-optimal."

"Please specify interpolation mode for downscale and upscale explicitly."

"For additional information see this PR https://github.com/albumentations-team/albumentations/pull/584"

)

elif isinstance(interpolation, int):

self.interpolation = self.Interpolation(downscale=interpolation, upscale=interpolation)

elif isinstance(interpolation, self.Interpolation):

self.interpolation = interpolation

elif isinstance(interpolation, dict):

self.interpolation = self.Interpolation(**interpolation)

else:

raise ValueError(

"Wrong interpolation data type. Supported types: `Optional[Union[int, Interpolation, Dict[str, int]]]`."

f" Got: {type(interpolation)}"

)

if scale_min > scale_max:

raise ValueError("Expected scale_min be less or equal scale_max, got {} {}".format(scale_min, scale_max))

if scale_max >= 1:

raise ValueError("Expected scale_max to be less than 1, got {}".format(scale_max))

self.scale_min = scale_min

self.scale_max = scale_max

def apply(self, img: np.ndarray, scale: Optional[float] = None, **params) -> np.ndarray:

return F.downscale(

img,

scale=scale,

down_interpolation=self.interpolation.downscale,

up_interpolation=self.interpolation.upscale,

)

def get_params(self) -> Dict[str, Any]:

return {"scale": random.uniform(self.scale_min, self.scale_max)}

def get_transform_init_args_names(self) -> Tuple[str, str]:

return "scale_min", "scale_max"

def _to_dict(self) -> Dict[str, Any]:

result = super()._to_dict()

result["interpolation"] = {"upscale": self.interpolation.upscale, "downscale": self.interpolation.downscale}

return result

为方便可视化,scale设置为0.1,以下是用三种方式初始化指定不同插值方法的结果图:

# demo code

import cv2

import matplotlib.pyplot as plt

import albumentations as A

if __name__ == "__main__":

filename = '0'

title_key = 'scale_method'

src_img = cv2.imread(f'imgs/{filename}.jpg')

dst_path = f'imgs/{filename}_aug.jpg'

transform1 = A.Downscale(scale_min=0.1,

scale_max=0.1,

interpolation=cv2.INTER_NEAREST,

p=1)

transform2 = A.Downscale(scale_min=0.1,

scale_max=0.1,

interpolation=dict(downscale=cv2.INTER_LINEAR,

upscale=cv2.INTER_LINEAR),

p=1)

transform3 = A.Downscale(scale_min=0.1,

scale_max=0.1,

interpolation=A.Downscale.Interpolation(

downscale=cv2.INTER_AREA,

upscale=cv2.INTER_AREA),

p=1)

img_aug1 = transform1(image=src_img)['image']

img_aug2 = transform2(image=src_img)['image']

img_aug3 = transform3(image=src_img)['image']

param1 = 'INTER_NEAREST'

param2 = 'INTER_LINEAR'

param3 = 'INTER_AREA'

fontsize = 10

plt.subplot(221)

plt.axis('off')

plt.title('src', fontdict={'fontsize': fontsize})

plt.imshow(src_img[:, :, ::-1])

plt.subplot(222)

plt.axis('off')

plt.title(f'{title_key}={param1}', fontdict={'fontsize': fontsize})

plt.imshow(img_aug1[:, :, ::-1])

plt.subplot(223)

plt.axis('off')

plt.title(f'{title_key}={param2}', fontdict={'fontsize': fontsize})

plt.imshow(img_aug2[:, :, ::-1])

plt.subplot(224)

plt.axis('off')

plt.title(f'{title_key}={param3}', fontdict={'fontsize': fontsize})

plt.imshow(img_aug3[:, :, ::-1])

plt.savefig(dst_path)

功能:叠加浮雕效果

参数说明:

alpha ((float, float)): 调整浮雕图像的可见性,为0时只保留原图,为1.0时只保留浮雕图像。

result = (1 - alpha) * src_image + alpha * emboss_image

strength ((float, float)): 浮雕强度

alpha参数比strength参数影响大。

# source code

class Emboss(ImageOnlyTransform):

"""Emboss the input image and overlays the result with the original image.

Args:

alpha ((float, float)): range to choose the visibility of the embossed image. At 0, only the original image is

visible,at 1.0 only its embossed version is visible. Default: (0.2, 0.5).

strength ((float, float)): strength range of the embossing. Default: (0.2, 0.7).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

"""

def __init__(self, alpha=(0.2, 0.5), strength=(0.2, 0.7), always_apply=False, p=0.5):

super(Emboss, self).__init__(always_apply, p)

self.alpha = self.__check_values(to_tuple(alpha, 0.0), name="alpha", bounds=(0.0, 1.0))

self.strength = self.__check_values(to_tuple(strength, 0.0), name="strength")

@staticmethod

def __check_values(value, name, bounds=(0, float("inf"))):

if not bounds[0] <= value[0] <= value[1] <= bounds[1]:

raise ValueError("{} values should be between {}".format(name, bounds))

return value

@staticmethod

def __generate_emboss_matrix(alpha_sample, strength_sample):

matrix_nochange = np.array([[0, 0, 0], [0, 1, 0], [0, 0, 0]], dtype=np.float32)

matrix_effect = np.array(

[

[-1 - strength_sample, 0 - strength_sample, 0],

[0 - strength_sample, 1, 0 + strength_sample],

[0, 0 + strength_sample, 1 + strength_sample],

],

dtype=np.float32,

)

matrix = (1 - alpha_sample) * matrix_nochange + alpha_sample * matrix_effect

return matrix

def get_params(self):

alpha = random.uniform(*self.alpha)

strength = random.uniform(*self.strength)

emboss_matrix = self.__generate_emboss_matrix(alpha_sample=alpha, strength_sample=strength)

return {"emboss_matrix": emboss_matrix}

def apply(self, img, emboss_matrix=None, **params):

return F.convolve(img, emboss_matrix) # 卷积

def get_transform_init_args_names(self):

return ("alpha", "strength")

以下是对比可视化结果,alpha参数效果比strength参数效果明显。

功能:直方图均衡化

参数说明: mode (str): {‘cv’, ‘pil’}. 选择使用 OpenCV 或 Pillow 均衡方法。

by_channels (bool): 若为True,表示每个通道单独做直方图均衡;若为False,表示将图像转为YCbCr格式然后对Y通道进行直方图均衡。默认值:True

mask (np.ndarray, callable): 若提供该参数,表示仅mask覆盖范围内进行变换。

mask_params (list of str): Params for mask function.

注意:by_channels 设为False效果更自然些,色相色调差异更小。

# source code

class Equalize(ImageOnlyTransform):

"""Equalize the image histogram.

Args:

mode (str): {'cv', 'pil'}. Use OpenCV or Pillow equalization method.

by_channels (bool): If True, use equalization by channels separately,

else convert image to YCbCr representation and use equalization by `Y` channel.

mask (np.ndarray, callable): If given, only the pixels selected by

the mask are included in the analysis. Maybe 1 channel or 3 channel array or callable.

Function signature must include `image` argument.

mask_params (list of str): Params for mask function.

Targets:

image

Image types:

uint8

"""

def __init__(

self,

mode="cv",

by_channels=True,

mask=None,

mask_params=(),

always_apply=False,

p=0.5,

):

modes = ["cv", "pil"]

if mode not in modes:

raise ValueError("Unsupported equalization mode. Supports: {}. "

"Got: {}".format(modes, mode))

super(Equalize, self).__init__(always_apply, p)

self.mode = mode

self.by_channels = by_channels

self.mask = mask

self.mask_params = mask_params

def apply(self, image, mask=None, **params):

return F.equalize(image,

mode=self.mode,

by_channels=self.by_channels,

mask=mask)

def get_params_dependent_on_targets(self, params):

if not callable(self.mask):

return {"mask": self.mask}

return {"mask": self.mask(**params)}

@property

def targets_as_params(self):

return ["image"] + list(self.mask_params)

def get_transform_init_args_names(self):

return ("mode", "by_channels")

功能:傅里叶域自适应(Fourier Domain Adaptation from https://github.com/YanchaoYang/FDA),实现简单的风格迁移

参数说明:

reference_images (List[str] or List(np.ndarray)): 参考图像列表或者图像路径列表。若提供多个参考图像(列表长度大于1),将从中随机选择一张图像风格进行变换。

beta_limit (float or tuple of float): 论文中的系数,建议小于0.3,默认值为0.1。

read_fn (Callable): 读图的可调用函数,返回numpy array格式。默认值为read_rgb_image。

# 默认读图函数,对应的reference_images参数应为路径列表:

def read_rgb_image(path):

image = cv2.imread(path, cv2.IMREAD_COLOR)

return cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# 若参考图像已经是numpy array格式,read_fn函数恒等读入即可(lambda x: x):

target_image = np.random.randint(0, 256, [100, 100, 3], dtype=np.uint8)

aug = A.FDA([target_image], read_fn=lambda x: x)

class FDA(ImageOnlyTransform):

"""

Fourier Domain Adaptation from https://github.com/YanchaoYang/FDA

Simple "style transfer".

Args:

reference_images (List[str] or List(np.ndarray)): List of file paths for reference images

or list of reference images.

beta_limit (float or tuple of float): coefficient beta from paper. Recommended less 0.3.

read_fn (Callable): Used-defined function to read image. Function should get image path and return numpy

array of image pixels.

Targets:

image

Image types:

uint8, float32

Reference:

https://github.com/YanchaoYang/FDA

https://openaccess.thecvf.com/content_CVPR_2020/papers/Yang_FDA_Fourier_Domain_Adaptation_for_Semantic_Segmentation_CVPR_2020_paper.pdf

Example:

>>> import numpy as np

>>> import albumentations as A

>>> image = np.random.randint(0, 256, [100, 100, 3], dtype=np.uint8)

>>> target_image = np.random.randint(0, 256, [100, 100, 3], dtype=np.uint8)

>>> aug = A.Compose([A.FDA([target_image], p=1, read_fn=lambda x: x)])

>>> result = aug(image=image)

"""

def __init__(

self,

reference_images: List[Union[str, np.ndarray]],

beta_limit=0.1,

read_fn=read_rgb_image,

always_apply=False,

p=0.5,

):

super(FDA, self).__init__(always_apply=always_apply, p=p)

self.reference_images = reference_images

self.read_fn = read_fn

self.beta_limit = to_tuple(beta_limit, low=0)

def apply(self, img, target_image=None, beta=0.1, **params):

return fourier_domain_adaptation(img=img, target_img=target_image, beta=beta)

def get_params_dependent_on_targets(self, params):

img = params["image"]

target_img = self.read_fn(random.choice(self.reference_images))

target_img = cv2.resize(target_img, dsize=(img.shape[1], img.shape[0]))

return {"target_image": target_img}

def get_params(self):

return {"beta": random.uniform(self.beta_limit[0], self.beta_limit[1])}

@property

def targets_as_params(self):

return ["image"]

def get_transform_init_args_names(self):

return ("reference_images", "beta_limit", "read_fn")

def _to_dict(self):

raise NotImplementedError("FDA can not be serialized.")

用已有图像跑的结果( beta_limit=0.1 ):

官方工程中的结果:

功能: RGB图像通过FancyPCA色彩增强。FancyPCA的色彩失真更小。

参数说明:

alpha (float): 影响特征值和特征向量的扰动程度。

class FancyPCA(ImageOnlyTransform):

"""Augment RGB image using FancyPCA from Krizhevsky's paper

"ImageNet Classification with Deep Convolutional Neural Networks"

Args:

alpha (float): how much to perturb/scale the eigen vecs and vals.

scale is samples from gaussian distribution (mu=0, sigma=alpha)

Targets:

image

Image types:

3-channel uint8 images only

Credit:

http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

https://deshanadesai.github.io/notes/Fancy-PCA-with-Scikit-Image

https://pixelatedbrian.github.io/2018-04-29-fancy_pca/

"""

def __init__(self, alpha=0.1, always_apply=False, p=0.5):

super(FancyPCA, self).__init__(always_apply=always_apply, p=p)

self.alpha = alpha

def apply(self, img, alpha=0.1, **params):

img = F.fancy_pca(img, alpha)

return img

def get_params(self):

return {"alpha": random.gauss(0, self.alpha)}

def get_transform_init_args_names(self):

return ("alpha", )

附官方网站的可视化结果:https://pixelatedbrian.github.io/2018-04-29-fancy_pca/

以下是三种场景变换结果,中间一列是FancyPCA结果,色彩失真度很小。

功能:像素值乘以最大值,将图像由浮点型变为整型。

相反的函数为ToFloat,除以最大值,由整型变为浮点型([0, 1.0])

# source code

class FromFloat(ImageOnlyTransform):

"""Take an input array where all values should lie in the range [0, 1.0], multiply them by `max_value` and then

cast the resulted value to a type specified by `dtype`. If `max_value` is None the transform will try to infer

the maximum value for the data type from the `dtype` argument.

This is the inverse transform for :class:`~albumentations.augmentations.transforms.ToFloat`.

Args:

max_value (float): maximum possible input value. Default: None.

dtype (string or numpy data type): data type of the output. See the `'Data types' page from the NumPy docs`_.

Default: 'uint16'.

p (float): probability of applying the transform. Default: 1.0.

Targets:

image

Image types:

float32

.. _'Data types' page from the NumPy docs:

https://docs.scipy.org/doc/numpy/user/basics.types.html

"""

def __init__(self, dtype="uint16", max_value=None, always_apply=False, p=1.0):

super(FromFloat, self).__init__(always_apply, p)

self.dtype = np.dtype(dtype)

self.max_value = max_value

def apply(self, img, **params):

return F.from_float(img, self.dtype, self.max_value)

def get_transform_init_args(self):

return {"dtype": self.dtype.name, "max_value": self.max_value}

# F.from_float()

def from_float(img, dtype, max_value=None):

if max_value is None:

try:

max_value = MAX_VALUES_BY_DTYPE[dtype]

except KeyError:

raise RuntimeError(

"Can't infer the maximum value for dtype {}. You need to specify the maximum value manually by "

"passing the max_value argument".format(dtype)

)

return (img * max_value).astype(dtype)

# MAX_VALUES_BY_DTYPE = {

# np.dtype("uint8"): 255,

# np.dtype("uint16"): 65535,

# np.dtype("uint32"): 4294967295,

# np.dtype("float32"): 1.0,

# }

功能: 加高斯噪声

参数说明:

var_limit ((float, float) or float): 噪声方差范围. 若为单个float数值,将转换为区间范围 (0, var_limit). 默认值: (10.0, 50.0).

mean (float): 噪声均值. 默认值: 0

per_channel (bool): 每个通道是否独立采样。默认值: True

# source code

class GaussNoise(ImageOnlyTransform):

"""Apply gaussian noise to the input image.

Args:

var_limit ((float, float) or float): variance range for noise. If var_limit is a single float, the range

will be (0, var_limit). Default: (10.0, 50.0).

mean (float): mean of the noise. Default: 0

per_channel (bool): if set to True, noise will be sampled for each channel independently.

Otherwise, the noise will be sampled once for all channels. Default: True

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(self, var_limit=(10.0, 50.0), mean=0, per_channel=True, always_apply=False, p=0.5):

super(GaussNoise, self).__init__(always_apply, p)

if isinstance(var_limit, (tuple, list)):

if var_limit[0] < 0:

raise ValueError("Lower var_limit should be non negative.")

if var_limit[1] < 0:

raise ValueError("Upper var_limit should be non negative.")

self.var_limit = var_limit

elif isinstance(var_limit, (int, float)):

if var_limit < 0:

raise ValueError("var_limit should be non negative.")

self.var_limit = (0, var_limit)

else:

raise TypeError(

"Expected var_limit type to be one of (int, float, tuple, list), got {}".format(type(var_limit))

)

self.mean = mean

self.per_channel = per_channel

def apply(self, img, gauss=None, **params):

return F.gauss_noise(img, gauss=gauss)

def get_params_dependent_on_targets(self, params):

image = params["image"]

var = random.uniform(self.var_limit[0], self.var_limit[1])

sigma = var ** 0.5

random_state = np.random.RandomState(random.randint(0, 2 ** 32 - 1))

if self.per_channel:

gauss = random_state.normal(self.mean, sigma, image.shape)

else:

gauss = random_state.normal(self.mean, sigma, image.shape[:2])

if len(image.shape) == 3:

gauss = np.expand_dims(gauss, -1)

return {"gauss": gauss}

@property

def targets_as_params(self):

return ["image"]

def get_transform_init_args_names(self):

return ("var_limit", "per_channel", "mean")

var_limit值越大,噪声越明显。

功能:用高斯滤波器模糊图像。

参数说明:

- blur_limit (int, (int, int)): 模糊图像的最大高斯核尺寸。必须为0或者奇数,有效值范围:[0, inf)。

如果值为0,将根据sigma的值计算尺寸大小,计算公式为round(sigma * (3 if img.dtype == np.uint8 else 4) * 2 + 1) + 1。

如果该参数为单个数值,将转化为范围(0, blur_limit)内随机取值。

默认值:(3, 7) - sigma_limit (float, (float, float)):高斯核标准差,值有效范围:[0, inf)。

如果该参数为单个数值,将转化为范围(0, sigma_limit)内随机取值。

如果值为0,将根据ksize的值计算大小,计算公式为sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8。

默认值:0

若blur_limit 和sigma_limit 均为0,将修改blur_limit的值为3。

# source code

class GaussianBlur(ImageOnlyTransform):

"""Blur the input image using a Gaussian filter with a random kernel size.

Args:

blur_limit (int, (int, int)): maximum Gaussian kernel size for blurring the input image.

Must be zero or odd and in range [0, inf). If set to 0 it will be computed from sigma

as `round(sigma * (3 if img.dtype == np.uint8 else 4) * 2 + 1) + 1`.

If set single value `blur_limit` will be in range (0, blur_limit).

Default: (3, 7).

sigma_limit (float, (float, float)): Gaussian kernel standard deviation. Must be in range [0, inf).

If set single value `sigma_limit` will be in range (0, sigma_limit).

If set to 0 sigma will be computed as `sigma = 0.3*((ksize-1)*0.5 - 1) + 0.8`. Default: 0.

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

blur_limit: ScaleIntType = (3, 7),

sigma_limit: ScaleFloatType = 0,

always_apply: bool = False,

p: float = 0.5,

):

super().__init__(always_apply, p)

self.blur_limit = to_tuple(blur_limit, 0)

self.sigma_limit = to_tuple(sigma_limit if sigma_limit is not None else 0, 0)

if self.blur_limit[0] == 0 and self.sigma_limit[0] == 0:

self.blur_limit = 3, max(3, self.blur_limit[1])

warnings.warn(

"blur_limit and sigma_limit minimum value can not be both equal to 0. "

"blur_limit minimum value changed to 3."

)

if (self.blur_limit[0] != 0 and self.blur_limit[0] % 2 != 1) or (

self.blur_limit[1] != 0 and self.blur_limit[1] % 2 != 1

):

raise ValueError("GaussianBlur supports only odd blur limits.")

def apply(self, img: np.ndarray, ksize: int = 3, sigma: float = 0, **params) -> np.ndarray:

return F.gaussian_blur(img, ksize, sigma=sigma)

def get_params(self) -> Dict[str, float]:

ksize = random.randrange(self.blur_limit[0], self.blur_limit[1] + 1)

if ksize != 0 and ksize % 2 != 1:

ksize = (ksize + 1) % (self.blur_limit[1] + 1)

return {"ksize": ksize, "sigma": random.uniform(*self.sigma_limit)}

def get_transform_init_args_names(self) -> Tuple[str, str]:

return ("blur_limit", "sigma_limit")

不同高斯核尺寸模糊效果(sigma取默认值0,根据ksize计算得到):

功能:添加玻璃噪音。

参数说明:

- sigma (float): 高斯核的标准差。默认值:0.7

- max_delta (int): 像素交换的最大距离。默认值:4

- iterations (int): 重复次数,有效值范围:[1, inf).默认值:2

- mode (str): 计算模式(fast或exact),默认值:fast。影响运行效率。

# source code

class GlassBlur(Blur):

"""Apply glass noise to the input image.

Args:

sigma (float): standard deviation for Gaussian kernel.

max_delta (int): max distance between pixels which are swapped.

iterations (int): number of repeats.

Should be in range [1, inf). Default: (2).

mode (str): mode of computation: fast or exact. Default: "fast".

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

Reference:

| https://arxiv.org/abs/1903.12261

| https://github.com/hendrycks/robustness/blob/master/ImageNet-C/create_c/make_imagenet_c.py

"""

def __init__(

self,

sigma: float = 0.7,

max_delta: int = 4,

iterations: int = 2,

always_apply: bool = False,

mode: str = "fast",

p: float = 0.5,

):

super().__init__(always_apply=always_apply, p=p)

if iterations < 1:

raise ValueError(f"Iterations should be more or equal to 1, but we got {iterations}")

if mode not in ["fast", "exact"]:

raise ValueError(f"Mode should be 'fast' or 'exact', but we got {mode}")

self.sigma = sigma

self.max_delta = max_delta

self.iterations = iterations

self.mode = mode

def apply(self, img: np.ndarray, dxy: np.ndarray = None, **params) -> np.ndarray: # type: ignore

assert dxy is not None

return F.glass_blur(img, self.sigma, self.max_delta, self.iterations, dxy, self.mode)

def get_params_dependent_on_targets(self, params: Dict[str, Any]) -> Dict[str, np.ndarray]:

img = params["image"]

# generate array containing all necessary values for transformations

width_pixels = img.shape[0] - self.max_delta * 2

height_pixels = img.shape[1] - self.max_delta * 2

total_pixels = width_pixels * height_pixels

dxy = random_utils.randint(-self.max_delta, self.max_delta, size=(total_pixels, self.iterations, 2))

return {"dxy": dxy}

def get_transform_init_args_names(self) -> Tuple[str, str, str]:

return ("sigma", "max_delta", "iterations")

@property

def targets_as_params(self) -> List[str]:

return ["image"]

max_delta 和iterations参数值越大,毛玻璃效果越重。

功能:直方图匹配。调整输入图像的像素值,使其直方图匹配参考图像的直方图。每个通道独立进行,要求输入图与参考图通道数一致 。

直方图匹配可以作为图像处理(例如特征匹配)的轻量级归一化,尤其是图像的来源或条件不同时(例如照明)。

参数说明:(参数与FDA变换参数类似,FDA 中p=0.5,HistogramMatching中默认p=1)

-

reference_images (List[str] or List(np.ndarray)): 参考图像列表或者图像路径列表。若提供多个参考图像(列表长度大于1),将从中随机选择一张图像风格进行变换。

-

blend_ratio (float, float): 原图与变换图像加权叠加的加权因子。

blend_ratio_sample是直方图匹配图像的权重因子,原图权重因子是1 - blend_ratio_sample。img = cv2.addWeighted( matched, blend_ratio, img, 1 - blend_ratio, 0, dtype=get_opencv_dtype_from_numpy(img.dtype), ) -

read_fn (Callable): 读图的可调用函数,返回numpy array格式。默认值为read_rgb_image。

# 默认读图函数,对应的reference_images参数应为路径列表:

def read_rgb_image(path):

image = cv2.imread(path, cv2.IMREAD_COLOR)

return cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# 若参考图像已经是numpy array格式,read_fn函数恒等读入即可(lambda x: x):

target_image = np.random.randint(0, 256, [100, 100, 3], dtype=np.uint8)

aug = A.HistogramMatching([target_image], read_fn=lambda x: x)

# source code

class HistogramMatching(ImageOnlyTransform):

"""

Apply histogram matching. It manipulates the pixels of an input image so that its histogram matches

the histogram of the reference image. If the images have multiple channels, the matching is done independently

for each channel, as long as the number of channels is equal in the input image and the reference.

Histogram matching can be used as a lightweight normalisation for image processing,

such as feature matching, especially in circumstances where the images have been taken from different

sources or in different conditions (i.e. lighting).

See:

https://scikit-image.org/docs/dev/auto_examples/color_exposure/plot_histogram_matching.html

Args:

reference_images (List[str] or List(np.ndarray)): List of file paths for reference images

or list of reference images.

blend_ratio (float, float): Tuple of min and max blend ratio. Matched image will be blended with original

with random blend factor for increased diversity of generated images.

read_fn (Callable): Used-defined function to read image. Function should get image path and return numpy

array of image pixels.

p (float): probability of applying the transform. Default: 1.0.

Targets:

image

Image types:

uint8, uint16, float32

"""

def __init__(

self,

reference_images: List[Union[str, np.ndarray]],

blend_ratio=(0.5, 1.0),

read_fn=read_rgb_image,

always_apply=False,

p=0.5,

):

super().__init__(always_apply=always_apply, p=p)

self.reference_images = reference_images

self.read_fn = read_fn

self.blend_ratio = blend_ratio

def apply(self, img, reference_image=None, blend_ratio=0.5, **params):

return apply_histogram(img, reference_image, blend_ratio)

def get_params(self):

return {

"reference_image": self.read_fn(random.choice(self.reference_images)),

"blend_ratio": random.uniform(self.blend_ratio[0], self.blend_ratio[1]),

}

def get_transform_init_args_names(self):

return ("reference_images", "blend_ratio", "read_fn")

def _to_dict(self):

raise NotImplementedError("HistogramMatching can not be serialized.")

可以看到中间图作为target之后,变换后的图像也偏绿色了。

下图来源:https://scikit-image.org/docs/dev/auto_examples/color_exposure/plot_histogram_matching.html

功能:随机改变图像的色调,饱和度,亮度。

参数说明: hue_shift_limit ,sat_shift_limit ,val_shift_limit 分别表示色调、饱和度、亮度变化范围。若输入是单个数字,将转化为区间( -input_val, input_val),在此区间内随机取值。

若任务对色彩敏感的话,色相hue_shift_limit 范围要小一点。

# source code

class HueSaturationValue(ImageOnlyTransform):

"""Randomly change hue, saturation and value of the input image.

Args:

hue_shift_limit ((int, int) or int): range for changing hue. If hue_shift_limit is a single int, the range

will be (-hue_shift_limit, hue_shift_limit). Default: (-20, 20).

sat_shift_limit ((int, int) or int): range for changing saturation. If sat_shift_limit is a single int,

the range will be (-sat_shift_limit, sat_shift_limit). Default: (-30, 30).

val_shift_limit ((int, int) or int): range for changing value. If val_shift_limit is a single int, the range

will be (-val_shift_limit, val_shift_limit). Default: (-20, 20).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

hue_shift_limit=20,

sat_shift_limit=30,

val_shift_limit=20,

always_apply=False,

p=0.5,

):

super(HueSaturationValue, self).__init__(always_apply, p)

self.hue_shift_limit = to_tuple(hue_shift_limit)

self.sat_shift_limit = to_tuple(sat_shift_limit)

self.val_shift_limit = to_tuple(val_shift_limit)

def apply(self, image, hue_shift=0, sat_shift=0, val_shift=0, **params):

if not is_rgb_image(image) and not is_grayscale_image(image):

raise TypeError(

"HueSaturationValue transformation expects 1-channel or 3-channel images."

)

return F.shift_hsv(image, hue_shift, sat_shift, val_shift)

def get_params(self):

return {

"hue_shift":

random.uniform(self.hue_shift_limit[0], self.hue_shift_limit[1]),

"sat_shift":

random.uniform(self.sat_shift_limit[0], self.sat_shift_limit[1]),

"val_shift":

random.uniform(self.val_shift_limit[0], self.val_shift_limit[1]),

}

def get_transform_init_args_names(self):

return ("hue_shift_limit", "sat_shift_limit", "val_shift_limit")

功能:加相机传感器噪声。

参数说明: color_shift (float, float): 色调hue变化范围。

intensity ((float, float): 控制颜色强度和亮度噪声的乘数因子。

# source code

class ISONoise(ImageOnlyTransform):

"""

Apply camera sensor noise.

Args:

color_shift (float, float): variance range for color hue change.

Measured as a fraction of 360 degree Hue angle in HLS colorspace.

intensity ((float, float): Multiplicative factor that control strength

of color and luminace noise.

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8

"""

def __init__(self,

color_shift=(0.01, 0.05),

intensity=(0.1, 0.5),

always_apply=False,

p=0.5):

super(ISONoise, self).__init__(always_apply, p)

self.intensity = intensity

self.color_shift = color_shift

def apply(self,

img,

color_shift=0.05,

intensity=1.0,

random_state=None,

**params):

return F.iso_noise(img, color_shift, intensity,

np.random.RandomState(random_state))

def get_params(self):

return {

"color_shift": random.uniform(self.color_shift[0],

self.color_shift[1]),

"intensity": random.uniform(self.intensity[0], self.intensity[1]),

"random_state": random.randint(0, 65536),

}

def get_transform_init_args_names(self):

return ("intensity", "color_shift")

为了可视化明显,参数设置较大。

输入参数为区间,所以图中color_shift=0.02表示调用时color_shift=(0.02, 0.02)。

JpegCompression已弃用,功能同ImageCompression。

功能:jpg和webp格式图像压缩

参数说明: quality_lower (float): 图像最低质量. jpg in [0, 100],webp in [1, 100].

quality_upper (float): 图像最高质量. jpg in [0, 100],webp in [1, 100].

compression_type (ImageCompressionType): 压缩类型,内置两个选项: ImageCompressionType.JPEG or ImageCompressionType.WEBP. 默认类型: ImageCompressionType.JPEG

压缩前后分辨率不会变化。

# source code

class ImageCompression(ImageOnlyTransform):

"""Decrease Jpeg, WebP compression of an image.

Args:

quality_lower (float): lower bound on the image quality.

Should be in [0, 100] range for jpeg and [1, 100] for webp.

quality_upper (float): upper bound on the image quality.

Should be in [0, 100] range for jpeg and [1, 100] for webp.

compression_type (ImageCompressionType): should be ImageCompressionType.JPEG or ImageCompressionType.WEBP.

Default: ImageCompressionType.JPEG

Targets:

image

Image types:

uint8, float32

"""

class ImageCompressionType(IntEnum):

JPEG = 0

WEBP = 1

def __init__(

self,

quality_lower=99,

quality_upper=100,

compression_type=ImageCompressionType.JPEG,

always_apply=False,

p=0.5,

):

super(ImageCompression, self).__init__(always_apply, p)

self.compression_type = ImageCompression.ImageCompressionType(

compression_type)

low_thresh_quality_assert = 0

if self.compression_type == ImageCompression.ImageCompressionType.WEBP:

low_thresh_quality_assert = 1

if not low_thresh_quality_assert <= quality_lower <= 100:

raise ValueError(

"Invalid quality_lower. Got: {}".format(quality_lower))

if not low_thresh_quality_assert <= quality_upper <= 100:

raise ValueError(

"Invalid quality_upper. Got: {}".format(quality_upper))

self.quality_lower = quality_lower

self.quality_upper = quality_upper

def apply(self, image, quality=100, image_type=".jpg", **params):

if not image.ndim == 2 and image.shape[-1] not in (1, 3, 4):

raise TypeError(

"ImageCompression transformation expects 1, 3 or 4 channel images."

)

return F.image_compression(image, quality, image_type)

def get_params(self):

image_type = ".jpg"

if self.compression_type == ImageCompression.ImageCompressionType.WEBP:

image_type = ".webp"

return {

"quality": random.randint(self.quality_lower, self.quality_upper),

"image_type": image_type,

}

def get_transform_init_args(self):

return {

"quality_lower": self.quality_lower,

"quality_upper": self.quality_upper,

"compression_type": self.compression_type.value,

}

功能:255 - 像素值

# F.invert(img)

def invert(img):

return 255 - img

功能: 使用中值滤波实现图像模糊。

参数说明:

blur_limit (int or Tuple[int, int]):模糊核大小,范围起止值必须是奇数。有效区间:[3, inf),默认值:(3, 7)

# source code

class MedianBlur(Blur):

"""Blur the input image using a median filter with a random aperture linear size.

Args:

blur_limit (int): maximum aperture linear size for blurring the input image.

Must be odd and in range [3, inf). Default: (3, 7).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(self, blur_limit: ScaleIntType = 7, always_apply: bool = False, p: float = 0.5):

super().__init__(blur_limit, always_apply, p)

if self.blur_limit[0] % 2 != 1 or self.blur_limit[1] % 2 != 1:

raise ValueError("MedianBlur supports only odd blur limits.")

def apply(self, img: np.ndarray, ksize: int = 3, **params) -> np.ndarray:

return F.median_blur(img, ksize)

功能: 给图像应用运动模糊。

参数说明:

blur_limit (int or Tuple[int, int]):模糊核大小,范围起止值必须是奇数。有效区间:[3, inf),默认值:(3, 7)

allow_shifted (bool):核是否有shift,若为True,表示创建无shift的kernel,若为False,则将kernel随机shift。默认值:True。

# source code

class MotionBlur(Blur):

"""Apply motion blur to the input image using a random-sized kernel.

Args:

blur_limit (int): maximum kernel size for blurring the input image.

Should be in range [3, inf). Default: (3, 7).

allow_shifted (bool): if set to true creates non shifted kernels only,

otherwise creates randomly shifted kernels. Default: True.

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

blur_limit: ScaleIntType = 7,

allow_shifted: bool = True,

always_apply: bool = False,

p: float = 0.5,

):

super().__init__(blur_limit=blur_limit, always_apply=always_apply, p=p)

self.allow_shifted = allow_shifted

if not allow_shifted and self.blur_limit[0] % 2 != 1 or self.blur_limit[1] % 2 != 1:

raise ValueError(f"Blur limit must be odd when centered=True. Got: {self.blur_limit}")

def get_transform_init_args_names(self) -> Tuple[str, ...]:

return super().get_transform_init_args_names() + ("allow_shifted",)

def apply(self, img: np.ndarray, kernel: np.ndarray = None, **params) -> np.ndarray: # type: ignore

return FMain.convolve(img, kernel=kernel)

def get_params(self) -> Dict[str, Any]:

ksize = random.choice(np.arange(self.blur_limit[0], self.blur_limit[1] + 1, 2))

if ksize <= 2:

raise ValueError("ksize must be > 2. Got: {}".format(ksize))

kernel = np.zeros((ksize, ksize), dtype=np.uint8)

x1, x2 = random.randint(0, ksize - 1), random.randint(0, ksize - 1)

if x1 == x2:

y1, y2 = random.sample(range(ksize), 2)

else:

y1, y2 = random.randint(0, ksize - 1), random.randint(0, ksize - 1)

def make_odd_val(v1, v2):

len_v = abs(v1 - v2) + 1

if len_v % 2 != 1:

if v2 > v1:

v2 -= 1

else:

v1 -= 1

return v1, v2

if not self.allow_shifted:

x1, x2 = make_odd_val(x1, x2)

y1, y2 = make_odd_val(y1, y2)

xc = (x1 + x2) / 2

yc = (y1 + y2) / 2

center = ksize / 2 - 0.5

dx = xc - center

dy = yc - center

x1, x2 = [int(i - dx) for i in [x1, x2]]

y1, y2 = [int(i - dy) for i in [y1, y2]]

cv2.line(kernel, (x1, y1), (x2, y2), 1, thickness=1)

# Normalize kernel

return {"kernel": kernel.astype(np.float32) / np.sum(kernel)}

注意并不是blur_limit值越大代表图像越模糊,blur_limit仅表示ksize的取值范围,代码中模糊核的产生是在(0,ksize)范围内采样产生的,所以最后采样的值可大可小。blur_limit值大小仅代表模糊程度的上限值。

即使blur_limit参数一致,多运行几次代码,结果图的模糊程度也不尽相同,但是模糊程度最大的结果图一定在blur_limit值最大的函数中产生。

功能:将图像乘以一个随机数或数组。

参数说明: multiplier (float or tuple of floats):图像要乘的数。若输入是区间,乘数因子将在区间[multiplier[0], multiplier[1])内随机采样。 Default: (0.9, 1.1).

per_channel (bool): 是否对每个通道单独操作。若为True,每个通道乘数因子均不同。 Default False.

elementwise (bool): 是否是像素级别操作,若为True,每个像素的乘性因子均随机生成。Default False.

# source code

class MultiplicativeNoise(ImageOnlyTransform):

"""Multiply image to random number or array of numbers.

Args:

multiplier (float or tuple of floats): If single float image will be multiplied to this number.

If tuple of float multiplier will be in range `[multiplier[0], multiplier[1])`. Default: (0.9, 1.1).

per_channel (bool): If `False`, same values for all channels will be used.

If `True` use sample values for each channels. Default False.

elementwise (bool): If `False` multiply multiply all pixels in an image with a random value sampled once.

If `True` Multiply image pixels with values that are pixelwise randomly sampled. Defaule: False.

Targets:

image

Image types:

Any

"""

def __init__(

self,

multiplier=(0.9, 1.1),

per_channel=False,

elementwise=False,

always_apply=False,

p=0.5,

):

super(MultiplicativeNoise, self).__init__(always_apply, p)

self.multiplier = to_tuple(multiplier, multiplier)

self.per_channel = per_channel

self.elementwise = elementwise

def apply(self, img, multiplier=np.array([1]), **kwargs):

return F.multiply(img, multiplier)

def get_params_dependent_on_targets(self, params):

if self.multiplier[0] == self.multiplier[1]:

return {"multiplier": np.array([self.multiplier[0]])}

img = params["image"]

h, w = img.shape[:2]

if self.per_channel:

c = 1 if F.is_grayscale_image(img) else img.shape[-1]

else:

c = 1

if self.elementwise:

shape = [h, w, c]

else:

shape = [c]

multiplier = np.random.uniform(self.multiplier[0], self.multiplier[1], shape)

if F.is_grayscale_image(img) and img.ndim == 2:

multiplier = np.squeeze(multiplier)

return {"multiplier": multiplier}

@property

def targets_as_params(self):

return ["image"]

def get_transform_init_args_names(self):

return "multiplier", "per_channel", "elementwise"

elementwise =True时噪点较多,因为每个像素独立。

功能:图像归一化

归一化公式:img = (img - mean * max_pixel_value) / (std * max_pixel_value)

等同于:img = (img / max_pixel_value - mean) / std

默认参数:

mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225),

max_pixel_value=255.0

class Normalize(ImageOnlyTransform):

"""Normalization is applied by the formula: `img = (img - mean * max_pixel_value) / (std * max_pixel_value)`

Args:

mean (float, list of float): mean values

std (float, list of float): std values

max_pixel_value (float): maximum possible pixel value

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

mean=(0.485, 0.456, 0.406),

std=(0.229, 0.224, 0.225),

max_pixel_value=255.0,

always_apply=False,

p=1.0,

):

super(Normalize, self).__init__(always_apply, p)

self.mean = mean

self.std = std

self.max_pixel_value = max_pixel_value

def apply(self, image, **params):

return F.normalize(image, self.mean, self.std, self.max_pixel_value)

def get_transform_init_args_names(self):

return ("mean", "std", "max_pixel_value")

功能:

# source code

功能:减少每个颜色通道的位数,达到色调分层。所以参数num_bits有效范围[0, 8]。

参数: num_bits ((int, int) or int, or list of ints [r, g, b], or list of ints [[r1, r1], [g1, g2], [b1, b2]]): number of high bits.

num_bits 数字越小,色调分层越明显。有效值范围:[0, 8],默认值:4。

# source code

class Posterize(ImageOnlyTransform):

"""Reduce the number of bits for each color channel.

Args:

num_bits ((int, int) or int,

or list of ints [r, g, b],

or list of ints [[r1, r1], [g1, g2], [b1, b2]]): number of high bits.

If num_bits is a single value, the range will be [num_bits, num_bits].

Must be in range [0, 8]. Default: 4.

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8

"""

def __init__(self, num_bits=4, always_apply=False, p=0.5):

super(Posterize, self).__init__(always_apply, p)

if isinstance(num_bits, (list, tuple)):

if len(num_bits) == 3:

self.num_bits = [to_tuple(i, 0) for i in num_bits]

else:

self.num_bits = to_tuple(num_bits, 0)

else:

self.num_bits = to_tuple(num_bits, num_bits)

def apply(self, image, num_bits=1, **params):

return F.posterize(image, num_bits)

def get_params(self):

if len(self.num_bits) == 3:

return {

"num_bits":

[random.randint(i[0], i[1]) for i in self.num_bits]

}

return {"num_bits": random.randint(self.num_bits[0], self.num_bits[1])}

def get_transform_init_args_names(self):

return ("num_bits", )

功能:RGB每个通道上值偏移

参数说明: r_shift_limit ,g_shift_limit ,b_shift_limit ((int, int) or int) 分别表示R、G、B通道上的值偏移,若输入为单个数字,将转化为区间(-shift_limit, shift_limit),最终应用的值在区间内随机采样获取。

# source code

class RGBShift(ImageOnlyTransform):

"""Randomly shift values for each channel of the input RGB image.

Args:

r_shift_limit ((int, int) or int): range for changing values for the red channel. If r_shift_limit is a single

int, the range will be (-r_shift_limit, r_shift_limit). Default: (-20, 20).

g_shift_limit ((int, int) or int): range for changing values for the green channel. If g_shift_limit is a

single int, the range will be (-g_shift_limit, g_shift_limit). Default: (-20, 20).

b_shift_limit ((int, int) or int): range for changing values for the blue channel. If b_shift_limit is a single

int, the range will be (-b_shift_limit, b_shift_limit). Default: (-20, 20).

p (float): probability of applying the transform. Default: 0.5.

Targets:

image

Image types:

uint8, float32

"""

def __init__(

self,

r_shift_limit=20,

g_shift_limit=20,

b_shift_limit=20,

always_apply=False,

p=0.5,

):

super(RGBShift, self).__init__(always_apply, p)

self.r_shift_limit = to_tuple(r_shift_limit)

self.g_shift_limit = to_tuple(g_shift_limit)

self.b_shift_limit = to_tuple(b_shift_limit)

def apply(self, image, r_shift=0, g_shift=0, b_shift=0, **params):

if not F.is_rgb_image(image):

raise TypeError("RGBShift transformation expects 3-channel images.")

return F.shift_rgb(image, r_shift, g_shift, b_shift)

def get_params(self):

return {

"r_shift": random.uniform(self.r_shift_limit[0], self.r_shift_limit[1]),

"g_shift": random.uniform(self.g_shift_limit[0], self.g_shift_limit[1]),

"b_shift": random.uniform(self.b_shift_limit[0], self.b_shift_limit[1]),

}

def get_transform_init_args_names(self):

return ("r_shift_limit", "g_shift_limit", "b_shift_limit")

# F.shift_rgb,对于逐像素应用统一计算公式可使用查找表方式(cv2.LUT,look up table)

def _shift_image_uint8(img, value):

max_value = MAX_VALUES_BY_DTYPE[img.dtype]

lut = np.arange(0, max_value + 1).astype("float32")

lut += value

lut = np.clip(lut, 0, max_value).astype(img.dtype)

return cv2.LUT(img, lut)

@preserve_shape

def _shift_rgb_uint8(img, r_shift, g_shift, b_shift):

if r_shift == g_shift == b_shift:

h, w, c = img.shape

img = img.reshape([h, w * c])

return _shift_image_uint8(img, r_shift)

result_img = np.empty_like(img)

shifts = [r_shift, g_shift, b_shift]

for i, shift in enumerate(shifts):

result_img[..., i] = _shift_image_uint8(img[..., i], shift)

return result_img

def shift_rgb(img, r_shift, g_shift, b_shift):

if img.dtype == np.uint8:

return _shift_rgb_uint8(img, r_shift, g_shift, b_shift)

return _shift_rgb_non_uint8(img, r_shift, g_shift, b_shift)

功能:随机改变输入图像的亮度、对比度。相似变换:ColorJitter

参数说明:

- brightness_limit ((float, float) or float): 亮度变化因子,若输入为单个数字,将转化为区间

(-limit, limit),默认值:(-0.2, 0.2) - contrast_limit ((float, float) or float): 对比度变化因子,若输入为单个数字,将转化为区间

(-limit, limit),默认值:(-0.2, 0.2) - brightness_by_max (Boolean): 若为 True表示通过图像 dtype 最大值调整对比度,若为False表示通过图像平均值调整对比度。默认值:True

# source code

class RandomBrightnessContrast(ImageOnlyTransform):

"""Randomly change brightness and contrast of the input image.

Args:

brightness_limit ((float, float) or float): factor range for changing brightness.