1. For循环版本:手工实现 卷积-池化-激活

代码:

import numpy as np

x = np.array([[-1, -1, -1, -1, -1, -1, -1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, -1, -1, 1, -1, -1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, -1, -1, -1, -1, -1, -1, -1]])

print("x=\n", x)

# 初始化 三个 卷积核

Kernel = [[0 for i in range(0, 3)] for j in range(0, 3)]

Kernel[0] = np.array([[1, -1, -1],

[-1, 1, -1],

[-1, -1, 1]])

Kernel[1] = np.array([[1, -1, 1],

[-1, 1, -1],

[1, -1, 1]])

Kernel[2] = np.array([[-1, -1, 1],

[-1, 1, -1],

[1, -1, -1]])

# --------------- 卷积 ---------------

stride = 1 # 步长

feature_map_h = 7 # 特征图的高

feature_map_w = 7 # 特征图的宽

feature_map = [0 for i in range(0, 3)] # 初始化3个特征图

for i in range(0, 3):

feature_map[i] = np.zeros((feature_map_h, feature_map_w)) # 初始化特征图

for h in range(feature_map_h): # 向下滑动,得到卷积后的固定行

for w in range(feature_map_w): # 向右滑动,得到卷积后的固定行的列

v_start = h * stride # 滑动窗口的起始行(高)

v_end = v_start + 3 # 滑动窗口的结束行(高)

h_start = w * stride # 滑动窗口的起始列(宽)

h_end = h_start + 3 # 滑动窗口的结束列(宽)

window = x[v_start:v_end, h_start:h_end] # 从图切出一个滑动窗口

for i in range(0, 3):

feature_map[i][h, w] = np.divide(np.sum(np.multiply(window, Kernel[i][:, :])), 9)

print("feature_map:\n", np.around(feature_map, decimals=2))

# --------------- 池化 ---------------

pooling_stride = 2 # 步长

pooling_h = 4 # 特征图的高

pooling_w = 4 # 特征图的宽

feature_map_pad_0 = [[0 for i in range(0, 8)] for j in range(0, 8)]

for i in range(0, 3): # 特征图 补 0 ,行 列 都要加 1 (因为上一层是奇数,池化窗口用的偶数)

feature_map_pad_0[i] = np.pad(feature_map[i], ((0, 1), (0, 1)), 'constant', constant_values=(0, 0))

# print("feature_map_pad_0 0:\n", np.around(feature_map_pad_0[0], decimals=2))

pooling = [0 for i in range(0, 3)]

for i in range(0, 3):

pooling[i] = np.zeros((pooling_h, pooling_w)) # 初始化特征图

for h in range(pooling_h): # 向下滑动,得到卷积后的固定行

for w in range(pooling_w): # 向右滑动,得到卷积后的固定行的列

v_start = h * pooling_stride # 滑动窗口的起始行(高)

v_end = v_start + 2 # 滑动窗口的结束行(高)

h_start = w * pooling_stride # 滑动窗口的起始列(宽)

h_end = h_start + 2 # 滑动窗口的结束列(宽)

for i in range(0, 3):

pooling[i][h, w] = np.max(feature_map_pad_0[i][v_start:v_end, h_start:h_end])

print("pooling:\n", np.around(pooling[0], decimals=2))

print("pooling:\n", np.around(pooling[1], decimals=2))

print("pooling:\n", np.around(pooling[2], decimals=2))

# --------------- 激活 ---------------

def relu(x):

return (abs(x) + x) / 2

relu_map_h = 7 # 特征图的高

relu_map_w = 7 # 特征图的宽

relu_map = [0 for i in range(0, 3)] # 初始化3个特征图

for i in range(0, 3):

relu_map[i] = np.zeros((relu_map_h, relu_map_w)) # 初始化特征图

for i in range(0, 3):

relu_map[i] = relu(feature_map[i])

print("relu map :\n",np.around(relu_map[0], decimals=2))

print("relu map :\n",np.around(relu_map[1], decimals=2))

print("relu map :\n",np.around(relu_map[2], decimals=2))

运行结果:

D:\python\python.exe D:\pythonprojects\text\main.py

x=

[[-1 -1 -1 -1 -1 -1 -1 -1 -1]

[-1 1 -1 -1 -1 -1 -1 1 -1]

[-1 -1 1 -1 -1 -1 1 -1 -1]

[-1 -1 -1 1 -1 1 -1 -1 -1]

[-1 -1 -1 -1 1 -1 -1 -1 -1]

[-1 -1 -1 1 -1 1 -1 -1 -1]

[-1 -1 1 -1 -1 -1 1 -1 -1]

[-1 1 -1 -1 -1 -1 -1 1 -1]

[-1 -1 -1 -1 -1 -1 -1 -1 -1]]

feature_map:

[[[ 0.78 -0.11 0.11 0.33 0.56 -0.11 0.33]

[-0.11 1. -0.11 0.33 -0.11 0.11 -0.11]

[ 0.11 -0.11 1. -0.33 0.11 -0.11 0.56]

[ 0.33 0.33 -0.33 0.56 -0.33 0.33 0.33]

[ 0.56 -0.11 0.11 -0.33 1. -0.11 0.11]

[-0.11 0.11 -0.11 0.33 -0.11 1. -0.11]

[ 0.33 -0.11 0.56 0.33 0.11 -0.11 0.78]]

[[ 0.33 -0.56 0.11 -0.11 0.11 -0.56 0.33]

[-0.56 0.56 -0.56 0.33 -0.56 0.56 -0.56]

[ 0.11 -0.56 0.56 -0.78 0.56 -0.56 0.11]

[-0.11 0.33 -0.78 1. -0.78 0.33 -0.11]

[ 0.11 -0.56 0.56 -0.78 0.56 -0.56 0.11]

[-0.56 0.56 -0.56 0.33 -0.56 0.56 -0.56]

[ 0.33 -0.56 0.11 -0.11 0.11 -0.56 0.33]]

[[ 0.33 -0.11 0.56 0.33 0.11 -0.11 0.78]

[-0.11 0.11 -0.11 0.33 -0.11 1. -0.11]

[ 0.56 -0.11 0.11 -0.33 1. -0.11 0.11]

[ 0.33 0.33 -0.33 0.56 -0.33 0.33 0.33]

[ 0.11 -0.11 1. -0.33 0.11 -0.11 0.56]

[-0.11 1. -0.11 0.33 -0.11 0.11 -0.11]

[ 0.78 -0.11 0.11 0.33 0.56 -0.11 0.33]]]

pooling:

[[1. 0.33 0.56 0.33]

[0.33 1. 0.33 0.56]

[0.56 0.33 1. 0.11]

[0.33 0.56 0.11 0.78]]

pooling:

[[0.56 0.33 0.56 0.33]

[0.33 1. 0.56 0.11]

[0.56 0.56 0.56 0.11]

[0.33 0.11 0.11 0.33]]

pooling:

[[0.33 0.56 1. 0.78]

[0.56 0.56 1. 0.33]

[1. 1. 0.11 0.56]

[0.78 0.33 0.56 0.33]]

relu map :

[[0.78 0. 0.11 0.33 0.56 0. 0.33]

[0. 1. 0. 0.33 0. 0.11 0. ]

[0.11 0. 1. 0. 0.11 0. 0.56]

[0.33 0.33 0. 0.56 0. 0.33 0.33]

[0.56 0. 0.11 0. 1. 0. 0.11]

[0. 0.11 0. 0.33 0. 1. 0. ]

[0.33 0. 0.56 0.33 0.11 0. 0.78]]

relu map :

[[0.33 0. 0.11 0. 0.11 0. 0.33]

[0. 0.56 0. 0.33 0. 0.56 0. ]

[0.11 0. 0.56 0. 0.56 0. 0.11]

[0. 0.33 0. 1. 0. 0.33 0. ]

[0.11 0. 0.56 0. 0.56 0. 0.11]

[0. 0.56 0. 0.33 0. 0.56 0. ]

[0.33 0. 0.11 0. 0.11 0. 0.33]]

relu map :

[[0.33 0. 0.56 0.33 0.11 0. 0.78]

[0. 0.11 0. 0.33 0. 1. 0. ]

[0.56 0. 0.11 0. 1. 0. 0.11]

[0.33 0.33 0. 0.56 0. 0.33 0.33]

[0.11 0. 1. 0. 0.11 0. 0.56]

[0. 1. 0. 0.33 0. 0.11 0. ]

[0.78 0. 0.11 0.33 0.56 0. 0.33]]

Process finished with exit code 0

2. Pytorch版本:调用函数完成 卷积-池化-激活

代码:

import numpy as np

import torch

import torch.nn as nn

x = torch.tensor([[[[-1, -1, -1, -1, -1, -1, -1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, -1, -1, 1, -1, -1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, -1, -1, -1, -1, -1, -1, -1]]]], dtype=torch.float)

print(x.shape)

print(x)

print("--------------- 卷积 ---------------")

conv1 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv1.weight.data = torch.Tensor([[[[1, -1, -1],

[-1, 1, -1],

[-1, -1, 1]]

]])

conv2 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv2.weight.data = torch.Tensor([[[[1, -1, 1],

[-1, 1, -1],

[1, -1, 1]]

]])

conv3 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv3.weight.data = torch.Tensor([[[[-1, -1, 1],

[-1, 1, -1],

[1, -1, -1]]

]])

feature_map1 = conv1(x)

feature_map2 = conv2(x)

feature_map3 = conv3(x)

print(feature_map1 / 9)

print(feature_map2 / 9)

print(feature_map3 / 9)

print("--------------- 池化 ---------------")

max_pool = nn.MaxPool2d(2, padding=0, stride=2) # Pooling

zeroPad = nn.ZeroPad2d(padding=(0, 1, 0, 1)) # pad 0 , Left Right Up Down

feature_map_pad_0_1 = zeroPad(feature_map1)

feature_pool_1 = max_pool(feature_map_pad_0_1)

feature_map_pad_0_2 = zeroPad(feature_map2)

feature_pool_2 = max_pool(feature_map_pad_0_2)

feature_map_pad_0_3 = zeroPad(feature_map3)

feature_pool_3 = max_pool(feature_map_pad_0_3)

print(feature_pool_1.size())

print(feature_pool_1 / 9)

print(feature_pool_2 / 9)

print(feature_pool_3 / 9)

print("--------------- 激活 ---------------")

activation_function = nn.ReLU()

feature_relu1 = activation_function(feature_map1)

feature_relu2 = activation_function(feature_map2)

feature_relu3 = activation_function(feature_map3)

print(feature_relu1 / 9)

print(feature_relu2 / 9)

print(feature_relu3 / 9)

运行结果

D:\python\python.exe D:\pythonprojects\1\main.py

torch.Size([1, 1, 9, 9])

tensor([[[[-1., -1., -1., -1., -1., -1., -1., -1., -1.],

[-1., 1., -1., -1., -1., -1., -1., 1., -1.],

[-1., -1., 1., -1., -1., -1., 1., -1., -1.],

[-1., -1., -1., 1., -1., 1., -1., -1., -1.],

[-1., -1., -1., -1., 1., -1., -1., -1., -1.],

[-1., -1., -1., 1., -1., 1., -1., -1., -1.],

[-1., -1., 1., -1., -1., -1., 1., -1., -1.],

[-1., 1., -1., -1., -1., -1., -1., 1., -1.],

[-1., -1., -1., -1., -1., -1., -1., -1., -1.]]]])

--------------- 卷积 ---------------

tensor([[[[ 0.7555, -0.1333, 0.0889, 0.3111, 0.5333, -0.1333, 0.3111],

[-0.1333, 0.9778, -0.1333, 0.3111, -0.1333, 0.0889, -0.1333],

[ 0.0889, -0.1333, 0.9778, -0.3556, 0.0889, -0.1333, 0.5333],

[ 0.3111, 0.3111, -0.3556, 0.5333, -0.3556, 0.3111, 0.3111],

[ 0.5333, -0.1333, 0.0889, -0.3556, 0.9778, -0.1333, 0.0889],

[-0.1333, 0.0889, -0.1333, 0.3111, -0.1333, 0.9778, -0.1333],

[ 0.3111, -0.1333, 0.5333, 0.3111, 0.0889, -0.1333, 0.7555]]]],

grad_fn=<DivBackward0>)

tensor([[[[ 0.3014, -0.5875, 0.0792, -0.1430, 0.0792, -0.5875, 0.3014],

[-0.5875, 0.5237, -0.5875, 0.3014, -0.5875, 0.5237, -0.5875],

[ 0.0792, -0.5875, 0.5237, -0.8097, 0.5237, -0.5875, 0.0792],

[-0.1430, 0.3014, -0.8097, 0.9681, -0.8097, 0.3014, -0.1430],

[ 0.0792, -0.5875, 0.5237, -0.8097, 0.5237, -0.5875, 0.0792],

[-0.5875, 0.5237, -0.5875, 0.3014, -0.5875, 0.5237, -0.5875],

[ 0.3014, -0.5875, 0.0792, -0.1430, 0.0792, -0.5875, 0.3014]]]],

grad_fn=<DivBackward0>)

tensor([[[[ 0.3468, -0.0976, 0.5691, 0.3468, 0.1246, -0.0976, 0.7913],

[-0.0976, 0.1246, -0.0976, 0.3468, -0.0976, 1.0135, -0.0976],

[ 0.5691, -0.0976, 0.1246, -0.3198, 1.0135, -0.0976, 0.1246],

[ 0.3468, 0.3468, -0.3198, 0.5691, -0.3198, 0.3468, 0.3468],

[ 0.1246, -0.0976, 1.0135, -0.3198, 0.1246, -0.0976, 0.5691],

[-0.0976, 1.0135, -0.0976, 0.3468, -0.0976, 0.1246, -0.0976],

[ 0.7913, -0.0976, 0.1246, 0.3468, 0.5691, -0.0976, 0.3468]]]],

grad_fn=<DivBackward0>)

--------------- 池化 ---------------

torch.Size([1, 1, 4, 4])

tensor([[[[0.9778, 0.3111, 0.5333, 0.3111],

[0.3111, 0.9778, 0.3111, 0.5333],

[0.5333, 0.3111, 0.9778, 0.0889],

[0.3111, 0.5333, 0.0889, 0.7555]]]], grad_fn=<DivBackward0>)

tensor([[[[0.5237, 0.3014, 0.5237, 0.3014],

[0.3014, 0.9681, 0.5237, 0.0792],

[0.5237, 0.5237, 0.5237, 0.0792],

[0.3014, 0.0792, 0.0792, 0.3014]]]], grad_fn=<DivBackward0>)

tensor([[[[0.3468, 0.5691, 1.0135, 0.7913],

[0.5691, 0.5691, 1.0135, 0.3468],

[1.0135, 1.0135, 0.1246, 0.5691],

[0.7913, 0.3468, 0.5691, 0.3468]]]], grad_fn=<DivBackward0>)

--------------- 激活 ---------------

tensor([[[[0.7555, 0.0000, 0.0889, 0.3111, 0.5333, 0.0000, 0.3111],

[0.0000, 0.9778, 0.0000, 0.3111, 0.0000, 0.0889, 0.0000],

[0.0889, 0.0000, 0.9778, 0.0000, 0.0889, 0.0000, 0.5333],

[0.3111, 0.3111, 0.0000, 0.5333, 0.0000, 0.3111, 0.3111],

[0.5333, 0.0000, 0.0889, 0.0000, 0.9778, 0.0000, 0.0889],

[0.0000, 0.0889, 0.0000, 0.3111, 0.0000, 0.9778, 0.0000],

[0.3111, 0.0000, 0.5333, 0.3111, 0.0889, 0.0000, 0.7555]]]],

grad_fn=<DivBackward0>)

tensor([[[[0.3014, 0.0000, 0.0792, 0.0000, 0.0792, 0.0000, 0.3014],

[0.0000, 0.5237, 0.0000, 0.3014, 0.0000, 0.5237, 0.0000],

[0.0792, 0.0000, 0.5237, 0.0000, 0.5237, 0.0000, 0.0792],

[0.0000, 0.3014, 0.0000, 0.9681, 0.0000, 0.3014, 0.0000],

[0.0792, 0.0000, 0.5237, 0.0000, 0.5237, 0.0000, 0.0792],

[0.0000, 0.5237, 0.0000, 0.3014, 0.0000, 0.5237, 0.0000],

[0.3014, 0.0000, 0.0792, 0.0000, 0.0792, 0.0000, 0.3014]]]],

grad_fn=<DivBackward0>)

tensor([[[[0.3468, 0.0000, 0.5691, 0.3468, 0.1246, 0.0000, 0.7913],

[0.0000, 0.1246, 0.0000, 0.3468, 0.0000, 1.0135, 0.0000],

[0.5691, 0.0000, 0.1246, 0.0000, 1.0135, 0.0000, 0.1246],

[0.3468, 0.3468, 0.0000, 0.5691, 0.0000, 0.3468, 0.3468],

[0.1246, 0.0000, 1.0135, 0.0000, 0.1246, 0.0000, 0.5691],

[0.0000, 1.0135, 0.0000, 0.3468, 0.0000, 0.1246, 0.0000],

[0.7913, 0.0000, 0.1246, 0.3468, 0.5691, 0.0000, 0.3468]]]],

grad_fn=<DivBackward0>)

Process finished with exit code 0

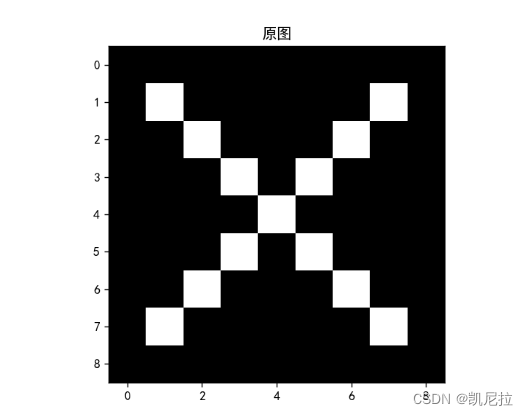

3.可视化:了解数字与图像之间的关系

import torch

import torch.nn as nn

import matplotlib.pyplot as plt

plt.rcParams['font.sans-serif']=['SimHei'] #用来正常显示中文标签

plt.rcParams['axes.unicode_minus']=False #用来正常显示负号 #有中文出现的情况,需要u'内容

x = torch.tensor([[[[-1, -1, -1, -1, -1, -1, -1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, -1, -1, 1, -1, -1, -1, -1],

[-1, -1, -1, 1, -1, 1, -1, -1, -1],

[-1, -1, 1, -1, -1, -1, 1, -1, -1],

[-1, 1, -1, -1, -1, -1, -1, 1, -1],

[-1, -1, -1, -1, -1, -1, -1, -1, -1]]]], dtype=torch.float)

print(x.shape)

print(x)

img = x.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('原图')

plt.show()

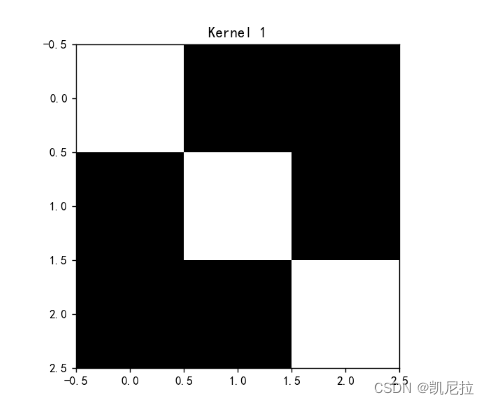

print("--------------- 卷积 ---------------")

conv1 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv1.weight.data = torch.Tensor([[[[1, -1, -1],

[-1, 1, -1],

[-1, -1, 1]]

]])

img = conv1.weight.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('Kernel 1')

plt.show()

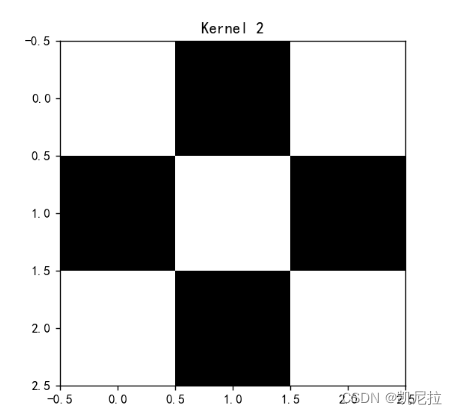

conv2 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv2.weight.data = torch.Tensor([[[[1, -1, 1],

[-1, 1, -1],

[1, -1, 1]]

]])

img = conv2.weight.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('Kernel 2')

plt.show()

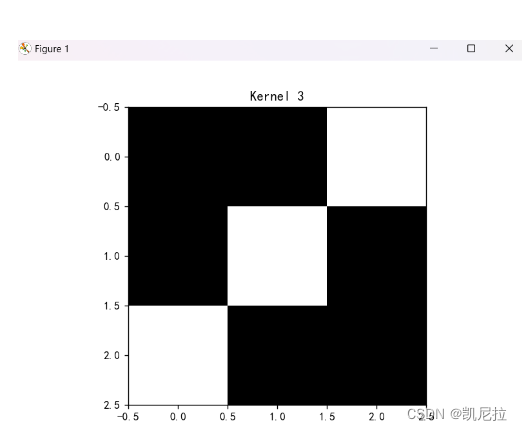

conv3 = nn.Conv2d(1, 1, (3, 3), 1) # in_channel , out_channel , kennel_size , stride

conv3.weight.data = torch.Tensor([[[[-1, -1, 1],

[-1, 1, -1],

[1, -1, -1]]

]])

img = conv3.weight.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('Kernel 3')

plt.show()

feature_map1 = conv1(x)

feature_map2 = conv2(x)

feature_map3 = conv3(x)

print(feature_map1 / 9)

print(feature_map2 / 9)

print(feature_map3 / 9)

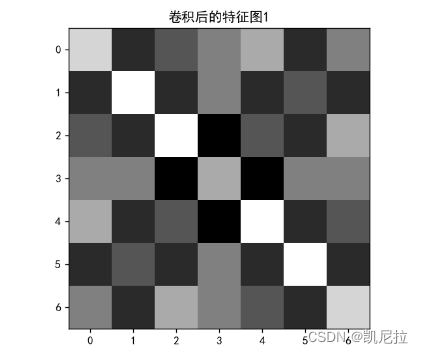

img = feature_map1.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('卷积后的特征图1')

plt.show()

print("--------------- 池化 ---------------")

max_pool = nn.MaxPool2d(2, padding=0, stride=2) # Pooling

zeroPad = nn.ZeroPad2d(padding=(0, 1, 0, 1)) # pad 0 , Left Right Up Down

feature_map_pad_0_1 = zeroPad(feature_map1)

feature_pool_1 = max_pool(feature_map_pad_0_1)

feature_map_pad_0_2 = zeroPad(feature_map2)

feature_pool_2 = max_pool(feature_map_pad_0_2)

feature_map_pad_0_3 = zeroPad(feature_map3)

feature_pool_3 = max_pool(feature_map_pad_0_3)

print(feature_pool_1.size())

print(feature_pool_1 / 9)

print(feature_pool_2 / 9)

print(feature_pool_3 / 9)

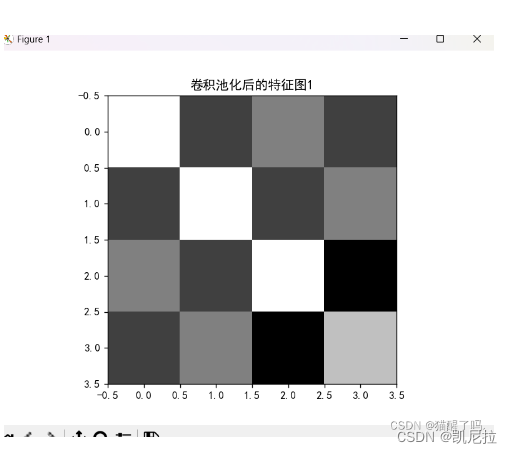

img = feature_pool_1.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('卷积池化后的特征图1')

plt.show()

print("--------------- 激活 ---------------")

activation_function = nn.ReLU()

feature_relu1 = activation_function(feature_map1)

feature_relu2 = activation_function(feature_map2)

feature_relu3 = activation_function(feature_map3)

print(feature_relu1 / 9)

print(feature_relu2 / 9)

print(feature_relu3 / 9)

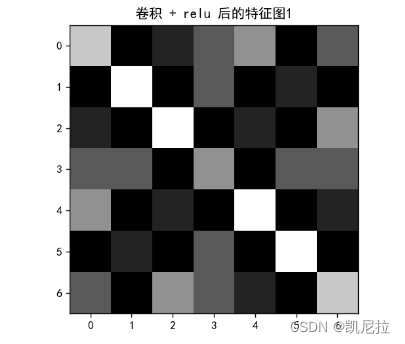

img = feature_relu1.data.squeeze().numpy() # 将输出转换为图片的格式

plt.imshow(img, cmap='gray')

plt.title('卷积 + relu 后的特征图1')

plt.show()

运行结果:

260

260

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?