We propose a fully convolutional one-stage object detector (FCOS) to solve object detection in a per-pixel prediction fashion, analogous to semantic segmentation. Almost all state-of-the-art object detectors, such as RetinaNet, SSD, YOLOv3 and Faster R-CNN, rely on pre-defined anchor boxes. In contrast, our proposed detector FCOS is anchor-box free as well as proposal free. By eliminating the pre-defined set of anchor boxes, FCOS completely avoids the complicated computation related to anchor boxes, such as calculating overlaps during training, and significantly reduces the training memory footprint. More importantly, we also avoid all hyper-parameters related to anchor boxes, to which the final detection performance is often very sensitive. With non-maximum suppression (NMS) as the only post-processing step, our detector FCOS outperforms previous anchor-based one-stage detectors with the advantage of being much simpler. For the first time, we demonstrate a much simpler and more flexible detection framework achieving improved detection accuracy. We hope that the proposed FCOS framework can serve as a simple and strong alternative for many other instance-level tasks.


Object detection is a fundamental yet challenging task in computer vision, which requires the algorithm to predict a bounding box with a category label for each instance of interest in an image. All current mainstream detectors, such as Faster R-CNN [20], SSD [15] and YOLOv2, v3 [19], rely on a set of pre-defined anchor boxes, and it has long been believed that the use of anchor boxes is the key to detectors' success. Despite their great success, it is important to note that anchor-based detectors suffer from some drawbacks:

- As shown in [12, 20], detection performance is sensitive to the sizes, aspect ratios and number of anchor boxes. For example, in RetinaNet [12], varying these hyper-parameters affects the performance by up to 4% in AP on the COCO benchmark [13]. As a result, these hyper-parameters need to be carefully tuned in anchor-based detectors.

- Even with careful design, because the scales and aspect ratios of anchor boxes are kept fixed, detectors have difficulty dealing with object candidates with large shape variations, particularly for small objects. The pre-defined anchor boxes also hamper the generalization ability of detectors, as they need to be redesigned on new detection tasks with different object sizes or aspect ratios.

- In order to achieve a high recall rate, an anchor-based detector is required to densely place anchor boxes on the input image (e.g., more than 180K anchor boxes in feature pyramid networks (FPN) [11] for an image with its shorter side being 800). Most of these anchor boxes are labelled as negative samples during training. The excessive number of negative samples aggravates the imbalance between positive and negative samples in training.

- An excessively large number of anchor boxes also significantly increases the amount of computation and the memory footprint when computing the intersection-over-union (IOU) scores between all anchor boxes and ground-truth boxes during training.


In the sequel, we take a closer look at the issue and show that with FPN this ambiguity can be largely eliminated. As a result, our method can already obtain detection accuracy comparable to that of traditional anchor-based detectors. Furthermore, we observe that our method may produce a number of low-quality predicted bounding boxes at locations that are far from the center of a target object. In order to suppress these low-quality detections, we introduce a novel "center-ness" branch (only one layer) to predict the deviation of a pixel from the center of its corresponding bounding box, as defined in Eq. (3). This score is then used to down-weight low-quality detected bounding boxes and merge the detection results in NMS. The simple yet effective center-ness branch allows the FCN-based detector to outperform anchor-based counterparts under exactly the same training and testing settings. This new detection framework enjoys the following advantages.

• Detection is now unified with many other FCN-solvable tasks such as semantic segmentation, making it easier to re-use ideas from those tasks.

• Detection becomes proposal free and anchor free, which significantly reduces the number of design parameters. The design parameters typically need heuristic tuning, and many tricks are involved in order to achieve good performance. Therefore, our new detection framework makes the detector, particularly its training, considerably simpler. Moreover, by eliminating the anchor boxes, our new detector completely avoids the complex IOU computation and matching between anchor boxes and ground-truth boxes during training, and reduces the total training memory footprint by a factor of 2 or so.

• Without bells and whistles, we achieve state-of-the-art results among one-stage detectors. We also show that the proposed FCOS can be used as a Region Proposal Network (RPN) in two-stage detectors and can achieve significantly better performance than its anchor-based RPN counterparts. Given the even better performance of the much simpler anchor-free detector, we encourage the community to rethink the necessity of anchor boxes in object detection, which are currently considered the de facto standard for detection.

• The proposed detector can be immediately extended to solve other vision tasks with minimal modification, including instance segmentation and key-point detection. We believe that this new method can be the new baseline for many instance-wise prediction problems.


Related works

Anchor-free Detectors. The most popular anchor-free detector might be YOLOv1 [17]. Instead of using anchor boxes, YOLOv1 predicts bounding boxes at points near the center of objects. Only the points near the center are used since they are considered to be able to produce higher-quality detections. However, since only points near the center are used to predict bounding boxes, YOLOv1 suffers from low recall, as mentioned in YOLOv2 [18]. As a result, YOLOv2 [18] makes use of anchor boxes as well. Compared to YOLOv1, FCOS takes advantage of all points in a ground-truth bounding box to predict the bounding boxes, and the low-quality detected bounding boxes are suppressed by the proposed "center-ness" branch. As a result, FCOS is able to provide recall comparable to that of anchor-based detectors, as shown in our experiments.

CornerNet [10] is a recently proposed one-stage anchor-free detector, which detects a pair of corners of a bounding box and groups them to form the final detected bounding box. CornerNet requires much more complicated post-processing to group the pairs of corners belonging to the same instance. An extra distance metric is learned for the purpose of grouping.

Another family of anchor-free detectors, such as [24], is based on DenseBox [9]. This family of detectors has been considered unsuitable for generic object detection due to the difficulty of handling overlapping bounding boxes and the relatively low recall. In this work, we show that both problems can be largely alleviated with multi-level FPN prediction. Moreover, we also show that, together with our proposed center-ness branch, the much simpler detector can achieve even better detection performance than its anchor-based counterparts.


Our Approach

In this section, we first reformulate object detection in a per-pixel prediction fashion. Next, we show how we make use of multi-level prediction to improve the recall and resolve the ambiguity resulting from overlapped bounding boxes in training. Finally, we present our proposed "center-ness" branch, which helps suppress the low-quality detected bounding boxes and improves the overall performance by a large margin.


3.1. Fully Convolutional One-Stage Object Detector

For each location (x, y) on the feature map F_i, we can map it back onto the input image as A, which is near the center of the receptive field of the location (x, y). Different from anchor-based detectors, which consider the location on the input image as the center of anchor boxes and regress the target bounding box for these anchor boxes, we directly regress the target bounding box for each location. In other words, our detector directly views locations as training samples, instead of anchor boxes as in anchor-based detectors, which is the same as in FCNs for semantic segmentation [16].
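The mapping from a feature-map location back to the input image can be sketched as follows. This is a minimal sketch that assumes the mapped point (written as A above) is (⌊s/2⌋ + xs, ⌊s/2⌋ + ys), where s is the total stride of the feature map; the symbol s and the function name `location_to_image` do not appear in this section and are introduced here only for illustration.

```python
from typing import Tuple


def location_to_image(x: int, y: int, stride: int) -> Tuple[int, int]:
    """Map feature-map location (x, y) to input-image coordinates.

    Assumption: the mapped point is (floor(s/2) + x*s, floor(s/2) + y*s),
    i.e. roughly the center of the stride-sized cell covered by (x, y).
    """
    half = stride // 2
    return (half + x * stride, half + y * stride)


if __name__ == "__main__":
    # Location (3, 2) on a stride-16 feature map.
    print(location_to_image(3, 2, 16))
```

Under this assumption, neighbouring feature-map locations land exactly one stride apart on the input image, so a larger stride means a coarser grid of candidate locations.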


Specifically, location (x, y) is considered a positive sample if it falls into any ground-truth bounding box B_i, and the class label c* of the location is the class label of B_i. Otherwise it is a negative sample and c* = 0 (background class). Besides the label for classification, we also have a 4D real vector t* = (l*, t*, r*, b*) as the regression target for each sample. Here l*, t*, r* and b* are the distances from the location to the four sides of the bounding box, as shown in Fig. 1 (left). If a location falls into multiple bounding boxes, it is considered an ambiguous sample. For now, we simply choose the bounding box with minimal area as its regression target. In the next section, we will show that with multi-level prediction, the number of ambiguous samples can be reduced significantly. Formally, if location (x, y) is associated with a bounding box B_i whose left-top and right-bottom corners are (x0, y0) and (x1, y1), the training regression targets for the location can be formulated as

l* = x − x0, t* = y − y0, r* = x1 − x, b* = y1 − y. (1)


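It is worth noting that FCOS can leverage as many foreground samples as possible to train the regressor. This differs from anchor-based detectors, which only consider the anchor boxes with a sufficiently high IOU with ground-truth boxes as positive samples. We argue that this may be one of the reasons that FCOS outperforms its anchor-based counterparts.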
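The label assignment described above can be sketched in a few lines. This is an illustrative sketch rather than the authors' implementation: the box layout `(x0, y0, x1, y1, class_id)`, the strict "inside" test and the function name `regression_target` are assumptions made here.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical box layout: (x0, y0, x1, y1, class_id) with class_id >= 1.
Box = Tuple[float, float, float, float, int]
LTRB = Tuple[float, float, float, float]


def regression_target(
    x: float, y: float, boxes: Sequence[Box]
) -> Tuple[int, Optional[LTRB]]:
    """Return (c*, (l*, t*, r*, b*)) for a location, or (0, None) for background."""
    best: Tuple[int, Optional[LTRB]] = (0, None)
    best_area = float("inf")
    for x0, y0, x1, y1, cls in boxes:
        # Distances from the location to the four sides of the box.
        l, t, r, b = x - x0, y - y0, x1 - x, y1 - y
        if min(l, t, r, b) <= 0:
            continue  # the location does not fall inside this box
        area = (x1 - x0) * (y1 - y0)
        if area < best_area:
            # Ambiguous sample: keep the box with minimal area.
            best_area = area
            best = (cls, (l, t, r, b))
    return best


if __name__ == "__main__":
    boxes = [(0, 0, 100, 100, 1), (20, 20, 60, 60, 2)]
    print(regression_target(30, 40, boxes))   # inside both boxes
    print(regression_target(200, 200, boxes)) # background
```

Every location inside a ground-truth box yields a positive sample here, which is the property the preceding paragraph contrasts with IOU-based anchor matching.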

Network Outputs. Corresponding to the training targets, the final layer of our network predicts an 80D vector p of classification labels and a 4D vector t = (l, t, r, b) of bounding box coordinates. Following [12], instead of training a multi-class classifier, we train C binary classifiers. Similar to [12], we add four convolutional layers after the feature maps of the backbone network for the classification and regression branches, respectively. Moreover, since the regression targets are always positive, we employ exp(x) to map any real number to (0, ∞) on the top of the regression branch. It is worth noting that FCOS has 9× fewer network output variables than the popular anchor-based detectors [12, 20] with 9 anchor boxes per location.


We define our training loss function as follows:

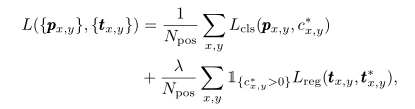

where L_cls is the focal loss as in [12] and L_reg is the IOU loss as in UnitBox [24]. N_pos denotes the number of positive samples, and λ, set to 1 in this paper, is the balance weight for L_reg. The summation is calculated over all locations on the feature maps F_i. 1{c*_i > 0} is the indicator function, being 1 if c*_i > 0 and 0 otherwise.
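The structure of this loss can be illustrated with a small pure-Python sketch. Several details are assumptions rather than statements from this text: the focal loss uses α = 0.25 and γ = 2, the IOU loss is taken as −ln(IOU) computed from (l, t, r, b) distances, and both terms are normalized by N_pos. The function names and the sample layout are hypothetical.

```python
import math


def focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Binary focal loss for one probability p and label y in {0, 1}.

    alpha and gamma are assumed defaults, not values given in this text.
    """
    p_t = p if y == 1 else 1.0 - p
    a_t = alpha if y == 1 else 1.0 - alpha
    return -a_t * (1.0 - p_t) ** gamma * math.log(max(p_t, 1e-12))


def iou_loss(pred, target):
    """-ln(IOU) between two boxes given as (l, t, r, b) around one location."""
    l, t, r, b = pred
    ls, ts, rs, bs = target
    inter = (min(l, ls) + min(r, rs)) * (min(t, ts) + min(b, bs))
    union = (l + r) * (t + b) + (ls + rs) * (ts + bs) - inter
    return -math.log(max(inter / union, 1e-12))


def fcos_loss(samples, lam=1.0):
    """samples: list of (probs, c_star, pred_ltrb, target_ltrb) per location.

    probs holds C per-class probabilities; c_star is 0 for background,
    otherwise a class index in 1..C. Regression is counted only when c_star > 0.
    """
    n_pos = max(1, sum(1 for _, c, _, _ in samples if c > 0))
    cls = sum(
        focal_loss(p, 1 if c == k else 0)
        for probs, c, _, _ in samples
        for k, p in enumerate(probs, start=1)
    )
    reg = sum(iou_loss(pred, tgt) for _, c, pred, tgt in samples if c > 0)
    return cls / n_pos + lam * reg / n_pos


if __name__ == "__main__":
    samples = [
        ([0.9, 0.1], 1, (10, 10, 10, 10), (12, 10, 8, 10)),  # positive location
        ([0.05, 0.02], 0, None, None),                        # background location
    ]
    print(fcos_loss(samples))
```

The indicator function shows up as the `if c > 0` filter: background locations contribute to the classification term only.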


Inference. The inference of FCOS is straightforward. Given an input image, we forward it through the network and obtain the classification scores p_{x,y} and the regression prediction t_{x,y} for each location on the feature maps F_i. Following [12], we choose the locations with p_{x,y} > 0.05 as positive samples and invert Eq. (1) to obtain the predicted bounding boxes.
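The decoding step can be sketched as below, assuming Eq. (1) defines the targets as distances from the location to the four box sides, so that inverting it gives x0 = x − l, y0 = y − t, x1 = x + r, y1 = y + b. The function name `decode` and the tuple layout of its output are hypothetical; NMS is not shown.

```python
def decode(locations, scores, ltrb, threshold=0.05):
    """Turn per-location predictions into boxes.

    locations: list of (x, y) image coordinates.
    scores:    list of per-class score lists, one per location.
    ltrb:      list of predicted (l, t, r, b) distances, one per location.
    Returns a list of (x0, y0, x1, y1, class_id, score) with class_id >= 1.
    """
    boxes = []
    for (x, y), class_scores, (l, t, r, b) in zip(locations, scores, ltrb):
        for class_id, score in enumerate(class_scores, start=1):
            if score > threshold:
                # Invert the distance definition to recover box corners.
                boxes.append((x - l, y - t, x + r, y + b, class_id, score))
    return boxes


if __name__ == "__main__":
    print(decode([(50, 60)], [[0.01, 0.9]], [(10, 20, 30, 40)]))
```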


3.2. Multi-level Prediction with FPN for FCOS

Here we show how two possible issues of the proposed FCOS can be resolved with multi-level prediction with FPN [11]. 1) The large stride (e.g., 16×) of the final feature maps in a CNN can result in a relatively low best possible recall (BPR). For anchor-based detectors, low recall rates due to the large stride can be compensated to some extent by lowering the required IOU scores for positive anchor boxes. For FCOS, at first glance one may think that the BPR can be much lower than that of anchor-based detectors, because it is impossible to recall an object that no location on the final feature maps encodes due to a large stride. Here, we empirically show that even with a large stride, FCN-based FCOS is still able to produce a good BPR, and it can even be better than the BPR of the anchor-based detector RetinaNet [12] in the official implementation Detectron (refer to Table 1). Therefore, the BPR is actually not a problem for FCOS. Moreover, with multi-level FPN prediction, the BPR can be improved further to match the best BPR the anchor-based RetinaNet can achieve. 2) Overlaps in ground-truth boxes can cause intractable ambiguity during training, i.e., which bounding box should a location in the overlap regress? This ambiguity results in degraded performance of FCN-based detectors. In this work, we show that the ambiguity can be largely resolved with multi-level prediction, and the FCN-based detector can obtain performance on par with, and sometimes even better than, that of anchor-based ones.


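Following FPN [11], we detect objects of different sizes on different levels of feature maps. Specifically, we define five levels of feature maps, {P3, P4, P5, P6, P7}. P3, P4 and P5 are produced from the backbone CNN's feature maps C3, C4 and C5, followed by a 1×1 convolutional layer with lateral connections, as shown in Fig. 2. P6 and P7 are produced by applying one convolutional layer with stride 2 on P5 and P6, respectively. As a result, the feature levels P3, P4, P5, P6 and P7 have strides 8, 16, 32, 64 and 128, respectively.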

Unlike anchor-based detectors, which assign anchor boxes with different sizes to different feature levels, we directly limit the range of bounding box regression. More specifically, we first compute the regression targets l*, t*, r* and b* for each location on all feature levels. Next, if a location satisfies max(l*, t*, r*, b*) > m_i or max(l*, t*, r*, b*) < m_{i−1}, it is set as a negative sample and is thus no longer required to regress a bounding box. Here m_i is the maximum distance that feature level i needs to regress. In this work, m_2, m_3, m_4, m_5, m_6 and m_7 are set as 0, 64, 128, 256, 512 and ∞, respectively. Since objects with different sizes are assigned to different feature levels, and most overlapping happens between objects with considerably different sizes, the multi-level prediction can largely alleviate the aforementioned ambiguity and improve the FCN-based detector to the same level as anchor-based ones, as shown in our experiments.
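The level-assignment rule above translates directly into code. The sketch below uses the thresholds m_2 to m_7 given in the text; the dictionary `M` and the function names are illustrative choices.

```python
import math

# m_2 .. m_7 as given in the text, indexed by feature level.
M = {2: 0, 3: 64, 4: 128, 5: 256, 6: 512, 7: math.inf}


def is_positive_on_level(ltrb, level):
    """A location stays positive on level i unless max(l,t,r,b) > m_i or < m_{i-1}."""
    d = max(ltrb)
    return not (d > M[level] or d < M[level - 1])


def levels_for(ltrb):
    """Feature levels (3..7 for P3..P7) on which these targets are regressed."""
    return [i for i in range(3, 8) if is_positive_on_level(ltrb, i)]


if __name__ == "__main__":
    print(levels_for((10, 20, 30, 40)))   # small object
    print(levels_for((600, 50, 20, 50)))  # very large object
```

Because the conditions are strict inequalities, a target whose maximum distance equals a threshold exactly (for example 64) is kept on both adjacent levels under this literal reading.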


Finally, following [11, 12], we share the heads between different feature levels, which not only makes the detector parameter-efficient but also improves the detection performance. However, we observe that different feature levels are required to regress different size ranges (e.g., the size range is [0, 64] for P3 and [64, 128] for P4), and therefore it is not reasonable to make use of identical heads for different feature levels. As a result, instead of using the standard exp(x), we make use of exp(s_i x) with a trainable scalar s_i to automatically adjust the base of the exponential function for feature level P_i, which empirically improves the detection performance.
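A minimal sketch of the per-level scaled exponential is shown below. In a real detector s_i would be a learnable parameter updated by the optimizer; here it is a plain attribute, and the initial value of 1.0 as well as the class name `ScaledExp` are assumptions made for illustration.

```python
import math


class ScaledExp:
    """Computes exp(s * x) with one scalar s per feature level."""

    def __init__(self, init_scale=1.0):
        # Assumed initial value; training would adjust it per level.
        self.scale = init_scale

    def __call__(self, x):
        return math.exp(self.scale * x)


# One scale per level P3..P7; the convolutional head weights stay shared.
scaled_exp = {level: ScaledExp() for level in range(3, 8)}

if __name__ == "__main__":
    scaled_exp[5].scale = 2.0  # pretend training increased s_5
    print(scaled_exp[3](1.0), scaled_exp[5](1.0))
```

Since exp(s x) = (e^s)^x, changing s_i is equivalent to changing the base of the exponential, which lets the same shared head output cover different size ranges on different levels.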


3.3. Center-ness for FCOS

After using multi-level prediction in FCOS, there is still a performance gap between FCOS and anchor-based detectors. We observed that it is due to a lot of low-quality predicted bounding boxes produced by locations far away from the center of an object.

We propose a simple yet effective strategy to suppress these low-quality detected bounding boxes without introducing any hyper-parameters. Specifically, we add a single-layer branch, in parallel with the classification branch, to predict the "center-ness" of a location (i.e., the distance from the location to the center of the object that the location is responsible for), as shown in Fig. 2. Given the regression targets l*, t*, r* and b* for a location, the center-ness target is defined as

centerness* = sqrt( (min(l*, r*) / max(l*, r*)) × (min(t*, b*) / max(t*, b*)) ). (3)


We employ sqrt here to slow down the decay of the center-ness. The center-ness ranges from 0 to 1 and is thus trained with binary cross entropy (BCE) loss. The loss is added to the loss function Eq. (2). When testing, the final score (used for ranking the detected bounding boxes) is computed by multiplying the predicted center-ness with the corresponding classification score. Thus the center-ness can down-weight the scores of bounding boxes far from the center of an object. As a result, with high probability, these low-quality bounding boxes are filtered out by the final non-maximum suppression (NMS) process, improving the detection performance remarkably.
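The center-ness target and the test-time score can be sketched as follows. The sketch assumes the center-ness target is sqrt((min(l*, r*) / max(l*, r*)) × (min(t*, b*) / max(t*, b*))), which matches the description above (a square root, values in [0, 1], maximal at the box center); the function names are hypothetical.

```python
import math


def centerness(l, t, r, b):
    """Center-ness target from regression targets; 1 at the box center, near 0 at the border.

    Assumes all four distances are positive (the location is inside the box).
    """
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))


def final_score(cls_score, pred_centerness):
    """Ranking score used before NMS: classification score times predicted center-ness."""
    return cls_score * pred_centerness


if __name__ == "__main__":
    print(centerness(10, 10, 10, 10))  # location at the box center
    print(centerness(1, 10, 4, 10))    # location close to the left side
    print(final_score(0.8, 0.5))
```

A location that is four times closer to the left side than to the right side still gets a target of 0.5 rather than 0.25, which shows how the square root slows the decay.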


From the perspective of anchor-based detectors, which use two IOU thresholds T_low and T_high to label the anchor boxes as negative, ignored and positive samples, the center-ness can be viewed as a soft threshold. It is learned during the training of networks and does not need to be tuned.

Moreover, with this strategy, our detector can still view any location falling into a ground-truth box as a positive sample, except for the ones set as negative samples in the aforementioned multi-level prediction, so as to use as many training samples as possible for the regressor.


Our experiments are conducted on the large-scale detection benchmark COCO [13]. Following common practice [12, 11, 20], we use the COCO trainval35k split (115K images) for training and the minival split (5K images) as validation for our ablation study. We report our main results on the test-dev split (20K images) by uploading our detection results to the evaluation server.

Training Details. Unless specified, ResNet-50 [7] is used as our backbone network and the same hyper-parameters as RetinaNet [12] are used. Specifically, our network is trained with stochastic gradient descent (SGD) for 90K iterations with an initial learning rate of 0.01 and a mini-batch of 16 images. The learning rate is reduced by a factor of 10 at iterations 60K and 80K, respectively. Weight decay and momentum are set as 0.0001 and 0.9, respectively. We initialize our backbone network with the weights pre-trained on ImageNet [3]. For the newly added layers, we initialize them as in [12]. Unless specified, the input images are resized to have their shorter side being 800 and their longer side less than or equal to 1333.
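The step schedule and the resizing rule described above can be written down as two small helpers. This is a sketch under stated assumptions: the reduction is applied from the milestone iteration onward, output sizes are rounded to the nearest integer, and the function names are hypothetical.

```python
def learning_rate(iteration, base_lr=0.01, milestones=(60_000, 80_000)):
    """Step schedule: divide the learning rate by 10 at each milestone."""
    lr = base_lr
    for milestone in milestones:
        if iteration >= milestone:
            lr /= 10
    return lr


def resized_shape(height, width, short_side=800, long_side_max=1333):
    """Scale so the shorter side is 800, unless the longer side would exceed 1333."""
    scale = short_side / min(height, width)
    if max(height, width) * scale > long_side_max:
        scale = long_side_max / max(height, width)
    return round(height * scale), round(width * scale)


if __name__ == "__main__":
    print(learning_rate(0), learning_rate(70_000), learning_rate(85_000))
    print(resized_shape(480, 640))   # shorter side reaches 800
    print(resized_shape(400, 1000))  # longer side capped at 1333
```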

Inference Details. We first forward the input image through the network and obtain the predicted bounding boxes with a predicted class. The subsequent post-processing is exactly the same as in RetinaNet [12], and we directly make use of the same post-processing hyper-parameters (such as the NMS threshold) as RetinaNet. We argue that the performance of our detector can be improved further if the hyper-parameters are optimized for it. We use the same sizes of input images as in training.

