I. Environment setup

1. Create a virtual environment

conda create --name openmmlab python=3.8 -y

Activate the virtual environment:

conda activate openmmlab

2. Install PyTorch and torchvision

Install the versions that match your own CUDA and driver setup, for example:

pip install torch==1.7.1+cu101 torchvision==0.8.2+cu101 -f https://download.pytorch.org/whl/torch_stable.html

Alternatively, download the wheels manually from https://download.pytorch.org/whl/torch_stable.html

3. Install mmengine and mmcv

pip install -U openmim

mim install mmengine

mim install 'mmcv>=2.0.0rc1'

4. Download MMSelfSup and install it from source

git clone https://github.com/open-mmlab/mmselfsup.git

cd mmselfsup

git checkout 1.x

pip install -v -e .
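Before moving on, it can help to confirm that everything actually landed in the active environment. The following is a minimal sketch that only reads package metadata, so it does not need a GPU; the helper name `installed_versions` is my own and not part of any OpenMMLab tool.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


# Distribution names as used in the install commands above.
report = installed_versions(
    ["torch", "torchvision", "mmengine", "mmcv", "mmselfsup"])
for name, version in report.items():
    print(f"{name:12s} {version or 'NOT INSTALLED'}")
```

Any line that prints NOT INSTALLED points at the step that needs to be repeated.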

II. Training a self-supervised model

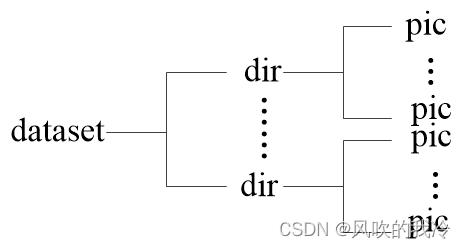

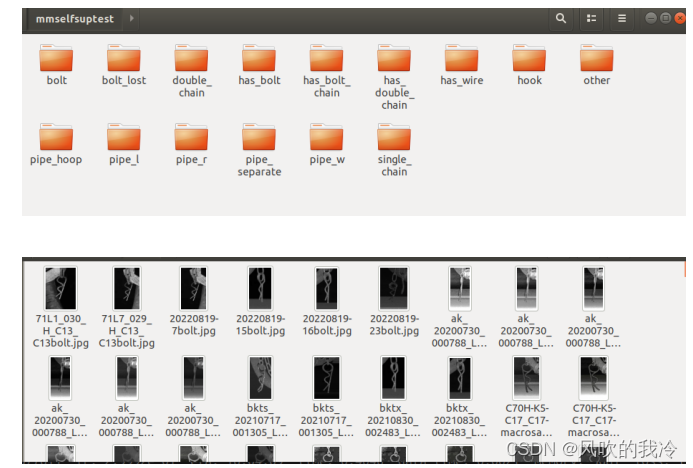

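1. Prepare the dataset

The dataset is laid out as datasetdir -> {namedirs} -> pics: a root directory containing one subdirectory per name, with the image files inside each subdirectory.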
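To check that a folder follows this layout, a small script can count the images in each subdirectory. This is only a sketch: the function name `count_images` and the list of image extensions are my own assumptions, so adjust them to your data.

```python
from pathlib import Path

# Assumed set of image extensions; extend it if your data uses others.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images(dataset_dir):
    """Map each immediate subdirectory name to the number of image files in it."""
    counts = {}
    for sub in sorted(Path(dataset_dir).iterdir()):
        if sub.is_dir():
            counts[sub.name] = sum(
                1 for f in sub.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES)
    return counts
```

Calling `count_images("/path/to/datasetdir")` returns a dictionary such as one entry per name directory; a subdirectory with a count of 0 usually means the images sit one level too deep or use an extension missing from the set.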

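2. Write the config file for self-supervised pretraining

Create a new config file named mocov3_resnet50_pretrain_8xb512-amp-coslr-1e-800e_in1k.py

You can put it wherever you like; mine is under configs/selfsup/mocov3.

Write the following into it: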

_base_ = 'mocov3_resnet50_8xb512-amp-coslr-800e_in1k.py'

model = dict(base_momentum=0.996)  # 0.99 for 100e and 300e, 0.996 for 800e

# optimizer
optimizer = dict(type='LARS', lr=9.6, weight_decay=1.5e-6)
optim_wrapper = dict(optimizer=optimizer)

# learning rate scheduler
param_scheduler = [
    dict(
        type='LinearLR',
        start_factor=1e-4,
        by_epoch=True,
        begin=0,
        end=10,
        convert_to_iter_based=True),
    dict(
        type='CosineAnnealingLR',
        T_max=790,
        by_epoch=True,
        begin=10,
        end=800,
        convert_to_iter_based=True)
]

# runtime settings
train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=2000)
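To get a feel for what this scheduler does, here is an idealized epoch-level sketch of the learning rate: a linear warmup over the first 10 epochs followed by cosine decay until epoch 800. It is not MMEngine's implementation. The real schedulers update per iteration (convert_to_iter_based=True) and their exact values differ slightly. I also assume the cosine stage decays to the default minimum of 0 and that the rate simply stays at its final value after epoch 800.

```python
import math

BASE_LR = 9.6          # lr from the optimizer dict above
START_FACTOR = 1e-4    # start_factor of the LinearLR stage
WARMUP_END = 10        # end of the LinearLR stage
SCHED_END = 800        # end of the CosineAnnealingLR stage


def lr_at_epoch(epoch):
    """Idealized learning rate at the start of a given epoch."""
    if epoch < WARMUP_END:
        # Linear warmup from START_FACTOR * BASE_LR up to BASE_LR.
        factor = START_FACTOR + (1.0 - START_FACTOR) * epoch / WARMUP_END
        return BASE_LR * factor
    # Cosine decay from BASE_LR towards 0 over T_max = 790 epochs.
    # Assumption: past SCHED_END the rate is held at its final value.
    progress = min(epoch, SCHED_END) - WARMUP_END
    t_max = SCHED_END - WARMUP_END
    return 0.5 * BASE_LR * (1.0 + math.cos(math.pi * progress / t_max))


for epoch in (0, 5, 10, 405, 800, 2000):
    print(epoch, lr_at_epoch(epoch))
```

Note that train_cfg sets max_epochs=2000 while the schedule ends at 800. If the assumption above holds, the epochs after 800 would run with a learning rate close to zero, so it may be worth keeping max_epochs and the scheduler's end values in step.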

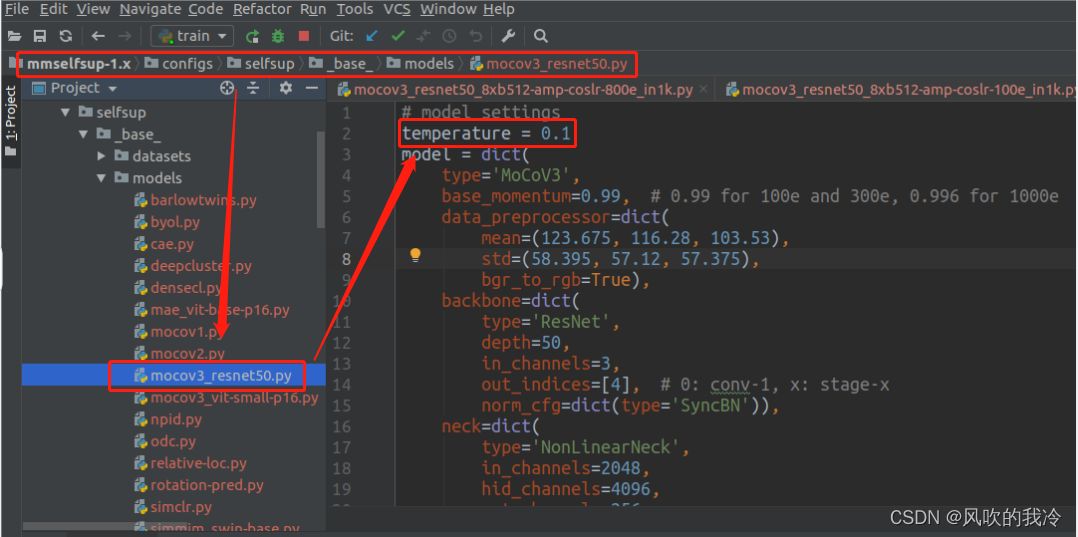

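Next, modify mocov3_resnet50_8xb512-amp-coslr-100e_in1k.py as shown here. Note that the config created above inherits from the 800e file through _base_, so make sure _base_ points at whichever file you actually modify.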

_base_ = [
    '../_base_/models/mocov3_resnet50.py',
    # '../_base_/datasets/coco_MOCOV3.py',
    '../_base_/schedules/lars_coslr-200e_in1k.py',
    '../_base_/default_runtime.py',
]

# custom dataset
dataset_type = 'mmcls.CustomDataset'
data_root = '/macrosan/common/group/mjt/to_other/to_zym/mmselfsuptest'
# train_dataloader = dict(batch_size=16, num_workers=1)
file_client_args = dict(backend='disk')

view_pipeline = [
    dict(type='RandomResizedCrop', size=224, backend='pillow'),
    dict(type='RandomFlip', prob=0.5),
    dict(
        type='RandomApply',
        transforms=[
            dict(
                type='ColorJitter',
                brightness=0.8,
                contrast=0.8,
                saturation=0.8,
                hue=0.2)
        ],
        prob=0.8),
    dict(
        type='RandomGrayscale',
        prob=0.2,
        keep_channels=True,
        channel_weights=(0.114, 0.587, 0.2989)),
    dict(type='RandomGaussianBlur', sigma_min=0.1, sigma_max=2.0, prob=0.5),
]

train_pipeline = [
    dict(type='LoadImageFromFile', file_client_args=file_client_args),
    dict(type='MultiView', num_views=2, transforms=[view_pipeline]),
    dict(type='PackSelfSupInputs', meta_keys=['img_path'])
]

train_dataloader = dict(
    batch_size=32,
    num_workers=4,
    persistent_workers=True,
    sampler=dict(type='DefaultSampler', shuffle=True),
    collate_fn=dict(type='default_collate'),
    dataset=dict(
        type=dataset_type,
        data_root=data_root,
        # ann_file='meta/train.txt',
        data_prefix=dict(img_path='./'),
        pipeline=train_pipeline))
# <<<<<<<<<<<<<<<<<<<<<< End of Changed <<<<<<<<<<<<<<<<<<<<<<<<<<<

# optimizer
optimizer = dict(type='LARS', lr=9.6, weight_decay=1e-6, momentum=0.9)
optim_wrapper = dict(
    type='AmpOptimWrapper',
    loss_scale='dynamic',
    optimizer=optimizer,
    paramwise_cfg=dict(
        custom_keys={
            'bn': dict(decay_mult=0, lars_exclude=True),
            'bias': dict(decay_mult=0, lars_exclude=True),
            'downsample.1': dict(decay_mult=0, lars_exclude=True),
        }),
)

# learning rate scheduler
param_scheduler = [
    dict(
        type='LinearLR',
        start_factor=1e-4,
        by_epoch=True,
        begin=0,
        end=10,
        convert_to_iter_based=True),
    dict(
        type='CosineAnnealingLR',
        T_max=90,
        by_epoch=True,
        begin=10,
        end=100,
        convert_to_iter_based=True)
]

# runtime settings

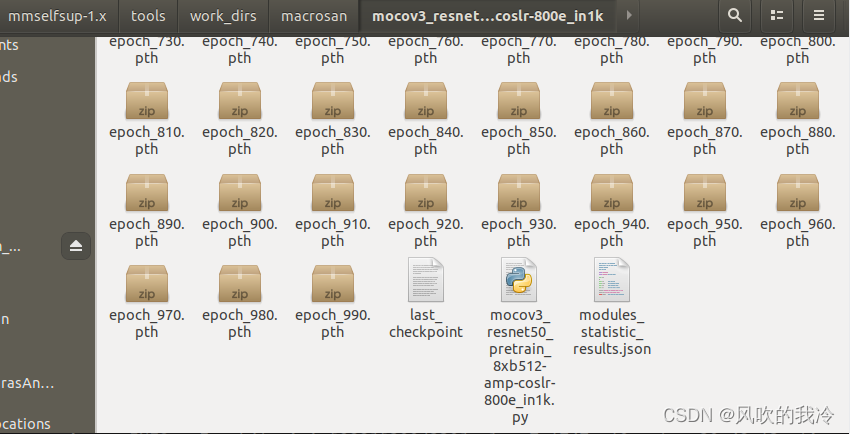

train_cfg = dict(type='EpochBasedTrainLoop', max_epochs=2000)

# keep at most the latest 50 checkpoints
default_hooks = dict(checkpoint=dict(max_keep_ckpts=50))

3. Training

The training script is tools/train.py.

Pass it the config file created above, mocov3_resnet50_pretrain_8xb512-amp-coslr-1e-800e_in1k.py, as its argument.
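As a concrete sketch, the launch can be scripted as below. The config path assumes the file was placed under configs/selfsup/mocov3 as I did. The helper names `build_train_command` and `run_training` are my own, and the work directory in the usage note is only an example.

```python
import subprocess
import sys

# Assumed location of the config, matching where I placed it above.
CONFIG = ("configs/selfsup/mocov3/"
          "mocov3_resnet50_pretrain_8xb512-amp-coslr-1e-800e_in1k.py")


def build_train_command(config=CONFIG, work_dir=None):
    """Build the argument list for launching tools/train.py."""
    cmd = [sys.executable, "tools/train.py", config]
    if work_dir is not None:
        # --work-dir sets where logs and checkpoints are written.
        cmd += ["--work-dir", work_dir]
    return cmd


def run_training(config=CONFIG, work_dir=None):
    """Launch training; call this from the root of the mmselfsup checkout."""
    subprocess.run(build_train_command(config, work_dir), check=True)
```

From the root of the mmselfsup checkout, `run_training(work_dir="work_dirs/mocov3_custom")` starts a single-process run and raises an error if the script exits with a non-zero status.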

Note: if training does not converge (the loss does not decrease), first adjust the temperature parameter in configs/selfsup/_base_/models/mocov3_resnet50.py, for example:

temperature = 0.1
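Why the temperature matters: in a contrastive loss the similarity scores are divided by the temperature before the softmax, so a smaller value sharpens the distribution and puts more weight on the positive pair. The sketch below illustrates this with plain Python. The similarity values are made up for illustration, and this is not the MoCo v3 head itself.

```python
import math


def softmax_with_temperature(similarities, temperature):
    """Softmax over similarity scores divided by a temperature."""
    scaled = [s / temperature for s in similarities]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical cosine similarities; the first entry plays the positive pair.
sims = [0.8, 0.5, 0.3]
for t in (1.0, 0.1):
    probs = softmax_with_temperature(sims, t)
    print(f"temperature={t}: {[round(p, 3) for p in probs]}")
```

With the lower temperature, almost all of the probability mass moves to the highest similarity, which gives the loss a stronger signal to separate positives from negatives.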

If it still does not converge, adjust the learning rate as appropriate.
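One possible starting point for that adjustment is the linear scaling rule, a common heuristic that scales the learning rate in proportion to the total batch size. This is my own suggestion, not something the original config prescribes. The sketch assumes lr=9.6 was tuned for the 8 GPUs x 512 images per GPU that the "8xb512" part of the config name denotes.

```python
REFERENCE_LR = 9.6            # lr in the config above
REFERENCE_BATCH = 8 * 512     # "8xb512": 8 GPUs x 512 images per GPU


def scaled_lr(total_batch_size):
    """Scale the reference learning rate linearly with the total batch size."""
    return REFERENCE_LR * total_batch_size / REFERENCE_BATCH


# Total batch size = batch_size per GPU x number of GPUs.
print(scaled_lr(32))
```

For a single GPU with batch_size=32, as in the dataloader above, this gives a rate far below 9.6, which may explain why the unmodified value fails to converge on small setups.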

4. Results
