PoinTr: Diverse Point Cloud Completion with Geometry-Aware Transformers

Basic information

Xumin Yu*, Yongming Rao*, Ziyi Wang, Zuyan Liu, Jiwen Lu†, Jie Zhou

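1. Abstract

Point clouds captured in real-world applications are often incomplete because of limited sensor resolution, a single viewpoint, and occlusion. Recovering the complete point cloud from a partial one is therefore an indispensable task in many practical applications. In this paper, we present a new method that reformulates point cloud completion as a set-to-set translation problem, and we design a new model, called PoinTr, that adopts a transformer encoder-decoder architecture for point cloud completion. By representing the point cloud as a set of unordered groups of points with position embeddings, we convert the point cloud into a sequence of point proxies and use transformers to generate the point cloud. To help transformers better exploit the inductive bias of the 3D geometric structure of point clouds, we further devise a geometry-aware block that models local geometric relations explicitly. Migrating to transformers enables our model to better learn structural knowledge and preserve detailed information for point cloud completion. In addition, we propose two more challenging benchmarks with more diverse incomplete point clouds that better reflect real-world scenarios, to promote future research. Experimental results show that our method outperforms existing methods by a large margin on both the new benchmarks and the existing ones.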

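2. Problem

Reconstructing a complete point cloud is challenging because the structural information needed for completion runs counter to the unordered and unstructured nature of point cloud data. Learning structural features and long-range correlations among local parts of the point cloud is therefore the key to better completion. In this paper, we propose to adopt Transformers [39], one of the most successful architectures in natural language processing (NLP), to learn the structural information of pairwise interactions and the global correlations for point cloud completion. Our model, named PoinTr, is characterized by five key components:

1) Encoder-decoder architecture: we adopt an encoder-decoder architecture to convert point cloud completion into a set-to-set translation problem. The self-attention mechanism of transformers models all pairwise interactions between elements in the encoder, while the decoder reasons about the missing elements based on learnable pairwise interactions between the features of the input point cloud and the queries.
2) Point proxies: we represent the set of points in a local region as a feature vector called a point proxy. The input point cloud is converted into a sequence of point proxies, which serve as the input of the transformer model.
3) Geometry-aware transformer block: to help transformers better exploit the inductive bias of the 3D geometric structure of point clouds, we design a geometry-aware block that models geometric relations explicitly.
4) Query generator: we use dynamic queries instead of fixed queries in the decoder. They are produced by a query generation module that summarizes the features produced by the encoder, and they represent an initial sketch of the missing points.
5) Multi-scale point cloud generation: we devise a multi-scale point cloud generation module to recover the missing point cloud in a coarse-to-fine manner.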

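3. Approach

Figure 2: The pipeline of PoinTr. We first downsample the input partial point cloud to obtain the center points. We then use a lightweight DGCNN [44] to extract the local features around the center points. After adding the position embedding to the local features, we use a transformer encoder-decoder architecture to predict the point proxies of the missing parts. A simple MLP and FoldingNet are used to complete the point cloud from the predicted point proxies in a coarse-to-fine manner.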

4. Method

4.1 Set-to-Set Translation with Transformers

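The primary goal of our method is to leverage the impressive sequence-to-sequence generation ability of the transformer architecture for point cloud completion. We propose to first convert the point cloud into a set of feature vectors, the point proxies, each of which represents a local region of the point cloud (described in Section 4.2). By analogy with the language translation pipeline, we model point cloud completion as a set-to-set translation task, in which the transformer takes the point proxies of the partial point cloud as input and produces the point proxies of the missing parts. Specifically, given the point proxies F = {F_1, F_2, …, F_N} that represent the partial point cloud, the completion process maps this input set to a set of proxies for the missing parts.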
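In symbols, the set-to-set translation can be sketched as below. The names M_E and M_D (encoder and decoder), V (encoder output features), Q (the M queries) and H (the M predicted proxies of the missing parts) are notation assumed here for illustration; the text above only defines F.

```latex
\mathcal{V} = \mathcal{M}_E(\mathcal{F}), \qquad
\mathcal{H} = \mathcal{M}_D(\mathcal{Q}, \mathcal{V}),
\qquad
\mathcal{F} = \{F_1, \dots, F_N\},\;
\mathcal{Q} = \{Q_1, \dots, Q_M\},\;
\mathcal{H} = \{H_1, \dots, H_M\}
```

The encoder sees only the N proxies of the partial input, while the decoder produces one output proxy per query, so the sizes of the input and output sets need not match.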

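The encoder-decoder architecture consists of L_E and L_D multi-head self-attention layers in the encoder and decoder, respectively. The self-attention layer in the encoder first updates the proxy features with both long-range and short-range information. A feed-forward network (FFN) with an MLP architecture then further updates the proxy features. The decoder uses self-attention and cross-attention mechanisms to learn structural knowledge. The self-attention layer enhances the local features with global information, while the cross-attention layer explores the relationship between the queries and the encoder outputs. To predict the point proxies of the missing parts, we propose to use dynamic query embeddings, which make our decoder more flexible and adaptable to different types of objects and their missing information. More details about the transformer architecture can be found in the supplementary material and [8, 39].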

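4.2 Point proxies

Figure 3: Comparison of the vanilla transformer block and the proposed geometry-aware transformer block.

Point proxies represent local regions of the point cloud. Inspired by the set abstraction operation in [28], we first apply farthest point sampling (FPS) to locate a fixed number N of point centers {q_1, q_2, …, q_N} in the partial point cloud. We then use a lightweight DGCNN [44] with hierarchical downsampling to extract the features of the point centers from the input point cloud. The point proxy F_i is a feature vector that captures the local structure around q_i.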
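The FPS step can be illustrated with a minimal pure-Python sketch. The function name, the `start_index` parameter and the toy points are assumptions made for this example; a real pipeline would use a batched GPU implementation.

```python
import math

def farthest_point_sampling(points, n_centers, start_index=0):
    """Return indices of n_centers points, each new one being the point
    farthest from all centers chosen so far."""
    if not 0 < n_centers <= len(points):
        raise ValueError("n_centers must be between 1 and len(points)")
    # min_dist[i] = distance from point i to its nearest chosen center.
    min_dist = [math.inf] * len(points)
    chosen = [start_index]
    while len(chosen) < n_centers:
        last = points[chosen[-1]]
        for i, p in enumerate(points):
            min_dist[i] = min(min_dist[i], math.dist(p, last))
        # The next center is the point farthest from the chosen set.
        chosen.append(max(range(len(points)), key=min_dist.__getitem__))
    return chosen

points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
centers = farthest_point_sampling(points, 3)
print(centers)  # [0, 3, 2]
```

Starting from point 0, the sampler skips the near-duplicate point 1 and spreads the centers across the shape, which is why FPS is a common choice for picking local-region centers.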

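4.3 Geometry-aware transformer block

One of the key challenges in applying transformers to vision tasks is that the self-attention mechanism lacks some of the inductive biases inherent in conventional vision models, such as CNNs and point cloud networks, which explicitly model the structure of vision data. To help transformers better exploit the inductive bias of the 3D geometric structure of point clouds, we design a geometry-aware block that models geometric relations. It can be used as a plug-and-play module and combined with the attention block in any transformer architecture. The details of the proposed block are shown in Figure 3. Unlike the self-attention module, which uses feature similarity to capture semantic relations, we propose to use a kNN model to capture the geometric relations in the point cloud. Given the query coordinates p_Q, we query the features of the nearest keys according to the key coordinates p_k. Following the practice of DGCNN [44], we then learn the local geometric structure through feature aggregation with a linear layer followed by a max-pooling operation. The geometric feature and the semantic feature are then concatenated and mapped back to the original dimension to form the output.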
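The kNN query and max-pooling aggregation can be sketched in pure Python as follows. This is a simplified illustration under stated assumptions: the edge feature `[f_j - f_i, f_i]` follows the usual DGCNN convention, the learned linear layer is omitted, and all function names and toy data are hypothetical.

```python
import math

def knn_indices(query_xyz, key_xyz, k):
    """For each query coordinate, return the indices of its k nearest keys."""
    result = []
    for q in query_xyz:
        order = sorted(range(len(key_xyz)), key=lambda j: math.dist(q, key_xyz[j]))
        result.append(order[:k])
    return result

def geometric_features(query_xyz, query_feat, key_xyz, key_feat, k):
    """For each query i and neighbour j, build the edge feature [f_j - f_i, f_i],
    then max-pool over the k neighbours channel by channel.
    The learned linear layer of the real block is left out of this sketch."""
    out = []
    for i, neighbours in enumerate(knn_indices(query_xyz, key_xyz, k)):
        f_i = query_feat[i]
        edges = [[a - b for a, b in zip(key_feat[j], f_i)] + list(f_i)
                 for j in neighbours]
        out.append([max(channel) for channel in zip(*edges)])
    return out

xyz = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]
feat = [[1.0, 2.0], [3.0, 0.0], [9.0, 9.0]]
geo = geometric_features(xyz, feat, xyz, feat, k=2)
print(geo[0])  # [2.0, 0.0, 1.0, 2.0]
```

Each output vector has twice the input channel count, which appears consistent with the `knn_map` layers in the printed model taking 768 = 2 × 384 input features. Neighbours are chosen by coordinates rather than by feature similarity, which is the point of the geometric branch.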

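4.4 Query generator

The queries Q serve as the initial state of the predicted proxies. To make sure the queries correctly reflect the sketch of the completed point cloud, we propose a query generator module that produces dynamic query embeddings from the encoder outputs. Specifically, we first summarize the encoder output V with a linear projection to a higher dimension followed by a max-pooling operation. Similar to [51], we use a linear projection layer to directly generate an M × 3-dimensional feature, which can be reshaped into M coordinates {c_1, c_2, …, c_M}. Finally, we concatenate the global feature of the encoder with the coordinates and use an MLP to produce the query embeddings.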
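The data flow of the query generator (pool, reshape, concatenate) can be sketched with plain lists. The learned projections and the final MLP are replaced by fixed stand-in numbers, and every name below is an assumption made for illustration.

```python
def max_pool(features):
    """Channel-wise max over a list of equally sized feature vectors."""
    return [max(channel) for channel in zip(*features)]

def reshape_to_coords(flat):
    """Reshape a flat vector of length 3*M into M (x, y, z) triples."""
    if len(flat) % 3:
        raise ValueError("length must be a multiple of 3")
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]

def build_query_inputs(global_feature, coords):
    """Concatenate the shared global feature with each coarse coordinate."""
    return [list(global_feature) + list(c) for c in coords]

# Toy encoder output: 3 proxies with 4 channels each.
encoder_out = [[0.1, 0.9, 0.3, 0.0], [0.5, 0.2, 0.3, 0.7], [0.4, 0.1, 0.8, 0.2]]
global_feature = max_pool(encoder_out)

# Stand-in for the output of the M*3 linear projection, with M = 2.
flat_prediction = [0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
coords = reshape_to_coords(flat_prediction)

# Each row would be fed to the query MLP in the real model.
query_inputs = build_query_inputs(global_feature, coords)
print(len(query_inputs), len(query_inputs[0]))  # 2 7
```

The same arithmetic appears to match the printed model: `coarse_pred` outputs 672 = 224 × 3 values, and `mlp_query` takes 1027 = 1024 + 3 input channels, that is, a 1024-dimensional global feature concatenated with one 3D coordinate.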

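4.5 Multi-scale point cloud generation

The goal of our encoder-decoder network is to predict the missing parts of the incomplete point cloud. However, the transformer decoder only yields predictions for the missing proxies. We therefore propose a multi-scale point cloud generation framework to recover the missing point cloud at full resolution. To reduce redundant computation, we reuse the M coordinates produced by the query generator as the local centers of the missing point cloud. We then use a FoldingNet [50] f to recover detailed local shapes centered at the predicted proxies.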
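The coarse-to-fine step can be sketched as "local center plus folded offsets". In this sketch `toy_fold` is a hypothetical stand-in for the learned FoldingNet MLP, and the grid size and scale are arbitrary choices for illustration only.

```python
def make_grid(steps):
    """Regular 2D seed grid in [-1, 1]^2 with steps x steps points."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    ticks = [-1.0 + 2.0 * i / (steps - 1) for i in range(steps)]
    return [(u, v) for u in ticks for v in ticks]

def toy_fold(proxy_feature, seed, scale=0.05):
    """Hypothetical stand-in for the learned folding MLP: maps a proxy
    feature and a 2D seed to a small 3D offset around the local center."""
    u, v = seed
    return (scale * u, scale * v, scale * proxy_feature[0] * u * v)

def generate_points(centers, proxy_features, steps=3):
    """For every local center, fold the 2D grid into offsets and shift
    them by the center to obtain the dense local patch."""
    grid = make_grid(steps)
    points = []
    for center, feat in zip(centers, proxy_features):
        for seed in grid:
            offset = toy_fold(feat, seed)
            points.append(tuple(c + o for c, o in zip(center, offset)))
    return points

centers = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
proxy_features = [[1.0], [2.0]]
dense = generate_points(centers, proxy_features, steps=3)
print(len(dense))  # 18
```

Because only relative offsets are predicted, the coarse centers fix the global layout and the folding function only has to model small local patches. The 386-channel and 387-channel inputs of `folding1` and `folding2` in the printed model look like a 384-dimensional feature concatenated with a 2D seed and a 3D intermediate point, respectively, though that reading is an inference from the layer sizes.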

4.6 损失函数

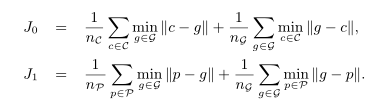

我们用C表示这些nc local中心,用p表示完成的点云的np点。给出ground-truth completed point cloud G,这两种预测的损失函数可写成:
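The printed model further down lists `ChamferDistanceL1` as its loss function. A minimal pure-Python sketch of a symmetric Chamfer Distance with unsquared Euclidean distances is given below, applied once to the coarse centers C and once to the dense points P. The exact normalisation is an assumption (some implementations average the two directions instead of summing them), and the toy point sets are invented for the example.

```python
import math

def chamfer_distance_l1(pred, gt):
    """Symmetric Chamfer Distance using unsquared Euclidean distances:
    mean nearest-neighbour distance pred -> gt plus mean gt -> pred."""
    def one_way(src, dst):
        return sum(min(math.dist(s, d) for d in dst) for s in src) / len(src)
    return one_way(pred, gt) + one_way(gt, pred)

G = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]                      # ground truth
C = [(0.0, 0.0, 0.0)]                                        # coarse centers
P = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]      # dense points

loss_coarse = chamfer_distance_l1(C, G)   # penalises missing coverage of G
loss_dense = chamfer_distance_l1(P, G)    # penalises the stray point in P
total_loss = loss_coarse + loss_dense
```

The two directions catch different failures: the term from the prediction to G punishes stray points, while the term from G to the prediction punishes regions of the shape that were not covered.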

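5. Benchmarks

In this section, we first introduce the new benchmarks and the evaluation metrics for diverse point cloud completion. We then show the results of our method and several baseline methods on the new benchmarks. Finally, we verify the effectiveness of our model on the widely used PCN dataset and the KITTI benchmark. We also provide ablation studies and a visual analysis of our method.

We choose to generate the samples in our benchmarks from the synthetic dataset ShapeNet [47], because it contains complete object models that cannot be obtained from real-world datasets such as ScanNet [5] and S3DIS [2]. What sets our benchmarks apart is that they contain more object categories, more incomplete patterns, and more viewpoints. In addition, we pay more attention to the ability of networks to handle objects from novel categories that do not appear in the training set.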

6.实验

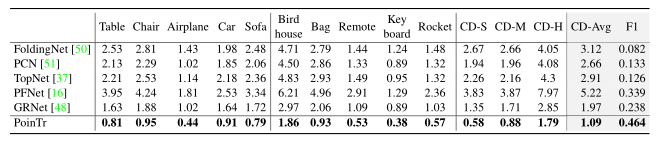

我们的方法和最先进的方法在ShapeNet-34上的结果。我们以3个难度等级报告34个可见类别和21个不可见类别的结果。我们使用CD- s, CD- m和CD- h来表示简单,中等和硬设置下的CD结果。我们还提供基于F-Score@1%度量的结果。
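For readers unfamiliar with the F-Score metric, a minimal sketch of the usual definition for point clouds follows: precision is the fraction of predicted points within a distance threshold of the ground truth, recall is the fraction of ground-truth points within the threshold of the prediction, and the F-Score is their harmonic mean. The threshold value 0.01 used below is an assumption about what "1%" refers to, and the point sets are toy data.

```python
import math

def f_score(pred, gt, threshold):
    """Harmonic mean of precision and recall at a distance threshold."""
    def hit_rate(src, dst):
        hits = sum(1 for s in src
                   if min(math.dist(s, d) for d in dst) < threshold)
        return hits / len(src)
    precision = hit_rate(pred, gt)   # predicted points close to the ground truth
    recall = hit_rate(gt, pred)      # ground-truth points covered by the prediction
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)]
score = f_score(pred, gt, threshold=0.01)
```

In this toy case precision is 2/3 and recall is 1, so the score is 0.8. Unlike CD, where lower is better, a higher F-Score is better.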

7. Reference

Network structure

    PoinTr(
      (base_model): PCTransformer(
        (grouper): DGCNN_Grouper(
          (input_trans): Conv1d(3, 8, kernel_size=(1,), stride=(1,))
          (layer1): Sequential(
            (0): Conv2d(16, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)
            (1): GroupNorm(4, 32, eps=1e-05, affine=True)
            (2): LeakyReLU(negative_slope=0.2)
          )
          (layer2): Sequential(
            (0): Conv2d(64, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
            (1): GroupNorm(4, 64, eps=1e-05, affine=True)
            (2): LeakyReLU(negative_slope=0.2)
          )
          (layer3): Sequential(
            (0): Conv2d(128, 64, kernel_size=(1, 1), stride=(1, 1), bias=False)
            (1): GroupNorm(4, 64, eps=1e-05, affine=True)
            (2): LeakyReLU(negative_slope=0.2)
          )
          (layer4): Sequential(
            (0): Conv2d(128, 128, kernel_size=(1, 1), stride=(1, 1), bias=False)
            (1): GroupNorm(4, 128, eps=1e-05, affine=True)
            (2): LeakyReLU(negative_slope=0.2)
          )
        )
        (pos_embed): Sequential(
          (0): Conv1d(3, 128, kernel_size=(1,), stride=(1,))
          (1): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): LeakyReLU(negative_slope=0.2)
          (3): Conv1d(128, 384, kernel_size=(1,), stride=(1,))
        )
        (input_proj): Sequential(
          (0): Conv1d(128, 384, kernel_size=(1,), stride=(1,))
          (1): BatchNorm1d(384, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): LeakyReLU(negative_slope=0.2)
          (3): Conv1d(384, 384, kernel_size=(1,), stride=(1,))
        )
        (encoder): ModuleList(
          (0-5): 6 x Block(
            (norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (attn): Attention(
              (qkv): Linear(in_features=384, out_features=1152, bias=False)
              (attn_drop): Dropout(p=0.0, inplace=False)
              (proj): Linear(in_features=384, out_features=384, bias=True)
              (proj_drop): Dropout(p=0.0, inplace=False)
            )
            (drop_path): Identity()
            (norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (knn_map): Sequential(
              (0): Linear(in_features=768, out_features=384, bias=True)
              (1): LeakyReLU(negative_slope=0.2)
            )
            (merge_map): Linear(in_features=768, out_features=384, bias=True)
            (mlp): Mlp(
              (fc1): Linear(in_features=384, out_features=768, bias=True)
              (act): GELU()
              (fc2): Linear(in_features=768, out_features=384, bias=True)
              (drop): Dropout(p=0.0, inplace=False)
            )
          )
        )
        (increase_dim): Sequential(
          (0): Conv1d(384, 1024, kernel_size=(1,), stride=(1,))
          (1): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): LeakyReLU(negative_slope=0.2)
          (3): Conv1d(1024, 1024, kernel_size=(1,), stride=(1,))
        )
        (coarse_pred): Sequential(
          (0): Linear(in_features=1024, out_features=1024, bias=True)
          (1): ReLU(inplace=True)
          (2): Linear(in_features=1024, out_features=672, bias=True)
        )
        (mlp_query): Sequential(
          (0): Conv1d(1027, 1024, kernel_size=(1,), stride=(1,))
          (1): LeakyReLU(negative_slope=0.2)
          (2): Conv1d(1024, 1024, kernel_size=(1,), stride=(1,))
          (3): LeakyReLU(negative_slope=0.2)
          (4): Conv1d(1024, 384, kernel_size=(1,), stride=(1,))
        )
        (decoder): ModuleList(
          (0-7): 8 x DecoderBlock(
            (norm1): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (self_attn): Attention(
              (qkv): Linear(in_features=384, out_features=1152, bias=False)
              (attn_drop): Dropout(p=0.0, inplace=False)
              (proj): Linear(in_features=384, out_features=384, bias=True)
              (proj_drop): Dropout(p=0.0, inplace=False)
            )
            (norm_q): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (norm_v): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (attn): CrossAttention(
              (q_map): Linear(in_features=384, out_features=384, bias=False)
              (k_map): Linear(in_features=384, out_features=384, bias=False)
              (v_map): Linear(in_features=384, out_features=384, bias=False)
              (attn_drop): Dropout(p=0.0, inplace=False)
              (proj): Linear(in_features=384, out_features=384, bias=True)
              (proj_drop): Dropout(p=0.0, inplace=False)
            )
            (drop_path): Identity()
            (norm2): LayerNorm((384,), eps=1e-05, elementwise_affine=True)
            (mlp): Mlp(
              (fc1): Linear(in_features=384, out_features=768, bias=True)
              (act): GELU()
              (fc2): Linear(in_features=768, out_features=384, bias=True)
              (drop): Dropout(p=0.0, inplace=False)
            )
            (knn_map): Sequential(
              (0): Linear(in_features=768, out_features=384, bias=True)
              (1): LeakyReLU(negative_slope=0.2)
            )
            (merge_map): Linear(in_features=768, out_features=384, bias=True)
            (knn_map_cross): Sequential(
              (0): Linear(in_features=768, out_features=384, bias=True)
              (1): LeakyReLU(negative_slope=0.2)
            )
            (merge_map_cross): Linear(in_features=768, out_features=384, bias=True)
          )
        )
      )
      (foldingnet): Fold(
        (folding1): Sequential(
          (0): Conv1d(386, 256, kernel_size=(1,), stride=(1,))
          (1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): ReLU(inplace=True)
          (3): Conv1d(256, 128, kernel_size=(1,), stride=(1,))
          (4): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (5): ReLU(inplace=True)
          (6): Conv1d(128, 3, kernel_size=(1,), stride=(1,))
        )
        (folding2): Sequential(
          (0): Conv1d(387, 256, kernel_size=(1,), stride=(1,))
          (1): BatchNorm1d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (2): ReLU(inplace=True)
          (3): Conv1d(256, 128, kernel_size=(1,), stride=(1,))
          (4): BatchNorm1d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          (5): ReLU(inplace=True)
          (6): Conv1d(128, 3, kernel_size=(1,), stride=(1,))
        )
      )
      (increase_dim): Sequential(
        (0): Conv1d(384, 1024, kernel_size=(1,), stride=(1,))
        (1): BatchNorm1d(1024, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): LeakyReLU(negative_slope=0.2)
        (3): Conv1d(1024, 1024, kernel_size=(1,), stride=(1,))
      )
      (reduce_map): Linear(in_features=1411, out_features=384, bias=True)
      (loss_func): ChamferDistanceL1()
    )
