Implementing a residual network (ResNet) in code, using the same dataset as before.
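Every block in the network below follows the same idea. A few stacked layers learn a correction `F(x)`, the block's input is added back onto it, and the block outputs `relu(F(x) + x)`. When the input and output widths differ, the shortcut first passes through a projection, which is the role of the 1x1 convolution on the shortcut path of `convolutional_block`.

Here is a minimal NumPy sketch of that idea. It assumes dense matrix products as a stand-in for convolutions, and `residual_unit` is a hypothetical helper that is not part of the Keras code.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_unit(x, w1, w2, w_proj=None):
    """Toy fully connected residual unit: relu(F(x) + shortcut(x)).

    x: (batch, d_in); w1: (d_in, d_hidden); w2: (d_hidden, d_out).
    w_proj: optional (d_in, d_out) projection, needed when d_in != d_out
    (the dense analogue of a 1x1 convolution on the shortcut path).
    """
    f = relu(x @ w1) @ w2                       # main path F(x), no ReLU before the add
    shortcut = x if w_proj is None else x @ w_proj
    return relu(f + shortcut)

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))

# Identity shortcut: input and output widths match (like identity_block).
out_id = residual_unit(x, rng.standard_normal((8, 16)), rng.standard_normal((16, 8)))
print(out_id.shape)  # (4, 8)

# If the main path outputs zeros, the unit reduces to relu(x).
print(np.allclose(residual_unit(x, rng.standard_normal((8, 16)), np.zeros((16, 8))), relu(x)))  # True

# Projection shortcut: widths differ (like convolutional_block).
out_proj = residual_unit(x, rng.standard_normal((8, 16)), rng.standard_normal((16, 32)),
                         w_proj=rng.standard_normal((8, 32)))
print(out_proj.shape)  # (4, 32)
```

The zero-weight case shows why deep residual stacks are easy to optimize: a block can fall back to (nearly) passing its input through instead of having to learn the identity mapping from scratch.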

code:

import numpy as np
import tensorflow as tf
from tf_utils import *  # provides load_dataset() used below
import matplotlib.pyplot as plt

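# Record the training loss at the end of each epoch
class LossHistory(tf.keras.callbacks.Callback):
    def on_train_begin(self, logs=None):
        self.losses = []

    def on_epoch_end(self, epoch, logs=None):
        self.losses.append(logs['loss'])


# Print the loss after each epoch (not used below; it could be attached via
# tf.keras.callbacks.LambdaCallback(on_epoch_end=loss_callback))
def loss_callback(epoch, logs):
    # logs['loss'] is already a plain float, so no .numpy() call is needed
    loss = logs['loss']
    print('Epoch {}: Loss = {}'.format(epoch, loss))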

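def convolutional_block(x, f, filters, s):
    """Bottleneck residual block whose shortcut uses a 1x1 convolution, so the
    block can change the channel count and (with s > 1) the spatial size."""
    filter1, filter2, filter3 = filters
    x_shortcut = x

    # main path
    # first: 1x1 conv with stride s
    x = tf.keras.layers.Conv2D(filter1, kernel_size=1, strides=s, padding='valid')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    # second: f x f conv; 'same' padding keeps the spatial size
    x = tf.keras.layers.Conv2D(filter2, kernel_size=f, strides=1, padding='same')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    # third: 1x1 conv up to filter3 channels (no ReLU before the addition)
    x = tf.keras.layers.Conv2D(filter3, kernel_size=1, strides=1, padding='valid')(x)
    x = tf.keras.layers.BatchNormalization()(x)

    # shortcut path: 1x1 conv with the same stride so its shape matches the main path
    x_shortcut = tf.keras.layers.Conv2D(filter3, kernel_size=1, strides=s, padding='valid')(x_shortcut)
    # BatchNorm must be applied to x_shortcut, not to the main-path x
    x_shortcut = tf.keras.layers.BatchNormalization()(x_shortcut)

    x = tf.keras.layers.add([x, x_shortcut])
    x = tf.keras.layers.ReLU()(x)
    return x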

def identity_block(x, f, filters):
    """Bottleneck residual block with an identity shortcut; f3 must equal the
    number of input channels so that the addition is shape-compatible."""
    f1, f2, f3 = filters
    x_shortcut = x

    # main path
    x = tf.keras.layers.Conv2D(f1, kernel_size=1, strides=1, padding='valid')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.Conv2D(f2, kernel_size=f, strides=1, padding='same')(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.Conv2D(f3, kernel_size=1, strides=1, padding='valid')(x)
    x = tf.keras.layers.BatchNormalization()(x)

    # add the unchanged input, then apply ReLU
    x = tf.keras.layers.add([x, x_shortcut])
    x = tf.keras.layers.ReLU()(x)
    return x

def build_resnet(input_shape, num_classes=6):
    '''num_classes: number of classes; input_shape: shape of one input sample'''
    input_tensor = tf.keras.layers.Input(shape=input_shape)
    x = tf.keras.layers.ZeroPadding2D(padding=(3, 3))(input_tensor)

    # stage 1: 7x7 conv + max pooling
    x = tf.keras.layers.Conv2D(64, kernel_size=7, strides=2)(x)
    x = tf.keras.layers.BatchNormalization()(x)
    x = tf.keras.layers.ReLU()(x)
    x = tf.keras.layers.MaxPooling2D((3, 3), strides=2)(x)

    # stage 2
    x = convolutional_block(x, f=3, filters=[64, 64, 256], s=1)
    x = identity_block(x, f=3, filters=[64, 64, 256])
    x = identity_block(x, f=3, filters=[64, 64, 256])

    # stage 3 (standard ResNet-50 downsamples here with s=2)
    x = convolutional_block(x, f=3, filters=[128, 128, 512], s=2)
    x = identity_block(x, f=3, filters=[128, 128, 512])
    x = identity_block(x, f=3, filters=[128, 128, 512])
    x = identity_block(x, f=3, filters=[128, 128, 512])

    # stage 4
    x = convolutional_block(x, f=3, filters=[256, 256, 1024], s=2)
    x = identity_block(x, f=3, filters=[256, 256, 1024])
    x = identity_block(x, f=3, filters=[256, 256, 1024])
    x = identity_block(x, f=3, filters=[256, 256, 1024])
    x = identity_block(x, f=3, filters=[256, 256, 1024])
    x = identity_block(x, f=3, filters=[256, 256, 1024])

    # stage 5 (bottleneck width 512, matching the convolutional block)
    x = convolutional_block(x, f=3, filters=[512, 512, 2048], s=2)
    x = identity_block(x, f=3, filters=[512, 512, 2048])
    x = identity_block(x, f=3, filters=[512, 512, 2048])
    x = tf.keras.layers.AveragePooling2D(pool_size=(2, 2))(x)

    # output layer
    x = tf.keras.layers.Flatten()(x)
    x = tf.keras.layers.Dense(num_classes, activation='softmax')(x)

    model = tf.keras.models.Model(inputs=input_tensor, outputs=x)
    return model

# Load the data
X_train_orig, Y_train_orig, X_test_orig, Y_test_orig, classes = load_dataset()
print(X_train_orig.shape)
print(Y_train_orig.shape)

# Normalize the pixel values (divide by 255)
X_train_orig = X_train_orig / 255.0
X_test_orig = X_test_orig / 255.0

# Flatten the label arrays to shape (m,) of integer class indices,
# the format SparseCategoricalCrossentropy expects
Y_train_orig = Y_train_orig.T
Y_train_orig = np.squeeze(Y_train_orig)
Y_test_orig = Y_test_orig.T
Y_test_orig = np.squeeze(Y_test_orig)

# Build and compile the model
model = build_resnet(input_shape=(64, 64, 3), num_classes=6)
# The last Dense layer already applies softmax, so the loss receives
# probabilities, not logits: from_logits must be False
model.compile(optimizer='adam',
              loss=tf.keras.losses.SparseCategoricalCrossentropy(from_logits=False),
              metrics=['accuracy'])
loss_history = LossHistory()

# Train the model
model.fit(X_train_orig, Y_train_orig, epochs=50,
          validation_data=(X_test_orig, Y_test_orig),
          callbacks=[loss_history])

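# Convert the recorded training losses to a NumPy array and plot them
losses_np = np.array(loss_history.losses)
plt.plot(losses_np)
plt.xlabel('epoch')
plt.ylabel('training loss')
plt.show()

# Save the model
model.save("model.h5")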
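`AveragePooling2D(pool_size=(2, 2))` is the last spatial operation, so it helps to trace the feature-map size through the network. The stride of the first convolutional block in stage 3 (`s=1` or `s=2`) changes what reaches that layer.

Below is a sketch in plain Python. It assumes square 64x64 inputs and Keras' default 'valid' padding for the stem convolution and the pooling layers. `conv_out` and `trace_resnet` are hypothetical helpers for this illustration, not part of `tf_utils` or Keras.

```python
def conv_out(n, f, s, padding):
    """Output length along one spatial axis for a Keras Conv2D/pooling layer.

    'valid': floor((n - f) / s) + 1;  'same': ceil(n / s).
    """
    if padding == "valid":
        return (n - f) // s + 1
    if padding == "same":
        return -(-n // s)  # ceiling division
    raise ValueError(padding)

def trace_resnet(n=64, stage_strides=(1, 2, 2, 2)):
    """Spatial size after each part of build_resnet; stage_strides are the
    s values of the convolutional blocks in stages 2-5."""
    sizes = {"input": n}
    n = n + 2 * 3                      # ZeroPadding2D(padding=(3, 3))
    n = conv_out(n, 7, 2, "valid")     # Conv2D(64, kernel_size=7, strides=2)
    n = conv_out(n, 3, 2, "valid")     # MaxPooling2D((3, 3), strides=2)
    sizes["stem"] = n
    for stage, s in zip(range(2, 6), stage_strides):
        # Only the strided 1x1 convs of convolutional_block change the size;
        # the 'same'-padded f x f conv and the identity blocks keep it.
        n = conv_out(n, 1, s, "valid")
        sizes[f"stage{stage}"] = n
    n = conv_out(n, 2, 2, "valid")     # AveragePooling2D((2, 2)); strides default to pool_size
    sizes["avgpool"] = n
    return sizes

print(trace_resnet())
# {'input': 64, 'stem': 15, 'stage2': 15, 'stage3': 8, 'stage4': 4, 'stage5': 2, 'avgpool': 1}
print(trace_resnet(stage_strides=(1, 1, 2, 2)))
# {'input': 64, 'stem': 15, 'stage2': 15, 'stage3': 15, 'stage4': 8, 'stage5': 4, 'avgpool': 2}
```

With `s=2` in stage 3, `Flatten` receives a 1x1x2048 map (2048 features). With `s=1` it receives 2x2x2048 (8192 features), which gives the final Dense layer four times as many weights. `model.summary()` prints the actual layer shapes and can be used to check the trace.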

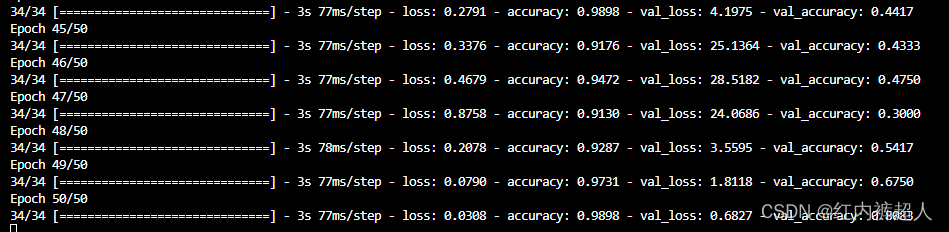

Results:

Great, it overfit. On top of that, the test-set loss fluctuated wildly, and the cause is still unknown.
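The cause of the fluctuation is not established here, but one loss setting in the listing is worth ruling out. The last layer is `Dense(num_classes, activation='softmax')`, so the model already outputs probabilities and the loss must be built with `from_logits=False` (the default). Passing `from_logits=True` would make the loss apply softmax a second time.

The NumPy sketch below compares the two cases. `softmax` and `sparse_ce` are hypothetical helpers written for this illustration, not Keras functions, and the logits are made-up values.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def sparse_ce(probs, labels):
    """Mean sparse categorical cross-entropy, given class probabilities."""
    return float(-np.mean(np.log(probs[np.arange(len(labels)), labels])))

# Made-up logits for 2 samples and 6 classes, both confidently correct
logits = np.array([[4.0, 0.0, -2.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0, 0.0, 0.0, 5.0]])
labels = np.array([0, 5])

probs = softmax(logits)                     # what the softmax output layer produces
correct = sparse_ce(probs, labels)          # loss on probabilities (from_logits=False)
double = sparse_ce(softmax(probs), labels)  # softmax applied twice (from_logits=True on probabilities)
print(round(correct, 3), round(double, 3))

# Even a perfect one-hot prediction cannot push the double-softmax loss
# below log(e + 5) - 1, about 1.04, with 6 classes
print(sparse_ce(softmax(np.eye(6)), np.arange(6)))
```

Applying softmax twice squashes the probabilities toward uniform. The loss then has a floor above 1 and much weaker gradients, so the training signal does not reflect how confident the model actually is.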
