http://blog.csdn.net/emilmatthew/article/details/1327367

Digital Image Processing Practice [3] --- Night Image Enhancement

EmilMatthew (EmilMatthew@126.com)

[Category] Algorithm innovation, algorithm comparison

[Rating] ★★★★

[Abstract] This article proposes two enhancement algorithms for night-time grayscale images. The first segments the image using connected sets of extremely dark regions and combines that segmentation with nonlinear dynamic range adjustment and histogram equalization. Its main strength is that the principal objects in the scene stand out with clear outlines.

The second exploits the shape of the night-image histogram by converting the image's green channel to a grayscale image. Its main strength is vivid scene detail and a good visual result.

[Keywords] Night vision, image enhancement, nonlinear dynamic adjustment, connected set, breadth-first search, green channel

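[0 Introduction]

Night images are characterized by low light: dark regions occupy most of the frame, and the gray-level histogram is correspondingly concentrated at the left end of the axis. The main job of night-image enhancement is therefore to bring out the detail in the darker areas without over-brightening the picture and losing too much detail. [1] proposes cascading a contrast enhancement method with histogram equalization. That method introduces some noise of its own, but its useful lesson is that several effects can be combined to get a better result. [2] proposes an improvement on the BBHE (bi-histogram equalization) algorithm whose main feature is that it avoids the merging of gray levels and preserves image brightness while enhancing. This article starts from different processing needs and considers two enhancement schemes. Scheme 1, the segmentation algorithm based on connected sets of extremely dark regions, is closer to letting a machine "see": its main feature is that the principal objects are rendered very clearly. Scheme 2, converting the green channel to a grayscale image, targets night grayscale images whose gray levels are concentrated below 64. Its results are better suited to the human eye, and its main feature is that it shows the detail of the picture.

[1 Notation]

p: the percentage of all pixels taken up by the darkest gray-level pixels

[2 Segmentation algorithm based on connected sets of extremely dark regions]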

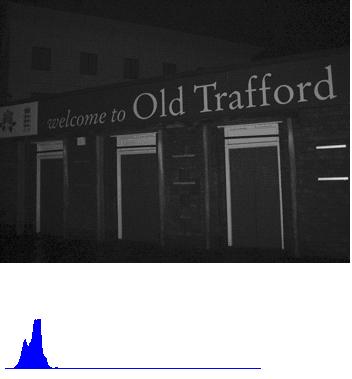

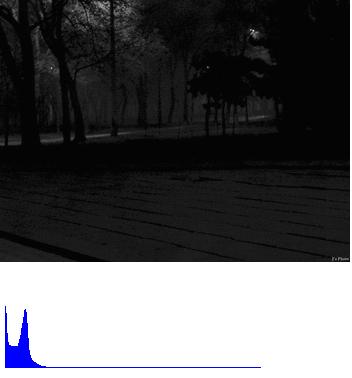

2.0 Source of the idea

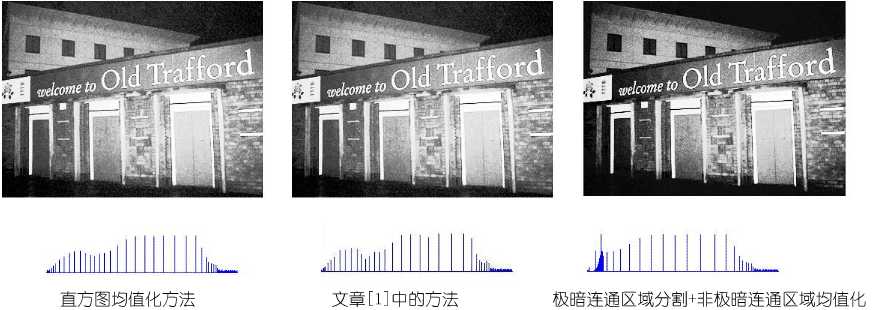

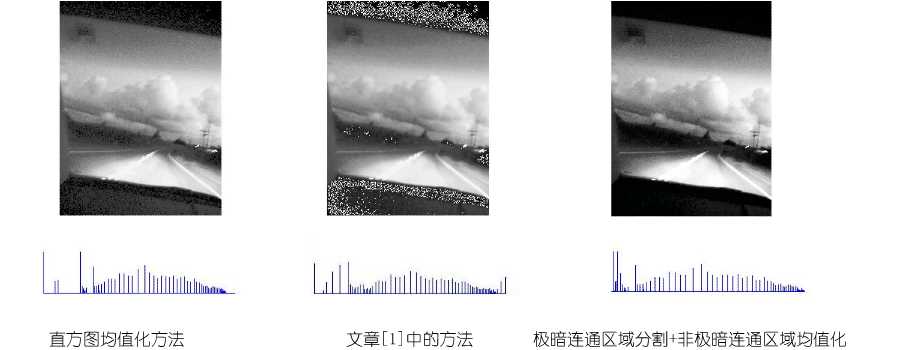

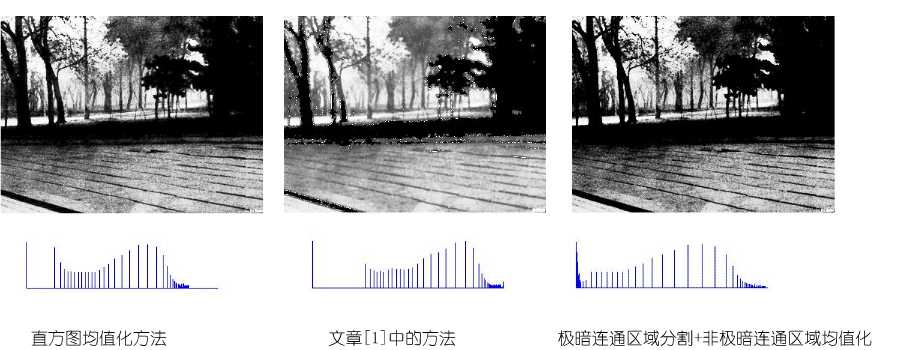

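The idea comes from the observation that the large connected regions in a night image are dark regions. Here a "dark region" means all the pixels that fall within a given percentage (call it p) of the image's total pixel count, counted from the darkest gray level upward. Below are three test images with their gray-level bar charts (note: because a few gray levels account for a disproportionate share of the pixels, the bars are height-capped here):

Figure 1: Test image 1 and its gray-level bar chart

Figure 2: Test image 2 and its gray-level bar chart

Figure 3: Test image 3 and its gray-level bar chart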

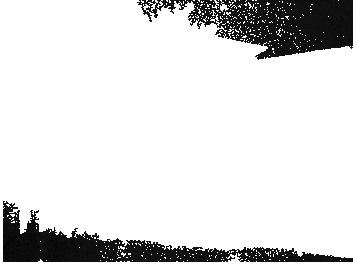

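So even images that all look dark differ in how their gray levels are distributed: in Figure 1 the pixels with gray level < 5 are almost negligible, whereas in Figures 2 and 3 they make up a substantial share of all pixels. The darkest region should therefore be delimited not by a fixed upper gray level but by the percentage p of the image's pixels taken up by its darkest gray levels. Below are the extremely dark regions extracted from the three images with p=0.15:

Figure 4: Test image 1 (p=0.15)

Figure 5: Test image 2 (p=0.15)

Figure 6: Test image 3 (p=0.15)

Extracting the extremely dark regions from the three test images shows that, for night images, the extremely dark regions are clearly connected.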
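The percentile rule described above can be sketched as follows. This is a minimal illustration, not the program from Appendix [0]: the function name `darkThreshold` and the flat `std::vector` image layout are assumptions made for the example, and the appendix code additionally insists on at least six non-empty gray levels, which this sketch leaves out.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns the smallest gray level t such that the pixels with gray level <= t
// make up at least a fraction p of the image. Returns -1 for an empty image.
// Hypothetical helper: the name and signature are not from the original program.
int darkThreshold(const std::vector<std::uint8_t>& pixels, double p)
{
    if (pixels.empty()) return -1;

    std::array<std::size_t, 256> hist{};          // zero-initialised histogram
    for (std::uint8_t v : pixels) ++hist[v];

    const double target = p * static_cast<double>(pixels.size());
    std::size_t cumulative = 0;
    for (int level = 0; level < 256; ++level) {
        cumulative += hist[level];                // pixels with gray level <= level
        if (static_cast<double>(cumulative) >= target) return level;
    }
    return 255;                                   // only reached when p > 1
}
```

Because the threshold follows the histogram, an image whose darkest pixels sit around level 30 and one whose darkest pixels sit at level 0 both yield a dark region of roughly the same relative size.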

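2.1 Core of the algorithm

Connected regions are introduced because, if the extremely dark area were delimited by p alone, pixels that are fairly dark but sit inside brighter areas would receive the same coarse treatment as the connected extremely dark regions, and their detail would be hard to preserve.

The overall framework of the algorithm is:

1. Use breadth-first search, with the gray-level threshold derived from p, to find the extremely dark connected regions.

2. Apply histogram equalization to the pixels outside the extremely dark connected regions.

Two points need explaining:

1. A connected dark region counts as an extremely dark connected region when the ratio of its pixel count to the total pixel count exceeds q; here q=0.02.

2. The extremely dark connected regions themselves are not equalized, for this reason: even if they were, their pixel values would still be confined to an extremely dark range that is visually almost indistinguishable, so there is little to gain.

See Appendix [0] for the implementation.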
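For readers who want the search step in isolation, here is a compact sketch of step 1 using the standard library queue instead of the custom queue type in Appendix [0]. The function name `largeDarkRegions`, the row-major `std::vector` layout, and the boolean result mask are assumptions for this example. It uses 4-connectivity (left, up, right, down), as the appendix code does.

```cpp
#include <cstddef>
#include <cstdint>
#include <queue>
#include <utility>
#include <vector>

// Marks the pixels that belong to a "large" 4-connected dark region.
// img is row-major (width*height gray levels); a pixel is dark if <= limit.
// A region is kept when it holds more than q * (width*height) pixels.
// Hypothetical helper: names are not taken from the original program.
std::vector<bool> largeDarkRegions(const std::vector<std::uint8_t>& img,
                                   int width, int height, int limit, double q)
{
    const std::size_t total = static_cast<std::size_t>(width) * height;
    std::vector<bool> visited(total, false);
    std::vector<bool> keep(total, false);
    const int dx[4] = {-1, 0, 1, 0};
    const int dy[4] = {0, -1, 0, 1};

    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t start = static_cast<std::size_t>(y) * width + x;
            if (visited[start] || img[start] > limit) continue;

            // Breadth-first search that collects one connected dark region.
            std::vector<std::size_t> region;
            std::queue<std::pair<int, int> > frontier;
            frontier.push(std::make_pair(x, y));
            visited[start] = true;   // mark on enqueue so no pixel is queued twice
            while (!frontier.empty()) {
                const std::pair<int, int> cur = frontier.front();
                frontier.pop();
                region.push_back(static_cast<std::size_t>(cur.second) * width + cur.first);
                for (int k = 0; k < 4; ++k) {
                    const int nx = cur.first + dx[k];
                    const int ny = cur.second + dy[k];
                    if (nx < 0 || ny < 0 || nx >= width || ny >= height) continue;
                    const std::size_t idx = static_cast<std::size_t>(ny) * width + nx;
                    if (visited[idx] || img[idx] > limit) continue;
                    visited[idx] = true;
                    frontier.push(std::make_pair(nx, ny));
                }
            }

            // Keep the region only if it is large enough (the q test).
            if (static_cast<double>(region.size()) > q * static_cast<double>(total)) {
                for (std::size_t idx : region) keep[idx] = true;
            }
        }
    }
    return keep;
}
```

Marking a pixel as visited at the moment it is queued plays the same role as the temporary -3 marker in the appendix code: it prevents the same pixel from entering the queue more than once.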

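Experimental results:

Figure 7: Comparison of the algorithms on test image 1

Figure 8: Comparison of the algorithms on test image 2

Figure 9: Comparison of the algorithms on test image 3

The comparison shows that histogram equalization leaves the picture slightly too bright, while the method of [1], besides introducing noise, also makes the details look somewhat blurred. The method proposed here, "extremely dark connected region segmentation + equalization of the pixels outside those regions" (DPEQU for short), gives clear contrast and emphasizes the important content.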
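The second half of DPEQU, equalizing only the pixels outside the extremely dark connected regions, can be sketched as below. This is an illustration under assumptions: `equalizeOutsideMask` and its `excluded` mask are hypothetical names, and the lookup table is built from the plain cumulative distribution, which differs slightly from how Appendix [0] fills its `pArr` table.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>
#include <vector>

// Equalizes only the pixels whose `excluded` flag is false. Excluded pixels
// (the large dark connected regions) are copied through unchanged.
// Hypothetical helper: names are not taken from the original program.
std::vector<std::uint8_t> equalizeOutsideMask(const std::vector<std::uint8_t>& img,
                                              const std::vector<bool>& excluded)
{
    // Histogram over the pixels that take part in the equalization.
    std::array<std::size_t, 256> hist{};
    std::size_t counted = 0;
    for (std::size_t i = 0; i < img.size(); ++i) {
        if (!excluded[i]) { ++hist[img[i]]; ++counted; }
    }

    std::vector<std::uint8_t> out(img);
    if (counted == 0) return out;                 // nothing to equalize

    // Lookup table from the cumulative distribution of the counted pixels.
    std::array<std::uint8_t, 256> lut{};
    std::size_t cumulative = 0;
    for (int level = 0; level < 256; ++level) {
        cumulative += hist[level];
        lut[level] = static_cast<std::uint8_t>(255 * cumulative / counted);
    }

    for (std::size_t i = 0; i < img.size(); ++i) {
        if (!excluded[i]) out[i] = lut[img[i]];
    }
    return out;
}
```

Leaving the excluded pixels out of the histogram matters as much as leaving them out of the remapping: otherwise the large dark mass would dominate the cumulative distribution and compress the levels available to the rest of the picture.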

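2.2 Improving the algorithm

Comparison showed that DPEQU on its own still has shortcomings. For example, pixels near an extremely dark region are easily "swallowed" visually by that region, and their information is lost. The picture is therefore first adjusted with a nonlinear dynamic range adjustment and then processed with DPEQU, which gives the "nonlinear dynamic range adjustment + extremely dark connected region segmentation + equalization of the pixels outside those regions" enhancement method (DADPEQU for short).

The nonlinear dynamic range adjustment formula is:

g(i,j)=c·lg(1+f(i,j)) (i=1,2,…,m; j=1,2,…,n)

where f(i,j) is the input gray level, g(i,j) is the adjusted gray level, lg is the base-10 logarithm, and c is computed as follows:

Let s be the gray-level range of the image (maximum minus minimum); then c=s/lg(1+s)

See Appendix [1] for the implementation of the nonlinear dynamic range adjustment.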
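As a quick way to see what the formula does to dark values, here is a small sketch of the log adjustment. The function name `logDynamicRange` and the flat `std::vector` layout are assumptions for the example. It rounds to the nearest level, whereas the code in Appendix [1] truncates.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Applies g = c * log10(1 + f) with c = s / log10(1 + s), where s is the
// gray-level span (max - min) of the input image.
// Hypothetical helper: names are not taken from the original program.
std::vector<std::uint8_t> logDynamicRange(const std::vector<std::uint8_t>& img)
{
    std::vector<std::uint8_t> out(img);
    if (img.empty()) return out;

    const auto mm = std::minmax_element(img.begin(), img.end());
    const int span = static_cast<int>(*mm.second) - static_cast<int>(*mm.first);
    if (span == 0) return out;        // flat image: log10(1 + 0) is 0, c is undefined

    const double c = span / std::log10(1.0 + span);
    for (std::size_t i = 0; i < img.size(); ++i) {
        const double g = c * std::log10(1.0 + img[i]);
        // Round to nearest and clamp to the 8-bit range.
        out[i] = static_cast<std::uint8_t>(std::min(255L, std::lround(g)));
    }
    return out;
}
```

For an image spanning 0 to 255, c is about 105.9, so an input level of 10 maps to about 110 while 255 stays at 255. That strong lift of the low levels is what rescues pixels next to the dark regions, and also why brighter images end up over-adjusted.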

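Because the nonlinear dynamic range adjustment over-adjusts images that are slightly brighter, such as test image 1, such images are not dynamically adjusted and only DPEQU is applied to them. An image is treated as belonging to this class when the pixels with gray level < 20 make up less than 15% of all pixels in the picture.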
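The decision rule is short enough to write down directly. The sketch below uses a hypothetical function name, `shouldApplyLogAdjust`, with the two constants from the text (level 20 and 15%) exposed as default parameters so that they are easy to experiment with.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Returns true when the image is dark enough for the log adjustment:
// pixels with gray level < darkLevel must make up at least minFraction
// of the picture. Hypothetical helper, not from the original program.
bool shouldApplyLogAdjust(const std::vector<std::uint8_t>& img,
                          int darkLevel = 20, double minFraction = 0.15)
{
    if (img.empty()) return false;

    std::size_t dark = 0;
    for (std::uint8_t v : img) {
        if (v < darkLevel) ++dark;
    }
    return static_cast<double>(dark) >= minFraction * static_cast<double>(img.size());
}
```

When this returns false, the image goes straight to DPEQU without the dynamic range adjustment.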

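Experimental results:

Figure 10: Test image 2 after enhancement with DADPEQU

Figure 11: Test image 3 after enhancement with DADPEQU

Judging from the results, the improvement over DPEQU is that the detail of the picture comes through much more strongly, which makes this an effective method for night-image enhancement. It has a weakness as well: locally bright areas are easily pushed too bright and then mask some of the detail in the dark areas. How to find a good balance point between brightening and bringing out detail remains a question worth thinking about and exploring.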

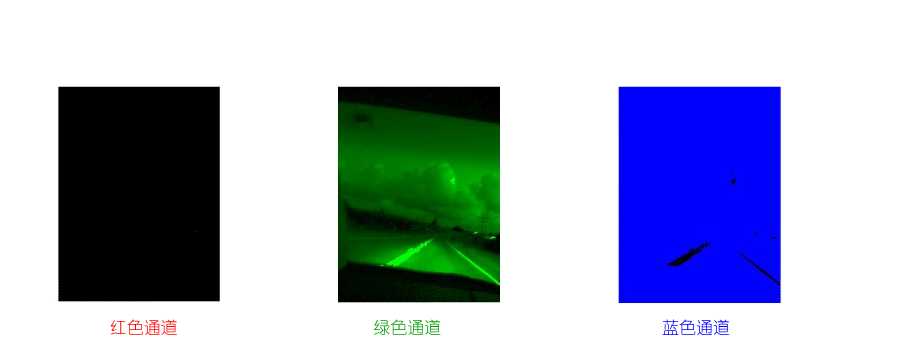

[3 Converting the green channel to a grayscale image]

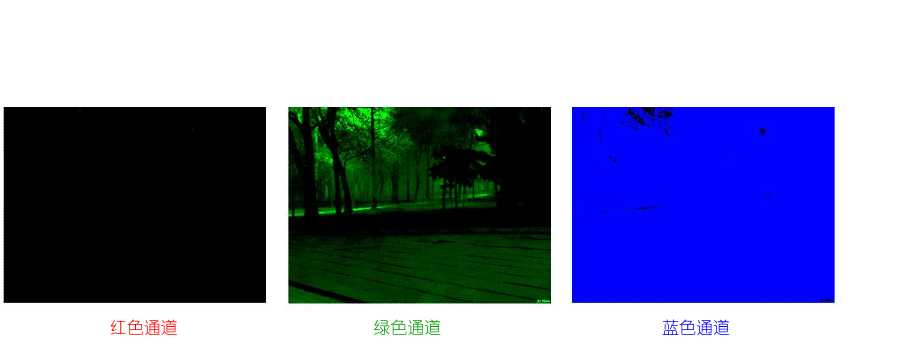

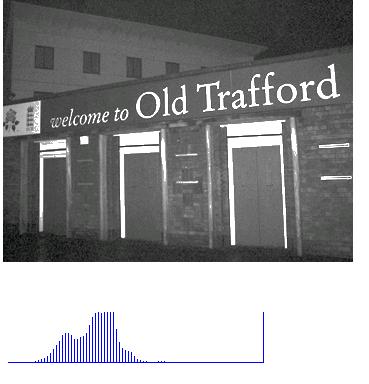

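The enhancement method described next is something of a trick. It looks very simple on the surface, but it exploits a property of night images [5]: most of their gray levels are < 64, which is the range where the green channel is most expressive while the red and blue channels show nothing. The original image is therefore first mapped from gray level to the green channel, and each green level is then output directly as the gray level of the same value. Below is the picture information carried by each of the three channels for the three images:

Figure 12: The three channels of test image 1

Figure 13: The three channels of test image 2

Figure 14: The three channels of test image 3

So there is good reason, when processing night images, to discard the red and blue channel information and keep only the green channel.

See Appendix [2] for the implementation.

Experimental results:

Figure 15: Test image 1 after enhancement with the green-channel-to-grayscale algorithm

Figure 16: Test image 2 after enhancement with the green-channel-to-grayscale algorithm

Figure 17: Test image 3 after enhancement with the green-channel-to-grayscale algorithm

Mathematically, the algorithm multiplies every pixel with gray level < 64 by 255/64 and simply sets pixels with gray level 64 or above to 255 (the code in Appendix [2] additionally ramps levels of 192 and above back down). Although this is only a simple linear transform, the pictures it produces clearly beat the other enhancement results shown in this article in realism and in the rendering of detail, which makes it very suitable when the result is meant for the human eye. The algorithm has a shortcoming too: the enhanced picture is still on the dark side, and applying a further enhancement such as equalization or nonlinear dynamic range adjustment leads back to the old problem of "swallowing" the information in the details. Finding a brightening algorithm that suits the grayscale image obtained from the green channel is therefore still a direction worth exploring.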
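The mapping can be written as a small pure function, which makes its three segments easy to check. This sketch uses a hypothetical name, `greenChannelLevel`, and follows the piecewise shape of the code in Appendix [2]. It multiplies before dividing so that the slope is the 255/64 described in the text (in integer arithmetic, 255/64 on its own truncates to 3).

```cpp
#include <cstdint>

// Maps a gray level to the green-channel level used as the output gray level:
// a linear ramp on [0, 64), saturation on [64, 192), and a ramp back down
// on [192, 255]. Hypothetical helper, not from the original program.
std::uint8_t greenChannelLevel(std::uint8_t gray)
{
    if (gray < 64) {
        return static_cast<std::uint8_t>(255 * gray / 64);      // slope 255/64
    }
    if (gray < 192) {
        return 255;                                             // saturated
    }
    return static_cast<std::uint8_t>(255 - 255 * (gray - 192) / 63);
}
```

For a typical night image almost every pixel falls in the first segment, so in practice the function acts as the linear stretch by 255/64.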

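[References and websites]

[0] Zhu Hong et al., Fundamentals of Digital Image Processing, Science Press, 2005.

[1] Zhang Yu, Wang Xiqin, Peng Yingning, An algorithm for night image enhancement, Journal of Tsinghua University (Science and Technology), 1999, Vol. 39, No. 9, pp. 79-80.

[2] Shen Jiali, Zhang Yu, Wang Xiutan, A new algorithm for night-vision image processing, Journal of Image and Graphics, June 2000, Vol. 5 (Series A), No. 6.

[3] Ma Zhifeng, Shi Caicheng, An adaptive fuzzy contrast enhancement algorithm for images, Laser & Infrared, Vol. 36, No. 3, March 2006.

[4] Wang Guoquan, Zhong Weibo, Research on the improvement and implementation of grayscale image enhancement algorithms, Application Research of Computers, 2004, No. 12, pp. 175-176.

[5] EmilMatthew, Playing with Digital Image Processing [2] --- Image Enhancement, http://blog.csdn.net/EmilMatthew/archive/2006/09/27/1290589.aspx

Program completed: 06/10/02

Article completed: 06/10/09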

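Appendix [0]

Extremely dark connected region segmentation algorithm (segmentation of the extremely dark connected regions + equalization of the pixels outside them)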

void BMParse::nightPhotoPationMethod

(HDC inHdc,int offsetX,int offsetY,float portionOfDark)

{

//0.Inner value declaration

int darkAreaPixelCount;

int darkAreaUpLimit; //gray-level threshold of this image, computed from p

unsigned long totalPixelNum;

unsigned long darkAreaNum;

unsigned long tmpDarkAreaNum;

unsigned long parsedNum;

unsigned long totalDarkAreaNum;

unsigned long tmpULVal;

int histVal[256];

float histPercent[256];

int darkAreaPixelIndex[256];

int darkAreaSetNum;

int usefulDarkSetID[300]; //labels of the connected sets that are kept

int usefulSetNum;

ePoint tmpPoint,tmpPoint2;

PSingleRearSeqQueue searchQueue;

//variables for the equalize operation

int arrHists[256];

float pArr[256];

long totalHistVal;

unsigned long outputPixel;

int tmpTableVal;

int i,j,k;

//1.Use the partition method to identify the very dark area

//1.0 determine the dark area up limit

searchQueue=createNullSingleRearSeqQueue();

darkAreaPixelCount=0;

darkAreaNum=0;

totalPixelNum=mBMFileInfo.bmHeight*mBMFileInfo.bmWidth;

for(i=0;i<256;i++)

{

histVal[i]=0;

histPercent[i]=0;

}

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

histVal[bmpBWMatrix[i][j]]++;

bmpBWBackMatrix[i][j]=0;

}

for(i=0;i<256;i++)

histPercent[i]=(float)histVal[i]/(float)totalPixelNum;

i=0;

while(darkAreaPixelCount<6&&i<256)

{

if(histPercent[i]>0)

{

darkAreaPixelIndex[darkAreaPixelCount]=i;

darkAreaPixelCount++;

darkAreaNum+=histVal[i];

}

i++;

}

//check if darkAreaPixel>=totalPixelNum*portionOfDark

if(darkAreaNum<totalPixelNum*portionOfDark)

{

while(darkAreaNum<totalPixelNum*portionOfDark&&i<256) //i<256 keeps the histogram index in range

{

if(histPercent[i]>0)

{

darkAreaPixelIndex[darkAreaPixelCount]=i;

darkAreaNum+=histVal[i];

darkAreaPixelCount++;

}

i++;

}

}

//exception handling: the scan ran past the last gray level

if(i==256)

{

return;

}

//1.1 find dark area set

darkAreaUpLimit=i-1;

darkAreaSetNum=0;

usefulSetNum=0;

parsedNum=0;

totalDarkAreaNum=0;

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

if(bmpBWBackMatrix[i][j]==0)

{

if(bmpBWMatrix[i][j]>darkAreaUpLimit)

{

parsedNum++;

bmpBWBackMatrix[i][j]=-1;// marked ,not in dark area

}

else

{

//a.init part of the breadth first search

darkAreaSetNum++;

tmpDarkAreaNum=0;

tmpPoint.x=j;

tmpPoint.y=i;

enQueue(searchQueue,tmpPoint);

//b.core part of search

while(!isEmpty(searchQueue))

{

tmpPoint=getHeadData(searchQueue);

parsedNum++;

//save the set label for this pixel and count it

bmpBWBackMatrix[tmpPoint.y][tmpPoint.x]=darkAreaSetNum;

tmpDarkAreaNum++;

//left -> bmpBWMatrix[tmpPoint.y][tmpPoint.x-1]

if(tmpPoint.x>0)

{ if(bmpBWBackMatrix[tmpPoint.y][tmpPoint.x-1]==0&&

bmpBWBackMatrix[tmpPoint.y][tmpPoint.x-1]!=-3&&

bmpBWMatrix[tmpPoint.y][tmpPoint.x-1]<=darkAreaUpLimit)

{

tmpPoint2.x=tmpPoint.x-1;

tmpPoint2.y=tmpPoint.y;

bmpBWBackMatrix[tmpPoint.y][tmpPoint.x-1]=-3;

enQueue(searchQueue,tmpPoint2);

}

}

//up -> bmpBWMatrix[tmpPoint.y-1][tmpPoint.x]

if(tmpPoint.y>0)

{

if(bmpBWBackMatrix[tmpPoint.y-1][tmpPoint.x]==0&&

bmpBWBackMatrix[tmpPoint.y-1][tmpPoint.x]!=-3&&

bmpBWMatrix[tmpPoint.y-1][tmpPoint.x]<=darkAreaUpLimit)

{

tmpPoint2.x=tmpPoint.x;

tmpPoint2.y=tmpPoint.y-1;

bmpBWBackMatrix[tmpPoint.y-1][tmpPoint.x]=-3;

enQueue(searchQueue,tmpPoint2);

}

}

//right -> bmpBWMatrix[tmpPoint.y][tmpPoint.x+1]

if(tmpPoint.x<mBMFileInfo.bmWidth-1)

{

if(bmpBWBackMatrix[tmpPoint.y][tmpPoint.x+1]==0&&

bmpBWBackMatrix[tmpPoint.y][tmpPoint.x+1]!=-3&&

bmpBWMatrix[tmpPoint.y][tmpPoint.x+1]<=darkAreaUpLimit)

{ tmpPoint2.x=tmpPoint.x+1;

tmpPoint2.y=tmpPoint.y;

bmpBWBackMatrix[tmpPoint.y][tmpPoint.x+1]=-3;

enQueue(searchQueue,tmpPoint2);

}

}

//down -> bmpBWMatrix[tmpPoint.y+1][tmpPoint.x]

if(tmpPoint.y<mBMFileInfo.bmHeight-1)

{ if(bmpBWBackMatrix[tmpPoint.y+1][tmpPoint.x]==0&&

bmpBWBackMatrix[tmpPoint.y+1][tmpPoint.x]!=-3&&

bmpBWMatrix[tmpPoint.y+1][tmpPoint.x]<=darkAreaUpLimit)

{

tmpPoint2.x=tmpPoint.x;

tmpPoint2.y=tmpPoint.y+1;

bmpBWBackMatrix[tmpPoint.y+1][tmpPoint.x]=-3;

enQueue(searchQueue,tmpPoint2);

}

}

deQueue(searchQueue);

}

if(tmpDarkAreaNum>0.02*totalPixelNum)

{ usefulDarkSetID[usefulSetNum]=darkAreaSetNum;

usefulSetNum++;

totalDarkAreaNum+=tmpDarkAreaNum;

}

}

}

}

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

if(bmpBWBackMatrix[i][j]>0)

{

for(k=0;k<usefulSetNum;k++)

{

if(bmpBWBackMatrix[i][j]==usefulDarkSetID[k])

break;

}

if(k==usefulSetNum)

bmpBWBackMatrix[i][j]=-2;

else

bmpBWBackMatrix[i][j]=0;

}

}

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

if(bmpBWBackMatrix[i][j]!=0&&bmpBWBackMatrix[i][j]!=-1&&

bmpBWBackMatrix[i][j]!=-2)

{

return;

}

//2.Use equalize method to process the picture

//2.1 calculate each grey value's frequency (pixels outside the dark connected regions only)

for(i=0;i<256;i++)

arrHists[i]=0;

for(j=0;j<mBMFileInfo.bmHeight;j++)

for(i=0;i<mBMFileInfo.bmWidth;i++)

{ if(bmpBWBackMatrix[j][i]==-1||bmpBWBackMatrix[j][i]==-2)

arrHists[bmpBWMatrix[j][i]]++;

}

//2.cal total hist value

totalHistVal=0;

for(i=0;i<256;i++)

totalHistVal+=arrHists[i];

pArr[0]=0;

pArr[1]=(float)(arrHists[0]+arrHists[1])/(float)(2*totalHistVal);

for(i=2;i<256;i++)

pArr[i]=pArr[i-1]+(float)arrHists[i]/(float)totalHistVal;

//3.show out adjusted picture:

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{ if(bmpBWBackMatrix[i][j]==-1||bmpBWBackMatrix[i][j]==-2)

{

tmpTableVal=255*pArr[bmpBWMatrix[i][j]];

}

else

tmpTableVal=bmpBWMatrix[i][j];

//make to windows color format

outputPixel=((unsigned long)paletteArr[tmpTableVal].b)*65536+

((unsigned long)paletteArr[tmpTableVal].g)*256+

(unsigned long)paletteArr[tmpTableVal].r;

//output pixel

SetPixel(inHdc,j+offsetX,i+offsetY,outputPixel);

bmpBWBackMatrix[i][j]=tmpTableVal;

}

}

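Notes on the program:

1) bmpBWBackMatrix is an important structure in the program. Its values mean the following:

During the search:

-3: an acceptable pixel newly absorbed during the search (queued but not yet processed); 0: not yet searched; >0: belongs to an already searched region (the value is the region label).

When the search has finished:

-2: the pixel is extremely dark, but its region does not qualify as an extremely dark connected set.

-1: the pixel is not extremely dark.

0: the pixel belongs to an extremely dark connected region.

2) The connected-set search is a breadth-first search implemented with a queue.

Appendix [1]

Implementation of the nonlinear dynamic range adjustment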

void BMParse::nolinearDynamicAdjust2()

{

//0. Variable declarations:

float plusConstant;

float plusConstantChanged;

int maxGreyValue,minGreyValue,spanGreyValue;

int avgGreyVal;

int tmpTableVal;

unsigned long sumGreyVal;

unsigned long outputPixel;

unsigned long totalPixelNum;

unsigned long darkAreaNum;

int i,j;

darkAreaNum=0;

totalPixelNum=mBMFileInfo.bmHeight*mBMFileInfo.bmWidth;

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

if(bmpBWMatrix[i][j]<20)

darkAreaNum++;

}

if(darkAreaNum<0.15*totalPixelNum)

return;

//1.Core Process

//1.0Common Process

// find max and min greyvalue

maxGreyValue=-1;

minGreyValue=266;

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

if(bmpBWMatrix[i][j]>maxGreyValue)

{ maxGreyValue=bmpBWMatrix[i][j];

}

if(bmpBWMatrix[i][j]<minGreyValue)

{

minGreyValue=bmpBWMatrix[i][j];

}

}

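//2.0.cal plus constant value

spanGreyValue=maxGreyValue-minGreyValue;

//a flat image has span 0, which would make log10(1+span) zero

if(spanGreyValue==0)

return;

plusConstant=(float)(spanGreyValue/log10(1.0+spanGreyValue));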

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

//cal adjusted grey table value index (1.0 forces the double overload of log10)

tmpTableVal=(int)(plusConstant*log10(1.0+bmpBWMatrix[i][j]));

//write back in place, for the next step of processing

bmpBWMatrix[i][j]=tmpTableVal;

}

}

Appendix [2]

Original image -> green channel -> gray pixels of the same level

void BMParse::changeGreenChannelToGrey

(HDC inHdc,int offsetX,int offsetY)

{

//0.Inner Value declaration

unsigned long outputPixel;

int tmpTableVal;

int i,j;

//1.Core part of false color change

for(i=0;i<mBMFileInfo.bmHeight;i++)

for(j=0;j<mBMFileInfo.bmWidth;j++)

{

//green channel

if(bmpBWMatrix[i][j]<64)

tmpTableVal=255*bmpBWMatrix[i][j]/64; //multiply first: 255/64 alone truncates to 3

else if(bmpBWMatrix[i][j]<192)

tmpTableVal=255;

else

tmpTableVal=255-255*(bmpBWMatrix[i][j]-192)/63; //same fix for the falling ramp

//make to windows color format

outputPixel=((unsigned long)paletteArr[tmpTableVal].b)*65536+

((unsigned long)paletteArr[tmpTableVal].g)*256+

(unsigned long)paletteArr[tmpTableVal].r;

//1.2show out

SetPixel(inHdc,j+offsetX,i+offsetY,outputPixel);

bmpBWBackMatrix[i][j]=tmpTableVal;

}

}

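[Source code download]

Contents of the source package:

Code1: Histogram equalization

Code2: Implementation of the method in [1]

Code3: Nonlinear dynamic range adjustment + extremely dark connected region segmentation + equalization of the pixels outside those regions (DADPEQU)

Code4: Green channel to grayscale image

http://emilmatthew.51.net/EmilPapers/0631ImageProcess3/code1.rar

http://emilmatthew.51.net/EmilPapers/0631ImageProcess3/code2.rar

http://emilmatthew.51.net/EmilPapers/0631ImageProcess3/code3.rar

http://emilmatthew.51.net/EmilPapers/0631ImageProcess3/code4.rar

If clicking a link does not start the download (or open the page), paste the link into the browser's address bar (MYIE or GreenBrowser recommended) and press Enter. Barring surprises, the download should then start.

If the download runs into problems, see:

http://blog.csdn.net/emilmatthew/archive/2006/04/08/655612.aspx

[Reference downloads]

[1] Zhang Yu, Wang Xiqin, Peng Yingning, An algorithm for night image enhancement, Journal of Tsinghua University (Science and Technology), 1999, Vol. 39, No. 9, pp. 79-80.

[2] Shen Jiali, Zhang Yu, Wang Xiutan, A new algorithm for night-vision image processing, Journal of Image and Graphics, June 2000, Vol. 5 (Series A), No. 6.

Statement: copyright in the references belongs to their original authors. The electronic copies were obtained from CNKI, with thanks.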
