Follow my WeChat official account: Skyborn

It posts high-quality Python code for atmospheric science from time to time.


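Introduction

CMIP6, the Coupled Model Intercomparison Project Phase 6, is an international collaboration coordinated by the Working Group on Coupled Modelling (WGCM) of the World Climate Research Programme (WCRP). CMIP6 data are widely used in meteorological and climate research.

When many models are needed, the usual workflow is to download a separate wget script for each one and then run them one after another. This takes a lot of time and effort and is inefficient.

Several posts already describe how to batch-download CMIP6 data:

Readers who need them can follow those posts.

Many posts also introduce the CMIP6 data themselves; today I am sharing my own download method. For the options required when downloading CMIP6 data, see this post: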

Note:

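gn: native grid. The data are published on the model's native grid without interpolation; this is typically the case for grid-point models.

gr: regridded. The data were interpolated from the native grid before publication. When a model uses an irregular grid (for example a cubed-sphere grid), its output has to be interpolated to a regular latitude-longitude grid before release, otherwise it cannot be read and used directly.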
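The grid label is also part of every CMIP6 file name, for example `pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_197901-197912.nc`. The following is a minimal sketch for reading it back out, assuming the underscore-separated layout `variable_table_source_experiment_variant_grid_timerange.nc` seen in that name. The helper `parse_cmip6_filename` is hypothetical and not part of the downloader, and time-invariant files without a time range would need separate handling.

```python
from pathlib import Path

# Facet names in the order they appear in the file name (assumed layout).
FIELDS = ("variable_id", "table_id", "source_id", "experiment_id",
          "variant_label", "grid_label", "time_range")


def parse_cmip6_filename(filename):
    """Split a CMIP6 file name into its underscore-separated facets."""
    stem = Path(filename).name
    if stem.endswith(".nc"):
        stem = stem[:-3]
    parts = stem.split("_")
    if len(parts) != len(FIELDS):
        raise ValueError(f"unexpected CMIP6 file name layout: {filename}")
    return dict(zip(FIELDS, parts))


info = parse_cmip6_filename("pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_197901-197912.nc")
print(info["grid_label"])  # gr
```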

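Downloading the data

This script is designed to make downloading CMIP6 data easier. It provides a command-line interface for specifying the parameters of the data you want to retrieve.

Advantages:

- Simple and efficient: enter the model, experiment, and variable you want and the download starts, provided the input is valid
- Files are downloaded in parallel, which greatly improves throughput
- The URLs of the CMIP6 files are collected for you in a more convenient way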
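To illustrate the parallel-download idea, here is a minimal sketch. It is not the script's own implementation: the script starts `wget` from a `multiprocessing.Pool`, whereas this sketch swaps in a thread pool from the standard library so that it runs offline. The names `download_all` and `fake_fetch` are hypothetical, and `fake_fetch` stands in for a real download call.

```python
from concurrent.futures import ThreadPoolExecutor


def download_all(urls, fetch, max_workers=10):
    """Run fetch(url) for every URL in parallel; return the URLs that failed."""
    def attempt(url):
        try:
            fetch(url)
            return None
        except Exception:
            return url

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves the input order of the URLs.
        results = list(pool.map(attempt, urls))
    return [url for url in results if url is not None]


def fake_fetch(url):
    """Stand-in for a real download so the sketch runs without a network."""
    if url.endswith("bad.nc"):
        raise IOError("simulated failure")


failed = download_all(["a.nc", "bad.nc", "c.nc"], fake_fetch, max_workers=3)
print(failed)  # ['bad.nc']
```

Collecting the failed URLs instead of raising lets the remaining downloads finish, and the failures can be retried afterwards.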

Requirements:

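- Python 3
- wget (the command-line tool, which the script calls to fetch each file)
- urllib (part of the Python standard library)
- netCDF4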

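Usage

- Create a folder and put the cmip6_downloader.py file in it
- Open a terminal window and change to the folder that contains the code
- Run python3 cmip6_downloader.py (you can run it in interactive mode or pass search parameters)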

You can also run the downloader in pure command-line mode with any of the search parameters offered on the website: --mip_era, --activity_id, --model_cohort, --product, --source_id, --institution_id, --source_type, --nominal_resolution, --experiment_id, --sub_experiment_id, --variant_label, --grid_label, --table_id, --frequency, --realm, --variable_id, --cf_standard_name, --data_node.

Use example:

python3 cmip6_downloader.py --variable_id pr --frequency mon --experiment_id amip --variant_label r1i1p1f1 --source_id EC-Earth3

The EC-Earth3 model is used as the example here because its output is split into one file per year, which makes downloading it by hand painful.

Output:

1- Searching for records: https://aims2.llnl.gov/metagrid-backend/proxy/search?source_id=EC-Earth3&experiment_id=amip&variant_label=r1i1p1f1&frequency=mon&variable_id=pr&offset=0&limit=9999&type=Dataset&replica=false&latest=true&project=CMIP6&format=application%2Fsolr%2Bjson

2- 39 files found in 1 records. 0 of these files were already downloaded.

3- No previously downloaded files found

4- Fetching list of files to be downloaded...

https://aims2.llnl.gov/metagrid-backend/proxy/search?dataset_id=CMIP6.CMIP.EC-Earth-Consortium.EC-Earth3.amip.r1i1p1f1.Amon.pr.gr.v20200203%7Cesg-dn2.nsc.liu.se&format=application%2Fsolr%2Bjson&limit=9999&offset=0&type=File

5- 39/39 files added to download list

6- Writing list of files s_EC-Earth3_e_amip_vl_r1i1p1f1_f_mon_v_pr_files_url_list.txt

7- Downloading files...FINALLY

Downloading file [1/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_197901-197912.nc

Downloading file [2/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_198001-198012.nc

Downloading file [4/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_198201-198212.nc

Downloading file [5/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_198301-198312.nc

Downloading file [6/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_198401-198412.nc

...

Downloading file [39/39] http://esg-dn2.nsc.liu.se/thredds/fileServer/esg_dataroot1/cmip6data/CMIP6/CMIP/EC-Earth-Consortium/EC-Earth3/amip/r1i1p1f1/Amon/pr/gr/v20200203/pr_Amon_EC-Earth3_amip_r1i1p1f1_gr_201701-201712.nc

It looks like all 39 files were downloaded successfully

Done. Check the data/s_EC-Earth3_e_amip_vl_r1i1p1f1_f_mon_v_pr folder :)

All files no problem
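Step 1 of the log shows the search request the script sends. The sketch below rebuilds that URL from the example's parameters with `urllib.parse.urlencode`, using the same endpoint and fixed query options that appear in the log. It only constructs the string and sends nothing; whether the endpoint still answers in this form is an assumption to check before relying on it.

```python
from urllib.parse import urlencode

SEARCH_ENDPOINT = "https://aims2.llnl.gov/metagrid-backend/proxy/search?"

# Facets given on the command line in the example above.
search_params = {
    "source_id": "EC-Earth3",
    "experiment_id": "amip",
    "variant_label": "r1i1p1f1",
    "frequency": "mon",
    "variable_id": "pr",
}

# Fixed options that are appended to every search.
config_params = {
    "offset": 0,
    "limit": 9999,
    "type": "Dataset",
    "replica": "false",
    "latest": "true",
    "project": "CMIP6",
    "format": "application/solr+json",
}

# urlencode percent-encodes "/" and "+" in the format value.
search_url = SEARCH_ENDPOINT + urlencode({**search_params, **config_params})
print(search_url)
```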

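After the download finishes, every file is checked. Any file that cannot be opened (for example because of an HDF error) is deleted, and its name is printed.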
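A cheaper pre-check than opening each file is to look at its first bytes. The sketch below is an assumption-laden complement to the script's check, not a replacement for it: it tests for the HDF5 signature used by netCDF-4 files and for the `CDF` prefix of the classic formats. The helper names are hypothetical. It catches empty files and HTML error pages saved under a `.nc` name, but a file truncated mid-transfer still has a valid header and would pass.

```python
import os

# Leading bytes of the on-disk formats a .nc file can use.
HDF5_MAGIC = b"\x89HDF\r\n\x1a\n"  # netCDF-4 files are HDF5 containers
CLASSIC_MAGIC = b"CDF"             # netCDF classic and 64-bit offset formats


def looks_like_netcdf(path):
    """Return True if the file starts with a known netCDF signature."""
    with open(path, "rb") as handle:
        header = handle.read(8)
    return header == HDF5_MAGIC or header.startswith(CLASSIC_MAGIC)


def find_suspect_files(folder_path):
    """List files in folder_path whose header does not look like netCDF."""
    suspects = []
    for name in sorted(os.listdir(folder_path)):
        path = os.path.join(folder_path, name)
        if os.path.isfile(path) and not looks_like_netcdf(path):
            suspects.append(path)
    return suspects
```

Calling `find_suspect_files` on the download folder returns the paths worth re-downloading; it does not delete anything.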

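Some files may still fail to download when you run the script, even though it already retries each file several times within a single run. If that happens, run the script again with the same search parameters; only new or previously failed files will be downloaded.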

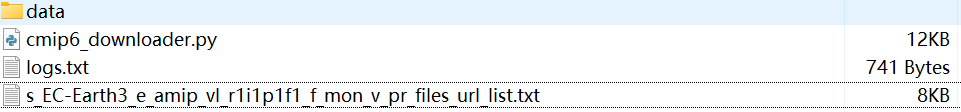

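Finally, since the network on some servers can be very slow, the script also saves the URLs of the requested data to a txt file. For example, after running python3 cmip6_downloader.py --variable_id pr --frequency mon --experiment_id amip --variant_label r1i1p1f1 --source_id EC-Earth3, the directory looks like the figure.

Copy the URLs from the url_list file into the IDM download manager (see the tutorial on batch-downloading CMIP6 datasets with IDM 6.3.8) to start the download.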
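When the list is handed to an external download manager, it helps to pass along only the URLs that are still missing. The following minimal sketch compares the saved URL list with the contents of the data folder. The function name `remaining_urls` is hypothetical, and the check is by file name only, so a partially downloaded file counts as present.

```python
import os


def remaining_urls(url_list_path, folder_path):
    """Return URLs from the list whose file name is not yet in folder_path."""
    existing = set(os.listdir(folder_path)) if os.path.isdir(folder_path) else set()
    with open(url_list_path) as handle:
        urls = [line.strip() for line in handle if line.strip()]
    # The last path component of each URL is the name of the downloaded file.
    return [url for url in urls if url.rsplit("/", 1)[-1] not in existing]
```

For the example in this post, the call would be `remaining_urls('s_EC-Earth3_e_amip_vl_r1i1p1f1_f_mon_v_pr_files_url_list.txt', 'data/s_EC-Earth3_e_amip_vl_r1i1p1f1_f_mon_v_pr')`.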

Source code location

https://github.com/SQYQianYe/cmip6_downloader Feel free to grab it if you need it. I hope you will explore and build on this code.

Source code:

import urllib.request
import json
import multiprocessing
import os
import sys
import argparse
from urllib.parse import urlencode
import time
from netCDF4 import Dataset

# Size of the search pool; the download pool size and retry count are derived from it
number_of_processes = 50
# Lists shared between the worker processes
files_to_download = multiprocessing.Manager().list()
failed_files = multiprocessing.Manager().list()
log_file = open('logs.txt', 'w')

def get_files_to_download(url_files_search):
    with url_open_retry(url_files_search, 5, 10) as result:
        data = json.loads(result.read().decode())
        file_urls_to_download = data['response']['docs']
        for file_url_to_download in file_urls_to_download:
            # Each 'url' entry is a '|'-separated string: the URL comes first, the service name third
            for file_dataset_to_download in file_url_to_download['url']:
                if file_dataset_to_download.split('|')[2] == 'HTTPServer':
                    files_to_download.append(file_dataset_to_download.split('|')[0])

def download_file(url_to_download, folder_path, index):
    print_and_log('\t Downloading file [' + str(index) + '/' + str(len(files_to_download)) +'] ' + url_to_download)
    # Try each file up to number_of_processes / 10 times
    for currentRun in range(0, int(number_of_processes / 10)):
        # -nc skips files that already exist, -c resumes partial downloads
        result_code = os.system('wget -nc -c --retry-connrefused --waitretry=10 --quiet -o /dev/null -P ' + folder_path + ' ' + url_to_download)
        if result_code == 0:
            break
        else:
            print_and_log('There was a problem downloading ' + url_to_download + '. Retrying...')
    if result_code != 0:
        print_and_log('Failed to download ' + url_to_download)
        failed_files.append(url_to_download)

def get_params():
    parser = argparse.ArgumentParser(
        description='CMIP 6 Downloader. Version 2.0.0',
        epilog='''
        For more information on the optional parameters, please refer to:
        https://clipc-services.ceda.ac.uk/dreq/index.html
        ''')
    if len(sys.argv) > 1:
        parser.add_argument('--mip_era', metavar='', help='MIP Era')
        parser.add_argument('--activity_id', metavar='', help='Activity')
        parser.add_argument('--model_cohort', metavar='', help='Model Cohort')
        parser.add_argument('--product', metavar='', help='Product')
        parser.add_argument('--source_id', metavar='', help='Source ID', )
        parser.add_argument('--institution_id', metavar='', help='Institution ID')
        parser.add_argument('--source_type', metavar='', help='Source Type')
        parser.add_argument('--nominal_resolution', metavar='', help='Nominal Resolution')
        parser.add_argument('--experiment_id', metavar='', help='Experiment ID')
        parser.add_argument('--sub_experiment_id', metavar='', help='Sub-Experiment')
        parser.add_argument('--variant_label', metavar='', help='Variant Label')
        parser.add_argument('--grid_label', metavar='', help='Grid Label')
        parser.add_argument('--table_id', metavar='', help='Table ID')
        parser.add_argument('--frequency', metavar='', help='Frequency')
        parser.add_argument('--realm', metavar='', help='Realm')
        parser.add_argument('--variable_id', metavar='', help='Variable')
        parser.add_argument('--cf_standard_name', metavar='', help='CF Standard Name')
        parser.add_argument('--data_node', metavar='', help='Data Node')
        args = parser.parse_args()
        search_params_dictionary = vars(args)
    else:  # interactive mode
        search_params_dictionary = {
            'variable_id': input("enter the variable name\n"),
            'frequency': input("enter the frequency\n"),
            'experiment_id': input("enter the experiment ID\n"),
            'source_id': input("enter the source ID\n"),
        }
    # clean keys that are empty
    search_params_dictionary = {k: v for k, v in search_params_dictionary.items() if v}
    config_params_dictionary = {
        'offset': 0,
        'limit': 9999,
        'type': 'Dataset',
        'replica': 'false',
        'latest': 'true',
        'project': 'CMIP6',
        'format': 'application/solr+json'
    }
    return config_params_dictionary, search_params_dictionary

def print_and_log(text):
    print(text)
    log_file.write(str(text))
    log_file.write('\n')

def url_open_retry(url, retries=0, retry_interval=10):
    # Returns the open response, or None if every attempt failed
    result = None
    for i in range(0, retries):
        try:
            result = urllib.request.urlopen(url)
            break
        except Exception:
            if i == (retries - 1):
                print_and_log('failed to fetch ' + url)
            else:
                print_and_log('retrying ' + str((i + 1)) + '/' + str((retries - 1)) + ' ' + url)
                time.sleep(retry_interval)
                continue
        break
    return result

def get_folder_path(search_params_dictionary):
    search_params_to_abbreviations = {
        'mip_era': 'me',
        'activity_id': 'a',
        'model_cohort': 'mc',
        'product': 'p',
        'source_id': 's',
        'institution_id': 'i',
        'source_type': 'st',
        'nominal_resolution': 'nr',
        'experiment_id': 'e',
        'sub_experiment_id': 'se',
        'variant_label': 'vl',
        'grid_label': 'g',
        'table_id': 't',
        'frequency': 'f',
        'realm': 'r',
        'variable_id': 'v',
        'cf_standard_name': 'cf',
        'data_node': 'd'
    }
    folder_name_parts = []
    for key, value in search_params_dictionary.items():
        folder_name_parts.append(search_params_to_abbreviations[key] + '_' + value)
    folder_name = '_'.join(folder_name_parts)
    return 'data' + os.path.sep + folder_name, folder_name

def get_number_of_previously_downloaded_files(folder_path):
    if os.path.exists(folder_path):
        return len([name for name in os.listdir(folder_path) if os.path.isfile(folder_path + os.path.sep + name)])
    else:
        return 0

def check_error_cmip_netcdf_file(folder_path):
    error_files = []
    cmip6_files = os.listdir(folder_path)
    for file in cmip6_files:
        test_data = os.path.join(folder_path, file)
        # A file that netCDF4 cannot open is treated as corrupted
        try:
            data = Dataset(test_data)
            data.close()
        except Exception:
            error_files.append(test_data)
    if error_files:
        print_and_log("The following files cannot be opened using netCDF4:")
        for error_file in error_files:
            print_and_log(error_file + " has been removed")
            # remove error file
            os.remove(error_file)
    else:
        print_and_log("All files no problem")

if __name__ == '__main__':
    config_params_dictionary, search_params_dictionary = get_params()
    if search_params_dictionary:
        url_params_dictionary = search_params_dictionary.copy()
        url_params_dictionary.update(config_params_dictionary)
        folder_path, folder_name = get_folder_path(search_params_dictionary)
        records_search_url = 'https://aims2.llnl.gov/metagrid-backend/proxy/search?' + urlencode(url_params_dictionary)
        print_and_log('1- Searching for records: ' + records_search_url)
        records_content = url_open_retry(records_search_url, 3, 10)
        if records_content:
            records = json.loads(records_content.read().decode())
            total_number_of_records = len(records['response']['docs'])
            if total_number_of_records > 0:
                total_number_of_files = 0
                for record in records['response']['docs']:
                    total_number_of_files += int(record['number_of_files'])
                if total_number_of_files > 0:
                    number_of_previously_downloaded_files = get_number_of_previously_downloaded_files(folder_path)
                    print_and_log('2- ' + str(total_number_of_files) + ' files found in ' + str(total_number_of_records) + ' records. ' + str(number_of_previously_downloaded_files) + ' of these files were already downloaded.')
                    if (number_of_previously_downloaded_files == 0) or (0 < number_of_previously_downloaded_files < total_number_of_files):
                        if number_of_previously_downloaded_files == 0:
                            print_and_log('3- No previously downloaded files found')
                        elif 0 < number_of_previously_downloaded_files < total_number_of_files:
                            print_and_log('3- ' + str(number_of_previously_downloaded_files) + '/' + str(total_number_of_files) + ' files were previously downloaded. Attempting to download missing ones...')
                        # Query the file list of every dataset record in parallel
                        pool_search = multiprocessing.Pool(number_of_processes)
                        print_and_log('4- Fetching list of files to be downloaded...')
                        for record in records['response']['docs']:
                            url_files_search = 'https://aims2.llnl.gov/metagrid-backend/proxy/search?dataset_id=' + urllib.parse.quote(record['id']) + '&format=application%2Fsolr%2Bjson&limit=9999&offset=0&type=File'
                            print(url_files_search)
                            pool_search.apply_async(get_files_to_download, args=[url_files_search])
                        pool_search.close()
                        pool_search.join()
                        print_and_log('5- ' + str(len(files_to_download)) + '/' + str(total_number_of_files) + ' files added to download list')
                        if len(files_to_download) < total_number_of_files:
                            print_and_log('Not all files were found and added to the download list. Try running the downloader again with the same params and hopefully the servers will behave next time')
                        print_and_log('6- Writing list of files ' + folder_name + '_files_url_list.txt')
                        # Save the URLs so they can also be fed to an external download manager
                        with open(folder_name + '_files_url_list.txt', 'w') as file:
                            for file_to_download in files_to_download:
                                file.write(file_to_download + '\n')
                        print_and_log('7- Downloading files...FINALLY')
                        pool_download = multiprocessing.Pool(int(number_of_processes / 5))
                        index = 1
                        for file_to_download in files_to_download:
                            pool_download.apply_async(download_file, args=[file_to_download, folder_path, index])
                            index += 1
                        pool_download.close()
                        pool_download.join()
                        number_of_previously_downloaded_files = get_number_of_previously_downloaded_files(folder_path)
                        if number_of_previously_downloaded_files == total_number_of_files:
                            print_and_log('It looks like all ' + str(total_number_of_files) + ' files were downloaded successfully')
                        else:
                            print_and_log('Not all files (' + str(number_of_previously_downloaded_files) + '/' + str(total_number_of_files) + ') were downloaded. You can try running the script again')
                            if len(failed_files) > 0:
                                print_and_log('These files were not downloaded:')
                                for failed_file in failed_files:
                                    print_and_log(failed_file)
                        print_and_log('Done. Check the ' + folder_path + ' folder :)')
                        # check error netcdf data
                        check_error_cmip_netcdf_file(folder_path)
                    else:
                        print_and_log('All files were previously downloaded. Check ' + folder_path + ' folder')
                        # check error netcdf data
                        check_error_cmip_netcdf_file(folder_path)
                else:
                    print_and_log('No files found inside the records')
            else:
                print_and_log('No records found :(')
        else:
            print_and_log('There was a problem searching for the records')
    else:
        print_and_log('No search params provided :(')
