Darknet-53

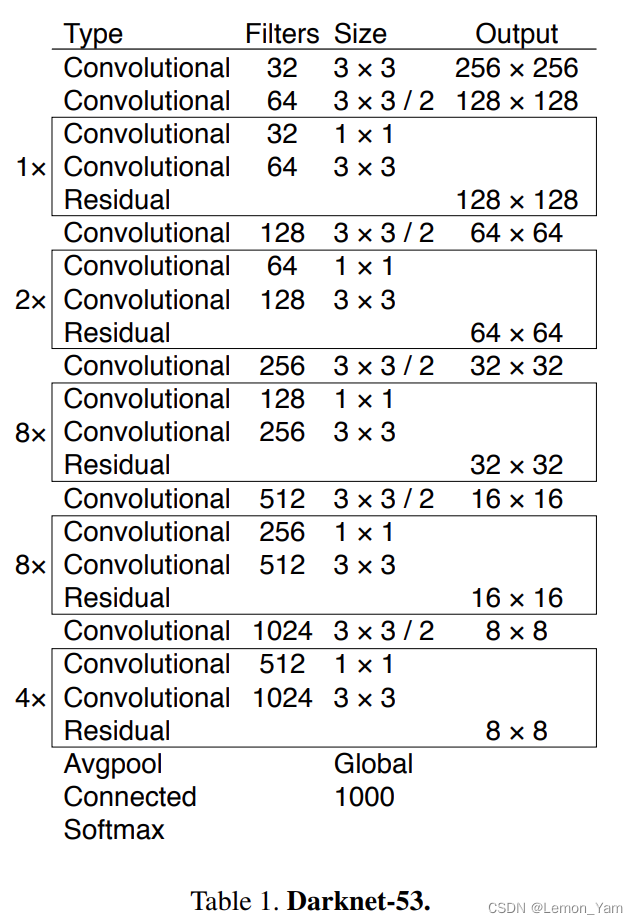

😸Darknet-53 是 YOLOv3 使用的主干网络,该网络主要由一些 Residual block 组成,这些残差块与 ResNet 中的 Basic block 和 BottleNeck 不同,其采用 1x1 卷积接着一组 3x3 卷积,比 bottleneck 少了后面的 1x1 卷积层。其具体结构如下图:

😸pytorch 实现代码:

import torch

import torch.nn as nn

# CBLR -> Conv+BN+LeakyReLU

class CBLR(nn.Sequential):

def __init__(self, in_channels, out_channels, kernel_size=3, stride=1, padding=1, bias=False, negative_slope=0.1):

super(CBLR, self).__init__(

nn.Conv2d(in_channels, out_channels, kernel_size, stride, padding, bias=bias),

nn.BatchNorm2d(out_channels),

nn.LeakyReLU(negative_slope)

)

# 残差块,channels 包括输入通道和输出通道

class BasicBlock(nn.Module):

def __init__(self, channels):

super(BasicBlock, self).__init__()

# block1 降通道数,block2 再将通道数升回去,如 64->32->64

self.conv = nn.Sequential(

CBLR(channels[1], channels[0], kernel_size=1, padding=0),

CBLR(channels[0], channels[1])

)

def forward(self, x):

return self.conv(x) + x

# darknet53

class DarkNet(nn.Module):

def __init__(self, layers, num_classes=1000):

super(DarkNet, self).__init__()

self.init_channels = 32 # 初始的输入通道数

self.layer0 = CBLR(3, self.init_channels) # (3, 416, 416) -> (32, 416, 416)

self.layer1 = self._make_layer([32, 64], layers[0]) # (32, 416, 416) -> (64, 208, 208)

self.layer2 = self._make_layer([64, 128], layers[1]) # (64, 208, 208) -> (128, 104, 104)

self.layer3 = self._make_layer([128, 256], layers[2]) # (128, 104, 104) -> (256, 52, 52)

self.layer4 = self._make_layer([256, 512], layers[3]) # (256, 52, 52) -> (512, 26, 26)

self.layer5 = self._make_layer([512, 1024], layers[4]) # (512, 26, 26) -> (1024, 13, 13)

self.avgpool = nn.AdaptiveAvgPool2d((1, 1))

self.classifier = nn.Sequential(

nn.Dropout(0.2),

nn.Linear(1024, num_classes),

nn.Softmax(dim=1)

)

self._initialize_weights()

# channels 包含输入通道数和输出通道数,blocks 指定每个 layer 中使用的残差块个数

def _make_layer(self, channels, blocks):

layers = []

# 在每一个layer里面,首先利用一个步长为 2 的 3x3 卷积进行下采样

layers.append(CBLR(self.init_channels, channels[1], stride=2))

self.init_channels = channels[1] # 更改初始通道数

for _ in range(0, blocks):

layers.append(BasicBlock(channels))

return nn.Sequential(*layers)

def forward(self, x):

x = self.layer0(x)

x = self.layer1(x)

x = self.layer2(x)

out1 = self.layer3(x)

out2 = self.layer4(out1)

out3 = self.layer5(out2)

out = self.avgpool(out3)

out = torch.flatten(out, 1)

out = self.classifier(out)

return out1, out2, out3, out

# 权值初始化

def _initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode='fan_out')

if m.bias is not None:

nn.init.zeros_(m.bias)

elif isinstance(m, nn.BatchNorm2d):

nn.init.ones_(m.weight)

nn.init.zeros_(m.bias)

if __name__ == '__main__':

input = torch.randn(1, 3, 416, 416) # 创建随机输入 (batch_size, channels, width, height)

model = DarkNet([1, 2, 8, 8, 4]) # [1, 2, 8, 8, 4] 分别指定残差块重复次数

out1, out2, out3, out = model(input)

print(f'out1: {out1.shape}\nout2: {out2.shape}\nout3: {out3.shape}')

print(f'out: {out.shape}')

617

617

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?