Code

#include <opencv2/opencv.hpp>

#include <opencv2/features2d/features2d.hpp>

#include<opencv2/nonfree/nonfree.hpp>

#include<opencv2/legacy/legacy.hpp>

#include <vector>

#include <iostream>

#include <string>

using namespace std;

using namespace cv;

// Feature-point matching with SIFT

void SiftDemo(string fn1, string fn2)

{

Mat input1 = imread(fn1, 1);

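Mat input2 = imread(fn2, 1);

// Stop early if either image failed to load

if (input1.empty() || input2.empty())

{

cout << "Can't open the picture!\n";

return;

}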

SiftFeatureDetector detector;

vector<KeyPoint> keypoint1, keypoint2;

detector.detect(input1, keypoint1);

Mat output1;

drawKeypoints(input1, keypoint1, output1);

imshow("sift_result1.jpg", output1);

imwrite("sift_result1.jpg", output1);

Mat output2;

SiftDescriptorExtractor extractor;

Mat descriptor1, descriptor2;

BruteForceMatcher<L2<float>> matcher;

vector<DMatch> matches;

Mat img_matches;

detector.detect(input2, keypoint2);

drawKeypoints(input2, keypoint2, output2);

imshow("sift_result2.jpg", output2);

imwrite("sift_result2.jpg", output2);

extractor.compute(input1, keypoint1, descriptor1);

extractor.compute(input2, keypoint2, descriptor2);

matcher.match(descriptor1, descriptor2, matches);

drawMatches(input1, keypoint1, input2, keypoint2, matches, img_matches);

imshow("matches", img_matches);

imwrite("matches.jpg", img_matches);

}

// Feature-point matching with SURF

void SurfDemo(string fn1, string fn2)

{

Mat input1 = imread(fn1, 1);

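Mat input2 = imread(fn2, 1);

// Stop early if either image failed to load

if (input1.empty() || input2.empty())

{

cout << "Can't open the picture!\n";

return;

}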

SurfFeatureDetector detector(2500);

vector<KeyPoint> keypoint1, keypoint2;

detector.detect(input1, keypoint1);

Mat output1;

drawKeypoints(input1, keypoint1, output1, cv::Scalar::all(255), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

//drawKeypoints(input1,keypoint1,output1);

imshow("surf_result1.jpg", output1);

imwrite("surf_result1.jpg", output1);

Mat output2;

SurfDescriptorExtractor extractor;

Mat descriptor1, descriptor2;

BruteForceMatcher<L2<float>> matcher;

vector<DMatch> matches;

Mat img_matches;

detector.detect(input2, keypoint2);

drawKeypoints(input2, keypoint2, output2, cv::Scalar::all(255), cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

imshow("surf_result2.jpg", output2);

imwrite("surf_result2.jpg", output2);

extractor.compute(input1, keypoint1, descriptor1);

extractor.compute(input2, keypoint2, descriptor2);

matcher.match(descriptor1, descriptor2, matches);

drawMatches(input1, keypoint1, input2, keypoint2, matches, img_matches);

imshow("matches", img_matches);

imwrite("matches.jpg", img_matches);

}

// Feature-point matching with ORB

void OrbDemo(string fn1, string fn2)

{

Mat obj = imread(fn1, 1); // load the object image

Mat scene = imread(fn2, 1); // load the scene image

if (obj.empty() || scene.empty())

{

cout << "Can't open the picture!\n";

return;

}

vector<KeyPoint> obj_keypoints, scene_keypoints;

Mat obj_descriptors, scene_descriptors;

ORB detector; // extract feature points with the ORB algorithm

detector.detect(obj, obj_keypoints);

detector.detect(scene, scene_keypoints);

detector.compute(obj, obj_keypoints, obj_descriptors);

detector.compute(scene, scene_keypoints, scene_descriptors);

BFMatcher matcher(NORM_HAMMING, true); // Hamming distance as the similarity measure; true enables cross-checking

vector<DMatch> matches;

matcher.match(obj_descriptors, scene_descriptors, matches);

Mat match_img;

drawMatches(obj, obj_keypoints, scene, scene_keypoints, matches, match_img);

imshow("Before filtering mismatches", match_img);

}

// Background subtraction on frames read from the current camera

void BackDiffTest()

{

//string videoFile = "F://program//Book//Chapter4//car.avi";

//string videoFile = "F:\\program\\Book\\Chapter4\\car.avi";

VideoCapture capture(0);

//capture.open(videoFile);

if (!capture.isOpened())

{

cout << "Failed to open the camera" << std::endl;

return;

}

BackgroundSubtractorMOG2 mog;

Mat foreground;

Mat background;

Mat frame;

long frameNo = 0;

while (capture.read(frame))

{

++frameNo;

//cout<<frameNo<<endl;

// Detect the moving foreground and update the background model

mog(frame, foreground, 0.01);

// Erode

erode(foreground, foreground, Mat());

// Dilate

dilate(foreground, foreground, Mat());

mog.getBackgroundImage(background); // retrieve the current background image

imshow("foreground", foreground);

imshow("background", background);

imshow("frame", frame);

if (waitKey(25) > 0)

{

break;

}

}

}

// Gaussian mixture model: read a video file and extract the background

void MixedGaussianTest(string fn)

{

IplImage* pFrameOr; // current frame

IplImage* pFrame; // current frame

IplImage* pFrame33; // current frame

IplImage* pFrame333; // current frame

CvCapture* pCaptureOr;

CvCapture* pCapture;

CvCapture* pCapture1;

CvCapture* pCapture2;

int nFrmNum;

cvNamedWindow("AVIOriginal1", 0);

cvResizeWindow("AVIOriginal1", 340, 250);

cvMoveWindow("AVIOriginal1", 0, 0);

cvNamedWindow("AVIOriginal2", 0);

cvResizeWindow("AVIOriginal2", 330, 250);

cvMoveWindow("AVIOriginal2", 345, 0);

cvNamedWindow("AVIOriginal3", 0);

cvResizeWindow("AVIOriginal3", 330, 250);

cvMoveWindow("AVIOriginal3", 680, 0);

pCapture = cvCaptureFromFile(fn.c_str());

pCapture1 = cvCaptureFromFile(fn.c_str());

pCapture2 = cvCaptureFromFile(fn.c_str());

pFrame = cvQueryFrame(pCapture1);

//pFrame33=cvQueryFrame( pCapture1 );

//pFrame333=cvQueryFrame( pCapture1 );

pFrame33 = cvCloneImage(pFrame);

pFrame333 = cvCloneImage(pFrame);

float alpha = 10;

float beta = 15;

float gamma = 11;

float alphaR = 1;

float betaR = 1.7;

float gammaR = 1;

IplImage* img = NULL;

IplImage* img1 = NULL;

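IplImage* pFramenum1 = NULL; // first frame image, not updated in the loop

IplImage* pFramenumImg1 = NULL; // grayscale version of the first frame, not updated in the loop

IplImage* pFramenumFrImg1 = NULL; // thresholded difference between the current frame and the first frame

CvMat* pFramenumMat1 = NULL; // first frame as a matrix, not updated in the loop

CvMat* pFramenumFr1 = NULL; // difference matrix between the current frame and the first frame

// IplImage* pFrame = NULL; // current frame

IplImage* pFrImg = NULL; // grayscale version of the current frame

CvMat* pFrameMat = NULL;

CvMat* pFrMat = NULL;

// IplImage* pFrame33 = NULL; // current frame

// IplImage* pFrame333 = NULL; // current frame

IplImage* pFrame3 = NULL; // current frame

IplImage* pFrame1 = NULL; // frame that lags the current frame by three frames

IplImage* pFrImg1 = NULL; // difference image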

CvMat* pFrameMat1 = NULL;

CvMat* pFrMat1 = NULL;

IplImage* pFrame2 = NULL; // frame that leads the current frame by three frames

IplImage* pFrImg2 = NULL;

CvMat* pFrameMat2 = NULL;

CvMat* pFrMat2 = NULL;

CvMemStorage* storage = cvCreateMemStorage(0); // create a dynamic memory storage with 64 KB blocks

CvSeq* contours = 0;

CvMemStorage* storage2 = cvCreateMemStorage(0); // create a dynamic memory storage with 64 KB blocks

CvSeq* contours2 = 0;

int i, j;

CvScalar s0, s1;

CvSize size; // neighborhood size used during the search

size.width = 3;

size.height = 3;

CvTermCriteria criteria;

criteria.type = CV_TERMCRIT_ITER;

criteria.epsilon = 0.1; // required accuracy of the result

nFrmNum = 0;

while ((pFrame = cvQueryFrame(pCapture)) && (nFrmNum<250))

{

nFrmNum++;

// pFrameOr=cvCloneImage(pFrame);

pFrame1 = cvQueryFrame(pCapture1);

// On the first frame, allocate the buffers and initialize them

if (nFrmNum == 1)

{

pFramenum1 = cvCloneImage(pFrame);

pFramenumImg1 = cvCreateImage(cvSize(pFrame->width, pFrame->height), IPL_DEPTH_8U, 1);

pFramenumMat1 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFramenumFr1 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

cvCvtColor(pFramenum1, pFramenumImg1, CV_BGR2GRAY);

cvConvert(pFramenumImg1, pFramenumMat1);

pFrImg = cvCreateImage(cvSize(pFrame->width, pFrame->height), IPL_DEPTH_8U, 1);

pFrMat = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFrameMat = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFrImg1 = cvCreateImage(cvSize(pFrame->width, pFrame->height), IPL_DEPTH_8U, 1);

pFrMat1 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFrameMat1 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFrImg2 = cvCreateImage(cvSize(pFrame->width, pFrame->height), IPL_DEPTH_8U, 1);

pFrMat2 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

pFrameMat2 = cvCreateMat(pFrame->height, pFrame->width, CV_32FC1);

img = cvCreateImage(cvSize(pFrame->width, pFrame->height), IPL_DEPTH_8U, 1);

// Convert to a single-channel image before processing

cvCvtColor(pFrame, pFrImg, CV_BGR2GRAY);

cvConvert(pFrImg, pFrameMat);

cvConvert(pFrImg, pFrMat);

for (i = 0; i<3; i++)

pFrame1 = cvQueryFrame(pCapture1);

}

else if (nFrmNum>7)

{

pFrame2 = cvQueryFrame(pCapture2);

pFrame3 = cvCloneImage(pFrame);

pFrame33 = cvCloneImage(pFrame);

pFrame333 = cvCloneImage(pFrame);

cvCvtColor(pFrame, pFrImg, CV_BGR2GRAY);

cvConvert(pFrImg, pFrameMat);

cvCvtColor(pFrame1, pFrImg1, CV_BGR2GRAY);

cvConvert(pFrImg1, pFrameMat1);

cvCvtColor(pFrame2, pFrImg2, CV_BGR2GRAY);

cvConvert(pFrImg2, pFrameMat2);

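cvAbsDiff(pFrameMat, pFrameMat1, pFrMat1); // absolute difference between the current frame and the earlier frame

cvAbsDiff(pFrameMat, pFrameMat2, pFrMat2); // absolute difference between the current frame and the later frame

cvAbsDiff(pFrameMat, pFramenumMat1, pFramenumFr1); // absolute difference between the current frame and the first frame

// Binarize the foreground images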

cvThreshold(pFrMat1, pFrImg1, 10, 255.0, CV_THRESH_BINARY);

cvThreshold(pFrMat2, pFrImg2, 10, 255.0, CV_THRESH_BINARY);

cvThreshold(pFramenumFr1, pFramenumImg1, 10, 255, CV_THRESH_BINARY);

// Dilate the foreground images

cvDilate(pFrImg1, pFrImg1, NULL, 2);

cvDilate(pFrImg2, pFrImg2, NULL, 2);

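// Contour extracted from the difference between the current frame and the earlier frame

cvFindContours(pFrImg1, storage, &contours, sizeof(CvContour), // find the initial contours and store them in the sequence

CV_RETR_LIST, CV_LINK_RUNS);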

for (; contours; contours = contours->h_next)

{

if (contours->total<200)

continue;

else break;

}

cvDrawContours(pFrame333, contours, CV_RGB(255, 255, 0), CV_RGB(0, 0, 0), 0, 2, 8);

// AND the backward and forward difference images to obtain a more accurate contour

cvAnd(pFrImg1, pFrImg2, pFrImg1, NULL);

img1 = cvCloneImage(pFrImg);

cvFindContours(pFrImg1, storage, &contours, sizeof(CvContour), // find the initial contours and store them in the sequence

CV_RETR_LIST, CV_LINK_RUNS);

for (; contours; contours = contours->h_next)

{

if (contours->total<200)

continue;

else break;

}

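// No contour with at least 200 points in this frame: skip the snake step instead of dereferencing NULL

if (!contours) continue;

CvPoint* point = new CvPoint[contours->total]; // allocate one point per contour element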

CvSeqReader reader;

CvPoint pt = cvPoint(0, 0); //pt.x=0,pt.y=0

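cvStartReadSeq(contours, &reader); // initialize sequential reading of the contour

for (i = 0; i < contours->total; i++)

{

CV_READ_SEQ_ELEM(pt, reader); // copy the element at the reader position into pt; at the end of a block the reader moves to the next block

point[i] = pt; // store the point in the point array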

}

criteria.max_iter = 5; // maximum number of iterations

cvSobel(pFrImg, pFrImg, 1, 1, 3);

cvSnakeImage(pFrImg, point, contours->total, &alpha, &beta, &gamma, CV_VALUE, size, criteria, 0);

for (i = 0; i<contours->total; i++)

{

j = (i + 1) % (contours->total);

cvLine(pFrame33, point[i], point[j], CV_RGB(255, 255, 0), 2, 8, 0);

}

delete[] point; // release the per-frame point buffer allocated with new[]

// Set a bottom-left origin so the images are displayed flipped vertically

pFrImg->origin = 1;

pFrImg1->origin = 1;

pFrImg2->origin = 1;

img->origin = 1;

// Show the images

if (nFrmNum<59 || (nFrmNum>62 && nFrmNum<101) || nFrmNum>103)

{

// pFrame33=cvCloneImage(pFrame3);

// pFrame333=cvCloneImage(pFrame3);

// cvShowImage("AVIOriginal",pFrameOr);

cvShowImage("AVIOriginal1", pFrame333);

cvShowImage("AVIOriginal2", pFrame33);

cvShowImage("AVIOriginal3", pFrame);

}

if (cvWaitKey(10)>0) break; // stop when any key is pressed

}

}

cvReleaseMemStorage(&storage); // release the memory storage

cvReleaseMemStorage(&storage2); // release the memory storage

// Release the images and matrices

cvReleaseImage(&pFrImg);

cvReleaseImage(&pFrImg1);

cvReleaseImage(&pFrImg2);

cvReleaseMat(&pFrameMat);

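cvReleaseMat(&pFrMat);

// Release the three capture handles opened on the video file

cvReleaseCapture(&pCapture);

cvReleaseCapture(&pCapture1);

cvReleaseCapture(&pCapture2);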

}

int main(int argc, char* argv[])

{

int mode = 1;

switch (mode)

{

case 1: SiftDemo("33.jpg", "3333.jpg"); break;

case 2: SurfDemo("33.jpg", "3333.jpg"); break;

case 3: OrbDemo("33.jpg", "3333.jpg"); break;

case 4: BackDiffTest(); break;

case 5: MixedGaussianTest("cat.avi"); break;

}

// waitKey() blocks until any key is pressed, then returns

waitKey();

return 0;

}
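
OrbDemo matches binary ORB descriptors with `BFMatcher matcher(NORM_HAMMING, true)`, that is, brute-force matching under the Hamming distance with cross-checking enabled. The idea can be illustrated without OpenCV. The sketch below is a minimal, library-free version and not OpenCV's implementation: the type `Descriptor` and the functions `hammingDistance`, `nearest`, and `crossCheckMatch` are hypothetical names introduced only for this illustration, and descriptors are assumed to be equal-length byte strings.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <utility>
#include <vector>

// Hypothetical descriptor type: one binary descriptor stored as bytes.
typedef std::vector<std::uint8_t> Descriptor;

// Hamming distance: the number of bit positions in which a and b differ.
int hammingDistance(const Descriptor& a, const Descriptor& b)
{
    assert(a.size() == b.size());  // assumption: equal-length descriptors
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        // XOR leaves a 1 in every differing bit; count those bits.
        std::bitset<8> diff(static_cast<unsigned long>(a[i] ^ b[i]));
        dist += static_cast<int>(diff.count());
    }
    return dist;
}

// Index of the descriptor in train closest to query, or -1 if train is empty.
int nearest(const Descriptor& query, const std::vector<Descriptor>& train)
{
    int best = -1;
    int bestDist = std::numeric_limits<int>::max();
    for (std::size_t j = 0; j < train.size(); ++j) {
        int d = hammingDistance(query, train[j]);
        if (d < bestDist) {
            bestDist = d;
            best = static_cast<int>(j);
        }
    }
    return best;
}

// Cross-checked matching: keep the pair (i, j) only when b[j] is the nearest
// neighbour of a[i] and a[i] is also the nearest neighbour of b[j].
std::vector<std::pair<int, int> > crossCheckMatch(
    const std::vector<Descriptor>& a, const std::vector<Descriptor>& b)
{
    std::vector<std::pair<int, int> > matches;
    for (std::size_t i = 0; i < a.size(); ++i) {
        int j = nearest(a[i], b);
        if (j >= 0 && nearest(b[j], a) == static_cast<int>(i))
            matches.push_back(std::make_pair(static_cast<int>(i), j));
    }
    return matches;
}
```

Calling `crossCheckMatch(objDescriptors, sceneDescriptors)` on two hypothetical descriptor lists returns index pairs that agree in both directions, so a scene descriptor that is nobody's mutual nearest neighbour is dropped. Cross-checking removes only some mismatches; a distance threshold or a geometric check would be a further, separate filtering step.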

Images

Original image

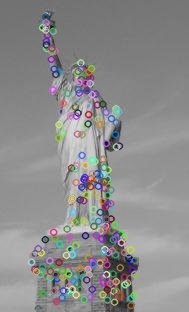

Result image
