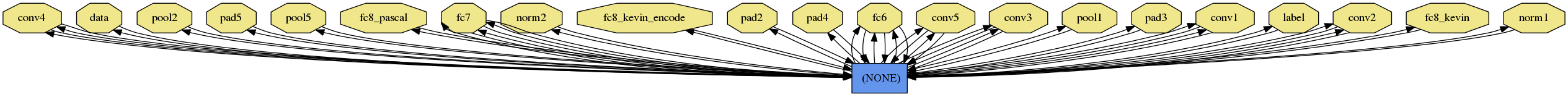

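Today, while reading the source code released with the paper "Deep Learning of Binary Hash Codes for Fast Image Retrieval", I tried to visualize the network architecture the authors designed, but the result was completely wrong. Comparing their files with the official Caffe examples, I found that the authors' prototxt files use a long-outdated format.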
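Caffe net definitions have gone through three syntaxes. The original V0 form wraps each layer as `layers { layer { ... } bottom: ... top: ... }`, which is the form in the excerpt below. The V1 form keeps `layers` but inlines the fields and uses enum types such as `type: CONVOLUTION`. The current form uses `layer { ... type: "Convolution" }`. The current `NetParameter` still accepts the old blocks through a deprecated `layers` field, but the drawing code in `caffe.draw` walks only the `layer` field. That would plausibly explain a diagram that comes out wrong. The Python sketch below guesses which syntax a file uses. `prototxt_format` is a hypothetical helper, and its regex check is a heuristic, not a real protobuf parse.

```python
import re


def prototxt_format(text: str) -> str:
    """Guess which Caffe net syntax a prototxt uses (heuristic, hypothetical helper).

    V0: layers { layer { name type ... } bottom top }   # oldest
    V1: layers { name type: CONVOLUTION ... }           # enum layer types
    V2: layer { name type: "Convolution" ... }          # current
    """
    # V0 nests a `layer { ... }` block directly inside each `layers { ... }` block.
    if re.search(r"\blayers\s*\{\s*layer\s*\{", text):
        return "V0"
    # V1 still uses `layers`, but with the layer fields inlined.
    if re.search(r"\blayers\s*\{", text):
        return "V1"
    # V2 uses singular `layer` blocks.
    if re.search(r"\blayer\s*\{", text):
        return "V2"
    return "unknown"


if __name__ == "__main__":
    old = 'layers {\n  layer {\n    name: "relu1"\n    type: "relu"\n  }\n  bottom: "conv1"\n}'
    print(prototxt_format(old))  # V0
```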

An excerpt of the original prototxt:

```
name: "KevinNet_CIFAR10"
layers {
  layer {
    name: "data"
    type: "data"
    source: "cifar10_train_leveldb"
    meanfile: "../../data/ilsvrc12/imagenet_mean.binaryproto"
    batchsize: 32
    cropsize: 227
    mirror: true
    det_context_pad: 16
    det_crop_mode: "warp"
    det_fg_threshold: 0.5
    det_bg_threshold: 0.5
    det_fg_fraction: 0.25
  }
  top: "data"
  top: "label"
}
layers {
  layer {
    name: "conv1"
    type: "conv"
    num_output: 96
    kernelsize: 11
    stride: 4
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
    blobs_lr: 1.
    blobs_lr: 2.
    weight_decay: 1.
    weight_decay: 0.
  }
  bottom: "data"
  top: "conv1"
}
layers {
  layer {
    name: "relu1"
    type: "relu"
  }
  bottom: "conv1"
  top: "conv1"
}
layers {
  layer {
    name: "pool1"
    type: "pool"
    pool: MAX
    kernelsize: 3
    stride: 2
  }
  bottom: "conv1"
  top: "pool1"
}
layers {
  layer {
    name: "norm1"
    type: "lrn"
    local_size: 5
    alpha: 0.0001
    beta: 0.75
  }
  bottom: "pool1"
  top: "norm1"
}
...
```

Visualization result:

The upgraded prototxt:

```
name: "upgraded_KevinNet_CIFAR10"
layer {
  name: "data"
  type: "Data"
  top: "data"
  top: "label"
  # The det_* fields of the original only apply to WindowData layers, so they are dropped here.
  transform_param {
    mean_file: "../../data/ilsvrc12/imagenet_mean.binaryproto"
    crop_size: 227
    mirror: true
  }
  data_param {
    source: "cifar10_train_leveldb"
    batch_size: 32
    backend: LEVELDB
  }
}
layer {
  name: "conv1"
  type: "Convolution"
  bottom: "data"
  top: "conv1"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  convolution_param {
    num_output: 96
    kernel_size: 11
    stride: 4
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
  }
}
layer {
  name: "relu1"
  type: "ReLU"
  bottom: "conv1"
  top: "conv1"
}
layer {
  name: "pool1"
  type: "Pooling"
  bottom: "conv1"
  top: "pool1"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "norm1"
  type: "LRN"
  bottom: "pool1"
  top: "norm1"
  lrn_param {
    local_size: 5
    alpha: 0.0001
    beta: 0.75
  }
}
layer {
  name: "conv2"
  type: "Convolution"
  bottom: "norm1"
  top: "conv2"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  convolution_param {
    num_output: 256
    kernel_size: 5
    group: 2
    pad: 2
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 1.
    }
  }
}
layer {
  name: "relu2"
  type: "ReLU"
  bottom: "conv2"
  top: "conv2"
}
layer {
  name: "pool2"
  type: "Pooling"
  bottom: "conv2"
  top: "pool2"
  pooling_param {
    pool: MAX
    kernel_size: 3
    stride: 2
  }
}
layer {
  name: "norm2"
  type: "LRN"
  bottom: "pool2"
  top: "norm2"
  lrn_param {
    local_size: 5
    alpha: 0.0001
    beta: 0.75
  }
}
layer {
  name: "conv3"
  type: "Convolution"
  bottom: "norm2"
  top: "conv3"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  convolution_param {
    num_output: 384
    kernel_size: 3
    pad: 1
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
  }
}
layer {
  name: "relu3"
  type: "ReLU"
  bottom: "conv3"
  top: "conv3"
}
layer {
  name: "conv4"
  type: "Convolution"
  bottom: "conv3"
  top: "conv4"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  convolution_param {
    num_output: 384
    kernel_size: 3
    pad: 1
    group: 2
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
  }
}
layer {
  name: "relu4"
  type: "ReLU"
  bottom: "conv4"
  top: "conv4"
}
layer {
  name: "conv5"
  type: "Convolution"
  bottom: "conv4"
  top: "conv5"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  convolution_param {
    num_output: 256
    kernel_size: 3
    pad: 1
    group: 2
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
  }
}
layer {
  name: "relu5"
  type: "ReLU"
  bottom: "conv5"
  top: "conv5"
}
layer {
  name: "pool5"
  type: "Pooling"
  bottom: "conv5"
  top: "pool5"
  pooling_param {
    kernel_size: 3
    pool: MAX
    stride: 2
  }
}
layer {
  name: "fc6"
  type: "InnerProduct"
  bottom: "pool5"
  top: "fc6"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 1.
    }
  }
}
layer {
  name: "relu6"
  type: "ReLU"
  bottom: "fc6"
  top: "fc6"
}
layer {
  name: "drop6"
  type: "Dropout"
  bottom: "fc6"
  top: "fc6"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc7"
  type: "InnerProduct"
  bottom: "fc6"
  top: "fc7"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  inner_product_param {
    num_output: 4096
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 1.
    }
  }
}
layer {
  name: "relu7"
  type: "ReLU"
  bottom: "fc7"
  top: "fc7"
}
layer {
  name: "drop7"
  type: "Dropout"
  bottom: "fc7"
  top: "fc7"
  dropout_param {
    dropout_ratio: 0.5
  }
}
layer {
  name: "fc8_kevin"
  type: "InnerProduct"
  bottom: "fc7"
  top: "fc8_kevin"
  param {
    lr_mult: 1
    decay_mult: 1.
  }
  param {
    lr_mult: 2
    decay_mult: 0.
  }
  inner_product_param {
    num_output: 48
    weight_filler {
      type: "gaussian"
      std: 0.005
    }
    bias_filler {
      type: "constant"
      value: 1.
    }
  }
}
layer {
  name: "fc8_kevin_encode"
  type: "Sigmoid"
  bottom: "fc8_kevin"
  top: "fc8_kevin_encode"
}
layer {
  name: "fc8_pascal"
  type: "InnerProduct"
  bottom: "fc8_kevin_encode"
  top: "fc8_pascal"
  param {
    lr_mult: 10
    decay_mult: 1.
  }
  param {
    lr_mult: 20
    decay_mult: 0.
  }
  inner_product_param {
    num_output: 10
    weight_filler {
      type: "gaussian"
      std: 0.01
    }
    bias_filler {
      type: "constant"
      value: 0.
    }
  }
}
layer {
  name: "loss"
  type: "SoftmaxWithLoss"
  bottom: "fc8_pascal"
  bottom: "label"
}
```

Note that I only upgraded the syntax to the current format so that the network structure could be visualized. I have not tested whether training works with this file.
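For converting such files without hand-editing, Caffe ships a command-line tool, `upgrade_net_proto_text` (built under `build/tools/`), which takes an input and an output prototxt path. The core renames involved for the layers in this net can be sketched in Python as follows. The dict-based layer representation, `upgrade_v0_layer`, and the lookup tables are hypothetical illustrations covering only the layer types used here; they are not Caffe's API. Data layers are deliberately rejected rather than guessed, because their fields split between `data_param` and `transform_param`.

```python
# Hypothetical lookup tables covering only the layer types used in this net.
V0_TO_V2_TYPE = {
    "data": "Data",
    "conv": "Convolution",
    "relu": "ReLU",
    "pool": "Pooling",
    "lrn": "LRN",
    "innerproduct": "InnerProduct",
    "dropout": "Dropout",
    "sigmoid": "Sigmoid",
    "softmax_loss": "SoftmaxWithLoss",
}

# V0 spellings that gained an underscore in the current syntax.
FIELD_RENAMES = {"kernelsize": "kernel_size", "batchsize": "batch_size",
                 "cropsize": "crop_size", "meanfile": "mean_file"}

# Sub-message that holds the layer-specific fields of each current type.
PARAM_BLOCK = {
    "Convolution": "convolution_param",
    "Pooling": "pooling_param",
    "LRN": "lrn_param",
    "InnerProduct": "inner_product_param",
    "Dropout": "dropout_param",
}

# Fields that are not moved into the *_param sub-message.
STRUCTURAL = {"name", "type", "bottom", "top", "blobs_lr", "weight_decay"}


def upgrade_v0_layer(old: dict) -> dict:
    """Convert one V0 layer, given as a flat dict (hypothetical pre-parsed form in
    which bottom/top from the outer `layers` block are merged in), to V2 shape."""
    new_type = V0_TO_V2_TYPE[old["type"]]
    new = {"name": old["name"], "type": new_type,
           "bottom": list(old.get("bottom", [])), "top": list(old.get("top", []))}

    # Repeated blobs_lr / weight_decay values pair up by position
    # (index 0 = weights, index 1 = bias); each pair becomes one `param` block.
    lrs, decays = old.get("blobs_lr", []), old.get("weight_decay", [])
    params = []
    for i in range(max(len(lrs), len(decays))):
        p = {}
        if i < len(lrs):
            p["lr_mult"] = lrs[i]
        if i < len(decays):
            p["decay_mult"] = decays[i]
        params.append(p)
    if params:
        new["param"] = params

    # Everything else is layer-specific and moves into the type's *_param block.
    rest = {FIELD_RENAMES.get(k, k): v for k, v in old.items() if k not in STRUCTURAL}
    if rest:
        block = PARAM_BLOCK.get(new_type)
        if block is None:
            # e.g. Data layers split fields across data_param and transform_param.
            raise ValueError(f"no single param block for {new_type}: {sorted(rest)}")
        new[block] = rest
    return new


if __name__ == "__main__":
    conv1 = {"name": "conv1", "type": "conv", "bottom": ["data"], "top": ["conv1"],
             "num_output": 96, "kernelsize": 11, "stride": 4,
             "blobs_lr": [1.0, 2.0], "weight_decay": [1.0, 0.0]}
    print(upgrade_v0_layer(conv1)["param"])
    # [{'lr_mult': 1.0, 'decay_mult': 1.0}, {'lr_mult': 2.0, 'decay_mult': 0.0}]
```

The pairing by position is the key design point. The first `blobs_lr`/`weight_decay` value applies to the weights and the second to the bias. This is why each convolution and fully connected layer in this net ends up with two `param` blocks (`lr_mult: 1` then `lr_mult: 2`).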

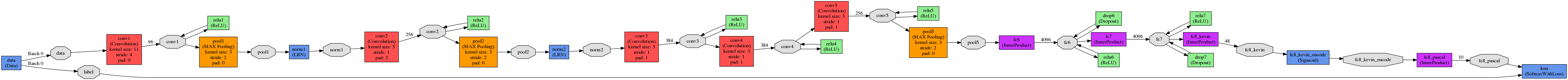

The resulting network structure diagram:
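Caffe's own way to draw such a diagram is `python/draw_net.py`, for example `python python/draw_net.py net.prototxt net.png --rankdir LR`. That script requires pycaffe and pydot. Where those are unavailable, a rough diagram can be produced with the standard library alone. The sketch below is an approximation, not what `draw_net.py` does. `parse_layers` and `to_dot` are hypothetical helpers. They read only `name`, `type`, `bottom`, and `top` from top-level `layer` blocks (current syntax only) and emit Graphviz DOT with one box per layer.

```python
import re

# Tokens: '#' comments, quoted strings, braces/colons, and bare words (keys, numbers, enums).
TOKEN_RE = re.compile(r'#[^\n]*|"[^"]*"|[{}:]|[^\s{}:"#]+')


def parse_layers(text):
    """Collect name/type/bottom/top from each top-level `layer { ... }` block."""
    tokens = [t for t in TOKEN_RE.findall(text) if not t.startswith("#")]
    layers, current, depth, i = [], None, 0, 0
    while i < len(tokens):
        tok = tokens[i]
        if tok == "{":
            depth += 1
        elif tok == "}":
            depth -= 1
            if depth == 0 and current is not None:
                layers.append(current)
                current = None
        elif depth == 0 and tok == "layer":
            current = {"name": "", "type": "", "bottom": [], "top": []}
        elif (depth == 1 and current is not None and tok in current
              and i + 2 < len(tokens) and tokens[i + 1] == ":"):
            # Only depth-1 keys count, so nested filler `type` fields are ignored.
            value = tokens[i + 2].strip('"')
            if tok in ("bottom", "top"):
                current[tok].append(value)
            else:
                current[tok] = value
            i += 3
            continue
        i += 1
    return layers


def to_dot(layers):
    """Emit Graphviz DOT: one box per layer, edges labelled with the blob passed along."""
    out = ["digraph net {", "  rankdir=LR;"]
    producer = {}  # blob name -> layer that most recently wrote it
    for layer in layers:
        name, ltype = layer["name"], layer["type"]
        out.append(f'  "{name}" [shape=box, label="{name}\\n{ltype}"];')
        for blob in layer["bottom"]:
            src = producer.get(blob, blob)  # external inputs become their own node
            out.append(f'  "{src}" -> "{name}" [label="{blob}"];')
        for blob in layer["top"]:
            producer[blob] = name  # in-place layers (ReLU, Dropout) take over the blob
    out.append("}")
    return "\n".join(out)


if __name__ == "__main__":
    import sys
    with open(sys.argv[1]) as f:
        print(to_dot(parse_layers(f.read())))
```

Saving the output as `net.dot` and running `dot -Tpng net.dot -o net.png` renders it with Graphviz. In-place layers such as ReLU and Dropout are handled by tracking which layer last wrote each blob. As a result, the chain stays linear instead of looping back on itself. A file in the old `layers` syntax yields an empty list here, since it contains no top-level `layer` block.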
