1. Logistic regression

# Logistic regression tuning

from sklearn.linear_model import LogisticRegression

from sklearn.model_selection import train_test_split, cross_val_score

from sklearn.metrics import accuracy_score, precision_score, recall_score

import matplotlib.pyplot as plt

from sklearn.model_selection import GridSearchCV

X = df_train[['Sex', 'SibSp', 'Parch', 'Fare', 'Cabin', 'Age', 'isalone', 'mother',

'ticket-same', 'Pclass_1', 'Pclass_2', 'Pclass_3', 'Embarked_C',

'Embarked_Q', 'Embarked_S', 'title_1', 'title_2', 'title_3', 'title_4',

'title_5', 'title_6', 'title_7', 'title_8', 'Family_0', 'Family_1',

'Family_2', 'person_adult-man', 'person_adult-woman', 'person_child']]

Y = df_train['Survived']

parameters = {'penalty': ['l1', 'l2'],'C': [0.01,0.1,0.5,1]}

# liblinear supports both the l1 and l2 penalties listed in the grid

grid_logistic = GridSearchCV(estimator=LogisticRegression(solver='liblinear'), param_grid=parameters, cv=5)

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.3, random_state=2018)

grid_logistic.fit(x_train, y_train)

print('best_score:', grid_logistic.best_score_)

best_parameters = grid_logistic.best_estimator_.get_params()

print('best_parameters:', best_parameters)

pred = grid_logistic.predict(x_test)

print('accuracy:', accuracy_score(y_test, pred))

print('precision:', precision_score(y_test, pred))

print('recall:', recall_score(y_test, pred))
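As a self-contained sketch of the same search, the snippet below runs on synthetic data from `make_classification`. The synthetic data is an assumption that stands in for the Titanic features, and the `*_demo` names are illustrative. It reads the winning settings from `best_params_`, which holds only the searched keys, whereas `get_params()` returns every parameter of the estimator. `solver='liblinear'` is used because it accepts both `l1` and `l2` penalties.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in for the Titanic features (assumption, not the real data).
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=2018)
xd_train, xd_test, yd_train, yd_test = train_test_split(
    X_demo, y_demo, test_size=0.3, random_state=2018)

param_grid = {'penalty': ['l1', 'l2'], 'C': [0.01, 0.1, 0.5, 1]}

# liblinear supports both l1 and l2 penalties.
grid_demo = GridSearchCV(LogisticRegression(solver='liblinear'), param_grid, cv=5)
grid_demo.fit(xd_train, yd_train)

print('best_params_:', grid_demo.best_params_)      # only the searched keys
print('best CV accuracy:', grid_demo.best_score_)   # mean accuracy over the 5 folds
print('test accuracy:', grid_demo.score(xd_test, yd_test))
```

Note that `best_score_` is a cross-validation average on the training split, so it will usually differ somewhat from the accuracy measured on the held-out test split.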

2. Support vector machine tuning

# Support vector machine tuning

from sklearn import svm

parameters = {'C':[0.01, 0.1, 1, 5, 10]}

grid_svm = GridSearchCV(estimator=svm.SVC(), param_grid=parameters, cv=5)

grid_svm.fit(x_train, y_train)

print('best_score:', grid_svm.best_score_)

print('best_parameters:', grid_svm.best_estimator_.get_params())

pred = grid_svm.predict(x_test)

print('accuracy_score:', accuracy_score(y_test, pred))

print('precision_score:', precision_score(y_test, pred))

print('recall_score:', recall_score(y_test, pred))

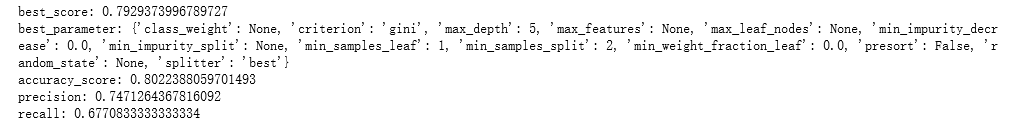
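An `SVC` with the default RBF kernel is sensitive to feature scale, and columns such as `Fare` and `Age` span a much wider range than the one-hot columns. The following is a minimal sketch, on synthetic data, of putting a `StandardScaler` inside a `Pipeline` so that scaling is refit within each cross-validation fold. The step names `scale` and `svc`, the `gamma` values, and the inflated first column are assumptions made for illustration.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the Titanic features (assumption, not the real data).
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=2018)
X_demo[:, 0] *= 100.0  # mimic one column with a much larger range

pipe = Pipeline([('scale', StandardScaler()), ('svc', SVC())])

# Pipeline parameters are addressed as <step name>__<parameter>.
svm_grid = {'svc__C': [0.01, 0.1, 1, 5, 10], 'svc__gamma': ['scale', 0.01, 0.1]}

grid_svm_demo = GridSearchCV(pipe, svm_grid, cv=5)
grid_svm_demo.fit(X_demo, y_demo)

print('best_params_:', grid_svm_demo.best_params_)
print('best CV accuracy:', grid_svm_demo.best_score_)
```

Searching over the pipeline rather than the bare estimator keeps the scaler from seeing the validation fold, which would otherwise leak information into the score.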

3. Decision tree tuning

# Decision tree tuning

import pandas as pd

train_data = pd.read_csv('./Titanic-data/task-2-train2.csv')

test_data = pd.read_csv('./Titanic-data/task-2-test2.csv')

X = train_data[['Pclass', 'Sex', 'SibSp', 'Parch', 'Cabin', 'Embarked',

'title', 'isalone', 'Family', 'mother', 'person', 'ticket-same', 'age',

'fare']]

Y = train_data['Survived']

from sklearn import tree

# Re-split so the tree is trained on the features selected above

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.3, random_state=2018)

parameter = {'max_depth': [1, 5, 10]}

# GridSearchCV needs an estimator instance, not the tree module itself

grid_tree = GridSearchCV(estimator=tree.DecisionTreeClassifier(), param_grid=parameter, cv=5)

grid_tree.fit(x_train, y_train)

print('best_score:', grid_tree.best_score_)

print('best_parameter:', grid_tree.best_estimator_.get_params())

pred = grid_tree.predict(x_test)

print('accuracy_score:', accuracy_score(y_test, pred))

print('precision:', precision_score(y_test, pred))

print('recall:', recall_score(y_test, pred))
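To see how `max_depth` trades training fit against validation accuracy, one option is to read the per-candidate scores from `cv_results_`. The sketch below assumes synthetic data in place of the Titanic file and sets `return_train_score=True`, which the search above does not use.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (assumption, not the real data).
X_demo, y_demo = make_classification(n_samples=300, n_features=8, random_state=2018)

grid_tree_demo = GridSearchCV(
    DecisionTreeClassifier(random_state=2018),
    {'max_depth': [1, 5, 10]},
    cv=5,
    return_train_score=True,  # also record accuracy on the training folds
)
grid_tree_demo.fit(X_demo, y_demo)

results = grid_tree_demo.cv_results_
for params, train_acc, val_acc in zip(
        results['params'], results['mean_train_score'], results['mean_test_score']):
    print(params, 'train=%.3f' % train_acc, 'validation=%.3f' % val_acc)
```

A large gap between the training and validation columns for deeper trees is a typical sign of overfitting.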

4. Random forest tuning

# Random forest tuning

train = pd.read_csv('./Titanic-data/task-2-train2.csv')

X = train[['Pclass', 'Sex', 'SibSp', 'Parch', 'Cabin', 'Embarked',

'title', 'isalone', 'Family', 'mother', 'person', 'ticket-same', 'age',

'fare']]

Y = train['Survived']

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score, train_test_split

from sklearn.metrics import accuracy_score, precision_score, recall_score

import matplotlib.pyplot as plt

parameter = {'n_estimators':[1, 5, 10, 20]}

grid_rf = GridSearchCV(estimator=RandomForestClassifier(), param_grid=parameter, cv=5)

x_train, x_test, y_train, y_test = train_test_split(X, Y, test_size=0.3, random_state=2018)

grid_rf.fit(x_train, y_train)

print('best_score:', grid_rf.best_score_)

print('best_parameter:', grid_rf.best_estimator_.get_params())

pred = grid_rf.predict(x_test)

print('accuracy_score:', accuracy_score(y_test, pred))

print('precision:', precision_score(y_test, pred))

print('recall:', recall_score(y_test, pred))

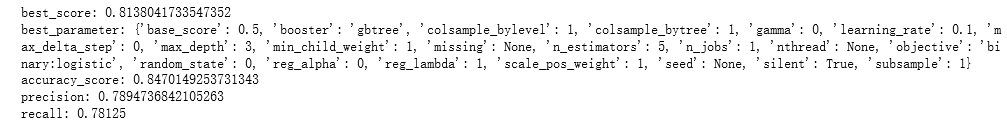
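Each section prints accuracy, precision, and recall. As a rough illustration of what those numbers measure, the sketch below computes them by hand from confusion counts for binary labels, treating 1 as "survived". The function names and the toy label lists are hypothetical.

```python
def confusion_counts(y_true, y_pred):
    """Count true/false positives and negatives for binary labels (1 = survived)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp, fp, fn, tn


def summarize(y_true, y_pred):
    """Return (accuracy, precision, recall); undefined ratios fall back to 0.0."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Toy labels, made up for illustration.
y_true_demo = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred_demo = [1, 0, 0, 1, 0, 1, 0, 0]

accuracy, precision, recall = summarize(y_true_demo, y_pred_demo)
print('accuracy:', accuracy)    # 5 of 8 predictions are correct
print('precision:', precision)  # 2 of 3 predicted survivors actually survived
print('recall:', recall)        # 2 of 4 actual survivors were found
```

Precision and recall can move in opposite directions, which is why comparing models on accuracy alone can hide differences in how they treat the positive class.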

5. XGBoost tuning

from xgboost import XGBClassifier

parameter = {'n_estimators':[1, 5, 10, 20, 40]}

grid_xgb = GridSearchCV(estimator=XGBClassifier(), param_grid=parameter, cv=5)

grid_xgb.fit(x_train, y_train)

print('best_score:', grid_xgb.best_score_)

print('best_parameter:', grid_xgb.best_estimator_.get_params())

pred = grid_xgb.predict(x_test)

print('accuracy_score:', accuracy_score(y_test, pred))

print('precision:', precision_score(y_test, pred))

print('recall:', recall_score(y_test, pred))
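A grid search fits one model per parameter combination per fold, so the cost grows quickly once more than one parameter is searched. The sketch below enumerates a hypothetical wider grid for `XGBClassifier` in plain Python to count the fits before running anything. The values for `max_depth` and `learning_rate` are illustrative assumptions, not tuned results.

```python
from itertools import product

# Hypothetical wider grid; the values are illustrative, not tuned results.
xgb_grid = {
    'n_estimators': [10, 20, 40],
    'max_depth': [3, 5],
    'learning_rate': [0.05, 0.1, 0.3],
}
cv_folds = 5

# Build every combination of the listed values (the Cartesian product).
keys = sorted(xgb_grid)
candidates = [dict(zip(keys, values))
              for values in product(*(xgb_grid[k] for k in keys))]

n_fits = len(candidates) * cv_folds
print('candidates:', len(candidates))  # 3 * 2 * 3 = 18
print('model fits:', n_fits)           # 18 * 5 = 90, plus one final refit
print('first candidate:', candidates[0])
```

The same dictionary could be passed as `param_grid` to `GridSearchCV`; counting the fits first helps decide whether the grid is small enough to run exhaustively.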
