Metaphors are powerful tools to transfer ideas from one mind to another. Alan Kay introduced the alternative meaning of the term ‘desktop’ at Xerox PARC in 1970. Nowadays everyone — for a glimpse of a second — has to wonder what is actually meant when referring to a desktop. Recently, Deep Learning had the pleasure to welcome a new powerful metaphor: The Lottery Ticket Hypothesis (LTH). But what idea does the Hypothesis try to transmit? In todays post we dive deeper into the hypothesis and review the literature after the original ICLR best paper award by Frankle & Carbin (2019).

隐喻是将思想从一种思想转移到另一种思想的强大工具。 艾伦·凯(Alan Kay)于1970年在Xerox PARC上介绍了“台式机”一词的替代含义。如今,每个人(瞥一眼)都不得不怀疑提到台式机时的实际含义。 最近,深度学习很高兴欢迎一个新的强大比喻:彩票假说(LTH)。 但是假说试图传达什么想法? 在今天的帖子中,我们获得了Frankle&Carbin(2019)最初的ICLR最佳论文奖之后,更深入地研究了这一假设并回顾了相关文献。

深度学习中的修剪✂️ (Pruning in Deep Learning ✂️)

Pruning over-parametrized neural networks has a long tradition in Deep Learning. Most commonly it refers to setting a particular weight to 0 and freezing it for the course of any subsequent training. This can easily be done by element-wise multiplying the weights W with a binary pruning mask m. There are several motivating factors for performing such a surgical intervention:

修剪过度参数化的神经网络在深度学习中有着悠久的传统。 最通常地,它是指将特定权重设置为0并在随后的任何训练过程中将其冻结。 这可以通过将权重W与二进制修剪掩码m逐元素相乘来轻松实现。 进行这种外科手术的动机有几个:

- It supports generalization by regularizing overparametrized functions. 它通过规范化过参数化的函数来支持泛化。

- It reduces the memory constraints during inference time by identifying well-performing smaller networks which can fit in memory. 它通过识别性能良好的较小网络(可放入内存中)来减少推理期间的内存限制。

- It reduces energy costs, computations, storage and latency which can all support deployment on mobile devices. 它减少了能源成本,计算,存储和延迟,所有这些都可以支持在移动设备上的部署。

With the recent advent of deeper and deeper networks all 3 factors have been seeing a resurgence in attention. Broadly speaking any competitive pruning algorithm has to address 4 fundamental questions:

随着最近越来越深入的网络的出现,所有这三个因素都引起了人们的关注。 概括地说,任何竞争性修剪算法都必须解决4个基本问题:

What connectivity structures to prune?: Unstructured pruning does not consider any relationships between the pruned weights. Structured pruning, on the other hand, prunes weights in groups, e.g. by removing entire neurons (weight columns), filters or channels of CNNs. Although unstructured pruning often allows to cut down the number of weights more drastically (while maintaining high performance), this does not have to speed up computations on standard hardware. The key here is that dense computations can easily be parallelized while ‘scattered’ computations can’t. Another distinction has to be made between global and local pruning. Local pruning enforces that one prunes s percent of weights from each layer. Global pruning, on the other hand, is unrestricted and simply requires that the total number of weights across the entire network is pruned by s percent.

修剪的连接结构如何? : 非结构化修剪不考虑修剪权重之间的任何关系。 另一方面, 结构化修剪会按组修剪权重,例如通过删除整个神经元(权重列),CNN的过滤器或通道。 尽管非结构化修剪通常可以大大减少权重的数量(同时保持高性能),但这不必加快标准硬件上的计算速度。 这里的关键是密集的计算可以很容易地并行化,而“分散的”计算则不能。 全球和本地修剪之间必须有另一个区别。 本地修剪强制将每一层的权重的百分之一修剪。 另一方面, 全局修剪是不受限制的,仅要求整个网络的权重总数被s %修剪。

How to rank weights to prune?: There are many more or less heuristic ways to score the importance of a particular weight in a network. A common rule of thumb is that large magnitude weights have more impact on the function fit and should be pruned less. While working well in practice, this intuitively may seem to contradict ideas such as L2-weight regularization which actually punishes large magnitude weights. This motivates more involved techniques which learn pruning masks using gradient-based methods or even higher-order curvature information.

如何对砝码进行修剪? :有许多或多或少的启发式方法可以对网络中特定权重的重要性进行评分。 普遍的经验法则是,较大的权重对函数拟合有更大的影响,应减少较少的修剪。 尽管在实践中表现良好,但从直觉上看,这似乎与诸如L2权重正则化的思想相矛盾,后者实际上惩罚了较大的权重。 这激发了更多涉及的技术,这些技术使用基于梯度的方法甚至更高阶的曲率信息来学习修剪蒙版。

How often to prune?: Weight magnitude is often only a noisy proxy for weight importance. Pruning only a single time at the end of training ( one-shot) can become victim to this noise. Iterative procedures, on the other hand, prune only a small number of weights after one training run but reiterate the train — score — prune — rewind cycle. This often times helps to de-noise the overall pruning process. Commonly the pruning rate per iteration is around 20% and a total of 20 to 30 pruning iterations are used (which leaves us with only 1% percent of non-pruned weights). One can be more conservative — at the cost of training for a lot longer!

多久修剪一次? :体重水平通常只是体重重要性的嘈杂代表。 在训练结束时( 一次射击 )仅修剪一次可能成为这种噪音的受害者。 另一方面,迭代过程在一次训练后仅修剪少量砝码,但重复训练-得分-修剪-倒带周期。 通常,这通常有助于消除整个修剪过程的噪音。 通常,每次迭代的修剪率约为20%,并且总共使用20到30个修剪迭代(这使我们只剩下1%的非修剪权重)。 一个人可能会更保守-花费更长的时间来训练!

When to perform the pruning step?: One can perform pruning at 3 different stages of network training — after, during and before training. When pruning after the training has converged the performance often decreases, which makes it necessary to retrain/fine-tune and to give the network a chance to readjust. Pruning during training, on the other hand, is often associated with regularization and ideas of dropout/reinforcing distributed representations. Until very recently it was impossible to trained sparse networks from scratch.

何时执行修剪步骤? :可以在网络培训的三个不同阶段(培训之后,培训期间和培训之前)执行修剪。 在训练结束后进行修剪时,性能通常会下降,这使得有必要进行重新训练/微调,并使网络有机会进行调整。 另一方面,训练期间的修剪通常与正则化和辍学/加强分布式表示的想法相关。 直到最近,才不可能从头开始训练稀疏网络。

彩票假说及其规模Scale (The Lottery Ticket Hypothesis & How to Scale it 🃏)

Frankle&Carbin(2019)—彩票假设:发现稀疏,可训练的神经网络 (Frankle & Carbin (2019) — The Lottery Ticket Hypothesis: Finding Sparse, Trainable Neural Networks)

While the traditional literature has been able to show that a fully trained dense network can be pruned to little parameters without degrading performance too much, for a long time it has been impossible to successfully train a sparse sub-network from scratch. So why might this the case? The smaller subnetwork can approximate a well performing function. But the learning dynamics appear to be very different compared to the dense network.

传统文献已经表明,可以将训练有素的密集网络修剪成很少的参数,而又不会降低性能,但是很长一段时间以来,从头开始成功地训练稀疏子网络是不可能的。 那么为什么会这样呢? 较小的子网可以近似表现良好的功能。 但是与密集网络相比,学习动力似乎有很大不同。

The original lottery ticket hypothesis paper (Frankle & Carbin, 2019) first provided insight why this might be the case: After pruning the resulting sub-networks were randomly initialized. If one instead re-initializes the weights back to their original (but now masked) weights, it is possible to recover performance on par (or even better!) in potentially fewer training iterations. These high-performing sub-networks can then be thought of as winners of the weight initialization lottery. The hypothesis goes as follows:

最初的彩票假设论文(Frankle&Carbin,2019)首先提供了可能的原因的见解:修剪后,对子网络进行随机初始化 。 如果改为将权重重新初始化回其原始(但现在已被屏蔽)权重,则有可能在潜在的更少训练迭代中恢复同等(甚至更好!)的性能。 然后,可以将这些高性能子网视为权重初始化彩票的赢家。 假设如下:

The Lottery Ticket Hypothesis: A randomly-initialized, dense neural network contains a subnetwork that is initialised such that — when trained in isolation — it can match the test accuracy of the original network after training for at most the same number of iterations. — Frankle & Carbin (2019, p.2)

彩票假说 :随机初始化的密集神经网络包含一个子网络,该子网络经过初始化,以便在单独训练时可以在训练最多相同次数的迭代后匹配原始网络的测试精度。 - 弗兰克和卡宾(2019,第2页)

So how can we attempt to find such a winning ticket? Frankle & Carbin (2019) propose iterative magnitude pruning (IMP): Starting from a dense initialization we train our network until convergence. Afterwards, we determine the s percent smallest magnitude weights and create a binary mask that prunes these. We then retrain the sparsified network with its previous initial weights. After convergence we repeat the pruning process (masking additional s² weights) and reset to the initial weights with the newly found mask. We iterate this process until we reach the desired level of sparsity or the test accuracy drops significantly.

那么,我们如何尝试找到这样的中奖彩票呢? Frankle&Carbin(2019)提出了迭代幅度修剪 (IMP):从密集的初始化开始,我们训练网络直到收敛。 此后,我们确定s的最小量级百分比,并创建一个二元掩码将其修剪。 然后,我们使用先前的初始权重来训练稀疏网络。 收敛后,我们重复修剪过程(掩盖其他s²权重),并使用新发现的掩膜重置为初始权重。 我们重复此过程,直到达到所需的稀疏度或测试准确性显着下降为止。

If IMP succeeds it finds the winner of the initialization lottery. This is simply the subnetwork that remains after this iterative pruning process. Note that it is also possible to simply prune in a one-shot fashion — but better results are obtained when spending that extra compute (see figure 4 of Frankle & Carbin (2019)). If we think back to the broad questions of the pruning literature IMP fits in as follows:

如果IMP成功,它将找到初始化彩票的赢家 。 这只是该迭代修剪过程之后剩下的子网。 请注意,也可以一口气简单修剪-但花费额外的计算可以获得更好的结果(请参见Frankle&Carbin(2019)的图4)。 如果我们回想一下修剪文学的广泛问题,那么IMP的情况如下:

Some key empirical insights of the very first LTH paper are summarized in the figure below:

下图总结了第一篇LTH论文的一些关键经验见解:

Panel B: Without any hyperparameter adjustments IMP is able to find sparse subnetworks that are able to outperform un-pruned dense networks in fewer training iterations (the legend refers to the percentage of pruned weights). The gap in final performance between a lottery winning initialization and a random re-initialization is referred to as the lottery ticket effect.

面板B :无需任何超参数调整,IMP就能找到稀疏的子网络,这些子网络在较少的训练迭代中就能胜过未修剪的密集网络(图例指的是修剪权重的百分比)。 彩票中奖初始化和随机重新初始化之间的最终性能差距称为彩票效应 。

Panel C & Panel D: In order to scale the original results to more complicated tasks/architectures like CIFAR-10, VGG-19 and Resnet-20, Frankle & Carbin (2019) had to adapt the learning rate as well as include a warmup (annealing from 0 to the final rate within a predefined set of iterations) schedule. Note that the required Resnet learning rate is a lot smaller than the VGG-19 learning rate.

小组C和小组D :为了将原始结果扩展到更复杂的任务/架构(如CIFAR-10,VGG-19和Resnet-20), Frankle&Carbin(2019)必须适应学习率并包括预热(在预定义的一组迭代中从0退火到最终速率)时间表。 请注意,所需的Resnet学习率比VGG-19学习率小得多。

So what can this tell us? First, IMP can find a substructure that is favourable for the task at hand and that the weight initialization of that subnetwork is special in terms of learning dynamics. Furthermore, over-parametrization is not necessary for successful training — it may only help by providing a combinatorial explosion of available subnetworks. A lot of the interesting follow-up work characterizes potential mechanisms behind the qualitative differences of winning tickets.

那么这能告诉我们什么呢? 首先,IMP可以找到一个有利于当前任务的子结构,并且该子网络的权重初始化在学习动态方面是特殊的 。 此外,过度参数化对于成功的培训不是必需的,它只能通过提供可用子网的组合爆炸来帮助。 许多有趣的跟进工作描述了中奖彩票质量上的差异背后的潜在机制。

弗兰克(Frankle)等人。 (2019)—稳定彩票假说 (Frankle et al. (2019) — Stabilizing the Lottery Ticket Hypothesis)

One limitation of the original lottery ticket paper was that its restriction to small-scale tasks such as MNIST and CIFAR-10. In order to scale the LTH to competitive CIFAR-10 architectures, Frankle & Carbin (2019) had to tune learning rate schedules. Without this adjustment it is not possible to obtain a pruned network that is on par with the original dense network (Liu et al., 2018; Gale et al., 2019). But what if we loosen up some of the ticket restrictions?

原始彩票的一个限制是它只能用于诸如MNIST和CIFAR-10之类的小规模任务。 为了将LTH扩展到具有竞争力的CIFAR-10架构, Frankle&Carbin(2019)必须调整学习率时间表。 如果没有这种调整,就不可能获得与原始密集网络相当的修剪网络( Liu等人,2018年 ; Gale等人,2019年 )。 但是,如果我们放宽一些机票限制怎么办?

In follow-up work Frankle et al. (2019) asked whether it might be possible to robustly obtain pruned subnetworks by not resetting the weights to their initial values but to weights found after a small number of k training iterations. In other words, instead of rewinding to iteration 0 after pruning, we rewind to iteration k. Here is the formal definition and a graphical illustration:

在后续工作中, Frankle等人。 (2019)问是否有可能通过不将权重重置为其初始值,而是将其重置为经过k次训练迭代后发现的权重来稳健地获得修剪后的子网。 换句话说, 不是修剪后倒回到迭代0,而是倒回到迭代k 。 这是正式定义和图形说明:

Since we perform a little bit of training, the resulting ticket is no longer called a lottery ticket but a matching ticket.

由于我们进行了一些培训,因此生成的票证不再称为彩票,而是匹配的票证 。

The key insights are summarized in the figure below: Rewinding to an iteration k > 0 is highly effective when performing IMP. It is possible to prune up to ca. 85% of the weights while still obtaining matching test performance. This holds for both CIFAR-10 and Resnet-20 (rewinding to iteration 500; panel A) as well as ImageNet and Resnet-50 (rewind to epoch 6; panel B). There is no longer a need for a learning rate warmup.

下图中总结了关键的见解:在执行IMP时,回退到k > 0的迭代非常有效。 最多可以修剪约10分钟。 85%的重量,同时仍获得匹配的测试性能。 这适用于CIFAR-10和Resnet-20(后退至迭代500;面板A)以及ImageNet和Resnet-50(后退至第6时期;面板B)。 不再需要学习率预热。

仁达等。 (2020)—比较神经网络修剪中的倒带和微调 (Renda et al. (2020) — Comparing Rewinding and Fine-tuning in Neural Network Pruning)

Until now we have discussed whether IMP is able to identify a matching network initialization. But how does the lottery procedure compare to other pruning methods? In their paper, Renda et al. (2020) compare between three different procedures:

到目前为止,我们已经讨论了IMP是否能够识别匹配的网络初始化。 但是彩票程序与其他修剪方法相比如何? 在他们的论文中, Renda等人。 (2020)比较三种不同的程序:

Fine-Tuning: After pruning, the remaining weights are trained from their final trained values using a small learning rate. Usually this is simply the final learning rate of the original training procedure.

精细调整 :修剪后,剩余的权重会以较小的学习率从最终的权重值进行训练。 通常,这只是原始训练过程的最终学习率。

Weight Rewinding: This corresponds to the previously introduced method by Frankle et al. (2019). After pruning, the remaining weights are reset to the value of a previous SGD iteration k. From that point on the weights are retrained using the learning rate schedule from iteration k onwards. Both weights and learning rate schedule are reset.

重量倒卷 :这对应于Frankle等人先前介绍的方法。 (2019) 。 修剪后,将其余权重重置为先前SGD迭代k的值。 从那时起,权重将使用从迭代k开始的学习速率计划进行重新训练。 权重和学习率计划都将重置。

Learning Rate Rewinding: Instead of rewinding the weight values and setting back the learning rate schedule, learning rate rewinding uses the final unpruned weight and only resets the learning rate schedule to iteration k.

学习速率倒带:学习速率倒带使用最终未修剪的权重,而不是重设权重值并重新设置学习率时间表,而仅将学习率时间表重置为迭代k 。

Renda et al. (2020) contrast gains and losses of the three methods in terms of accuracy, search cost and parameter-efficiency. They answer the following questions:

仁达等。 (2020年)在准确性,搜索成本和参数效率方面对比了三种方法的得失。 他们回答以下问题:

- Given an unlimited budget to spend on search cost, how do final accuracy and parameter efficiency (compression ratio pre/post pruning) behave for the 3 methods? 给定用于搜索成本的无限制预算,这三种方法的最终准确性和参数效率(压缩前/压缩后的压缩率)如何表现?

- Given a fixed budget to spend on search cost, how do all 3 methods compare in terms of accuracy and parameter efficiency? 给定用于搜索成本的固定预算,这三种方法在准确性和参数效率方面如何比较?

Experiments on Resnet-34/50/56 and the natural language benchmark GNMT reveal the following insights:

在Resnet-34 / 50/56和自然语言基准GNMT上进行的实验揭示了以下见解:

- Weight rewinding and retraining outperforms simple fine-tuning and retraining in both unlimited and fixed budget experiments. The same holds when comparing structured and unstructured pruning. 在无限制和固定预算的实验中,重绕和再训练的性能优于简单的微调和再训练。 比较结构化和非结构化修剪时也是如此。

Learning rate rewinding outperforms weight rewinding. Furthermore, while weight rewinding can fail for k=0, it is almost always beneficial to rewind the learning rate to the beginning of the training procedure.

复习的学习速度胜过体重的复习。 此外,尽管在k = 0时重绕可能失败,但将学习率重绕到训练过程的开始几乎总是有益的。

- Weight rewinding LTH networks is the SOTA method for pruning at initialisation in terms of accuracy, compression and search cost efficiency. 权重倒带LTH网络是SOTA方法,用于在准确性,压缩和搜索成本效率方面进行初始化。

As with many empirical insights into Deep Learning which are generated by large-scale experiments there remains one daunting question: What does this actually tell us about these highly non-linear systems we are trying to understand?

如同由大规模实验产生的许多关于深度学习的经验性洞察一样,仍然存在一个令人生畏的问题: 这实际上告诉我们我们正在试图理解的这些高度非线性的系统?

剖析彩票:健壮性和学习动力🔎 (Dissecting Lottery Tickets: Robustness & Learning Dynamics 🔎)

周等。 (2019)—解构彩票:零位,标志和超级遮罩 (Zhou et al. (2019) — Deconstructing lottery tickets: Zeros, signs, and the supermask)

What are the main ingredients that determine whether an initialization is a winning ticket or not? It appears to be the combination of the masking criterion (magnitude of the weights), the rewinding of the non-masked weights, and the masking that sets weights to zero and freezes them. But what if we change any one of these 3 ingredients? What is special about large weights? Do other alternative rewinding strategies preserve winning tickets? And why set weights to zero? Zhou et al. (2019) investigate these questions in 3 ways:

确定初始化是否为中奖彩票的主要因素是什么? 它似乎是掩蔽标准(权重的大小),未掩蔽的权重的倒带以及将权重设置为零并冻结它们的掩蔽的组合。 但是,如果我们更改这三种成分中的任何一种怎么办? 大砝码有什么特别之处? 其他替代倒带策略是否保留中奖彩票? 为什么将权重设置为零? 周等。 (2019)通过3种方式调查这些问题:

By comparing different scoring measures to select which weights to mask. Keeping the smallest trained weights, keeping the largest/smallest weights at initialisation, or the magnitude change/movement in weight space.

通过比较不同的计分方法来选择要掩盖的权重。 保持最小的训练权重,在初始化时保持最大/最小权重,或者权重空间中的大小变化/运动。

By analyzing if one still obtains winning tickets when rewinding weights not to their original initialization. They compare random reinitialisation, reshuffling of kept weights and a constant initialisation.

通过分析倒带时权重是否未达到其原始初始化,是否仍能获得中奖彩票。 他们比较了随机重新初始化,保留权重的改组和恒定初始化。

By freezing masked weights not to the value of 0 but to their initialisation value.

通过将掩盖的权重冻结为0而不是其初始化值。

The conclusions of extensive experiments are the following:

大量实验的结论如下:

- Other scoring criteria that maintain weights “alive” proportionately to their distance from the origin perform equally well as the ‘largest magnitude’ criterion. 保持权重与其到原点的距离成比例的“活着”的其他评分标准与“最大幅度”标准同样有效。

As long as one keeps the same sign as the original sign of the weights at initialisation when performing rewinding, one can obtain lottery tickets that perform on par with the classical IMP formulation (Note: This finding could later not be replicated by Frankle et al. (2020b)).

只要在重绕时保持与初始重量的原始符号相同的符号,就可以获得与经典IMP配方相当的彩票( 注 :后来的Frankle等人无法复制此发现。 (2020b) )。

- Masking weights to the value 0 is crucial. 将权重屏蔽为0至关重要。

Based on these findings they postulate that ‘informed’ masking can be viewed as a form of training: It simply accelerates the trajectory of weights which were already “heading” to zero during their optimization trajectory. Interestingly, a mask obtained from IMP applied to a randomly initialized network already (and without any additional training) yields performance that vastly outperforms a random mask and/or randomly initialized network. Hence, it is a form of supermask that encodes a strong inductive bias. This opens up an exciting perspective of not training network weights at all and instead simply finding the right mask. Zhou et al. (2019) show that it is even possible to learn the mask by making it differentiable and training it with a REINFORCE-style loss. This idea very much reminds me of Gaier & Ha’s (2019) Weight Agnostic Neural Networks. A learned mask can be thought of as a connectivity pattern that encodes a solution regularity. By sampling weights multiple times to evaluate a mask, we essentially make it robust (or agnostic) to the sampled weights.

基于这些发现,他们假定“知情”掩蔽可以看作是一种训练形式 :它只是加速了在优化轨迹过程中已经“前进”到零的权重轨迹。 有趣的是,从IMP获得的掩码已经应用于随机初始化的网络(并且没有任何其他培训),其性能大大优于随机掩码和/或随机初始化的网络。 因此,它是编码强感应偏置的一种 超级掩模形式。 这开辟了一个令人激动的观点,那就是根本不训练网络权重,而只是寻找合适的掩码。 周等。 (2019)表明,甚至可以通过使面罩具有差异性并以REINFORCE风格的损失进行训练来学习面罩。 这个想法让我想起了Gaier&Ha(2019)的体重不可知神经网络。 可以将学习到的掩码视为编码解决方案规则性的连接模式。 通过多次采样权重以评估蒙版,我们实质上使它对采样权重具有鲁棒性(或不可知论)。

弗兰克(Frankle)等人。 (2020a)—线性模式连接性和彩票假设 (Frankle et al. (2020a) — Linear Mode Connectivity & the Lottery Ticket Hypothesis)

When we train a neural network, we usually do so on a random ordering of data batches. Each batch is used to evaluate a gradient of the loss with respect to the network parameters. After a full loop over the dataset (aka an epoch) the batches are usually shuffled and we continue with the next epoch. The sequence of batches can be viewed as a source of noise which we inject into the training procedure. Depending on it, we might obtain very different final weights, but ideally our network training procedure is somewhat robust to such noise. More formally, we can think of single weight initialization which we train on two different batch orders to obtain the two different sets of trained weights. Linear mode connectivity analysis (Frankle et al., 2020a) asks the following question: How does the validation/test accuracy behave if we smoothly interpolate (convex combination) between these two final weight configurations resulting from the different data orders?

训练神经网络时,通常是对数据批次进行随机排序。 每个批次用于评估相对于网络参数的损耗梯度。 在对数据集进行完整循环(又称一个时期)后,通常会对这些批次进行混洗,然后我们继续下一个时期。 批次的顺序可以看作是我们注入训练过程中的噪声源。 依赖于此,我们可能会获得非常不同的最终权重,但是理想情况下,我们的网络训练过程对于这种噪声有些健壮。 更正式地讲,我们可以想到单个权重初始化,我们对两个不同的批处理订单进行训练以获得两个不同的训练权重集。 线性模式连通性分析(Frankle等人,2020a)提出以下问题: 如果我们在由不同数据顺序产生的这两个最终权重配置之间平滑地插值(凸组合),则验证/测试的准确性如何表现?

A network is referred to as stable if the error does not increase significantly as we interpolate between the two final weights. Furthermore, we can train initial weights for k iterations on a single shared data ordering and only later split the training into two separate data orders. For which k does the network then become stable? Trivially, this is the case for k=T, but what about earlier ones? As it turns out this is related to the iteration to which we have to rewind in order to obtain a matching ticket initialisation.

如果当我们在两个最终权重之间进行插值时误差不会显着增加,则将网络称为稳定网络。 此外,我们可以在单个共享数据顺序上训练k次迭代的初始权重,然后再将训练分为两个单独的数据顺序。 然后网络对于哪个k稳定? 通常, k = T就是这种情况,但是较早的情况又如何呢? 事实证明,这与我们必须倒回以获取匹配票证初始化的迭代有关。

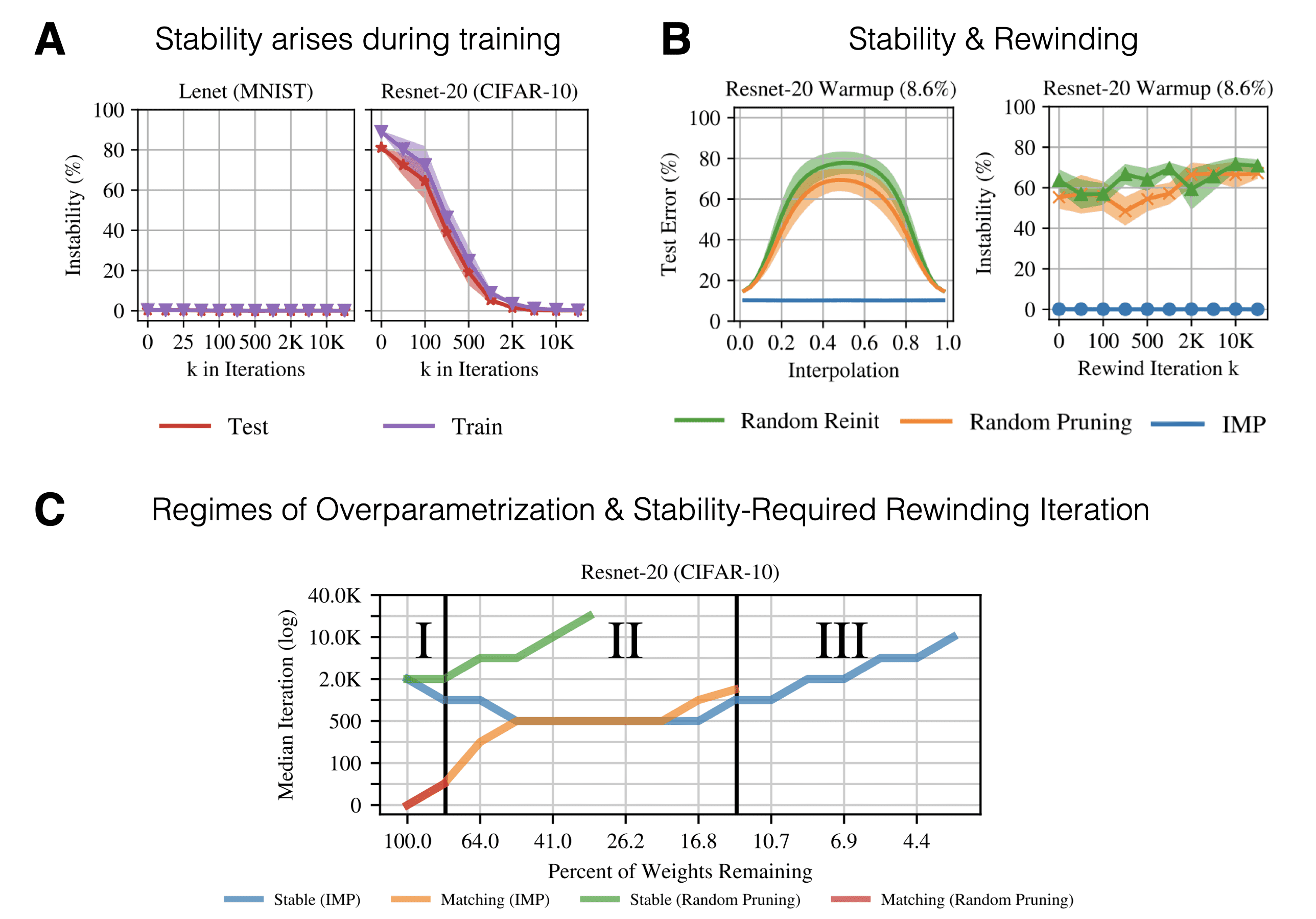

Panel A: No tickets for now, just take a look at when the network becomes interpolation-stable to different data orderings. For LeNet this is already the case at initialization. For Resnet-20 this stability arises only later on in training. Isn’t this reminiscent of the rewinding iteration?

面板A :暂时没有票,请看一下网络何时对不同的数据顺序进行插值稳定。 对于LeNet,初始化时就是这种情况。 对于Resnet-20,这种稳定性只有在以后的培训中才会出现。 这是否使人想起倒带迭代?

Panel B: Iterative magnitude pruning can induce stability. But this only works in combination with the learning rate schedule trick when rewinding to k=0.

B组 :迭代幅度修剪可以诱导稳定性。 但这仅在倒回至k = 0时与学习率调度技巧结合使用。

Panel C: There appear to be 3 regimes of parametrization which we move into by pruning more and more weights (focus on the blue line):

C板块 :我们似乎通过修剪越来越多的权重进入了3种参数化状态(关注蓝线):

-

--

Regime I: The network is strongly overparametrized so that even random pruning still results in good performance.

方案I :网络的参数设置过高,因此,即使是随机修剪也仍会导致良好的性能。

-

--

Regime II: As we increase the percentage of pruned weights only IMP yields matching and stable tickets/networks.

机制II :随着我们增加修剪重量的百分比,只有IMP会产生匹配且稳定的票证/网络。

-

--

Regime III: While IMP is still able to yield stable networks at severe sparsity, the resulting networks are no longer matching.

机制III :尽管IMP仍然能够以严重的稀疏性产生稳定的网络,但最终的网络不再匹配。

This is an example of how lottery tickets have been used to reveal characteristics of learning dynamics. Another more fine-grained analysis of different learning phases is given by the next paper:

这是彩票被用来揭示学习动态特征的一个例子。 下一篇论文给出了对不同学习阶段的另一种更细粒度的分析:

弗兰克(Frankle)等人。 (2020b)—神经网络训练的早期阶段 (Frankle et al. (2020b) — The Early Phase of Neural Network Training)

So far we have seen that only a few training iterations (or epochs on Resnet) result in data-order robustness as well as the ability to obtain matching tickets when rewinding. A more general follow-up question is: How robust are these matching ticket initializations? How much can we wiggle and perturb the initialization before we loose the magical power? And how does this change with k?

到目前为止,我们已经看到,只有很少的训练迭代(或Resnet上的时期)会导致数据顺序的鲁棒性以及倒带时获得匹配票证的能力。 一个更一般的后续问题是:这些匹配的票证初始化有多健壮? 在释放魔力之前,我们可以摆动和干扰多少初始化? 随k的变化如何?

In order to study the emergence of robustness Frankle et al. (2020b) performed permutation experiments using Resnet-20 on CIFAR-10. The protocol goes as follows: Derive a matching ticket using IMP with rewinding. Afterwards, perform a perturbation to the matching ticket and train until convergence. The permutations include:

为了研究鲁棒性的出现, Frankle等人。 (2020b)使用Resif-20在CIFAR-10上进行了置换实验。 协议如下:使用带有倒带的IMP导出匹配的票证。 然后,对匹配的票证进行扰动并进行训练,直到收敛为止。 排列包括:

Setting the weights to the signs and/or magnitudes of iteration 0 or k (see panel A). Any permutation (sign or magnitude — ‘init’) hurts performance at high levels of sparsity. At low levels, on the other hand, the IMP-derived network appears to be robust to sign-flips. The same qualitative result holds for rewinding to k=2000 (see figure 4 of Frankle et al. (2020b)).

将权重设置为迭代0或 k 的符号和/或大小 (请参阅面板A )。 任何排列(符号或大小-“ init”)都会在高度稀疏性时损害性能。 另一方面,在较低级别上,IMP衍生的网络似乎对符号翻转具有鲁棒性。 同样的定性结果适用于倒回至k = 2000(请参见Frankle等人(2020b)的图4)。

Permute the weights at iteration k with structural sub-components of the net (see panel B). This includes exchanging weights of the network globally (across layers), locally (within a layer) and within a given filter. The matching ticket is not robust to any of the perturbations and performance decreases to the level of rewinding to iteration k=0 . Panel C, on the other hand, limits the effect of permutation by only exchanging weights which have the same sign. In this case the network is robust to within filter shuffles at all sparsity levels.

使用网络的 结构子组件 在迭代 k 处权重 (请参阅面板B )。 这包括在全局(跨层),本地(在层内)和给定过滤器内交换网络的权重。 匹配票证对任何扰动都不鲁棒,并且性能降低到回绕到迭代k = 0的级别。 另一方面, 面板C仅通过交换具有相同符号的权重来限制置换的效果。 在这种情况下,该网络对于所有稀疏度级别的过滤器混洗都是鲁棒的。

Add Gaussian noise to the matching ticket weights (see panel D): In order to assure the right scale of the added noise, Frankle et al. (2020b) calculate a layer-wise normalized standard deviation resulting from initialization distribution of the specific layer. The size of the effective standard deviation very much affects the final performance of the perturbed lottery ticket. Larger perturbations = worse performance.

将高斯噪声添加到匹配的票务权重中 (请参阅面板D ):为了确保所添加噪声的正确比例, Frankle等人(2006年)。 (2020b)计算由特定层的初始化分布导致的逐层归一化标准差。 有效标准偏差的大小在很大程度上影响摄动彩票的最终性能。 较大的干扰=较差的性能。

Pretraining of a dense/sparse network with different self-supervised losses (panel E): The previous results are largely preserved when going from k=500 to k=2000. This is indicative that there are other forces than simply weight distribution properties and signs. Instead it appears that the magic lies in the actual training. Hence, Frankle et al. (2020b) asked whether the early phase adaptations depend on information in the conditional label distribution or whether unsupervised representation learning is sufficient. Training for a small number of epochs on random labels does close the gap compared to rewinding to iteration 0. But longer pre-training can eventually hurt the performance of the network. Finally, pre-training (non-ticket) sparse networks is not sufficient to overcome the ‘wrong’/hurtful pruning mask (panel F).

具有不同自我监督损耗的密集/稀疏网络的预训练 (图E ):当从k = 500到k = 2000时,以前的结果在很大程度上得以保留。 这表明除了简单的重量分布特性和符号外,还有其他作用力。 相反,魔术似乎在实际训练中。 因此, Frankle等人。 (2020b)询问早期适应是否取决于条件标签分布中的信息,还是无监督的表示学习是否足够。 与倒退至迭代0相比,对随机标签上的少数几个纪元进行训练确实可以缩小差距。但是更长的预训练最终会损害网络的性能。 最后,预训练(非票务)的稀疏网络不足以克服“错误的” /有害的修剪掩码( 面板F )。

All in all, this provides evidence that it is very hard to overcome the necessity of using rewinding since the emergence of the matching initialization appears highly non-trivial. Self-supervised pre-training appears to provide a potential way to circumvent rewinding. Further insights in the early learning dynamics in training Resnet-20 on CIFAR-10 are summarized in the following awesome visualization by Frankle et al. (2020b):

总而言之,这提供了证明,很难克服使用倒带的必要性,因为匹配初始化的出现显得非常不平凡。 自我监督的预培训似乎为规避重绕提供了一种可能的方法。 下面的Frankle等人的精彩可视化总结了有关在CIFAR-10上培训Resnet-20的早期学习动态的更多见解。 (2020b) :

几乎不需要培训就可以检测中奖彩票! (Detecting Winning Tickets with Little to No Training!)

While the original work by Jonathan Frankle provides an empirical existence proof, finding tickets is tricky and costly. IMP requires repeated training of sparser and sparser networks, something not every PhD researcher can do (without being hated by their lab members 🤗). The natural next question becomes how one can identify such networks with less compute. Here are two recent approaches which attempt to do so:

乔纳森·弗兰克勒(Jonathan Frankle)的原始作品提供了存在的经验证明,而寻找门票则是棘手且昂贵的。 IMP需要对稀疏和稀疏网络进行反复培训,这并不是每个博士研究人员都可以做到的(没有受到实验室成员的hat)。 下一个自然的问题变成了如何用更少的计算量识别这种网络。 以下是尝试这样做的两种最新方法:

你等。 (2020年)—抢先购票:走向更有效的深度网络培训 (You et al. (2020) — Drawing Early-Bird Tickets: Towards more efficient training of deep networks)

You et al. (2020) identify winning tickets early on in training (hence ‘early-bird’ tickets) using a low cost training scheme which combines early stopping, low precision and large learning rates. They argue that this is due to the two-phase nature of optimization trajectories of neural networks:

你等。 (2020年)使用结合了早期停止,低精度和高学习率的低成本培训计划,在训练的早期识别获胜的票证(因此称为“早鸟票”)。 他们认为这是由于神经网络的优化轨迹具有两阶段性质:

- A robust first phase of learning lower frequency/large singular value components. 学习较低频率/大奇异值分量的强大的第一阶段。

- An absorbing phase of learning higher frequency/low singular value components. 学习较高频率/低奇异值分量的吸收阶段。

By focusing on only identifying a connectivity pattern it is possible to identify early-bird tickets already during phase 1. A major difference to standard LTH work is that You et al. (2020) prune entire convolution channels based on their batch normalization scaling factor. Furthermore, pruning is performed iteratively within a single training run.

通过仅专注于识别连通性模式,便可以在第一阶段就已经识别出早鸟票。与标准LTH工作的主要区别在于You等人。 (2020年)基于其批量归一化缩放因子修剪整个卷积通道。 此外,修剪是在一次训练中反复进行的。

The authors empirically observe that the pruning masks change significantly during the first epochs of training but appear to converge soon (see left part of the figure below).

作者凭经验观察到,修剪面罩在训练的第一个时期会发生显着变化,但似乎很快会收敛(请参见下图的左半部分)。

Hence, they conclude that hypothesis of early emergence is true and formulate a suited detection algorithm: To detect the early emergence they propose a mask distance metric that computes the Hamming distance between two pruning masks at two consecutive pruning iterations. If the distance is smaller than a threshold , they stop to prune. The resulting early-bird ticket can then simply be retrained to restore performance.

因此,他们得出的结论是,早出现的假设是正确的,并制定了一种合适的检测算法:为了检测早出现,他们提出了一种掩码距离度量标准,该度量计算两次连续修剪迭代中两个修剪掩码之间的汉明距离。 如果距离小于阈值,它们将停止修剪。 然后可以简单地对生成的早鸟票进行重新培训以恢复性能。

田中等。 (2020)—通过迭代地保存突触流来修剪没有任何数据的神经网络 (Tanaka et al. (2020) — Pruning neural networks without any data by iteratively conserving synaptic flow)

While You et al. (2020) asked whether it is possible to reduce the amount of required computation, they still need to train the model on the data. Tanaka et al. (2020), on the other hand, answer a more ambitious question: Can we obtain winning tickets without any training and in the absence of any data? They argue that the biggest challenge to pruning at initialisation is the problem of layer collapse — the over-eagerly pruning of an entire layer which renders the architecture untrainable (since the gradient flow is cut-off).

当你等。 (2020年)询问是否有可能减少所需的计算量,他们仍然需要在数据上训练模型。 田中等。 (2020) ,另一方面,我们回答了一个更雄心勃勃的问题:我们是否可以在没有任何培训的情况下和没有任何数据的情况下获得中奖彩票? 他们认为,在初始化时进行修剪的最大挑战是层崩溃的问题-整个层的过度修剪会导致体系结构不可训练(因为梯度流被切断)。

Think of an MLP that stacks a set of fully-connected layers. Layer collapse can be avoided by keeping a single weight per layer which corresponds to the theoretically achievable maximal compression. The level of compression which can be achieved by a pruning algorithm without collapse is called the critical compression. Ideally, we would like these two to be equal. Taking inspiration from flow networks, Tanaka et al. (2020) define a gradient-based score called synaptic saliency. This metric is somewhat related to layerwise relevance propagation and measures a form of contribution. The paper then proves two conservation laws of saliency on a “micro”-neuron and “macro”-network level. This allows the authors to show that — for sufficient compression — gradient-based methods will prune large layers entirely (if evaluated once). So why don’t we run into layer collapse in the IMP setting with training? Tanaka et al. (2020) show that this is due to gradient descent encouraging layer-wise conservation as well as iterative pruning at small rates. So any global pruning algorithm that wants to a maximal critical compression has to respect two things: positively score layer-wise conservation and iteratively re-evaluate the scores after pruning.

考虑一个MLP,它堆叠了一组完全连接的层。 可以通过保持每层单个权重来避免层崩溃,该权重对应于理论上可实现的最大压缩 。 可以通过修剪算法而不会崩溃的压缩级别称为临界压缩 。 理想情况下,我们希望两者相等。 田中等人从流动网络中汲取灵感。 (2020)定义了一个基于梯度的得分称为突触显着性。 该度量在某种程度上与分层相关性传播有关,并衡量一种贡献形式。 然后,本文证明了在“微”神经元和“宏”网络水平上的两个显着性守恒律。 这使作者可以证明,对于足够的压缩,基于梯度的方法将完全修剪大图层(如果评估一次)。 那么,为什么不通过培训在IMP设置中遇到层崩溃? 田中等。 (2020)表明,这是由于梯度下降鼓励分层保护以及小速率的迭代修剪。 因此,任何想要最大临界压缩的全局修剪算法都必须注意两点:对分层保守性进行积极评分,并在修剪后迭代地重新评估分数。

Based on these observations the authors define an iterative procedure which generates a mask that preserves the flow of synaptic strengths through the initialized network (see above). Most importantly this procedure is entirely data-agnostic and only requires a random initialization. They are able to outperform other ‘pruning at init’ baselines on CIFAR-10/100 and Tiny ImageNet. I really enjoyed reading this paper since it exploits a theoretical result by turning it into an actionable algorithm.

基于这些观察,作者定义了一个迭代过程,该过程将生成一个掩码,以保留通过初始化网络的突触强度流(请参见上文)。 最重要的是,此过程完全与数据无关,只需要随机初始化即可。 他们能够胜过CIFAR-10 / 100和Tiny ImageNet上的其他“初始修剪”基准。 我真的很喜欢阅读本文,因为它通过将理论结果转化为可行的算法来加以利用。

票证是否可以在数据集,优化器和域中通用化? 🃏 (Do Tickets Generalize across Datasets, Optimizers and Domains? 🃏 👉 🃏)

Morcos等。 (2019)—一张赢得所有人的票:跨数据集和优化程序推广彩票初始化 (Morcos et al. (2019) — One ticket to win them all: Generalizing lottery ticket initializations across datasets and optimizers)

A key question is whether matching tickets overfit. What does overfitting mean in the context of subnet initialization? The ticket is generated on a specific dataset (e.g. MNIST, CIFAR-10/0, ImageNet), with a specific optimizer (SGD, Adam, RMSprop), for a specific domain (vision) and a specific task (object classification). It is not obvious if a ticket would still be a winner if we would change any one of these ingredients. But what we are really interested in is to find the right inductive biases for learning — in a general sense.

一个关键问题是匹配的票证是否过拟合。 在子网初始化的情况下,过度拟合意味着什么? 票证是在特定数据集(例如MNIST,CIFAR-10 / 0,ImageNet)上生成的,具有针对特定域(视觉)和特定任务(对象分类)的特定优化器(SGD,Adam,RMSprop)。 如果我们改变这些因素中的任何一种,票证是否仍然会成为赢家还不是很明显。 但是,我们真正感兴趣的是在一般意义上找到正确的归纳学习偏见 。

Therefore, Morcos et al. (2019) asked whether one could identify a matching ticket on one vision dataset (e.g. ImageNet) and transfer it to another (e.g. CIFAR-100). The key question being whether the lottery ticket effect would hold up after training the matching ticket on the new dataset. The procedure goes as follows:

因此, Morcos等。 (2019)问一个人是否可以在一个视觉数据集(例如ImageNet)上识别匹配的票证并将其转移到另一个(例如CIFAR-100)。 关键问题是在新数据集上训练匹配票证后,彩票票证效果是否会持续。 该过程如下:

Find a lottery ticket on a source dataset using IMP.

使用IMP在源数据集上找到彩票。

Evaluate the source lottery ticket on a new target dataset by training it until convergence.

通过训练新的目标数据集直至收敛,评估源彩票。

So at this point you might ask yourself how do input/output shape work in this case? At least I did 😄. Since we operate on images and the first layer in the of considered networks case is a convolution, we don’t have to change anything about the first hidden layer transformation. Puh. At the stage of network processing where we transition from convolution to fully-connected (FC) layers, Morcos et al. (2019) use global average pooling in order to make sure that regardless of the channel size (which is going to differ between datasets) the FC layer dimensions work out. Last but not least, the final layer has to be excluded from the lottery ticket transfer and is instead randomly initialized. This is because different datasets have different numbers of target classes. This procedure is different from traditional transfer learning since we do not transfer representations (in the form of trained weights) but an initialization and mask found on a separate dataset. So what do Morcos et al. (2019) find?

因此,此时您可能会问自己,在这种情况下输入/输出形状如何工作? 至少我做到了。 由于我们在图像上进行操作,并且在考虑的网络情况下的第一层是卷积,因此我们不必更改有关第一隐藏层转换的任何内容。 h 在网络处理阶段,我们从卷积过渡到完全连接(FC)层, Morcos等人。 (2019)使用全局平均池来确保无论通道大小如何(数据集之间都会有所不同),FC层的尺寸都可以计算出来。 最后但并非最不重要的一点是,最后一层必须从彩票转移中排除,而是随机初始化。 这是因为不同的数据集具有不同数量的目标类别。 此过程与传统的转移学习不同,因为我们不转移表示形式(经过训练的权重),而是转移单独的数据集上的初始化和掩码 。 那么, Morcos等人做了什么。 (2019)找到了吗?

Panel A and Panel B: Transferring VGG-19 tickets from a small source dataset to ImageNet performs well — but worse than a ticket that is directly inferred on the target dataset. Tickets inferred from a larger dataset than the target dataset, on the other hand, perform even better than the ones inferred from the target dataset.

面板A和面板B :将VGG-19票证从小型源数据集传输到ImageNet的效果很好-但比直接在目标数据集上推断出的票证更糟糕。 另一方面,从比目标数据集更大的数据集推断出的票证的性能甚至比从目标数据集推断出的票证还要好。

Panel C and Panel D: Approximately the same holds for Resnet-50. But one can also observe that performance degrades already for smaller pruning fractions than Resnet-50 (sharper “pruning” cliff for Resnet-50 than VGG-19).

面板C和面板D :Resnet-50大致相同。 但是,还可以观察到,与Resnet-50相比,对于较小的修剪比例,性能已经降低了(对于Resnet-50,比VGG-19的锐利“修剪”悬崖)。

Finally, Morcos et al. (2019) also investigated whether a ticket inferred with one optimizer transfers to another. And yes, this is possible for VGG-19 if one carefully tunes learning rates. Again this is indicative that lottery tickets encode inductive biases that are invariant across data and optimization procedure.

最后, Morcos等。 (2019)还调查了用一个优化器推断出的票证是否会转移到另一个优化器。 是的,如果一个人仔细调整学习速度,这对于VGG-19是可能的。 再次表明,彩票编码的感应偏差在数据和优化过程中是不变的。

So what? This highlights a huge potential for tickets as general inductive bias. One could imagine finding a robust matching ticket on a very large dataset (using lots of compute). This universal ticket can then flexibly act as an initializer for (potentially all/most) loosely domain-associated tasks. Tickets, thereby, could — similarly to the concept of meta-learning a weight initialisation — perform a form of amortized search in weight initialization space.

所以呢? 这突显了票证作为一般归纳偏差的巨大潜力。 可以想象在非常大的数据集(使用大量计算)上找到可靠的匹配票证。 然后,该通用票证可以灵活地充当(可能是全部/大部分)与域相关的松散任务的初始化程序。 因此,票证可以(类似于元学习权重初始化的概念) 在权重初始化空间中执行摊销搜索的形式 。

Yu等。 (2019)—使用奖励和多种语言玩彩票:RL和NLP的彩票 (Yu et al. (2019) — Playing the lottery with rewards and multiple languages: lottery tickets in RL and NLP)

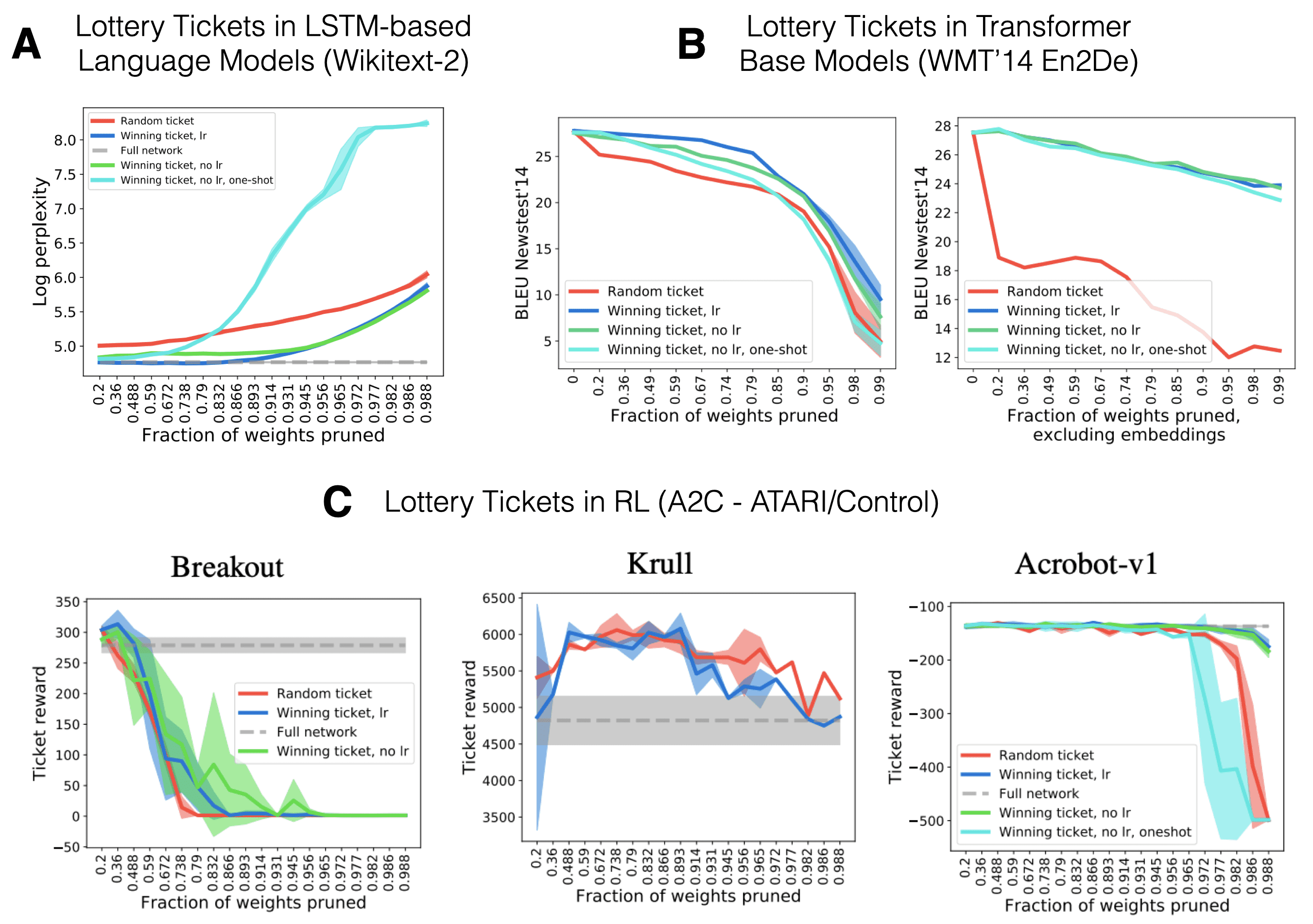

Until now we have looked at subnetwork initialisations in the context computer vision tasks. How about different domains with non-cross-entropy-based loss functions? Yu et al. (2019) investigate the extension to language models (LSTM-based and Transformer models) as well as the Reinforcement Learning (actor-critic methods) setting. There are several differences to the previous work in vision: First, we also prune other types of layers aside from convolutional filters and FC layers (recurrent & embedding layers and attention modules). Second, we train on very different loss surfaces (e.g. a non-stationary actor-critic loss). And finally, RL networks usually have a lot less parameters than large vision networks (especially for continuous control) which potentially makes it harder to prune at high levels of sparsity.

到目前为止,我们已经在上下文计算机视觉任务中研究了子网的初始化。 具有基于非交叉熵的损失函数的不同域又如何呢? Yu等。 (2019)研究了语言模型的扩展(基于LSTM和Transformer模型)以及强化学习(行为批评方法)设置。 与以前的工作在视觉上有几个差异:首先,我们还修剪除卷积过滤器和FC层(循环和嵌入层以及注意模块)以外的其他类型的层。 其次,我们在非常不同的损失表面上进行训练(例如,非平稳演员批评损失)。 最后,RL网络通常比大型视觉网络(特别是连续控制)具有更少的参数,这可能使在稀疏度较高的条件下修剪变得困难。

In short — yes, there exit sparse initializations which significantly outperform random subnetworks for both language as well as RL tasks. Most interestingly, the authors find that one is able to prune up to 2/3 of all transformer weights while still obtaining strong performance. Late rewinding and iterative pruning are again key and embedding layers seem to be very overparametrized. Therefore, they are mainly targeted by IMP. For RL, on the other hand, well-performing pruning levels strongly varied across all considered games. This indicates that for some tasks standard CNN-based actor-critic networks have way too many parameters. This is most likely a function of RL benchmarks consisting of many games which are all being learned by the same architecture setup.

简而言之-是的,在语言和RL任务方面,存在稀疏初始化的性能明显优于随机子网。 最有趣的是,作者发现,一个人能够修剪所有变压器重量的2/3,同时仍能获得强劲的性能。 后期重绕和迭代修剪再次成为关键,并且嵌入层似乎设置得过大。 Therefore, they are mainly targeted by IMP. For RL, on the other hand, well-performing pruning levels strongly varied across all considered games. This indicates that for some tasks standard CNN-based actor-critic networks have way too many parameters. This is most likely a function of RL benchmarks consisting of many games which are all being learned by the same architecture setup.

Open Research Questions ? (Open Research Questions ?)

The lottery ticket hypothesis opens up many different views on topics such as optimization, initialisation, expressivity vs. trainability and the role of sparsity/over-parametrization. At the current point in time there are way more unanswered than answered questions — and that is what excites me! Here are a few of the top of my head:

The lottery ticket hypothesis opens up many different views on topics such as optimization, initialisation, expressivity vs. trainability and the role of sparsity/over-parametrization. At the current point in time there are way more unanswered than answered questions — and that is what excites me! Here are a few of the top of my head:

- What are efficient initialisation schemes that evoke lottery tickets? Can meta-learning yield such winning ticket initialisation schemes (in compute wonderland)? Is it possible to formalise optimisation algorithms that exploit lottery tickets? Can the effects of connectivity and weight initialisation be disentangled? Can winning tickets be regarded as inductive biases? What are efficient initialisation schemes that evoke lottery tickets? Can meta-learning yield such winning ticket initialisation schemes (in compute wonderland)? Is it possible to formalise optimisation algorithms that exploit lottery tickets? Can the effects of connectivity and weight initialisation be disentangled? Can winning tickets be regarded as inductive biases?

A network that has been initialized with weights that were pretrained on only half of the training data, the final network (trained on the full dataset) always yields a generalization gap (Ash & Adams, 2019). In other words — pretraining on a subset of the data generalizes worse than training from a random initialisation. Can lottery tickets overcome this warm starting problem? This should be an easy extension to investigate following Morcos et al. (2019).

A network that has been initialized with weights that were pretrained on only half of the training data, the final network (trained on the full dataset) always yields a generalization gap (Ash & Adams, 2019) . In other words — pretraining on a subset of the data generalizes worse than training from a random initialisation. Can lottery tickets overcome this warm starting problem? This should be an easy extension to investigate following Morcos et al. (2019) .

- In which way do lottery tickets differ between domains (vision, language, RL) and tasks (classification, regression, etc.)? Are the differences a function of the loss surface and can we extract regularities simply from the masks? In which way do lottery tickets differ between domains (vision, language, RL) and tasks (classification, regression, etc.)? Are the differences a function of the loss surface and can we extract regularities simply from the masks?

All in all there is still a lot to discover and many lotteries to be won.

All in all there is still a lot to discover and many lotteries to be won.

Some Final Pointers & Acknowledgements (Some Final Pointers & Acknowledgements)

I would like to thank Jonathan Frankle for valuable feedback, pointers & the open-sourcing of the entire LTH code base (see the open_lth repository). Furthermore, a big shout out goes to Joram Keijser and Florin Gogianu who made this blogpost readable 🤗

I would like to thank Jonathan Frankle for valuable feedback, pointers & the open-sourcing of the entire LTH code base (see the open_lth repository ). Furthermore, a big shout out goes to Joram Keijser and Florin Gogianu who made this blogpost readable 🤗

If you still can’t get enough of the LTH, these pointers are worth checking out:

If you still can't get enough of the LTH, these pointers are worth checking out:

Jonathan Frankle’s ICLR Best Paper Award Talk — great 10 minute introduction of the key ideas and results with very digestible slides!

Jonathan Frankle's ICLR Best Paper Award Talk — great 10 minute introduction of the key ideas and results with very digestible slides!

Ari Morcos’ RE-WORK talk on generalising tickets across datasets.

Ari Morcos' RE-WORK talk on generalising tickets across datasets.

Jonathan Frankle’s contribution to the NeurIPS 2019 retrospectives in ML workshop & the accompanying personal story (beginning at 12:30 minutes)

Jonathan Frankle's contribution to the NeurIPS 2019 retrospectives in ML workshop & the accompanying personal story (beginning at 12:30 minutes)

I recommend everyone to have a look at the evolution of the original paper and the different versions of the ArXiv preprint. Especially for a researcher at the beginning of their path, it was really enlightening to see the trajectory and scaling based on more and more powerful hypothesis testing.

I recommend everyone to have a look at the evolution of the original paper and the different versions of the ArXiv preprint . Especially for a researcher at the beginning of their path, it was really enlightening to see the trajectory and scaling based on more and more powerful hypothesis testing.

If you are interested in some maths and have an affinity for concentration inequalities, check out Malach et al. (2020). They formally prove the LTH as well as posit an even stronger conjecture: Given a large enough dense network there exists an subnetwork that achieves matching performance without any additional training.

If you are interested in some maths and have an affinity for concentration inequalities, check out Malach et al. (2020) . They formally prove the LTH as well as posit an even stronger conjecture: Given a large enough dense network there exists an subnetwork that achieves matching performance without any additional training .

Checkout this GitHub repository with tons of recent (and not so recent) pruning papers.

Checkout this GitHub repository with tons of recent (and not so recent) pruning papers.

You can find a pdf version of this blog post here. If you use this blog in your scientific work, please cite it as:

You can find a pdf version of this blog post here . If you use this blog in your scientific work, please cite it as:

@article{lange2020_lottery_ticket_hypothesis,

title = "The Lottery Ticket Hypothesis: A Survey",

author = "Lange, Robert Tjarko",

journal = "https://roberttlange.github.io/year-archive/posts/2020/06/lottery-ticket-hypothesis/",

year = "2020",

url = "https://roberttlange.github.io/posts/2020/06/lottery-ticket-hypothesis/"

}Originally published at https://roberttlange.github.io on June 27, 2020.

Originally published at https://roberttlange.github.io on June 27, 2020.

翻译自: https://towardsdatascience.com/the-lottery-ticket-hypothesis-a-survey-d1f0f62f8884

213

213

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?