Vision Processing

When people ask, “What should I use as a GPU?”, most answer “Nvidia”. Why? Because both CUDA and cuDNN are available on it. CUDA (Nvidia's parallel computing platform) and cuDNN (its GPU-accelerated library of deep neural network primitives) speed up deep learning models, and they work only on Nvidia GPUs. If you have a GPU other than Nvidia, you belong to another time…

Until now. A few years ago, Intel released a new kind of chip that has flown under the radar until now: a chip that is both very small and very powerful.

This chip is called a Vision Processing Unit, or VPU. The primary goal of a VPU is to accelerate machine vision algorithms such as convolutional neural networks (CNNs), or even feature detectors and descriptors like SIFT and SURF.

Before I explain what a VPU is, let me tell you a story

On my first day working for MILLA, an autonomous shuttle company, I discovered a shuttle that could drive at up to 30 km/h, quite an improvement over our competitors at the time, whose shuttles drove at 5–8 km/h.

At the time, the shuttle was new and did not have a GPU yet.

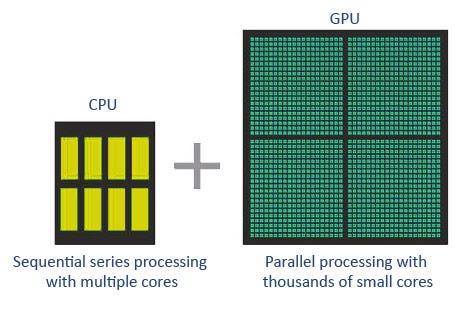

In case you don’t know what a GPU is, here’s a quick picture that explains it well:

A GPU (Graphics Processing Unit) runs many operations in parallel, so the overall work finishes faster.

In a self-driving car, this is extremely useful for computer vision and point cloud processing. GPUs were first built for video games, which need to display many things at the same time. While GPUs are excellent for training deep learning models, they are still not great for deployment, especially on embedded hardware where space, heat, and power are limited.

This is where VPUs come into play. They are designed specifically for vision algorithms and deployment. Deploying machine learning models is still a tricky and complicated process: there is rarely open-source code we can reuse, so we have to get our hands dirty.

Running multiple algorithms at the same time

Once we received the GPU, I had to implement my computer vision algorithms: obstacle detection, obstacle tracking, traffic light detection, lane line detection, drivable area segmentation, feature tracking, pedestrian behavior prediction… There was a lot to do! Each task ran on its own neural network, and each network used GPU memory. It was clear that my NVIDIA GTX 1070 couldn't handle all these tasks at the same time.

After saturating the GPU with 3 or 4 key algorithms, I realized that I still needed to process the point clouds. Point clouds are the output of LiDARs, which can produce millions of points every second. A GPU has a limited amount of dedicated memory, and it can fill up completely with only 1 or 2 neural networks running at the same time.
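
For a sense of scale, here is a back-of-the-envelope estimate of the raw data a single LiDAR produces. The figures are assumptions for illustration, not the specs of our sensors: each point is stored as four 32-bit floats (x, y, z, intensity), and the sensor outputs 1.3 million points per second.

```python
# Back-of-the-envelope LiDAR data rate.
# Assumptions (hypothetical, for illustration only):
#   - each point is stored as 4 float32 values: x, y, z, intensity
#   - the example sensor outputs 1.3 million points per second
BYTES_PER_FLOAT32 = 4
FIELDS_PER_POINT = 4  # x, y, z, intensity


def lidar_bytes_per_second(points_per_second: int, num_lidars: int = 1) -> int:
    """Raw bytes per second produced by `num_lidars` identical sensors."""
    bytes_per_point = FIELDS_PER_POINT * BYTES_PER_FLOAT32  # 16 bytes
    return points_per_second * bytes_per_point * num_lidars


if __name__ == "__main__":
    rate = lidar_bytes_per_second(1_300_000)
    print(f"{rate / 1e6:.1f} MB/s")  # prints "20.8 MB/s"
```

Every extra sensor multiplies this figure, and that is before any network has touched the data.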

One more thing: we had 3 cameras and 3 LiDARs. Redundancy was necessary, and we needed different angles, lenses, and options. This meant that everything had to be done 3 times. I was desperate for a second, third, or fourth GPU.
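
Multiplying the task list by the number of cameras shows how quickly a single card runs out. This sketch counts one network per (task, camera) pair and compares the total with the 8 GB of memory on a GTX 1070. The 1536 MiB per network is a hypothetical placeholder, not a measurement from our models, and the count ignores framework overhead, so the result is a lower bound.

```python
import math

# The GTX 1070 ships with 8 GB of memory, expressed here in MiB.
GTX_1070_MEMORY_MIB = 8 * 1024

# The perception tasks listed earlier, one network each.
TASKS = [
    "obstacle_detection",
    "obstacle_tracking",
    "traffic_light_detection",
    "lane_line_detection",
    "drivable_area_segmentation",
    "feature_tracking",
    "pedestrian_behavior_prediction",
]


def gpus_needed(num_tasks: int, num_cameras: int, mib_per_network: int,
                gpu_memory_mib: int = GTX_1070_MEMORY_MIB) -> int:
    """Minimum GPU count if every (task, camera) pair runs its own network.

    Counts model memory only (no framework overhead or fragmentation),
    so the real number is at least this high.
    """
    total_mib = num_tasks * num_cameras * mib_per_network
    return math.ceil(total_mib / gpu_memory_mib)


if __name__ == "__main__":
    # 1536 MiB per network is a hypothetical placeholder, not a measurement.
    print(gpus_needed(len(TASKS), num_cameras=3, mib_per_network=1536))  # 4
```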

But space in a self-driving car's trunk is limited. Heat is dangerous, and the fans are noisy for passengers. We couldn't let technical difficulties ruin the design and comfort. The reality is that running algorithms on 4 GPUs is sometimes hard and impractical on an embedded device.

So what kept me from eventually jumping out of a window? VPUs. Vision processing units are an emerging type of processor. The difference is that they are 100% dedicated to computer vision, and nothing else.

What does a VPU look like?

That’s it: a USB stick. This is Intel’s answer to Nvidia, and it’s really powerful. Intel also makes bigger products (though not much bigger), all built around the same chip: the Movidius Myriad X.

Architecture

Here’s the high-level architecture of this chip. As you can see, it combines a Neural Compute Engine optimized specifically for neural networks with vision accelerators, imaging accelerators, CPUs, and other hardware, which together make it very powerful and efficient.

The Movidius is designed for convolutional neural networks and image processing operations.
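
To make “convolutional” concrete, here is a minimal pure-Python 2D convolution, the multiply-accumulate (MAC) pattern that CNN accelerators are built around. It sketches the operation itself, not how the Myriad X implements it in hardware; `conv2d_valid` and `conv_layer_macs` are illustrative names.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Single-channel 2D convolution, stride 1, no padding.

    As in most deep learning frameworks, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            # One multiply-accumulate per kernel element.
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def conv_layer_macs(out_h: int, out_w: int, k: int, in_ch: int, out_ch: int) -> int:
    """MACs for one k x k convolution layer with the given output size and channels."""
    return out_h * out_w * k * k * in_ch * out_ch


if __name__ == "__main__":
    # A vertical edge: dark on the left, bright on the right.
    image = [[0, 0, 1, 1] for _ in range(4)]
    kernel = [[-1, 0, 1] for _ in range(3)]
    print(conv2d_valid(image, kernel))  # [[3.0, 3.0], [3.0, 3.0]]
    # One 3x3 layer, 224x224 output, 64 -> 64 channels.
    print(conv_layer_macs(224, 224, 3, 64, 64))  # 1849688064
```

Even one mid-sized layer needs close to 2 billion MACs per frame, which is why dedicating silicon to this single pattern pays off.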

Demo

Intel showed their chip doing pedestrian detection, age estimation, gender classification, face detection, body orientation, and mood estimation, all at over 120 FPS. That is 6 different neural networks running on a tiny device. The same workload couldn't even run on an Nvidia GPU without running into memory issues.
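
The 120 FPS figure translates into a tight time budget. Assuming, hypothetically, that the six networks run one after another on every frame (the demo doesn't say how they were scheduled, and running them in parallel would loosen the budget), each network gets well under 2 ms.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds."""
    return 1000.0 / fps


def per_network_budget_ms(fps: float, num_networks: int) -> float:
    """Per-network budget, assuming the networks run sequentially on each frame."""
    return frame_budget_ms(fps) / num_networks


if __name__ == "__main__":
    print(f"{frame_budget_ms(120):.2f} ms per frame")  # 8.33 ms per frame
    print(f"{per_network_budget_ms(120, 6):.2f} ms per network")  # 1.39 ms per network
```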

What are other great things about VPUs?

It’s all on the edge: there is no interaction with the cloud. That means no network round-trip latency and more privacy.
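
To see why avoiding the cloud matters for a vehicle, here is a quick calculation using the shuttle's 30 km/h top speed from earlier. The 100 ms network round trip is a hypothetical figure, not a measurement.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in meters a vehicle covers while waiting `latency_ms`."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0
    return speed_m_per_s * latency_ms / 1000.0


if __name__ == "__main__":
    # 30 km/h is the shuttle's top speed; 100 ms is a hypothetical round trip.
    print(f"{distance_during_latency_m(30, 100):.2f} m")  # 0.83 m
```

Nearly a meter traveled before each answer comes back is a strong argument for keeping inference on the vehicle.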

It comes with a toolkit and SDK called OpenVINO that converts CNN models trained in TensorFlow or Caffe so they can run on the dedicated Neural Compute Engine.

When running your algorithms on this USB stick, you can completely free the rest of the computer and GPU for other programs such as point cloud processing.

You can stack multiple USB sticks to multiply the computing power as much as you need.
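
One simple way to use several sticks is to hand incoming frames to them in turn. The sketch below shows round-robin scheduling; the device names are hypothetical placeholders, and the ideal-throughput helper assumes perfectly linear scaling, which USB bandwidth or host-side preprocessing may limit in practice.

```python
from itertools import cycle
from typing import Dict, Iterable, List


def assign_round_robin(frame_ids: Iterable[int], devices: List[str]) -> Dict[str, List[int]]:
    """Give each incoming frame to the next device in turn."""
    assignments: Dict[str, List[int]] = {device: [] for device in devices}
    for frame_id, device in zip(frame_ids, cycle(devices)):
        assignments[device].append(frame_id)
    return assignments


def ideal_throughput_fps(single_device_fps: float, num_devices: int) -> float:
    """Upper bound that assumes perfectly linear scaling across devices."""
    return single_device_fps * num_devices


if __name__ == "__main__":
    # "stick_0" and "stick_1" are hypothetical device names.
    print(assign_round_robin(range(6), ["stick_0", "stick_1"]))
    # {'stick_0': [0, 2, 4], 'stick_1': [1, 3, 5]}
```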

How does it work?

Although not all details have been made public, we can still take a practical look under the hood. During a very long Intel webinar on May 14, 2020, I managed to capture this slide:

Decoding and encoding are done using the OpenVINO toolkit; it’s one line of code for each.

Preprocessing is done with OpenCV or other libraries, and it mostly consists of resizing the image and fitting it to the input requirements of your network.
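
Here is a dependency-free sketch of the two typical preprocessing steps: resizing to the network's input size and reordering pixels from height-width-channel (HWC) to channel-first (CHW) layout. In practice you would use OpenCV's `cv2.resize` and a NumPy transpose; the target size and layout here are assumptions, so check what your model actually expects (many models also want a leading batch dimension).

```python
from typing import List

# Image as rows of pixels, each pixel a list of channel values (HWC layout).
Image = List[List[List[float]]]


def resize_nearest(image: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbor resize: each output pixel copies one source pixel."""
    in_h, in_w = len(image), len(image[0])
    return [
        [list(image[y * in_h // out_h][x * in_w // out_w]) for x in range(out_w)]
        for y in range(out_h)
    ]


def hwc_to_chw(image: Image) -> Image:
    """Reorder height x width x channels into channels x height x width."""
    h, w, c = len(image), len(image[0]), len(image[0][0])
    return [[[image[y][x][ch] for x in range(w)] for y in range(h)] for ch in range(c)]


def preprocess(image: Image, out_h: int, out_w: int) -> Image:
    """Resize, then switch to channel-first layout (assumed model input format)."""
    return hwc_to_chw(resize_nearest(image, out_h, out_w))


if __name__ == "__main__":
    # A 2x2 image with 3 channels.
    img = [[[1, 2, 3], [4, 5, 6]], [[7, 8, 9], [10, 11, 12]]]
    chw = preprocess(img, 4, 4)
    print(len(chw), len(chw[0]), len(chw[0][0]))  # 3 4 4
    print(chw[0][0])  # [1, 1, 4, 4]
```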

Inference uses a dedicated function of the toolkit that calls your models trained in TensorFlow or Caffe. It can involve the VPU, CPU, GPU, and FPGA.
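
The four stages chain naturally into a pipeline. This sketch wires them together with stand-in functions; the `Pipeline` class and every stage below are hypothetical placeholders. In a real deployment, decode and encode would be the toolkit calls mentioned above, and `infer` would call your model on the target device.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Frame = List[float]


@dataclass
class Pipeline:
    """Decode -> preprocess -> infer -> encode, with each stage swappable."""
    decode: Callable[[bytes], Frame]
    preprocess: Callable[[Frame], Frame]
    infer: Callable[[Frame], Sequence[float]]
    encode: Callable[[Sequence[float]], str]

    def run(self, packet: bytes) -> str:
        frame = self.decode(packet)
        tensor = self.preprocess(frame)
        outputs = self.infer(tensor)
        return self.encode(outputs)


if __name__ == "__main__":
    # Stand-in stages for illustration only.
    pipeline = Pipeline(
        decode=lambda packet: [float(b) for b in packet],  # bytes -> pixel values
        preprocess=lambda frame: [v / 255.0 for v in frame],  # scale to [0, 1]
        infer=lambda tensor: [sum(tensor) / len(tensor), max(tensor)],  # dummy "model"
        encode=lambda outputs: ",".join(f"{v:.2f}" for v in outputs),
    )
    print(pipeline.run(bytes([0, 255])))  # 0.50,1.00
```

Keeping each stage behind a plain callable makes it easy to swap the inference backend without touching the decoding or preprocessing code.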

We are entering an exciting era. So far, the Intel NCS 2 has been used in drones, small robots, and many IoT applications, and people seem very impressed with it. It’s still very early, but I expect the market to grow a lot in the coming years.

Imagine the possibilities when neither memory nor processing power is a significant obstacle. Every deep learning application we love, in medicine, robotics, or drones, could see its limitations drastically diminish. We would simply lose our minds…

If you have already tried using a VPU or OpenVINO, I’d love to have your feedback!


Below, you will find links to my Autonomous Tech community and website, as well as links to learn more about VPUs.

Go further

Subscribe to the daily emails and learn cutting-edge Computer Vision & Self-Driving Cars

Visit thinkautonomous.ai and get to the leading edge of Autonomous Technologies

Need to learn more?

Get OpenVINO

Learn about Movidius Myriad X VPUs

Get the USB stick, the Intel NCS 2 (Intel Neural Compute Stick 2), for $70

Jeremy Cohen

Editor’s Note: Heartbeat is a contributor-driven online publication and community dedicated to exploring the emerging intersection of mobile app development and machine learning. We’re committed to supporting and inspiring developers and engineers from all walks of life.

Editorially independent, Heartbeat is sponsored and published by Fritz AI, the machine learning platform that helps developers teach devices to see, hear, sense, and think. We pay our contributors, and we don’t sell ads.

If you’d like to contribute, head on over to our call for contributors. You can also sign up to receive our weekly newsletters (Deep Learning Weekly and the Fritz AI Newsletter), join us on Slack, and follow Fritz AI on Twitter for all the latest in mobile machine learning.

Source: https://heartbeat.fritz.ai/vision-processing-units-vpus-6a33f282322e
