Kafka OAuth2

By: Stephen Humphreys, Director, is the Head of Product Engineering for Cachematrix within the Aladdin Product Group at BlackRock.

and

Sam Russak, Analyst, is an engineer on the Cachematrix Product Engineering team within the Aladdin Product Group at BlackRock.

OVERVIEW

Cachematrix is a web-based liquidity management platform white-labeled by 25 large global banks and asset management companies. Its multi-tenant architecture requires strict isolation of data processing, and access control over features and resources, for each white-label client. To achieve this, we need a comprehensive and scalable security solution that places minimal burden on developers. Specifically, we want fine-grained security control over all system and application resources in Kafka; we want these controls to be transparent to developers; and we want them to be managed at deployment time or in production. The out-of-the-box Kafka security implementation is neither comprehensive nor scalable. It is also cumbersome for developers and difficult to configure in a deployment environment. Luckily, Kafka provides hooks for alternative implementations in its security framework, and our library implements the standard Kafka OAuth Security Framework.

Our library provides fine-grained authentication and authorization for Kafka resources in a multi-tenant or single-tenant environment. It can be used either to isolate the data processing of different clients in a multi-tenant application or to isolate one application from another on a shared instance. The solution is transparent to the application code and can be added to existing code with minimal configuration. This relieves developers from writing repetitive code for every resource in their app that requires access control, and defers security setup to DevOps at deployment time or in production (either manually or through CI). We believe that comprehensiveness and a smooth developer experience are critical for the adoption of Kafka as an enterprise-grade platform. We want to open source our library so that other enterprise Kafka developers can use or enhance our solution immediately, or extend it with vendor-specific features for the Kafka community.

Although our implementation uses KeyCloak as the OAuth server, the solution can be adapted to any OAuth 2.0 compliant product in the marketplace. We picked KeyCloak because it is an open source product that is available both inside and outside of BlackRock developer communities. We have chosen to open source this work to benefit the Kafka developer community at large as well. By following our implementation guide, developers will be able to secure their Kafka clusters with our modern, centralized solution.

KEY CONCEPTS

KAFKA SECURITY

Out of the box, Kafka security uses digital certificates for authentication and Kafka's proprietary ACLs for authorization. However, these features are basic and not up to the latest enterprise security standards.

Kafka does provide a SASL-based framework that allows developers to plug in more sophisticated, enterprise-grade security services.

The following diagram shows the out-of-the-box security features and the extension points for custom security.

Learn More about Kafka Security

Our Solution

The purpose of the solution is to improve on the out-of-the-box Kafka security features. Our goals are:

- Modern industry standard: ability to expire and refresh credentials for long-running processes without downtime

- Enterprise grade: support for centralized management and segregation of duties in operation

- DX focused: capability for deployment-time configuration

Architecture

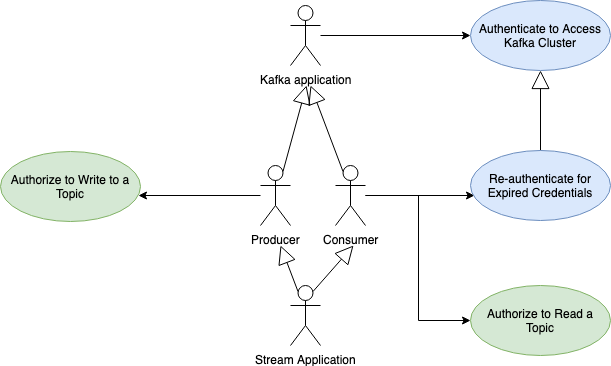

The solution covers the following Kafka application use cases:

The blue shaded use cases are authentication related and the green ones are for authorization.

OAuth meets our design goal for enterprise grade Kafka authentication and authorization. OAuth is a well-accepted industry standard security protocol. It allows third-party applications to obtain a token that provides access to a resource service. The token expires within a defined time limit. When the token is expired, the application must request a new token to stay authenticated.

OAuth servers support several grant types for securing an application. Our solution uses the Client Credentials grant type, which handles service-to-service interactions. An OAuth client is an application that is registered within the OAuth server. We can think of a client as a Kafka application running a producer that is sending data to a topic. First, a client uses a Client Id and a Client Secret to obtain a token from the OAuth server. Next, the client makes its request to the resource and passes that token along with the request. Then, the resource authenticates the request by validating the token with the OAuth server. Once authenticated, the resource can use the scopes in the token to authorize the access. An OAuth scope represents permission to access a resource.
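To make the first step of that flow concrete, here is a minimal sketch of the two pieces a client sends to the token endpoint under the Client Credentials grant (RFC 6749, section 4.4): an HTTP Basic header built from the Client Id and Client Secret, and a form-encoded body. The class name, client id, and secret are placeholders chosen for illustration and are not taken from the library; the sketch also skips the HTTP call itself and assumes Java 11 or later.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Illustrative helper (hypothetical name): builds the parts of a Client Credentials token request. */
final class ClientCredentialsRequest {

    /** Value for the Authorization header: "Basic " + base64(clientId:clientSecret). */
    static String basicAuthHeader(String clientId, String clientSecret) {
        String pair = clientId + ":" + clientSecret;
        return "Basic " + Base64.getEncoder()
                .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    /** Form-encoded request body; the scope parameter is optional in the specification. */
    static String formBody(String scope) {
        String body = "grant_type=client_credentials";
        if (scope != null && !scope.isEmpty()) {
            body += "&scope=" + URLEncoder.encode(scope, StandardCharsets.UTF_8);
        }
        return body;
    }

    public static void main(String[] args) {
        // Placeholder credentials; real values come from the client registered in the OAuth server.
        System.out.println(basicAuthHeader("example-client", "example-secret"));
        System.out.println(formBody("urn:kafka:topic:test:read"));
    }
}
```

The server's JSON response carries the access token and its lifetime, which the client then presents to the broker.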

In the diagram below, you can see a basic flow of how an OAuth client and an OAuth resource would interact with an OAuth server.

Our solution is a library built on the SASL framework that Kafka provides for custom authentication implementations. The library uses the SASL OAUTHBEARER mechanism for the OAuth workflow and implements the Kafka SASL interfaces.

For authorization, the library overrides the Authorizer to use OAuth scopes. Once a token is authenticated, it will be forwarded to the authorizer to check the permissions granted to that token. This allows the solution to combine authentication and authorization into a single workflow in a centralized OAuth server. The following diagram describes how our solution implements the SASL framework.

The sequence diagram below describes the OAuth flow for a Kafka producer application.

Source code for the library is available at https://github.com/kafka-security/oauth

Implementing Kafka OAuth with Our Solution

Implement Authentication

OAuth Server Configuration

1. Define an OAuth client in KeyCloak for each of the Kafka broker, producer, and consumer applications, using the Client Credentials grant type (see the KeyCloak Guide).

2. Create an OAuth properties file for each application (broker, producer, and consumer).

Broker Configuration

1. Create a broker-configurations.properties file to hold the broker's OAuth configuration (the properties file described above).

2. Edit the server.properties file located in the Kafka config directory (kafka/config/server.properties). Add the following to the bottom of the properties file.

The updated server.properties file now configures our Kafka broker to use the SASL/OAUTHBEARER protocol when communicating with producers and consumers. We have also told Kafka to use our custom OAuthAuthenticateLoginCallbackHandler and OAuthAuthenticateValidatorCallbackHandler classes. These classes use the OAuth properties file that we configured for our broker client to request and validate tokens against KeyCloak. The connections.max.reauth.ms field specifies the maximum amount of time before a client is required to re-authenticate itself. The default value for this field is 0, which means no re-authentication, so it must be set to a value greater than 0. Kafka will use the token's lifetime if it is shorter than the time set for this property. You can therefore either set the property to a value larger than your token's lifetime and let Kafka use the token until it expires, or set it to a value shorter than the token's lifetime so that clients re-authenticate multiple times per session.
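The interaction between the token lifetime and connections.max.reauth.ms can be summarized in a few lines. The sketch below is only an illustration of the rule described above, not Kafka's internal code; the class and method names are hypothetical.

```java
import java.time.Duration;
import java.util.Optional;

/** Illustrative model (hypothetical name) of how long a session lasts before re-authentication. */
final class ReauthWindow {

    /**
     * Returns the effective session lifetime, or an empty Optional when
     * connections.max.reauth.ms is 0 and re-authentication is therefore disabled.
     */
    static Optional<Duration> effectiveSessionLifetime(Duration tokenRemaining,
                                                       long connectionsMaxReauthMs) {
        if (connectionsMaxReauthMs <= 0) {
            return Optional.empty();
        }
        Duration configured = Duration.ofMillis(connectionsMaxReauthMs);
        // The shorter of the two limits wins.
        return Optional.of(tokenRemaining.compareTo(configured) < 0 ? tokenRemaining : configured);
    }

    public static void main(String[] args) {
        // A 5 minute token with a 1 hour setting: the token lifetime applies.
        System.out.println(effectiveSessionLifetime(Duration.ofMinutes(5), 3_600_000L));
        // A 1 hour token with a 10 minute setting: the setting applies.
        System.out.println(effectiveSessionLifetime(Duration.ofHours(1), 600_000L));
    }
}
```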

3. Create a Java Authentication and Authorization Service (JAAS) configuration file, kafka_server_jaas.conf. Kafka uses the JAAS file for all SASL configuration, so we will configure ours to use the OAuth bearer login module. The contents of the file are specified below.

4. Move all required files into the Kafka directory. The configuration and property files will be moved into Kafka’s config directory, and the Kafka OAuth library JAR will need to be moved to Kafka’s libs directory.

5. Configure the environment to run the broker.

Note: you will need to run the broker, producer, and consumer in separate shells so that you do not overwrite the KAFKA_OAUTH_SERVER_PROP_FILE variable with a different client's information.
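To show why each process needs its own value of the variable, here is a rough sketch of how a component might locate and load its OAuth properties file from KAFKA_OAUTH_SERVER_PROP_FILE. It is an assumption about the general approach, not the library's actual loading code; the class name is hypothetical and Java 11 or later is assumed.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Properties;

/** Illustrative loader (hypothetical name) for the per-client OAuth properties file. */
final class OAuthPropertiesLoader {

    static final String ENV_VAR = "KAFKA_OAUTH_SERVER_PROP_FILE";

    /** Reads a standard Java properties file from the given path. */
    static Properties load(Path file) throws IOException {
        Properties props = new Properties();
        try (InputStream in = Files.newInputStream(file)) {
            props.load(in);
        }
        return props;
    }

    /** Resolves the file from the environment; fails fast when the variable is missing. */
    static Properties loadFromEnvironment() throws IOException {
        String location = System.getenv(ENV_VAR);
        if (location == null || location.isBlank()) {
            throw new IllegalStateException(ENV_VAR + " is not set");
        }
        return load(Path.of(location));
    }
}
```

Because the variable is read from the process environment, two processes started from the same shell would see the same file, which is why separate shells are needed.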

Producer/Consumer Configuration

1. Define the OAuth properties file described in the OAuth Server Configuration section, oauth-configuration.properties. Be sure to place the appropriate client information into each file.

2. Add the libkafka.oauthbearer dependency to the project's pom.xml file.

3. Add the following configuration to Producer/Consumer Java source code.

4. Set the KAFKA_OAUTH_SERVER_PROP_FILE environment variable for both clients.
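As a rough illustration of the client-side configuration in step 3, the sketch below builds the SASL/OAUTHBEARER properties that a producer or consumer would receive. The property keys and the OAuthBearerLoginModule class are standard Kafka client settings. The package of the callback handler is a placeholder, the choice of SASL_PLAINTEXT is an assumption for a local setup (SASL_SSL is the usual choice in production), and the class name OAuthClientConfig is hypothetical; check the library's repository for the exact values.

```java
import java.util.Properties;

/** Illustrative builder (hypothetical name) for SASL/OAUTHBEARER client properties. */
final class OAuthClientConfig {

    // Placeholder package: the real fully qualified class name is defined by the library.
    static final String LOGIN_HANDLER =
            "com.example.kafka.oauth.OAuthAuthenticateLoginCallbackHandler";

    static Properties saslOAuthProperties(String bootstrapServers) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Assumption: no TLS in this local example.
        props.put("security.protocol", "SASL_PLAINTEXT");
        props.put("sasl.mechanism", "OAUTHBEARER");
        props.put("sasl.jaas.config",
                "org.apache.kafka.common.security.oauthbearer.OAuthBearerLoginModule required;");
        // Delegates token retrieval to the custom login callback handler.
        props.put("sasl.login.callback.handler.class", LOGIN_HANDLER);
        return props;
    }
}
```

These properties would be merged with the usual serializer or deserializer settings before being passed to a KafkaProducer or KafkaConsumer constructor.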

Authorization

1. Define Client Scopes

To authorize a client to perform a specific operation, we need to create scopes in the OAuth server. Client scopes are defined using the Uniform Resource Name (URN) format. We use this format because there are several pieces of information we need to parse out of the scope: the type of resource being acted on, the name of that resource, and the operation being performed. Please see the Kafka documentation for valid combinations of resource types and operations.

For this example, we will be using a topic called "test" and a consumer group called "foo". Create these scopes in the OAuth server and then add them to their designated clients.
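The scope format can be illustrated with a small parser. The layout urn:kafka:&lt;resource type&gt;:&lt;resource name&gt;:&lt;operation&gt; is inferred from the scope urn:kafka:topic:test:read used in this article; the group scope in the usage example is an assumption by analogy, and the class name KafkaScope is hypothetical. The sketch also assumes resource names contain no colon.

```java
/** Illustrative value type (hypothetical name) for a scope such as urn:kafka:topic:test:read. */
final class KafkaScope {

    final String resourceType;
    final String resourceName;
    final String operation;

    private KafkaScope(String resourceType, String resourceName, String operation) {
        this.resourceType = resourceType;
        this.resourceName = resourceName;
        this.operation = operation;
    }

    /** Splits the URN into its parts and rejects anything that does not fit the layout. */
    static KafkaScope parse(String scope) {
        String[] parts = scope.split(":", -1);
        boolean wellFormed = parts.length == 5
                && parts[0].equals("urn")
                && parts[1].equals("kafka")
                && !parts[2].isEmpty()
                && !parts[3].isEmpty()
                && !parts[4].isEmpty();
        if (!wellFormed) {
            throw new IllegalArgumentException("Not a Kafka scope: " + scope);
        }
        return new KafkaScope(parts[2], parts[3], parts[4]);
    }

    public static void main(String[] args) {
        KafkaScope topicRead = KafkaScope.parse("urn:kafka:topic:test:read");
        // Assumed by analogy: a scope for the consumer group "foo".
        KafkaScope groupRead = KafkaScope.parse("urn:kafka:group:foo:read");
        System.out.println(topicRead.resourceType + " " + topicRead.resourceName + " " + topicRead.operation);
        System.out.println(groupRead.resourceType + " " + groupRead.resourceName + " " + groupRead.operation);
    }
}
```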

2. Broker Configuration

This step lets the Kafka broker know that we want to use our custom authorization. Edit the server.properties file and add the fields specified below. Be sure the server.properties file is located in the kafka/config/ directory after editing. The first line tells Kafka to use our CustomAuthorizer class from libkafka.oauthbearer-1.0.0.jar. This class parses the scope information out of the token and determines whether the scopes match the resource and operation. The second line tells Kafka to use our CustomPrincipalBuilder class, which allows us to pass the token information to the CustomAuthorizer class. The standard Kafka Principal does not contain this information, so we must override it to successfully authorize a client.
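The matching step can be pictured as a simple set lookup. The sketch below is not the library's CustomAuthorizer; it is a simplified stand-in, with a hypothetical class name, that assumes scopes follow the urn:kafka:&lt;resource type&gt;:&lt;resource name&gt;:&lt;operation&gt; layout with lowercase type and operation, and that the broker supplies those two values in upper case (as Kafka's enum names are written).

```java
import java.util.Locale;
import java.util.Set;

/** Simplified stand-in (hypothetical name) for scope-based authorization. */
final class ScopeAuthorizer {

    /** Returns true when the token carries the exact scope required for the request. */
    static boolean isAuthorized(Set<String> tokenScopes,
                                String resourceType,
                                String resourceName,
                                String operation) {
        // Resource names are case sensitive, so only the type and operation are normalized.
        String required = "urn:kafka:"
                + resourceType.toLowerCase(Locale.ROOT) + ":"
                + resourceName + ":"
                + operation.toLowerCase(Locale.ROOT);
        return tokenScopes.contains(required);
    }

    public static void main(String[] args) {
        Set<String> scopes = Set.of("urn:kafka:topic:test:read");
        System.out.println(isAuthorized(scopes, "TOPIC", "test", "READ"));
        System.out.println(isAuthorized(scopes, "TOPIC", "test", "WRITE"));
    }
}
```

A production authorizer would also need to decide how to treat super users, wildcard resources, and operations implied by other operations; those are outside the scope of this sketch.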

Alternative for Step 1: ACL Command Line Interface

An optional add-on to this library is our customized CLI for adding scopes to and removing scopes from KeyCloak clients. This tool takes advantage of the KeyCloak Admin API to save you the time of interacting with the KeyCloak UI. The first thing you will need to do is create an Admin Service Client within KeyCloak. Next, you will need to override the Kafka ACL script to run our custom CLI tool. Go to Kafka's bin directory and edit the kafka-acls.sh script (NOTE: Windows users will need to go into the windows folder and edit the kafka-acls.bat script). Change the command to use the following Java class.

Save the file, and you are now set to use the new KeyCloak CLI. Below is an example command to add the scope "urn:kafka:topic:test:read" to the kafka-broker client.

Summary

We set out to solve the problem of authentication and authorization within Kafka, and in doing so created a fully functional library that implements the OAuth 2.0 protocol. Designed around the use of a KeyCloak OAuth server, we have created working code that combines Kafka’s SASL OAUTHBEARER and ACL mechanisms into a single workflow and expanded upon them. For more details on implementing our solution please follow the Kafka guide in our repository, Kafka OAuth Config. By following our implementation guide, developers will be able to successfully secure their Kafka clusters with our modern, centralized solution.

Originally published at http://rockthecode.io on May 8, 2020

Translated from: https://medium.com/blackrock-engineering/utilizing-oauth-for-kafka-security-5c1da9f3d3d
