Numerical Computation

1 Overflow and Underflow

overflow: when numbers with large magnitude are approximated as ∞ or −∞.

underflow: when numbers near zero are rounded to zero

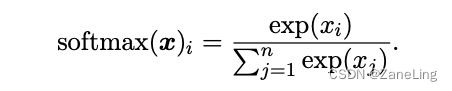

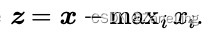

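Stabilizing the softmax function against underflow and overflow:

use softmax(z) instead of softmax(x), where $z = x - \max_i x_i$. Softmax is unchanged when the same scalar is subtracted from every input, so both give the same result.

Subtracting $\max_i x_i$ makes the largest argument to exp() exactly 0, so exp() cannot overflow.

At least one term in the denominator equals $\exp(0) = 1$, so the denominator cannot underflow to zero and cause a division by zero.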
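A minimal sketch of the max-subtraction trick in plain Python (standard library only). The names `naive_softmax` and `stable_softmax` are hypothetical, not from any library. Python's `math.exp` raises `OverflowError` rather than returning ∞, so overflow shows up as an exception, while underflow silently returns 0.0:

```python
import math
from typing import List


def naive_softmax(x: List[float]) -> List[float]:
    # Direct formula exp(x_i) / sum_j exp(x_j).
    # math.exp raises OverflowError for arguments above roughly 709.8,
    # and returns 0.0 (underflow) for very negative arguments.
    exps = [math.exp(v) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def stable_softmax(x: List[float]) -> List[float]:
    # Shift every input by the maximum: z = x - max_i x_i, softmax(z) == softmax(x).
    m = max(x)
    exps = [math.exp(v - m) for v in x]  # largest argument is exactly 0
    total = sum(exps)  # contains exp(0) = 1, so total >= 1
    return [e / total for e in exps]


if __name__ == "__main__":
    big = [1000.0, 1001.0, 1002.0]
    try:
        naive_softmax(big)
    except OverflowError:
        print("naive_softmax: overflow")
    print(stable_softmax(big))

    tiny = [-1000.0, -1000.0]
    try:
        naive_softmax(tiny)
    except ZeroDivisionError:
        print("naive_softmax: denominator underflowed to 0")
    print(stable_softmax(tiny))  # [0.5, 0.5]
```

The naive version fails in both directions, while the shifted version returns a valid probability vector in both cases.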

2 Poor Conditioning

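conditioning: how rapidly a function changes with respect to small changes in its input. Functions that change rapidly when their inputs are perturbed slightly are problematic, because rounding errors in the inputs can produce large changes in the output.

condition number: for $f(x) = A^{-1}x$, where $A \in \mathbb{R}^{n \times n}$ has an eigenvalue decomposition, the condition number is $\max_{i,j} \left| \frac{\lambda_i}{\lambda_j} \right|$, the ratio of the largest to the smallest eigenvalue magnitude. When this number is large, matrix inversion is particularly sensitive to error in the input.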

3 Gradient-Based Optimization

most optimization algorithms are phrased as minimization; maximizing f(x) is done by minimizing −f(x)

objective function / criterion: the function we want to minimize or maximize

cost function / loss function / error function: other names for the objective function when it is being minimized

gradient descent: reduce f by moving x in small steps in the direction opposite to the sign of the derivative
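A minimal 1-D sketch, assuming a hand-picked objective $f(x) = (x-3)^2$ with known derivative $f'(x) = 2(x-3)$ and a fixed step size of 0.1 (both illustrative choices). When $f'(x) > 0$ the update decreases x; when $f'(x) < 0$ it increases x:

```python
from typing import Callable


def gradient_descent_1d(
    df: Callable[[float], float], x0: float, step: float = 0.1, num_steps: int = 100
) -> float:
    # Repeatedly move x a small step opposite to the sign of the derivative.
    x = x0
    for _ in range(num_steps):
        x = x - step * df(x)
    return x


if __name__ == "__main__":
    # Illustrative objective: f(x) = (x - 3)^2, derivative f'(x) = 2 (x - 3)
    df = lambda x: 2.0 * (x - 3.0)
    print(gradient_descent_1d(df, x0=0.0))   # approaches the minimizer x = 3 from below
    print(gradient_descent_1d(df, x0=10.0))  # approaches x = 3 from above
```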

critical points / stationary points: points where the derivative f'(x) equals 0

a critical point falls into one of three classes: local minimum / local maximum / saddle point
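A crude sketch of the three classes in 1-D, comparing f at a known critical point with its values a small distance h to either side. The function name and the choice of h are assumptions for illustration; this heuristic does not handle flat regions (e.g. a constant function) correctly:

```python
from typing import Callable


def classify_critical_point(f: Callable[[float], float], x: float, h: float = 1e-3) -> str:
    # Assumes x is already known to be a critical point (f'(x) = 0).
    left, center, right = f(x - h), f(x), f(x + h)
    if left > center and right > center:
        return "local minimum"  # f rises on both sides
    if left < center and right < center:
        return "local maximum"  # f falls on both sides
    return "saddle point"  # f rises on one side and falls on the other


if __name__ == "__main__":
    print(classify_critical_point(lambda x: x * x, 0.0))   # local minimum
    print(classify_critical_point(lambda x: -x * x, 0.0))  # local maximum
    print(classify_critical_point(lambda x: x ** 3, 0.0))  # saddle point
```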

global minimum: a point that obtains the absolute lowest value of f(x)

For functions with multiple inputs:

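partial derivative $\frac{\partial}{\partial x_i} f(x)$: measures how f changes as only the variable $x_i$ increases at point x

gradient $\nabla_x f(x)$: the vector containing all of the partial derivatives; element i is the partial derivative of f with respect to $x_i$. At critical points, every element of the gradient is zero.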
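A sketch of the gradient as a vector of partial derivatives, approximated numerically: each entry perturbs only one coordinate $x_i$ and holds the others fixed. The central-difference scheme, the step h, and the example function are illustrative choices:

```python
from typing import Callable, List

Vector = List[float]


def numerical_gradient(f: Callable[[Vector], float], x: Vector, h: float = 1e-6) -> Vector:
    # Element i approximates the partial derivative df/dx_i by a central
    # difference: perturb only x_i and keep every other coordinate fixed.
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += h
        x_minus[i] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad


if __name__ == "__main__":
    # Illustrative f(x) = x_0^2 + 3 x_0 x_1, exact gradient (2 x_0 + 3 x_1, 3 x_0)
    f = lambda v: v[0] ** 2 + 3 * v[0] * v[1]
    print(numerical_gradient(f, [1.0, 2.0]))  # close to [8.0, 3.0]
```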

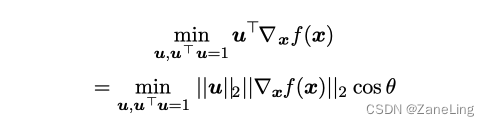

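directional derivative in direction u (a unit vector): the slope of the function f in direction u, i.e. the derivative of $f(x + \alpha u)$ with respect to $\alpha$ at $\alpha = 0$, which equals $u^\top \nabla_x f(x)$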
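A quick numerical check that the slope of $f(x + \alpha u)$ at $\alpha = 0$ equals $u^\top \nabla_x f(x)$. The function f, its hand-derived gradient, and the direction u are illustrative assumptions:

```python
import math
from typing import List

Vector = List[float]


def f(x: Vector) -> float:
    # Illustrative function: f(x) = x_0^2 + 3 x_0 x_1
    return x[0] ** 2 + 3 * x[0] * x[1]


def grad_f(x: Vector) -> Vector:
    # Hand-derived gradient of the illustrative f above
    return [2 * x[0] + 3 * x[1], 3 * x[0]]


def directional_derivative(x: Vector, u: Vector) -> float:
    # u^T grad f(x); u is assumed to be a unit vector
    return sum(ui * gi for ui, gi in zip(u, grad_f(x)))


def slope_along(x: Vector, u: Vector, alpha: float = 1e-6) -> float:
    # d/d(alpha) f(x + alpha u) at alpha = 0, via a central difference
    plus = [xi + alpha * ui for xi, ui in zip(x, u)]
    minus = [xi - alpha * ui for xi, ui in zip(x, u)]
    return (f(plus) - f(minus)) / (2 * alpha)


if __name__ == "__main__":
    x = [1.0, 2.0]
    u = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # unit vector at 45 degrees
    print(directional_derivative(x, u), slope_along(x, u))  # both about 11 / sqrt(2)
```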

to minimize f, we want the direction in which f decreases the fastest:

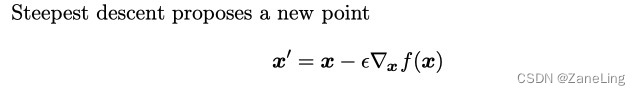

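For a unit vector u, $u^\top \nabla_x f(x) = \|\nabla_x f(x)\|_2 \cos\theta$, where $\theta$ is the angle between u and the gradient, so the directional derivative is smallest when u points opposite to the gradient ($\theta = \pi$). Moving in the direction of the negative gradient is the method of steepest descent / gradient descent, which proposes the new point

$$x' = x - \epsilon \nabla_x f(x)$$

where $\epsilon$ is the learning rate, a positive scalar determining the size of the step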

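popular approaches to choosing $\epsilon$:

set it to a small constant, or use a line search: evaluate $f(x - \epsilon \nabla_x f(x))$ for several values of $\epsilon$ and choose the one that gives the smallest objective value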
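A sketch comparing the two ways of choosing $\epsilon$ in the update $x' = x - \epsilon \nabla_x f(x)$. The quadratic objective, the starting point, and the candidate list are illustrative assumptions; the line search here is the simple "try several values, keep the best" variant, not an exact minimization along the ray:

```python
from typing import List, Sequence

Vector = List[float]


def f(x: Vector) -> float:
    # Illustrative quadratic: f(x) = 0.5 * (x_0^2 + 10 x_1^2), minimum at the origin
    return 0.5 * (x[0] ** 2 + 10 * x[1] ** 2)


def grad_f(x: Vector) -> Vector:
    return [x[0], 10 * x[1]]


def step(x: Vector, g: Vector, eps: float) -> Vector:
    # Steepest-descent update x' = x - eps * grad f(x)
    return [xi - eps * gi for xi, gi in zip(x, g)]


def descend_fixed(x: Vector, eps: float, num_steps: int) -> Vector:
    # Strategy 1: a small constant learning rate
    for _ in range(num_steps):
        x = step(x, grad_f(x), eps)
    return x


def descend_line_search(x: Vector, candidates: Sequence[float], num_steps: int) -> Vector:
    # Strategy 2: line search; try every candidate eps, keep the point with the smallest f
    for _ in range(num_steps):
        g = grad_f(x)
        x = min((step(x, g, eps) for eps in candidates), key=f)
    return x


if __name__ == "__main__":
    x0 = [5.0, 1.0]
    candidates = [1.0, 0.5, 0.2, 0.1, 0.05, 0.01]
    print(f(x0))
    print(f(descend_fixed(x0, eps=0.05, num_steps=20)))
    print(f(descend_line_search(x0, candidates, num_steps=20)))
```

For this quadratic, the fixed step must stay below 0.2: each update multiplies $x_1$ by $1 - 10\epsilon$, which grows in magnitude once $\epsilon > 0.2$. The line search sidesteps that choice at the cost of extra function evaluations per step.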

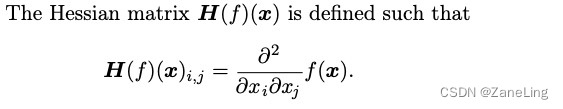

4 Beyond the Gradient: Jacobian and Hessian Matrices

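Jacobian matrix: for a function $f: \mathbb{R}^m \to \mathbb{R}^n$, the matrix $J \in \mathbb{R}^{n \times m}$ containing all partial derivatives of f, defined by $J_{i,j} = \frac{\partial}{\partial x_j} f(x)_i$

Hessian matrix: the matrix of second derivatives of a function $f: \mathbb{R}^n \to \mathbb{R}$,

$$H(f)(x)_{i,j} = \frac{\partial^2}{\partial x_i \partial x_j} f(x)$$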
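A sketch of a numerical Jacobian, assuming central differences and an illustrative vector-valued function $f: \mathbb{R}^2 \to \mathbb{R}^3$. Row i holds the partial derivatives of output $f(x)_i$; column j varies only input $x_j$:

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def numerical_jacobian(f: Callable[[Vector], Vector], x: Vector, h: float = 1e-6) -> Matrix:
    # J[i][j] approximates d f(x)_i / d x_j by central differences.
    # J has len(f(x)) rows (outputs) and len(x) columns (inputs).
    n_out = len(f(x))
    jac = [[0.0] * len(x) for _ in range(n_out)]
    for j in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[j] += h
        x_minus[j] -= h
        f_plus, f_minus = f(x_plus), f(x_minus)
        for i in range(n_out):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return jac


if __name__ == "__main__":
    # Illustrative f(x) = (x_0 x_1, sin x_0, x_1^2)
    # Exact Jacobian: [[x_1, x_0], [cos x_0, 0], [0, 2 x_1]]
    f = lambda x: [x[0] * x[1], math.sin(x[0]), x[1] ** 2]
    for row in numerical_jacobian(f, [0.5, 2.0]):
        print(row)
```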

Equivalently, the Hessian is the Jacobian of the gradient.

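Anywhere that the second partial derivatives are continuous, the differential operators are commutative, $\frac{\partial^2}{\partial x_i \partial x_j} f(x) = \frac{\partial^2}{\partial x_j \partial x_i} f(x)$, so $H_{i,j} = H_{j,i}$ and the Hessian matrix is symmetric at such points.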
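Both statements can be checked numerically. A sketch, assuming the illustrative function $f(x) = x_0^2 x_1 + x_1^3$ with its hand-derived gradient: the Hessian is built column by column as the Jacobian of the gradient. The entries H[0][1] and H[1][0] come from differentiating different gradient components, yet agree because the second partial derivatives of this polynomial are continuous:

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def grad_f(x: Vector) -> Vector:
    # Hand-derived gradient of the illustrative f(x) = x_0^2 x_1 + x_1^3
    return [2 * x[0] * x[1], x[0] ** 2 + 3 * x[1] ** 2]


def hessian_as_jacobian_of_gradient(x: Vector, h: float = 1e-6) -> Matrix:
    # H[i][j] = d/dx_j of (grad f)_i, by central differences on the gradient
    n = len(x)
    hess = [[0.0] * n for _ in range(n)]
    for j in range(n):
        x_plus, x_minus = list(x), list(x)
        x_plus[j] += h
        x_minus[j] -= h
        g_plus, g_minus = grad_f(x_plus), grad_f(x_minus)
        for i in range(n):
            hess[i][j] = (g_plus[i] - g_minus[i]) / (2 * h)
    return hess


if __name__ == "__main__":
    # Exact Hessian: [[2 x_1, 2 x_0], [2 x_0, 6 x_1]] -> [[4, 2], [2, 12]] at (1, 2)
    H = hessian_as_jacobian_of_gradient([1.0, 2.0])
    print(H)
    print(H[0][1], H[1][0])  # off-diagonal entries agree: the Hessian is symmetric
```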
