提速了5倍,因为cuda加速使用了异步非默认流

solution已经完成效果

正常的用一张卡

答案

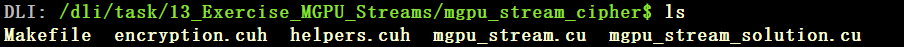

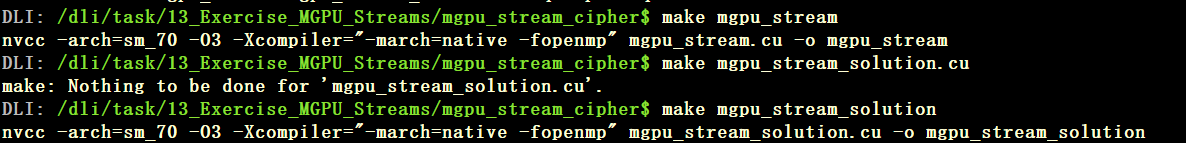

makefile 文件

CUDACXX=nvcc

CUDACXXFLAGS=-arch=sm_70 -O3

CXXFLAGS=-march=native -fopenmp

NSYS=nsys profile

NSYSFLAGS=--stats=true --force-overwrite=true

all: mgpu_stream

mgpu_stream: mgpu_stream.cu

$(CUDACXX) $(CUDACXXFLAGS) -Xcompiler="$(CXXFLAGS)" mgpu_stream.cu -o mgpu_stream

mgpu_stream_solution: mgpu_stream_solution.cu

$(CUDACXX) $(CUDACXXFLAGS) -Xcompiler="$(CXXFLAGS)" mgpu_stream_solution.cu -o mgpu_stream_solution

profile: mgpu_stream

$(NSYS) $(NSYSFLAGS) -o mgpu-stream-report ./mgpu_stream

profile_solution: mgpu_stream_solution

$(NSYS) $(NSYSFLAGS) -o mgpu-stream-solution-report ./mgpu_stream_solution

clean:

rm -f mgpu_stream mgpu_stream_solution *.qdrep *.sqlite

mgpu_solution.cu

#include <cstdint>

#include <iostream>

#include "helpers.cuh"

#include "encryption.cuh"

void encrypt_cpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters, bool parallel=true) {

#pragma omp parallel for if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

data[entry] = permute64(entry, num_iters);

}

__global__

void decrypt_gpu(uint64_t * data, uint64_t num_entries,

uint64_t num_iters) {

const uint64_t thrdID = blockIdx.x*blockDim.x+threadIdx.x;

const uint64_t stride = blockDim.x*gridDim.x;

for (uint64_t entry = thrdID; entry < num_entries; entry += stride)

data[entry] = unpermute64(data[entry], num_iters);

}

bool check_result_cpu(uint64_t * data, uint64_t num_entries,

bool parallel=true) {

uint64_t counter = 0;

#pragma omp parallel for reduction(+: counter) if (parallel)

for (uint64_t entry = 0; entry < num_entries; entry++)

counter += data[entry] == entry;

return counter == num_entries;

}

int main (int argc, char * argv[]) {

Timer timer;

Timer overall;

const uint64_t num_entries = 1UL << 26;

const uint64_t num_iters = 1UL << 10;

const bool openmp = true;

const uint64_t num_gpus = 4;

const uint64_t num_streams = 32;

// Each stream needs num_entries/num_gpus/num_streams data. We use round up division for

// reasons previously discussed.

const uint64_t stream_chunk_size = sdiv(sdiv(num_entries, num_gpus), num_streams);

// It will be helpful to also to have handy the chunk size for an entire GPU.

const uint64_t gpu_chunk_size = stream_chunk_size*num_streams;

// 2D array containing number of streams for each GPU.

cudaStream_t streams[num_gpus][num_streams];

timer.start();

// For each available GPU device...

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

// ...set as active device...

cudaSetDevice(gpu);

for (uint64_t stream = 0; stream < num_streams; stream++)

// ...create and store its number of streams.

cudaStreamCreate(&streams[gpu][stream]);

}

timer.stop("create streams");

check_last_error();

timer.start();

// Store GPU data pointers in an array.

uint64_t * data_cpu, * data_gpu[num_gpus];

cudaMallocHost(&data_cpu, sizeof(uint64_t)*num_entries);

// For each gpu device...

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

// ...set device as active...

cudaSetDevice(gpu);

// ...use a GPU chunk's worth of data to calculate indices and width...

const uint64_t lower = gpu_chunk_size*gpu;

const uint64_t upper = min(lower+gpu_chunk_size, num_entries);

const uint64_t width = upper-lower;

// ...allocate data.

cudaMalloc(&data_gpu[gpu], sizeof(uint64_t)*width);

}

timer.stop("allocate memory");

check_last_error();

timer.start();

encrypt_cpu(data_cpu, num_entries, num_iters, openmp);

timer.stop("encrypt data on CPU");

overall.start();

timer.start();

// For each gpu...

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

// ...set device as active.

cudaSetDevice(gpu);

// For each stream (on each GPU)...

for (uint64_t stream = 0; stream < num_streams; stream++) {

// Calculate index offset for this stream's chunk of data within the GPU's chunk of data...

const uint64_t stream_offset = stream_chunk_size*stream;

// ...get the lower index within all data, and width, of this stream's data chunk...

const uint64_t lower = gpu_chunk_size*gpu+stream_offset;

const uint64_t upper = min(lower+stream_chunk_size, num_entries);

const uint64_t width = upper-lower;

// ...perform async HtoD memory copy...

cudaMemcpyAsync(data_gpu[gpu]+stream_offset, // This stream's data within this GPU's data.

data_cpu+lower, // This stream's data within all CPU data.

sizeof(uint64_t)*width, // This stream's chunk size worth of data.

cudaMemcpyHostToDevice,

streams[gpu][stream]); // Using this stream for this GPU.

decrypt_gpu<<<80*32, 64, 0, streams[gpu][stream]>>> // Using this stream for this GPU.

(data_gpu[gpu]+stream_offset, // This stream's data within this GPU's data.

width, // This stream's chunk size worth of data.

num_iters);

cudaMemcpyAsync(data_cpu+lower, // This stream's data within all CPU data.

data_gpu[gpu]+stream_offset, // This stream's data within this GPU's data.

sizeof(uint64_t)*width,

cudaMemcpyDeviceToHost,

streams[gpu][stream]); // Using this stream for this GPU.

}

}

// Synchronize streams to block on memory transfer before checking on host.

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

cudaSetDevice(gpu);

for (uint64_t stream = 0; stream < num_streams; stream++) {

cudaStreamSynchronize(streams[gpu][stream]);

}

}

timer.stop("asynchronous H2D -> kernel -> D2H multiGPU");

overall.stop("total time on GPU");

check_last_error();

timer.start();

const bool success = check_result_cpu(data_cpu, num_entries, openmp);

std::cout << "STATUS: test "

<< ( success ? "passed" : "failed")

<< std::endl;

timer.stop("checking result on CPU");

timer.start();

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

cudaSetDevice(gpu);

for (uint64_t stream = 0; stream < num_streams; stream++) {

cudaStreamDestroy(streams[gpu][stream]);

}

}

timer.stop("destroy streams");

check_last_error();

timer.start();

cudaFreeHost(data_cpu);

for (uint64_t gpu = 0; gpu < num_gpus; gpu++) {

cudaSetDevice(gpu);

cudaFree(data_gpu[gpu]);

}

timer.stop("free memory");

check_last_error();

}

353

353

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?