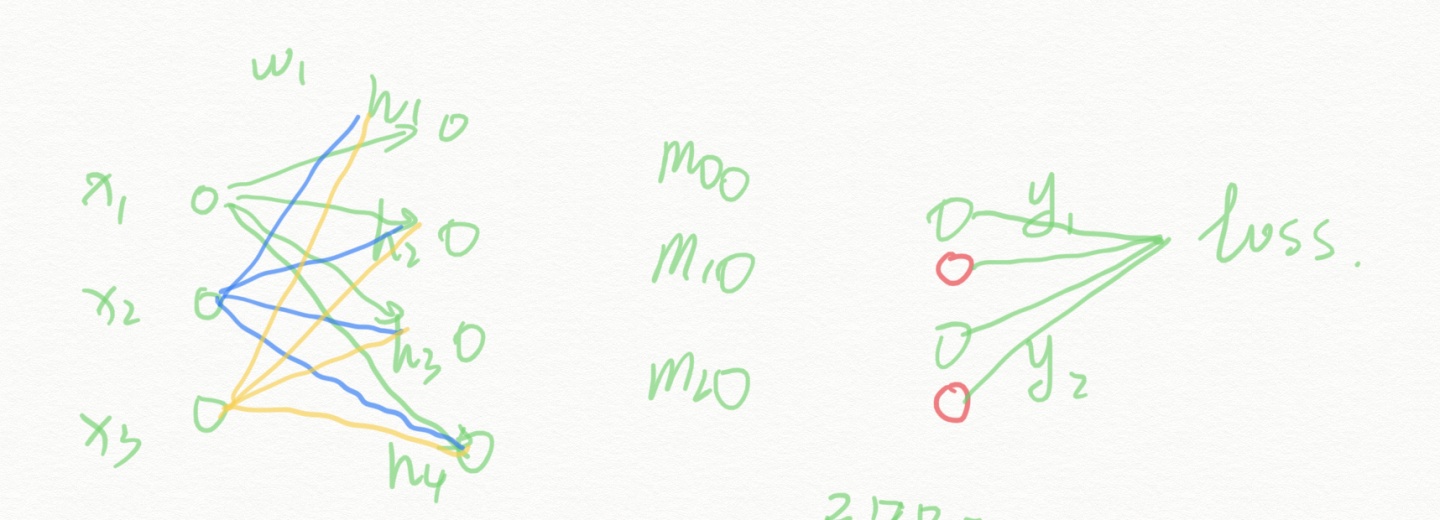

Model structure:

The 4-layer BP (backpropagation) model is shown below:

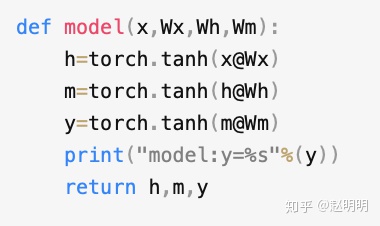

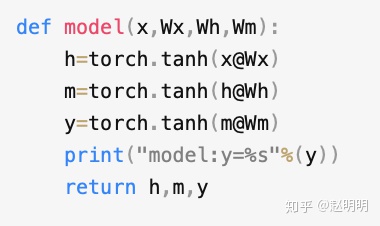

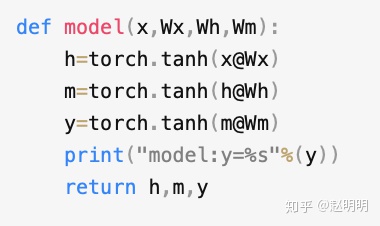

Code:

Usage:

python bp_train_use_matrix.py 0.16

# coding: utf-8

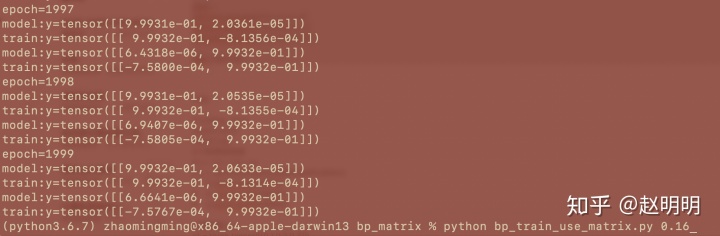

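The output of a run is as follows:

Derivation

There are 4 layers in total: x, h, m, y

The model equations are (@ denotes matrix multiplication):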

h=torch.tanh(x@Wx)

m=torch.tanh(h@Wh)

y=torch.tanh(m@Wm)

x=[x0,x1,x2]

h=[h0,h1,h2,h3]

m=[m0,m1,m2]

y=[y0,y1]
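The layer sizes above fix the weight shapes: Wx is 3x4, Wh is 4x3, Wm is 3x2. As a minimal sketch of the forward pass, the snippet below uses NumPy in place of the article's torch calls (an assumption, chosen to keep the example dependency-light); the random initial weights, the input values and the helper name `forward` are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Layer sizes from the text: x has 3 units, h has 4, m has 3, y has 2.
Wx = rng.standard_normal((3, 4)) * 0.5  # x -> h
Wh = rng.standard_normal((4, 3)) * 0.5  # h -> m
Wm = rng.standard_normal((3, 2)) * 0.5  # m -> y


def forward(x, Wx, Wh, Wm):
    """Return the activations of the three computed layers."""
    h = np.tanh(x @ Wx)
    m = np.tanh(h @ Wh)
    y = np.tanh(m @ Wm)
    return h, m, y


x = np.array([[0.5, -0.2, 0.1]])  # one sample as a 1x3 row vector (made-up values)
h, m, y = forward(x, Wx, Wh, Wm)
print(h.shape, m.shape, y.shape)  # (1, 4) (1, 3) (1, 2)
```

Treating each layer as a row vector is what lets the formulas use x@Wx rather than Wx@x.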

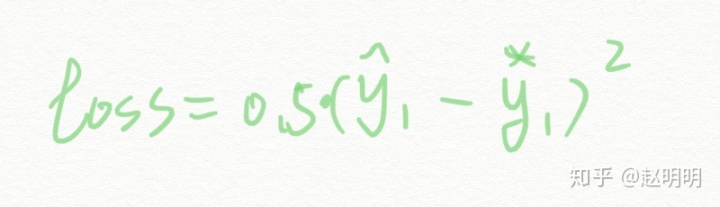

loss = 0.5*sum((y^-y*)**2)

y^: model prediction

y*: label (ground truth)
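A small sketch of the loss, assuming it is the usual half squared error summed over the outputs; that reading is what the derivative DL/Dy = (y^-y*) used in the rest of the derivation implies. The function name `loss_fn` and the sample values are hypothetical.

```python
import numpy as np


def loss_fn(y_pred, y_true):
    """Half squared error, summed over the output units."""
    return 0.5 * np.sum((y_pred - y_true) ** 2)


y_pred = np.array([[0.3, -0.1]])  # y^ (made-up values)
y_true = np.array([[1.0, 0.0]])   # y* (made-up values)

loss = loss_fn(y_pred, y_true)
grad = y_pred - y_true            # DL/Dy: the factor 0.5 cancels the 2 from the square
print(loss, grad)
```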

Derivative of the loss with respect to Wm: DWm

Wm = [ [wm00,wm01],

[wm10,wm11],

[wm20,wm21]]

DL/Dwm00 = DL/Dy0*Dy0/Dwm00 = (y0^-y0*) * (1-y0**2)*m0

DL/Dwm01 = DL/Dy1*Dy1/Dwm01 = (y1^-y1*) * (1-y1**2)*m0

DL/Dwm10 = DL/Dy0*Dy0/Dwm10 = (y0^-y0*) * (1-y0**2)*m1

DL/Dwm11 = DL/Dy1*Dy1/Dwm11 = (y1^-y1*) * (1-y1**2)*m1

DL/Dwm20 = DL/Dy0*Dy0/Dwm20 = (y0^-y0*) * (1-y0**2)*m2

DL/Dwm21 = DL/Dy1*Dy1/Dwm21 = (y1^-y1*) * (1-y1**2)*m2

Let: EY = [y0^-y0*,y1^-y1*], DY = [1-y0**2,1-y1**2]

Then: EY.*DY = [(y0^-y0*)*(1-y0**2),(y1^-y1*)*(1-y1**2)] = [e0,e1]

So:

DL/Dwm00 = DL/Dy0*Dy0/Dwm00 = (y0^-y0*) * (1-y0**2)*m0 = e0*m0

DL/Dwm01 = DL/Dy1*Dy1/Dwm01 = (y1^-y1*) * (1-y1**2)*m0 = e1*m0

DL/Dwm10 = DL/Dy0*Dy0/Dwm10 = (y0^-y0*) * (1-y0**2)*m1 = e0*m1

DL/Dwm11 = DL/Dy1*Dy1/Dwm11 = (y1^-y1*) * (1-y1**2)*m1 = e1*m1

DL/Dwm20 = DL/Dy0*Dy0/Dwm20 = (y0^-y0*) * (1-y0**2)*m2 = e0*m2

DL/Dwm21 = DL/Dy1*Dy1/Dwm21 = (y1^-y1*) * (1-y1**2)*m2 = e1*m2

That is:

DWm = [[e0*m0,e1*m0],

[e0*m1,e1*m1],

[e0*m2,e1*m2]]

=

[e0,e1].*[m0,m0]

[e0,e1].*[m1,m1]

[e0,e1].*[m2,m2]

=

[EY.*DY].*[m0,m0]

[EY.*DY].*[m1,m1]

[EY.*DY].*[m2,m2]
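Stacking the three rows above gives the outer product of m (as a column) and EY.*DY (as a row). The sketch below, written with NumPy as a stand-in for torch and with made-up values, compares that matrix form against the entry-by-entry table.

```python
import numpy as np

m = np.array([[0.2, -0.4, 0.6]])   # 1x3 activations of layer m (made-up values)
y_pred = np.array([[0.3, -0.1]])   # 1x2 prediction y^ (made-up values)
y_true = np.array([[1.0, 0.0]])    # 1x2 label y* (made-up values)

EY = y_pred - y_true               # [y0^-y0*, y1^-y1*]
DY = 1.0 - y_pred ** 2             # tanh derivative at the output layer
E = EY * DY                        # [e0, e1], element-wise product

# Row i of DWm is [e0, e1] scaled by m_i, i.e. the outer product m.T @ E.
DWm = m.T @ E                      # shape (3, 2), same as Wm

# Entry-by-entry version: DWm[i][j] = e_j * m_i.
DWm_loop = np.array([[E[0, j] * m[0, i] for j in range(2)] for i in range(3)])

print(np.allclose(DWm, DWm_loop))  # True
```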

Derivative of the loss with respect to Wh: DWh

Wh = [ [wh00,wh01,wh02],

[wh10,wh11,wh12],

[wh20,wh21,wh22],

[wh30,wh31,wh32]]

The entries of DWh are:

DL/Dwh00= DL/Dm0*Dm0/Dwh00

DL/Dwh01= DL/Dm1*Dm1/Dwh01

DL/Dwh02= DL/Dm2*Dm2/Dwh02

DL/Dwh10= DL/Dm0*Dm0/Dwh10

DL/Dwh11= DL/Dm1*Dm1/Dwh11

DL/Dwh12= DL/Dm2*Dm2/Dwh12

DL/Dwh20= DL/Dm0*Dm0/Dwh20

DL/Dwh21= DL/Dm1*Dm1/Dwh21

DL/Dwh22= DL/Dm2*Dm2/Dwh22

DL/Dwh30= DL/Dm0*Dm0/Dwh30

DL/Dwh31= DL/Dm1*Dm1/Dwh31

DL/Dwh32= DL/Dm2*Dm2/Dwh32

The DL/Dm terms in the expressions above must be computed first:

DL/Dm0 = DL/Dy0*Dy0/Dm0 + DL/Dy1*Dy1/Dm0

= (y0^-y0*)*(1-y0**2)*wm00 + (y1^-y1*)*(1-y1**2)*wm01

= (EY.*DY) @ [wm00,wm01].t()

= (EY.*DY) @ Wm[0,:].t()

DL/Dm1 = DL/Dy0*Dy0/Dm1 + DL/Dy1*Dy1/Dm1

= (EY.*DY) @ Wm[1,:].t()

DL/Dm2 = DL/Dy0*Dy0/Dm2 + DL/Dy1*Dy1/Dm2

= (EY.*DY) @ Wm[2,:].t()

In matrix form:

[DL/Dm0, DL/Dm1, DL/Dm2] = (EY.*DY) @ Wm.t() = [em0,em1,em2] = EM

DM = [1-m0**2,1-m1**2,1-m2**2]

EM.*DM = [em0*(1-m0**2),em1*(1-m1**2),em2*(1-m2**2)]=[dm0,dm1,dm2]
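The step from the output error back to layer m can be checked numerically. The following sketch (NumPy standing in for torch; every number is made up) computes EM with a single matrix product and compares it against the per-unit sums em_i = e0*wm_i0 + e1*wm_i1.

```python
import numpy as np

Wm = np.array([[0.1, -0.2],
               [0.3, 0.4],
               [-0.5, 0.6]])          # 3x2 weights m -> y (made-up values)
E = np.array([[-0.6, -0.1]])          # [e0, e1] = EY.*DY (made-up values)
m = np.array([[0.2, -0.4, 0.6]])      # layer-m activations (made-up values)

EM = E @ Wm.T                         # [em0, em1, em2], shape (1, 3)
DM = 1.0 - m ** 2                     # tanh derivative at layer m
dm = EM * DM                          # [dm0, dm1, dm2]

# Per-unit version: each m_i receives error from both outputs through row i of Wm.
EM_loop = [E[0, 0] * Wm[i, 0] + E[0, 1] * Wm[i, 1] for i in range(3)]

print(np.allclose(EM[0], EM_loop))    # True
```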

The entries of DWh then become:

DL/Dwh00 = DL/Dm0*Dm0/Dwh00 = dm0*h0

DL/Dwh01 = DL/Dm1*Dm1/Dwh01 = dm1*h0

DL/Dwh02 = DL/Dm2*Dm2/Dwh02 = dm2*h0

DL/Dwh10 = DL/Dm0*Dm0/Dwh10 = dm0*h1

DL/Dwh11 = DL/Dm1*Dm1/Dwh11 = dm1*h1

DL/Dwh12 = DL/Dm2*Dm2/Dwh12 = dm2*h1

DL/Dwh20 = DL/Dm0*Dm0/Dwh20 = dm0*h2

DL/Dwh21 = DL/Dm1*Dm1/Dwh21 = dm1*h2

DL/Dwh22 = DL/Dm2*Dm2/Dwh22 = dm2*h2

DL/Dwh30 = DL/Dm0*Dm0/Dwh30 = dm0*h3

DL/Dwh31 = DL/Dm1*Dm1/Dwh31 = dm1*h3

DL/Dwh32 = DL/Dm2*Dm2/Dwh32 = dm2*h3

That is:

DWh = [[dm0*h0,dm1*h0,dm2*h0],

[dm0*h1,dm1*h1,dm2*h1],

[dm0*h2,dm1*h2,dm2*h2],

[dm0*h3,dm1*h3,dm2*h3]]

=

[dm0,dm1,dm2].*[h0,h0,h0]

[dm0,dm1,dm2].*[h1,h1,h1]

[dm0,dm1,dm2].*[h2,h2,h2]

[dm0,dm1,dm2].*[h3,h3,h3]

=

[EM.*DM].*[h0,h0,h0]

[EM.*DM].*[h1,h1,h1]

[EM.*DM].*[h2,h2,h2]

[EM.*DM].*[h3,h3,h3]

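Finally, the derivative of the loss with respect to Wx: DWx

From EM = (EY.*DY) @ Wm.t(), by analogy:

EH = (EM.*DM) @ Wh.t()

The pattern for layer m also applies to layer h:

DH = [1-h0**2,1-h1**2,1-h2**2,1-h3**2]

DWx =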

[EH.*DH].*[x0,x0,x0,x0]

[EH.*DH].*[x1,x1,x1,x1]

[EH.*DH].*[x2,x2,x2,x2]
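One way to gain confidence in the three gradient formulas is to compare them with central finite differences. The sketch below is an illustration under assumptions, not the article's script: it uses NumPy instead of torch, random made-up weights and data, and the hypothetical helper names `forward`, `loss_fn`, `backward` and `numeric_grad`.

```python
import numpy as np


def forward(x, Wx, Wh, Wm):
    h = np.tanh(x @ Wx)
    m = np.tanh(h @ Wh)
    y = np.tanh(m @ Wm)
    return h, m, y


def loss_fn(y, t):
    return 0.5 * np.sum((y - t) ** 2)


def backward(x, t, Wx, Wh, Wm):
    """Gradients from the derivation: returns (DWx, DWh, DWm)."""
    h, m, y = forward(x, Wx, Wh, Wm)
    dy = (y - t) * (1.0 - y ** 2)        # EY.*DY
    dm = (dy @ Wm.T) * (1.0 - m ** 2)    # EM.*DM
    dh = (dm @ Wh.T) * (1.0 - h ** 2)    # EH.*DH
    return x.T @ dh, h.T @ dm, m.T @ dy


def numeric_grad(f, W, eps=1e-6):
    """Central finite differences of the scalar f() w.r.t. each entry of W (perturbed in place)."""
    G = np.zeros_like(W)
    for idx in np.ndindex(*W.shape):
        old = W[idx]
        W[idx] = old + eps
        up = f()
        W[idx] = old - eps
        down = f()
        W[idx] = old                      # restore the original weight
        G[idx] = (up - down) / (2 * eps)
    return G


rng = np.random.default_rng(0)
Wx = rng.standard_normal((3, 4)) * 0.5
Wh = rng.standard_normal((4, 3)) * 0.5
Wm = rng.standard_normal((3, 2)) * 0.5
x = np.array([[0.5, -0.2, 0.1]])         # made-up input
t = np.array([[1.0, 0.0]])               # made-up label

DWx, DWh, DWm = backward(x, t, Wx, Wh, Wm)
f = lambda: loss_fn(forward(x, Wx, Wh, Wm)[2], t)
for name, analytic, W in [("DWx", DWx, Wx), ("DWh", DWh, Wh), ("DWm", DWm, Wm)]:
    print(name, np.abs(analytic - numeric_grad(f, W)).max())
```

The printed maximum differences should be tiny compared with the gradient entries themselves if the formulas are implemented as derived.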

At this point the gradients have been obtained:

DWm = D(loss)/D(Wm)

DWh = D(loss)/D(Wh)

DWx = D(loss)/D(Wx)

They can be used to update the parameters:

Wm = Wm - DWm*learn_rate

Wh = Wh - DWh*learn_rate

Wx = Wx - DWx*learn_rate
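Put together, the update rule gives a plain gradient-descent loop. This is only a sketch on a single made-up sample: the learning rate 0.1, the step count, the initial weights and the use of NumPy instead of torch are all assumptions and are not taken from bp_train_use_matrix.py.

```python
import numpy as np

rng = np.random.default_rng(0)
Wx = rng.standard_normal((3, 4)) * 0.5
Wh = rng.standard_normal((4, 3)) * 0.5
Wm = rng.standard_normal((3, 2)) * 0.5

x = np.array([[0.5, -0.2, 0.1]])   # made-up input
t = np.array([[0.8, -0.3]])        # made-up label, inside tanh's output range (-1, 1)
learn_rate = 0.1                   # hypothetical value

losses = []
for step in range(200):
    # Forward pass.
    h = np.tanh(x @ Wx)
    m = np.tanh(h @ Wh)
    y = np.tanh(m @ Wm)
    losses.append(0.5 * np.sum((y - t) ** 2))

    # Backward pass: compute every delta before touching any weight.
    dy = (y - t) * (1.0 - y ** 2)
    dm = (dy @ Wm.T) * (1.0 - m ** 2)
    dh = (dm @ Wh.T) * (1.0 - h ** 2)

    # Parameter update.
    Wm = Wm - (m.T @ dy) * learn_rate
    Wh = Wh - (h.T @ dm) * learn_rate
    Wx = Wx - (x.T @ dh) * learn_rate

print(losses[0], losses[-1])
```

Computing all deltas before the updates matters: dm must use the Wm from the forward pass, not the already updated one.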

Summary:

Given Y^ and Y*:

Y^ = [y0^,y1^]

Y* = [y0*,y1*]

Layer m:

First: Y^-Y* = [y0^-y0*,y1^-y1*] = EY

Then: 1-Y^**2 = [1-y0^**2,1-y1^**2] = DY

EY.*DY = [Y^-Y*].*[1-Y^**2]

= [(y0^-y0*)*(1-y0^**2),(y1^-y1*)*(1-y1^**2)]

= [dy0,dy1]

DWm=

[EY.*DY].*[m0,m0]

[EY.*DY].*[m1,m1]

[EY.*DY].*[m2,m2]

If there is a bias:

DBm = EY.*DY = [dbm0,dbm1]

Layer h:

EM = (EY.*DY) @ Wm.t()

DM = [1-m0**2,1-m1**2,1-m2**2]

DWh=

[EM.*DM].*[h0,h0,h0]

[EM.*DM].*[h1,h1,h1]

[EM.*DM].*[h2,h2,h2]

[EM.*DM].*[h3,h3,h3]

If there is a bias:

DBh = EM.*DM

Layer x:

EH = (EM.*DM) @ Wh.t()

DH = [1-h0**2,1-h1**2,1-h2**2,1-h3**2]

DWx =

[EH.*DH].*[x0,x0,x0,x0]

[EH.*DH].*[x1,x1,x1,x1]

[EH.*DH].*[x2,x2,x2,x2]

If there is a bias:

DBx = EH.*DH
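The bias gradients can be sanity-checked the same way as the weight gradients. The sketch below assumes each bias is added inside the tanh, for example y = tanh(m@Wm + bm); the bias names `bx`, `bh`, `bm`, the helper names and all values are hypothetical, and NumPy again stands in for torch.

```python
import numpy as np

rng = np.random.default_rng(1)
Wx, Wh, Wm = (rng.standard_normal(s) * 0.5 for s in [(3, 4), (4, 3), (3, 2)])
bx, bh, bm = np.zeros((1, 4)), np.zeros((1, 3)), np.zeros((1, 2))
x = np.array([[0.5, -0.2, 0.1]])   # made-up input
t = np.array([[1.0, 0.0]])         # made-up label


def run(bx, bh, bm):
    """Forward pass with biases; returns the loss and the activations."""
    h = np.tanh(x @ Wx + bx)
    m = np.tanh(h @ Wh + bh)
    y = np.tanh(m @ Wm + bm)
    return 0.5 * np.sum((y - t) ** 2), (h, m, y)


_, (h, m, y) = run(bx, bh, bm)
DBm = (y - t) * (1.0 - y ** 2)          # EY.*DY, bias of the output layer
DBh = (DBm @ Wm.T) * (1.0 - m ** 2)     # EM.*DM
DBx = (DBh @ Wh.T) * (1.0 - h ** 2)     # EH.*DH


def fd(which, j, eps=1e-6):
    """Central finite difference of the loss w.r.t. entry j of bias number `which`."""
    biases = [bx.copy(), bh.copy(), bm.copy()]
    biases[which][0, j] += eps
    up = run(*biases)[0]
    biases[which][0, j] -= 2 * eps
    down = run(*biases)[0]
    return (up - down) / (2 * eps)


print(abs(fd(0, 0) - DBx[0, 0]), abs(fd(1, 0) - DBh[0, 0]), abs(fd(2, 0) - DBm[0, 0]))
```

Each bias gradient has the same length as the layer its bias feeds, so DBm has 2 entries, DBh has 3 and DBx has 4.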

Appendix:

Notation:

DL: D(Loss)

Dwm01: D(wm01)

DL/Dy0 = 2*0.5*(y0^-y0*) = (y0^-y0*)

DL/Dy1 = 2*0.5*(y1^-y1*) = (y1^-y1*)

y0 = tanh(wm00*m0+wm10*m1+wm20*m2)

The derivative of tanh is: 1-tanh**2

Dy0/Dwm00 = (1-y0**2)*m0

Dy1/Dwm01 = (1-y1**2)*m0
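The tanh derivative identity used throughout can be confirmed numerically. A minimal check with NumPy (the sample points and step size are arbitrary choices):

```python
import numpy as np

z = np.linspace(-2.0, 2.0, 9)      # arbitrary sample points
eps = 1e-6

# Central finite difference of tanh versus the closed form 1 - tanh(z)**2.
numeric = (np.tanh(z + eps) - np.tanh(z - eps)) / (2 * eps)
analytic = 1.0 - np.tanh(z) ** 2

print(np.abs(numeric - analytic).max())
```

Because the derivative is expressed through tanh(z) itself, the backward pass can reuse the stored activations (y, m, h) instead of recomputing anything from the pre-activation values.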
