1. Train SVM on features

################################################################################
# TODO:                                                                        #
# Use the validation set to set the learning rate and regularization strength. #
# This should be identical to the validation that you did for the SVM; save    #
# the best trained classifier in best_svm. You might also want to play         #
# with different numbers of bins in the color histogram. If you are careful    #
# you should be able to get accuracy of near 0.44 on the validation set.       #
################################################################################

for rs in regularization_strengths:
    for lr in learning_rates:
        # Train a fresh SVM for every (learning rate, regularization) pair.
        svm = LinearSVM()
        loss_hist = svm.train(X_train_feats, y_train, lr, rs, num_iters=100)

        # Accuracy on the training and validation sets.
        y_train_pred = svm.predict(X_train_feats)
        train_accuracy = np.mean(y_train == y_train_pred)
        y_val_pred = svm.predict(X_val_feats)
        val_accuracy = np.mean(y_val == y_val_pred)

        # Keep the classifier with the best validation accuracy so far.
        if val_accuracy > best_val:
            best_val = val_accuracy
            best_svm = svm
        results[(lr, rs)] = (train_accuracy, val_accuracy)
################################################################################
#                              END OF YOUR CODE                                #
################################################################################
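The TODO comment suggests experimenting with the number of bins in the color histogram. As a rough illustration of what that parameter controls, here is a minimal sketch of a hue histogram built with the standard-library `colorsys` module and `np.histogram`. The function name `hue_histogram` and the `nbin` parameter are hypothetical and used only for this sketch; the assignment's own feature extractor may differ in its details.

```python
import colorsys

import numpy as np


def hue_histogram(image_rgb, nbin=10):
    """Return a normalized histogram of pixel hues.

    image_rgb: H x W x 3 array with channel values in [0, 255].
    nbin: number of histogram bins (the knob the TODO comment refers to).
    This is an illustrative helper, not the assignment's feature function.
    """
    pixels = np.asarray(image_rgb, dtype=np.float64).reshape(-1, 3) / 255.0
    # colorsys.rgb_to_hsv returns hue, saturation, value, each in [0, 1].
    hues = np.array([colorsys.rgb_to_hsv(r, g, b)[0] for r, g, b in pixels])
    hist, _ = np.histogram(hues, bins=nbin, range=(0.0, 1.0))
    return hist / hist.sum()


# A tiny all-red image: every pixel has hue 0, so all mass falls in the first bin.
red_image = np.zeros((4, 4, 3))
red_image[..., 0] = 255
red_hist = hue_histogram(red_image, nbin=8)
```

More bins give a finer description of color but also a longer, sparser feature vector, so the bin count is worth validating together with the learning rate and regularization strength.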

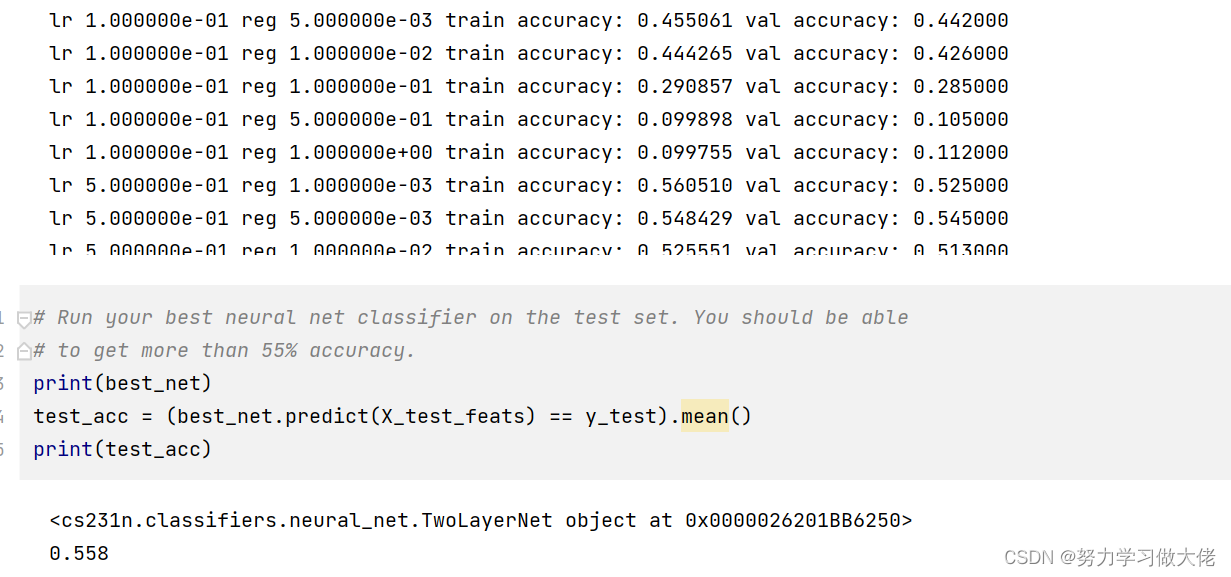
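Once the loop has filled `results`, the best setting can also be read back from the dictionary itself. The following is a small sketch, assuming `results` maps `(lr, reg)` to `(train_accuracy, val_accuracy)` as in the code above; the helper name `best_setting` and the numbers in `demo_results` are made up for illustration and are not measured results.

```python
def best_setting(results):
    """Return (lr, reg, train_acc, val_acc) for the highest validation accuracy.

    results: dict mapping (lr, reg) -> (train_accuracy, val_accuracy).
    """
    (lr, reg), (train_acc, val_acc) = max(
        results.items(), key=lambda item: item[1][1]
    )
    return lr, reg, train_acc, val_acc


# Made-up numbers, only to show the shape of the dictionary.
demo_results = {
    (1e-3, 1e-2): (0.41, 0.40),
    (1e-2, 1e-2): (0.45, 0.43),
    (1e-2, 1e-1): (0.44, 0.42),
}
best = best_setting(demo_results)
print('best lr %e reg %e train accuracy: %f val accuracy: %f' % best)
```

Selecting on validation accuracy rather than training accuracy matches what the loop does when it updates `best_val`.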

2. Neural Network on image features

from cs231n.classifiers.neural_net import TwoLayerNet

input_dim = X_train_feats.shape[1]
hidden_dim = 500
num_classes = 10

net = TwoLayerNet(input_dim, hidden_dim, num_classes)
best_net = None
best_val = -1
best_stats = None

################################################################################
# TODO: Train a two-layer neural network on image features. You may want to    #
# cross-validate various parameters as in previous sections. Store your best   #
# model in the best_net variable.                                              #
################################################################################

results = {}  # start fresh so the SVM results above are not mixed into this search
learning_rates = [1e-2, 1e-1, 5e-1, 1, 5]
regularization_strengths = [1e-3, 5e-3, 1e-2, 1e-1, 0.5, 1]
iters = 500

for lr in learning_rates:
    for rs in regularization_strengths:
        # Train a fresh network for every (learning rate, regularization) pair.
        net = TwoLayerNet(input_dim, hidden_dim, num_classes)
        stats = net.train(X_train_feats, y_train, X_val_feats, y_val,
                          num_iters=iters, batch_size=200,
                          learning_rate=lr, learning_rate_decay=0.95, reg=rs)

        # Accuracy on the training and validation sets.
        y_train_pred = net.predict(X_train_feats)
        acc_train = np.mean(y_train_pred == y_train)
        y_val_pred = net.predict(X_val_feats)
        acc_val = np.mean(y_val == y_val_pred)
        results[(lr, rs)] = (acc_train, acc_val)

        # Keep the network with the best validation accuracy so far.
        if best_val < acc_val:
            best_stats = stats
            best_val = acc_val
            best_net = net

# Print every setting that was tried, then the best validation accuracy.
for lr, reg in sorted(results):
    train_accuracy, val_accuracy = results[(lr, reg)]
    print('lr %e reg %e train accuracy: %f val accuracy: %f' % (
        lr, reg, train_accuracy, val_accuracy))

print('best validation accuracy achieved during cross-validation: %f' % best_val)
################################################################################
#                              END OF YOUR CODE                                #
################################################################################
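The grid above uses hand-picked values spread over several orders of magnitude. A common alternative is to draw candidates uniformly in log space over the same ranges. The sketch below shows one way to do that with NumPy; the helper name `sample_log_uniform` and the seed are assumptions made for this example and are not part of the assignment code.

```python
import numpy as np


def sample_log_uniform(low, high, size, seed=0):
    """Draw `size` values between low and high, uniform on a log10 scale.

    Hypothetical helper for illustration; low and high must be positive.
    """
    rng = np.random.default_rng(seed)
    exponents = rng.uniform(np.log10(low), np.log10(high), size=size)
    return 10.0 ** exponents


# Same ranges as the hand-written lists above: lr in [1e-2, 5], reg in [1e-3, 1].
candidate_lrs = sample_log_uniform(1e-2, 5.0, size=5, seed=0)
candidate_regs = sample_log_uniform(1e-3, 1.0, size=6, seed=1)
print(candidate_lrs)
print(candidate_regs)
```

These arrays could replace `learning_rates` and `regularization_strengths` in the loop without any other change, since the loop only iterates over them.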
