import tensorflow as tf

import numpy as np

import cv2

class Generator(tf.keras.Model):

def __init__(self):

super(Generator, self).__init__()

self.fc = tf.keras.Sequential([

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(28 * 28, activation='sigmoid'),

])

def call(self, inputs):

return self.fc(inputs)

class Discriminator(tf.keras.Model):

def __init__(self):

super(Discriminator, self).__init__()

self.fc = tf.keras.Sequential([

tf.keras.layers.Dense(512, activation='relu'),

tf.keras.layers.Dense(128, activation='relu'),

tf.keras.layers.Dense(1, activation='linear')

])

def call(self, inputs):

return self.fc(inputs)

class WGAN_GP():

def __init__(self):

self.noise_size = 16

self.generator = Generator()

self.discriminator = Discriminator()

self.generator.build(input_shape=(None, self.noise_size))

self.discriminator.build(input_shape=(None, 28 * 28))

self.g_optimizer = tf.keras.optimizers.Adam(learning_rate=1e-3)

self.d_optimizer = tf.keras.optimizers.Adam(learning_rate=1e-3)

def train(self, dataset, batch_size=1024, epochs=500):

for e in range(epochs):

generator_loss = list()

discriminator_loss = list()

for i in range(int(len(dataset) / batch_size)):

real_image = dataset[i * batch_size: (i + 1) * batch_size]

normal_z = np.random.normal(size=(batch_size, self.noise_size))

with tf.GradientTape() as tape:

d_loss = self.d_loss(self.generator, self.discriminator, normal_z, real_image)

grads = tape.gradient(d_loss, self.discriminator.trainable_variables)

self.d_optimizer.apply_gradients(zip(grads, self.discriminator.trainable_variables))

with tf.GradientTape() as tape:

g_loss = self.g_loss(self.generator, self.discriminator, normal_z)

grads = tape.gradient(g_loss, self.generator.trainable_variables)

self.g_optimizer.apply_gradients(zip(grads, self.generator.trainable_variables))

generator_loss.append(g_loss)

discriminator_loss.append(d_loss)

g_l = np.mean(generator_loss)

d_l = np.mean(discriminator_loss)

print("epoch: {} / {}, generator loss: {}, discriminator loss: {}".format(

e + 1, epochs, g_l, d_l

))

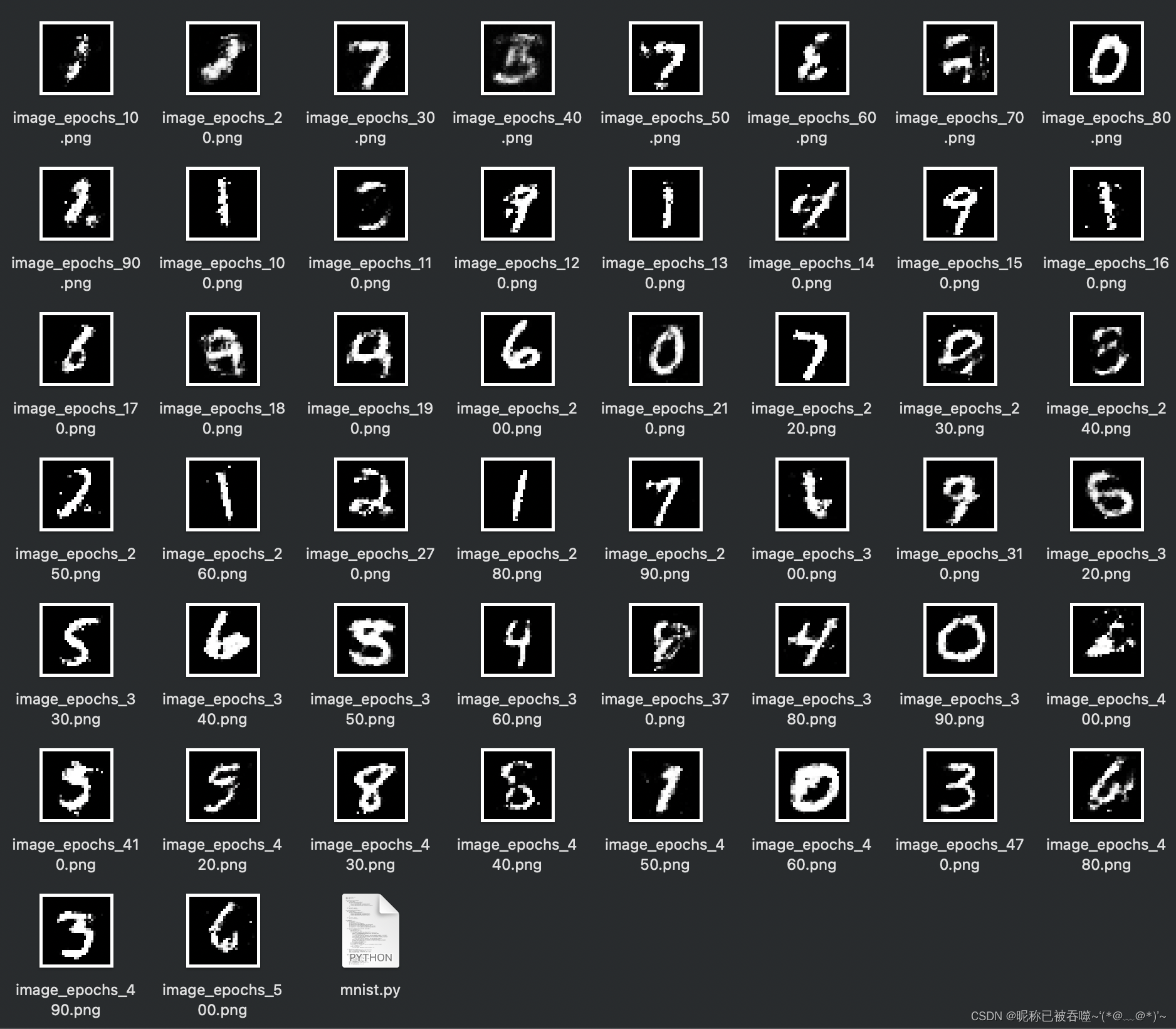

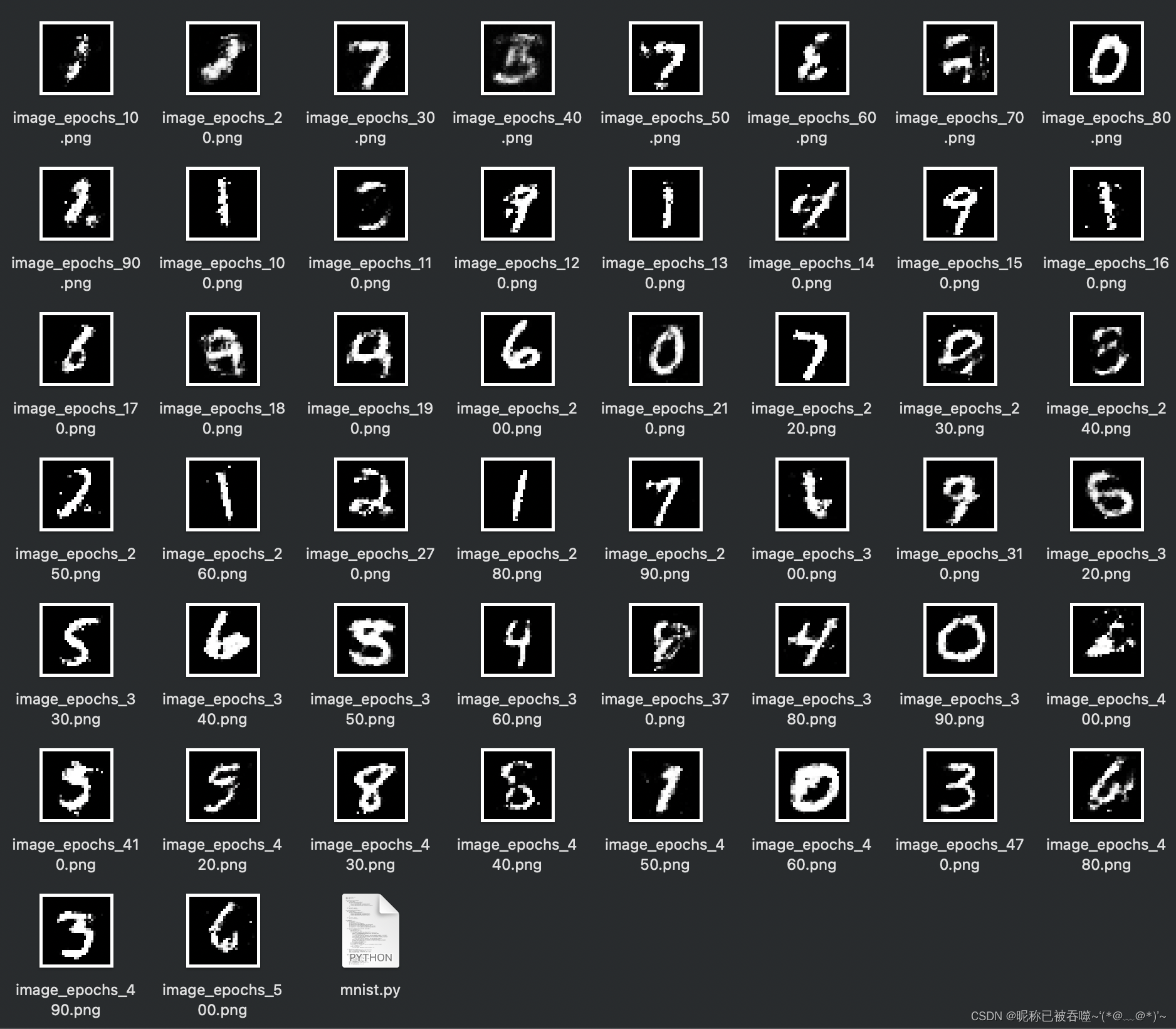

if (e + 1) % 10 == 0:

self.save_image('image_epochs_{}.png'.format(e + 1))

def save_image(self, path):

normal_z = np.random.normal(size=(1, self.noise_size))

image = self.generator.predict(normal_z)

image = np.reshape(image, newshape=(28, 28)) * 255.0

cv2.imwrite(path, image)

@staticmethod

def gradient_penalty(discriminator, real_image, fake_image):

assert real_image.shape[0] == fake_image.shape[0]

batch_size = real_image.shape[0]

eps = tf.random.uniform([batch_size, 1])

inter = eps * real_image + (1. - eps) * fake_image

with tf.GradientTape() as tape:

tape.watch([inter])

d_inter_logits = discriminator(inter)

grads = tape.gradient(d_inter_logits, inter)

grads = tf.reshape(grads, [grads.shape[0], -1])

gp = tf.norm(grads, axis=1)

gp = tf.reduce_mean((gp - 1.) ** 2)

return gp

@staticmethod

def g_loss(generator, discriminator, noise_z):

fake_image = generator(noise_z)

d_fake_logits = discriminator(fake_image)

loss = - tf.reduce_mean(d_fake_logits)

return loss

@staticmethod

def d_loss(generator, discriminator, noise_z, real_image):

fake_image = generator(noise_z)

d_fake_logits = discriminator(fake_image)

d_real_logits = discriminator(real_image)

gp = WGAN_GP.gradient_penalty(discriminator, real_image, fake_image)

loss = tf.reduce_mean(d_fake_logits) - tf.reduce_mean(d_real_logits) + 10. * gp

return loss, gp

if __name__ == '__main__':

dataset = tf.keras.datasets.mnist

(x_train, y_train), (x_test, y_test) = dataset.load_data()

x_train = np.reshape(x_train, newshape=(-1, 28 * 28)) / 255.0

gen = WGAN_GP()

gen.train(dataset=x_train)

生成效果

340

340
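For reference, here is what `gradient_penalty`, `d_loss` and `g_loss` compute, written as the usual WGAN-GP objectives. The symbols are my own labels for the code, not names from the post: $D$ is `Discriminator`, $G$ is `Generator`, $x$ a real MNIST batch, $z$ the 16-dimensional Gaussian `normal_z`, $\epsilon$ the per-sample `eps`, and $\lambda = 10$ the constant in `d_loss`. Every expectation is estimated by a batch mean (`tf.reduce_mean`).

$$
\begin{aligned}
\hat{x} &= \epsilon\, x + (1-\epsilon)\, G(z), \qquad \epsilon \sim \mathcal{U}[0, 1),\; z \sim \mathcal{N}(0, I_{16}) \\
\mathrm{GP} &= \mathbb{E}_{\hat{x}}\left[\left(\lVert \nabla_{\hat{x}} D(\hat{x}) \rVert_2 - 1\right)^2\right] \\
L_D &= \mathbb{E}_{z}\left[D(G(z))\right] - \mathbb{E}_{x}\left[D(x)\right] + \lambda\, \mathrm{GP}, \qquad \lambda = 10 \\
L_G &= -\,\mathbb{E}_{z}\left[D(G(z))\right]
\end{aligned}
$$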

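To sanity-check the penalty logic without TensorFlow, here is a NumPy-only sketch that follows the same steps as `gradient_penalty` (per-sample interpolation, gradient of the critic at the interpolates, per-sample L2 norm, mean squared distance from 1) but replaces `tf.GradientTape` with central finite differences. The helper names `numeric_input_grad` and `gradient_penalty_np` and the toy linear critic are hypothetical, chosen because a linear critic $D(x) = w \cdot x + b$ has gradient $w$ everywhere, so the penalty must come out as $(\lVert w \rVert - 1)^2$ whatever the interpolation points are.

```python
import numpy as np


def numeric_input_grad(critic, x, h=1e-4):
    """Central-difference estimate of dD/dx for every row of x.

    Assumes critic maps an (n, d) array to an (n,) array of scores and that each
    score depends only on its own row, so row i of the result is the gradient
    of the critic at x[i].
    """
    grads = np.zeros_like(x)
    for j in range(x.shape[1]):
        step = np.zeros_like(x)
        step[:, j] = h
        grads[:, j] = (critic(x + step) - critic(x - step)) / (2.0 * h)
    return grads


def gradient_penalty_np(critic, real, fake, rng):
    """Mirrors WGAN_GP.gradient_penalty, using finite differences instead of GradientTape."""
    eps = rng.uniform(size=(real.shape[0], 1))   # one coefficient per sample
    inter = eps * real + (1.0 - eps) * fake      # points on the real-fake segments
    grads = numeric_input_grad(critic, inter)    # (n, d) input gradients
    norms = np.linalg.norm(grads, axis=1)        # per-sample L2 norm
    return np.mean((norms - 1.0) ** 2)


# Hypothetical linear critic D(x) = w.x + b with ||w|| = 2: its gradient is w
# everywhere, so the penalty should be (2 - 1)^2 = 1.
rng = np.random.default_rng(0)
w = rng.normal(size=28 * 28)
w *= 2.0 / np.linalg.norm(w)


def linear_critic(x):
    return x @ w + 0.3


real = rng.uniform(size=(8, 28 * 28))
fake = rng.uniform(size=(8, 28 * 28))
gp = gradient_penalty_np(linear_critic, real, fake, rng)
print(gp)  # should be very close to 1.0
```

The same check should carry over to the TensorFlow version: passing a single `tf.keras.layers.Dense(1)` layer as `discriminator` to `WGAN_GP.gradient_penalty` gives a critic whose input gradient is its kernel, so the returned penalty should match $(\lVert \text{kernel} \rVert - 1)^2$.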