Use Python to analyze data, automate complex workflows\optimizations, and produce publication-quality plots. Lumerical's inverse design optimization makes extensive use of the Python API. The API can be used for developing scripts or programs that treat Lumerical solvers as clients, or in high-performance computing settings where performance and license utilization are imperative.

Python v3 is included with Lumerical's software, which avoids the need for any complex setup or configuration.

Requirements

- Lumerical product version 2019a R3 or later

- Gnome or Mate desktop environment for supported Linux systems when running from the Lumerical CAD/GUI.

- A graphical interface/connection to the machine running the API

- A Lumerical GUI license.

Note: The Python API requires interfacing with the Lumerical GUI and will utilize a GUI license.

Getting Started

Session management - Python API

This article demonstrates the feasibility of integrating Lumerical tools with Python to develop complex automated workflows and perform advanced data processing/plotting. Interoperability is made possible using the Python API, a python library known as lumapi. Hereafter these terms will be used interchangeably. Users will develop methods of managing a Lumerical session, and learn techniques of initializing and editing Lumerical simulation objects from Python script.

It should be possible to store the files in any working directory; however, it is recommended to put the Lumerical and Python files in the same folder. Basic commands for appending the environment path are given in Setting the Python Before Importing, but sophisticated manipulation of the directories is beyond the scope of this article. To modify the Lumerical working directory find instructions here.

A connection to the lumapi.py file is the key to enabling the Lumerical-Python API interface. This is accomplished by importing the lumapi module, and initializing a session. When initializing a session this will consume a GUI license. Accessing simulation objects is discussed below. Using script commands, and passing data are discussed in the articles Script commands as methods - Python API, and Passing Data - Python API.

Importing Modules

Python Path is Configured

For convenience, a basic Python 3 distro is shipped with Lumerical's solvers allowing users to get started immediately; by running any of our examples from the CAD script editor where lumapi can be loaded like any module.

import lumapi

Many users would like to integrate lumapi into their own Python environment. This is as simple as importing the lumapi module; however, by default python will not know where to find it. This can be addressed by modifying the search path. Please refer to the Python foundations guide on installing modules. Essentially we allow Python to find the lumapi module, by including its parent directory in the list that import class will search. Usually, a one-time update, see explicit path import for the OS specific directories. If you are not ready or to update the Python path then either of the following methods should work

Adding the Python Before Importing

To temporarily add the lumapi directory to your path, you can use the sys.path.append() method. This is the case if you have not yet added lumapi to your search path, and it is also useful when adding directories of other helpful lsf, fsp, py files. The following code adds the lumapi folder and current file directory.

Windows:

import sys, os

#default path for current release

sys.path.append("C:\\Program Files\\Lumerical\\v232\\api\\python\\")

sys.path.append(os.path.dirname(__file__)) #Current directory

Linux:

import sys, os

#default path for current release

sys.path.append("/opt/lumerical/v232/api/python/lumapi.py")

sys.path.append(os.path.dirname(__file__)) #Current directory

Explicit Import

If you need to specify the path to your lumapi distribution then the importlib.util() is the best method for pointing to the lumapi module. This may be the case if you are working on a different branch, or comparing lumapi versions.

Windows:

import importlib.util

#default path for current release

spec_win = importlib.util.spec_from_file_location('lumapi', 'C:\\Program Files\\Lumerical\\v232\\api\\python\\lumapi.py')

#Functions that perform the actual loading

lumapi = importlib.util.module_from_spec(spec_win) #windows

spec_win.loader.exec_module(lumapi)

Linux:

import importlib.util

#default path for current release

spec_lin = importlib.util.spec_from_file_location('lumapi', "/opt/lumerical/v232/api/python/lumapi.py")

#Functions that perform the actual loading

lumapi = importlib.util.module_from_spec(spec_lin)

spec_lin.loader.exec_module(lumapi)

See also: How to run Lumerical API (Python) scripts in Linux – Ansys Optics

Starting a session

This is as simple as calling the relevant constructor for the Lumerical product and storing it in an object.

fdtd = lumapi.FDTD()

Since the 2023 R1.2 release, the Python API can be used remotely on a Linux machine running the interop server (see Interop Server - Remote API to configure and run the interop server). To use the remote API, an additional parameter is required when starting a session, to specify the IP address and port to use to connect to the interop server. This port has to be the starting port defined for the interop server.

This parameter is an dictionary with 2 fields, "hostname" and port.

remoteArgs = { "hostname": "192.168.215.129",

"port": 8989 }

fdtd = lumapi.FDTD(hide=True, remoteArgs=remoteArgs)

You can create multiple sessions of the same product and different products at once, as long as they all have unique names.

mode1 = lumapi.MODE() mode2 = lumapi.MODE() device = lumapi.DEVICE()

Each of the product's constructor supports the following named optional parameters:

- hide (default to False): Shows or hides the lumerical GUI/CAD environment on startup

- filename (default empty): Launches a new application if it is empty, and will run the script if an lsf file is provided. If a project filename is provided; it will try and load the project if it can be found in the path. See the section setting the python path before importing to add folder or use the full path to load files from other directories.

# loads and runs script.lsf while hiding the application window inc = lumapi.INTERCONNECT(filename="script.lsf", hide=True)

Import Methods

Besides defining methods/functions in Python, user can take advantage of the auto-syncing function feature in lumapi and import functions that are pre-defined in a Lumerical script file (.lsf file or format). Once the script has been excuted using the "eval" command, the methods can be called as pre-defined methods in lumapi.

Follow is an example of importing functions from a script file "testScript.lsf" and from a script format string.

fdtd = lumapi.FDTD()

# import function defined in script format string

fdtd .eval("function helloWorld() { return \"hello world\"; }\nfunction returnFloat() { return 1.; }\nfunction addTest(a, b){ return a*b; }")

print(fdtd .helloWorld())

# import function defined in the script file "testScript.lsf"

code = open('C:/XXX/testScript.lsf', 'r').read()

fdtd .eval(code)

The script can also be passed as a parameter in the constructor to define a method:

def testAddingMethodsFromConstructor(self):

app = self.appConstructor(script="any_product_script_workspace_functions_available_in_python_test.lsf")

expectedMethods = {'helloWorld'}

expectedResults = ['hello world from script file']

results = []

results.append(app.helloWorld())

self.assertEqual(results, expectedResults)

app.close()

Advanced session management

When the variables local to the function or context manager go out of scope, they will be deleted automatically, and a Lumerical session will automatically close when all variable references pointing to it are deleted.

Wrapping the session in a function

In Python you can use functions if you need to run numerous similar instances; for example, when sweeping over some optional parameters. For more information on how the important results are returned see Passing data - Python API.

def myFunction(someOptionalParameter): fdtd = lumapi.FDTD() ... return importantResult

Using the "with" context manager

We support Python "with" statement by giving well-defined entrance and exit behavior to Lumerical session objects in Python. If there was any error within the "with" code block, the session still closes successfully (unlike in a function). Also note that any error message you typically see in a Lumerical script environment would be displayed in the Python exception.

with lumapi.FDTD(hide=True) as fdtd:

fdtd.addfdtd()

fdtd.setnamed("bad name") ## you will see

LumApiError: "in setnamed, no items matching the name 'bad name' can be found."

...

## fdtd still successfully closes

Simulation Objects

Since the release of 2019a, Python API supports adding objects using a constructor and setting sim-objects from a constructor.

Adding simulation objects using a constructor

When adding an object, its constructor can be used to set the values of properties at creation.

fdtd.addfdtd(dimension="2D", x=0.0e-9, y=0.0e-9, x_span=3.0e-6, y_span=1.0e-6)

In Python, dict ordering is not guaranteed, so if there are properties that depend on other properties, an ordered dict is necessary. For example, in the below line of Python, 'override global monitor settings' must be true before 'frequency points' can be set.

props = OrderedDict([("name", "power"),("override global monitor settings", True),("x", 0.),("y", 0.4e-6),

("monitor type", "linear x"),("frequency points", 10.0)])

fdtd.addpower(properties=props)

If you do not have properties with dependencies you can use regular Python dictionaries.

props = {"name": "power",

"x" : "0.0",

"y" : 0.4e-6",

"monitor type" : "linear x"}

fdtd.addpower(properties=props)

Manipulating simulation-objects

When adding a new object to a Lumerical product session, a representative Python object is returned. This object can be used to make changes to the corresponding object in the Lumerical product.

rectangle = fdtd.addrect(x = 2e-6, y = 0.0, z = 0.0) rectangle.x = -1e-6 rectangle.x_span = 10e-6

Dict-like access

The below line of python code shows an example of the dict-like access of parameters in an FDTD rectangle object.

rectangle = fdtd.addrect(x = 2e-6, y = 0.0, z = 0.0) rectangle["x"] = -1e-6 rectangle["x span"] = 10e-6

Parent and children access

The tree of objects in a Lumerical product can be traversed using the parent or children of an object.

device.addstructuregroup(name="A")

device.addrect(name="in A")

device.addtogroup("A")

device.addstructuregroup(name="B")

device.addtogroup("A")

bRect = device.addrect(name="in B")

device.addtogroup("A::B")

# Go up two parents from the rectangle in "B" group

aGroup = bRect.getParent().getParent()

# Print the names of all members of "A" group

for child in aGroup.getChildren():

print child["name"]

| Note: Python will automatically delete variables as they removed from scope, so most of the time you will not need to close a session manually. To do so explicitly, you can call the close session using. inc.close() #inc is the name of the active session |

Script commands as methods - Python API

FDTD MODE DGTD CHARGE HEAT FEEM INTERCONNECT Automation API

Almost all script commands in the Lumerical script language can be used as methods on your session object in Python. The lumapi methods and the Lumerical script commands share the same name and can be called directly on the session once it's been created. For example,

fdtd.addrect() # Note the added brackets since this is a method in Python.

For more information on Lumerical scripting language please see:

- Scripting Learning Track on Aansys Innovation Courses (AIC)

- Lumerical scripting language - Alphabetical list

- Lumerical scripting language - By category

Documentation Docstrings

For information on the lumapi methods from within the environment we support Python docstrings for Lumerical session objects. This is the simplest way to determine the available script commands, and syntax. This contains information that is similar to the Alphabetical List of Script Commands . You can view the docstring by using the Python built-in function "help" or most ways rich interactive Python shells display docstrings (e.g. IPython, Jupyter Notebook):

>>> help(f.addfdtd) Help on method addfdtd in module lumapi: addfdtd(self, *args) method of lumapi.FDTD instance Adds an FDTD solver region to the simulation environment. The extent of the solver region determines the simulated volume/area in FDTD Solutions. +-----------------------------------+-----------------------------------+ | Syntax | Description | +-----------------------------------+-----------------------------------+ | o.addfdtd() | Adds an FDTD solver region to the | | | simulation environment. | | | | | | This function does not return any | | | data. | +-----------------------------------+-----------------------------------+ See Also set(), run() https://kb.lumerical.com/en/ref_scripts_addfdtd.html

| Note: We still support previous version of the Python API, yet we strongly recommend to use the newer Python API. |

Missing Methods

Since the list of methods available through the API is nearly complete it is more illuminating to look at the syntax which is not acceptable in Python to understand the subtle variations. Special characters, and reserved keywords in lsf cannot be arbitrarily overloaded in Python; thus, the variations can be reduced to differing methods to access data, script operators. If you require the use of lsf characters it is recommended to use lumapi.eval() method, but one should be mindful that variables that exist in the Python are not necessarily available in Lumerical script environment and vice-versa. See Passing Data - Python API for more information.

Operators

The Script operators that are used in lsf cannot be overloaded in Python, so Algebraic: * , / , + , - , ^,... and Logical: >= , < , > , & , and , | , or , ! , ~ cannot be accessed. One should use the native Python operators to manipulate variables although some slight variations may exist.

A very helpful operator in Lumerical is ? (print, display) - Script operator, which allows you to print to screen and query available results. In Python your should use the print function to display and querynamed method, queryanalysisprop method, etc to access simulation object properties in Lumerical.

Datatypes

Lumerical and Python datatypes will differ in the associated operations, methods, and access style. For a summary of how datatypes are passed between environments see getv(), and putv(). Information on Lumerical datatypes and access is available in Introduction to Lumerical datasets. A reference on built-in Python types documentation is available from the Python Software Foundation.

lumapi.getv - Python API method

- 3 years ago

- Updated

| Syntax | Description |

|---|---|

| py_var = lumapi.getv( 'var_name') | When executed in Python, this function will get a variable from an active Lumerical session. The variable can be a string, real/complex numbers, matrix, cell or struct. |

A quick reference guide for translated datatypes.

| Lumerical | python |

|---|---|

| String | string |

| Real | float |

| Complex | np.array |

| Matrix | np.array |

| Cell array | list |

| Struct | dictionary |

| Dataset | dictionary |

String

When getv() is executed in Python, with the name of a Lumerical string variable passed as the argument, this function will return the string value of that variable to the Python environment.

with lumapi.FDTD(hide = True) as fdtd:

#######################

#Strings

Lumerical = 'Lumerical Inc'

fdtd.putv('Lum_str',Lumerical)

print(type(fdtd.getv('Lum_str')),str(fdtd.getv('Lum_str')))

Returns

<class 'str'> Lumerical Inc

Real Number

When getv() is executed in Python, with the name of a number lsf variable passed as the argument, this function will return the value of that variable to the python environment. Integer types are not supported in Lumerical and will be returned as type float.

with lumapi.FDTD(hide = True) as fdtd:

#Real Number

n = int(5)

fdtd.putv('num',n)

print('Real number n is returned as ',type(fdtd.getv('num')),str(fdtd.getv('num')))

Returns

Real number n is returned as <class 'float'> 5.0

Complex Number

When getv() is executed in Python, with the name of a Lumerical complex number variable passed as the argument, this function will return a numpy array with a single element to the Python environment. Likewise passing complex numbers require that they be encapsulated in a single element array. For more information see lumapi.putv(). Alternatively one can use the lumapi.eval() method to define a complex variable.

with lumapi.FDTD(hide = True) as fdtd:

#Complex Numbers

z = 1 + 1.0*1j

Z = np.zeros((1,1),dtype=complex) + z

fdtd.putv('Z',Z[0])

fdtd.eval('z = 1 +1i;')

print('Complex number Z is returned as ',type(fdtd.getv('Z')),str(fdtd.getv('Z')))

print('Complex number z is returned as ',type(fdtd.getv('z')),str(fdtd.getv('z')))

Returns

Complex number Z is returned as <class 'numpy.ndarray'> [[1.+1.j]] Complex number z is returned as <class 'numpy.ndarray'> [[1.+1.j]]

Note: the different imaginary unit conventions. In python complex numbers are initialized using ‘j’ and in Lumerical this accomplished using ‘i’.

Matrix

When getv() is executed in Python, with the name of a Lumerical matrix passed as the argument, this function will return the values of that matrix variable to the python environment as a numpy array. As we saw previously these support complex data. This can be useful when defining vectors, matrices or when calling Lumerical methods that return arrays.

with lumapi.FDTD(hide = True) as fdtd:

## Vector

rad = np.linspace(1,11)*1e-6 #np array

height = np.arange(1,3,0.1)*1e-6 #np array

#Pass to Lumerical

fdtd.putv('rad',rad)

fdtd.putv('height',height)

#Get from Lumerical

rad_rt=fdtd.getv('rad')

height_rt=fdtd.getv('height')

print('After round trip rad is ', type(rad_rt))

print('After round trip height is ', type(height_rt))

### Matrix

M1 = np.matrix([[0,0.5,0.3,0.4,0.5,0.6], [0,2,3,4,5,6],[0,2.2,3.5,4.4,5.5,6.5]])

M2 = np.zeros((2,2),dtype=complex)

#Pass to Lumerical

fdtd.putv('M1',M1)

fdtd.putv('M2',M2)

#Get from Lumerical

M1_rt=fdtd.getv('M1')

M2_rt=fdtd.getv('M2')

print('The matrix M is ', type(M1_rt), str(M1_rt))

print('The np array M2 is ', type(M2_rt), str(M2_rt))

# Return from Lumerical

A = fdtd.randmatrix(2,3)

B = fdtd.zeros(1,5)

print('The matrix A is ', type(A), str(A))

print('The matrix B is ', type(B), str(B))

Returns

After round trip rad is <class 'numpy.ndarray'> After round trip height is <class 'numpy.ndarray'> The matrix M is <class 'numpy.ndarray'> [[0. 0.5 0.3 0.4 0.5 0.6] [0. 2. 3. 4. 5. 6. ] [0. 2.2 3.5 4.4 5.5 6.5]] The np array M2 is <class 'numpy.ndarray'> [[0.+0.j 0.+0.j] [0.+0.j 0.+0.j]] The matrix A is <class 'numpy.ndarray'> [[0.806134 0.99917605 0.23325807] [0.69062098 0.53113442 0.18275802]] The matrix B is <class 'numpy.ndarray'> [[0. 0. 0. 0. 0.]]

Cell array

When getv() is executed in Python, with the name of a Lumerical cell passed as the argument, this function will return the cell to the Python environment as a list.

Cell arrays are ordered and can include any type of data already mentioned above, themselves and structs. Similarly, python lists are ordered and can include numerous classes as elements. These datatypes are logically equivalent.

with lumapi.FDTD(hide = True) as fdtd:

#Cells

C1 = [ i for i in range(11)] #list

C2 = [[1,2,3],[4,5,6]] #list of lists

C3 = [C1,C2,'string', 3.59]

#Pass to Lumerical

fdtd.putv('C_1',C1)

fdtd.putv('C_2',C2)

fdtd.putv('C_3',C3)

#Get from Lumerical

C1_rt=fdtd.getv('C_1')

C2_rt=fdtd.getv('C_2')

C3_rt=fdtd.getv('C_3')

print('The cell C1 is ', type(C1_rt), str(C1_rt))

print('The cell C2 is ', type(C2_rt), str(C2_rt))

print('The cell C3 is ', type(C3_rt), str(C3_rt))

Returns

The cell C1 is <class 'list'> [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0] The cell C2 is <class 'list'> [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]] The cell C3 is <class 'list'> [[0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0], [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], 'string', 3.59]

Struct

When getv() is executed in Python, with the name of a Lumerical struct passed as the argument, this function will return the struct to the Python environment as a dictionary.

Structures are indexed by fields, but not ordered and can include any datatype mentioned above and themselves as members. Similarly, python dictionaries are indexed by keys and can include numerous classes as elements. When getv() is executed in Python, with the name of a struct lsf variable passed as the argument, this function will return the cell to the Python environment as a list.

with lumapi.FDTD(hide = True) as fdtd:

#Struct

D = {'greeting' : 'Hello World', # String data

'pi' : 3.14, # Double data

'm' : np.array([[1.,2.,3.],[4.,5.,6.]]), # Matrix data

'nested_struct' : { 'e' : 2.71 } } # Struct

fdtd.putv('Dict', D)

D_rt = fdtd.getv('Dict')

print('The dictionary D is ', type(D_rt), str(D_rt))

Returns

The dictionary D is <class 'dict'> {'greeting': 'Hello World', 'm': array([[1., 2., 3.],

Datasets

When Lumerical datasets are returned to the Python environment they are converted into Python dictionaries. For more information see Passing data - Python API.

| Note: Previous versions of the API employed the getVar() method. Although this syntax still functions it will not continue to be supported. For more information on this deprecated technique of driving Lumericals tools from Python see the Session Management -python API.

|

Passing Data - Python API

When driving Lumerical's tools from the Python API there is a connection established between the environments, but they do not share a workspace. Instead, as variables are passed back and forth an exact copy is created according to the type conversion defined in getv(), and putv(). As of 2020a R4 typical transfer speeds are about 300 MBs, and reduced the memory overhead required to transfer data to 3x. This does not present an issue in terms of fidelity nor does it typically present a bottleneck in terms of speed; however, when working with very large datasets it may be important to take this into consideration if efficiency is imperative.

A quick reference guide for translated datatypes.

| Lumerical | Python |

|---|---|

| String | string |

| Real | float |

| Complex | np.array |

| Matrix | np.array |

| Cell array | list |

| Struct | dictionary |

| Dataset | dictionary |

Users of the API will often be interested in returning the following data types from monitors.

Raw Data

When accessing the raw data from a simulations it is available as a matrix in Lumerical and will be returned as a numpy array when passed to the Python environment.

The size of the matrices will be consistent with the lengths of the associated parameters.

- Attribute: The actual data of a dataset. For example the electric field components Ex, Ey, Ez are the attributes of a field profile monitor.

- Parameter: The associated position vectors of a dataset. For example x, y, z, and f could be the parameters of a field profile monitor.

Matrix dataset

- Scalar attribute: [Np1;Np2;...;Npn

- ]

- Vector attribute: [Np1;Np2;...;Npn;3

- ]

where Npi

is the length of the ith

parameter.

To access the raw monitor data use the getdata(), and Python squeeze or pinch() method to remove singleton dimensions.

with lumapi.FDTD() as fdtd:

fdtd.addfdtd(dimension="2D", x=0.0e-9, y=0.0e-9, x_span=3.0e-6, y_span=1.0e-6)

fdtd.addgaussian(name = 'source', x=0., y=-0.4e-6, injection_axis="y", waist_radius_w0=0.2e-6, wavelength_start=0.5e-6, wavelength_stop=0.6e-6)

fdtd.addring( x=0.0e-9, y=0.0e-9, z=0.0e-9, inner_radius=0.1e-6, outer_radius=0.2e-6, index=2.0)

fdtd.addmesh(dx=10.0e-9, dy=10.0e-9, x=0., y=0., x_span=0.4e-6, y_span=0.4e-6)

fdtd.addtime(name="time", x=0.0e-9, y=0.0e-9)

fdtd.addprofile(name="profile", x=0., x_span=3.0e-6, y=0.)

# Dict ordering is not guaranteed, so if there properties dependant on other properties an ordered dict is necessary

# In this case 'override global monitor settings' must be true before 'frequency points' can be set

props = OrderedDict([("name", "power"),

("override global monitor settings", True),

("x", 0.),("y", 0.4e-6),("monitor type", "linear x"),

("frequency points", 10.0)])

fdtd.addpower(properties=props)

fdtd.save("fdtd_file.fsp")

fdtd.run()

#Return raw E field data

Ex = fdtd.getdata("profile","Ex")

f = fdtd.getdata("profile","f")

x = fdtd.getdata("profile","x")

y = fdtd.getdata("profile","y")

print('Frequency field profile data Ex is type', type(Ex),' with shape', str(Ex.shape ))

print('Frequency field profile data f is type', type(f), 'with shape', str(f.shape ))

print('Frequency field profile data x is type', type(x), 'with shape', str(x.shape ))

print('Frequency field profile data y is type', type(y), 'with shape', str(y.shape ))

Returns

Frequency field profile result Ex is type <class 'numpy.ndarray'> with shape (101, 61, 1, 5) Frequency field profile result f is type <class 'numpy.ndarray'> with shape (5, 1) Frequency field profile result x is type <class 'numpy.ndarray'> with shape (101, 1) Frequency field profile result y is type <class 'numpy.ndarray'> with shape (61, 1)

Datasets

Datasets are relevant results that have been packaged in a form that makes it possible to readily visualize, and explore in Lumerical.

Rectilinear dataset

- Scalar attribute: [Nx;Ny;Nz;Np1;Np2;...;Npn

- ]

- Vector attribute: [Nx;Ny;Nz;Np1;Np2;...;Npn;3

- ]

- Tensor attribute: [Nx;Ny;Nz;Np1;Np2;...;Npn;9

- ]

where Nk,k=x,y,z

are the lengths of the coordinate vectors, and Npi is the length of the ith parameter. If the dataset is 2D or 1D then you will have singleton dimensions, so that one or multiple Nk=1

. These dimensions can be removed with the pinch command.

Unstructured spatial datasets are similar but contain the connectivity of the gridpoints as a spatial attribute. These are used extensively in the Finite Element solvers CHARGE, HEAT, FEEM, and DGTD.

Spatial datasets passed to the python environment will be converted to dictionaries, with keys associated to the various attributes and parameters. Since the attributes are are matrices they will converted to numpy arrays. Furthermore they will have a special metadata tag 'Lumerical_dataset' which allows their structure to be preserved when performing a roundtrips back to the Lumerical environment. When passing a dictionary from python to Lumerical it will be converted into an arbitrary unstructured dataset, unless it has the metadata section. Use the getresult() method to return datasets.

with lumapi.FDTD('fdtd_file.fsp') as fdtd:

#Return 2 different types of rectilinear datasets

T, time = fdtd.getresult("power", "T"), fdtd.getresult("time","E")

#Create an unstructured dataset

fdtd.eval('x = [0;1;2];y = [0;sqrt(3);0];z = [0;0;0];C = [1,3,2];ds = unstructureddataset(x,y,z,C);')

ds = fdtd.getv('ds')

print('Transmission result T is type', type(T),' with keys', str(T.keys()) )

print('Time monitor result E is type', type(time),' with keys', str(time.keys()) )

print('Unstructured dataset is type', type(ds),' with keys', str(ds.keys()) )

Returns

Transmission result T is type <class 'dict'> with keys dict_keys(['lambda', 'f', 'T', 'Lumerical_dataset']) Time monitor result E is type <class 'dict'> with keys dict_keys(['t', 'x', 'y', 'z', 'E', 'Lumerical_dataset']) Unstructured dataset is type <class 'dict'> with keys dict_keys(['x', 'y', 'z', 'connectivity', 'Lumerical_dataset'])

Related articles

- Python API overview

- Session management - Python API

- lumapi.getv - Python API method

- Using the Python API in the nanowire application example

- lumapi.putv - Python API method

Recently viewed articles

- Python API overview

- Script commands as methods - Python API

- Introduction to Lumerical datasets

- lumapi.putv - Python API method

- Session management - Python

Interop Server - Remote API

With Lumerical 2023 R1.2 and later, it is possible to use the Python API remotely. This documentation will show how to run the interop server required to use the remote API.

Note: the interop server is available on Linux only. Three shell scripts are provided, located in

[path to Lumerical]/api/interop-server/scripts/linux

- generate_certificate.sh

- interop_server_start.sh

- interop_server_stop.sh

Generate certificates

The interop server requires a certificate to encrypt the communication between the client and the server. You can use your own certificate, or use the script generate_certificate.sh to generate them and place them in the user’s home folder, in

$HOME/.config/Lumerical/interop_server_certs/

To run the script, move to the folder and run generate_certificate.sh. For example, if Lumerical 2023 R1.2 is installed in the default location:

cd /opt/Lumerical/v231/api/interop-server/script/linux ./generate_certificate.sh

Start and stop the interop server

To start the interop server, run interop_server_start.sh from the same folder.

./interop_server_start.sh

The server accepts the following options:

- interface IP address [default is 127.0.0.1]

- port [default is 8989]

- Path to server's key

- Path to server’s certificate

Note the parameters have to be specified in that order. For example, if you want to specify the server’s key, you have to specify the previous ones as well (IP address and port).

The interop server should be able to receive incoming connection on the specified port. You may want to open the port or disable the firewall on the machine. Please refer to your Linux distribution documentation. You will need to open a range of ports above the starting port in order to handle multiple connections. We suggest the starting port plus a range of at least 5.

To stop the server, run (from the same folder):

./interop_server_stop.sh

Lumopt

Photonic Inverse Design Overview - Python API

Motivation

Photonic integrated circuits are becoming increasingly complex with higher device density and integration. Typically, several competing design constraints need to be balanced such as performance, manufacturing tolerances, compactness etc. Traditional design cycles involved systematically varying a small set of characteristic parameters, simulating these devices to determine their performance and iterating to meet design requirements. Although this process is straightforward even an experienced designer struggles with their intuition once you introduce more than a few design parameters. To overcome human limitations a clever engineer may employ mathematical methods to begin anticipating strong candidates and more quickly converge on a satisfactory design.

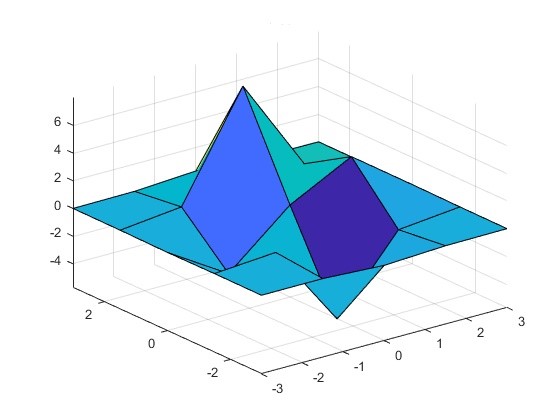

Let us consider gradient descent optimization; we can think of the parameters as coordinates in a design space, and the figure of merit as a function of these parameters represented by a surface in this space. First, we need to define a figure of merit that encapsulates the performance of our design. Theoretically, this could be anything, but as you will see we restrict ourselves to considering only power transfer in guided mode optics. Although, we do not know what the figure of merit surface looks like beforehand we can calculate it for a given set of parameters using a physics simulator such as FDTD. Here we see what the FOM surface of two parameters might look like.

Figure 1: Figure of Merit surface

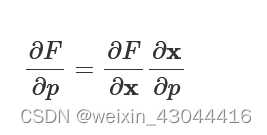

To plot an analytic function of two parameters it is straightforward to define a structured grid and calculate the output at each point; however, in our framework, each FOM calculation requires a computationally expensive simulation. Sweeping two parameters we could crudely map the FOM, but there is no guarantee that we have resolved important features, as the following figure illustrates.

Figure 2: Undersampled parameter space

Ultimately our goal is to find an ‘optimal’ solution, and systematically calculating the surface is unnecessarily expensive; furthermore, this process becomes intractable when the dimension of our space scales up to 10, 100, or 1000s of parameters. If instead, we introduced some overhead to calculate the gradients at each function call, we can determine the next set of parameters more carefully, and in this way, we take a shorter path to find the best solution.

Figure 3: Gradient descent streamlines

Figure 3 illustrates the gradient of the function using streamlines. The optimizer will move in the direction of the streamline, but we have not considered other important factors like step-size or preconditioning. Notice the local minima serve as collectors based on the starting position; yet, some starting positions will not reach these minima such as those along the edge of a ridge. In our implementation, we minimize the FOM, often called the cost function, which is equivalent to maximizing the FOM. For a further discussion on gradient descent methods please see the following articles.

- Gradient Descent: All you Need to Know

- Intro to optimization in deep learning: Gradient Descent

- Scipy Tutorial on Mathematical Optimization

Adjoint Method

Using gradient descent requires finding the partial derivatives with respect to each parameter by varying each individually to get a finite difference approximation of the local derivative. At each step, this results in 1+2N simulations which introduces some overhead but is more efficient than mapping the entire space. It should be noted that once we have an FOM defined we could use any heuristic optimization technique, but at this point, we present some higher mathematics that provides an elegant solution to the problem. Consider the gradient of the FOM with respect to the parameters.

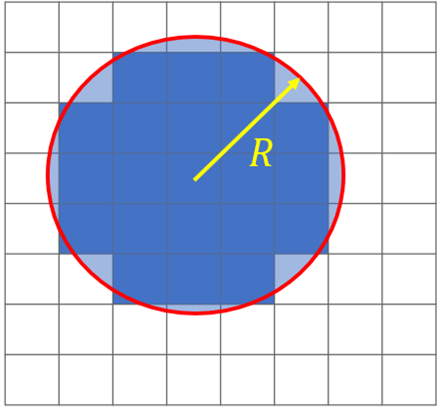

∇pF=(∂F∂p1,⋯,∂F∂pN)

The adjoint state method allows us to efficiently calculate the gradient regardless of the number of parameters. Using integration by parts the original differential equations are recast in terms of the dual space. This has the powerful advantage of requiring only two simulations, forward and adjoint, to calculate the gradient.

In FDTD, the general electromagnetics problem is described by a governing system of linear algebraic equations.

A(p)x=b(p)

Here A

contains Maxwell's equations, as well as the material permittivity and permeability of the underlying structures at each discretized point in space. The vector x describes all the fields in the simulation region, and b contains the free currents or equivalently the sources. The figure of merit function we have described above, is a function of design parameters, yet a more physically intuitive picture would be to consider F(p)=F(x(p))

.

∂F∂p=∂F∂x∂x∂p

If we find how the FOM varies as a function of the fields and determine the sensitivity of the fields to changes in the parameters we will know the gradient. FDTD will return ∂F/∂x

through the initial forward simulation, but to find how the fields change with each of the parameters ∂x/∂p

we must return to the governing equation. Taking the partial derivative with respect to p, we find the expression.

A∂x∂p=∂b∂p−∂A∂px

Recall that b is the illumination so ∂b/∂p

is the change in illumination as the parameters change. The term (∂A/∂p)x can be considered the change to the geometry as a result of changing the parameters. This expression for ∂x/∂p does not actually reduce our costs much, but by introducing a vector vT

the entire equation to reduces to a scaler equality.

vTA∂x∂p=vT(∂b∂p−∂A∂px)

Although, this simplifies the problem we still need to specify this vector vT

. If we require that vTA=∂F/∂x

we immediately obtain the quantity of interest, the design sensitivity.

∂F∂p=vT(∂b∂p−∂A∂px)

The implication of the above expression is that we can calculate the gradient using exactly two simulations. From the forward simulation, we get the fields, x

of the simulation volume, which arise due to the defined sources, b, in the presence of an optimizable geometry. We support a figure of merit that is calculated as the power through an aperture projected onto the field profile of a specified mode. In the adjoint simulation, the source is replaced with the adjoint source which takes the place of the FOM monitor. The resulting fields are again recorded everywhere in the optimization region to calculate v

which can be conceptualized as a time-reversal simulation to find the adjoint Maxwell’s equations.

ATv=∂FT∂x

For a more rigorous derivation and further information please see the following excellent resources.

- Adjoint shape optimization applied to electromagnetic design, Optics Express 2013 Vol. 21, Issue 18(2013)

- Optimizing Nanophotonics: from Photorecievers to Waveguides (THESIS), Christopher Lalau-Keraly

Lumopt - Shape vs Topology

This paradigm shift in the design cycle of passive photonic components we often refer to as Inverse Design. For this scheme to work the engineer provides the following:

- Simulation setup

- Parameterization of the design

- Figure of merit

- Reasonable starting point including footprint and materials

Once initialized the optimizer automatically traverses the higher dimensional design space, explores novel designs, and converges on devices with excellent performance. Furthermore, implemented techniques to bound solutions within manufacturable constraints and include performance considerations at process corners.

The adjoint methods outlined above are packaged as a python module called lumopt, which uses Lumerical FDTD as a client to solve Maxwell’s equations and scipy libraries to perform the optimization. The initial efforts were undertaken by Christopher Keraly, and this formulation is documented under Lumopt Read the Docs. There are some differences between the version hosted on Github and the lumopt module that ships with the Lumerical installer. We refer to the bundled Lumerical version here exclusively although there are many commonalities. Using this lumopt framework will require a solver license and an API license.

Lumopt comes in two different flavors - shape and topology optimization. With shape optimization one directly defines the geometry parameters to vary, and the bounds of these parameters. For example, the coordinates along the edge of a y-splitter. This allows the user to take a design from a good initial starting point and improve upon it; however, it takes a bit of work to define the geometry and the set of solutions which can be realized with this parameterization may be restrictive. Explicit parameterization like this means that the design rules for manufacturability can be easily enforced.

Topological inverse design requires users to simply define an optimizable footprint and the materials involved. As a result, the parameters are simply the voxels of this region which allows for very unintuitive shapes to be realized. Often inexplicably shapes and excellent performance is achieved, yet the structures tend to be harder to fabricate with photolithography owing to the small features that arise. We have taken several measures to ensure their manufacturability including smoothing functions and penalty terms to reduce small features.

For installation and the mechanics of lumopt please see the next section.

Getting Started with lumopt - Python API

Inverse design using lumopt can be run from the CAD script editor, the command line or any python IDE. In the first sub-section, we will briefly describe how to import the lumopt and lumapi modules; although an experienced python user can likely skip this part. As a starting point, it is recommended to run the AppGallery examples and use these as templates for your own project. It may be helpful following along with these files as you read this page or you may simply reference this page when running the examples later. In the project Init section, we outline the project inputs and necessary simulation objects that should be included. Important lumopt specific considerations are highlighted; however, a valid simulation set-up is imperative, so convergence testing should be considered a pre-requisite. Next important lumopt set-up classes, that should be updated to reflect your specifications are documented. Finally, a description of the scipy optimizer and lumopt optimization classes are presented. Shape and topology optimization primarily differ in how they handle the optimizable geometry which is the subject of the next page in this series.

Install

Running from the CAD script editor has the advantage that it requires no set-up and uses a Python 3 distro that ships with the Lumerical installer, so there is no need to install a separate python. This method automatically configures the workspace to find the lumopt and lumapi modules and would be the preferred method for users with little experience in python. Using your own version of python and running from an IDE or the command line may be preferable for more experienced users. To do this one simply needs to import lumopt and lumapi which will require specifying the correct path. Either pass an explicit path using importlibutil, or updating the search path permanently appending the PythonPath object. Advanced user working with numerous libraries might want to create a Lumerical virtual environment. For more information on these methods and os specific paths, see Session management - Python API.

| Note Lumerical ships with a version of Python 3, including lumapi and lumopt modules, already installed. To run any of our examples 'out of the box' simply run the scripts from the script file editor in the CAD. |

Project Init

Base Sim

The base simulation needs to be defined using one of the following options

- Predefined simulation file - Initialize a python variable that specifies the path to the base file. Example Grating coupler.

base_sim = os.path.join(os.path.dirname(__file__), 'grating_base.fsp')

- An lsf set-up script - Create a python variable using the load_from_lsf function. Example Waveguide crossing.

from lumopt.utilities.load_lumerical_scripts import load_from_lsf

crossing_base = load_from_lsf('varFDTD_crossing.lsf')

- Callable python code that does the set-up using the API - This can be a function defined in the same file or an imported function. Example Y-branch.

sys.path.append(os.path.dirname(__file__)) #Add current directory to Python path from varFDTD_y_branch import y_branch_init_ #Import y_branch_init function from file y_branch_base = y_branch_init_ #New handle for function

Each method produces a file which the optimizer updates and runs. Since the resulting project files should be equivalent; the method each user employs is a matter of preference or convenience.

Required Objects

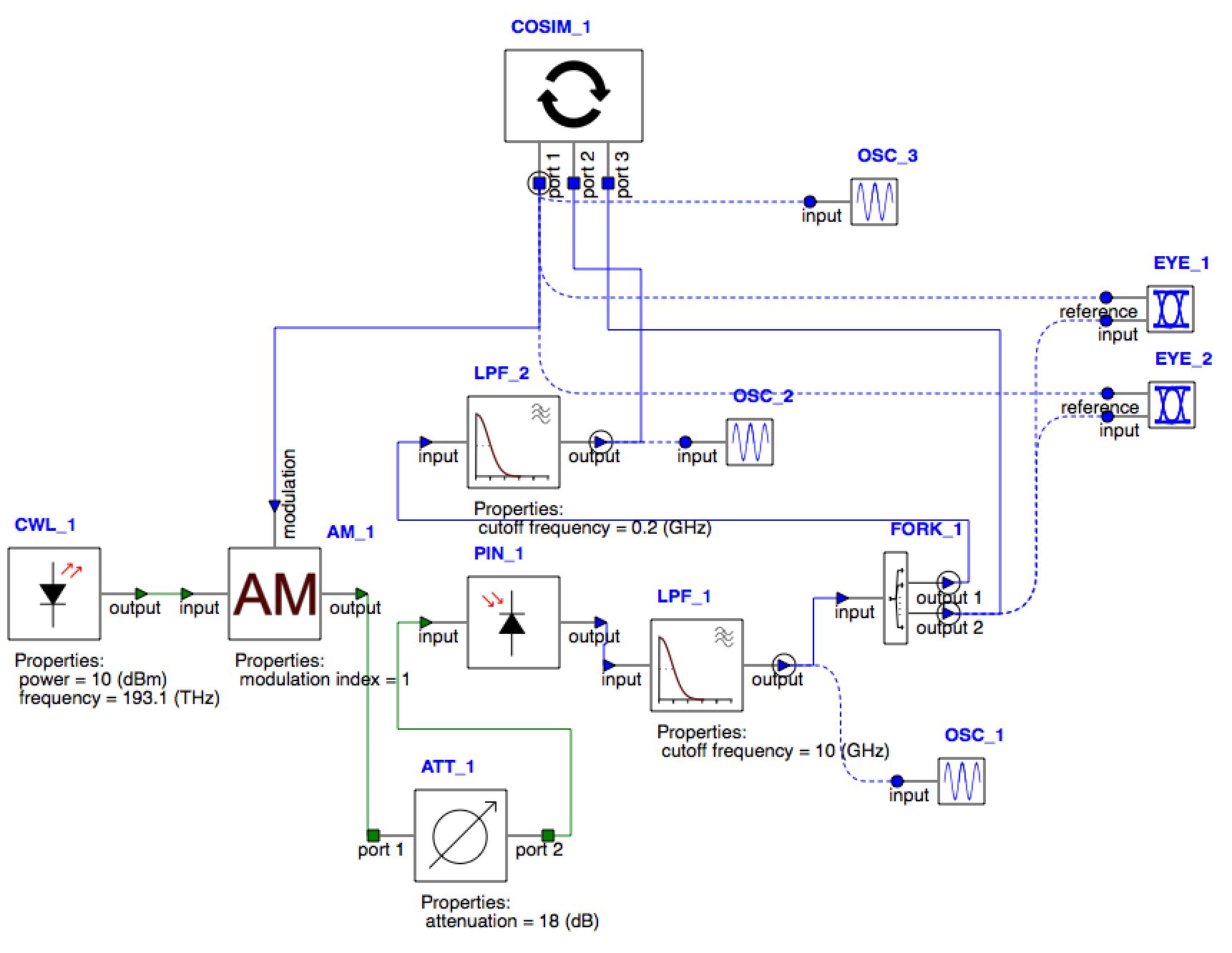

In the varFDTD, and FDTD base simulation it is also necessary to provide input/output geometry and define the following simulation objects that are used by lumopt.

Figure 1: Required simulation inputs

- A mode source - Typically named source, but can be set in the Optimization class.

- A mesh overide region covering the optimization volume - This is a static object, and the pixel/voxel size should be uniform.

- A frequency monitor over the optimization volume - Should be named opt_field, these field values are important for the adjoint method.

- A frequency monitor over the output waveguides - Used to calculate the FOM. The name of this monitor is passed to the ModeMatch class.

The mode source should have a large enough span and the modes should be compared to expectations see FDE convergence. This is used as the forward source. A mesh override is placed over the optimization region to ensure that a fine uniform grid covers this space, and the opt_field monitor is used to extract the fields in this region. The FOM monitor should be aligned to the interface of a mesh cell to avoid interpolation errors; therefore, it is a good idea to have a mesh override co-located with the FOM monitor. In the adjoint simulation, the adjoint source will take the place of the FOM monitor. Passing the name of the FOM monitor, to modematch class, allows multiple FOM monitors to be defined in the same base file which is helpful for SuperOptimization.

Set-up Classes

Two important lumopt classes that should be updated with your parameters are wavelengths and modematch. These are used to define the spectrum and mode number(or polarization) of the FOM respectively. It should be noted here that the FOM we accept is power coupling of guided modes. Other figures of merit, such as optimizing the target phase or power to a specified grating order are not supported. To compute the broadband figure of merit we take the mean of the target minus the error using the p-norm.

F=(1λ2−λ1∫λ2λ1|T0(λ)|pdλ)1/p−(1λ2−λ1∫λ2λ1|T(λ)−T0(λ)|pdλ)1/p

where

- T0

- is the target_T_fwd

- λ1 and λ2

- are the lower and upper limits of the wavelength points

- T

- is the actual mode expansion power transmission

- p

- is the value of the generalized p-norm

Wavelengths

Defines the simulation bandwidth, and wavelength resolution. Defining the target FOM spectrum is done in modematch.

from lumopt.utilities.wavelengths import Wavelengths

class Wavelengths(start,

stop,

points)

: start: float

Shortest wavelength [m]

: stop: float

Longest wavelength [m]

: points: int

The number of points, uniformly spaced including the endpoints.

Example

wavelengths = Wavelengths(start = 1260e-9, stop = 1360e-9, points = 11)

ModeMatch

This class is used to define target mode, propagation direction, and specify the broadband power coupling.

from lumopt.figures_of_merit.modematch import ModeMatch

class ModeMatch(monitor_name,

mode_number,

direction,

target_T_fwd,

norm_p,

target_fom)

: monitor_name: str

Name of the FOM monitor in the file.

: mode_number : str or int

Used to specify the mode.

If the varFDTD solver is used:

- ‘fundamental mode’

- int - user select mode number

If the FDTD solver is used:

- 'fundamental mode'

- 'fundamental TE mode'

- 'fundamental TM mode'

- int - user select mode number

: direction : str

The direction is determined by the FDTD coordinates; for mode traveling in the positive direction the direction is forward.

- 'Backward'

- 'Forward'

: multi_freq_source: boolean, optional

Should only be enabled by advanced users. See frequency Frequency dependent mode profile for more info. Default = False

: target_T_fwd: float or function

A function which will take the number of Wavelengths points and return values [0,1]. Usually passed as a lambda function or a single float value for single wavelength FOM. To specify a more advanced spectrum one can define a function, it may be helpful to use, numpy windows as a template.

: norm_p: float

Is the generalized p-norm used in the FOM calculation. The p-norm, with p≥1

allows the user to increase the weight of the error. Since p=1

provides a lower bound on this function, a higher p-number will increase the weight of the error term. Default p =1.0

: target_fom: float

A target value for the figure of merit. This will change the behavior of the printing and plotting only. If this is enabled, by setting a value other than 0.0, the distance of the current FOM is given. Default = 0.0

Example

class ModeMatch(monitor_name = 'fom', mode_number = 3, direction = 'Backward', target_T_fwd = lambda wl: np.ones(wl.size), norm_p = 1)

Optimization Classes

Here we describe the generic ScipyOptimizer wrapper, and lumopt Optimization class which is used to encapsulate the project.

ScipyOptimizer

This is a wrapper for the generic and powerful SciPy optimization package.

from lumopt.optimizers.generic_optimizers import ScipyOptimizers

Class ScipyOptimizers(max_iter,

method,

scaling_factor,

pgtol,

ftol,

scale_initial_gradient_to,

penalty_fun,

penalty_jac)

: max_iter: int

Maximum number of iterations; each iteration can make multiple figure of merit and gradient evaluations. Default = 100

: method: str

Chosen minimization algorithm; experimenting with this option should only be done by advanced users. Default = ‘L-BFGS-B'

: scaling_factor: none, float, np.array

None, scalar or a vector the same length as the optimization parameters. This is used to scale the optimization parameters. As of 2021R1.1, the default behavior in shape optimization is to automatically map the parameters the range [0,1] within the optimization routines; which was always the case in topology. The bounds, defined in the geometry class, or eps_min/eps_max are used for this. Default = None

: pgtol: float

The iteration will stop when max(|proj gi| i = 1, ..., n)<=pgtol|![]()

where gi

is the i-th component of the projected gradient. Default = 1.0e-5

: ftol: float

The iteration stops when ((fk−fk+1)/max(|fk| , |fk+1| , 1))<=ftol

. Default = 1.0e-5

: scale_initial_gradient_to: float

Enforces a rescaling of the gradient to change the optimization parameters by at least this much; the default value of zero disables automatic scaling. Default = 0.0

: penalty_fun: function, optional

Penalty function to be added to the figure of merit; it must be a function that takes a vector with the optimization parameters and returns a single value. Advanced feature. Default = None

:penalty_jac: function, optional

The gradient of the penalty function; must be a function that takes a vector with the optimization parameters and returns a vector of the same length. If a penalty_fun is included with no penalty_jac, lumopt will approximate the derivative. Advanced feature. Default = None

Example

optimizer = ScipyOptimizers(max_iter = 200,

method = 'L-BFGS-B',

scaling_factor = 1.0,

pgtol = 1.0e-5,

ftol = 1.0e-5,

scale_initial_gradient_to = 0.0,

penalty_fun = penalty_fun,

penalty_jac = None)

Optimization

Encapuslates and orchestrates all of the optimization pieces, and routines. Calling the opt.run method will perform the optimization.

from lumopt.optimization import Optimization

class Optimization(base_script,

wavelengths,

fom,

geometry,

optimizer,

use_var_fdtd,

hide_fdtd_cad,

use_deps,

plot_history,

store_all_simulations,

save_global_index,

label,

source_name)

: base_script: callable, or str

Base simulation - See project init.

- Python function in the workspace

- String that points to base file

- Variable that loads from lsf script

: wavelengths: float or class Wavelengths

Provides the optimization bandwidth. Float value for single wavelength optimization and Wavelengths class provides a broadband spectral range for all simulations.

: fom: class ModeMatch

The figure of merit FOM, see ModeMatch

: geometry: Lumopt geometry class

This defines the optimizable geometry, see Optimizeable Geometry

: optimizer: class ScipyOptimizers

See ScipyOptimizer for more information.

: hide_fdtd_cad: bool

Flag to run FDTD CAD in the background. Default = False

: use_deps: bool

Flag to use the numerical derivatives calculated directly from FDTD. Default = True

: plot_history: bool

Plot the history of all parameters (and gradients). Default = True

: store_all_simulations: bool

Indicates if the project file for each iteration should be stored or not. Default = True

: save_global_index: bool

Flag to save the results from a global index monitor to file after each iteration (used for visualization purposes). Default = False

: label: str, optional

If the optimization is part of a super-optimization, this string is used for the legend of the corresponding FOM plot. Default = None

: source_name: str, optional

Name of the source object in the simulation project. Default = "source"

Example

opt_2d = Optimization(base_script = base_sim_2d,

wavelengths = wavelengths,

fom = fom,

geometry = geometry,

optimizer = optimizer,

use_var_fdtd = False,

hide_fdtd_cad = True,

use_deps = True,

plot_history = True,

store_all_simulations = True,

save_global_index = False,

label = None)

Note(Advanced): For 2020R2 we exposed, a prototype user-requested debugging function that allows the user to perform checks of the gradient calculation. This is a method of the optimization class and can be called as follows.

opt.check_gradient(intitial_guess, dx=1e-3)

Where initial_guess is a numpy array that specifies the optimization parameters at which the gradient should be checked. The scalar parameter dx is used for the central finite difference approximation

Has two limitations; one the check performs an unnecessary adjoint simulation. Two the check_gradient is only available for regular optimizations and not superoptimizations of multiple FOMs.

Superoptimization

The + operator has been overloaded in the optimization class so it is trivial to simultaneously optimize:

- Different output waveguides and\or wavelength bands CWDM

- Ensure balanced TE\TM performance or suppress one over the other

- Robust manufacturing by simultaneously optimizing underetch\overtech\nominal variations.

- Etc ...

Simply adds the various Optimization classes together to create a new SuperOptimization object. Then you will call run on this object to co-optimize the various optimization definitions. Each FOM calculation requires 1 optimization object so the number of simulations at each iteration will be Nsim=2×NFOM

.

Example

opt = opt_TE + opt_TM opt.run(working_dir = working_dir)

Optimizable Geometry - Python API

This section covers the details of shape and topology based inverse design using lumopt. As prerequisite please familiarize yourself with photonic inverse design and understand the basics of the lumopt workflow.

The following examples can help provide context to the information presented here.

Shape

Shape based optimization allows users to take strong existing designs and improve their performance. Constraints can be easily included in the parameterization such as design rules for manufacturing. To do this the user needs to parameterize their geometry, through a shape function s(p)

and provide bounds on the p values. This is supported with a handful of geometry classes FunctionDefinedPolygon which accepts a python function of parameters, and ParamterizedGeometry which accepts a python API function. FDTD is used to calculate the FOM(s(p))

.

Figure 1: Shape parameters and the shape function

The Adjoint method motivation section described how the parameters enter the equations through the permittivity. When users define a parameterized geometry an additional step, we refer to as the d_eps_calculation, is required to determine how varying each parameter changes the permittivity in Maxwell’s equations and the subsequent shape derivative. This is accomplished by meshing the simulation and returning the ϵ(x,y,z)

, but without running the solver. Since this must be done for each parameter, including more parameters comes at some extra cost; although, this should be small compared to running the simulation; however, in large 3D simulations this meshing step can be intensive even if it is brief. If you need assistance improving the performance, and parallelization of the d_eps calculation please reach out to Lumerical support. For more information on the shape derivative approximation method see Chpt 5 of Owen Miller's thesis.

It is important to consider that the choice of parameters may be too restrictive, and that gradient based techniques are inherently dependent on initial conditions. It not always obvious what a better choice of parameters and initial conditions may be so some experimentation may be required to achieve the greatest performance.

Shape Geometry Classes

For each geometry class the user must pass the shape function, parameter bounds, geometry specifications and material properties. It is important to verify that your geometry varies smoothly and does not self-intersect for any combination of acceptable parameters as this can produce discontinuities in the gradient calculation. Spline points are not bounded in the same way the parameters are which can be problematic. When end points are clamped this may lead to discontinuities, employing "not-a-knot spline" usually avoids these errors. By visualizing different shapes realized from possible sets of bounded parameters most of these errors can be recognized and avoided.

Functiondefinedpolygon

This python class takes a user defined function of the optimization parameters. The function defines a polygon by returning a set of vertices, as a numpy array of coordinate pairs np.array([(x0,y0),…,(xn,yn)]), where the vertices must be ordered in a counter-clockwise direction.

from lumopt.geometries.polygon import FunctionDefinedPolygon

class FunctionDefinedPolygon(func,

initial_params,

bounds,

z,

depth,

eps_out,

eps_in,

edge_precision,

dx,

deps_num_threads)

: func : function

function(parameters) - Returns s(p)

an np.array([(x0,y0),…,(xn,yn)]) of coordinate tuples ordered CCW.

Typically, a subset of polygon points p

will be used as parameters with a spline technique to up-sample these values and create a smoother curve. This user defined function is a common source of error.

: initial_params: np.array

Parameters in a form accepted by the func, that define the initial geometry. Size = [1, n_param]

: bounds: np.array

A set of tuples that define the min and max of each parameter. Size = [2, n_param]

: z : float

Center of polygon along the z-axis. Default = 0.0

: depth: float

Span of polygon along the z-axis.

: eps_out: float

permittivity of the material around the polygon.

: eps_in: float

Permittivity of the polygon material.

: edge_precision: int

Number of quadrature points along each edge for computing the FOM gradient using the shape derivative approximation method.

: dx: float > 0.0

Step size for computing the FOM gradient using permittivity perturbations. Default = 1e-5

: deps_num_threads: int, optional

Number of threads to be uses in the d_eps calculation. This utilizes the job manager, and changes your resource configuration. By default this will be performed serially, but it is possible to utilize more cores simultaneously by setting this greater than 1. This is an advanced, experimental feature with some some additional overhead associated; thus, testing and benchmarking is required. In the event of an unexpected termination one will have to manually fix the update. Resource configuration elements and controls. Default = 1

ParameterizedGeometry

from lumopt.geometries.parameterized_geometry import ParameterizedGeometry

class ParameterizedGeometry(func,

initial_params,

bounds,

dx,

deps_num_threads)

: func : python API function

function(parameters, fdtd, only_update, (optional arguments))

This function is less concise, but more intuitive and flexible than FunctionDefinePolygon. It allows users to call the API methods, in the same way you would use lsf script commands to set-up and update the geometry rather than passing the (x,y) vertices. The flag 'only_update' is used to avoid frequent recreations of the parameterized geometry which reduces the performance. When the flag is true, it is assumed that the geometry was already added at least once to the CAD. For an example of it's usage see Inverse design of grating coupler.

: initial_params: np.array

Parameters in a form accepted by the func, that define the initial geometry. Size = [1, n_param]

: bounds: np.array

A set of tuples that define the min and max of each parameter. Size = [2, n_param]

: dx: float > 0.0

Step size for computing the FOM gradient using permittivity perturbations. Default = 1e-5

: deps_num_threads: int, optional

Number of threads to be uses in the d_eps calculation. This utilizes the job manager, and changes your resource configuration. By default this will be performed serially, but it is possible to utilize more cores simultaneously by setting this greater than 1. This is an advanced, experimental feature with some some additional overhead associated; thus, testing and benchmarking is required. In the event of an unexpected termination one will have to manually fix the update. Resource configuration elements and controls. Default = 1

Shape Considerations

From 2D to 3D

Shape optimization in 2D is supported through MODE varFDTD and FDTD. Using varFDTD will automatically collapse the material and geometric contributions in the z-direction to more accurately reflect the effective indices. FDTD simply uses the material index, ignoring the 3rd dimension. For in-plane integrated photonic components varFDTD provides the most straightforward route to accurate permittivity values. One can use FDTD in this situation as well, but determining the effective indices is up to the user. In situations where the propagation is not entirely in plane, such as with the grating coupler example, FDTD is required. To use varFDTD set the use_varFDTD = True in the optimization class.

In shape optimization it is straightforward to move from 2D to 3D since all the supported geometry classes are transferable. The only update required is to change the base file monitors and simulation region from 2D to 3D FDTD. Bulk material indices should be specified for eps_in and eps_out as the geometric contributions are automatically incorporated into the update equations. We have found strong correlation between high performing devices in 2D and device performance in 3D; however, expect the FOM to be reduced when moving to 3D as new loss mechanisms are considered. Due to the complexity and time required for 3D optimizations we recommend starting in 2D and then importing those optimized structures into 3D for a final abbreviated optimization run.

GDS Export

When exporting the optimized geometry we recommend using the encrypted GDS export script, outlined and available for download here:

Topology

In topology optimization the user does not need to parameterize the geometry, which simplifies the workflow; however, the simulation needs to perform a few more complicated steps to produce physically realizable devices. To set up topology optimization simply define the optimizable footprint and materials in the relevant 2D or 3D geometry class, outline below. Each FDTD mesh cell in the discretized optimization volume becomes a parameter. This technique often scales to thousands of parameters, but with the adjoint method we still only need 2 simulations per iteration to calculate the gradient. Furthermore, since the permittivity values are the optimization parameters, there is a direct link to Maxwell’s equations so there is no need to perform a d_eps as calculation.

Figure 2: Topology optimization schematic

In the initialization section we describe important aspects of the setup, and how to provide initial conditions. Once the optimization is running it proceeds in two or three steps. First the greyscale phase where the parameters vary continuously between the core and cladding index. Next, a binarization phase is run to force the mesh cells to take either the core or cladding index. Finally, an optional design for manufacturing step is implemented. In this step a penalty function is added to the FOM. The magnitude of the penalty function is determined by the degree to which the minimum feature size constraint is violated. Finally, we will discuss how to move from 2D to 3D, and export your designs to GDS .

Topology Geometry Classes

TopologyOptimization2D

This class is used in 2D simulations.

from lumopt.geometries.topology import TopologyOptimization2D

class TopologyOptimization2D(params,

eps_min,

eps_max,

x, y, z,

filter_R,

eta,

beta,

min_feature_size)

: params : np.array

Initial parameters that define the initial geometry. Size = [n_x, n_y]

: eps_min : float

Permittivity of the core material

: eps_max: float

Permittivity of the cladding

: x: np.array

The x position of the parameters and FDTD unit cells in [m].

: y : np.array

The y positions of the parameters and FDTD unit cells in [m].

: z : float

Z position of the topology object in [m]. Default 0.0

: filter_R: float

The radius of the Heaviside filter in [m]. Outlined in greyscale. Default = 200e-9

: eta : float

Eta parameter of the Heaviside filter. Provides a shift of the binarization threshold towards eps_min if eta <0.5, and towards eps_max if eta >0.5. Default = 0.5

: beta: float

Beta parameter of the Heaviside filter. This is the starting value used, but the beta parameter is ramped up to ~1000 during the binarization phase. Default = 1

: min_feature_size: float, optional

Minimum feature size in the DFM phase. Features smaller than this value will be penalized in the final DFM stage. Must be filter_R < min_feature_size < 2.0 * filter_R. A value of 0.0 will disable the DFM phase. Default = 0.0

TopologyOptimization3DLayered

This class is used in 3D simulations, and operates in the same way as the 2D geometry with the exception that z is now a required nump array of points, and the optimization region is a volume. To reduce the field results to the XY plane there is an integration along z. The pattern of the layer is optimized for, but not each mesh cell individually: ie it is not a full 3D voxelated topological geometry, that feature is currently not supported.

from lumopt.geometries.topology import TopologyOptimization3DLayered

class TopologyOptimization3DLayered(params,

eps_min,

eps_max,

x, y, z,

filter_R,

eta,

beta,

min_feature_size)

: params : np.array

Initial parameters that define the initial geometry. Size = [n_x, n_y, n_z]

: eps_min : float

Permittivity of the cladding

: eps_max: float

Permittivity of the core material

: x: np.array

The x position of the parameters and FDTD unit cells in [m].

: y : np.array

The y positions of the parameters and FDTD unit cells in [m].

: z : np.array

The z position of the parameters and FDTD unit cells in [m].

: filter_R: float

The radius of the Heaviside filter in [m]. Outlined in greyscale. Default = 200e-9

: eta : float

Eta parameter of the Heaviside filter. Provides a shift of the binarization threshold towards eps_min if eta <0.5, and towards eps_max if eta >0.5. Default = 0.5

: beta: float

Beta parameter of the Heaviside filter. This is the starting value used, but the beta parameter is ramped up to ~1000 during the binarization phase. Default = 1

: min_feature_size: float, optional

Minimum feature size in the DFM phase. Features smaller than this value will be penalized in the final DFM stage. Must be filter_R < min_feature_size < 2.0 * filter_R. A value of 0.0 will disable the DFM phase. Default = 0.0

Running

This is section describes the various stages of topology optimization.

Figure 3: Topology optimization steps

Initialization

It is important the mesh defined in the FDTD simulation precisely matches the optimization parameters defined in Python. To do this make sure a uniform mesh is defined over the optimizable footprint. The python parameters may be defined as follows.

#Footprint and pixel size size_x = 3000 #< Length of the device (in nm). Longer devices typically lead to better performance delta_x = 25 #< Size of a pixel along x-axis (in nm) size_y = 3000 #<Extent along the y-axis (in nm). When using symmetry this should half the size. delta_y = 25 #< Size of a pixel along y-axis (in nm) size_z = 240 #< Size of device in z direction delta_z = 40 #< Size of a pixel along z-axis (in nm) x_points=int(size_x/delta_x)+1 y_points=int(size_y/delta_y)+1 z_points=int(size_z/delta_z)+1 #Convert to SI position vectors x_pos = np.linspace(-size_x/2,size_x/2,x_points)*1e-9 y_pos = np.linspace(-size_y/2,size_y/2,y_points)*1e-9 z_pos = np.linspace(-size_z/2,size_z/2,z_points)*1e-9

In the lumerical script that generates the base file ensure that the grid matches. Alternative methods such as a python function or base file are also allowed, but the grid and parameter values will also need to match.

#Define footprint

opt_size_x=3e-6;

opt_size_y=3e-6;

opt_size_z=240e-9;

#Define pixel size

dx = 25e-9;

dy = 25e-9;

dz = 40e-9;

addmesh;

set('name','opt_fields_mesh');

set('override x mesh',true); set('dx',dx);

set('override y mesh',true); set('dy',dy);

set('override z mesh',true); set('dy',dz);

set('x',0); set('x span',opt_size_x);

set('y',0); set('y span',opt_size_y);

set('z',0); set('z span',opt_size_z);

To set the initial conditions you can pass an np.array the same size as the parameters. If you want start with a uniform permittivity value across the optimizable domain this is straightforward.

##Initial conditions #initial_cond = np.ones((x_points,y_points)) #< Start with the domain filled with eps_max #initial_cond = 0.5*np.ones((x_points,y_points)) #< Start with the domain filled with (eps_max+eps_min)/2 #initial_cond = np.zeros((x_points,y_points)) #< Start with the domain filled with eps_min

Alternatively, define an initial structure in the base simulation, and pass None. In this case the optimizer meshes the simulation to determine the initial parameter set. This should be a structure group containing the relevant geometry called initial_guess.

Figure 4: Topology optimization set-up

Greyscale

In the python optimization each cell corresponds to a parameter ρ∈[0,1]

which is linearly mapped to the ϵ

values in FDTD. A circular top hat convolution with a user specified radius is used to remove sharp corner, small holes or islands which can’t be manufactured through convolution. Depending on you manufacturing process, typical values for the filter radius will be between 50 and 500nm.

Figure 5: Top-hat convolution diagram

In this stage of the optimization the FOM error tends to very quickly reduce but may take 100’s of iterations to reach a minima. The values obtained during greyscale are typically very good, but the filtering process is imperfect, and there will be intermediate eps values and unproducible features via lithographic means. The following steps are used to produce realizable devices.

Binarization

During binarization the Heaviside filter changes the mapping of the python elements to FDTD permittivity. In the greyscale phase the beta parameter is 1, producing a linear mapping. During binarization the beta parameter is incrementally ramped up. The Heaviside filter is defined as follows, and ramping up the beta parameter converts it from a linear mapping to a step function.

ϵi=ϵlow+tanh(βη)+tanh(β(ρi−η))tanh(βη)+tanh(β(1−η))Δϵ

Figure 6: Heaviside filter

Ramping β

up will perturbs the system producing sharp peaks in the FOM. It is followed by a set of optimization iterations to adjust to the perturbation, and reduce the FOM error. It is possible to change the number of iterations between increments of Beta, by changing the opt.continuation_max parameter.

Figure 7: FOM during optimization steps

Design For Manufacturing (optional)

Even when using the Heaviside filter for spatial filtering, the topology optimization designs tend to create features that break process design rules. To ensure manufacturability we explored ways of explicitly enforcing specific minimum feature size constraints. Our implementation uses a technique published by Zhou et al. in 2015[1]. This paper was focused structural mechanics, so we had to make some small adjustments to make it work reliably for photonic devices.

The main idea is to come up with an indicator function which is minimal if the minimum feature size constraints are fulfilled everywhere. This term is then added as a penalty term to the FOM during optimization. To implement this phase pass a non-zero min_feature_size constraint to the geometry class.

Topology Considerations

From 2D to 3D

All dimensional considerations from shape apply to topology. We recommend using effective indices as calculated in varFDTD as the permittivity values in 2D opt, but one should work with FDTD for all topology optimizations.

When going from 2D to 3D in topology the performance does not correlate as well, as in shape based designs. That being said good 2D results demonstrate the possibility of producing strong 3D designs which is important. Performance improvements in 3D tend to proceed more quickly if one imports a design that is not fully binarized, which relaxes the abillity of the 3D optimizer to vary parameters. Importing partially binarized 2D designs into 3D is outlined below.

## First, we specify in which path to find the 2d optimization

path2d = os.path.join(cur_path,"[Name of 2D opt folder]")

## Next, check the log-file to figure out which iteration we should load from.

# It is usually best to load something mid-way through the binarization

convergence_data = np.genfromtxt(os.path.join(path2d, 'convergence_report.txt'), delimiter=',')

## Find the first row where the beta value (3rd column) is larger than beta_threshold (here 10)

beta_threshold = 10

beta_filter = convergence_data[:,2]>beta_threshold

convergence_data = convergence_data[beta_filter,:] #< Trim data down

iteration_number = int(convergence_data[0,0]-2)

## Load the 2d parameter file for the specified iteration

geom2d = TopologyOptimization2D.from_file(os.path.join(path2d, 'parameters_{}.npz'.format(iteration_number) ), filter_R=filter_R, eta=0.5)

startingParams = geom2d.last_params #< Use the loaded parameters as starting parameters for 3d

startingBeta = geom2d.beta #< Also start with the same beta value. One could start with 1 as well.

Export to GDS

Since the topology designed devices do not have a well defined shape we developed an alternate method to extract the GDSII designs using contours. This functionality is outlined in the following KX page.

Further Resources

Webinars

- Feb 2019 - Broadband shape

- Aug 2019 - Updates to Shape, Grating Couplers, and Intro to Topology Optimization

- April 2020 - Update to Topology Optimization

Examples

- GDS Pattern Extraction Using getcontours

- Inverse Design of an Efficient Narrowband Grating Coupler

- Broadband Grating Coupler Design Bandwidth vs Peak Efficiency

- Inverse Design of a Y-Splitter using Topology Optimization

- Topology Optimization of an O,C Band Splitter with min_feature_size Constraint

References

Application Examples

Using the Python API in the nanowire application example

This example demonstrates the feasibility of integrating Lumerical FDTD with Python using Application Programming Interface (API). In this example, we will set the geometry based on 2D Mie scattering example and then run the simulation using Python script. Once the simulation is finished, simulation results will be imported to Python, and plots comparing simulation and theoretical results as well as a plot of Ey intensity will be provided.

Requirements: Lumerical products 2018a R4 or newer

Note:

- Versions : The example files were created using Lumerical 2018a R4, Python 3.6 (and numpy), matplotlib 0.99.1.1, and Windows 7

- Working directory : It should be possible to store the files in any locations as desired. However, it is recommended to put the Lumerical and Python files in the same folder in order for the above script files to work properly. It is also important to check the Lumerical working directory has the correct path, see here for instructions to change the Lumerical working directory.

- Linux: /opt/lumerical/interconnect/api/python/

- Windows: C:\Program Files\Lumerical\FDTD\api\python

During the Lumerical product installation process, a lumapi.py file should be automatically installed in this location.