1 Introduction

作为一个机器学习的小白,希望和karpathy 大神的这个课程,掌握机器学习的基础概念和方法。

2 任务

根据一个名字文件,做一个起名字的模型。

3 方案1

用统计的方法来实现,我们希望统计每个词的关联性,一个词后面接下个词的概率。

3.1 思路

step1: 从文本上把所有的text读出来,然后看看有哪些字符;

lines = [line.strip() for line in open('names.txt', 'r')]

chars = [char for line in open('names.txt', 'r') for char in line.strip()]

unique_chars = sorted(list(set(chars)))

stoi = {char: index + 1 for index, char in enumerate(unique_chars)}

stoi['.'] = 0

step2: 映射字符和数字的查找表;

N = torch.zeros((27,27), dtype=torch.int32)

# 遍历输入数据,进行统计

for line in open('names.txt', 'r'):

line = '.' + line.strip() + '.'

for i in range(len(line) - 1):

c1 = stoi[line[i]]

c2 = stoi[line[i + 1]]

N[c1, c2] += 1

用imshow(N)查看一下数据的分布:

import matplotlib.pyplot as plt

%matplotlib inline

plt.imshow(N)

```python

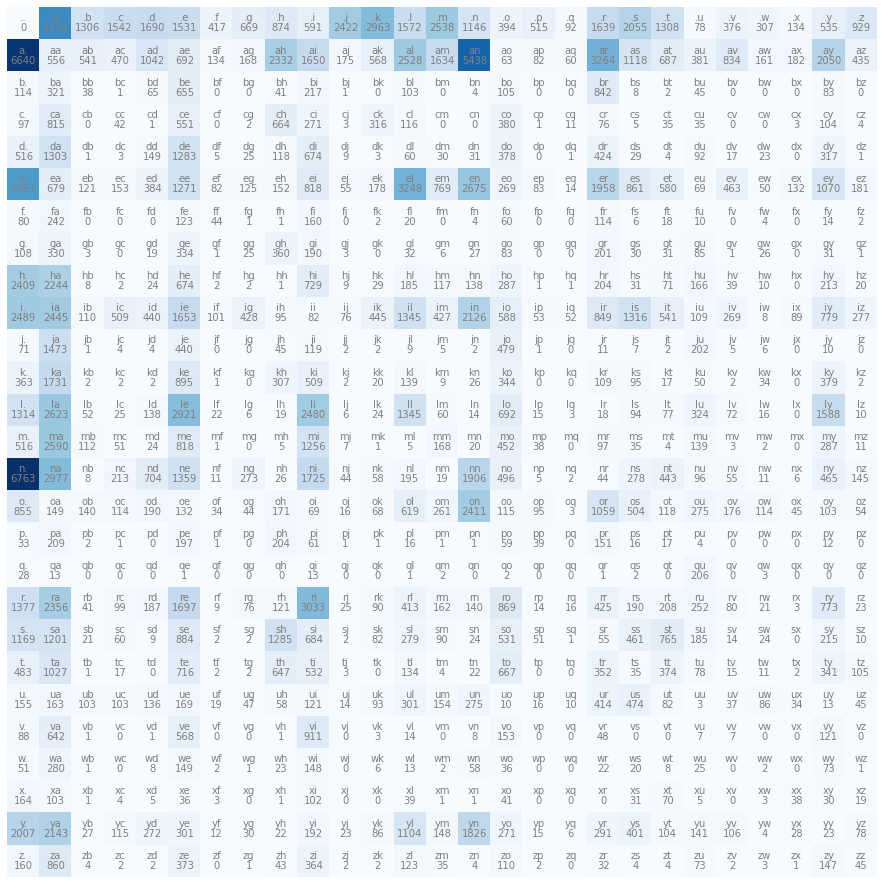

可以在这个工具的基础上,将具体的数字进行显示

```python

import matplotlib.pyplot as plt

%matplotlib inline

plt.figure(figsize=(16,16))

plt.imshow(N, cmap='Blues')

# 在每个色块上添加次数和字母

for i in range(N.shape[0]):

for j in range(N.shape[1]):

count = N[i, j]

letter_i = itos[i]

letter_j = itos[j]

chstr = letter_i + letter_j

plt.text(j, i, chstr, ha="center", va="bottom", color="gray")

plt.text(j, i, N[i, j].item(), ha="center", va="top", color="gray")

plt.axis('off')

step3: 用key-value统计一下字符搭配的概率分布;

# 计算每行的总次数

P = (N + 1).float()

P /= P.sum(dim=1, keepdim=True)

step4: 按照概率分布进行随机,取名字;

ix = 0

g = torch.Generator().manual_seed(2147483647)

for i in range(10):

out = []

ix = 0

while True:

weights = P[ix]

# 采用放回抽样的方法产生新样本

ix = torch.multinomial(weights, num_samples = 1, replacement=True, generator=g).item()

out.append(itos[ix])

if ix == 0:

break

print(''.join(out))

step5: 对我们的方法进行评估

我们需要搞到验证集,验证集中的样本的ground truth 概率为1,而概样本在我们的系统中则是一个独立分布,也就是p(s1|s2|s3|s4)=p(s1)*p(s2)*p(s3)*p(s4),采用log的方法。

Cross-entropy 的计算公式如下:

H ( p , q ) = − ∑ i p ( i ) log q ( i ) H(p,q) = -\sum_{i} p(i) \log q(i) H(p,q)=−i∑p(i)logq(i)

其中,

p

p

p表示真实标签的概率分布,

q

q

q表示模型预测的概率分布。

一开始我们想到,对整段预测进行评估,这样实际上是不科学的,我们的预测器是根据前一个字符去预测后一个字符,所以我们应该要计算的是我们预测的cross-entropy

sample = ".karpathy." # 样本字符串,末尾添加了'.'字符

err = 0

for i in range(len(sample) - 1):

char = sample[i]

next_char = sample[i + 1] # 获取下一个字符

ix1 = stoi[char]

ix2 = stoi[next_char]

p = P[ix1, ix2]

err -= torch.log(p)

print(err)

修改成针对字符预测的cross-entropy

log_likelihood = 0.0

num_chars = 0

lines = ["andrejq"] # 样本文本行列表

for line in lines:

words = '.' + line.strip() + '.' # 在每行的开头和结尾添加'.'字符

for ch1, ch2 in zip(words, words[1:]):

ix1 = stoi[ch1]

ix2 = stoi[ch2]

log_likelihood += torch.log(P[ix1, ix2])

num_chars += 1

avg_log_likelihood = -log_likelihood / num_chars

print("Average Log-Likelihood:", avg_log_likelihood)

print("Perplexity:", torch.exp(avg_log_likelihood))

3.2 采用神经网络进行预测

step1: 将数据集分成training, test, validation

from sklearn.model_selection import train_test_split

train_set, temp_set = train_test_split(lines, test_size=0.2, random_state=42)

test_set, val_set = train_test_split(temp_set, test_size=0.5, random_state=42)

step2: 输入字符变成one-hot encoding, 使用batch-size进行training;

import torch.nn.functional as F

# # creating the training set of bigrams

xs, ys = [], []

for w in train_set[:1]:

chs = ['.'] + list(w) + ['.']

# zip会自动判断,是否后面还有元素

for ch1, ch2 in zip(chs, chs[1:]):

ix1 = stoi[ch1]

ix2 = stoi[ch2]

print(ch1, ch2)

xs.append(ix1)

ys.append(ix2)

#Tensor默认是float32

xs = torch.tensor(xs)

ys = torch.tensor(ys)

num_classes = 27

xenc = F.one_hot(xs, num_classes=num_classes).float()

yenc = F.one_hot(ys, num_classes=num_classes).float()

step3: 定义网络结构和初始化参数

# 创建权重和偏置张量

output_size = 27

input_size = 27

w = torch.empty(input_size, output_size, requires_grad=True)

b = torch.empty(1, output_size, requires_grad=True)

# 初始化权重和偏置

torch.nn.init.xavier_uniform_(w)

torch.nn.init.constant_(b, 0.0)

y = xenc @ w + b

y_prob = F.softmax(y, dim=1)

loss = F.cross_entropy(y, ys)

print(loss)

使用交叉熵的结果相同

# 计算样本级别的交叉熵损失

sample_losses = -torch.sum(yenc * torch.log(y_prob), dim=1)

# 计算批次平均损失

loss2 = torch.mean(sample_losses)

# 也可以更加简单一点,并且加上参数权重

loss = -probs[-1, ys].log().mean() + 0.01 * (w**2).sum()

使用softmax将结果转换成概率分布

y = xenc @ w + b

y_prob = F.softmax(y, dim=1).float()

counts = torch.exp(y)

y_prob2 = counts / counts.sum(dim=1, keepdim=True).float()

step4: 训练和验证

for i in range(100):

# 前向传播

y = xenc @ w + b

y_prob = F.softmax(y, dim=1)

# 计算 cross-entropy 损失

loss = -torch.mean(torch.sum(yenc * torch.log(y_prob), dim=1))

# loss = F.cross_entropy(y, ys)

# 反向传播

loss.backward()

# 更新权重和偏置

learning_rate = 0.1

with torch.no_grad():

w -= learning_rate * w.grad

b -= learning_rate * b.grad

# 清空梯度

w.grad.zero_()

b.grad.zero_()

# 打印中间结果

print(f"Iteration {i+1}:")

print(f" Loss: {loss.item():.4f}")

print(f" Probability of true class: {y_prob[0, ys[0]].item():.4f}")

关注初始error:

一开始是概率是均匀分布,那么Probability of true class=1/27=0.0370

对应cross-entropy=-log(0.037) = 3.31

Iteration 1:

Loss: 3.3133

Probability of true class: 0.0370

Iteration 2:

Loss: 3.2850

Probability of true class: 0.0385

Iteration 3:

Loss: 3.2569

Probability of true class: 0.0400

Iteration 4:

Loss: 3.2289

Probability of true class: 0.0416

Iteration 5:

Loss: 3.2012

Probability of true class: 0.0432

Iteration 6:

Loss: 3.1736

Probability of true class: 0.0448

Iteration 7:

Loss: 3.1461

Probability of true class: 0.0466

Iteration 8:

Loss: 3.1189

Probability of true class: 0.0483

Iteration 9:

…

Probability of true class: 0.3994

Iteration 100:

Loss: 1.4113

Probability of true class: 0.4034

step5: 加入更多的样本:

# # creating the training set of bigrams

xs, ys = [], []

for word in train_set:

chs = ['.'] + list(word) + ['.']

# zip会自动判断,是否后面还有元素

for ch1, ch2 in zip(chs, chs[1:]):

ix1 = stoi[ch1]

ix2 = stoi[ch2]

print(ch1, ch2)

xs.append(ix1)

ys.append(ix2)

#Tensor默认是float32

xs = torch.tensor(xs)

ys = torch.tensor(ys)

num_classes = 27

xenc = F.one_hot(xs, num_classes=num_classes).float()

yenc = F.one_hot(ys, num_classes=num_classes).float()

# 创建权重和偏置张量

output_size = 27

input_size = 27

w = torch.empty(input_size, output_size, requires_grad=True)

b = torch.empty(1, output_size, requires_grad=True)

# 初始化权重和偏置

torch.nn.init.xavier_uniform_(w)

torch.nn.init.constant_(b, 0.0)

num = xs.nelement()

for i in range(1000):

# 前向传播

y = xenc @ w + b

y_prob = F.softmax(y, dim=1)

# 计算 cross-entropy 损失

loss = -y_prob[torch.arange(num), ys].log().mean() + 0.01*(w**2).mean()

# loss = F.cross_entropy(y, ys)

# 反向传播

w.grad = None

b.grad = None

loss.backward()

learning_rate = 10

w.data -= learning_rate * w.grad

b.data -= learning_rate * b.grad

# 打印中间结果

print(f"Iteration {i+1}:")

print(f" Loss: {loss.item():.4f}")

print(f" Probability of true class: {y_prob[0, ys[0]].item():.4f}")

进行预测

ix = 0

g = torch.Generator().manual_seed(2147483647)

for i in range(10):

out = []

ix = 0

while True:

input = torch.tensor([ix])

x = F.one_hot(input, num_classes=num_classes).float()

y = x @ w + b

counts = torch.exp(y)

y_prob = counts / counts.sum(dim=1, keepdim=True)

ix = torch.multinomial(y_prob, num_samples = 1, replacement=True, generator=g).item()

out.append(itos[ix])

if ix == 0:

break

print(''.join(out))

mor.

axuaninaymoryles.

kondlaisah.

anchshizarie.

odaren.

iaddash.

h.

jionatien.

egushk.

h.

1万+

1万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?