I studied ResNet a few days ago, so I am writing this post to make what I learned stick.

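- While studying, I found that the material I had collected was incomplete, which slowed me down. Be patient: technical topics like this cannot be mastered overnight.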

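There are a few concepts to understand first: what is a residual network, and how does it differ from the plain network structures that came before?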

- Residual network:

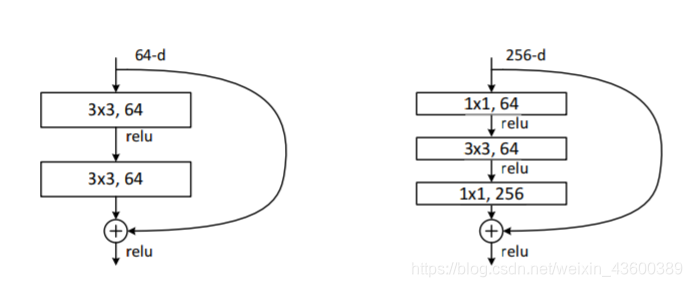

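The figure above shows in detail what a residual network is. Some readers may find it hard to follow, so here is a description in words. A residual network takes the earlier plain structure and adds a residual block (a shortcut connection) to it, as shown in the figure below: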

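Here, what the stacked layers learn can be written as the residual F(x) = H(x) - x, where x is the input and H(x) is the mapping the block as a whole should produce. This is only the derivation step.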

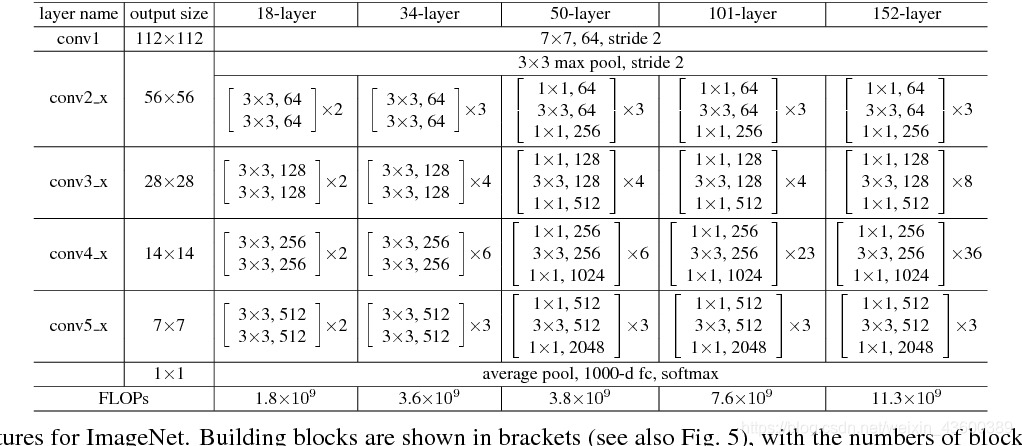
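
As a rough illustration of the relation F(x) = H(x) - x, the sketch below wraps an arbitrary branch F with a shortcut so that the wrapper computes F(x) + x. It uses plain Python lists instead of tensors, and `with_shortcut`, `zero_branch` and `halve` are hypothetical names made up for this example. It also hints at why the formulation is convenient: when the branch outputs zeros, the whole block reduces to the identity.

```python
def with_shortcut(branch):
    """Return H such that H(x) = branch(x) + x, element-wise."""
    def h(x):
        fx = branch(x)
        if len(fx) != len(x):
            raise ValueError("branch output must have the same shape as the input")
        return [f + xi for f, xi in zip(fx, x)]
    return h


def zero_branch(x):
    """A branch that contributes nothing: F(x) = 0."""
    return [0.0 for _ in x]


def halve(x):
    """A toy branch: F(x) = -x / 2."""
    return [-0.5 * xi for xi in x]


x = [1.0, 2.0, 4.0]
identity_block = with_shortcut(zero_branch)
damped_block = with_shortcut(halve)

print(identity_block(x))  # [1.0, 2.0, 4.0]
print(damped_block(x))    # [0.5, 1.0, 2.0]
```

The length check mirrors the constraint in the real network: the shortcut can only be added when both operands have the same shape.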

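It follows that H(x) = F(x) + x, and any structure that computes this can be called a residual network. In the figure above, the shortcut connection is added to F(x), the output of the stacked layers.

- ResNet comes in two families: networks with fewer than 50 layers (ResNet-18 and ResNet-34), which are built from BasicBlock, and networks with 50 layers or more (ResNet-50, ResNet-101, ResNet-152), which are built from Bottleneck blocks. The code below first implements BasicBlock for the networks under 50 layers; it is slightly simplified compared with the official code.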

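    import torch
    import torch.nn as nn


    # Helper convolutions used by both block types (simplified from torchvision)
    def conv3x3(in_planes, out_planes, stride=1):
        """3x3 convolution with padding 1, so only the stride changes the spatial size."""
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                         padding=1, bias=False)


    def conv1x1(in_planes, out_planes, stride=1):
        """1x1 convolution, used to change the number of channels."""
        return nn.Conv2d(in_planes, out_planes, kernel_size=1, stride=stride, bias=False)


    class BasicBlock(nn.Module):
        expansion = 1

        def __init__(self, inplanes, planes, stride=1, downsample=None, norm_layer=None):
            super(BasicBlock, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            # conv1 carries the stride and maps inplanes -> planes
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = norm_layer(planes)
            self.relu = nn.ReLU(inplace=True)
            # conv2 receives the `planes` channels produced by conv1 and keeps stride 1
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = norm_layer(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # Downsampling: where the branch changes the feature-map size or channel
            # count, x must be transformed the same way before it can be added
            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)
            return out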
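
To see when the `downsample` branch is needed, it can help to work the shapes out by hand. The sketch below is plain Python and not part of the model: `conv_out_size` applies the standard convolution output-size formula, while `branch_out_shape` and `needs_downsample` are hypothetical helpers that assume two 3x3 convolutions with padding 1, as in BasicBlock. The 56x56 input size is only an example value.

```python
def conv_out_size(size, kernel, stride, padding):
    """Spatial output size of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def branch_out_shape(size, planes, stride):
    """(channels, size) produced by the two 3x3 convs of a BasicBlock-style branch."""
    size = conv_out_size(size, kernel=3, stride=stride, padding=1)  # conv1 carries the stride
    size = conv_out_size(size, kernel=3, stride=1, padding=1)       # conv2 keeps the size
    return planes, size


def needs_downsample(channels, size, planes, stride):
    """True when x no longer matches the branch output, so it cannot be added as-is."""
    return branch_out_shape(size, planes, stride) != (channels, size)


# Same channel count, stride 1: shapes match and x is added unchanged
print(needs_downsample(64, 56, planes=64, stride=1))    # False

# Channels double and the stride is 2: x is (64, 56) but the branch gives (128, 28)
print(branch_out_shape(56, planes=128, stride=2))       # (128, 28)
print(needs_downsample(64, 56, planes=128, stride=2))   # True
```

In the second case the shortcut has to be projected to the new shape first, which is the job of the `downsample` module passed to the block.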

    # Used by networks with 50 layers or more
    class BottleNeck(nn.Module):
        # The block outputs four times as many channels as `planes`
        # (this can be seen in the first figure)
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None, norm_layer=None):
            super(BottleNeck, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            # 1x1 conv reduces the channels from inplanes to planes
            self.conv1 = conv1x1(inplanes, planes)
            self.bn1 = norm_layer(planes)
            # 3x3 conv works at the reduced width and carries the stride
            self.conv2 = conv3x3(planes, planes, stride)
            self.bn2 = norm_layer(planes)
            # 1x1 conv expands the channels to planes * expansion
            self.conv3 = conv1x1(planes, planes * self.expansion)
            self.bn3 = norm_layer(planes * self.expansion)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            identity = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            # No ReLU here: the activation is applied once, after the shortcut is added
            if self.downsample is not None:
                identity = self.downsample(x)

            out += identity
            out = self.relu(out)
            return out
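
One way to see why the channels are squeezed before the 3x3 convolution is to count weights. As a back-of-the-envelope check, the sketch below counts convolution weights only (no bias, normalization layers ignored) for one bottleneck branch and for two plain 3x3 convolutions at the same width. The width of 256 channels with `planes = 64` is an example value chosen for illustration, and `conv_weights` is a hypothetical helper.

```python
def conv_weights(in_channels, out_channels, kernel):
    """Number of weights in a bias-free kernel x kernel convolution."""
    return in_channels * out_channels * kernel * kernel


width, planes = 256, 64  # example values: block input/output width and reduced width

# Bottleneck branch: 1x1 reduce -> 3x3 at reduced width -> 1x1 expand
bottleneck = (conv_weights(width, planes, 1)
              + conv_weights(planes, planes, 3)
              + conv_weights(planes, width, 1))

# Two 3x3 convolutions that stay at the full width
plain = 2 * conv_weights(width, width, 3)

print(bottleneck)  # 69632
print(plain)       # 1179648
```

Under these assumptions the bottleneck branch needs roughly 17 times fewer convolution weights, which is what makes the deeper variants affordable.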

Next, we define ResNet itself:

    # Define the network
    class ResNet(nn.Module):

        def __init__(self, block, layers, num_class=1000, norm_layer=None):
            super(ResNet, self).__init__()
            if norm_layer is None:
                norm_layer = nn.BatchNorm2d
            # _make_layer reads the normalization layer from this attribute
            self._norm_layer = norm_layer

            # Channel count of the feature map that enters layer1
            self.inplanes = 64

            # Per the ResNet architecture figure, conv1 uses a 7x7 kernel,
            # 64 output channels and stride 2
            self.conv1 = nn.Conv2d(3, self.inplanes, kernel_size=7, stride=2,
                                   padding=3, bias=False)
            self.bn1 = norm_layer(self.inplanes)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)

            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)

            self.avgpool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * block.expansion, num_class)

            # Parameter initialization
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):
                    nn.init.constant_(m.weight, 1)
                    nn.init.constant_(m.bias, 0)

        # Key part: builds one stage made of `blocks` residual blocks
        def _make_layer(self, block, planes, blocks, stride=1):
            norm_layer = self._norm_layer
            downsample = None

            # Project the shortcut when the first block changes the size or channel count
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    conv1x1(self.inplanes, planes * block.expansion, stride),
                    norm_layer(planes * block.expansion)
                )

            layers = []
            # Only the first block of the stage gets the stride and the downsample branch
            layers.append(block(self.inplanes, planes, stride, downsample, norm_layer))
            self.inplanes = planes * block.expansion
            for _ in range(1, blocks):
                layers.append(block(self.inplanes, planes, norm_layer=norm_layer))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            x = self.avgpool(x)
            x = torch.flatten(x, 1)
            x = self.fc(x)
            return x
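
The bookkeeping in `_make_layer` is easier to follow when replayed without building any modules. The sketch below is plain Python under stated assumptions: `trace_stages` and `depth` are hypothetical helpers, the stage widths 64/128/256/512 and strides come from the constructor above, and the `layers` lists `[2, 2, 2, 2]` and `[3, 4, 6, 3]` are the ones torchvision uses for ResNet-18 and ResNet-50.

```python
def trace_stages(expansion, layers, planes_per_stage=(64, 128, 256, 512)):
    """Replay the channel/stride bookkeeping of _make_layer.

    Returns one (inplanes, out_channels, stride, has_downsample) tuple per block.
    """
    inplanes = 64  # channels entering layer1, after conv1 and maxpool
    trace = []
    for stage, (planes, blocks) in enumerate(zip(planes_per_stage, layers)):
        stage_stride = 1 if stage == 0 else 2  # layer1 keeps the size, layer2-4 halve it
        for i in range(blocks):
            stride = stage_stride if i == 0 else 1  # only the first block strides
            out_channels = planes * expansion
            has_downsample = stride != 1 or inplanes != out_channels
            trace.append((inplanes, out_channels, stride, has_downsample))
            inplanes = out_channels
    return trace


def depth(convs_per_block, layers):
    """Weighted layers: the stem conv, every conv inside the blocks, and the final fc."""
    return 1 + convs_per_block * sum(layers) + 1


# ResNet-18: BasicBlock (expansion 1, 2 convs per block)
r18 = trace_stages(expansion=1, layers=[2, 2, 2, 2])
print(r18[0])  # (64, 64, 1, False)
print(r18[2])  # (64, 128, 2, True)

# ResNet-50: bottleneck block (expansion 4, 3 convs per block)
r50 = trace_stages(expansion=4, layers=[3, 4, 6, 3])
print(r50[0])  # (64, 256, 1, True)
print(r50[1])  # (256, 256, 1, False)

print(depth(2, [2, 2, 2, 2]), depth(3, [3, 4, 6, 3]))  # 18 50
```

Note the difference in the first stage: with BasicBlock the shortcut in `layer1` needs no projection, while with the bottleneck block the very first block already needs one because the channels grow from 64 to 256 even though the stride is 1.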

All of the code above was typed by hand and may contain mistakes. For the full code, see:

https://github.com/pytorch/vision/blob/master/torchvision/models/resnet.py

This post is only meant as a brief walkthrough.
