1--Code

```cpp
#include "argsParser.h"
#include "buffers.h"
#include "common.h"
#include "logger.h"

#include "NvCaffeParser.h"
#include "NvInfer.h"
#include <cuda_runtime_api.h>

#include <cstdlib>
#include <cstring> // std::memset
#include <fstream>
#include <iostream>
#include <memory>
#include <sstream>
#include <string>

using samplesCommon::SampleUniquePtr;

const std::string gSampleName = "TensorRT.sample_googlenet";

class SampleGoogleNet
{
public:
    // Constructor
    SampleGoogleNet(const samplesCommon::CaffeSampleParams& params)
        : mParams(params)
    {
    }

    // Member functions

    // Builds the network engine
    bool build();

    // Runs the TensorRT inference engine for this sample
    bool infer();

    // Used to clean up any state created in the sample class
    bool teardown();

    samplesCommon::CaffeSampleParams mParams;

private:
    // Parses a Caffe model for GoogleNet and creates a TensorRT network
    bool constructNetwork(
        SampleUniquePtr<nvcaffeparser1::ICaffeParser>& parser, SampleUniquePtr<nvinfer1::INetworkDefinition>& network);

    std::shared_ptr<nvinfer1::ICudaEngine> mEngine{nullptr}; // The TensorRT engine used to run the network
};

// Member function implementations
bool SampleGoogleNet::build()
{
    // Create the builder
    auto builder = SampleUniquePtr<nvinfer1::IBuilder>(nvinfer1::createInferBuilder(sample::gLogger.getTRTLogger()));
    if (!builder)
    {
        return false;
    }

    // Use the builder to create the network (flags = 0: implicit batch mode)
    auto network = SampleUniquePtr<nvinfer1::INetworkDefinition>(builder->createNetworkV2(0));
    if (!network)
    {
        return false;
    }

    // Create the builder config used to configure the engine build
    auto config = SampleUniquePtr<nvinfer1::IBuilderConfig>(builder->createBuilderConfig());
    if (!config)
    {
        return false;
    }

    // Create the parser used to read the Caffe model
    auto parser = SampleUniquePtr<nvcaffeparser1::ICaffeParser>(nvcaffeparser1::createCaffeParser());
    if (!parser)
    {
        return false;
    }

    // Populate the network from the Caffe model through the parser
    if (!constructNetwork(parser, network))
    {
        return false;
    }

    builder->setMaxBatchSize(mParams.batchSize); // Maximum batch size (implicit batch mode)
    config->setMaxWorkspaceSize(16_MiB);          // Maximum temporary GPU memory available to layers during the build
    samplesCommon::enableDLA(builder.get(), config.get(), mParams.dlaCore); // Enable DLA when --useDLACore is given

    // CUDA stream used for profiling by the builder
    auto profileStream = samplesCommon::makeCudaStream();
    if (!profileStream)
    {
        return false;
    }
    config->setProfileStream(*profileStream);

    // Build the engine and serialize it into a plan in host memory
    SampleUniquePtr<nvinfer1::IHostMemory> plan{builder->buildSerializedNetwork(*network, *config)};
    if (!plan)
    {
        return false;
    }

    // Create a runtime to load the serialized plan
    SampleUniquePtr<nvinfer1::IRuntime> runtime{nvinfer1::createInferRuntime(sample::gLogger.getTRTLogger())};
    if (!runtime)
    {
        return false;
    }

    // Deserialize the plan into an engine
    mEngine = std::shared_ptr<nvinfer1::ICudaEngine>(
        runtime->deserializeCudaEngine(plan->data(), plan->size()), samplesCommon::InferDeleter());
    if (!mEngine)
    {
        return false;
    }

    return true;
}

// Implementation of the private member function constructNetwork
bool SampleGoogleNet::constructNetwork(
    SampleUniquePtr<nvcaffeparser1::ICaffeParser>& parser, SampleUniquePtr<nvinfer1::INetworkDefinition>& network)
{
    // Parse the prototxt and caffemodel into the network with FP32 weights
    const nvcaffeparser1::IBlobNameToTensor* blobNameToTensor = parser->parse(
        mParams.prototxtFileName.c_str(), mParams.weightsFileName.c_str(), *network, nvinfer1::DataType::kFLOAT);
    if (!blobNameToTensor)
    {
        return false; // parsing failed
    }

    // Mark each requested blob (here "prob") as a network output
    for (auto& s : mParams.outputTensorNames)
    {
        nvinfer1::ITensor* tensor = blobNameToTensor->find(s.c_str());
        if (!tensor)
        {
            sample::gLogError << "output blob not found: " << s << std::endl;
            return false;
        }
        network->markOutput(*tensor);
    }
    return true;
}

// Implementation of the inference member function infer()
bool SampleGoogleNet::infer()
{
    // Create the buffer manager (host and device buffers for every binding)
    samplesCommon::BufferManager buffers(mEngine, mParams.batchSize);

    // Create the execution context
    auto context = SampleUniquePtr<nvinfer1::IExecutionContext>(mEngine->createExecutionContext());
    if (!context)
    {
        return false;
    }

    // Fill every input host buffer with zeros (dummy input, not a real image)
    for (auto& input : mParams.inputTensorNames)
    {
        const auto bufferSize = buffers.size(input);
        if (bufferSize == samplesCommon::BufferManager::kINVALID_SIZE_VALUE)
        {
            sample::gLogError << "input tensor missing: " << input << "\n";
            return false;
        }
        std::memset(buffers.getHostBuffer(input), 0, bufferSize); // set every byte of the block to a given value (0)
    }

    // Copy the input buffers from host to device
    buffers.copyInputToDevice();

    // Run inference (synchronous, implicit batch)
    bool status = context->execute(mParams.batchSize, buffers.getDeviceBindings().data());
    if (!status)
    {
        return false;
    }

    // Copy the results from device back to host
    buffers.copyOutputToHost();

    return true;
}

// Release the global state held by the Caffe parser's protobuf library
bool SampleGoogleNet::teardown()
{
    nvcaffeparser1::shutdownProtobufLibrary();
    return true;
}

samplesCommon::CaffeSampleParams initializeSampleParams(const samplesCommon::Args& args)
{
    samplesCommon::CaffeSampleParams params; // parameter object built from the CaffeSampleParams struct in samplesCommon
    if (args.dataDirs.empty()) // no data directory given: use the defaults
    {
        params.dataDirs.push_back("data/googlenet/");
        params.dataDirs.push_back("data/samples/googlenet/");
    }
    else
    {
        params.dataDirs = args.dataDirs;
    }
    params.prototxtFileName = locateFile("googlenet.prototxt", params.dataDirs);  // network definition file
    params.weightsFileName = locateFile("googlenet.caffemodel", params.dataDirs); // trained weights file
    params.inputTensorNames.push_back("data");  // input tensor name
    params.batchSize = 4;                       // batch size
    params.outputTensorNames.push_back("prob"); // output tensor name
    params.dlaCore = args.useDLACore;

    return params;
}

void printHelpInfo()
{
    std::cout
        << "Usage: ./sample_googlenet [-h or --help] [-d or --datadir=<path to data directory>] [--useDLACore=<int>]\n";
    std::cout << "--help Display help information\n";
    std::cout << "--datadir Specify path to a data directory, overriding the default. This option can be used "
                 "multiple times to add multiple directories. If no data directories are given, the default is to use "
                 "data/samples/googlenet/ and data/googlenet/"
              << std::endl;
    std::cout << "--useDLACore=N Specify a DLA engine for layers that support DLA. Value can range from 0 to n-1, "
                 "where n is the number of DLA engines on the platform."
              << std::endl;
}

int main(int argc, char** argv)
{
    samplesCommon::Args args;                                  // command-line arguments
    bool argsOK = samplesCommon::parseArgs(args, argc, argv); // parse argc and argv into args
    if (!argsOK)
    {
        sample::gLogError << "Invalid arguments" << std::endl;
        printHelpInfo();
        return EXIT_FAILURE;
    }
    if (args.help) // print help information
    {
        printHelpInfo();
        return EXIT_SUCCESS;
    }

    auto sampleTest = sample::gLogger.defineTest(gSampleName, argc, argv);
    sample::gLogger.reportTestStart(sampleTest);

    samplesCommon::CaffeSampleParams params = initializeSampleParams(args); // set up the parameters
    SampleGoogleNet sample(params);                                         // instantiate the sample

    sample::gLogInfo << "Building and running a GPU inference engine for GoogleNet" << std::endl;

    if (!sample.build()) // build the engine
    {
        return sample::gLogger.reportFail(sampleTest);
    }
    if (!sample.infer()) // run inference
    {
        return sample::gLogger.reportFail(sampleTest);
    }
    if (!sample.teardown()) // clean up
    {
        return sample::gLogger.reportFail(sampleTest);
    }

    // Log which input and output tensors were used
    sample::gLogInfo << "Ran " << argv[0] << " with: " << std::endl;

    std::stringstream ss;
    ss << "Input(s): ";
    for (auto& input : sample.mParams.inputTensorNames)
    {
        ss << input << " ";
    }
    sample::gLogInfo << ss.str() << std::endl;

    ss.str(std::string());
    ss << "Output(s): ";
    for (auto& output : sample.mParams.outputTensorNames)
    {
        ss << output << " ";
    }
    sample::gLogInfo << ss.str() << std::endl;

    return sample::gLogger.reportPass(sampleTest);
}
```
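Two sizes in the listing are easy to gloss over: the `16_MiB` builder workspace and the host buffers that `infer()` zeroes with `memset`. In implicit-batch mode each buffer holds `batchSize` samples, so its size is batch size times per-sample volume times element size. The sketch below recomputes these numbers under stated assumptions: `_MiB` is assumed to mean 2^20 bytes, and the GoogleNet `data` input and `prob` output are assumed to be 3x224x224 and 1000 FP32 values per image (check `googlenet.prototxt`). `volume` and `batchBytes` are hypothetical helpers, not the samples' API.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical re-creation of the 16_MiB literal used in build(); assumed to
// mean 16 * 2^20 bytes. The real definition lives in the samples' common.h.
constexpr unsigned long long operator"" _MiB(unsigned long long val)
{
    return val * (1ULL << 20);
}

// Hypothetical helper: number of elements in one sample of a tensor, given its
// per-sample dims (e.g. C, H, W). The samples use something like samplesCommon::volume().
std::size_t volume(const std::vector<int>& dims)
{
    std::size_t v = 1;
    for (int d : dims)
    {
        // TensorRT uses -1 for dynamic dimensions, which have no fixed size
        assert(d > 0 && "dynamic (-1) dimensions have no fixed size");
        v *= static_cast<std::size_t>(d);
    }
    return v;
}

// Hypothetical helper: bytes for a whole batch in implicit-batch mode,
// i.e. batchSize * volume(dims) * elemSize.
std::size_t batchBytes(const std::vector<int>& dims, int batchSize, std::size_t elemSize)
{
    return static_cast<std::size_t>(batchSize) * volume(dims) * elemSize;
}
```

With `batchSize = 4` as in `initializeSampleParams()` and a 4-byte `float`, `batchBytes({3, 224, 224}, 4, sizeof(float))` gives 2,408,448 bytes for `data`, `batchBytes({1000}, 4, sizeof(float))` gives 16,000 bytes for `prob`, and `16_MiB` is 16,777,216 bytes.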

2--Build

① CMakeLists.txt

```cmake
cmake_minimum_required(VERSION 3.13)
project(TensorRT_test)

set(CMAKE_CXX_STANDARD 11)

# TensorRT 8.2.5.1 install location on the author's machine; adjust to yours
set(SAMPLES_COMMON_SOURCES "/home/liujinfu/Downloads/TensorRT-8.2.5.1/samples/common/logger.cpp")
add_executable(TensorRT_test_GoogleNet sampleGoogleNet.cpp ${SAMPLES_COMMON_SOURCES})

# add TensorRT8
include_directories(/home/liujinfu/Downloads/TensorRT-8.2.5.1/include)
include_directories(/home/liujinfu/Downloads/TensorRT-8.2.5.1/samples/common)
set(TENSORRT_LIB_PATH "/home/liujinfu/Downloads/TensorRT-8.2.5.1/lib")
file(GLOB LIBS "${TENSORRT_LIB_PATH}/*.so")

# add CUDA
find_package(CUDA 11.3 REQUIRED)
message("CUDA_LIBRARIES:${CUDA_LIBRARIES}")
message("CUDA_INCLUDE_DIRS:${CUDA_INCLUDE_DIRS}")
include_directories(${CUDA_INCLUDE_DIRS})

# link
target_link_libraries(TensorRT_test_GoogleNet ${LIBS} ${CUDA_LIBRARIES})
```

② Compile

mkdir build && cd build

cmake ..

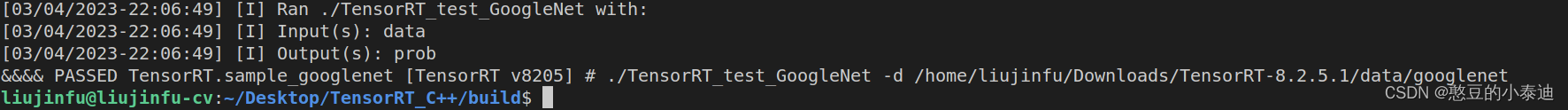

make3--运行

./TensorRT_test_GoogleNet -d /home/liujinfu/Downloads/TensorRT-8.2.5.1/data/googlenet
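Besides the pass/fail report, the run only logs the input and output tensor names: `infer()` copies `prob` back to the host but never reads it, and because the input is all zeros the scores do not correspond to any real image. If you feed real preprocessed data instead, a top-1 readout could look like the sketch below. It assumes `prob` holds `batchSize` contiguous rows of 1000 FP32 class scores; `top1PerImage` is a hypothetical helper, and after `buffers.copyOutputToHost()` you could pass it `static_cast<const float*>(buffers.getHostBuffer("prob"))`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: for a [batchSize x numClasses] FP32 score buffer
// (assumed row-major, one row per image), return the index of the
// highest-scoring class for each image.
std::vector<int> top1PerImage(const float* prob, int batchSize, int numClasses)
{
    assert(prob != nullptr && batchSize > 0 && numClasses > 0);
    std::vector<int> best(static_cast<std::size_t>(batchSize), 0);
    for (std::size_t b = 0; b < best.size(); ++b)
    {
        const float* row = prob + b * static_cast<std::size_t>(numClasses);
        // std::max_element returns the first maximum, so ties pick the lowest index
        best[b] = static_cast<int>(std::max_element(row, row + numClasses) - row);
    }
    return best;
}
```

Ties resolve to the lowest class index because `std::max_element` returns the first maximum it finds.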
