Open the LVM configuration file with vim /etc/lvm/lvm.conf and search for the filter setting in the devices section.

# Configuration option devices/issue_discards.

# Issue discards to PVs that are no longer used by an LV.

# Discards are sent to an LV's underlying physical volumes when the LV

# is no longer using the physical volumes' space, e.g. lvremove,

# lvreduce. Discards inform the storage that a region is no longer

# used. Storage that supports discards advertise the protocol-specific

# way discards should be issued by the kernel (TRIM, UNMAP, or

# WRITE SAME with UNMAP bit set). Not all storage will support or

# benefit from discards, but SSDs and thinly provisioned LUNs

# generally do. If enabled, discards will only be issued if both the

# storage and kernel provide support.

issue_discards = 0

# Configuration option devices/allow_changes_with_duplicate_pvs.

# Allow VG modification while a PV appears on multiple devices.

# When a PV appears on multiple devices, LVM attempts to choose the

# best device to use for the PV. If the devices represent the same

# underlying storage, the choice has minimal consequence. If the

# devices represent different underlying storage, the wrong choice

# can result in data loss if the VG is modified. Disabling this

# setting is the safest option because it prevents modifying a VG

# or activating LVs in it while a PV appears on multiple devices.

# Enabling this setting allows the VG to be used as usual even with

# uncertain devices.

allow_changes_with_duplicate_pvs = 0

# Configuration option devices/allow_mixed_block_sizes.

# Allow PVs in the same VG with different logical block sizes.

# When allowed, the user is responsible to ensure that an LV is

# using PVs with matching block sizes when necessary.

allow_mixed_block_sizes = 0

#filter = ["a|^/dev/disk/by-id/lvm-pv-uuid-IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK$|", "r|.*|"]

filter = ["a|^/dev/disk/by-id/lvm-pv-uuid-IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK$|", "a|^/dev/disk/by-id/lvm-pv-uuid-nAG78p-lcmT-D5Bt-6w6Z-feKS-cWzG-6YZJjn$|", "a|sdc|","a|sdd|","r|.*|"]

}

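Look at the filter line (named global_filter in some configurations). The filter accepts (a) only the devices it lists explicitly (for example /dev/sda7) and rejects (r) every other name under the device directory (dir = /dev).

That is the problem: /dev/sdb is filtered out here, so LVM never scans it and pvcreate reports "device is rejected by filter config".

The fix is to add an accept entry such as "a|sdb1|" inside the square brackets, before the final "r|.*|", because the first matching pattern decides the outcome. Each pattern is a regular expression matched against the device path, so the short form "a|sdb1|" is sufficient; an anchored form such as "a|^/dev/sdb1$|" is stricter. In the excerpt above, the added entries are "a|sdc|" and "a|sdd|".

Run pvcreate again afterwards; it now succeeds and the problem is solved.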
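
The first-match rule behind this fix can be modeled in a few lines of Python. This is a simplified sketch, not LVM's implementation: it assumes each entry has the form action, delimiter, pattern, delimiter; it uses Python's `re` module in place of LVM's own regex engine; and it checks a single path per device, whereas LVM also considers a device's other path names. The names `parse_entry`, `filter_decision`, `ORIGINAL_FILTER`, and `UPDATED_FILTER` are made up for illustration. The two lists are copied from the lvm.conf excerpt above.

```python
import re

# Filter lists copied from the lvm.conf excerpt: the commented-out original
# and the edited version that also accepts sdc and sdd.
ORIGINAL_FILTER = [
    "a|^/dev/disk/by-id/lvm-pv-uuid-IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK$|",
    "r|.*|",
]
UPDATED_FILTER = [
    "a|^/dev/disk/by-id/lvm-pv-uuid-IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK$|",
    "a|^/dev/disk/by-id/lvm-pv-uuid-nAG78p-lcmT-D5Bt-6w6Z-feKS-cWzG-6YZJjn$|",
    "a|sdc|",
    "a|sdd|",
    "r|.*|",
]


def parse_entry(entry):
    """Split an entry such as 'a|sdc|' into (action, compiled pattern)."""
    action = entry[0]        # 'a' (accept) or 'r' (reject)
    delimiter = entry[1]     # the character that surrounds the pattern
    pattern = entry[2:entry.rindex(delimiter)]
    return action, re.compile(pattern)


def filter_decision(path, entries):
    """Return 'accept' or 'reject' for one device path; first match wins."""
    for entry in entries:
        action, regex = parse_entry(entry)
        if regex.search(path):   # unanchored search, like an unanchored filter pattern
            return "accept" if action == "a" else "reject"
    return "accept"              # a path that matches no pattern is accepted


if __name__ == "__main__":
    for name, entries in (("original", ORIGINAL_FILTER), ("updated", UPDATED_FILTER)):
        for path in ("/dev/sdb", "/dev/sdc", "/dev/sdd"):
            print(name, path, filter_decision(path, entries))
```

With the original list, /dev/sdc falls through to "r|.*|" and is rejected; with the updated list it matches "a|sdc|" first and is accepted. Note that the unanchored "a|sdc|" also accepts partitions such as /dev/sdc1.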

[root@ovirt106 gluster_bricks]# fdisk /dev/sdc

Welcome to fdisk (util-linux 2.32.1).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Command (m for help): m

Help:

DOS (MBR)

a toggle a bootable flag

b edit nested BSD disklabel

c toggle the dos compatibility flag

Generic

d delete a partition

F list free unpartitioned space

l list known partition types

n add a new partition

p print the partition table

t change a partition type

v verify the partition table

i print information about a partition

Misc

m print this menu

u change display/entry units

x extra functionality (experts only)

Script

I load disk layout from sfdisk script file

O dump disk layout to sfdisk script file

Save & Exit

w write table to disk and exit

q quit without saving changes

Create a new label

g create a new empty GPT partition table

G create a new empty SGI (IRIX) partition table

o create a new empty DOS partition table

s create a new empty Sun partition table

Command (m for help): d

Selected partition 1

Partition 1 has been deleted.

Command (m for help): p

Disk /dev/sdc: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0x34fb55a9

Command (m for help): w

The partition table has been altered.

Calling ioctl() to re-read partition table.

Syncing disks.

[root@ovirt106 gluster_bricks]# pvcreate /dev/sdc

WARNING: dos signature detected on /dev/sdc at offset 510. Wipe it? [y/n]: y

Wiping dos signature on /dev/sdc.

Physical volume "/dev/sdc" successfully created.

[root@ovirt106 gluster_bricks]#

[root@node103 ~]# pvcreate /dev/sdc

Cannot use /dev/sdc: device is rejected by filter config

[root@node103 ~]# vi /etc/lvm/lvm.conf

[root@node103 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 558.9G 0 disk

├─gluster_vg_sda-gluster_lv_engine 253:17 0 100G 0 lvm /gluster_bricks/engine

├─gluster_vg_sda-gluster_thinpool_gluster_vg_sda_tmeta 253:18 0 3G 0 lvm

│ └─gluster_vg_sda-gluster_thinpool_gluster_vg_sda-tpool 253:20 0 452.9G 0 lvm

│ ├─gluster_vg_sda-gluster_thinpool_gluster_vg_sda 253:21 0 452.9G 1 lvm

│ └─gluster_vg_sda-gluster_lv_data 253:22 0 480G 0 lvm /gluster_bricks/data

└─gluster_vg_sda-gluster_thinpool_gluster_vg_sda_tdata 253:19 0 452.9G 0 lvm

└─gluster_vg_sda-gluster_thinpool_gluster_vg_sda-tpool 253:20 0 452.9G 0 lvm

├─gluster_vg_sda-gluster_thinpool_gluster_vg_sda 253:21 0 452.9G 1 lvm

└─gluster_vg_sda-gluster_lv_data 253:22 0 480G 0 lvm /gluster_bricks/data

sdb 8:16 0 838.4G 0 disk

sdc 8:32 0 838.4G 0 disk

sdd 8:48 0 278.9G 0 disk

├─sdd1 8:49 0 1G 0 part /boot

└─sdd2 8:50 0 277.9G 0 part

├─onn-pool00_tmeta 253:0 0 1G 0 lvm

│ └─onn-pool00-tpool 253:2 0 206G 0 lvm

│ ├─onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 253:3 0 169G 0 lvm /

│ ├─onn-pool00 253:10 0 206G 1 lvm

│ ├─onn-var_log_audit 253:11 0 2G 0 lvm /var/log/audit

│ ├─onn-var_log 253:12 0 8G 0 lvm /var/log

│ ├─onn-var_crash 253:13 0 10G 0 lvm /var/crash

│ ├─onn-var 253:14 0 15G 0 lvm /var

│ ├─onn-tmp 253:15 0 1G 0 lvm /tmp

│ └─onn-home 253:16 0 1G 0 lvm /home

├─onn-pool00_tdata 253:1 0 206G 0 lvm

│ └─onn-pool00-tpool 253:2 0 206G 0 lvm

│ ├─onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 253:3 0 169G 0 lvm /

│ ├─onn-pool00 253:10 0 206G 1 lvm

│ ├─onn-var_log_audit 253:11 0 2G 0 lvm /var/log/audit

│ ├─onn-var_log 253:12 0 8G 0 lvm /var/log

│ ├─onn-var_crash 253:13 0 10G 0 lvm /var/crash

│ ├─onn-var 253:14 0 15G 0 lvm /var

│ ├─onn-tmp 253:15 0 1G 0 lvm /tmp

│ └─onn-home 253:16 0 1G 0 lvm /home

└─onn-swap 253:4 0 15.7G 0 lvm [SWAP]

sr0 11:0 1 1024M 0 rom

[root@node103 ~]# pvcreate /dev/sdb

WARNING: xfs signature detected on /dev/sdb at offset 0. Wipe it? [y/n]: y

Wiping xfs signature on /dev/sdb.

Physical volume "/dev/sdb" successfully created.

[root@node103 ~]# pvcreate /dev/sdc

WARNING: xfs signature detected on /dev/sdc at offset 0. Wipe it? [y/n]: y

Wiping xfs signature on /dev/sdc.

Physical volume "/dev/sdc" successfully created.

[root@node103 ~]#

vgcreate

[root@node101 gluster_bricks]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 838.4G 0 disk

sdb 8:16 0 838.4G 0 disk

sdc 8:32 0 558.9G 0 disk

├─gluster_vg_sdc-gluster_lv_engine 253:14 0 100G 0 lvm /gluster_bricks/engine

├─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc_tmeta 253:15 0 3G 0 lvm

│ └─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc-tpool 253:17 0 452.9G 0 lvm

│ ├─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc 253:18 0 452.9G 1 lvm

│ └─gluster_vg_sdc-gluster_lv_data 253:19 0 480G 0 lvm /gluster_bricks/data

└─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc_tdata 253:16 0 452.9G 0 lvm

└─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc-tpool 253:17 0 452.9G 0 lvm

├─gluster_vg_sdc-gluster_thinpool_gluster_vg_sdc 253:18 0 452.9G 1 lvm

└─gluster_vg_sdc-gluster_lv_data 253:19 0 480G 0 lvm /gluster_bricks/data

sdd 8:48 0 278.9G 0 disk

├─sdd1 8:49 0 1G 0 part /boot

└─sdd2 8:50 0 277.9G 0 part

├─onn-pool00_tmeta 253:0 0 1G 0 lvm

│ └─onn-pool00-tpool 253:2 0 206G 0 lvm

│ ├─onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 253:3 0 169G 0 lvm /

│ ├─onn-pool00 253:7 0 206G 1 lvm

│ ├─onn-var_log_audit 253:8 0 2G 0 lvm /var/log/audit

│ ├─onn-var_log 253:9 0 8G 0 lvm /var/log

│ ├─onn-var_crash 253:10 0 10G 0 lvm /var/crash

│ ├─onn-var 253:11 0 15G 0 lvm /var

│ ├─onn-tmp 253:12 0 1G 0 lvm /tmp

│ └─onn-home 253:13 0 1G 0 lvm /home

├─onn-pool00_tdata 253:1 0 206G 0 lvm

│ └─onn-pool00-tpool 253:2 0 206G 0 lvm

│ ├─onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 253:3 0 169G 0 lvm /

│ ├─onn-pool00 253:7 0 206G 1 lvm

│ ├─onn-var_log_audit 253:8 0 2G 0 lvm /var/log/audit

│ ├─onn-var_log 253:9 0 8G 0 lvm /var/log

│ ├─onn-var_crash 253:10 0 10G 0 lvm /var/crash

│ ├─onn-var 253:11 0 15G 0 lvm /var

│ ├─onn-tmp 253:12 0 1G 0 lvm /tmp

│ └─onn-home 253:13 0 1G 0 lvm /home

└─onn-swap 253:4 0 15.7G 0 lvm [SWAP]

sr0 11:0 1 1024M 0 rom

[root@node101 gluster_bricks]# vgcreate vmstore /dev/sda /dev/sdb

Volume group "vmstore" successfully created

[root@node101 gluster_bricks]#

Create the LV

[root@ovirt106 gluster_bricks]# blkid

/dev/mapper/onn-var_crash: UUID="de2ea7ce-29f3-441a-8b8d-47bbcbca2b59" BLOCK_SIZE="512" TYPE="xfs"

/dev/sda2: UUID="IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK" TYPE="LVM2_member" PARTUUID="d77c4977-02"

/dev/sdb: UUID="nAG78p-lcmT-D5Bt-6w6Z-feKS-cWzG-6YZJjn" TYPE="LVM2_member"

/dev/sda1: UUID="084af988-29ed-4350-ad70-1d2f5afb8d1f" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="d77c4977-01"

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="5d49651c-c911-4a4e-94a1-d2df627a1d89" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-swap: UUID="98ff7321-fcb6-45c9-a68d-70ebc264d09f" TYPE="swap"

/dev/mapper/gluster_vg_sdb-gluster_lv_engine: UUID="52d64ba1-3064-4334-a84a-a12078f7b32b" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sdb-gluster_lv_data: UUID="51401db2-bf3a-4aba-af15-130c2d497d94" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log_audit: UUID="bc108545-59d6-4f3b-bf0c-cc5777be2a3c" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log: UUID="78fd9245-76a1-472f-8b71-2a167429ddae" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var: UUID="0e00bbf7-2d8c-45c4-a814-a7be1e04f0bd" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-tmp: UUID="9192cbbb-e48b-4b1f-8885-a681d190a363" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-home: UUID="14416f3f-0b8b-479e-af22-51f8fbf8dc2b" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdc: UUID="Q3GgE2-MYmY-p5ZP-3NTz-YgA2-mJZX-Pptxst" TYPE="LVM2_member"

/dev/sdd: UUID="V003Vh-9mYN-0H2C-znGd-Xvuw-5xu9-32AoAx" TYPE="LVM2_member"

[root@ovirt106 gluster_bricks]# lvcreate -l 100%VG -n lv_vmstore vmstore

WARNING: LVM2_member signature detected on /dev/vmstore/lv_vmstore at offset 536. Wipe it? [y/n]: y

Wiping LVM2_member signature on /dev/vmstore/lv_vmstore.

Logical volume "lv_vmstore" created.

[root@ovirt106 gluster_bricks]# mkfs.xfs /dev/vmstore/lv_vmstore

meta-data=/dev/vmstore/lv_vmstore isize=512    agcount=4, agsize=109575680 blks
         =                        sectsz=512   attr=2, projid32bit=1
         =                        crc=1        finobt=1, sparse=1, rmapbt=0
         =                        reflink=1    bigtime=0 inobtcount=0
data     =                        bsize=4096   blocks=438302720, imaxpct=5
         =                        sunit=0      swidth=0 blks
naming   =version 2               bsize=4096   ascii-ci=0, ftype=1
log      =internal log            bsize=4096   blocks=214015, version=2
         =                        sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                    extsz=4096   blocks=0, rtextents=0

Format the LV and check the UUID

[root@node103 ~]# vgcreate vmstore /dev/sdb /dev/sdc

Volume group "vmstore" successfully created

[root@node103 ~]# lvcreate -l 100%VG -n lv_store vmstore

Logical volume "lv_store" created.

[root@node103 ~]# fdisk -l

Disk /dev/sdd: 278.9 GiB, 299439751168 bytes, 584843264 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xe6ac2d12

Device Boot Start End Sectors Size Id Type

/dev/sdd1 * 2048 2099199 2097152 1G 83 Linux

/dev/sdd2 2099200 584843263 582744064 277.9G 8e Linux LVM

Disk /dev/sda: 558.9 GiB, 600127266816 bytes, 1172123568 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdb: 838.4 GiB, 900185481216 bytes, 1758174768 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 838.4 GiB, 900185481216 bytes, 1758174768 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: 169 GiB, 181466562560 bytes, 354426880 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-swap: 15.7 GiB, 16840130560 bytes, 32890880 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/onn-var_log_audit: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_log: 8 GiB, 8589934592 bytes, 16777216 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_crash: 10 GiB, 10737418240 bytes, 20971520 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var: 15 GiB, 16106127360 bytes, 31457280 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-tmp: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-home: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/gluster_vg_sda-gluster_lv_engine: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sda-gluster_lv_data: 480 GiB, 515396075520 bytes, 1006632960 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 131072 bytes / 262144 bytes

Disk /dev/mapper/vmstore-lv_store: 1.7 TiB, 1800363048960 bytes, 3516334080 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes
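
The 1.7 TiB reported for vmstore-lv_store is consistent with two 838.4 GiB PVs carved into whole extents. The Python sketch below redoes that arithmetic. The 4 MiB extent size and the 1 MiB reserved at the start of each PV are assumed LVM defaults that the log does not show, and `usable_extents` is a hypothetical helper name.

```python
MIB = 1024 * 1024

PV_BYTES = 900185481216     # size of /dev/sdb and of /dev/sdc, from fdisk -l
EXTENT_BYTES = 4 * MIB      # assumption: default physical extent size
RESERVED_BYTES = 1 * MIB    # assumption: space reserved for metadata and alignment


def usable_extents(pv_bytes, extent_bytes=EXTENT_BYTES, reserved_bytes=RESERVED_BYTES):
    """Number of whole extents that fit on a PV after the reserved area."""
    return (pv_bytes - reserved_bytes) // extent_bytes


extents_per_pv = usable_extents(PV_BYTES)
lv_bytes = 2 * extents_per_pv * EXTENT_BYTES   # lvcreate -l 100%VG over two PVs

print(extents_per_pv)    # 214620
print(lv_bytes)          # 1800363048960, the size fdisk reports for the LV
print(lv_bytes // 512)   # 3516334080 sectors
```

Under these assumptions the result matches the fdisk line for /dev/mapper/vmstore-lv_store exactly; the few MiB left over on each PV are the reserved area plus a partial extent.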

[root@node103 ~]# blkid

/dev/mapper/onn-var_crash: UUID="00050fc2-5319-46da-b6c2-b0557f602845" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdd2: UUID="DWXIm1-1QSa-qU1q-0urm-1sRl-BEiz-NaemIW" TYPE="LVM2_member" PARTUUID="e6ac2d12-02"

/dev/sdd1: UUID="840d0f88-c5f0-4a98-a7dd-73ebf347a85b" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="e6ac2d12-01"

/dev/sda: UUID="kMvSz0-GoE5-Q0vf-qrjM-3H5m-NCLg-fSTZb8" TYPE="LVM2_member"

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="8bef5e42-0fd1-4595-8b3c-079127a2c237" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-swap: UUID="18284c2e-3596-4596-b0e8-59924944c417" TYPE="swap"

/dev/mapper/onn-var_log_audit: UUID="928237b1-7c78-42d0-afca-dfd362225740" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log: UUID="cb6aafa3-9a97-4dc5-81f6-d2da3b0fdcd9" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var: UUID="47b2e20c-f623-404d-9a12-3b6a31b71096" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-tmp: UUID="599eda13-d184-4a59-958c-93e37bc6fdaa" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-home: UUID="ef67a11a-c786-42d9-89d0-ee518b50fce5" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sda-gluster_lv_engine: UUID="3b99a374-baa4-4783-8da8-8ecbfdb227a4" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sda-gluster_lv_data: UUID="eb5ef30a-cb48-4491-99c6-3e6c6bfe3f69" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdb: UUID="m8ZIeU-j2eU-8ZMK-q52W-Zcjd-hqij-JSG8XF" TYPE="LVM2_member"

/dev/sdc: UUID="pHlCKc-wvGK-4DKX-m5IF-IXig-BZJM-YqX38s" TYPE="LVM2_member"

[root@node103 ~]# lsblk -f /dev/mapper/vmstore-lv_store

NAME FSTYPE LABEL UUID MOUNTPOINT

vmstore-lv_store

[root@node103 ~]# mkfs.xfs /dev/mapper/vmstore-lv_store

meta-data=/dev/mapper/vmstore-lv_store isize=512    agcount=4, agsize=109885440 blks
         =                             sectsz=512   attr=2, projid32bit=1
         =                             crc=1        finobt=1, sparse=1, rmapbt=0
         =                             reflink=1    bigtime=0 inobtcount=0
data     =                             bsize=4096   blocks=439541760, imaxpct=5
         =                             sunit=0      swidth=0 blks
naming   =version 2                    bsize=4096   ascii-ci=0, ftype=1
log      =internal log                 bsize=4096   blocks=214620, version=2
         =                             sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                         extsz=4096   blocks=0, rtextents=0

[root@node103 ~]# lsblk /dev/mapper/vmstore-lv_store -f

NAME FSTYPE LABEL UUID MOUNTPOINT

vmstore-lv_store xfs 0ab91915-a144-4e81-bca9-996daf719c5c

[root@node103 ~]# blkid

/dev/mapper/onn-var_crash: UUID="00050fc2-5319-46da-b6c2-b0557f602845" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdd2: UUID="DWXIm1-1QSa-qU1q-0urm-1sRl-BEiz-NaemIW" TYPE="LVM2_member" PARTUUID="e6ac2d12-02"

/dev/sdd1: UUID="840d0f88-c5f0-4a98-a7dd-73ebf347a85b" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="e6ac2d12-01"

/dev/sda: UUID="kMvSz0-GoE5-Q0vf-qrjM-3H5m-NCLg-fSTZb8" TYPE="LVM2_member"

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="8bef5e42-0fd1-4595-8b3c-079127a2c237" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-swap: UUID="18284c2e-3596-4596-b0e8-59924944c417" TYPE="swap"

/dev/mapper/onn-var_log_audit: UUID="928237b1-7c78-42d0-afca-dfd362225740" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log: UUID="cb6aafa3-9a97-4dc5-81f6-d2da3b0fdcd9" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var: UUID="47b2e20c-f623-404d-9a12-3b6a31b71096" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-tmp: UUID="599eda13-d184-4a59-958c-93e37bc6fdaa" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-home: UUID="ef67a11a-c786-42d9-89d0-ee518b50fce5" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sda-gluster_lv_engine: UUID="3b99a374-baa4-4783-8da8-8ecbfdb227a4" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sda-gluster_lv_data: UUID="eb5ef30a-cb48-4491-99c6-3e6c6bfe3f69" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdb: UUID="m8ZIeU-j2eU-8ZMK-q52W-Zcjd-hqij-JSG8XF" TYPE="LVM2_member"

/dev/sdc: UUID="pHlCKc-wvGK-4DKX-m5IF-IXig-BZJM-YqX38s" TYPE="LVM2_member"

/dev/mapper/vmstore-lv_store: UUID="0ab91915-a144-4e81-bca9-996daf719c5c" BLOCK_SIZE="512" TYPE="xfs"

[root@node103 ~]#

Create the Gluster file storage

[root@ovirt106 /]# gluster volume create gsvmstore replica 2 node101.com:/gluster_bricks/xgvmstore node103.com:/gluster_bricks/xgvmstore ovirt106.com:/gluster_bricks/xgvmstore force

number of bricks is not a multiple of replica count

Usage:

volume create <NEW-VOLNAME> [stripe <COUNT>] [[replica <COUNT> [arbiter <COUNT>]]|[replica 2 thin-arbiter 1]] [disperse [<COUNT>]] [disperse-data <COUNT>] [redundancy <COUNT>] [transport <tcp|rdma|tcp,rdma>] <NEW-BRICK> <TA-BRICK>... [force]

[root@ovirt106 /]# gluster peer status

Number of Peers: 2

Hostname: node101.com

Uuid: 8e61e896-60a0-44b5-8136-2477715d1dcd

State: Peer in Cluster (Connected)

Hostname: node103.com

Uuid: 8695cb7c-7813-4139-beb6-6eed940b6ff7

State: Peer in Cluster (Connected)

[root@ovirt106 /]# gluster volume create gs_store replica 2 node101.com:/gluster_bricks/xgvmstore node103.com:/gluster_bricks/xgvmstore ovirt106.com:/gluster_bricks/xgvmstore

Replica 2 volumes are prone to split-brain. Use Arbiter or Replica 3 to avoid this. See: http://docs.gluster.org/en/latest/Administrator%20Guide/Split%20brain%20and%20ways%20to%20deal%20with%20it/.

Do you still want to continue?

(y/n) y

number of bricks is not a multiple of replica count

Usage:

volume create <NEW-VOLNAME> [stripe <COUNT>] [[replica <COUNT> [arbiter <COUNT>]]|[replica 2 thin-arbiter 1]] [disperse [<COUNT>]] [disperse-data <COUNT>] [redundancy <COUNT>] [transport <tcp|rdma|tcp,rdma>] <NEW-BRICK> <TA-BRICK>... [force]
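
The "number of bricks is not a multiple of replica count" error follows from a divisibility rule: consecutive bricks are grouped into replica sets of `replica` bricks each, so three bricks cannot be split into sets of two. A minimal Python sketch of that check, using the hypothetical helper `replica_sets` and the brick list from the command above:

```python
def replica_sets(bricks, replica):
    """Group consecutive bricks into replica sets, or raise if they do not divide evenly."""
    if replica < 1:
        raise ValueError("replica count must be at least 1")
    if len(bricks) % replica != 0:
        raise ValueError("number of bricks is not a multiple of replica count")
    return [bricks[i:i + replica] for i in range(0, len(bricks), replica)]


BRICKS = [
    "node101.com:/gluster_bricks/xgvmstore",
    "node103.com:/gluster_bricks/xgvmstore",
    "ovirt106.com:/gluster_bricks/xgvmstore",
]

for replica in (2, 3):
    try:
        print(replica, replica_sets(BRICKS, replica))
    except ValueError as error:
        print(replica, "rejected:", error)
```

With three bricks, replica 2 is rejected and replica 3 yields a single set containing all three nodes.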

[root@ovirt106 /]# gluster volume create gs_store replica 3 node101.com:/gluster_bricks/xgvmstore node103.com:/gluster_bricks/xgvmstore ovirt106.com:/gluster_bricks/xgvmstore

volume create: gs_store: failed: The brick ovirt106.com:/gluster_bricks/xgvmstore is a mount point. Please create a sub-directory under the mount point and use that as the brick directory. Or use 'force' at the end of the command if you want to override this behavior.
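
Gluster refuses a brick path that is itself a mount point, as the message says, and suggests a sub-directory instead. The Python sketch below illustrates that rule with `os.path.ismount`. The helper name `brick_path_for` and the sub-directory name `brick1` are hypothetical and do not come from the log.

```python
import os


def brick_path_for(path, subdir="brick1"):
    """Return a brick path that is not itself a mount point."""
    if os.path.ismount(path):
        # Mount point: place the brick one level below it.
        return os.path.join(path, subdir)
    return path


if __name__ == "__main__":
    print(brick_path_for("/gluster_bricks/xgvmstore"))
```

On a host where /gluster_bricks/xgvmstore is a mount point, this returns /gluster_bricks/xgvmstore/brick1; that directory would have to be created on every node before running gluster volume create. The alternative named in the message is to append force to the command.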

[root@ovirt106 /]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 7.4G 0 7.4G 0% /dev

tmpfs 7.5G 4.0K 7.5G 1% /dev/shm

tmpfs 7.5G 193M 7.3G 3% /run

tmpfs 7.5G 0 7.5G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 38G 7.0G 31G 19% /

/dev/mapper/gluster_vg_sdb-gluster_lv_engine 100G 752M 100G 1% /gluster_bricks/engine

/dev/sda1 1014M 350M 665M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data 200G 1.5G 199G 1% /gluster_bricks/data

/dev/mapper/onn-home 1014M 40M 975M 4% /home

/dev/mapper/onn-var 15G 507M 15G 4% /var

/dev/mapper/onn-tmp 1014M 40M 975M 4% /tmp

/dev/mapper/onn-var_log 8.0G 292M 7.8G 4% /var/log

/dev/mapper/onn-var_crash 10G 105M 9.9G 2% /var/crash

/dev/mapper/onn-var_log_audit 2.0G 56M 2.0G 3% /var/log/audit

ovirt106.com:/data 200G 3.5G 197G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_data

ovirt106.com:/engine 100G 1.8G 99G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_engine

tmpfs 1.5G 0 1.5G 0% /run/user/0

/dev/mapper/vmstore-lv_vmstore 836G 5.9G 830G 1% /gluster_bricks/xgvmstore

[root@ovirt106 /]# df -HT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 8.0G 0 8.0G 0% /dev

tmpfs tmpfs 8.0G 4.1k 8.0G 1% /dev/shm

tmpfs tmpfs 8.0G 202M 7.8G 3% /run

tmpfs tmpfs 8.0G 0 8.0G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 xfs 41G 7.5G 33G 19% /

/dev/mapper/gluster_vg_sdb-gluster_lv_engine xfs 108G 788M 107G 1% /gluster_bricks/engine

/dev/sda1 xfs 1.1G 367M 697M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data xfs 215G 1.6G 214G 1% /gluster_bricks/data

/dev/mapper/onn-home xfs 1.1G 42M 1.1G 4% /home

/dev/mapper/onn-var xfs 17G 532M 16G 4% /var

/dev/mapper/onn-tmp xfs 1.1G 42M 1.1G 4% /tmp

/dev/mapper/onn-var_log xfs 8.6G 306M 8.3G 4% /var/log

/dev/mapper/onn-var_crash xfs 11G 110M 11G 2% /var/crash

/dev/mapper/onn-var_log_audit xfs 2.2G 58M 2.1G 3% /var/log/audit

ovirt106.com:/data fuse.glusterfs 215G 3.7G 211G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_data

ovirt106.com:/engine fuse.glusterfs 108G 1.9G 106G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_engine

tmpfs tmpfs 1.6G 0 1.6G 0% /run/user/0

/dev/mapper/vmstore-lv_vmstore xfs 898G 6.3G 891G 1% /gluster_bricks/xgvmstore

[root@ovirt106 /]# fdisk -l

Disk /dev/sdb: 557.8 GiB, 598931931136 bytes, 1169788928 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd77c4977

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 2099199 2097152 1G 83 Linux

/dev/sda2 2099200 209715199 207616000 99G 8e Linux LVM

Disk /dev/sdc: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: 37.7 GiB, 40479227904 bytes, 79060992 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-swap: 4.3 GiB, 4588568576 bytes, 8962048 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_engine: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_data: 200 GiB, 214748364800 bytes, 419430400 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 262144 bytes / 262144 bytes

Disk /dev/mapper/onn-var_log_audit: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_log: 8 GiB, 8589934592 bytes, 16777216 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_crash: 10 GiB, 10737418240 bytes, 20971520 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var: 15 GiB, 16106127360 bytes, 31457280 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-tmp: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-home: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/vmstore-lv_vmstore: 1.6 TiB, 1795287941120 bytes, 3506421760 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@ovirt106 /]# umount /gluster_bricks/xgvmstore/

[root@ovirt106 /]# lvremove /gluster_bricks/xgvmstore/

"/gluster_bricks/xgvmstore/": Invalid path for Logical Volume.

[root@ovirt106 /]# lvremove lv_vmstore

Volume group "lv_vmstore" not found

Cannot process volume group lv_vmstore

[root@ovirt106 /]# lvremove /dev/mapper/vmstore-lv_vmstore

Do you really want to remove active logical volume vmstore/lv_vmstore? [y/n]: y

Logical volume "lv_vmstore" successfully removed.
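`lvremove` takes a logical volume as `VG/LV` or as a device path. A mount point or a bare LV name is rejected, as the two failed attempts above show. The sketch below derives both accepted path forms from the VG and LV names. The helper names `dm_escape` and `lv_paths` are made up for illustration and are not part of LVM.

```shell
# Double every hyphen, as device-mapper does when it builds /dev/mapper names.
dm_escape() {
    printf '%s\n' "$1" | sed 's/-/--/g'
}

# Print the two device paths that LVM commands accept for VG $1 and LV $2.
lv_paths() {
    printf '/dev/%s/%s\n' "$1" "$2"
    printf '/dev/mapper/%s-%s\n' "$(dm_escape "$1")" "$(dm_escape "$2")"
}

lv_paths vmstore lv_vmstore
```

Either printed path, or the short form `vmstore/lv_vmstore`, can be passed to `lvremove`.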

[root@ovirt106 /]# lvcreate -l 100%VG -n lv_store vmstore

WARNING: xfs signature detected on /dev/vmstore/lv_store at offset 0. Wipe it? [y/n]: y

Wiping xfs signature on /dev/vmstore/lv_store.

Logical volume "lv_store" created.

[root@ovirt106 /]# mkfs.xfs /dev/mapper/vmstore-lv_vmstore

Error accessing specified device /dev/mapper/vmstore-lv_vmstore: No such file or directory

Usage: mkfs.xfs

/* blocksize */ [-b size=num]

/* metadata */ [-m crc=0|1,finobt=0|1,uuid=xxx,rmapbt=0|1,reflink=0|1,

inobtcount=0|1,bigtime=0|1]

/* data subvol */ [-d agcount=n,agsize=n,file,name=xxx,size=num,

(sunit=value,swidth=value|su=num,sw=num|noalign),

sectsize=num

/* force overwrite */ [-f]

/* inode size */ [-i log=n|perblock=n|size=num,maxpct=n,attr=0|1|2,

projid32bit=0|1,sparse=0|1]

/* no discard */ [-K]

/* log subvol */ [-l agnum=n,internal,size=num,logdev=xxx,version=n

sunit=value|su=num,sectsize=num,lazy-count=0|1]

/* label */ [-L label (maximum 12 characters)]

/* naming */ [-n size=num,version=2|ci,ftype=0|1]

/* no-op info only */ [-N]

/* prototype file */ [-p fname]

/* quiet */ [-q]

/* realtime subvol */ [-r extsize=num,size=num,rtdev=xxx]

/* sectorsize */ [-s size=num]

/* version */ [-V]

devicename

<devicename> is required unless -d name=xxx is given.

<num> is xxx (bytes), xxxs (sectors), xxxb (fs blocks), xxxk (xxx KiB),

xxxm (xxx MiB), xxxg (xxx GiB), xxxt (xxx TiB) or xxxp (xxx PiB).

<value> is xxx (512 byte blocks).

[root@ovirt106 /]# fdisk -l

Disk /dev/sdb: 557.8 GiB, 598931931136 bytes, 1169788928 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd77c4977

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 2099199 2097152 1G 83 Linux

/dev/sda2 2099200 209715199 207616000 99G 8e Linux LVM

Disk /dev/sdc: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: 37.7 GiB, 40479227904 bytes, 79060992 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-swap: 4.3 GiB, 4588568576 bytes, 8962048 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_engine: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_data: 200 GiB, 214748364800 bytes, 419430400 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 262144 bytes / 262144 bytes

Disk /dev/mapper/onn-var_log_audit: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_log: 8 GiB, 8589934592 bytes, 16777216 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_crash: 10 GiB, 10737418240 bytes, 20971520 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var: 15 GiB, 16106127360 bytes, 31457280 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-tmp: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-home: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/vmstore-lv_store: 1.6 TiB, 1795287941120 bytes, 3506421760 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

[root@ovirt106 /]# mkfs.xfs /dev/mapper/vmstore-lv_store

meta-data=/dev/mapper/vmstore-lv_store isize=512 agcount=4, agsize=109575680 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1 bigtime=0 inobtcount=0

data = bsize=4096 blocks=438302720, imaxpct=5

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=214015, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
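The earlier `mkfs.xfs` call failed only because it still used the old name `lv_vmstore` after the volume had been recreated as `lv_store`. A small guard can catch this kind of mismatch before formatting. This is a minimal sketch: `require_block_device` is a hypothetical helper, and the script only prints the `mkfs.xfs` command instead of running it.

```shell
# Return 0 only if $1 exists and is a block device (test -b follows symlinks).
require_block_device() {
    if [ -b "$1" ]; then
        return 0
    fi
    echo "not a block device: $1" >&2
    return 1
}

dev=/dev/mapper/vmstore-lv_store
if require_block_device "$dev"; then
    # Dry run: show the command rather than formatting anything.
    echo "would run: mkfs.xfs $dev"
fi
```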

[root@ovirt106 /]# df -HT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 8.0G 0 8.0G 0% /dev

tmpfs tmpfs 8.0G 4.1k 8.0G 1% /dev/shm

tmpfs tmpfs 8.0G 202M 7.8G 3% /run

tmpfs tmpfs 8.0G 0 8.0G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 xfs 41G 7.5G 33G 19% /

/dev/mapper/gluster_vg_sdb-gluster_lv_engine xfs 108G 788M 107G 1% /gluster_bricks/engine

/dev/sda1 xfs 1.1G 367M 697M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data xfs 215G 1.6G 214G 1% /gluster_bricks/data

/dev/mapper/onn-home xfs 1.1G 42M 1.1G 4% /home

/dev/mapper/onn-var xfs 17G 532M 16G 4% /var

/dev/mapper/onn-tmp xfs 1.1G 42M 1.1G 4% /tmp

/dev/mapper/onn-var_log xfs 8.6G 306M 8.3G 4% /var/log

/dev/mapper/onn-var_crash xfs 11G 110M 11G 2% /var/crash

/dev/mapper/onn-var_log_audit xfs 2.2G 58M 2.1G 3% /var/log/audit

ovirt106.com:/data fuse.glusterfs 215G 3.7G 211G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_data

ovirt106.com:/engine fuse.glusterfs 108G 1.9G 106G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_engine

tmpfs tmpfs 1.6G 0 1.6G 0% /run/user/0

[root@ovirt106 /]# blkid

/dev/mapper/onn-var_crash: UUID="de2ea7ce-29f3-441a-8b8d-47bbcbca2b59" BLOCK_SIZE="512" TYPE="xfs"

/dev/sda2: UUID="IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK" TYPE="LVM2_member" PARTUUID="d77c4977-02"

/dev/sdb: UUID="nAG78p-lcmT-D5Bt-6w6Z-feKS-cWzG-6YZJjn" TYPE="LVM2_member"

/dev/sda1: UUID="084af988-29ed-4350-ad70-1d2f5afb8d1f" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="d77c4977-01"

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="5d49651c-c911-4a4e-94a1-d2df627a1d89" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-swap: UUID="98ff7321-fcb6-45c9-a68d-70ebc264d09f" TYPE="swap"

/dev/mapper/gluster_vg_sdb-gluster_lv_engine: UUID="52d64ba1-3064-4334-a84a-a12078f7b32b" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sdb-gluster_lv_data: UUID="51401db2-bf3a-4aba-af15-130c2d497d94" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log_audit: UUID="bc108545-59d6-4f3b-bf0c-cc5777be2a3c" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log: UUID="78fd9245-76a1-472f-8b71-2a167429ddae" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var: UUID="0e00bbf7-2d8c-45c4-a814-a7be1e04f0bd" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-tmp: UUID="9192cbbb-e48b-4b1f-8885-a681d190a363" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-home: UUID="14416f3f-0b8b-479e-af22-51f8fbf8dc2b" BLOCK_SIZE="512" TYPE="xfs"

/dev/sdc: UUID="Q3GgE2-MYmY-p5ZP-3NTz-YgA2-mJZX-Pptxst" TYPE="LVM2_member"

/dev/sdd: UUID="V003Vh-9mYN-0H2C-znGd-Xvuw-5xu9-32AoAx" TYPE="LVM2_member"

/dev/mapper/vmstore-lv_store: UUID="ae6d1e8a-669f-4716-abf4-c914651061fb" BLOCK_SIZE="512" TYPE="xfs"

[root@ovirt106 /]# vi /etc/fstab

[root@ovirt106 /]# mount -a
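The log does not show what was written to `/etc/fstab`. As a sketch only, an entry for the new filesystem could be built from the UUID that `blkid` reports for `/dev/mapper/vmstore-lv_store` and the mount point seen in `df`. The `defaults` option and the `0 0` dump/pass fields are assumptions, and `fstab_line` is a hypothetical helper.

```shell
# Format one /etc/fstab line: UUID, mount point, fs type, options, dump, pass.
# The options default to "defaults" when no fourth argument is given.
fstab_line() {
    printf 'UUID=%s %s %s %s 0 0\n' "$1" "$2" "$3" "${4:-defaults}"
}

fstab_line ae6d1e8a-669f-4716-abf4-c914651061fb /gluster_bricks/xgvmstore xfs
```

Using the UUID rather than the `/dev/mapper` name keeps the entry valid if the LV is renamed, which is what happened here when `lv_vmstore` became `lv_store`.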

[root@ovirt106 /]# df -HT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 8.0G 0 8.0G 0% /dev

tmpfs tmpfs 8.0G 4.1k 8.0G 1% /dev/shm

tmpfs tmpfs 8.0G 202M 7.8G 3% /run

tmpfs tmpfs 8.0G 0 8.0G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 xfs 41G 7.5G 33G 19% /

/dev/mapper/gluster_vg_sdb-gluster_lv_engine xfs 108G 788M 107G 1% /gluster_bricks/engine

/dev/sda1 xfs 1.1G 367M 697M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data xfs 215G 1.6G 214G 1% /gluster_bricks/data

/dev/mapper/onn-home xfs 1.1G 42M 1.1G 4% /home

/dev/mapper/onn-var xfs 17G 532M 16G 4% /var

/dev/mapper/onn-tmp xfs 1.1G 42M 1.1G 4% /tmp

/dev/mapper/onn-var_log xfs 8.6G 306M 8.3G 4% /var/log

/dev/mapper/onn-var_crash xfs 11G 110M 11G 2% /var/crash

/dev/mapper/onn-var_log_audit xfs 2.2G 58M 2.1G 3% /var/log/audit

ovirt106.com:/data fuse.glusterfs 215G 3.7G 211G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_data

ovirt106.com:/engine fuse.glusterfs 108G 1.9G 106G 2% /rhev/data-center/mnt/glusterSD/ovirt106.com:_engine

tmpfs tmpfs 1.6G 0 1.6G 0% /run/user/0

/dev/mapper/vmstore-lv_store xfs 1.8T 13G 1.8T 1% /gluster_bricks/xgvmstore

[root@ovirt106 /]# gluster volume create gs_store replica 3 node101.com:/gluster_bricks/xgvmstore node103.com:/gluster_bricks/xgvmstore ovirt106.com:/gluster_bricks/xgvmstore force

volume create: gs_store: success: please start the volume to access data

[root@ovirt106 /]#

Adding the disk fails with a permission error

[root@ovirt106 /]# ^C

[root@ovirt106 /]# gluster volume list

data

engine

gs_store

[root@ovirt106 /]# cd /gluster_bricks/xgvmstore/

[root@ovirt106 xgvmstore]# ls

[root@ovirt106 xgvmstore]# gluseter volume start gs_store

-bash: gluseter: command not found

[root@ovirt106 xgvmstore]# gluster volume start gs_store

volume start: gs_store: success

[root@ovirt106 xgvmstore]# cd ..

[root@ovirt106 gluster_bricks]# l,s

-bash: l,s: command not found

[root@ovirt106 gluster_bricks]# ls

data engine xgvmstore

[root@ovirt106 gluster_bricks]# ll

total 0

drwxr-xr-x. 3 root root 18 May 31 11:15 data

drwxr-xr-x. 3 root root 20 May 31 11:14 engine

drwxr-xr-x. 3 root root 24 Jun 7 11:21 xgvmstore

[root@ovirt106 gluster_bricks]# cd engine/

[root@ovirt106 engine]# ll

total 8

drwxr-xr-x. 5 vdsm kvm 8192 Jun 7 11:29 engine

[root@ovirt106 engine]# cd engine/

[root@ovirt106 engine]# ls

5afdcc5b-9de7-49ea-af48-85575bbcc482

[root@ovirt106 engine]# ll

total 0

drwxr-xr-x. 4 vdsm kvm 34 Jun 6 14:54 5afdcc5b-9de7-49ea-af48-85575bbcc482

[root@ovirt106 engine]# cd 5afdcc5b-9de7-49ea-af48-85575bbcc482/

[root@ovirt106 5afdcc5b-9de7-49ea-af48-85575bbcc482]# ll

total 0

drwxr-xr-x. 2 vdsm kvm 89 Jun 6 14:55 dom_md

drwxr-xr-x. 4 vdsm kvm 94 Jun 6 14:59 images

[root@ovirt106 5afdcc5b-9de7-49ea-af48-85575bbcc482]# cd ..

[root@ovirt106 engine]# ls

5afdcc5b-9de7-49ea-af48-85575bbcc482

[root@ovirt106 engine]# ls

5afdcc5b-9de7-49ea-af48-85575bbcc482

[root@ovirt106 engine]# ..

-bash: ..: command not found

[root@ovirt106 engine]# ls

5afdcc5b-9de7-49ea-af48-85575bbcc482

[root@ovirt106 engine]# cd ..

[root@ovirt106 engine]# ls

engine

[root@ovirt106 engine]# cd ..

[root@ovirt106 gluster_bricks]# ls

data engine xgvmstore

[root@ovirt106 gluster_bricks]# cd data/

[root@ovirt106 data]# ll

total 8

drwxr-xr-x. 5 vdsm kvm 8192 Jun 7 11:30 data

[root@ovirt106 data]# cd ..

[root@ovirt106 gluster_bricks]# ll

total 0

drwxr-xr-x. 3 root root 18 May 31 11:15 data

drwxr-xr-x. 3 root root 20 May 31 11:14 engine

drwxr-xr-x. 3 root root 24 Jun 7 11:21 xgvmstore

[root@ovirt106 gluster_bricks]# cd xgvmstore/

[root@ovirt106 xgvmstore]# ls

[root@ovirt106 xgvmstore]# mkdir store

[root@ovirt106 xgvmstore]# ls

store

[root@ovirt106 xgvmstore]# gluster peer status

Number of Peers: 2

Hostname: node101.com

Uuid: 8e61e896-60a0-44b5-8136-2477715d1dcd

State: Peer in Cluster (Connected)

Hostname: node103.com

Uuid: 8695cb7c-7813-4139-beb6-6eed940b6ff7

State: Peer in Cluster (Connected)

[root@ovirt106 xgvmstore]# gluster info

unrecognized word: info (position 0)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory

[root@ovirt106 xgvmstore]# gluster volume status

Status of volume: data

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick node101.com:/gluster_bricks/data/data 49154 0 Y 11362

Brick node103.com:/gluster_bricks/data/data 49152 0 Y 2963

Brick ovirt106.com:/gluster_bricks/data/dat

a 49152 0 Y 9180

Self-heal Daemon on localhost N/A N/A Y 9204

Self-heal Daemon on node103.com N/A N/A Y 3032

Self-heal Daemon on node101.com N/A N/A Y 2974

Task Status of Volume data

------------------------------------------------------------------------------

There are no active volume tasks

Status of volume: engine

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick node101.com:/gluster_bricks/engine/en

gine 49155 0 Y 11373

Brick node103.com:/gluster_bricks/engine/en

gine 49153 0 Y 2988

Brick ovirt106.com:/gluster_bricks/engine/e

ngine 49153 0 Y 9193

Self-heal Daemon on localhost N/A N/A Y 9204

Self-heal Daemon on node101.com N/A N/A Y 2974

Self-heal Daemon on node103.com N/A N/A Y 3032

Task Status of Volume engine

------------------------------------------------------------------------------

There are no active volume tasks

Status of volume: gs_store

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick node101.com:/gluster_bricks/xgvmstore 49152 0 Y 103067

Brick node103.com:/gluster_bricks/xgvmstore 49154 0 Y 54523

Brick ovirt106.com:/gluster_bricks/xgvmstor

e 49154 0 Y 165193

Self-heal Daemon on localhost N/A N/A Y 9204

Self-heal Daemon on node101.com N/A N/A Y 2974

Self-heal Daemon on node103.com N/A N/A Y 3032

Task Status of Volume gs_store

------------------------------------------------------------------------------

There are no active volume tasks

[root@ovirt106 xgvmstore]# ls

store

[root@ovirt106 xgvmstore]# rm store

rm: cannot remove 'store': Is a directory

[root@ovirt106 xgvmstore]# rm -rf store/

[root@ovirt106 xgvmstore]# ls

[root@ovirt106 xgvmstore]# cd ..

[root@ovirt106 gluster_bricks]# ls

data engine xgvmstore

[root@ovirt106 gluster_bricks]# chown -R vdsm:kvm /gluster_bricks/xgvmstore/

[root@ovirt106 gluster_bricks]# ll

total 0

drwxr-xr-x. 3 root root 18 May 31 11:15 data

drwxr-xr-x. 3 root root 20 May 31 11:14 engine

drwxr-xr-x. 3 vdsm kvm 24 Jun 7 11:38 xgvmstore
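The `chown` above changes ownership only on this node's brick directory. Gluster also has the volume options `storage.owner-uid` and `storage.owner-gid`, and the `gluster volume info` output later in this log shows both set to 36 on the `data` and `engine` volumes. Whether `gs_store` needs the same values is an assumption. The sketch below only prints the commands, and `owner_cmds` is a hypothetical helper.

```shell
# Print (not run) the commands that would set brick ownership on a volume.
# uid/gid default to 36, the value seen on the data and engine volumes.
owner_cmds() {
    vol="$1"
    uid="${2:-36}"
    gid="${3:-36}"
    echo "gluster volume set $vol storage.owner-uid $uid"
    echo "gluster volume set $vol storage.owner-gid $gid"
}

owner_cmds gs_store
```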

[root@ovirt106 gluster_bricks]# pwd

/gluster_bricks

[root@ovirt106 gluster_bricks]# cd data/

[root@ovirt106 data]# ll

total 8

drwxr-xr-x. 5 vdsm kvm 8192 Jun 7 11:40 data

[root@ovirt106 data]#

Delete the gluster volume and recreate it as a distributed volume

node status: OK

See `nodectl check` for more information

Admin Console: https://172.16.100.106:9090/

[root@ovirt106 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

devtmpfs 7.4G 0 7.4G 0% /dev

tmpfs 7.5G 4.0K 7.5G 1% /dev/shm

tmpfs 7.5G 17M 7.5G 1% /run

tmpfs 7.5G 0 7.5G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 38G 7.0G 31G 19% /

/dev/mapper/vmstore-lv_store 1.7T 12G 1.7T 1% /gluster_bricks/xgvmstore

/dev/mapper/gluster_vg_sdb-gluster_lv_engine 100G 99G 1.3G 99% /gluster_bricks/engine

/dev/sda1 1014M 350M 665M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data 200G 7.0G 193G 4% /gluster_bricks/data

/dev/mapper/onn-var 15G 624M 15G 5% /var

/dev/mapper/onn-home 1014M 40M 975M 4% /home

/dev/mapper/onn-var_crash 10G 105M 9.9G 2% /var/crash

/dev/mapper/onn-var_log 8.0G 298M 7.7G 4% /var/log

/dev/mapper/onn-tmp 1014M 40M 975M 4% /tmp

/dev/mapper/onn-var_log_audit 2.0G 56M 2.0G 3% /var/log/audit

tmpfs 1.5G 0 1.5G 0% /run/user/0

[root@ovirt106 ~]# blkid

/dev/mapper/onn-var_crash: UUID="de2ea7ce-29f3-441a-8b8d-47bbcbca2b59" BLOCK_SIZE="512" TYPE="xfs"

/dev/sda2: UUID="IrtXBh-SUfm-t6C6-We8L-WB5I-byci-cpBYCK" TYPE="LVM2_member" PARTUUID="d77c4977-02"

/dev/sda1: UUID="084af988-29ed-4350-ad70-1d2f5afb8d1f" BLOCK_SIZE="512" TYPE="xfs" PARTUUID="d77c4977-01"

/dev/sdb: UUID="nAG78p-lcmT-D5Bt-6w6Z-feKS-cWzG-6YZJjn" TYPE="LVM2_member"

/dev/sdc: UUID="Q3GgE2-MYmY-p5ZP-3NTz-YgA2-mJZX-Pptxst" TYPE="LVM2_member"

/dev/sdd: UUID="V003Vh-9mYN-0H2C-znGd-Xvuw-5xu9-32AoAx" TYPE="LVM2_member"

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: UUID="5d49651c-c911-4a4e-94a1-d2df627a1d89" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-swap: UUID="98ff7321-fcb6-45c9-a68d-70ebc264d09f" TYPE="swap"

/dev/mapper/vmstore-lv_store: UUID="ae6d1e8a-669f-4716-abf4-c914651061fb" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sdb-gluster_lv_engine: UUID="52d64ba1-3064-4334-a84a-a12078f7b32b" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/gluster_vg_sdb-gluster_lv_data: UUID="51401db2-bf3a-4aba-af15-130c2d497d94" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log_audit: UUID="bc108545-59d6-4f3b-bf0c-cc5777be2a3c" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var_log: UUID="78fd9245-76a1-472f-8b71-2a167429ddae" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-var: UUID="0e00bbf7-2d8c-45c4-a814-a7be1e04f0bd" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-tmp: UUID="9192cbbb-e48b-4b1f-8885-a681d190a363" BLOCK_SIZE="512" TYPE="xfs"

/dev/mapper/onn-home: UUID="14416f3f-0b8b-479e-af22-51f8fbf8dc2b" BLOCK_SIZE="512" TYPE="xfs"

[root@ovirt106 ~]# fdisk -l

Disk /dev/sda: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: dos

Disk identifier: 0xd77c4977

Device Boot Start End Sectors Size Id Type

/dev/sda1 * 2048 2099199 2097152 1G 83 Linux

/dev/sda2 2099200 209715199 207616000 99G 8e Linux LVM

Disk /dev/sdb: 557.8 GiB, 598931931136 bytes, 1169788928 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdc: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sdd: 836 GiB, 897648164864 bytes, 1753219072 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1: 37.7 GiB, 40479227904 bytes, 79060992 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-swap: 4.3 GiB, 4588568576 bytes, 8962048 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/vmstore-lv_store: 1.6 TiB, 1795287941120 bytes, 3506421760 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_engine: 100 GiB, 107374182400 bytes, 209715200 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/mapper/gluster_vg_sdb-gluster_lv_data: 200 GiB, 214748364800 bytes, 419430400 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 262144 bytes / 262144 bytes

Disk /dev/mapper/onn-var_log_audit: 2 GiB, 2147483648 bytes, 4194304 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_log: 8 GiB, 8589934592 bytes, 16777216 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var_crash: 10 GiB, 10737418240 bytes, 20971520 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-var: 15 GiB, 16106127360 bytes, 31457280 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-tmp: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

Disk /dev/mapper/onn-home: 1 GiB, 1073741824 bytes, 2097152 sectors

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 65536 bytes / 65536 bytes

[root@ovirt106 ~]# lvscan

ACTIVE '/dev/vmstore/lv_store' [1.63 TiB] inherit

ACTIVE '/dev/gluster_vg_sdb/gluster_thinpool_gluster_vg_sdb' [<198.00 GiB] inherit

ACTIVE '/dev/gluster_vg_sdb/gluster_lv_engine' [100.00 GiB] inherit

ACTIVE '/dev/gluster_vg_sdb/gluster_lv_data' [200.00 GiB] inherit

ACTIVE '/dev/onn/pool00' [<74.70 GiB] inherit

ACTIVE '/dev/onn/var_log_audit' [2.00 GiB] inherit

ACTIVE '/dev/onn/var_log' [8.00 GiB] inherit

ACTIVE '/dev/onn/var_crash' [10.00 GiB] inherit

ACTIVE '/dev/onn/var' [15.00 GiB] inherit

ACTIVE '/dev/onn/tmp' [1.00 GiB] inherit

ACTIVE '/dev/onn/home' [1.00 GiB] inherit

inactive '/dev/onn/root' [<37.70 GiB] inherit

ACTIVE '/dev/onn/swap' [4.27 GiB] inherit

inactive '/dev/onn/ovirt-node-ng-4.4.10.2-0.20220303.0' [<37.70 GiB] inherit

ACTIVE '/dev/onn/ovirt-node-ng-4.4.10.2-0.20220303.0+1' [<37.70 GiB] inherit

[root@ovirt106 ~]# pvscan

PV /dev/sdc VG vmstore lvm2 [<836.00 GiB / 0 free]

PV /dev/sdd VG vmstore lvm2 [<836.00 GiB / 0 free]

PV /dev/sdb VG gluster_vg_sdb lvm2 [<300.00 GiB / 0 free]

PV /dev/sda2 VG onn lvm2 [<99.00 GiB / 18.02 GiB free]

Total: 4 [2.02 TiB] / in use: 4 [2.02 TiB] / in no VG: 0 [0 ]

[root@ovirt106 ~]# df -HT

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 8.0G 0 8.0G 0% /dev

tmpfs tmpfs 8.0G 4.1k 8.0G 1% /dev/shm

tmpfs tmpfs 8.0G 18M 8.0G 1% /run

tmpfs tmpfs 8.0G 0 8.0G 0% /sys/fs/cgroup

/dev/mapper/onn-ovirt--node--ng--4.4.10.2--0.20220303.0+1 xfs 41G 7.5G 33G 19% /

/dev/mapper/vmstore-lv_store xfs 1.8T 13G 1.8T 1% /gluster_bricks/xgvmstore

/dev/mapper/gluster_vg_sdb-gluster_lv_engine xfs 108G 107G 1.4G 99% /gluster_bricks/engine

/dev/sda1 xfs 1.1G 367M 697M 35% /boot

/dev/mapper/gluster_vg_sdb-gluster_lv_data xfs 215G 7.5G 208G 4% /gluster_bricks/data

/dev/mapper/onn-var xfs 17G 532M 16G 4% /var

/dev/mapper/onn-home xfs 1.1G 42M 1.1G 4% /home

/dev/mapper/onn-var_crash xfs 11G 110M 11G 2% /var/crash

/dev/mapper/onn-var_log xfs 8.6G 313M 8.3G 4% /var/log

/dev/mapper/onn-tmp xfs 1.1G 42M 1.1G 4% /tmp

/dev/mapper/onn-var_log_audit xfs 2.2G 59M 2.1G 3% /var/log/audit

tmpfs tmpfs 1.6G 0 1.6G 0% /run/user/0

ovirt106.com:/data fuse.glusterfs 215G 9.6G 206G 5% /rhev/data-center/mnt/glusterSD/ovirt106.com:_data

ovirt106.com:/engine fuse.glusterfs 108G 108G 229M 100% /rhev/data-center/mnt/glusterSD/ovirt106.com:_engine

[root@ovirt106 ~]# gluster volume gs_store

unrecognized word: gs_store (position 1)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory

[root@ovirt106 ~]# gluster volume status

Status of volume: data

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick node101.com:/gluster_bricks/data/data 49154 0 Y 11362

Brick node103.com:/gluster_bricks/data/data 49152 0 Y 2963

Brick ovirt106.com:/gluster_bricks/data/dat

a 49152 0 Y 1979

Self-heal Daemon on localhost N/A N/A Y 2067

Self-heal Daemon on node101.com N/A N/A Y 2974

Self-heal Daemon on node103.com N/A N/A Y 3032

Task Status of Volume data

------------------------------------------------------------------------------

There are no active volume tasks

Status of volume: engine

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick node101.com:/gluster_bricks/engine/en

gine 49155 0 Y 11373

Brick node103.com:/gluster_bricks/engine/en

gine 49153 0 Y 2988

Brick ovirt106.com:/gluster_bricks/engine/e

ngine 49153 0 Y 2007

Self-heal Daemon on localhost N/A N/A Y 2067

Self-heal Daemon on node101.com N/A N/A Y 2974

Self-heal Daemon on node103.com N/A N/A Y 3032

Task Status of Volume engine

------------------------------------------------------------------------------

There are no active volume tasks

Volume gs_store is not started

[root@ovirt106 ~]# gluster volume gs_store

unrecognized word: gs_store (position 1)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory

[root@ovirt106 ~]# gluster volume data

unrecognized word: data (position 1)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory

[root@ovirt106 ~]# gluster volume data status

unrecognized word: data (position 1)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory
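The "unrecognized word" errors come from argument order: the `gluster volume` subcommand (`status`, `info`, `start`, and so on) goes before the volume name. A tiny sketch that assembles the command in the right order is shown below. `gluster_vol_cmd` is a hypothetical helper and only prints the command line.

```shell
# Build a gluster CLI call: subcommand first ($1), then the volume name ($2).
gluster_vol_cmd() {
    echo "gluster volume $1 $2"
}

gluster_vol_cmd status data
gluster_vol_cmd info gs_store
```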

[root@ovirt106 ~]# gluster volume delete gs_store

Deleting volume will erase all information about the volume. Do you want to continue? (y/n) y

volume delete: gs_store: success
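The delete went through directly because `gluster volume status` had reported that `gs_store` was not started. A volume that is still running has to be stopped before it can be deleted. The sketch below prints the two commands in that order. `delete_cmds` is a hypothetical helper, and nothing is executed.

```shell
# Print the stop and delete commands for volume $1, in the order
# gluster requires for a volume that is currently started.
delete_cmds() {
    echo "gluster volume stop $1"
    echo "gluster volume delete $1"
}

delete_cmds gs_store
```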

[root@ovirt106 ~]# gluster volume data status

unrecognized word: data (position 1)

Usage: gluster [options] <help> <peer> <pool> <volume>

Options:

--help Shows the help information

--version Shows the version

--print-logdir Shows the log directory

--print-statedumpdir Shows the state dump directory

[root@ovirt106 ~]# gluster volume info

Volume Name: data

Type: Replicate

Volume ID: 5db6c160-09c5-460b-8d1d-f79cb87dc73b

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: node101.com:/gluster_bricks/data/data

Brick2: node103.com:/gluster_bricks/data/data

Brick3: ovirt106.com:/gluster_bricks/data/data

Options Reconfigured:

performance.client-io-threads: on

nfs.disable: on

transport.address-family: inet

storage.fips-mode-rchecksum: on

performance.quick-read: off

performance.read-ahead: off

performance.io-cache: off

performance.low-prio-threads: 32

network.remote-dio: off

performance.strict-o-direct: on

cluster.eager-lock: enable

cluster.quorum-type: auto

cluster.server-quorum-type: server

cluster.data-self-heal-algorithm: full

cluster.locking-scheme: granular

cluster.shd-max-threads: 8

cluster.shd-wait-qlength: 10000

features.shard: on

user.cifs: off

cluster.choose-local: off

client.event-threads: 4

server.event-threads: 4

network.ping-timeout: 30

server.tcp-user-timeout: 20

server.keepalive-time: 10

server.keepalive-interval: 2

server.keepalive-count: 5

cluster.lookup-optimize: off

storage.owner-uid: 36

storage.owner-gid: 36

cluster.granular-entry-heal: enable

Volume Name: engine

Type: Replicate

Volume ID: 47cb595e-018e-43b7-b387-26a1d83bb5ff

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: node101.com:/gluster_bricks/engine/engine

Brick2: node103.com:/gluster_bricks/engine/engine

Brick3: ovirt106.com:/gluster_bricks/engine/engine

Options Reconfigured:

performance.client-io-threads: on

nfs.disable: on

transport.address-family: inet

storage.fips-mode-rchecksum: on

performance.quick-read: off

performance.read-ahead: off

performance.io-cache: off

performance.low-prio-threads: 32

network.remote-dio: off

performance.strict-o-direct: on

cluster.eager-lock: enable

cluster.quorum-type: auto

cluster.server-quorum-type: server

cluster.data-self-heal-algorithm: full

cluster.locking-scheme: granular

cluster.shd-max-threads: 8

cluster.shd-wait-qlength: 10000

features.shard: on

user.cifs: off

cluster.choose-local: off

client.event-threads: 4

server.event-threads: 4

network.ping-timeout: 30

server.tcp-user-timeout: 20

server.keepalive-time: 10

server.keepalive-interval: 2

server.keepalive-count: 5

cluster.lookup-optimize: off

storage.owner-uid: 36

storage.owner-gid: 36

cluster.granular-entry-heal: enable

[root@ovirt106 ~]# gluster volume create gs_store node101.com:/gluster_bricks/xgvmstore node103.com:/gluster_bricks/xgvmstore ovirt106.com:/gluster_bricks/xgvmstore force

volume create: gs_store: success: please start the volume to access data

[root@ovirt106 ~]#
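Because the create command above names no `replica` count, gluster builds a plain Distribute volume, unlike the replicated `data` and `engine` volumes. If a three-way replicated layout were wanted instead, the command would need a `replica` keyword before the brick list. The following is only a dry-run sketch: `build_replica_create` is a hypothetical helper that prints the command rather than running it, and it assumes the replica count should equal the number of bricks.

```shell
# Print (do not run) a create command for a replicated volume.
# build_replica_create is a hypothetical helper, not part of the gluster CLI.
# Assumption: replica count = number of bricks passed in.
build_replica_create() {
  vol=$1; shift
  printf 'gluster volume create %s replica %d' "$vol" "$#"
  for brick in "$@"; do
    printf ' %s' "$brick"
  done
  printf ' force\n'
}

# Same volume name and brick paths as in the transcript above.
build_replica_create gs_store \
  node101.com:/gluster_bricks/xgvmstore \
  node103.com:/gluster_bricks/xgvmstore \
  ovirt106.com:/gluster_bricks/xgvmstore
```

Review the printed command before running it by hand on a cluster node.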

Start the volume

[root@ovirt106 ~]# gluster volume start gs_store

volume start: gs_store: success

[root@ovirt106 ~]# gluster volume info

Volume Name: data

Type: Replicate

Volume ID: 5db6c160-09c5-460b-8d1d-f79cb87dc73b

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: node101.com:/gluster_bricks/data/data

Brick2: node103.com:/gluster_bricks/data/data

Brick3: ovirt106.com:/gluster_bricks/data/data

Options Reconfigured:

performance.client-io-threads: on

nfs.disable: on

transport.address-family: inet

storage.fips-mode-rchecksum: on

performance.quick-read: off

performance.read-ahead: off

performance.io-cache: off

performance.low-prio-threads: 32

network.remote-dio: off

performance.strict-o-direct: on

cluster.eager-lock: enable

cluster.quorum-type: auto

cluster.server-quorum-type: server

cluster.data-self-heal-algorithm: full

cluster.locking-scheme: granular

cluster.shd-max-threads: 8

cluster.shd-wait-qlength: 10000

features.shard: on

user.cifs: off

cluster.choose-local: off

client.event-threads: 4

server.event-threads: 4

network.ping-timeout: 30

server.tcp-user-timeout: 20

server.keepalive-time: 10

server.keepalive-interval: 2

server.keepalive-count: 5

cluster.lookup-optimize: off

storage.owner-uid: 36

storage.owner-gid: 36

cluster.granular-entry-heal: enable

Volume Name: engine

Type: Replicate

Volume ID: 47cb595e-018e-43b7-b387-26a1d83bb5ff

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: node101.com:/gluster_bricks/engine/engine

Brick2: node103.com:/gluster_bricks/engine/engine

Brick3: ovirt106.com:/gluster_bricks/engine/engine

Options Reconfigured:

performance.client-io-threads: on

nfs.disable: on

transport.address-family: inet

storage.fips-mode-rchecksum: on

performance.quick-read: off

performance.read-ahead: off

performance.io-cache: off

performance.low-prio-threads: 32

network.remote-dio: off

performance.strict-o-direct: on

cluster.eager-lock: enable

cluster.quorum-type: auto

cluster.server-quorum-type: server

cluster.data-self-heal-algorithm: full

cluster.locking-scheme: granular

cluster.shd-max-threads: 8

cluster.shd-wait-qlength: 10000

features.shard: on

user.cifs: off

cluster.choose-local: off

client.event-threads: 4

server.event-threads: 4

network.ping-timeout: 30

server.tcp-user-timeout: 20

server.keepalive-time: 10

server.keepalive-interval: 2

server.keepalive-count: 5

cluster.lookup-optimize: off

storage.owner-uid: 36

storage.owner-gid: 36

cluster.granular-entry-heal: enable

Volume Name: gs_store

Type: Distribute

Volume ID: e6ae7082-7ba6-4e02-9656-2f8a3792350c

Status: Started

Snapshot Count: 0

Number of Bricks: 3

Transport-type: tcp

Bricks:

Brick1: node101.com:/gluster_bricks/xgvmstore

Brick2: node103.com:/gluster_bricks/xgvmstore

Brick3: ovirt106.com:/gluster_bricks/xgvmstore

Options Reconfigured:

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

[root@ovirt106 ~]#
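With three volumes, the full `gluster volume info` listing is long. The sketch below condenses it to one line per volume (name, type, brick count), so it is easy to see that `gs_store` is a Distribute volume while `data` and `engine` are Replicate. `summarize_volumes` is a hypothetical helper name. It assumes the `Key: value` line layout shown in the listing above.

```shell
# Summarize `gluster volume info` output read from stdin as "name type bricks".
# summarize_volumes is a hypothetical helper, not a gluster subcommand.
summarize_volumes() {
  awk -F': ' '
    /^Volume Name:/      { name = $2 }           # remember the current volume
    /^Type:/             { type = $2 }           # e.g. Replicate or Distribute
    /^Number of Bricks:/ { print name, type, $2 }
  '
}

# Usage on a cluster node (requires the gluster CLI):
#   gluster volume info | summarize_volumes
```

For the listing above, this would print `data Replicate 1 x 3 = 3`, `engine Replicate 1 x 3 = 3`, and `gs_store Distribute 3`.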

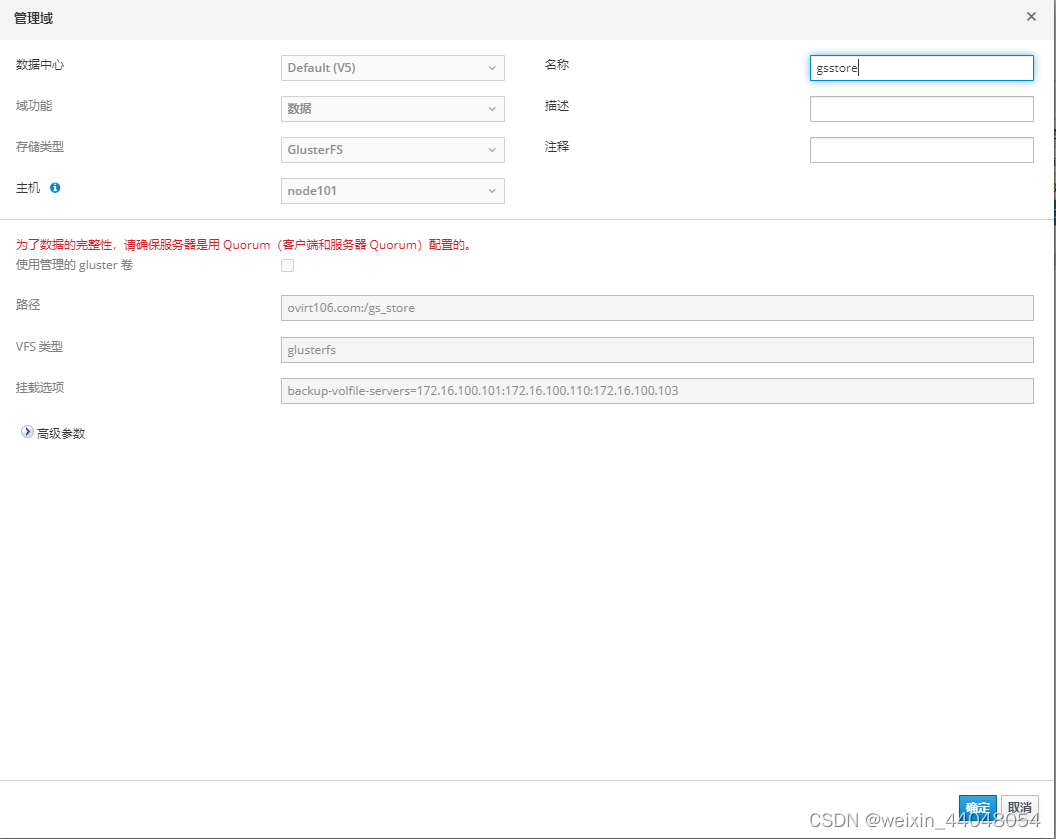

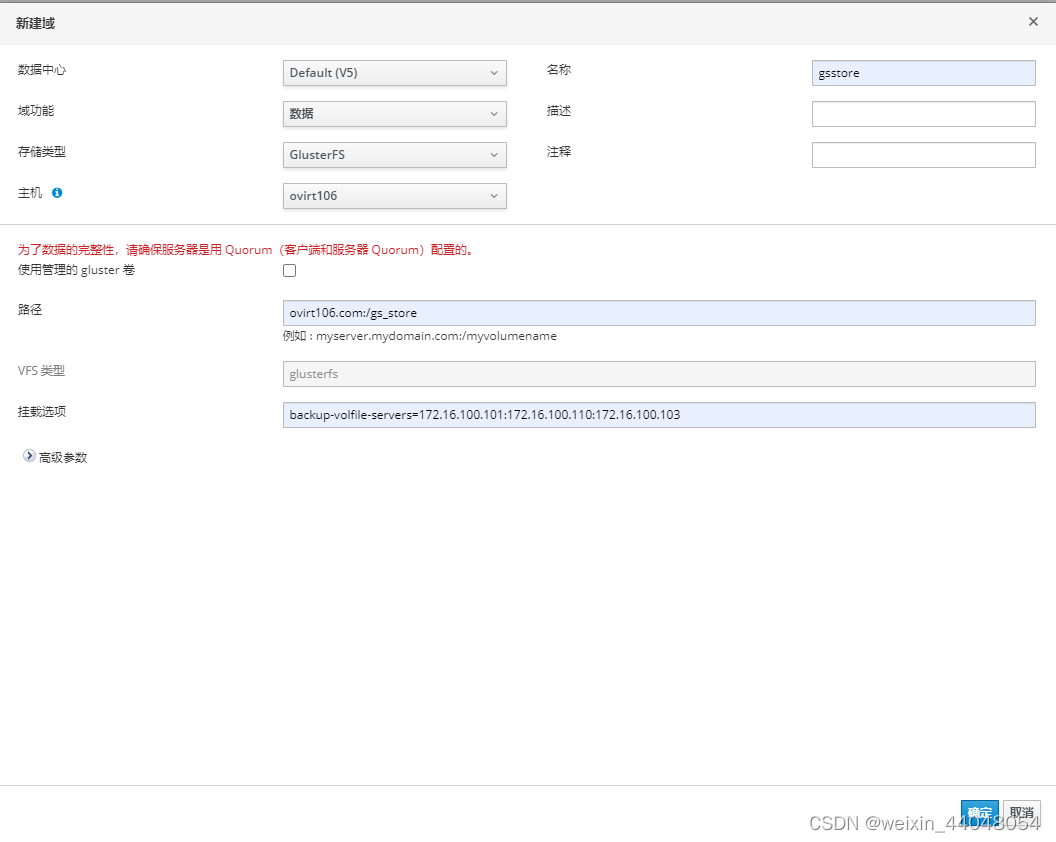

Add the storage domain
