Unifying Large Language Models and Knowledge Graphs: A Roadmap

• Shirui Pan is with the School of Information and Communication Technology and Institute for Integrated and Intelligent Systems (IIIS), Griffith University, Queensland, Australia. Email: s.pan@griffith.edu.au;

• Linhao Luo and Yufei Wang are with the Department of Data Science and AI, Monash University, Melbourne, Australia. E-mail:linhao.luo@monash.edu, garyyufei@gmail.com.

• Chen Chen is with the Nanyang Technological University, Singapore. E-mail: s190009@ntu.edu.sg.

• Jiapu Wang is with the Faculty of Information Technology, Beijing University of Technology, Beijing, China. E-mail:jpwang@emails.bjut.edu.cn.

• Xindong Wu is with the Key Laboratory of Knowledge Engineering with Big Data (the Ministry of Education of China), Hefei University of

Technology, Hefei, China; He is also affiliated with the Research Center for Knowledge Engineering, Zhejiang Lab, Hangzhou, China. Email:xwu@hfut.edu.cn.

• Shirui Pan and Linhao Luo contributed equally to this work.

• Corresponding Author: Xindong Wu.

• 潘石瑞就职于澳⼤利亚昆⼠兰州格⾥菲斯⼤学信息与通信技术学院和集成与智能系统研究所 (IIIS)。电⼦邮件:s.pan@griffith.edu.au;

• 罗林浩和王玉飞来⾃澳⼤利亚墨尔本莫纳什⼤学数据科学与⼈⼯智能系。电⼦邮箱:lin hao.luo@monash.edu、garyyufei@gmail.com。

• 陈晨来⾃新加坡南洋理⼯⼤学。电⼦邮件:s190009@ntu.edu.sg。

• 王家普在中国北京北京⼯业⼤学信息技术学院⼯作。电⼦邮箱:jpwang@emails.bjut.edu.cn。

•吴新东,合肥⼯业⼤学⼤数据知识⼯程重点实验室(中国教育部),中国合肥;他还⾪属于中国杭州浙江实验室知识⼯程研究中⼼。邮箱:xwu@hfut.edu.cn。

• 潘石瑞和罗林浩对这项⼯作做出了同等贡献。

•通讯作者:吴新东

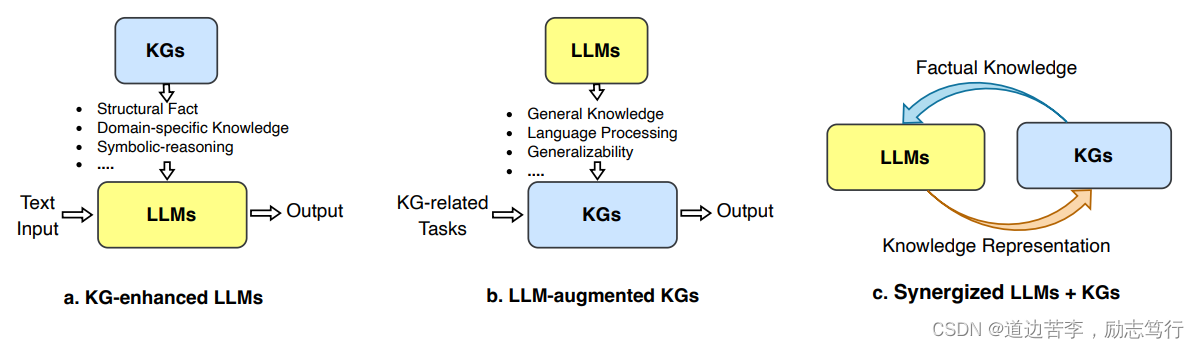

Abstract—Large language models (LLMs), such as ChatGPT and GPT4, are making new waves in the field of natural language processing and artificial intelligence, due to their emergent ability and generalizability. However, LLMs are black-box models, which often fall short of capturing and accessing factual knowledge. In contrast, Knowledge Graphs (KGs), Wikipedia and Huapu for example,are structured knowledge models that explicitly store rich factual knowledge. KGs can enhance LLMs by providing external knowledge for inference and interpretability. Meanwhile, KGs are difficult to construct and evolving by nature, which challenges the existing methods in KGs to generate new facts and represent unseen knowledge. Therefore, it is complementary to unify LLMs and KGs together and simultaneously leverage their advantages. In this article, we present a forward-looking roadmap for the unification of LLMs and KGs. Our roadmap consists of three general frameworks, namely, 1) KG-enhanced LLMs, which incorporate KGs during the pre-training and inference phases of LLMs, or for the purpose of enhancing understanding of the knowledge learned by LLMs; 2) LLM-augmented KGs, that leverage LLMs for different KG tasks such as embedding, completion, construction, graph-to-text generation, and question answering; and 3) Synergized LLMs + KGs, in which LLMs and KGs play equal roles and work in a mutually beneficial way to enhance both LLMs and KGs for bidirectional reasoning driven by both data and knowledge. We review and summarize existing efforts within these three frameworks in our roadmap and pinpoint their future research directions.

摘要——大型语言模型(LLM) 如ChaGPT和GPT4,由于其涌现能力和可推广性正在自然语言处理和人工智能领域掀起新的浪潮。然而,LLM是黑匣子模型,通常无法捕捉和获取事实知识。相反,知识图(KGs) ,例如维基百科和华谱是明确存储丰富事实知识的结构化知识模型。KGs可以通过为推理和可解释性提供外部知识来增强LLM。同时KGs在本质上很难构建和进化,这对KGs中现有的生成新事实和表示未知知识的方法提出了挑战。因此,将LLM和KGs统一在一起并同时利用它们的优势是相辅相成的。在本文中,我们提出了LLM和KGs统一的前瞻性路钱图。我们的路线图由三个通用框架姐成,即:1 )KG增强LLM,它在LLM的预训练和推理阶段结合了KG,或者是为了增强对LLM所学知识的理解;2)LLM增强的KG,利用LLM执行不同的KG任务,如嵌入、完成、构建、图到文本生成和问答;以及3)协同LLIA+KGs,其中LLM和KGs扮演着平等的角色,并以互利的方式工作,以增强LLM和KG,实现由数据和知识驱动的双向推理。我们在路线图中回顾和总结了这三个框架内的现有努力,并确定了它们未来的研究方向。

Index Terms—Natural Language Processing, Large Language Models, Generative Pre-Training, Knowledge Graphs, Roadmap,Bidirectional Reasoning.

索引术语——⾃然语⾔处理、⼤型语⾔模型、⽣成预训练、知识图、路线图、双向推理。

1 INTRODUCTION

1 简介

Large language models (LLMs)

1

^1

1(e.g., BERT [1], RoBERTA[2], and T5 [3]), pre-trained on the large-scale corpus,have shown great performance in various natural language processing (NLP) tasks, such as question answering [4],machine translation [5], and text generation [6]. Recently,the dramatically increasing model size further enables the LLMs with the emergent ability [7], paving the road for applying LLMs as Artificial General Intelligence (AGI).Advanced LLMs like ChatGPT

2

^2

2and PaLM2

3

^3

3, with billions of parameters, exhibit great potential in many complex practical tasks, such as education [8], code generation [9] and recommendation [10].

在大规模语料库上进行预训练的大型语模型(LLM)

1

^1

1(例如,BERT[1]、ROBERTA[2]和T5[3])在各种自然语言处理(NLP)任务中表现出了良好的性能,例如问答[4]机器翻译[5]和文本生成[6]。最近,模型大小的急剧增加进一步使LLM具有了涌现能力[7],为LLM作为人工通用智能(AGI)的应用铺平了道路。ChatGPT

2

^2

2和PaLM2

3

^3

3等具有数十亿参数的高级LLM在许多复杂的实际任务中表现出巨大的潜力,如教育[8]、代码生成[9]和推荐[10]。

- LLMs are also known as pre-trained language models (PLMs).

1.LLM也称为预训练语言模型(PLM) - https://openai.com/blog/chatgpt

- https://ai.google/discover/palm2

Despite their success in many applications, LLMs have been criticized for their lack of factual knowledge. Specifically, LLMs memorize facts and knowledge contained in the training corpus [14]. However, further studies reveal that LLMs are not able to recall facts and often experience hallucinations by generating statements that are factually incorrect [15], [28]. For example, LLMs might say “Einstein discovered gravity in 1687” when asked, “When did Einstein discover gravity?”, which contradicts the fact that Isaac Newton formulated the gravitational theory. This issue severely impairs the trustworthiness of LLMs.

尽管 LLM 已有许多成功应用,但由于缺乏事实知识,它们还是备受诟病。具体来说,LLM 会记忆训练语料库中包含的事实和知识[14]。但是,进一步的研究表明,LLM 无法回忆出事实,而且往往还会出现幻觉问题,即生成具有错误事实的表述 [15], [28]。举个例子,如果向 LLM 提问:“爱因斯坦在什么时候发现了引力?”它可能会说:“爱因斯坦在 1687 年发现了引力。”但事实上,提出引力理论的人是艾萨克・牛顿。这种问题会严重损害 LLM 的可信度。

As black-box models, LLMs are also criticized for their lack of interpretability. LLMs represent knowledge implicitly in their parameters. It is difficult to interpret or validate the knowledge obtained by LLMs. Moreover, LLMs perform reasoning by a probability model, which is an indecisive process [16]. The specific patterns and functions LLMs used to arrive at predictions or decisions are not directly accessible or explainable to humans [17]. Even though some LLMs are equipped to explain their predictions by applying chain-of-thought [29], their reasoning explanations also suffer from the hallucination issue [30]. This severely impairs the application of LLMs in high-stakes scenarios, such as medical diagnosis and legal judgment. For instance, in a medical diagnosis scenario, LLMs may incorrectly diagnose a disease and provide explanations that contradict medical commonsense. This raises another issue that LLMs trained on general corpus might not be able to generalize well to specific domains or new knowledge due to the lack of domain-specific knowledge or new training data [18].

作为黑盒模型,LLM也因其缺乏可解释性而受到批评。LLM 通过参数隐含地表示知识。因此,我们难以解释和验证 LLM 获得的知识。此外,LLM通过概率模型进行推理,而这是一个非决断性的过程[16]。对于 LLM 用以得出预测结果和决策的具体模式和功能,人类难以直接获得详情和解释[17]。尽管通过使用思维链(chain-of-thought)[29],某些 LLM 具备解释自身预测结果的功能,但它们推理出的解释依然存在幻觉问题[30]。这会严重影响 LLM 在事关重大的场景中的应用,如医疗诊断和法律评判。举个例子,在医疗诊断场景中,LLM 可能误诊并提供与医疗常识相悖的解释。这就引出了另一个问题:在一般语料库上训练的 LLM 由于缺乏特定领域的知识或新训练数据,可能无法很好地泛化到特定领域或新知识上[18]。

To address the above issues, a potential solution is to incorporate knowledge graphs (KGs) into LLMs. Knowledge graphs (KGs), storing enormous facts in the way of triples,i.e., (head entity, relation, tail entity), are a structured and decisive manner of knowledge representation (e.g., Wikidata [20], YAGO [31], and NELL [32]). KGs are crucial for various applications as they offer accurate explicit knowledge [19]. Besides, they are renowned for their symbolic reasoning ability [22], which generates interpretable results. KGs can also actively evolve with new knowledge continuously added in [24]. Additionally, experts can construct domain-specific KGs to provide precise and dependable domain-specific knowledge [23].

为了解决上述问题,一个潜在的解决方案是将知识图谱(KG)整合进 LLM 中。知识图谱能以三元组的形式存储巨量事实,即 (头实体、关系、尾实体),因此知识图谱是一种结构化和决断性的知识表征形式,(例如,Wikidata[20]、YAGO[31]、和NELL[32])。知识图谱对多种应用而言都至关重要,因为其能提供准确、明确的知识[19]。此外,此外,众所周知它们还具有很棒的符号推理能力22],这能生成可解释的结果。知识图谱还能随着新知识的持续输入而积极演进[24]。此外,通过让专家来构建特定领域的知识图谱,就能具备提供精确可靠的特定领域知识的能力[23]。

Nevertheless, KGs are difficult to construct [33], and current approaches in KGs [25], [27], [34] are inadequate in handling the incomplete and dynamically changing nature of real-world KGs. These approaches fail to effectively model unseen entities and represent new facts. In addition, they often ignore the abundant textual information in KGs.Moreover, existing methods in KGs are often customized for specific KGs or tasks, which are not generalizable enough.Therefore, it is also necessary to utilize LLMs to address the challenges faced in KGs. We summarize the pros and cons of LLMs and KGs in Fig. 1, respectively.

然而,知识图谱很难构建[33],并且由于真实世界知识图谱[25]、[27]、[34]往往是不完备的,还会动态变化,因此当前的知识图谱方法难以应对。这些方法无法有效建模未见过的实体以及表征新知识。此外,知识图谱中丰富的文本信息往往会被忽视。不仅如此,知识图谱的现有方法往往是针对特定知识图谱或任务定制的,泛化能力不足。因此,有必要使用 LLM 来解决知识图谱面临的挑战。图 1 总结了 LLM 和知识图谱的优缺点。

Recently, the possibility of unifying LLMs with KGs has attracted increasing attention from researchers and practitioners. LLMs and KGs are inherently interconnected and can mutually enhance each other. In KG-enhanced LLMs,KGs can not only be incorporated into the pre-training and inference stages of LLMs to provide external knowledge[35]–[37], but also used for analyzing LLMs and providing interpretability [14], [38], [39]. In LLM-augmented KGs,LLMs have been used in various KG-related tasks, e.g., KG embedding [40], KG completion [26], KG construction [41], KG-to-text generation [42], and KGQA [43], to improve the performance and facilitate the application of KGs. In Synergized LLM + KG, researchers marries the merits of LLMs and KGs to mutually enhance performance in knowledge representation [44] and reasoning [45], [46]. Although there are some surveys on knowledge-enhanced LLMs [47]–[49],which mainly focus on using KGs as an external knowledge to enhance LLMs, they ignore other possibilities of integrating KGs for LLMs and the potential role of LLMs in KG applications.

近段时间,将 LLM 和知识图谱联合起来的可能性受到了越来越多研究者和从业者关注。LLM 和知识图谱本质上是互相关联的,并且能彼此互相强化。如果用知识图谱增强 LLM,那么知识图谱不仅能被集成到 LLM 的预训练和推理阶段,从而用来提供外部知识[35]–[37],还能被用来分析 LLM 以提供可解释性 [14], [38], [39]。而在用 LLM 来增强知识图谱方面,LLM 已被用于多种与知识图谱相关的应用,比如知识图谱嵌入 [40]、知识图谱补全[26]、知识图谱构建[41]、知识图谱到文本的生成[42]、知识图谱问答 [43],LLM 能够提升知识图谱的性能并助益其应用。在 LLM 与知识图谱协同的相关研究中,研究者将 LLM 和知识图谱的优点融合,让它们在知识表征 [44] 和推理[45], [46]方面的能力得以互相促进。 尽管有⼀些关于知识增强LLM的调查[47]-[49],主要集中于使⽤知识图谱作为外部知识来增强LLM,但他们忽略了LLM集成知识图谱的其他可能性以及LLM在知识图谱应用中的潜在作⽤。

In this article, we present a forward-looking roadmap for unifying both LLMs and KGs, to leverage their respective strengths and overcome the limitations of each approach,for various downstream tasks. We propose detailed categorization, conduct comprehensive reviews, and pinpoint emerging directions in these fast-growing fields. Our main contributions are summarized as follows:

在这篇文章中,将在联合 LLM 与知识图谱方面提出一个前瞻性的路线图,针对不同的下游任务,以利用它们各自的优势并克服各自的局限。我们提出详细的分类和全面的总结,并指出了这些快速发展的领域的新兴方向。我们的主要贡献总结如下:

-

Roadmap. We present a forward-looking roadmap for integrating LLMs and KGs. Our roadmap,consisting of three general frameworks to unify LLMs and KGs, namely, KG-enhanced LLMs, LLM-augmented KGs, and Synergized LLMs + KGs, provides guidelines for the unification of these two distinct but complementary technologies.

1)路线图:我们提出了一份 LLM 和知识图谱整合方面的前瞻性路线图。这份路线图包含联合 LLM 与知识图谱的三个概括性框架:用知识图谱增强 LLM、用 LLM 增强知识图谱、LLM 与知识图谱协同。可为联合这两种截然不同但互补的技术提供指导方针。 -

Categorization and review. For each integration framework of our roadmap, we present a detailed categorization and novel taxonomies of research on unifying LLMs and KGs. In each category, we review the research from the perspectives of different integration strategies and tasks, which provides more insights into each framework.

2)分类和总结评估:对于该路线图中的每种整合模式,文中都提供了详细的分类和全新的分类法。对于每种类别,我们从不同整合策略和任务角度总结评估了相关研究工作,从而能为每种框架提供更多见解。 -

Coverage of emerging advances. We cover the advanced techniques in both LLMs and KGs. We include the discussion of state-of-the-art LLMs like ChatGPT and GPT-4 as well as the novel KGs e.g.,multi-modal knowledge graphs.

3)涵盖了新进展:我们覆盖了 LLM 和知识图谱的先进技术。其中讨论了 ChatGPT 和 GPT-4 等当前最先进的 LLM 以及多模态知识图谱等知识图谱新技术。 -

Summary of challenges and future directions. We highlight the challenges in existing research and present several promising future research directions.

4)挑战和未来方向:我们也给出当前研究面临的挑战并给出一些有潜力的未来研究方向。

The rest of this article is organized as follows. Section 2 first explains the background of LLMs and KGs. Section 3 introduces the roadmap and the overall categorization of this article. Section 4 presents the different KGs-enhanced LLM approaches. Section 5 describes the possible LLM-augmented KG methods. Section 6 shows the approaches of synergizing LLMs and KGs. Section 7 discusses the challenges and future research directions. Finally, Section 8 concludes this paper.

本⽂的其余部分组织如下。第 2 节⾸先解释了 LLM 和 KG 的背景。第 3 节介绍了本⽂的路线图和总体分类。第 4 节介绍了不同的 KG 增强 LLM ⽅法。第 5 节描述了可能的 LLM 增强 KG ⽅法。第 6 节展⽰了LLM 和 KG 协同作⽤的⽅法。第 7 节讨论了挑战和未来的研究⽅向。最后,第 8 节总结了本⽂。

2 BACKGROUND

2 背景

In this section, we will first briefly introduce a few representative large language models (LLMs) and discuss the prompt engineering that efficiently uses LLMs for varieties of applications. Then, we illustrate the concept of knowledge graphs (KGs) and present different categories of KGs.

在本节中,我们将⾸先简要介绍⼀些有代表性的⼤语⾔模型(LLM),并讨论如何高效地使用 LLM 进行各种应用的提示工程。然后,我们阐述了知识图谱(KGs)的概念,并介绍了不同类型的知识图谱。

2.1 Large Language models (LLMs)

2.1 大型语言模型(LLM)

Large language modes (LLMs) pre-trained on large-scale corpus have shown great potential in various NLP tasks [13]. As shown in Fig. 3, most LLMs derive from the Transformer design [50], which contains the encoder and decoder modules empowered by a self-attention mechanism. Based on the architecture structure, LLMs can be categorized into three groups: 1) encoder-only LLMs, 2) encoder-decoder LLMs, and 3) decoder-only LLMs. As shown in Fig. 2, we summarize several representative LLMs with different model architectures, model sizes, and open-source availabilities.

在大规模语料库上预训练的 LLM ,在各种自然语言处理任务中显示出巨大的潜力 [13]。如图 3 所示,大多数 LLM 都源于 Transformer 设计 [50],其中包含编码器和解码器模块,并采用了自注意力机制。LLM 可以根据架构不同而分为三大类别:1)仅编码器 LLM,2)编码器-解码器 LLM,3)仅解码器 LLM。如图 2 所示,我们对具有不同模型架构、模型大小和开源可用性的几个具有代表性的 LLM 进行了总结。

2.1.1 Encoder-only LLMs.

2.1.1 仅编码器LLM

Encoder-only large language models only use the encoder to encode the sentence and understand the relationships between words. The common training paradigm for these model is to predict the mask words in an input sentence.This method is unsupervised and can be trained on the large-scale corpus. Encoder-only LLMs like BERT [1], ALBERT [51], RoBERTa [2], and ELECTRA [52] require adding an extra prediction head to resolve downstream tasks. These models are most effective for tasks that require understanding the entire sentence, such as text classification [53] and named entity recognition [54].

仅编码器⼤型语⾔模型仅使⽤编码器对句⼦进⾏编码并理解单词之间的关系。这些模型的常⻅训练范式是预测输⼊句⼦中的掩码词。该⽅法是⽆监督的,可以在⼤规模语料库上进⾏训练。仅编码器的LLM,如 BERT [1]、AL BERT [51]、RoBERTa [2] 和 ELECTRA[52] 需要添加额外的预测头来解决下游任务。这些模型对于需要理解整个句子的任务(如文本分类 [53] 和命名实体识别 [54])最为有效。

2.1.2 Encoder-decoder LLMs.

2.1.2 编码器-解码器LLM

Encoder-decoder large language models adopt both the encoder and decoder module. The encoder module is responsible for encoding the input sentence into a hiddenspace, and the decoder is used to generate the target output text. The training strategies in encoder-decoder LLMs can be more flexible. For example, T5 [3] is pre-trained by masking and predicting spans of masking words. UL2 [55] unifies several training targets such as different masking spans and masking frequencies. Encoder-decoder LLMs (e.g., T0 [56],ST-MoE [57], and GLM-130B [58]) are able to directly resolve tasks that generate sentences based on some context, such as summariaztion, translation, and question answering [59].

编码器-解码器⼤语⾔模型同时采⽤编码器和解码器模块。编码器模块负责将输⼊句⼦编码到隐藏空间,解码器⽤于⽣成⽬标输出⽂本。编码器-解码器 LLM 中的训练策略可以更加灵活。例如,T5 [3]是通过掩蔽和预测掩蔽词的跨度来预训练的。 UL2[55]统⼀了多个训练⽬标,例如不同的掩蔽跨度和掩蔽频率。编码器-解码器 LLM(例如 T0 [56]、ST-MoE [57] 和 GLM-130B [58])能够直接解决基于某些上下⽂⽣成句⼦的任务,例如摘要、翻译和问答[59]。

2.1.3 Decoder-only LLMs.

2.1.3 仅解码器LLM

Decoder-only large language models only adopt the decoder module to generate target output text. The training paradigm for these models is to predict the next word in the sentence. Large-scale decoder-only LLMs can generally perform downstream tasks from a few examples or simple instructions, without adding prediction heads or finetuning [60]. Many state-of-the-art LLMs (e.g., Chat-GPT [61] and GPT-4

4

^4

4) follow the decoder-only architecture. However,since these models are closed-source, it is challenging for academic researchers to conduct further research. Recently,Alpaca

5

^5

5 and Vicuna

6

^6

6 are released as open-source decoder-only LLMs. These models are finetuned based on LLaMA [62] and achieve comparable performance with ChatGPT and GPT-4.

仅解码器⼤型语⾔模型仅采⽤解码器模块来⽣成⽬标输出⽂本。这些模型的训练范式是预测句⼦中的下⼀个单词。⼤规模仅解码器的LLM 通常可以通过⼏个⽰例或简单指令执⾏下游任务,⽽⽆需添加预测头或微调 [60]。许多最先进的 LLM(例如 Chat-GPT [61] 和GPT-4

4

^4

4 )都遵循仅解码器架构。然⽽,由于这些模型是闭源的,学术研究⼈员很难进⾏进⼀步的研究。最近,Alpaca

5

^5

5和 Vicuna

6

^6

6作为仅限开源解码器的LLM发布。这些模型基于 LLaMA [62] 进⾏了微调,并实现了与 ChatGPT和 GPT-4 相当的性能。

- https://openai.com/product/gpt-4

- https://github.com/tatsu-lab/stanford_alpaca

- https://lmsys.org/blog/2023-03-30-vicuna/

2.2 Prompt Engineering

2.2 提示工程

Prompt engineering is a novel field that focuses on creating and refining prompts to maximize the effectiveness of large language models (LLMs) across various applications and research areas [63]. As shown in Fig. 4, a prompt is a sequence of natural language inputs for LLMs that are specified for the task, such as sentiment classification. A prompt could contain several elements, i.e., 1) Instruction, 2) Context, and 3) Input Text. Instruction is a short sentence that instructs the model to perform a specific task. Context provides the context for the input text or few-shot examples. Input Text is the text that needs to be processed by the model.

提⽰⼯程是⼀个全新的领域,专注于创建和完善提⽰,以最⼤限度地提⾼跨各种应⽤和研究领域的⼤型语⾔模型(LLM)的有效性[63]。如图 4 所⽰, 是 LLM 的自然语言输入序列,需要针对具体任务(如情绪分类)创建。提⽰可以包含

多个元素,即 1) 指示、2) 背景信息和 3) 输⼊⽂本。指示是告知模型执行某特定任务的短句。背景信息为输入文本或少样本学习提供相关的信息。输入文本是需要模型处理的文本。

Prompt engineering seeks to improve the capacity of large large language models (e.g.,ChatGPT) in diverse complex tasks such as question answering, sentiment classification, and common sense reasoning. Chain-of-thought (CoT) prompt [64] enables complex reasoning capabilities through intermediate reasoning steps. Liu et al. [65] incorporate external knowledge to design better knowledge-enhanced prompts. Automatic prompt engineer (APE) proposes an automatic prompt generation method to improve the performance of LLMs [66]. Prompt offers a simple way to utilize the potential of LLMs without finetuning. Proficiency in prompt engineering leads to a better understanding of the strengths and weaknesses of LLMs.

提示⼯程旨在提⾼⼤型语⾔模型(例如,ChatGPT)在各种复杂任务中的能⼒,例如问答、情感分类和常识推理。思想链(CoT)提⽰[64]通过中间推理步骤实现复杂的推理能⼒。刘等⼈。 [65]另一种方法则是通过整合外部知识来设计更好的知识增强型 prompt。自动化 prompt 工程(APE)则是一种可以提升 LLM 性能的 prompt 自动生成方法[66]。 prompt 提供了⼀种⽆需微调即可发挥LLM潜⼒的简单⽅法。熟练掌握提示工程设计能让人更好地理解 LLM 的优劣之处。

2.3 Knowledge Graphs (KGs)

2.3 知识图谱(KG)

Knowledge graphs (KGs) store structured knowledge as a collection of triples KG = {(h, r, t) ⊆ E × R × E}, where E and R respectively denote the set of entities and relations.Existing knowledge graphs (KGs) can be classified into four groups based on the stored information: 1) encyclopedic KGs,2) commonsense KGs, 3) domain-specific KGs, and 4) multimodal KGs. We illustrate the examples of KGs of different categories in Fig. 5.

知识图谱则是以 (实体、关系、实体) 三元组集合的方式来存储结构化知识。根据所存储信息的不同,现有的知识图谱可分为四大类:1) 百科知识型知识图谱、2) 常识型知识图谱、3)特定领域型知识图谱、4) 多模态知识图谱。图 5 展示了不同类别知识图谱的例子。

2.3.1 Encyclopedic Knowledge Graphs.

2.3.1 百科知识图谱。

Encyclopedic knowledge graphs are the most ubiquitous KGs, which represent the general knowledge in real-world.Encyclopedic knowledge graphs are often constructed by integrating information from diverse and extensive sources,including human experts, encyclopedias, and databases.Wikidata [20] is one of the most widely used encyclopedic knowledge graphs, which incorporates varieties of knowledge extracted from articles on Wikipedia. Other typical encyclopedic knowledge graphs, like Freebase [67], Dbpedia[68], and YAGO [31] are also derived from Wikipedia. In addition, NELL [32] is a continuously improving encyclopedic knowledge graph, which automatically extracts knowledge from the web, and uses that knowledge to improve its performance over time. There are several encyclopedic knowledge graphs available in languages other than English such as CN-DBpedia [69] and Vikidia [70]. The largest knowledge graph, named Knowledge Occean (KO)

7

^7

7, currently contains 4,8784,3636 entities and 17,3115,8349 relations in both English and Chinese.

百科知识图谱是最普遍的知识图谱,它代表了现实世界中的通用知识。百科知识图谱通常是通过整合来自多种广泛来源的信息来构建的,包括⼈类专家、百科全书和数据库。维基数据[20]是使⽤最⼴泛的百科知识图谱之⼀,它包含了从维基百科⽂章中提取的各种知识。其他典型的百科全书知识图谱,如Freebase [67]、Dbpedia [68] 和 YAGO [31] 也源⾃维基百科。此外,NELL [32] 是⼀个不断完善的百科知识图谱,它能够自动从网络上提取知识,并利用这些知识随着时间的推移提高其性能。除了英语之外,还有⼏种百科知识图谱可⽤,例如 CN-DBpedia [69] 和 Vikidia [70]。最⼤的知识图谱,名为知识海洋(KO)

7

^7

7,包含了 4,8784,3636 个实体和 17,3115,8349 个中英文关系。

2.3.2 Commonsense Knowledge Graphs.

2.3.2 常识知识图谱。

Commonsense knowledge graphs formulate the knowledge about daily concepts, e.g., objects, and events, as well as their relationships [71]. Compared with encyclopedic knowledge graphs, commonsense knowledge graphs often model the tacit knowledge extracted from text such as (Car,UsedFor, Drive). ConceptNet [72] contains a wide range of commonsense concepts and relations, which can help computers understand the meanings of words people use.ATOMIC [73], [74] and ASER [75] focus on the causal effects between events, which can be used for commonsense reasoning. Some other commonsense knowledge graphs, such as TransOMCS [76] and CausalBanK [77] are automatically constructed to provide commonsense knowledge.

常识知识图谱表示了关于日常概念,例如,对象、事件以及它们之间的关系[71]。与百科知识图谱相比,常识知识图谱通常从文本中提取的隐性知识进行建模,例如(汽车,用途,驾驶)。ConceptNet[72] 包含了大量常识概念和关系,这有助于计算机理解人们使用的单词的含义。ATOMIC[73]、[74] 和 ASER[75] 关注于事件之间的因果关系,这可以用于常识推理。还有一些其他的常识知识图谱,如 TransOMCS[76] 和 CausalBanK[77],它们被自动构建以提供常识知识。

2.3.3 Domain-specific Knowledge Graphs.

2.3.3 特定领域知识图谱。

Domain-specific knowledge graphs are often constructed to represent knowledge in a specific domain, e.g., medical, biology, and finance [23]. Compared with encyclopedic knowledge graphs, domain-specific knowledge graphs are often smaller in size, but more accurate and reliable. For example, UMLS [78] is a domain-specific knowledge graph in the medical domain, which contains biomedical concepts and their relationships. In addition, there are some domainspecific knowledge graphs in other domains, such as finance[79], geology [80], biology [81], chemistry [82] and genealogy [83].

领域特定知识图谱通常用于表示特定领域的知识,例如医学、生物学和金融等领域[23]。与百科知识图谱相比,特定领域知识图谱的规模通常较小,但更加精确和可靠。例如,UMLS[78] 是医学领域的一个领域特定知识图谱,其中包含生物医学概念及其关系。此外,还有其他领域的一些领域特定知识图谱,如金融 [79]、地质学 [80]、生物学 [81]、化学 [82] 和家谱学 [83] 等。

2.3.4 Multi-modal Knowledge Graphs.

2.3.4 多模态知识图谱。

Unlike conventional knowledge graphs that only contain textual information, multi-modal knowledge graphs represent facts in multiple modalities such as images, sounds, and videos [84]. For example, IMGpedia [85], MMKG [86], and Richpedia [87] incorporate both the text and image information into the knowledge graphs. These knowledge graphs can be used for various multi-modal tasks such as image-text matching [88], visual question answering [89],and recommendation [90].

与传统的仅包含文本信息的知识图谱不同,多模态知识图谱通过多种模态(如图像、声音和视频)来表示事实 [84]。例如,IMGpedia [85]、MMKG [86] 和 Richpedia [87] 将文本和图像信息都整合到了知识图谱中。这些知识图谱可用于各种多模态任务,如图像 -文本匹配 [88]、视觉问答 [89] 和推荐 [90]。

2.4 Applications

2.4 应用

LLMs as KGs have been widely applied in various real-world applications. We summarize some representative applications of using LLMs and KGs in Table 1. ChatGPT/GPT-4 are LLM-based chatbots that can communicate with humans in a natural dialogue format. To improve knowledge awareness of LLMs, ERNIE 3.0 and Bard incorporate KGs into their chatbot applications. Instead of Chatbot. Firefly develops a photo editing application that allows users to edit photos by using natural language descriptions. Copilot, New Bing, and Shop.ai adopt LLMs to empower their applications in the areas of coding assistant, web search, and recommendation, respectively. Wikidata and KO are two representative knowledge graph applications that are used to provide external knowledge. OpenBG[91] is a knowledge graph designed for recommendation.Doctor.ai develops a health care assistant that incorporatesLLMs and KGs to provide medical advice.

大型语言模型(LLMs)作为知识图谱(KGs)已广泛应用于各种实际应用场景。我们在表 1 中总结了一些使用 LLMS 和 KGs 的代表性应用。ChatGPT/GPT-4 是基于大型语言模型的聊天机器人,可以以自然对话的形式与人类进行交流。为了提高大型语言模型的知识意识,ERNIE 3.0 和 Bard 将知识图谱融入其聊天机器人应用中。而 Firefly 则开发了一款照片编辑应用,用户可以通过自然语言描述来编辑照片。Copilot、New Bing 和 Shop.ai 分别采用大型语言模型来加强其在编程助手、网络搜索和推荐领域的应用。Wikidata 和 KO 是两个代表性的知识图谱应用,用于提供外部知识。OpenBG[91] 是一个为推荐而设计的知识图谱。Doctor.ai 开发了一款医疗助手,通过结合大型语言模型和知识图谱来提供医疗建议。

3 ROADMAP & CATEGORIZATION

3 路线图与分类

In this section, We first present a road map of explicit frameworks that unify LLMs and KGs. Then, we present the categorization of research on unifying LLMs and KGs.

在本节中,我们首先呈现一个将 LLM 和 KG 联合的显式框架路线图。然后,我们介绍联合 LLM 和 KG 的研究分类。

3.1 Roadmap

3.1 路线图

The roadmap of unifying KGs and LLMs is illustrated in Fig. 6. In the roadmap, we identify three frameworks for the unification of LLMs and KGs, including KG-enhanced LLMs, LLM-augmented KGs, and Synergized LLMs + KGs.

图 6 展示了将 LLM 和知识图谱联合起来的路线图。这份路线图包含联合 LLM 与知识图谱的三个框架:用知识图谱增强 LLM、用 LLM 增强知识图谱、LLM 与知识图谱协同。

3.1.1 KG-enhanced LLMs

3.1.1 KG增强LLM

LLMs are renowned for their ability to learn knowledge from large-scale corpus and achieve state-of-the-art performance in various NLP tasks. However, LLMs are often criticized for their hallucination issues [15], and lacking of interpretability. To address these issues, researchers have proposed to enhance LLMs with knowledge graphs (KGs).

LLM以其从大规模语料库中学习知识的能力以及在各种自然语言处理任务中取得的最先进性能而闻名。然而,LLM 常常因幻觉问题 [15] 和缺乏解释性而受到批评。为了解决这些问题,研究人员提议通过知识图谱(KG)来增强 LLM。

KGs store enormous knowledge in an explicit and structured way, which can be used to enhance the knowledge awareness of LLMs. Some researchers have proposed to incorporate KGs into LLMs during the pre-training stage,which can help LLMs learn knowledge from KGs [92], [93].Other researchers have proposed to incorporate KGs into LLMs during the inference stage. By retrieving knowledge from KGs, it can significantly improve the performance of LLMs in accessing domain-specific knowledge [94]. To improve the interpretability of LLMs, researchers also utilize KGs to interpret the facts [14] and the reasoning process of LLMs [95].

知识图谱以明确和结构化的方式存储海量知识,这可以用来提高 LLM 的知识感知能力。一些研究人员提议在 LLM 的预训练阶段将知识图谱融入其中,这可以帮助 LLM 从知识图谱中学习知识 [92]、[93]。其他研究人员则提议在 LLM 的推理阶段将知识图谱融入其中。通过从知识图谱中检索知识,可以显著提高 LLM 在访问特定领域知识方面的能力[94]。为了提高 LLM 的可解释性,研究人员还利用知识图谱来解释 LLM 的事实 [14] 和推理过程 [95]。

3.1.2 LLM-augmented KGs

3.1.2 LLM增强KG

KGs store structure knowledge playing an essential role in many real-word applications [19]. Existing methods in KGs fall short of handling incomplete KGs [25] and processing text corpus to construct KGs [96]. With the generalizability of LLMs, many researchers are trying to harness the power of LLMs for addressing KG-related tasks.

知识图谱(KG)存储结构知识,在许多实际应用中发挥着关键作用 [19]。现有的知识图谱方法在处理不完整的知识图谱 [25] 和处理文本语料库以构建知识图谱 [96] 方面存在不足。随着预训练语言模型(LLM)的泛化能力,许多研究人员正试图利用 LLMs 的力量来解决与知识图谱相关的任务。

The most straightforward way to apply LLMs as text encoders for KG-related tasks. Researchers take advantage of LLMs to process the textual corpus in the KGs and then use the representations of the text to enrich KGs representation [97]. Some studies also use LLMs to process the original corpus and extract relations and entities for KG construction[98]. Recent studies try to design a KG prompt that can effectively convert structural KGs into a format that can be comprehended by LLMs. In this way, LLMs can be directly applied to KG-related tasks, e.g., KG completion [99] and KG reasoning [100].

将 LLM 直接应用于知识图谱(KG)相关任务的最直接方法是将其作为文本编码器。研究人员利用 LLM 处理 KG 中的文本语料库,然后使用文本表示来丰富 KG 的表现形式 [97]。一些研究还使用 LLM 处理原始语料库,以提取用于 KG 构建的关系和实体 [98]。最近的研究试图设计一个 KG 提示,可以将结构化的 KGs 有效地转换为 LLM 可理解的格式。这样,LLM 就可以直接应用于 KG 相关任务,例如,KG 补全 [99] 和 KG 推理 [100]。

3.1.3 Synergized LLMs + KGs

3.1.3 应用

The synergy of LLMs and KGs has attracted increasing attention from researchers these years [40], [42]. LLMs and KGs are two inherently complementary techniques, which should be unified into a general framework to mutually enhance each other.

近年来,研究人员对 LLMs 和 KGs 的协同作用越来越关注 [40],[42]。LLMs 和 KGs 是两种固有互补的技术,应该将它们统一到一个通用框架中,以相互增强。LLMs和KGs的协同引起了研究人员的越来越多的关注[40],[42]。LLMs和KGs是两种本质上互补的技术,应该统一到一个通用框架中以相互增强。

To further explore the unification, we propose a unified framework of the synergized LLMs + KGs in Fig. 7. The unified framework contains four layers: 1) Data, 2) Synergized Model, 3) Technique, and 4) Application. In the Data layer, LLMs and KGs are used to process the textual and structural data, respectively. With the development of multi-modal LLMs [101] and KGs [102], this framework can be extended to process multi-modal data, such as video, audio, and images. In the Synergized Model layer, LLMs and KGs could synergize with each other to improve their capabilities. In Technique layer, related techniques that have been used in LLMs and KGs can be incorporated into this framework to further enhance the performance. In the Application layer, LLMs and KGs can be integrated to address various realworld applications, such as search engines [103], recommender systems [10], and AI assistants [104].

为了进一步探索统一LLM + KG的方法,我们提出了一个统一的框架,如图7所示。这个统一框架包含四个层次:1)数据层,2)协同模型层,3)技术层,4)应用层。在数据层,LLM 和 KG 分别用于处理文本和结构化数据。随着多模态 LLM[101] 和 KG[102] 的发展,这个框架可以扩展到处理多模态数据,如图像、音频和视频。在协同模型层,LLM 和 KG 可以相互协同以提高其能力。在技术层,LLM 和 KG 中使用过的相关技术可以纳入这个框架,以进一步提高性能。在应用层,LLM 和 KG 可以集成起来,解决各种实际应用问题,如搜索引擎 [103],推荐系统 [10],和 AI 助手 [104]。

3.2 Categorization

3.2 分类

To better understand the research on unifying LLMs and KGs, we further provide a fine-grained categorization for each framework in the roadmap. Specifically, we focus on different ways of integrating KGs and LLMs, i.e., KG-enhanced LLMs, KG-augmented LLMs, and Synergized LLMs + KGs. The fine-grained categorization of the research is illustrated in Fig. 8.

为了更好地了解联合LLM和KG的研究,我们进一步为路线图中的每个框架提供了细粒度的分类。具体而言,我们关注不同的KG和LLM集成方式,即KG增强的LLM,LLM增强的KG和协同LLM + KG。研究的细粒度分类如图8所示。

KG-enhanced LLMs. Integrating KGs can enhance the performance and interpretability of LLMs in various downstream tasks. We categorize the research on KG-enhanced LLMs into three groups:

KG增强LLM。集成KG可以增强LLM在各种下游任务中的性能和可解释性。我们将KG增强的LLM的研究分为三组:

1)KG-enhanced LLM pre-training includes works that apply KGs during the pre-training stage and improve the knowledge expression of LLMs.

1)KG增强的LLM预训练:包括在预训练阶段应用KG的,以改善LLM的知识表达能力。

2)KG-enhanced LLM inference includes research that utilizes KGs during the inference stage of LLMs,which enables LLMs to access the latest knowledge without retraining.

2)KG增强的LLM推理:包括在LLM的推理阶段使用KG的研究,使LLM能够在无需重新训练的情况下访问最新的知识。

3)KG-enhanced LLM interpretability includes works that use KGs to understand the knowledge learned by LLMs and interpret the reasoning process of LLMs.

3)KG增强的LLM可解释性:包括使用KG来理解LLM学到的知识以及解释LLM的推理过程的工作。

LLM-augmented KGs. LLMs can be applied to augment various KG-related tasks. We categorize the research on LLM-augmented KGs into five groups based on the task types:

LLM增强KG。LLM可以用于增强各种与KG相关的任务。我们根据任务类型将LLM增强的KG的研究分为五组:

1)LLM-augmented KG embedding includes studies that apply LLMs to enrich representations of KGs by encoding the textual descriptions of entities and relations.

1)LLM增强的KG嵌入:包括将LLM应用于通过编码实体和关系的文本描述来丰富KG表示的研究。

2)LLM-augmented KG completion includes papers that utilize LLMs to encode text or generate facts for better KGC performance.

2)LLM增强的KG补全:包括利用LLM对文本进行编码或生成事实,以提高KG完成任务的性能的论文。

3)LLM-augmented KG construction includes works that apply LLMs to address the entity discovery, coreference resolution, and relation extraction tasks for KG construction.

3)LLM增强的KG构建:包括利用LLMs来处理实体发现、共指消解和关系提取等任务,用于KG构建的研究。

4)LLM-augmented KG-to-text Generation includes research that utilizes LLMs to generate natural language that describes the facts from KGs.

4)LLM增强的KG到文本生成:包括利用LLM生成描述KG事实的自然语言的研究。

5)LLM-augmented KG question answering includes studies that apply LLMs to bridge the gap between natural language questions and retrieve answers from KGs.

5)LLM增强的KG问答:包括将LLM应用于连接自然语言问题并从KG中检索答案的研究。

Synergized LLMs + KGs. The synergy of LLMs and KGs aims to integrate LLMs and KGs into a unified framework to mutually enhance each other. In this categorization, we review the recent attempts of Synergized LLMs + KGs from the perspectives of knowledge representation and reasoning.

协同LLM + KG。LLM和KG的协同旨在将LLM和KG统一到一个框架中,以相互增强。在这个分类中,我们从知识表示和推理的角度回顾了最近的协同LLM + KG的尝试。

In the following sections (Sec 4, 5, and 6), we will provide details on these categorizations.

在接下来的章节(第4、5和6节)中,我们将详细介绍这些分类。

4 KG-ENHANCED LLMS

4 KG增强LLM

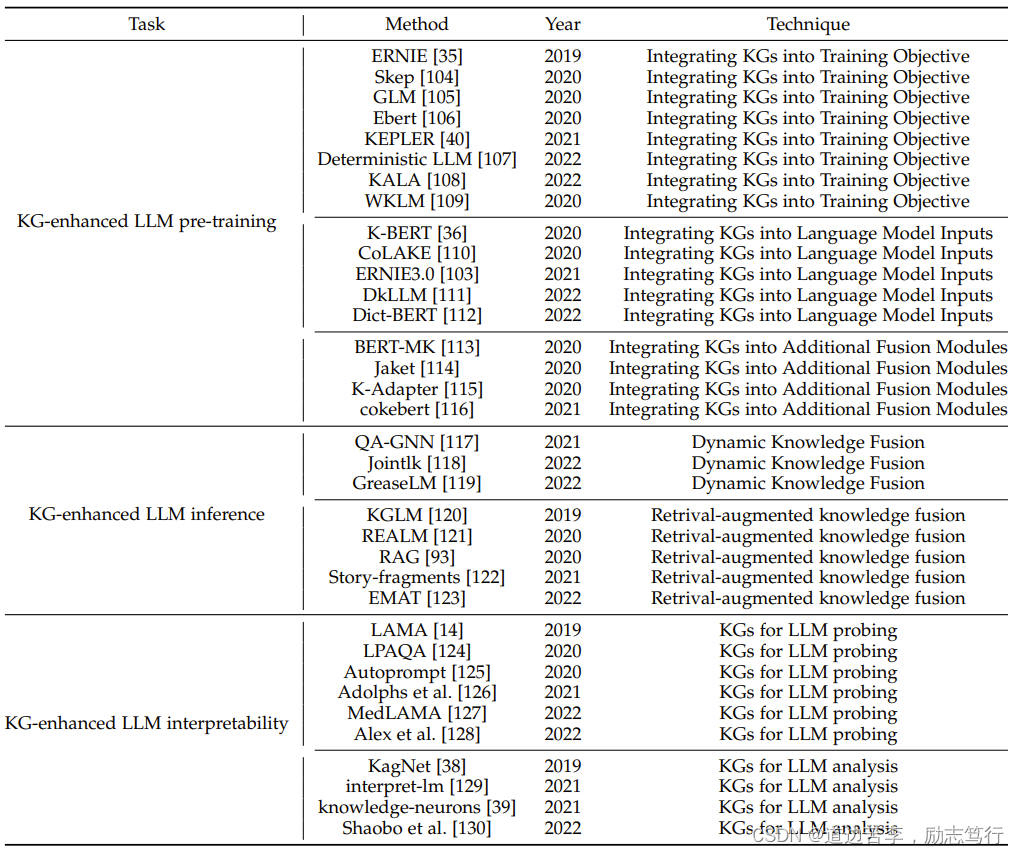

Large language models (LLMs) achieve promising results in many natural language processing tasks. However, LLMs have been criticized for their lack of practical knowledge and tendency to generate factual errors during inference.To address this issue, researchers have proposed integrating knowledge graphs (KGs) to enhance LLMs. In this section, we first introduce the KG-enhanced LLM pre-training,which aims to inject knowledge into LLMs during the pretraining stage. Then, we introduce the KG-enhanced LLM inference, which enables LLMs to consider the latest knowledge while generating sentences. Finally, we introduce the KG-enhanced LLM interpretability, which aims to improve the interpretability of LLMs by using KGs. Table 2 summarizes the typical methods that integrate KGs for LLMs.

大型语言模型(LLM)在许多自然语言处理任务中取得了有希望的结果。然而,LLM因缺乏实际知识并在推理过程中产生事实错误而受到批评。为了解决这个问题,研究人员提出了将知识图谱(KG)集成到LLM中以增强其性能。在本节中,我们首先介绍KG增强的LLM预训练,旨在在预训练阶段将知识注入到LLM中。然后,我们介绍KG增强的LLM推理,使LLM在生成句子时考虑到最新的知识。最后,我们介绍KG增强的LLM可解释性,旨在通过使用KG来提高LLM的可解释性。表2总结了将KG集成到LLM中的典型方法。

4.1 KG-enhanced LLM Pre-training

4.1 KG增强的LLM预训练

Existing large language models mostly rely on unsupervised training on the large-scale corpus. While these models may exhibit impressive performance on downstream tasks, they often lack practical knowledge relevant to the real world. Previous works that integrate KGs into large language models can be categorized into three parts: 1) Integrating KGs into training objective, 2) Integrating KGs into LLM inputs and 3) Integrating KGs into additional fusion modules.

现有的大型语言模型主要依赖于对大规模语料库进行无监督训练。尽管这些模型在下游任务中可能表现出色,但它们通常缺乏与现实世界相关的实际知识。之前的研究将知识图谱集成到大型语言模型中可分为三部分:1)将知识图谱集成到训练目标中,2)将知识图谱集成到LLM的输入中,3)将知识图谱集成到额外的融合模块中。

4.1.1 Integrating KGs into Training Objective

4.1.1 将知识图谱集成到训练目标中

The research efforts in this category focus on designing novel knowledge-aware training objectives. An intuitive idea is to expose more knowledge entities in the pre-training objective. GLM [106] leverages the knowledge graph structure to assign a masking probability. Specifically, entities that can be reached within a certain number of hops are considered to be the most important entities for learning,and they are given a higher masking probability during pre-training. Furthermore, E-BERT [107] further controls the balance between the token-level and entity-level training losses. The training loss values are used as indications of the learning process for token and entity, which dynamically determines their ratio for the next training epochs. SKEP [105] also follows a similar fusion to inject sentiment knowledge during LLMs pre-training. SKEP first determines words with positive and negative sentiment by utilizing PMI along with a predefined set of seed sentiment words. Then, it assigns a higher masking probability to those identified sentiment words in the word masking objective.

这个类别的研究工作侧重于设计新颖的知识感知训练目标。一种直观的想法是在预训练目标中暴露更多的知识实体。GLM [106]利用知识图谱的结构为掩码概率分配权重。具体而言,对于可以通过一定数量跳跃到达的实体,被认为是学习的最重要实体,并且在预训练过程中给予更高的掩码概率。此外,E-BERT [107]进一步控制了标记级和实体级训练损失之间的平衡。训练损失的值被用作标记和实体的学习过程的指示,动态确定它们在下一次训练周期中的比例。SKEP [105]在LLM的预训练过程中也采用了类似的融合方式来注入情感知识。SKEP首先通过利用PMI和预定义的种子情感词识别具有积极和消极情感的单词。然后,它在词掩码目标中对这些被识别为情感词的单词分配更高的掩码概率。

The other line of work explicitly leverages the connections with knowledge and input text. As shown in Figure 9,ERNIE [92] proposes a novel word-entity alignment training objective as a pre-training objective. Specifically, ERNIE feeds both sentences and corresponding entities mentioned in the text into LLMs, and then trains the LLMs to predict alignment links between textual tokens and entities in knowledge graphs. Similarly, KALM [93] enhances the input tokens by incorporating entity embeddings and includes an entity prediction pre-training task in addition to the token-only pre-training objective. This approach aims to improve the ability of LLMs to capture knowledge related to entities. Finally, KEPLER [132] directly employs both knowledge graph embedding training objective and Masked token pre-training objective into a shared transformer-based encoder. Deterministic LLM [108] focuses on pre-training language models to capture deterministic factual knowledge. It only masks the span that has a deterministic entity as the question and introduces additional clue contrast learning and clue classification objective. WKLM [110] first replaces entities in the text with other same-type entities and then feeds them into LLMs. The model is further pre-trained to distinguish whether the entities have been replaced or not.

另一方面,还有一些研究明确利用与知识和输入文本之间的连接。如图9所示,ERNIE [92]提出了一种新颖的单词-实体对齐训练目标作为预训练目标。具体而言,ERNIE将句子和文本中提到的相应实体输入到LLM中,然后训练LLM来预测文本标记和知识图谱中实体之间的对齐链接。类似地,KALM [93]通过合并实体嵌入将实体相关的信息融入到输入标记中,并在仅有标记的预训练目标之外引入了实体预测的预训练任务。这种方法旨在提高LLM捕捉与实体相关的知识的能力。最后,KEPLER [132]将知识图谱嵌入训练目标和掩码标记预训练目标直接应用于共享的基于Transformer的编码器中。Deterministic LLM [108]的重点是预训练语言模型以捕捉确定性的事实知识。它只对具有确定性实体作为问题的范围的部分进行掩码,并引入了额外的线索对比学习和线索分类目标。WKLM [110]首先将文本中的实体替换为同类型的其他实体,然后将其输入到LLM中。模型进一步进行预训练以区分实体是否已被替换。

4.1.2 Integrating KGs into LLM Inputs

4.1.2 将知识图谱集成到LLM的输入中

As shown in Fig. 10, this kind of research focus on introducing relevant knowledge sub-graph into the inputs of LLMs. Given a knowledge graph triple and the corresponding sentences, ERNIE 3.0 [104] represents the triple as a sequence of tokens and directly concatenates them with the sentences. It further randomly masks either the relation token in the triple or tokens in the sentences to better combine knowledge with textual representations. However, such direct knowledge triple concatenation method allows the tokens in the sentence to intensively interact with the tokens in the knowledge sub-graph, which could result in Knowledge Noise [36]. To solve this issue, K-BERT [36] takes the first step to inject the knowledge triple into the sentence via a visible matrix where only the knowledge entities have access to the knowledge triple information, while the tokens in the sentences can only see each other in the self-attention module. To further reduce Knowledge Noise, Colake [111] proposes a unified word-knowledge graph (shown in Fig. 10) where the tokens in the input sentences form a fully connected word graph where tokens aligned with knowledge entities are connected with their neighboring entities.

如图10所示,这类研究工作侧重于将相关的知识子图引入到LLMs的输入中。给定一个知识图谱三元组和相应的句子,ERNIE 3.0 [104]将三元组表示为一个标记序列,并将其直接与句子拼接在一起。它进一步随机掩盖三元组中的关系标记或句子中的标记,以更好地将知识与文本表示相结合。然而,这种直接知识三元组拼接的方法使得句子中的标记与知识子图中的标记密集地相互作用,可能导致知识噪声。为了解决这个问题,K-BERT [36]通过可见矩阵将知识三元组注入到句子中,只有知识实体可以访问知识三元组信息,而句子中的标记只能在自注意力模块中相互作用。为了进一步减少知识噪声,Colake [111]提出了一个统一的词-知识图谱(如图10所示),其中输入句子中的标记形成一个全连接的词图,与知识实体对齐的标记与其相邻实体连接在一起。上述方法确实能够向LLMs注入大量的知识。然而,它们主要关注热门实体,忽视了低频和长尾实体。DkLLM [112]旨在改进LLMs对这些实体的表示。DkLLM首先提出一种确定长尾实体的新方法,然后用伪标记嵌入将这些选定的实体替换为文本中的实体,作为大型语言模型的新输入。此外,Dict-BERT [113]提出利用外部字典来解决这个问题。具体而言,Dict-BERT通过将字典中的定义附加到输入文本的末尾来改善稀有单词的表示质量,并训练语言模型来在输入句子和字典定义之间进行局部对齐,并区分输入文本和定义是否正确映射。

The above methods can indeed inject a large amount of knowledge into LLMs. However, they mostly focus on popular entities and overlook the low-frequent and longtail ones. DkLLM [112] aims to improve the LLMs representations towards those entities. DkLLM first proposes a novel measurement to determine long-tail entities and then replaces these selected entities in the text with pseudo token embedding as new input to the large language models.Furthermore, Dict-BERT [113] proposes to leverage external dictionaries to solve this issue. Specifically, Dict-BERT improves the representation quality of rare words by appending their definitions from the dictionary at the end of input text and trains the language model to locally align rare words representations in input sentences and dictionary definitions as well as to discriminate whether the input text and definition are correctly mapped.

上述方法确实能够向LLMs注入大量的知识。然而,它们主要关注热门实体,忽视了低频和长尾实体。DkLLM [112]旨在改进LLMs对这些实体的表示。DkLLM首先提出一种确定长尾实体的新方法,然后用伪标记嵌入将这些选定的实体替换为文本中的实体,作为大型语言模型的新输入。此外,Dict-BERT [113]提出利用外部字典来解决这个问题。具体而言,Dict-BERT通过将字典中的定义附加到输入文本的末尾来改善稀有单词的表示质量,并训练语言模型来在输入句子和字典定义之间进行局部对齐,并区分输入文本和定义是否正确映射

4.1.3 Integrating KGs by Additional Fusion Modules

4.1.3 通过额外的融合模块集成KG

By introducing additional fusion modules into LLMs, the information from KGs can be separately processed and fused into LLMs. As shown in Fig. 11, ERNIE [92] proposes a textual-knowledge dual encoder architecture where a Tencoder first encodes the input sentences, then a K-encoder processes knowledge graphs which are fused them with the textual representation from the T-encoder. BERT-MK [114] employs a similar dual-encoder architecture but it introduces additional information of neighboring entities in the knowledge encoder component during the pre-training of LLMs. However, some of the neighboring entities in KGs may not be relevant to the input text, resulting in extra redundancy and noise. CokeBERT [117] focuses on this issue and proposes a GNN-based module to filter out irrelevant KG entities using the input text. JAKET [115] proposes to fuse the entity information in the middle of the large language model. The first half of the model processes the input text and knowledge entity sequence separately. Then, the outputs of text and entities are combined together. Specifically, the entity representations are added to their corresponding position of text representations, which are further processed by the second half of the model. K-adapters [116] fuses linguistic and factual knowledge via adapters which only adds trainable multi-layer perception in the middle of the transformer layer while the existing parameters of large language models remain frozen during the knowledge pretraining stage. Such adapters are independent of each other and can be trained in parallel.

通过将额外的融合模块引入LLM中,可以单独处理并将知识图谱中的信息融入到LLM中。如图11所示,ERNIE [92]提出了一种文本-知识双编码器架构,其中T-编码器首先对输入句子进行编码,然后K-编码器处理知识图谱,并将其与T-编码器中的文本表示融合。BERT-MK [114]采用了类似的双编码器架构,但在LLM的预训练过程中引入了知识编码器组件中相邻实体的附加信息。然而,知识图谱中的某些相邻实体可能与输入文本无关,导致额外的冗余和噪声。CokeBERT [117]关注这个问题,并提出了一个基于GNN的模块,通过使用输入文本来过滤掉无关的KG实体。JAKET [115]提出在大型语言模型的中间融合实体信息。模型的前半部分分别处理输入文本和实体序列。然后,文本和实体的输出被组合在一起。具体而言,实体表示被添加到其对应位置的文本表示中,然后由模型的后半部分进一步处理。K-adapters [116]通过适配器来融合语言和事实知识,适配器只在变压器层的中间添加可训练的多层感知器,而大型语言模型的现有参数在知识预训练阶段保持冻结状态。这样的适配器彼此独立,并且可以并行训练。

4.2 KG-enhanced LLM Inference

4.2 KG增强的LLM推理

The above methods could effectively fuse knowledge with the textual representations in the large language models. However, real-world knowledge is subject to change and the limitation of these approaches is that they do not permit updates to the incorporated knowledge without retraining the model. As a result, they may not generalize well to the unseen knowledge during inference [133]. Therefore, considerable research has been devoted to keeping the knowledge space and text space separate and injecting the knowledge while inference. These methods mostly focus on the Question Answering (QA) tasks, because QA requires

the model to capture both textual semantic meanings and up-to-date real-world knowledge.

上述方法可以有效地将知识与大型语言模型中的文本表示进行融合。然而,现实世界的知识是不断变化的,这些方法的局限性在于它们不允许在不重新训练模型的情况下更新已整合的知识。因此,在推理过程中它们可能无法很好地推广到未见过的知识[133]。因此,人们对将知识空间和文本空间分离并在推理过程中注入知识进行了大量研究。这些方法主要集中在问答(QA)任务上,因为QA要求模型能够捕捉到文本语义含义和最新的现实世界知识。

4.2.1 Dynamic Knowledge Fusion

4.2.1 动态知识融合

A straightforward method is to leverage a two-tower architecture where one separated module processes the text inputs and the other one processes the relevant knowledge graph inputs [134]. However, this method lacks interaction between text and knowledge. Thus, KagNet [95] proposes to first encode the input KG, and then augment the input textual representation. In contrast, MHGRN [135] uses the final LLM outputs of the input text to guide the reasoning process on the KGs. Yet, both of them only design a singledirection interaction between the text and KGs. To tackle this issue, QA-GNN [118] proposes to use a GNN-based model to jointly reason over input context and KG information via message passing. Specifically, QA-GNN represents the input textual information as a special node via a pooling operation and connects this node with other entities in KG. However, the textual inputs are only pooled into a single dense vector, limiting the information fusion performance. JointLK [119] then proposes a framework with fine-grained interaction between any tokens in the textual inputs and any KG entities through LM-to-KG and KG-to-LM bi-directional attention mechanism. As shown in Fig. 12, pairwise dotproduct scores are calculated over all textual tokens and KG entities, the bi-directional attentive scores are computed separately. In addition, at each jointLK layer, the KGs are also dynamically pruned based on the attention score to allow later layers to focus on more important sub-KG structures. Despite being effective, in JointLK, the fusion process between the input text and KG still uses the final LLM outputs as the input text representations. GreaseLM [120] designs deep and rich interaction between the input text tokens and KG entities at each layer of the LLMs. The architecture and fusion approach are mostly similar to ERNIE [92] discussed in Section 4.1.3, except that GreaseLM does not use the textonly T-encoder to handle input text.

一种直接的方法是利用双塔架构,其中一个独立模块处理文本输入,另一个处理相关的知识图谱输入[134]。然而,这种方法缺乏文本和知识之间的交互。因此,KagNet [95]提出首先编码输入的知识图谱,然后增强输入的文本表示。相反,MHGRN [135]使用输入文本的最终LLM输出来指导对知识图谱的推理过程。然而,它们都只设计了文本和知识之间的单向交互。为了解决这个问题,QA-GNN [118]提出使用基于GNN的模型通过消息传递来共同推理输入上下文和知识图谱信息。具体而言,QA-GNN通过池化操作将输入的文本信息表示为一个特殊节点,并将该节点与知识图谱中的其他实体连接起来。然而,文本输入只被池化为一个单一的密集向量,限制了信息融合的性能。JointLK [119]随后提出了一个框架,通过LM-to-KG和KG-to-LM双向注意机制,在文本输入的任何标记和知识图谱实体之间实现细粒度的交互。如图12所示,计算了所有文本标记和知识图谱实体之间的成对点积分数,并单独计算了双向注意分数。此外,在每个JointLK层中,根据注意分数动态修剪了知识图谱,以便后续层能够集中在更重要的子知识图结构上。尽管JointLK有效,但其中输入文本和知识图谱之间的融合过程仍然使用最终的LLM输出作为输入文本表示。GreaseLM [120]在LLMs的每一层中为输入文本标记和知识图谱实体设计了深入和丰富的交互。架构和融合方法在很大程度上类似于在第4.1.3节中讨论的ERNIE [92],只是GreaseLM不使用单独的文本T-编码器来处理输入文本。

4.2.2 Retrieval-Augmented Knowledge Fusion

4.2.2 检索增强的知识融合

Different from the above methods that store all knowledge in parameters, as shown in Figure 13, RAG [94] proposes to combine non-parametric and parametric modules to handle the external knowledge. Given the input text, RAG first searches for relevant KG in the non-parametric module via MIPS to obtain several documents. RAG then treats these documents as hidden variables z and feeds them into the output generator, empowered by Seq2Seq LLMs, as additional context information. The research indicates that using different retrieved documents as conditions at different generation steps performs better than only using a single document to guide the whole generation process. The experimental results show that RAG outperforms other parametric-only and non-parametric-only baseline models in open-domain QA. RAG can also generate more specific, diverse, and factual text than other parameter-only baselines. Story-fragments [123] further improves architecture by adding an additional module to determine salient knowledge entities and fuse them into the generator to improve the quality of generated long stories. EMAT [124] further improves the efficiency of such a system by encoding external knowledge into a key-value memory and exploiting the fast maximum inner product search for memory querying. REALM [122] proposes a novel knowledge retriever to help the model to retrieve and attend over documents from a large corpus during the pre-training stage and successfully improves the performance of open-domain question answering. KGLM [121] selects the facts from a knowledge graph using the current context to generate factual sentences. With the help of an external knowledge graph, KGLM could describe facts using out-of-domain words or phrases.

与将所有知识存储在参数中的上述方法不同,如图13所示,RAG [94]提出了将非参数模块和参数模块结合起来处理外部知识的方法。给定输入文本,RAG首先通过最近邻搜索在非参数模块中搜索相关的知识图谱,以获取几个文档。然后,RAG将这些文档视为隐藏变量z,并将它们作为附加上下文信息馈送到由Seq2Seq LLM支持的输出生成器中。研究表明,在生成过程中使用不同的检索文档作为条件比仅使用单个文档来引导整个生成过程效果更好。实验结果表明,与只使用参数模块或非参数模块的基准模型相比,RAG在开放域QA中表现更好。RAG还能够生成更具体、多样和真实的文本,而不仅仅是基于参数的基线模型。Story-fragments [123]通过添加额外模块来确定重要的知识实体并将它们融入生成器中,以提高生成的长篇故事的质量。EMAT [124]通过将外部知识编码为键值内存,并利用快速最大内积搜索进行内存查询,进一步提高了这种系统的效率。REALM [122]提出了一种新颖的知识检索器,帮助模型在预训练阶段从大型语料库中检索并关注文档,并成功提高了开放域问答的性能。KGLM [121]根据当前上下文从知识图谱中选择事实以生成事实性句子。借助外部知识图谱的帮助,KGLM可以使用领域外的词汇描述事实。

4.3 KG-enhanced LLM Interpretability

4.3 KG增强的LLM可解释性

Although LLMs have achieved remarkable success in many NLP tasks, they are still criticized for their lack of interpretability. The large language model (LLM) interpretability refers to the understanding and explanation of the inner workings and decision-making processes of a large language model [17]. This can improve the trustworthiness of LLMs and facilitate their applications in high-stakes scenarios such as medical diagnosis and legal judgment. Knowledge graphs (KGs) represent the knowledge structurally and can provide good interpretability for the reasoning results.Therefore, researchers try to utilize KGs to improve the interpretability of LLMs, which can be roughly grouped into two categories: 1) KGs for language model probing, and 2) KGs for language model analysis.

尽管LLM在许多自然语言处理任务上都表现不凡,但由于缺乏可解释性,依然备受诟病。大型语言模型(LLM)可解释性是指理解和解释大型语言模型的内部工作方式和决策过程[17]。这可以提高LLM的置信度,并促进它们在医学诊断和法律判断等高风险场景中的应用。由于知识图谱是以结构化的方式表示知识,因此可为推理结果提供优良的可解释性。因此,研究人员尝试利用知识图谱来提高LLM的可解释性,这可以大致分为两类:1)用于语言模型探测的知识图谱,和2)用于语言模型分析的知识图谱。

4.3.1 KGs for LLM Probing

4.3.1 用于LLM探测的知识图谱

The large language model (LLM) probing aims to understand the knowledge stored in LLMs. LLMs, trained on large-scale corpus, are often known as containing enormous knowledge. However, LLMs store the knowledge in a hidden way, making it hard to figure out the stored knowledge. Moreover, LLMs suffer from the hallucination problem [15], which results in generating statements that contradict facts. This issue significantly affects the reliability of LLMs. Therefore, it is necessary to probe and verify the knowledge stored in LLMs.

大型语言模型(LLM)探测旨在了解存储在LLM中的知识。LLM经过大规模语料库的训练,通常被认为包含了大量的知识。然而,LLM以一种隐藏的方式存储知识,使得很难确定存储的知识。此外,LLM存在幻觉问题[15],导致生成与事实相矛盾的陈述。这个问题严重影响了LLM的可靠性。因此,有必要对存储在LLMs中的知识进行探测和验证。

LAMA [14] is the first work to probe the knowledge in LLMs by using KGs. As shown in Fig. 14, LAMA first

converts the facts in KGs into cloze statements by a predefined prompt template and then uses LLMs to predict the missing entity. The prediction results are used to evaluate the knowledge stored in LLMs. For example, we try to probe whether LLMs know the fact (Obama, profession, president). We first convert the fact triple into a cloze question “Obama’s profession is .” with the object masked. Then, we test if the LLMs can predict the object “president” correctly.

LAMA [14]是首个使用知识图谱探测LLM中知识的工作。如图14所示,LAMA首先通过预定义的提示模板将知识图谱中的事实转化为填空陈述,然后使用LLM预测缺失的实体。预测结果用于评估LLM中存储的知识。例如,我们尝试探测LLM是否知道事实(奥巴马,职业,总统)。我们首先将事实三元组转换为带有对象被屏蔽的填空问题“奥巴马的职业是什么?”然后,我们测试LLM能否正确预测出“总统”这个对象。

However, LAMA ignores the fact that the prompts are inappropriate. For example, the prompt “Obama worked as a ” may be more favorable to the prediction of the blank by the language models than “Obama is a by profession”. Thus, LPAQA [125] proposes a mining and paraphrasing-based method to automatically generate high-quality and diverse prompts for a more accurate assessment of the knowledge contained in the language model. Moreover, Adolphs et al.[127] attempt to use examples to make the language model understand the query, and experiments obtain substantial improvements for BERT-large on the T-REx data. Unlike using manually defined prompt templates, Autoprompt[126] proposes an automated method, which is based on the gradient-guided search to create prompts.

然而,LAMA忽略了提示不恰当的事实。例如,“奥巴马的职业是。”可能对语言模型的填空预测比“奥巴马是一位职业。”更有利。因此,LPAQA [125]提出了一种基于挖掘和改写的方法,自动生成高质量和多样化的提示,以更准确地评估语言模型中包含的知识。此外,Adolphs等人[127]尝试使用示例使语言模型理解查询,实验证明对T-REx数据的BERT-large模型取得了显著的改进。与使用手动定义的提示模板不同,Autoprompt [126]提出了一种基于梯度引导搜索的自动化方法来创建提示。

Instead of probing the general knowledge by using the encyclopedic and commonsense knowledge graphs, BioLAMA [136] and MedLAMA [128] probe the medical knowledge in LLMs by using medical knowledge graphs. Alex et al. [129] investigate the capacity of LLMs to retain less popular factual knowledge. They select unpopular facts from Wikidata knowledge graphs which have lowfrequency clicked entities. These facts are then used for the evaluation, where the results indicate that LLMs encounter difficulties with such knowledge, and that scaling fails to appreciably improve memorization of factual knowledge in the tail.

与使用百科和常识知识图谱来探测一般知识不同,BioLAMA [136]和MedLAMA [128]使用医学知识图谱来探测LLMs中的医学知识。Alex等人[129]研究LLMs保留不太流行的事实的能力。他们从Wikidata知识图谱中选择了低频点击实体的不太流行的事实。然后将这些事实用于评估,结果表明LLMs在处理此类知识时遇到困难,并且在尾部的事实性知识的记忆方面,扩大规模并不能显著提高。

4.3.2 KGs for LLM Analysis

4.3.2 用于LLM分析的知识图谱

Knowledge graphs (KGs) for pre-train language models (LLMs) analysis aims to answer the following questions such as “how do LLMs generate the results?”, and “how do the function and structure work in LLMs?”. To analyze the inference process of LLMs, as shown in Fig. 15, KagNet [38] and QA-GNN [118] make the results generated by LLMs at each reasoning step grounded by knowledge graphs. In this way, the reasoning process of LLMs can be explained by extracting the graph structure from KGs. Shaobo et al. [131] investigate how LLMs generate the results correctly. They adopt the causal-inspired analysis from facts extracted from KGs. This analysis quantitatively measures the word patterns that LLMs depend on to generate the results. The results show that LLMs generate the missing factual more by the positionally closed words rather than the knowledge-dependent words. Thus, they claim that LLMs are inadequate to memorize factual knowledge because of the inaccurate dependence. To interpret the training of LLMs, Swamy et al. [130] adopt the language model during pre-training to generate knowledge graphs. The knowledge acquired by LLMs during training can be unveiled by the facts in KGs explicitly. To explore how implicit knowledge is stored in parameters of LLMs, Dai et al. [39] propose the concept of knowledge neurons. Specifically, activation of the identified knowledge neurons is highly correlated with knowledge expression. Thus, they explore the knowledge and facts represented by each neuron by suppressing and amplifying knowledge neurons.

知识图谱(KG)用于预训练语言模型(LLM)分析旨在回答以下问题:“LLM是如何生成结果的?”和“LLM的功能和结构如何工作?”为了分析LLM的推理过程,如图15所示,KagNet [38]和QA-GNN [118]通过知识图谱将LLM在每个推理步骤生成的结果进行了基于图的支撑。通过从KG中提取图结构,可以解释LLM的推理过程。Shaobo等人[131]研究LLM如何正确生成结果。他们采用了从KG中提取的事实的因果性分析。该分析定量地衡量LLM在生成结果时所依赖的词汇模式。结果表明,LLM更多地依赖于位置相关的词汇模式而不是知识相关的词汇模式来生成缺失的事实。因此,他们声称LLM不适合记忆事实性知识,因为依赖关系不准确。为了解释LLM的训练,Swamy等人[130]采用语言模型在预训练期间生成知识图谱。LLM在训练过程中获得的知识可以通过KG中的事实来揭示。为了探索LLM中的隐式知识是如何存储在参数中的,Dai等人[39]提出了知识神经元的概念。具体而言,被识别的知识神经元的激活与知识表达高度相关。因此,他们通过抑制和放大知识神经元来探索每个神经元表示的知识和事实。

5 LLM-AUGMENTED FOR KGS

5.基于LLM的知识图谱增强

Knowledge graphs are famous for representing knowledge in a structural manner. They have been applied in many downstream tasks such as question answering, recommendation, and web search. However, the conventional KGs are often incomplete and existing methods often lack considering textual information. To address these issues, recent research has explored integrating LLMs to augment KGs to consider the textual information and improve the performance in downstream tasks. In this section, we will introduce the recent research on LLM-augmented KGs. Representative works are summarized in Table 3. We will introduce the methods that integrate LLMs for KG embedding, KG completion, KG construction, KG-to-text generation, and KG question answering, respectively.

知识图谱以结构化的方式表示知识,并已应用于许多下游任务,如问答、推荐和网络搜索。然而,传统的知识图谱通常不完整,现有方法往往缺乏考虑文本信息的能力。为了解决这些问题,最近的研究探索了将LLM整合到知识图谱中以考虑文本信息并提高下游任务性能。在本节中,我们将介绍关于LLM增强的知识图谱的最新研究。代表性的工作总结在表3中。我们将分别介绍整合LLM进行知识图谱嵌入、知识图谱补全、知识图谱构建、知识图谱到文本生成和知识图谱问答的方法。

5.1 LLM-augmented KG Embedding

5.1 基于LLM的知识图谱嵌入

Knowledge graph embedding (KGE) aims to map each entity and relation into a low-dimensional vector (embedding) space. These embeddings contain both semantic and structural information of KGs, which can be utilized for various tasks such as question answering [182], reasoning [38], and recommendation [183]. Conventional knowledge graph embedding methods mainly rely on the structural information of KGs to optimize a scoring function defined on embeddings (e.g., TransE [25], and DisMult [184]).However, these approaches often fall short in representing unseen entities and long-tailed relations due to their limited structural connectivity [185], [186]. To address this issue, as shown in Fig. 16, recent research adopt LLMs to enrich representations of KGs by encoding the textual descriptions of entities and relations [40], [97].

知识图谱嵌入(KGE)的目标是将每个实体和关系映射到低维的向量(嵌入)空间。这些嵌入包含知识图谱的语义和结构信息,可用于多种不同的任务,如问答、推理和推荐。传统的知识图谱嵌入方法主要依靠知识图谱的结构信息来优化一个定义在嵌入上的评分函数(如 TransE 和 DisMult)。但是,这些方法由于结构连接性有限,因此难以表示未曾见过的实体和长尾的关系。图 16 展示了近期的一项研究:为了解决这一问题,该方法使用 LLM 来编码实体和关系的文本描述,从而丰富知识图谱的表征。

5.1.1 LLMs as Text Encoders

5.1.1 LLM作为文本编码器

Pretrain-KGE [97] is a representative method that follows the framework shown in Fig. 16. Given a triple

(

h

,

r

,

t

)

(h, r, t)

(h,r,t) from KGs, it firsts uses a LLM encoder to encode the textual descriptions of entities

h

,

t

,

h, t,

h,t, and relations

r

r

r into representations as

e

h

=

L

L

M

(

T

e

x

t

h

)

,

e

t

=

L

L

M

(

T

e

x

t

t

)

,

e

r

=

L

L

M

(

T

e

x

t

r

)

,

(1)

e_h=LLM(Text_h),e_t = LLM(Text_t),e_r = LLM(Text_r) ,\tag{1}

eh=LLM(Texth),et=LLM(Textt),er=LLM(Textr),(1)

where

e

h

e_h

eh,

e

r

e_r

er, and

e

t

e_t

et denotes the initial embeddings of entities

h

,

t

,

h, t,

h,t, and relations

r

,

r,

r, respectively. Pretrain-KGE uses the BERT as the LLM encoder in experiments. Then, the initial embeddings are fed into a KGE model to generate the final embeddings

v

h

,

v

r

,

v_h, v_r,

vh,vr, and

v

t

v_t

vt. During the KGE training phase, they optimize the KGE model by following the standard KGE loss function as

L

=

[

γ

+

f

(

v

h

,

v

r

,

v

t

)

−

f

(

v

h

′

,

v

r

′

,

v

t

′

)

]

,

(2)

\mathcal{L}= [γ + f(v_h,v_r,v_t) - f(v'_h,v'_r,v'_t)], \tag{2}

L=[γ+f(vh,vr,vt)−f(vh′,vr′,vt′)],(2)

where

f

f

f is the KGE scoring function,

γ

γ

γ is a margin hyperparameter, and

v

h

′

,

v

r

′

,

v'_h, v'_r,

vh′,vr′, and

v

t

′

v'_t

vt′ are the negative samples. In this way, the KGE model could learn adequate structure information, while reserving partial knowledge from LLM enabling better knowledge graph embedding. KEPLER [40] offers a unified model for knowledge embedding and pre-trained language representation. This model not only generates effective text-enhanced knowledge embedding using powerful LLMs but also seamlessly integrates factual knowledge into LLMs. Nayyeri et al. [137] use LLMs to generate the world-level, sentence-level, and document-level representations. They are integrated with graph structure embeddings into a unified vector by Dihedron and Quaternion representations of 4D hypercomplex numbers. Huang et al. [138] combine LLMs with other vision and graph encoders to learn multi-modal knowledge graph embedding that enhances the performance of downstream tasks. CoDEx [139] presents a novel loss function empowered by LLMs that guides the KGE models in measuring the likelihood of triples by considering the textual information. The proposed loss function is agnostic to model structure that can be incorporated with any KGE model.

Pretrain-KGE [97]是一个典型的方法,遵循图16所示的框架。给定知识图谱中的三元组

(

h

,

r

,

t

)

(h,r,t)

(h,r,t),它首先使用LLM编码器将实体

h

、

t

h、t

h、t和关系

r

r

r的文本描述编码为表示:

e

h

=

L

L

M

(

T

e

x

t

h

)

,

e

t

=

L

L

M

(

T

e

x

t

t

)

,

e

r

=

L

L

M

(

T

e

x

t

r

)

,

(1)

e_h=LLM(Text_h),e_t = LLM(Text_t),e_r = LLM(Text_r) ,\tag{1}

eh=LLM(Texth),et=LLM(Textt),er=LLM(Textr),(1)

其中

e

h

、

e

r

e_h、e_r

eh、er和

e

t

e_t

et分别表示实体

h

、

t

h、t

h、t和关系

r

r

r的初始嵌入。Pretrain-KGE在实验中使用BERT作为LLM编码器。然后,将初始嵌入馈送到KGE模型中生成最终的嵌入

v

h

、

v

r

v_h、v_r

vh、vr和

v

t

v_t

vt。在KGE训练阶段,通过遵循标准KGE损失函数优化KGE模型:

L

=

[

γ

+

f

(

v

h

,

v

r

,

v

t

)

−

f

(

v

h

′

,

v

r

′

,

v

t

′

)

]

,

(2)

\mathcal{L}= [γ + f(v_h,v_r,v_t) - f(v'_h,v'_r,v'_t)], \tag{2}

L=[γ+f(vh,vr,vt)−f(vh′,vr′,vt′)],(2)

其中

f

f

f是KGE评分函数,

γ

γ

γ是边界超参数,

v

′

h

、

v

′

r

v′_h、v′_r

v′h、v′r和

v

′

t

v′_t

v′t是负样本。通过这种方式,KGE模型可以学习充分的结构信息,同时保留LLM中的部分知识,从而实现更好的知识图谱嵌入。KEPLER [40]提供了一个统一的模型用于知识嵌入和预训练的语言表示。该模型不仅使用强大的LLM生成有效的文本增强的知识嵌入,还无缝地将事实知识集成到LLM中。Nayyeri等人[137]使用LLM生成世界级、句子级和文档级表示。它们通过四维超复数的Dihedron和Quaternion表示将其与图结构嵌入统一为一个向量。Huang等人[138]将LLM与其他视觉和图形编码器结合起来,学习多模态的知识图谱嵌入,提高下游任务的性能。CoDEx[139]提出了一种由LLM赋能的新型损失函数,通过考虑文本信息来指导KGE模型测量三元组的可能性。所提出的损失函数与模型结构无关,可以与任何KGE模型结合使用。

5.1.2 LLMs for Joint Text and KG Embedding

5.1.2 用于联合文本和知识图谱嵌入的LLM

Instead of using KGE model to consider graph structure,another line of methods directly employs LLMs to incorporate both the graph structure and textual information into the embedding space simultaneously. As shown in Fig. 17,kNN-KGE [141] treats the entities and relations as special tokens in the LLM. During training, it transfers each triple

(

h

,

r

,

t

)

(h, r, t)

(h,r,t) and corresponding text descriptions into a sentence

x

x

x as

x

=

[

C

L

S

]

h

T

e

x

t

h

[

S

E

P

]

r

[

S

E

P

]

[

M

A

S

K

]

T

e

x

t

t

[

S

E

P

]

,

(3)

x = [CLS] h Text_h [SEP] r [SEP] [MASK] Text_t [SEP], \tag{3}

x=[CLS]hTexth[SEP]r[SEP][MASK]Textt[SEP],(3)

where the tailed entities are replaced by [MASK]. The sentence is fed into a LLM, which then finetunes the model to predict the masked entity, formulated as

P

L

L

M

(

t

∣

h

,

r

)

=

P

(

[

M

A

S

K

]

=

t

∣

x

,

Θ

)

,

(4)

P_{LLM}(t|h, r) = P([MASK]=t|x, Θ), \tag{4}

PLLM(t∣h,r)=P([MASK]=t∣x,Θ),(4)

where

Θ

Θ

Θ denotes the parameters of the LLM. The LLM is optimized to maximize the probability of the correct entity

t

t

t. After training, the corresponding token representations in LLMs are used as embeddings for entities and relations. Similarly, LMKE [140] proposes a contrastive learning method to improve the learning of embeddings generated by LLMs for KGE. Meanwhile, to better capture graph structure, LambdaKG [142] samples 1-hop neighbor entities and concatenates their tokens with the triple as a sentence feeding into LLMs.

另一类方法直接使用LLM将图结构和文本信息同时纳入嵌入空间,而不是使用KGE模型考虑图结构。如图17所示,kNN-KGE [141]将实体和关系作为LLM中的特殊标记处理。在训练过程中,它将每个三元组

(

h

,

r

,

t

)

(h,r,t)

(h,r,t)及其相应的文本描述转化为句子

x

x

x:

x

=

[

C

L

S

]

h

T

e

x

t

h

[

S

E

P

]

r

[

S

E

P

]

[

M

A

S

K

]

T

e

x

t

t

[

S

E

P

]

,

(3)

x = [CLS] h Text_h [SEP] r [SEP] [MASK] Text_t [SEP], \tag{3}

x=[CLS]hTexth[SEP]r[SEP][MASK]Textt[SEP],(3)

其中尾部实体被[MASK]替换。将句子馈送到LLM中,然后微调模型来预测被遮蔽的实体,公式化为

P

L

L

M

(

t

∣

h

,

r

)

=

P

(

[

M

A

S

K

]

=

t

∣

x

,

Θ

)

,

(4)

P_{LLM}(t|h, r) = P([MASK]=t|x, Θ), \tag{4}

PLLM(t∣h,r)=P([MASK]=t∣x,Θ),(4)

其中

Θ

Θ

Θ表示LLM的参数。LLM被优化以最大化正确实体t的概率。训练后,LLM中相应的标记表示用作实体和关系的嵌入。类似地,LMKE [140]提出了一种对比学习方法,改进了LLM生成的用于KGE的嵌入的学习。此外,为了更好地捕捉图结构,LambdaKG [142]采样1-hop邻居实体,并将它们的标记与三元组连接为一个句子,然后馈送到LLMs中。

5.2 LLM-augmented KG Completion

5.2 基于LLM的知识图谱补全

Knowledge Graph Completion (KGC) refers to the task of inferring missing facts in a given knowledge graph. Similar to KGE, conventional KGC methods mainly focused on the structure of the KG, without considering the extensive textual information. However, the recent integration of LLMs enables KGC methods to encode text or generate facts for better KGC performance. These methods fall into two distinct categories based on their utilization styles: 1) LLM as Encoders (PaE), and 2) LLM as Generators (PaG).

知识图谱补全(KGC)是指在给定知识图谱中推断缺失的事实的任务。与KGE类似,传统的KGC方法主要关注知识图谱的结构,而没有考虑广泛的文本信息。然而,LLM的最新整合使得KGC方法能够编码文本或生成事实,以提高KGC的性能。根据它们的使用方式,这些方法分为两个不同的类别:1) LLM作为编码器(PaE),2) LLM作为生成器(PaG)。

5.2.1 LLM as Encoders (PaE).

5.2.1 LLM作为编码器(PaE)

As shown in Fig. 18 (a), (b), and (c ), This line of work first uses encoder-only LLMs to encode textual information as well as KG facts. Then, they predict the plausibility of the triples by feeding the encoded representation into a prediction head, which could be a simple MLP or conventional KG score function (e.g., TransE [25] and TransR [187]).

如图18(a)、(b)和(c )所示,这一系列的方法首先使用仅编码器LLM对文本信息和知识图谱事实进行编码。然后,它们通过将编码表示输入到预测头部(可以是简单的MLP或传统的知识图谱评分函数,如TransE [25]和TransR [187])中来预测三元组的可信度。

Joint Encoding. Since the encoder-only LLMs (e.g., Bert [1]) are well at encoding text sequences, KG-BERT [26] represents a triple (h, r, t) as a text sequence and encodes it with LLM Fig. 18(a).

x

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

T

e

x

t

t

[

S

E

P

]

,

(5)

x = [CLS] Text_h [SEP] Text_r [SEP] Text_t [SEP], \tag{5}

x=[CLS]Texth[SEP]Textr[SEP]Textt[SEP],(5)

The final hidden state of the

[

C

L

S

]

[CLS]

[CLS] token is fed into a classifier to predict the possibility of the triple, formulated as

s

=

σ

(

M

L

P

(

e

[

C

L

S

]

)

)

,

(6)

s = σ(MLP(e_{[CLS]})), \tag{6}

s=σ(MLP(e[CLS])),(6)

where

σ

(

⋅

)

σ(·)

σ(⋅)denotes the sigmoid function and

e

[

C

L

S

]

e_{[CLS]}

e[CLS] denotes the representation encoded by LLMs. To improve the efficacy of KG-BERT, MTL-KGC [143] proposed a MultiTask Learning for the KGC framework which incorporates additional auxiliary tasks into the model’s training, i.e. prediction (RP) and relevance ranking (RR). PKGC [144] assesses the validity of a triplet

(

h

,

r

,

t

)

(h, r, t)

(h,r,t) by transforming the triple and its supporting information into natural language sentences with pre-defined templates. These sentences are then processed by LLMs for binary classification. The supporting information of the triplet is derived from the attributes of

h

h

h and

t

t

t with a verbalizing function. For instance, if the triple is (Lebron James, member of sports team, Lakers), the information regarding Lebron James is verbalized as ”Lebron James: American basketball player”. LASS [145] observes that language semantics and graph structures are equally vital to KGC. As a result, LASS is proposed to jointly learn two types of embeddings: semantic embedding and structure embedding. In this method, the full text of a triple is forwarded to the LLM, and the mean pooling of the corresponding LLM outputs for

h

,

r

,

h, r,

h,r,and

t

t

t are separately calculated. These embeddings are then passed to a graphbased method, i.e. TransE, to reconstruct the KG structures.

联合编码。由于仅编码器LLM(如BERT [1])在编码文本序列方面表现出色,KG-BERT [26]将三元组

(

h

,

r

,

t

)

(h,r,t)

(h,r,t)表示为一个文本序列,并使用LLM对其进行编码(图18(a))。

x

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

T

e

x

t

t

[

S

E

P

]

,

(5)

x = [CLS] Text_h [SEP] Text_r [SEP] Text_t [SEP], \tag{5}

x=[CLS]Texth[SEP]Textr[SEP]Textt[SEP],(5)

将

[

C

L

S

]

[CLS]

[CLS]标记的最终隐藏状态馈送到分类器中,预测三元组的可能性,公式化为

s

=

σ

(

M

L

P

(

e

[

C

L

S

]

)

)

,

(6)

s = σ(MLP(e_{[CLS]})), \tag{6}

s=σ(MLP(e[CLS])),(6)

其中

σ

(

⋅

)

σ(·)

σ(⋅)表示Sigmoid函数,

e

[

C

L

S

]

e_{[CLS]}

e[CLS]表示LLM编码的表示。为了提高KG-BERT的效果,MTL-KGC [143]提出了一种用于KGC框架的多任务学习,将额外的辅助任务(如预测和相关性排序)融入模型的训练中。PKGC [144]通过使用预定义模板将三元组及其支持信息转化为自然语言句子,评估三元组

(

h

,

r

,

t

)

(h,r,t)

(h,r,t)的有效性。这些句子然后通过LLMs进行二分类处理。三元组的支持信息是根据

h

h

h和

t

t

t的属性使用语言化函数推导出来的。例如,如果三元组是(Lebron James,member of sports team,Lakers),则关于Lebron James的信息被语言化为“Lebron James:American basketball player”。LASS [145]观察到语言语义和图结构对KGC同样重要。因此,提出了LASS来联合学习两种类型的嵌入:语义嵌入和结构嵌入。在这种方法中,将三元组的完整文本转发给LLM,然后分别计算

h

、

r

h、r

h、r和

t

t

t的对应LLMs输出的平均池化。然后,将这些嵌入传递给图结构方法(如TransE)以重构知识图谱结构。

MLM Encoding. Instead of encoding the full text of a triple, many works introduce the concept of Masked Language Model (MLM) to encode KG text (Fig. 18(b)). MEM-KGC [146] uses Masked Entity Model (MEM) classification mechanism to predict the masked entities of the triple. The input text is in the form of

x

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

[

M

A

S

K

]

[

S

E

P

]

,

(7)

x = [CLS] Text_h [SEP] Text_r [SEP] [MASK] [SEP],\tag{7}

x=[CLS]Texth[SEP]Textr[SEP][MASK][SEP],(7)

Similar to Eq. 4, it tries to maximize the probability that the masked entity is the correct entity t. Additionally, to enable the model to learn unseen entities, MEM-KGC integrates multitask learning for entities and super-class prediction based on the text description of entities:

x

=

[

C

L

S

]

[

M

A

S

K

]

[

S

E

P

]

T

e

x

t

h

[

S

E

P

]

.

(8)

x = [CLS] [MASK] [SEP] Text_h [SEP]. \tag{8}

x=[CLS][MASK][SEP]Texth[SEP].(8)

OpenWorld KGC [147] expands the MEM-KGC model to address the challenges of open-world KGC with a pipeline framework, where two sequential MLM-based modules are defined: Entity Description Prediction (EDP), an auxiliary module that predicts a corresponding entity with a given textual description; Incomplete Triple Prediction (ITP), the target module that predicts a plausible entity for a given incomplete triple

(

h

,

r

,

?

)

(h, r, ?)

(h,r,?). EDP first encodes the triple with Eq. 8 and generates the final hidden state, which is then forwarded into ITP as an embedding of the head entity in Eq. 7 to predict target entities.

MLM编码。许多工作不是编码三元组的完整文本,而是引入了遮蔽语言模型(MLM)的概念来编码知识图谱的文本信息(图18(b))。MEM-KGC [146]使用遮蔽实体模型(MEM)分类机制来预测三元组中的遮蔽实体。输入文本的形式为

x

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

[

M

A

S

K

]

[

S

E

P

]

,

(7)

x = [CLS] Text_h [SEP] Text_r [SEP] [MASK] [SEP],\tag{7}

x=[CLS]Texth[SEP]Textr[SEP][MASK][SEP],(7)

类似于公式4,它试图最大化遮蔽实体是正确实体

t

t

t的概率。此外,为了使模型学习未见实体,MEM-KGC还结合了基于实体文本描述的实体和超类预测的多任务学习:

x

=

[

C

L

S

]

[

M

A

S

K

]

[

S

E

P

]

T

e

x

t

h

[

S

E

P

]

.

(8)

x = [CLS] [MASK] [SEP] Text_h [SEP]. \tag{8}

x=[CLS][MASK][SEP]Texth[SEP].(8)

OpenWorld KGC [147]将MEM-KGC模型扩展到处理开放世界KGC的挑战,采用了一个流水线框架,在其中定义了两个串行的基于MLM的模块:实体描述预测(EDP)和不完整三元组预测(ITP)。EDP首先使用公式8对三元组进行编码,并生成最终的隐藏状态,然后将其作为公式7中头实体的嵌入传递给ITP,以预测目标实体。

Separated Encoding. As shown in Fig. 18( c), these methods involve partitioning a triple

(

h

,

r

,

t

)

(h, r, t)

(h,r,t) into two distinct parts, i.e.

(

h

,

r

)

(h, r)

(h,r) and

t

t

t, which can be expressed as

x

(

h

,

r

)

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

,

(9)

x_{(h,r)} = [CLS] Text_h [SEP] Text_r [SEP], \tag{9}

x(h,r)=[CLS]Texth[SEP]Textr[SEP],(9)

x

t

=

[

C

L

S

]

T

e

x

t

t

[

S

E

P

]

.

(10)

x_t = [CLS] Text_t [SEP]. \tag{10}

xt=[CLS]Textt[SEP].(10)

Then the two parts are encoded separately by LLMs, and the final hidden states of the

[

C

L

S

]

[CLS]

[CLS] tokens are used as the representations of

(

h

,

r

)

(h, r)

(h,r) and

t

t

t, respectively. The representations are then fed into a scoring function to predict the possibility of the triple, formulated as

s

=

f

s

c

o

r

e

(

e

(

h

,

r

)

,

e

t

)

,

(11)

s = f_{score}(e_{(h,r)}, e_t), \tag{11}

s=fscore(e(h,r),et),(11)

where

f

s

c

o

r

e

f_{score}

fscore denotes the score function like TransE.

分离编码。如图18( c)所示,这些方法将三元组

(

h

,

r

,

t

)

(h,r,t)

(h,r,t)分为两个不同的部分,即

(

h

,

r

)

(h,r)

(h,r)和

t

t

t,可以表示为

x

(

h

,

r

)

=

[

C

L

S

]

T

e

x

t

h

[

S

E

P

]

T

e

x

t

r

[

S

E

P

]

,

(9)

x_{(h,r)} = [CLS] Text_h [SEP] Text_r [SEP], \tag{9}

x(h,r)=[CLS]Texth[SEP]Textr[SEP],(9)

x

t

=

[

C

L

S

]

T

e

x

t

t

[

S

E

P

]

.

(10)