Problem description:

mindspore.nn.Pad produces incorrect output

I accidentally deleted the original question, so I am describing it again.

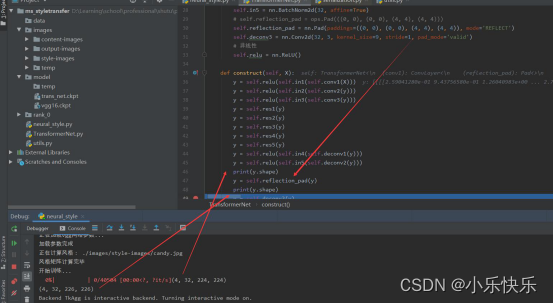

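In the code, self.reflection_pad does not pad its input correctly. In theory it should output data of shape [32, 232, 232], but it actually outputs data of shape [32, 226, 226].

In addition, once this function is used, backpropagation during training also raises an error.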
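The expected shape quoted above follows from simple arithmetic on the `paddings` tuple. The sketch below is plain Python, not MindSpore code; the helper name `padded_shape`, the batch size of 1, and the 224x224 input are assumptions for illustration (the 224 is inferred from 232 - 2 * 4 and is not stated in the post).

```python
def padded_shape(shape, paddings):
    """Return the shape after padding each dimension by (before, after)."""
    if len(shape) != len(paddings):
        raise ValueError("paddings needs one (before, after) pair per dimension")
    return tuple(dim + before + after
                 for dim, (before, after) in zip(shape, paddings))


# Hypothetical input: batch of 1, 32 channels, 224x224 feature map (assumed).
x_shape = (1, 32, 224, 224)
paddings = ((0, 0), (0, 0), (4, 4), (4, 4))

expected = padded_shape(x_shape, paddings)
print(expected)  # (1, 32, 232, 232)

# A following 9x9 'valid' convolution with stride 1 trims kernel_size - 1 pixels
# from each spatial dimension, restoring the original spatial size.
after_conv = tuple(dim - 9 + 1 for dim in expected[2:])
print(after_conv)  # (224, 224)
```

Under the same assumption, the observed 226 equals 224 + 2 * 1, which is what a padding of (1, 1) would produce. That is only an arithmetic observation, not a confirmed diagnosis.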

[Problem screenshot]

[Code]

import mindspore.nn as nn
import mindspore.ops as ops

class TransformerNet(nn.Cell):
    def __init__(self):
        super(TransformerNet, self).__init__()
        self.conv1 = ConvLayer(3, 32, kernel_size=9, stride=1)
        # self.in1 = nn.InstanceNorm2d(32, affine=True)
        self.in1 = nn.BatchNorm2d(32, affine=True)
        self.conv2 = ConvLayer(32, 64, kernel_size=3, stride=2)
        # self.in2 = nn.InstanceNorm2d(64, affine=True)
        self.in2 = nn.BatchNorm2d(64, affine=True)
        self.conv3 = ConvLayer(64, 128, kernel_size=3, stride=2)
        # self.in3 = nn.InstanceNorm2d(128, affine=True)
        self.in3 = nn.BatchNorm2d(128, affine=True)
        # Residual layers
        self.res1 = ResidualBlock(128)
        self.res2 = ResidualBlock(128)
        self.res3 = ResidualBlock(128)
        self.res4 = ResidualBlock(128)
        self.res5 = ResidualBlock(128)
        # Upsampling Layers
        self.deconv1 = UpsampleConvLayer(128, 64, kernel_size=3, stride=1, upsample=2)
        # self.in4 = nn.InstanceNorm2d(64, affine=True)
        self.in4 = nn.BatchNorm2d(64, affine=True)
        self.deconv2 = UpsampleConvLayer(64, 32, kernel_size=3, stride=1, upsample=2)
        # self.in5 = nn.InstanceNorm2d(32, affine=True)
        self.in5 = nn.BatchNorm2d(32, affine=True)
        # self.reflection_pad = ops.Pad(((0, 0), (0, 0), (4, 4), (4, 4)))
        self.reflection_pad = nn.Pad(paddings=((0, 0), (0, 0), (4, 4), (4, 4)), mode='REFLECT')
        self.deconv3 = nn.Conv2d(32, 3, kernel_size=9, stride=1, pad_mode='valid')
        # Non-linearity
        self.relu = nn.ReLU()

    def construct(self, X):
        y = self.relu(self.in1(self.conv1(X)))
        y = self.relu(self.in2(self.conv2(y)))
        y = self.relu(self.in3(self.conv3(y)))
        y = self.res1(y)
        y = self.res2(y)
        y = self.res3(y)
        y = self.res4(y)
        y = self.res5(y)
        y = self.relu(self.in4(self.deconv1(y)))
        y = self.relu(self.in5(self.deconv2(y)))
        print(y.shape)  # shape before the final reflection pad
        y = self.reflection_pad(y)
        print(y.shape)  # shape after the pad; this is where the mismatch shows up
        y = self.deconv3(y)
        return y

class ConvLayer(nn.Cell):
    def __init__(self, in_channels, out_channels, kernel_size, stride):
        super(ConvLayer, self).__init__()
        padding_size = kernel_size // 2
        self.reflection_padding = ((0, 0), (0, 0), (padding_size, padding_size), (padding_size, padding_size))
        self.reflection_pad = nn.Pad(paddings=self.reflection_padding, mode='REFLECT')
        self.conv2d = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='valid')

    def construct(self, x):
        out = self.reflection_pad(x)
        out = self.conv2d(out)
        return out

class ResidualBlock(nn.Cell):
    """ResidualBlock

    introduced in: https://arxiv.org/abs/1512.03385
    recommended architecture: http://torch.ch/blog/2016/02/04/resnets.html
    """

    def __init__(self, channels):
        super(ResidualBlock, self).__init__()
        self.conv1 = ConvLayer(channels, channels, kernel_size=3, stride=1)
        # self.in1 = nn.InstanceNorm2d(channels, affine=True)
        self.in1 = nn.BatchNorm2d(channels, affine=True)
        self.conv2 = ConvLayer(channels, channels, kernel_size=3, stride=1)
        # self.in2 = nn.InstanceNorm2d(channels, affine=True)
        self.in2 = nn.BatchNorm2d(channels, affine=True)
        self.relu = nn.ReLU()

    def construct(self, x):
        residual = x
        out = self.relu(self.in1(self.conv1(x)))
        out = self.in2(self.conv2(out))
        out = out + residual
        return out

class UpsampleConvLayer(nn.Cell):
    """UpsampleConvLayer

    Upsamples the input and then does a convolution. This method gives better results
    compared to ConvTranspose2d.
    ref: http://distill.pub/2016/deconv-checkerboard/
    """

    def __init__(self, in_channels, out_channels, kernel_size, stride, upsample=None):  # upsample
        super(UpsampleConvLayer, self).__init__()
        self.upsample = upsample
        if upsample:
            self.upsample_layer = nn.ResizeBilinear()
        padding_size = kernel_size // 2
        reflection_padding = ((0, 0), (0, 0), (padding_size, padding_size), (padding_size, padding_size))
        self.reflection_pad = nn.Pad(paddings=reflection_padding, mode='REFLECT')
        self.conv2d = nn.Conv2d(in_channels, out_channels, kernel_size, stride, pad_mode='valid')

    def construct(self, x):
        x_in = x
        if self.upsample:
            x_in = self.upsample_layer(x_in, scale_factor=self.upsample)
        out = self.reflection_pad(x_in)
        out = self.conv2d(out)
        return out

Answer:

The ops.Pad operator does not support reflect mode. To get symmetric or reflect padding, use the ops.MirrorPad operator instead.
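To make the difference between the two mirror modes concrete, here is a small pure-Python sketch on a 1-D list. It is not MindSpore's implementation; the function name `mirror_pad_1d` is hypothetical, and the semantics follow the usual convention (the same one NumPy's `np.pad` uses for `reflect` and `symmetric`): reflect mirrors around the edge element without repeating it, while symmetric repeats the edge element.

```python
def mirror_pad_1d(values, before, after, mode="REFLECT"):
    """Pad a 1-D list by mirroring its contents (illustration only).

    REFLECT:   mirror around the edge element, edge not repeated.
    SYMMETRIC: mirror around the edge, edge element repeated.
    """
    if mode not in ("REFLECT", "SYMMETRIC"):
        raise ValueError("mode must be 'REFLECT' or 'SYMMETRIC'")
    n = len(values)
    # REFLECT skips the edge element itself, so it starts one step inside.
    offset = 1 if mode == "REFLECT" else 0
    if max(before, after) > n - offset:
        raise ValueError("padding is too large for this input length")
    left = [values[i + offset] for i in range(before)][::-1]
    right = [values[n - 1 - offset - i] for i in range(after)]
    return left + list(values) + right


data = [1, 2, 3, 4]
print(mirror_pad_1d(data, 2, 2, mode="REFLECT"))    # [3, 2, 1, 2, 3, 4, 3, 2]
print(mirror_pad_1d(data, 2, 2, mode="SYMMETRIC"))  # [2, 1, 1, 2, 3, 4, 4, 3]
```

In both modes the output length is `len(values) + before + after`, so with (4, 4) padding on each spatial axis a correctly padded feature map grows by 8 in height and width.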
