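Previously we studied the Naive Bayes (NB) algorithm for nominal (symbolic) data. Now we turn to the NB algorithm for numerical data.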

NB Algorithm for Numerical Data

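In a dataset, the probability $P(89 < \text{humidity} < 91)$ is nonzero. However, the probability that humidity is exactly 90 (not 90.001 or any other value with further digits after the decimal point) is essentially 0, and exactly 0 for a truly continuous variable. So for a numerical attribute we cannot estimate $P(x_j \mid D_i)$ by counting exact values. Instead, we use a probability density function.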
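Here is a quick numerical check of this point, written as a minimal sketch. It assumes that humidity within some class is Gaussian with hypothetical parameters μ = 80 and σ = 10. It approximates interval probabilities by integrating the density with Simpson's rule, chosen only because the Java standard library has no error function. As the interval around 90 shrinks, its probability goes to 0, but the density value at 90 stays fixed. That density value is what the numerical NB algorithm uses in place of a probability.

```java
// Illustrative only: the Gaussian parameters (mu = 80, sigma = 10) are made up.
final class IntervalVsPoint {
    // Gaussian probability density function p(x).
    static double density(double x, double mu, double sigma) {
        double z = (x - mu) / sigma;
        return Math.exp(-0.5 * z * z) / (Math.sqrt(2 * Math.PI) * sigma);
    }

    // Approximates P(a < X < b) by integrating the density with composite Simpson's rule.
    static double intervalProbability(double mu, double sigma, double a, double b, int n) {
        if (n % 2 != 0) {
            throw new IllegalArgumentException("n must be even");
        }
        double h = (b - a) / n;
        double sum = density(a, mu, sigma) + density(b, mu, sigma);
        for (int i = 1; i < n; i++) {
            double weight = (i % 2 == 1) ? 4 : 2; // Simpson weights 4, 2, 4, ..., 4
            sum += weight * density(a + i * h, mu, sigma);
        }
        return sum * h / 3;
    }

    public static void main(String[] args) {
        double mu = 80, sigma = 10;
        System.out.println("P(89 < h < 91)         ~ " + intervalProbability(mu, sigma, 89, 91, 100));
        // Shrinking the interval around 90 drives the probability towards 0 ...
        System.out.println("P(89.999 < h < 90.001) ~ " + intervalProbability(mu, sigma, 89.999, 90.001, 100));
        // ... while the density at 90 stays a fixed positive number (a density, not a probability).
        System.out.println("p(90)                  = " + density(90, mu, sigma));
    }
}
```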

Recall the formula of the NB algorithm for nominal data from last time:

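$$d(\mathbf{x}) = \arg\max_{1 \le i \le k} \left( \log P^L(D_i) + \sum_{j=1}^{m} \log P^L(x_j \mid D_i) \right) \tag{1}$$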

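Here argmax means that we predict whichever class has the highest relative (pseudo-)probability. The superscript $L$ denotes Laplacian smoothing, $k$ is the number of decision classes, and $m$ is the number of conditional attributes.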
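To make formula (1) concrete, here is a minimal sketch of the nominal scoring step on made-up numbers. It assumes that the smoothed prior $P^L(D_i)$ and the conditional probabilities $P^L(x_j \mid D_i)$ are already available as plain arrays. The class `NominalScoreSketch` and its method are illustrative names, not part of the original code. The sketch sums logarithms instead of multiplying probabilities. This avoids floating-point underflow when $m$ is large, and the argmax is the same because log is increasing.

```java
// Hypothetical helper illustrating formula (1); all probabilities below are invented.
final class NominalScoreSketch {
    /**
     * Returns argmax_i [ log P(D_i) + sum_j log P(x_j | D_i) ].
     * prior[i] = smoothed P(D_i); cond[i][j][v] = smoothed P(x_j = v | D_i);
     * x[j] = index of the value taken by attribute j.
     */
    static int predict(double[] prior, double[][][] cond, int[] x) {
        int best = 0;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (int i = 0; i < prior.length; i++) {
            double score = Math.log(prior[i]);
            for (int j = 0; j < x.length; j++) {
                score += Math.log(cond[i][j][x[j]]);
            }
            if (score > bestScore) {
                bestScore = score;
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Two classes, two binary attributes.
        double[] prior = {0.6, 0.4};
        double[][][] cond = {
            {{0.8, 0.2}, {0.7, 0.3}}, // class 0
            {{0.3, 0.7}, {0.4, 0.6}}  // class 1
        };
        System.out.println(predict(prior, cond, new int[]{0, 0})); // prints 0
        System.out.println(predict(prior, cond, new int[]{1, 1})); // prints 1
    }
}
```

For x = (0, 0) the class products are 0.6·0.8·0.7 = 0.336 versus 0.4·0.3·0.4 = 0.048, so class 0 wins. For x = (1, 1) the products are 0.036 versus 0.168, so class 1 wins.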

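We assume that, within each class, each numerical attribute follows a normal (Gaussian) distribution, one of the most common distributions in practice: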

$$p(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left( -\frac{(x - \mu)^2}{2\sigma^2} \right) \tag{2}$$

Its parameters are the mean $\mu$ and the standard deviation $\sigma$, so the variance is $\sigma^2$.
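Formula (2) and its logarithm can be written as two small helpers. This is a sketch with hypothetical names (`GaussianDensity`, `pdf`, `logPdf`). It shows why it is preferable to compute $\log p(x)$ directly. Far from the mean, the density itself underflows to 0.0 in double precision, so taking its logarithm afterwards would give -Infinity. The log form stays finite.

```java
// Hypothetical helpers for formula (2) and its logarithm.
final class GaussianDensity {
    // p(x) = 1 / (sqrt(2*pi) * sigma) * exp(-(x - mu)^2 / (2 * sigma^2))
    static double pdf(double x, double mu, double sigma) {
        double d = x - mu;
        return Math.exp(-d * d / (2 * sigma * sigma)) / (Math.sqrt(2 * Math.PI) * sigma);
    }

    // log p(x), computed term by term so that tiny densities do not underflow to 0.
    static double logPdf(double x, double mu, double sigma) {
        double d = x - mu;
        return -Math.log(Math.sqrt(2 * Math.PI)) - Math.log(sigma) - d * d / (2 * sigma * sigma);
    }

    public static void main(String[] args) {
        System.out.println(pdf(0, 0, 1));    // peak of the standard normal, about 0.3989
        System.out.println(logPdf(0, 0, 1)); // log of the same value
        // 40 standard deviations out: pdf underflows to 0.0, logPdf is still finite.
        System.out.println(pdf(40, 0, 1) + " vs " + logPdf(40, 0, 1));
    }
}
```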

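We replace $P^L(x_j \mid D_i)$ in (1) with the density $p(x_j)$ from (2). The parameters $\mu_{ij}$ and $\sigma_{ij}$ of that density are estimated from the instances of class $D_i$: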

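$$d(\mathbf{x}) = \arg\max_{1 \le i \le k} \left( \log P^L(D_i) + \sum_{j=1}^{m} \log \left( \frac{1}{\sqrt{2\pi}\,\sigma_{ij}} \exp\left( -\frac{(x_j - \mu_{ij})^2}{2\sigma_{ij}^2} \right) \right) \right) \tag{3}$$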

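Expanding the logarithm yields $-\log\sqrt{2\pi} - \log\sigma_{ij} - \frac{(x_j - \mu_{ij})^2}{2\sigma_{ij}^2}$ for each attribute. The term $-\log\sqrt{2\pi}$ is a constant that is the same for every class. It therefore does not affect the result of argmax and can be dropped. Simplifying gives: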

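$$d(\mathbf{x}) = \arg\max_{1 \le i \le k} \left( \log P^L(D_i) + \sum_{j=1}^{m} \left( -\log \sigma_{ij} - \frac{(x_j - \mu_{ij})^2}{2\sigma_{ij}^2} \right) \right) \tag{4}$$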
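We can check numerically that dropping $-\log\sqrt{2\pi}$ does not change the decision. The sketch below scores a made-up example with three classes and two attributes, using both formula (3) and formula (4). All parameter values are invented for illustration, and the class name `ConstantDropCheck` is hypothetical. Every class score shifts by the same constant $m \log\sqrt{2\pi}$, so the argmax is unchanged.

```java
// Hypothetical check that formulas (3) and (4) pick the same class.
final class ConstantDropCheck {
    // Formula (3): log prior + sum_j full Gaussian log-density.
    static double fullScore(double prior, double[] mu, double[] sigma, double[] x) {
        double s = Math.log(prior);
        for (int j = 0; j < x.length; j++) {
            double d = x[j] - mu[j];
            s += -Math.log(Math.sqrt(2 * Math.PI) * sigma[j]) - d * d / (2 * sigma[j] * sigma[j]);
        }
        return s;
    }

    // Formula (4): the constant -log(sqrt(2*pi)) is dropped from every term.
    static double simplifiedScore(double prior, double[] mu, double[] sigma, double[] x) {
        double s = Math.log(prior);
        for (int j = 0; j < x.length; j++) {
            double d = x[j] - mu[j];
            s += -Math.log(sigma[j]) - d * d / (2 * sigma[j] * sigma[j]);
        }
        return s;
    }

    static int argmax(double[] scores) {
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        double[] prior = {0.5, 0.3, 0.2};
        double[][] mu = {{1.0, 2.0}, {3.0, 1.0}, {0.0, 0.0}};
        double[][] sigma = {{0.5, 1.0}, {1.0, 0.5}, {2.0, 2.0}};
        double[] x = {2.8, 1.1};
        double[] full = new double[3];
        double[] simple = new double[3];
        for (int i = 0; i < 3; i++) {
            full[i] = fullScore(prior[i], mu[i], sigma[i], x);
            simple[i] = simplifiedScore(prior[i], mu[i], sigma[i], x);
            // Each difference equals -2 * log(sqrt(2*pi)), about -1.838, for every class.
            System.out.println(full[i] - simple[i]);
        }
        System.out.println(argmax(full) + " " + argmax(simple)); // prints "1 1"
    }
}
```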

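The subscripts in $\sigma_{ij}$ and $\mu_{ij}$ indicate that the standard deviation and the mean depend on both the class $D_i$ and the attribute $j$.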
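Estimating $\mu_{ij}$ and $\sigma_{ij}$ amounts to computing the mean and standard deviation of attribute $j$ over the training instances of class $D_i$. The sketch below does this on plain arrays instead of Weka `Instances`. The class name and the toy data are hypothetical. Like `calculateGausssianParameters` in the code, it divides by the class size $n$ (population standard deviation) rather than $n - 1$. It also assumes that every class occurs at least once; otherwise the mean would be NaN.

```java
// Hypothetical, Weka-free estimate of mu_ij and sigma_ij.
final class GaussianEstimateSketch {
    /**
     * Returns params[i][j] = {mu_ij, sigma_ij}, computed over the rows whose label is i.
     * Assumes every class label 0..numClasses-1 occurs at least once.
     */
    static double[][][] estimate(double[][] data, int[] labels, int numClasses) {
        int m = data[0].length;
        double[][][] params = new double[numClasses][m][2];
        for (int i = 0; i < numClasses; i++) {
            for (int j = 0; j < m; j++) {
                // Mean of attribute j within class i.
                double sum = 0;
                int n = 0;
                for (int r = 0; r < data.length; r++) {
                    if (labels[r] == i) {
                        sum += data[r][j];
                        n++;
                    }
                }
                double mu = sum / n;
                // Population standard deviation of attribute j within class i.
                double sq = 0;
                for (int r = 0; r < data.length; r++) {
                    if (labels[r] == i) {
                        sq += (data[r][j] - mu) * (data[r][j] - mu);
                    }
                }
                params[i][j][0] = mu;
                params[i][j][1] = Math.sqrt(sq / n);
            }
        }
        return params;
    }

    public static void main(String[] args) {
        double[][] data = {{1.0, 10.0}, {3.0, 14.0}, {5.0, 20.0}, {7.0, 30.0}};
        int[] labels = {0, 0, 1, 1};
        // prints [[[2.0, 1.0], [12.0, 2.0]], [[6.0, 1.0], [25.0, 5.0]]]
        System.out.println(java.util.Arrays.deepToString(estimate(data, labels, 2)));
    }
}
```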

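Input: an ARFF dataset.

Output: the prediction accuracy of the NB algorithm on nominal data and on numerical data, both measured on the same data used for training.

Optimization objective: possibly none.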

Code:

package knn5;

import java.io.FileReader;

import java.util.Arrays;

import weka.core.*;

public class NaiveBayes {

private class GaussianParamters{

double mu;

double sigma;

public GaussianParamters(double paraMu, double paraSigma) {

mu = paraMu;

sigma = paraSigma;

}//Of the constructor

public String toString() {

return "(" + mu + ", " + sigma + ")";

}// Of toString

}// Of GaussianParamters

Instances dataset;

int numClasses;

int numInstances;

int numConditions;

int[] predicts;

double[] classDistribution;

double[] classDistributionLaplacian;

double[][][] conditionalCounts;

double[][][] conditionalProbabilitiesLaplacian;

GaussianParamters[][] gaussianParameters;

int dataType;

public static final int NOMINAL = 0;

public static final int NUMERICAL = 1;

public NaiveBayes(String paraFilename) {

dataset = null;

try {

FileReader fileReader = new FileReader(paraFilename);

dataset = new Instances(fileReader);

fileReader.close();

}catch(Exception ee) {

System.out.println("Cannot read the file: " + paraFilename + "\r\n" + ee);

System.exit(0);

}//Of try

dataset.setClassIndex(dataset.numAttributes() - 1);

numConditions = dataset.numAttributes() - 1;

numInstances =dataset.numInstances();

numClasses = dataset.attribute(numConditions).numValues();

}// Of the constructor

public NaiveBayes(Instances paraInstances) {

dataset = paraInstances;

dataset.setClassIndex(dataset.numAttributes() - 1);

numConditions = dataset.numAttributes() - 1;

numInstances = dataset.numInstances();

numClasses = dataset.attribute(numConditions).numValues();

}// Of the constructor

public void setDataType(int paraDataType) {

dataType = paraDataType;

}// Of setDataType

public void calculateClassDistribution() {

classDistribution = new double[numClasses];

classDistributionLaplacian = new double[numClasses];

double[]tempCounts = new double[numClasses];

for(int i = 0;i < numInstances; i++) {

int tempClassValue = (int)dataset.instance(i).classValue();

tempCounts[tempClassValue]++;

}//Of for i

for(int i = 0; i < numClasses; i++) {

classDistribution[i] = tempCounts[i]/numInstances;

classDistributionLaplacian[i] = (tempCounts[i] + 1)/(numInstances + numClasses);

}//Of for i

System.out.println("Class distribution: " + Arrays.toString(classDistribution));

System.out.println("Class distribution Laplacian: " + Arrays.toString(classDistributionLaplacian));

}// Of calculateClassDistribution

public void calculateConditionalProbabilities() {

conditionalCounts = new double[numClasses][numConditions][];

conditionalProbabilitiesLaplacian = new double[numClasses][numConditions][];

for(int i = 0; i < numClasses; i++) {

for(int j = 0; j < numConditions; j++) {

int tempNumValues = (int)dataset.attribute(j).numValues() ;

conditionalCounts[i][j] = new double[tempNumValues];

conditionalProbabilitiesLaplacian[i][j] = new double[tempNumValues];

}//Of for j

}//Of for i

int[] tempClassCounts = new int[numClasses];

for(int i = 0; i < numInstances; i++) {

int tempClass = (int)dataset.instance(i).classValue();

tempClassCounts[tempClass] ++;

for(int j = 0; j < numConditions; j++) {

int tempValue = (int)dataset.instance(i).value(j);

conditionalCounts[tempClass][j][tempValue]++;

}//Of for j

}//Of for i

for(int i = 0; i < numClasses; i++) {

for(int j = 0; j < numConditions; j++) {

int tempNumValues = (int)dataset.attribute(j).numValues() ;

for(int k = 0; k < tempNumValues; k++) {

conditionalProbabilitiesLaplacian[i][j][k] = (conditionalCounts[i][j][k] + 1)

/ (tempClassCounts[i] + tempNumValues);

}// Of for k

} // Of for j

} // Of for i

System.out.println("Conditional probabilities: " + Arrays.deepToString(conditionalCounts));

}// Of calculateConditionalProbabilities

public void calculateGausssianParameters() {

gaussianParameters = new GaussianParamters[numClasses][numConditions];

double[] tempValuesArray = new double[numInstances];

int tempNumValues = 0;

double tempSum = 0;

for (int i = 0; i < numClasses; i++) {

for (int j = 0; j < numConditions; j++) {

tempSum = 0;

tempNumValues = 0;

for (int k = 0; k < numInstances; k++) {

if ((int) dataset.instance(k).classValue() != i) {

continue;

} // Of if

tempValuesArray[tempNumValues] = dataset.instance(k).value(j);

tempSum += tempValuesArray[tempNumValues];

tempNumValues++;

} // Of for k

double tempMu = tempSum / tempNumValues;

double tempSigma = 0;

for (int k = 0; k < tempNumValues; k++) {

tempSigma += (tempValuesArray[k] - tempMu) * (tempValuesArray[k] - tempMu);

} // Of for k

tempSigma /= tempNumValues;

tempSigma = Math.sqrt(tempSigma);

gaussianParameters[i][j] = new GaussianParamters(tempMu, tempSigma);

} // Of for j

} // Of for i

System.out.println(Arrays.deepToString(gaussianParameters));

}// Of calculateGausssianParameters

public void classify() {

predicts = new int[numInstances];

for(int i = 0; i < numInstances; i++) {

predicts[i] = classify(dataset.instance(i));

}//Of for i

}//Of classify

public int classify(Instance paraInstance) {

if(dataType == NOMINAL) {

return classifyNominal(paraInstance);

}else if(dataType == NUMERICAL) {

return classifyNumerical(paraInstance);

}//Of if

return -1;

}//Of classify

public int classifyNominal(Instance paraInstance) {

double tempBiggest = -10000;

int resultBestIndex = 0;

for(int i = 0; i < numClasses; i++) {

double tempClassProbabilityLaplacian = Math.log(classDistributionLaplacian[i]);

double tempPseudoProbability = tempClassProbabilityLaplacian;

for (int j = 0; j < numConditions; j++) {

int tempAttributeValue = (int) paraInstance.value(j);

tempPseudoProbability += Math.log(conditionalCounts[i][j][tempAttributeValue])

- tempClassProbabilityLaplacian;

} // Of for j

if (tempBiggest < tempPseudoProbability) {

tempBiggest = tempPseudoProbability;

resultBestIndex = i;

} // Of if

} // Of for i

return resultBestIndex;

}// Of classifyNominal

public int classifyNumerical(Instance paraInstance) {

double tempBiggest = -10000;

int resultBestIndex = 0;

for (int i = 0; i < numClasses; i++) {

double tempClassProbabilityLaplacian = Math.log(classDistributionLaplacian[i]);

double tempPseudoProbability = tempClassProbabilityLaplacian;

for (int j = 0; j < numConditions; j++) {

double tempAttributeValue = paraInstance.value(j);

double tempSigma = gaussianParameters[i][j].sigma;

double tempMu = gaussianParameters[i][j].mu;

tempPseudoProbability += -Math.log(tempSigma) - (tempAttributeValue - tempMu)

* (tempAttributeValue - tempMu) / (2 * tempSigma * tempSigma);

} // Of for j

if (tempBiggest < tempPseudoProbability) {

tempBiggest = tempPseudoProbability;

resultBestIndex = i;

} // Of if

} // Of for i

return resultBestIndex;

}// Of classifyNumerical

public double computeAccuracy() {

double tempCorrect = 0;

for (int i = 0; i < numInstances; i++) {

if (predicts[i] == (int) dataset.instance(i).classValue()) {

tempCorrect++;

} // Of if

} // Of for i

double resultAccuracy = tempCorrect / numInstances;

return resultAccuracy;

}// Of computeAccuracy

public static void testNominal() {

System.out.println("Hello, Naive Bayes. I only want to test the nominal data.");

String tempFilename = "C:\\\\Users\\\\ASUS\\\\Desktop\\\\文件\\\\weather.arff";

NaiveBayes tempLearner = new NaiveBayes(tempFilename);

tempLearner.setDataType(NOMINAL);

tempLearner.calculateClassDistribution();

tempLearner.calculateConditionalProbabilities();

tempLearner.classify();

System.out.println("The accuracy is: " + tempLearner.computeAccuracy());

}// Of testNominal

public static void testNumerical() {

System.out.println("Hello, Naive Bayes. I only want to test the numerical data with Gaussian assumption.");

String tempFilename = "C:\\\\Users\\\\ASUS\\\\Desktop\\\\文件\\\\iris.arff";

NaiveBayes tempLearner = new NaiveBayes(tempFilename);

tempLearner.setDataType(NUMERICAL);

tempLearner.calculateClassDistribution();

tempLearner.calculateGausssianParameters();

tempLearner.classify();

System.out.println("The accuracy is: " + tempLearner.computeAccuracy());

}// Of testNumerical

public static void main(String[] args) {

testNominal();

testNumerical();

}// Of main

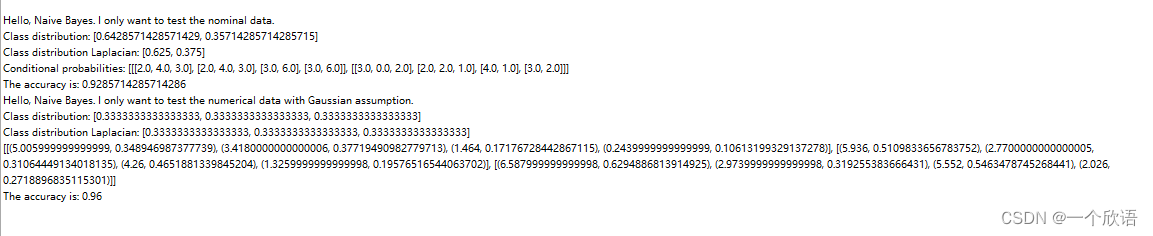

}// Of class NaiveBayes运行截图:
