ref <视觉SLAM十四讲从理论到实践 第二版>

5.1.相机模型

5.1.1.针孔模型

- 真实坐标->成像坐标: 相似

- X ′ = f X Z Y ′ = f Y Z \begin{aligned}X^{\prime} &=f \frac{X}{Z} \\Y^{\prime} &=f \frac{Y}{Z}\end{aligned} X′Y′=fZX=fZY

- 成像坐标->像素坐标: 相差一个缩放和一个原点的平移

- u = α X ′ + c x v = β Y ′ + c y \begin{array}{l}u=\alpha X^{\prime}+c_{x} \\v=\beta Y^{\prime}+c_{y}\end{array} u=αX′+cxv=βY′+cy

- 真实坐标->像素坐标: f(米) x α(像素/米) = f_x(像素)

- u = f x X Z + c x v = f y Y Z + c y \begin{array}{l}u=f_{x} \frac{X}{Z}+c_{x} \\v=f_{y} \frac{Y}{Z}+c_{y}\end{array} u=fxZX+cxv=fyZY+cy

-

Z

(

u

v

1

)

=

(

f

x

0

c

x

0

f

y

c

y

0

0

1

)

(

X

Y

Z

)

=

def

K

P

Z\left(\begin{array}{l}u \\v \\1\end{array}\right)=\left(\begin{array}{ccc}f_{x} & 0 & c_{x} \\0 & f_{y} & c_{y} \\0 & 0 & 1\end{array}\right)\left(\begin{array}{c}X \\Y \\Z\end{array}\right) \stackrel{\text { def }}{=} \boldsymbol{K}\boldsymbol{P}

Z⎝

⎛uv1⎠

⎞=⎝

⎛fx000fy0cxcy1⎠

⎞⎝

⎛XYZ⎠

⎞= def KP

- 内参矩阵K, 出厂后固定的

-

Z

P

u

v

=

Z

[

u

v

1

]

=

K

(

R

P

w

+

t

)

=

K

T

P

w

Z \boldsymbol{P}_{u v}=Z\left[\begin{array}{c}u \\v \\1\end{array}\right]=\boldsymbol{K}\left(\boldsymbol{R} \boldsymbol{P}_{\mathrm{w}}+\boldsymbol{t}\right)=\boldsymbol{K} \boldsymbol{T} \boldsymbol{P}_{\mathrm{w}}

ZPuv=Z⎣

⎡uv1⎦

⎤=K(RPw+t)=KTPw

- P的相机坐标是其世界坐标 P w P_w Pw根据相机当前位姿变换到相机坐标系的结果

- 内参: 描述像素比, 焦距等信息

- 标定: 自己确定内参

- 外参: 相机的位姿Rt, 随着运动发生变化

- 归一化: 一个世界坐标点转换到相机坐标系, 除掉了最后一维数值

- 归一化平面: z=1

- 归一化坐标: 看成相机前方z=1平面的一个点

- 点的深度在投影中被丢失, 单目视觉不能得到深度值

5.1.2.畸变模型

- 径向畸变: 透镜形状引起的畸变

- 桶形畸变: 与光轴距离↑, 放大率↓

- 枕型畸变: 相反

- 穿过图像中心和光轴有交点的直线还能保持形状不变

- 切向畸变: 相机组装过程不能使透镜与成像面严格平行

- 坐标点沿切线方向发生了变化

- 对相机坐标系的一点P, 可通过5个畸变系数

径向

k

1

k

2

k

3

切向

p

1

p

2

径向k_1k_2k_3切向p_1p_2

径向k1k2k3切向p1p2找到该点的正确位置

- 三维空间点投影到归一化图像平面, 归一化坐标 [ x , y ] T [x,y]^T [x,y]T

- { x distorted = x ( 1 + k 1 r 2 + k 2 r 4 + k 3 r 6 ) + 2 p 1 x y + p 2 ( r 2 + 2 x 2 ) y distorted = y ( 1 + k 1 r 2 + k 2 r 4 + k 3 r 6 ) + p 1 ( r 2 + 2 y 2 ) + 2 p 2 x y \left\{\begin{array}{l}x_{\text {distorted }}=x\left(1+k_{1} r^{2}+k_{2} r^{4}+k_{3} r^{6}\right)+2 p_{1} x y+p_{2}\left(r^{2}+2 x^{2}\right) \\y_{\text {distorted }}=y\left(1+k_{1} r^{2}+k_{2} r^{4}+k_{3} r^{6}\right)+p_{1}\left(r^{2}+2 y^{2}\right)+2 p_{2} x y\end{array}\right. {xdistorted =x(1+k1r2+k2r4+k3r6)+2p1xy+p2(r2+2x2)ydistorted =y(1+k1r2+k2r4+k3r6)+p1(r2+2y2)+2p2xy

- 畸变后的点通过内参矩阵投影到像素平面, 得到在图像上的正确位置 { u = f x x d i s t o r t e d + c x v = f y y d i s t o r t e d + c y \left\{\begin{array}{l}u=f_xx_{\rm distorted}+c_x\\v=f_yy_{\rm distorted}+c_y\end{array}\right. {u=fxxdistorted+cxv=fyydistorted+cy

单目相机的成像过程

- 世界坐标系下有一个固定点 P P P, 世界坐标 P w P_w Pw

- 相机在运动, 由变换矩阵 T ∈ S E ( 3 ) T\in {\rm SE}(3) T∈SE(3)描述, P P P的相机坐标 P ~ c = R P w + t \tilde{P}_c=RP_w+t P~c=RPw+t

- P ~ c ( X , Y , Z ) \tilde{P}_c(X,Y,Z) P~c(X,Y,Z)投影到归一化平面 Z = 1 Z=1 Z=1, 得归一化坐标 P c = [ X / Z , Y / Z , 1 ] T P_c=[X/Z,Y/Z,1]^T Pc=[X/Z,Y/Z,1]T

- 有畸变时, 据畸变参数计算 P c P_c Pc发生畸变后的坐标

- 归一化坐标经内参, 对应到像素坐标 P u v = K P c P_{uv}=KP_c Puv=KPc

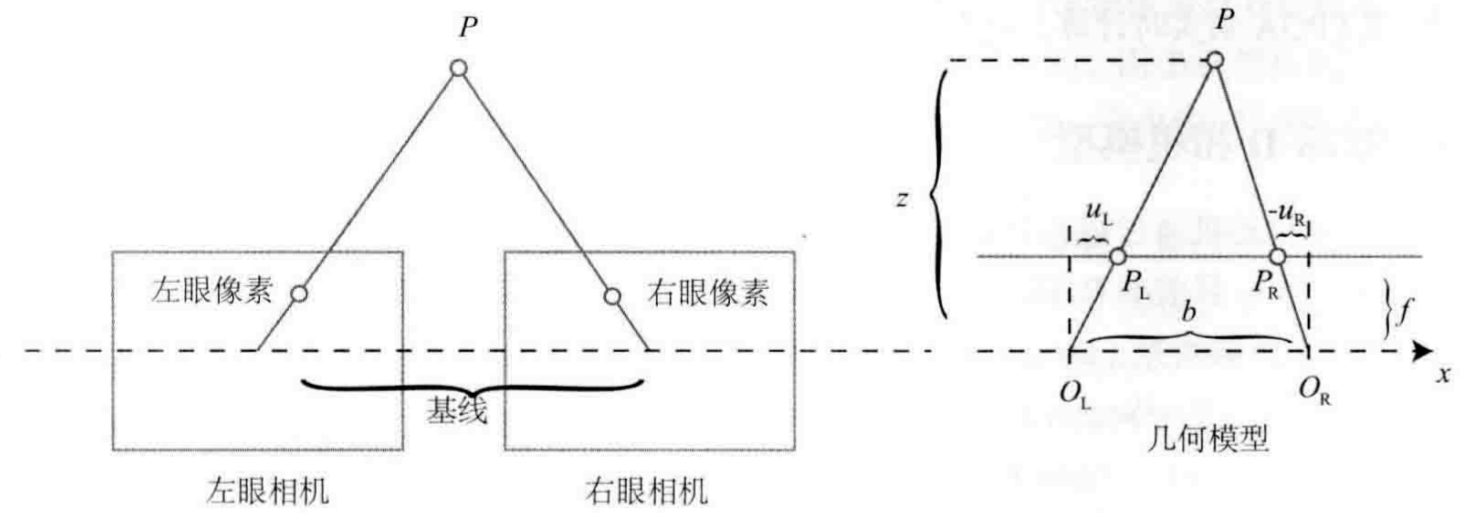

5.1.3.双目相机模型

- 通常左眼与右眼相机水平放置组成

- 光新都在x轴, 坐标仅在x轴上有差异

- z = f b d , d = u L − u R z=\frac{fb}{d}, d=u_L-u_R z=dfb,d=uL−uR

- 根据视差d可估计出一个像素与相机之间的距离, 视差↑距离↓

- 视差最小一像素, 存在理论最大值,fb决定

- 计算量大, 需用GPU/FPGA

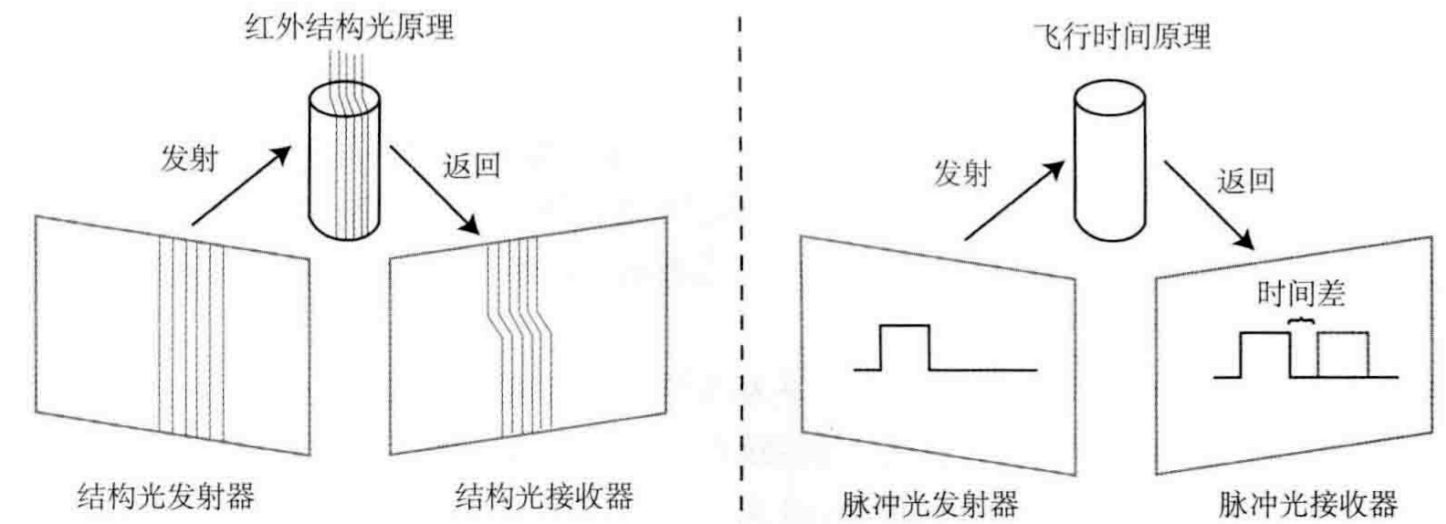

5.1.4.RGB-D模型

- 红外结构光

- 向探测目标发射一束光线(红外光), 据返回结构光图案计算物体与自身距离

- 飞行时间ToF

- 发射脉冲光, 据发送到返回之间光束飞行时间确定物体与自身距离

- 输出对应彩色图与深度图, 可在图像层/点云层处理数据

- 缺点

- 易受日光/其他传感器的红外光干扰, 不能在室外使用

- 投射材质无法测量

- 成本高,功耗大

图像

- 灰度图: 对一个xy处的像素

unsigned char pixel = image[y][x];- 像素0~255

- 深度16位0~65535

- 彩色24位, BGR

实现

#include <iostream>

#include <chrono>

using namespace std;

#include <eigen3/Eigen/Core>

using namespace Eigen;

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/calib3d.hpp>

#include <sophus/types.hpp>

#include "sophus/se3.hpp"

#include <pangolin/pangolin.h>

#include <vector>

#include <unistd.h>

#include <boost/format.hpp>

typedef Eigen::Matrix<double, 6, 1> Vector6d;

typedef vector<Sophus::SE3d, Eigen::aligned_allocator<Sophus::SE3d>> TrajectoryType;

char img1[] = "./Firefox_wallpaper.png";

char img2[] = "./distorted.png";

char imgL[] = "./left.png";

char imgR[] = "./right.png";

int CV_Base(const char* img);

int CV_UndistortImage(const char* img);

int StereoVision(const char * imgL, const char * imgR);

void showPointCloud(const vector<Vector4d, Eigen::aligned_allocator<Vector4d>> &pointcloud);

void showPointCloud6(const vector<Vector6d, Eigen::aligned_allocator<Vector6d>> &pointcloud);

void jointMap();

int main(int argc, char **argv) {

// CV_Base(img1);

// CV_UndistortImage(img2);

// StereoVision(imgL,imgR);

jointMap();

return 0;

}

int CV_Base(const char* img)

{

cv::Mat image;

image = cv::imread(img);

if(image.data == nullptr)

{

cerr << "file:" << img1 << "can not find" << endl;

return 0;

}

cout << "\nimage.width: " << image.cols << "\nimage.height: " << image.rows << "\nimage.channels: " << image.channels() << endl;

cv::imshow("img",image);

cv::waitKey(0);

if(image.type() != CV_8UC1 && image.type() != CV_8UC3)

{

cout << "\n please input a colorful or grey image" << endl;

return 0;

}

chrono::steady_clock::time_point t1 = chrono::steady_clock::now();

for(size_t y=0;y<image.rows;y++)

{

unsigned char *row_ptr = image.ptr<unsigned char>(y);

for(size_t x=0;x<image.cols;x++)

{

unsigned char *data_ptr = &row_ptr[x*image.channels()];

for(int c=0;c!=image.channels();c++)

{

unsigned char data = data_ptr[c];

}

}

}

chrono::steady_clock::time_point t2 = chrono::steady_clock::now();

chrono::duration<double> time_used = chrono::duration_cast < chrono::duration <double >> (t2-t1);

cout << "time use: " << time_used.count() << "s" << endl;

cv::Mat image_another = image;

image_another(cv::Rect(0,0,100,100)).setTo(0);

cv::imshow("img2",image);

cv::waitKey(0);

cv::Mat image_clone = image.clone();

image_clone(cv::Rect(0,0,100,100)).setTo(255);

cv::imshow("img3",image);

cv::imshow("img4",image_clone);

cv::waitKey(0);

cv::destroyAllWindows();

return 0;

}

int CV_UndistortImage(const char* img)

{

double k1 = -0.28340811, k2 = 0.07395907, p1 = 0.00019359, p2 = 1.76187114e-05;

double fx = 458.654, fy = 457.296, cx = 367.215, cy = 248.375;

cv::Mat image = cv::imread(img, 0);

int rows = image.rows, cols = image.cols;

cv::Mat image_undistort = cv::Mat(rows, cols, CV_8UC1);

for(int v=0; v<rows; v++)

{

for(int u=0; u<cols; u++)

{

double x = (u-cx)/fx, y = (v-cy)/fy;

double r = sqrt(x*x+y*y);

double x_distorted = x*(1 + k1*r*r + k2*r*r*r*r) + 2*p1*x*y + p2*(r*r + 2*x*x);

double y_distorted = y*(1 + k1*r*r + k2*r*r*r*r) + p1*(r*r + 2*y*y) + 2*p2*x*y;

double u_distorted = fx * x_distorted + cx;

double v_distorted = fy * y_distorted + cy;

if(u_distorted >= 0 && u_distorted <= cols && v_distorted >= 0 && u_distorted <= rows)

{

image_undistort.at<uchar>(v,u) = image.at<uchar>((int)v_distorted, (int)u_distorted);

}

else

{

image_undistort.at<uchar>(v,u) = 0;

}

}

}

cv::imshow("distorted", image);

cv::imshow("undistorted", image_undistort);

cv::waitKey();

}

int StereoVision(const char * imgL, const char * imgR)

{

double fx = 718.856, fy = 718.856, cx = 607.1928, cy = 185.2157;

double b = 0.573;

cv::Mat left = cv::imread(imgL,0);

cv::Mat right = cv::imread(imgR,0);

cv::Ptr<cv::StereoSGBM> sgbm = cv::StereoSGBM::create(0,96,9,8*9*9,32*9*9,1,63,10,100,32);

cv::Mat disparity_sgbm, disparity;

sgbm->compute(left,right,disparity_sgbm);

disparity_sgbm.convertTo(disparity, CV_32F, 1.0/16.0f);

vector<Vector4d, Eigen::aligned_allocator<Vector4d>> pointcloud;

for(int v=0; v<left.rows; v++)

{

for(int u=0; u<left.cols; u++)

{

if(disparity.at<float>(v,u) <= 10.0 || disparity.at<float>(v,u) >= 96.0)

continue;

Vector4d point(0,0,0,left.at<uchar>(v,u)/255.0);

double x = (u-cx)/fx;

double y = (v-cy)/fy;

double depth = fx * b / (disparity.at<float>(v,u));

point[0] = x * depth;

point[1] = y * depth;

point[2] = depth;

pointcloud.push_back(point);

}

}

cv::imshow("disparity", disparity / 96.0);

cv::waitKey(0);

showPointCloud(pointcloud);

}

void showPointCloud(const vector<Vector4d, Eigen::aligned_allocator<Vector4d>> &pointcloud) {

if (pointcloud.empty()) {

cerr << "Point cloud is empty!" << endl;

return;

}

pangolin::CreateWindowAndBind("Point Cloud Viewer", 1024, 768);

glEnable(GL_DEPTH_TEST);

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

pangolin::OpenGlRenderState s_cam(

pangolin::ProjectionMatrix(1024, 768, 500, 500, 512, 389, 0.1, 1000),

pangolin::ModelViewLookAt(0, -0.1, -1.8, 0, 0, 0, 0.0, -1.0, 0.0)

);

pangolin::View &d_cam = pangolin::CreateDisplay()

.SetBounds(0.0, 1.0, pangolin::Attach::Pix(175), 1.0, -1024.0f / 768.0f)

.SetHandler(new pangolin::Handler3D(s_cam));

while (pangolin::ShouldQuit() == false) {

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

d_cam.Activate(s_cam);

glClearColor(1.0f, 1.0f, 1.0f, 1.0f);

glPointSize(2);

glBegin(GL_POINTS);

for (auto &p: pointcloud) {

glColor3f(p[3], p[3], p[3]);

glVertex3d(p[0], p[1], p[2]);

}

glEnd();

pangolin::FinishFrame();

usleep(5000); // sleep 5 ms

}

return;

}

void jointMap()

{

vector<cv::Mat> colorImgs, depthImgs;

TrajectoryType poses;

ifstream fin("./pose.txt");

if(!fin)

{

cerr << "no pose.txt" << endl;

return ;

}

for(int i=0; i<5; i++)

{

boost::format fmt("./%s/%d.%s");

colorImgs.push_back(cv::imread((fmt % "color" % (i+1) % "png").str()));

depthImgs.push_back(cv::imread((fmt % "depth" % (i+1) % "pgm").str(),-1));

double data[7] = {0};

for(auto &d:data) fin >> d;

Sophus::SE3d pose(Eigen::Quaterniond(data[6],data[3],data[4],data[5]),Eigen::Vector3d(data[0],data[1],data[2]));

poses.push_back(pose);

}

double cx = 325.5;

double cy = 253.5;

double fx = 518.0;

double fy = 519.0;

double depthScale = 1000.0;

vector<Vector6d, Eigen::aligned_allocator<Vector6d>> pointcloud;

pointcloud.reserve(1000000);

for(int i=0; i<5; i++)

{

cout << "transforming" << i+1 << endl;

cv::Mat color = colorImgs[i];

cv::Mat depth = depthImgs[i];

Sophus::SE3d T = poses[i];

for(int v=0; v<color.rows; v++)

{

for(int u=0; u<color.cols; u++)

{

unsigned int d = depth.ptr<unsigned short>(v)[u];

if(d==0)continue;

Eigen::Vector3d point;

point[2] = double(d) / depthScale;

point[0] = (u-cx) * point[2] / fx;

point[1] = (v-cy) * point[2] / fy;

Eigen::Vector3d pointWorld = T*point;

Vector6d p;

p.head<3>() = pointWorld;

p[5] = color.data[v*color.step + u*color.channels()];

p[4] = color.data[v*color.step + u*color.channels() + 1];

p[3] = color.data[v*color.step + u*color.channels() + 2];

pointcloud.push_back(p);

}

}

}

cout << "\npoints: " << pointcloud.size() << endl;

showPointCloud6(pointcloud);

}

void showPointCloud6(const vector<Vector6d, Eigen::aligned_allocator<Vector6d>> &pointcloud) {

if (pointcloud.empty()) {

cerr << "Point cloud is empty!" << endl;

return;

}

pangolin::CreateWindowAndBind("Point Cloud Viewer", 1024, 768);

glEnable(GL_DEPTH_TEST);

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

pangolin::OpenGlRenderState s_cam(

pangolin::ProjectionMatrix(1024, 768, 500, 500, 512, 389, 0.1, 1000),

pangolin::ModelViewLookAt(0, -0.1, -1.8, 0, 0, 0, 0.0, -1.0, 0.0)

);

pangolin::View &d_cam = pangolin::CreateDisplay()

.SetBounds(0.0, 1.0, pangolin::Attach::Pix(175), 1.0, -1024.0f / 768.0f)

.SetHandler(new pangolin::Handler3D(s_cam));

while (pangolin::ShouldQuit() == false) {

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

d_cam.Activate(s_cam);

glClearColor(1.0f, 1.0f, 1.0f, 1.0f);

glPointSize(2);

glBegin(GL_POINTS);

for (auto &p: pointcloud) {

glColor3d(p[3] / 255.0, p[4] / 255.0, p[5] / 255.0);

glVertex3d(p[0], p[1], p[2]);

}

glEnd();

pangolin::FinishFrame();

usleep(5000); // sleep 5 ms

}

return;

}

cmake_minimum_required(VERSION 2.6)

project(test_cv)

find_package(OpenCV REQUIRED)

find_package(Pangolin REQUIRED)

find_package(Sophus REQUIRED)

include_directories("/usr/include/eigen3")

include_directories(${Sophus_INCLUDE_DIRS})

add_executable(test_cv main.cpp)

target_link_libraries(test_cv ${OpenCV_LIBS} ${Pangolin_LIBRARIES} fmt::fmt)

593

593

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?