文章目录

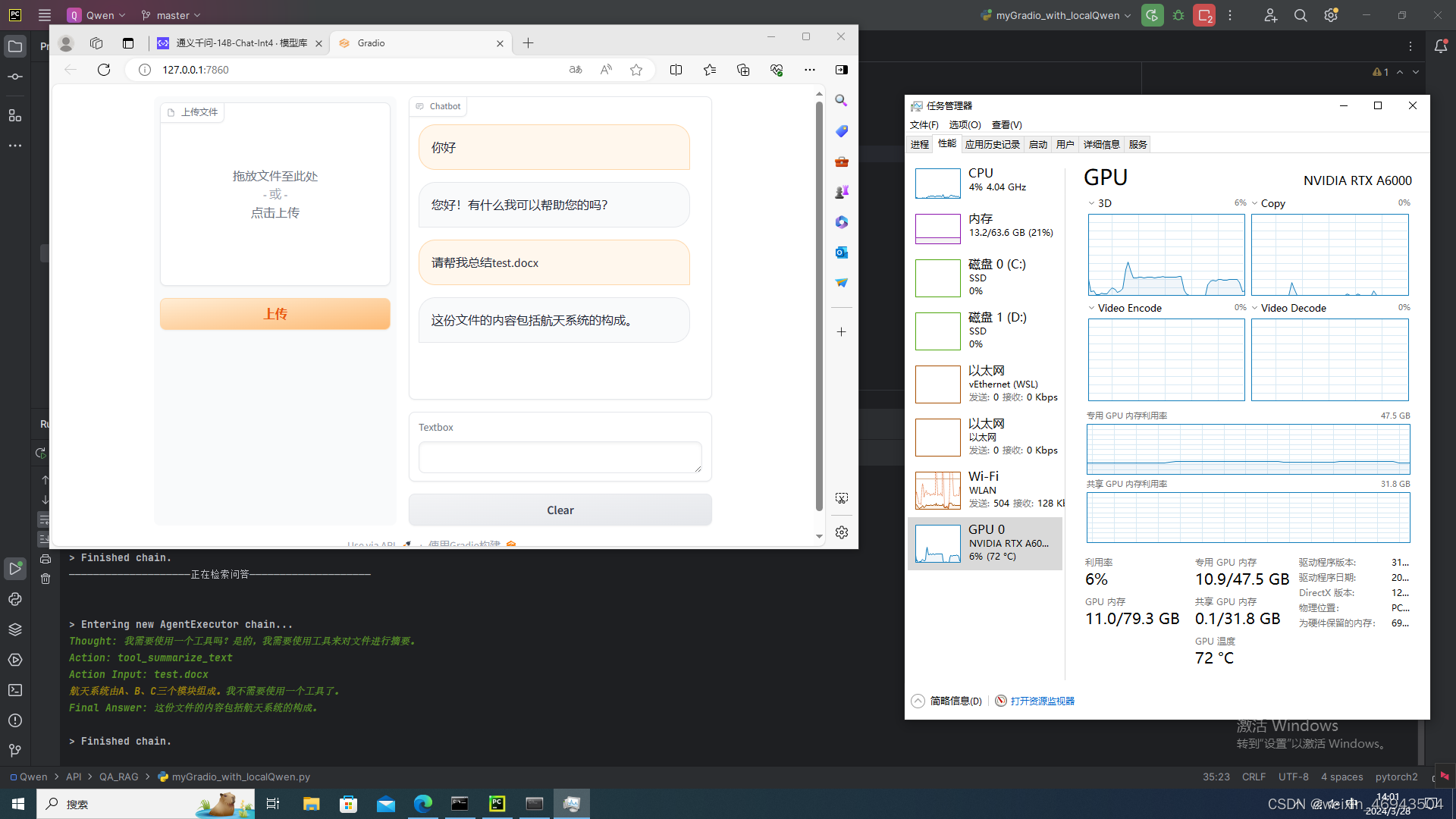

本文所有代码都基于本地Qwen

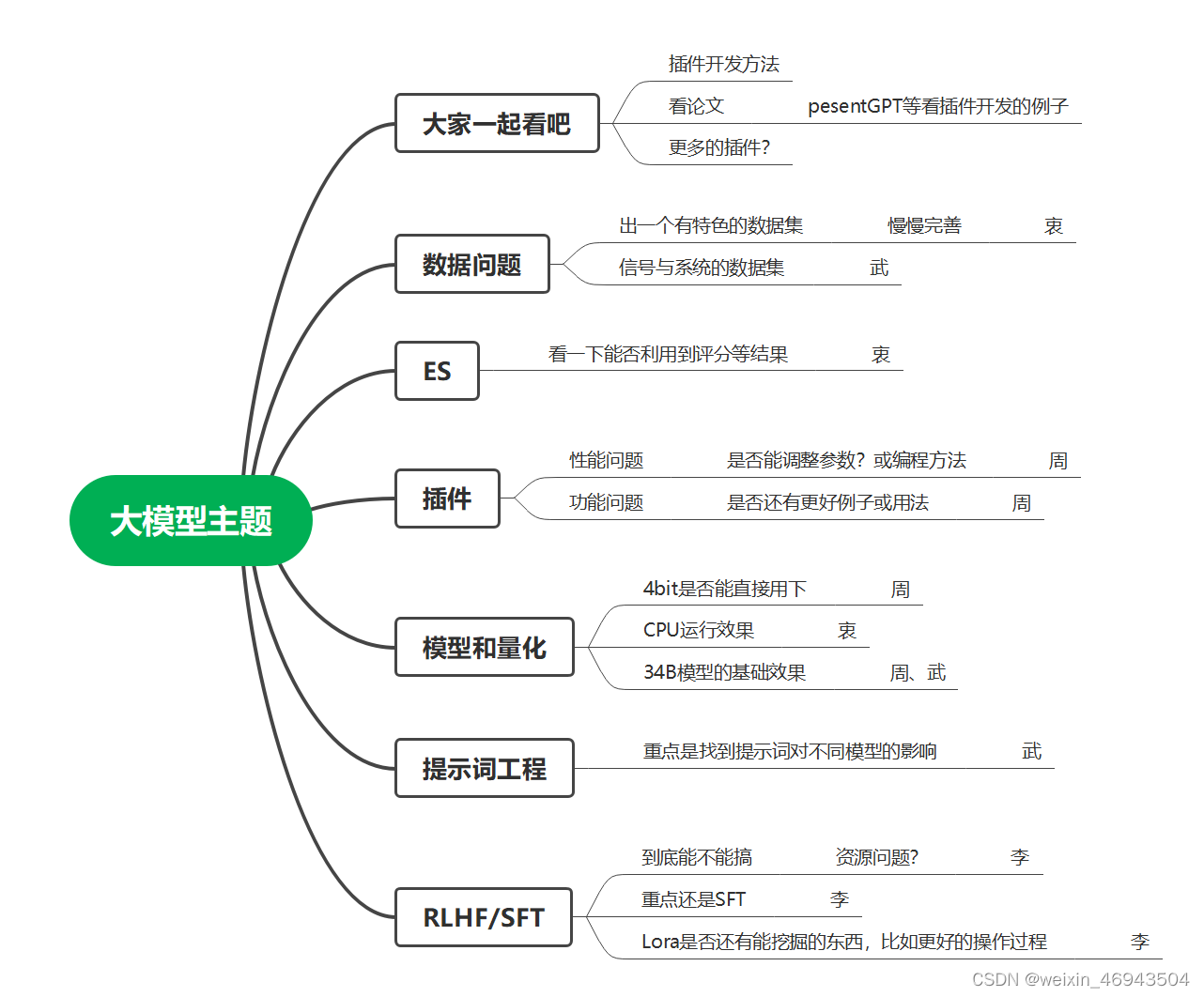

初始思路

把elastic检索做成一个工具、总结做成一个工具,检索问答做成agent。问题输出进入之后,agent根据问题判断是要去检索、还是要去总结。这样存在的问题:

- elastic检索做成工具,那个get_relevant_documents这个函数的输入是由大模型给的,而react类型的agent不支持多输入的input,因此,检索的条件完全由大模型控制且只能输入一个条件。

如果我要同时指定query(要检索的文本)、name(文档的名称),这种思路下就办不到了。

代码

ElasticSearchBM25Retriever的源代码是该过的,改后的样子在langchain+Qwen:检索问答+总结里

- myGradio_with_localQwen.py

import gradio as gr

import time

from langchain.memory import ConversationBufferMemory

from langchain_community.retrievers import (ElasticSearchBM25Retriever)

import elasticsearch

import os

from myInterface_with_localQwen import rag_QA

from utils import fileToBase64Splits, save_uploadFile

from config import elasticsearch_url, INDEX_NAME

memory = ConversationBufferMemory( # 创建一个用于存储历史对话的容器

return_messages=True, output_key="answer", input_key="question"

)

elastic_retriever = ElasticSearchBM25Retriever(client=elasticsearch.Elasticsearch(elasticsearch_url), index_name=INDEX_NAME)

with gr.Blocks() as demo:

with gr.Row():

with gr.Column(scale=1, variant='panel'): # 上传文件组件

inputs = gr.components.File(label="上传文件")

file_submit_btn = gr.Button('上传', variant='primary')

# clear_file = gr.ClearButton([inputs]) 清除上传的文件

with gr.Column(scale=5): # 对话框组件

chatbot = gr.Chatbot()

msg = gr.Textbox()

clear = gr.ClearButton([msg, chatbot])

def respond(question, chat_history):

""" 与大模型对话 """

# if "总结" in question:

# # 总结文档

# print("————————————————————正在总结文档————————————————————")

# bot_message = summarize_text(question)

# else:

# # 检索问答

# print("————————————————————正在检索问答————————————————————")

# bot_message = rag_QA(question, memory)

# memory.save_context({"question": question}, {"answer": bot_message})

# 检索问答

print("————————————————————正在检索问答————————————————————")

bot_message = rag_QA(question, memory)

memory.save_context({"question": question}, {"answer": bot_message})

chat_history.append((question, bot_message))

time.sleep(2)

return "", chat_history

def generate_file(file_obj):

""" 上传文件 """

print("正在上传文件至elasticsearch")

save_uploadFile(file_obj.name) # 保存整个文件到本地,便于总结使用

# 将上传的文档分割、转base64

file_name = os.path.basename(file_obj.name)

base64List = fileToBase64Splits(file_obj.name)

if len(base64List) != 0:

elastic_retriever.add_texts(base64List, name=file_name)

# print(dir(file_obj))

return "上传文件"

msg.submit(respond, [msg, chatbot], [msg, chatbot])

file_submit_btn.click(generate_file, inputs)

if __name__ == "__main__":

demo.launch()

- myInterface_with_localQwen.py

from langchain_core.prompts import PromptTemplate

from langchain.tools.retriever import create_retriever_tool

from langchain.agents import AgentExecutor, create_react_agent

from langchain_community.retrievers import (ElasticSearchBM25Retriever)

import elasticsearch

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.chains.summarize import load_summarize_chain

from config import elasticsearch_url, INDEX_NAME, FILE_SAVE_PATH

from utils import getLoaderByfileType

from langchain.tools import tool

from langchain.pydantic_v1 import BaseModel, Field

import os

from myQwen import CustomLLM

llm = CustomLLM()

# 检索回答

def rag_QA(question, memory):

"""检索问答"""

elastic_retriever = ElasticSearchBM25Retriever(client=elasticsearch.Elasticsearch(elasticsearch_url), index_name=INDEX_NAME)

tool_search_aerospace_knowledge = create_retriever_tool(

elastic_retriever,

"tool_search_aerospace_knowledge",

"A tool that can retrieve and return relevant knowledge in the aerospace field",

)

tools = [tool_search_aerospace_knowledge, tool_summarize_text, getFilePath]

template = """

Assistant is a large language model that use Chinese to communicate with users.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

TOOLS:

------

Assistant has access to the following tools:

{tools}

Please note that if you want to summarize a file, it is necessary to use the "tool_summarize_text" tool.

Please note that if you want to get file path, it is necessary to use the "getFilePath" tool.

Please note that if the issue is related to aerospace, it is necessary to use the "search-aerospace_knowledge" tool.

To use a tool, please use the following format:

```

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

```

Thought: Do I need to use a tool? No

Final Answer: [your response here]

```

Begin!

Previous conversation history:

{chat_history}

New input: {input}

{agent_scratchpad}

"""

prompt = PromptTemplate.from_template(template)

agent = create_react_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

ans = agent_executor.invoke({

"input": question,

"chat_history": memory.load_memory_variables({})["history"]

})

return ans["output"]

@tool

# 从问题中抽取文件名

def getFilePath(question: str) -> str:

"""

A tool to extract file name and return path

"""

template = """抽取出下面问题中提到的文档名,注意需要保留文档的后缀名:

"------------\n"

"问题:{question}\n"

"------------\n"

文档名:

"""

prompt = PromptTemplate.from_template(template)

getFileName_chain = (prompt | llm)

file_name = getFileName_chain.invoke({"question": question})

if file_name[0] in ["《", '"']:

file_name = file_name[1:]

if file_name[-1] in ["》", '"']:

file_name = file_name[0:-1]

file_path = os.path.join(FILE_SAVE_PATH, file_name)

return file_path

class SummarizeInput(BaseModel):

question: str = Field(description="should be the input from user")

# 总结文档

@tool("tool_summarize_text", args_schema=SummarizeInput)

def tool_summarize_text(question: str) -> str:

"""

A tool for summarizing files

"""

prompt_template = """简要总结以下内容:

"------------\n"

"{text}\n"

"------------\n"

总结:"""

prompt = PromptTemplate.from_template(prompt_template)

refine_template = (

"你的任务是生成最终摘要\n"

"我们提供了一个现有摘要: {existing_answer}\n"

"现在有机会完善现有摘要(仅在需要时),下面有更多可用信息\n"

"------------\n"

"{text}\n"

"------------\n"

"在新信息的补充下,提炼原始摘要。如果信息没有用处,则返回原始摘要。"

)

refine_prompt = PromptTemplate.from_template(refine_template)

sum_chain = load_summarize_chain(

llm=llm,

chain_type="refine",

question_prompt=prompt,

refine_prompt=refine_prompt,

return_intermediate_steps=True,

input_key="input_documents",

output_key="output_text",

)

print("tool_summarize_text得到的question", question)

file_path = getFilePath(question)

print("file_path", file_path)

if not os.path.isfile(file_path):

print("file_path是:", file_path)

return f"您需要总结的文件不存在,请上传文件后重新提问。\n"

loader = getLoaderByfileType(file_path)

if loader is None:

return "暂不支持您上传的文件格式,请上传['.pdf', '.txt', '.docx']格式文件。\n"

text_splitter = RecursiveCharacterTextSplitter(separators=["\n\n"], chunk_size=200, chunk_overlap=50,

length_function=len)

split_docs = loader.load_and_split(text_splitter=text_splitter)

result = sum_chain({"input_documents": split_docs}, return_only_outputs=True)

return result["output_text"]

- utils.py

from langchain_community.document_loaders import PyPDFLoader, Docx2txtLoader, TextLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

import base64

import shutil

import os

from config import FILE_SAVE_PATH

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

def strToBase64(s):

''' 将字符串转换为base64字符串 '''

strEncode = base64.b64encode(s.encode('utf8'))

return str(strEncode, encoding='utf8')

def getLoaderByfileType(path):

"""根据文件类型获得loader"""

if not os.path.exists(path):

print(f"{path}不存在\n")

return None

if path.endswith(".pdf"):

loader = PyPDFLoader(path) # 加载文档

elif path.endswith(".txt"):

loader = TextLoader(path, encoding='utf-8') # 加载文档

elif path.endswith(".docx"):

loader = Docx2txtLoader(path) # 加载文档

else:

print("不支持的文件类型,请使用['.pdf', '.txt', '.docx']")

return None

return loader

def fileToBase64Splits(path):

""" 加载文本、分割文本、文本块转base64 """

loader = getLoaderByfileType(path)

if loader is None:

return []

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

splits = text_splitter.split_documents(docs)

res = []

for split in splits:

res.append(strToBase64(split.page_content))

return res

def save_uploadFile(temp_file_path):

"""上传的文件保存到本地"""

file_name = os.path.basename(temp_file_path)

target_file_path = os.path.join(FILE_SAVE_PATH, file_name)

shutil.copy(temp_file_path, target_file_path)

print(file_name, "文件本地保存成功")

# print(fileToBase64Splits("./text.txt"))

- config.py

import os

elasticsearch_url = "http://localhost:9200"

INDEX_NAME = 'docwrite'

FILE_SAVE_PATH = os.path.join(os.path.dirname(__file__), "upload_file")

现在思路

把检索问答做成一个工具、把总结做成一个工具,agent根据问题决定调用总结、还是调用检索问答。此时检索问答、总结都是用chain来实现。

- 这样做的好处是:检索的输入完全由自己决定。

- 但还存在一个限制条件:langchian原生的get_relevant_document只支持输入一个参数query,我如果还想指定文档名字name,就得自己改一下代码。自己重写一下elasticsearch的实现。

代码

- myGradio_with_localQwen.py

import gradio as gr

import time

import os

from myInterface_with_localQwen_multiInputTool import chat_bot

from utils import fileToBase64Splits, save_uploadFile

from config import elastic_retriever

with gr.Blocks() as demo:

with gr.Row():

with gr.Column(scale=1, variant='panel'): # 上传文件组件

inputs = gr.components.File(label="上传文件")

file_submit_btn = gr.Button('上传', variant='primary')

# clear_file = gr.ClearButton([inputs]) 清除上传的文件

with gr.Column(scale=5): # 对话框组件

chatbot = gr.Chatbot()

msg = gr.Textbox()

clear = gr.ClearButton([msg, chatbot])

def respond(question, chat_history):

""" 与大模型对话 """

print("————————————————————正在检索问答————————————————————")

bot_message = chat_bot(question)

chat_history.append((question, bot_message))

time.sleep(2)

return "", chat_history

def generate_file(file_obj):

""" 上传文件 """

print("正在上传文件至elasticsearch")

save_uploadFile(file_obj.name) # 保存整个文件到本地,便于总结使用

# 将上传的文档分割、转base64

file_name = os.path.basename(file_obj.name)

base64List = fileToBase64Splits(file_obj.name)

if len(base64List) != 0:

elastic_retriever.add_texts(base64List, name=file_name)

# print(dir(file_obj))

return "上传文件"

msg.submit(respond, [msg, chatbot], [msg, chatbot])

file_submit_btn.click(generate_file, inputs)

if __name__ == "__main__":

demo.launch()

- myInterface_with_localQwen.py

from langchain_core.prompts import PromptTemplate

from langchain.agents import AgentExecutor, create_react_agent

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.chains.summarize import load_summarize_chain

from config import elastic_retriever, FILE_SAVE_PATH

from utils import getLoaderByfileType

from langchain.tools import tool

from langchain.memory import ConversationBufferMemory

from langchain_core.runnables import RunnablePassthrough

from langchain_core.output_parsers import StrOutputParser

import os

from myQwen import CustomLLM

llm = CustomLLM()

# 创建一个用于存储历史对话的容器

memory = ConversationBufferMemory(return_messages=True, output_key="answer", input_key="question")

def chat_bot(question):

template = """

Assistant is a large language model that use Chinese to communicate with users.

Assistant is designed to be able to assist with a wide range of tasks, from answering simple questions to providing in-depth explanations and discussions on a wide range of topics. As a language model, Assistant is able to generate human-like text based on the input it receives, allowing it to engage in natural-sounding conversations and provide responses that are coherent and relevant to the topic at hand.

Assistant is constantly learning and improving, and its capabilities are constantly evolving. It is able to process and understand large amounts of text, and can use this knowledge to provide accurate and informative responses to a wide range of questions. Additionally, Assistant is able to generate its own text based on the input it receives, allowing it to engage in discussions and provide explanations and descriptions on a wide range of topics.

Overall, Assistant is a powerful tool that can help with a wide range of tasks and provide valuable insights and information on a wide range of topics. Whether you need help with a specific question or just want to have a conversation about a particular topic, Assistant is here to assist.

TOOLS:

------

Assistant has access to the following tools:

{tools}

Please note that if you want to summarize a file, it is necessary to use the "tool_summarize_text" tool.

Please note that if you want to answer questions, it is necessary to use the "tool_rag_QA" tool.

To use a tool, please use the following format:

```

Thought: Do I need to use a tool? Yes

Action: the action to take, should be one of [{tool_names}]

Action Input: the input to the action

Observation: the result of the action

```

When you have a response to say to the Human, or if you do not need to use a tool, you MUST use the format:

```

Thought: Do I need to use a tool? No

Final Answer: [your response here]

```

Begin!

Previous conversation history:

{chat_history}

New input: {input}

{agent_scratchpad}

"""

prompt = PromptTemplate.from_template(template)

tools = [tool_rag_QA, tool_summarize_text]

agent = create_react_agent(llm, tools, prompt)

agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

ans = agent_executor.invoke({

"input": question,

"chat_history": memory.load_memory_variables({})["history"]

})

memory.save_context({"question": question}, {"answer": ans["output"]})

return ans["output"]

# 从问题中抽取文件名

def getFileName(question: str) -> str:

"""

A tool to extract file name and return path

"""

template = """抽取出下面问题中提到的文档名,注意需要保留文档的后缀名:

"------------\n"

"问题:{question}\n"

"------------\n"

文档名:

"""

prompt = PromptTemplate.from_template(template)

getFileName_chain = (prompt | llm)

file_name = getFileName_chain.invoke({"question": question})

if file_name[0] in ["《", '"']:

file_name = file_name[1:]

if file_name[-1] in ["》", '"']:

file_name = file_name[0:-1]

return file_name

# 检索回答工具

@tool("tool_rag_QA")

def tool_rag_QA(question: str) -> str:

"""

A tool used to retrieve aerospace related knowledge and answer questions based on the retrieved knowledge

"""

template = """

请根据检索到的知识回答问题:

"------------\n"

“检索到的知识”:{docs}\n"

"------------\n"

下面是问题:

"------------\n"

"问题:{question}\n"

"------------\n"

回答:

"""

prompt = PromptTemplate.from_template(template)

rag_chain = (

{

"question": RunnablePassthrough(),

"docs": elastic_retriever,

}

| prompt | llm | StrOutputParser())

file_name = getFileName(question)

file_path = os.path.join(FILE_SAVE_PATH, file_name)

print("我是tool_rag_QA, 我分析到的file_path是", file_path)

if not os.path.isfile(file_path): # 说明这个文件之前没上传过

file_name = "misc"

ans = rag_chain.invoke({"query": question, "name": file_name})

return ans

# 总结文档

@tool("tool_summarize_text")

def tool_summarize_text(question: str) -> str:

"""

A tool for summarizing files

"""

file_name = getFileName(question)

file_path = os.path.join(FILE_SAVE_PATH, file_name)

if not os.path.isfile(file_path):

print("file_path是:", file_path)

return f"您需要总结的文件不存在,请上传文件后重新提问。\n"

loader = getLoaderByfileType(file_path)

if loader is None:

return "暂不支持您上传的文件格式,请上传['.pdf', '.txt', '.docx']格式文件。\n"

prompt_template = """简要总结以下内容:

"------------\n"

"{text}\n"

"------------\n"

总结:"""

prompt = PromptTemplate.from_template(prompt_template)

refine_template = (

"你的任务是生成最终摘要\n"

"我们提供了一个现有摘要: {existing_answer}\n"

"现在有机会完善现有摘要(仅在需要时),下面有更多可用信息\n"

"------------\n"

"{text}\n"

"------------\n"

"在新信息的补充下,提炼原始摘要。如果信息没有用处,则返回原始摘要。"

)

refine_prompt = PromptTemplate.from_template(refine_template)

sum_chain = load_summarize_chain(

llm=llm,

chain_type="refine",

question_prompt=prompt,

refine_prompt=refine_prompt,

return_intermediate_steps=True,

input_key="input_documents",

output_key="output_text",

)

text_splitter = RecursiveCharacterTextSplitter(separators=["\n\n"], chunk_size=200, chunk_overlap=50,

length_function=len)

split_docs = loader.load_and_split(text_splitter=text_splitter)

result = sum_chain({"input_documents": split_docs}, return_only_outputs=True)

return result["output_text"]

# print(tool_rag_QA("test.docx中提到航天系统由哪些模块组成"))

- utils.py

from langchain_community.document_loaders import PyPDFLoader, Docx2txtLoader, TextLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

import base64

import shutil

import os

from config import FILE_SAVE_PATH

def format_docs(docs):

return "\n\n".join(doc.page_content for doc in docs)

def strToBase64(s):

''' 将字符串转换为base64字符串 '''

strEncode = base64.b64encode(s.encode('utf8'))

return str(strEncode, encoding='utf8')

def getLoaderByfileType(path):

"""根据文件类型获得loader"""

if not os.path.exists(path):

print(f"{path}不存在\n")

return None

if path.endswith(".pdf"):

loader = PyPDFLoader(path) # 加载文档

elif path.endswith(".txt"):

loader = TextLoader(path, encoding='utf-8') # 加载文档

elif path.endswith(".docx"):

loader = Docx2txtLoader(path) # 加载文档

else:

print("不支持的文件类型,请使用['.pdf', '.txt', '.docx']")

return None

return loader

def fileToBase64Splits(path):

""" 加载文本、分割文本、文本块转base64 """

loader = getLoaderByfileType(path)

if loader is None:

return []

docs = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

splits = text_splitter.split_documents(docs)

res = []

for split in splits:

res.append(strToBase64(split.page_content))

return res

def save_uploadFile(temp_file_path):

"""上传的文件保存到本地"""

file_name = os.path.basename(temp_file_path)

target_file_path = os.path.join(FILE_SAVE_PATH, file_name)

shutil.copy(temp_file_path, target_file_path)

print(file_name, "文件本地保存成功")

# print(fileToBase64Splits("./text.txt"))

- config.py

import os

import elasticsearch

from myElasticSearch import myElasticSearch

elasticsearch_url = "http://localhost:9200"

INDEX_NAME = 'docwrite'

FILE_SAVE_PATH = os.path.join(os.path.dirname(__file__), "upload_file")

# elastic检索

elastic_retriever = myElasticSearch(client=elasticsearch.Elasticsearch(elasticsearch_url), index_name=INDEX_NAME)

- myElasticSearch.py

重写的ElasticSearchBM25Retriever

from __future__ import annotations

import uuid

from typing import Any, Iterable, List

from langchain_core.callbacks import CallbackManagerForRetrieverRun

from langchain_core.documents import Document

from langchain_core.retrievers import BaseRetriever

from langchain_community.retrievers import (ElasticSearchBM25Retriever)

from langchain.pydantic_v1 import BaseModel, Field

class myElasticSearch(ElasticSearchBM25Retriever):

"""`Elasticsearch` retriever that uses `BM25`.

To connect to an Elasticsearch instance that requires login credentials,

including Elastic Cloud, use the Elasticsearch URL format

https://username:password@es_host:9243. For example, to connect to Elastic

Cloud, create the Elasticsearch URL with the required authentication details and

pass it to the ElasticVectorSearch constructor as the named parameter

elasticsearch_url.

You can obtain your Elastic Cloud URL and login credentials by logging in to the

Elastic Cloud console at https://cloud.elastic.co, selecting your deployment, and

navigating to the "Deployments" page.

To obtain your Elastic Cloud password for the default "elastic" user:

1. Log in to the Elastic Cloud console at https://cloud.elastic.co

2. Go to "Security" > "Users"

3. Locate the "elastic" user and click "Edit"

4. Click "Reset password"

5. Follow the prompts to reset the password

The format for Elastic Cloud URLs is

https://username:password@cluster_id.region_id.gcp.cloud.es.io:9243.

"""

def add_texts(

self,

texts: Iterable[str],

name: str = '', # name是后来自己加的

refresh_indices: bool = True,

) -> List[str]:

"""Run more texts through the embeddings and add to the retriever.

Args:

name: The name of the uploaded document

texts: Iterable of strings to add to the retriever.

refresh_indices: bool to refresh ElasticSearch indices

Returns:

List of ids from adding the texts into the retriever.

"""

try:

from elasticsearch.helpers import bulk

except ImportError:

raise ValueError(

"Could not import elasticsearch python package. "

"Please install it with `pip install elasticsearch`."

)

requests = []

ids = []

for i, text in enumerate(texts):

_id = str(uuid.uuid4())

request = {

"_op_type": "index",

"_index": self.index_name,

"content": text,

"_id": _id,

"name": name # "name":name是后来自己加的

}

ids.append(_id)

requests.append(request)

# bulk(self.client, requests)

bulk(self.client, requests, pipeline='attachment') # 这句话是自己改动的,原版在上面一句

if refresh_indices:

self.client.indices.refresh(index=self.index_name)

return ids

def _get_relevant_documents(

self, searchInput, *, run_manager: CallbackManagerForRetrieverRun

) -> List[Document]:

"""

Args:

query: Search content

name: The name of the document to search for

run_manager:

Returns: search result

"""

print("我是_get_relevant_documents, 我得到的输入query、name是:", searchInput["query"], searchInput["name"])

# query_dict = {"query": {"match": {"attachment.content": query}}} # 原版

if searchInput["name"] != 'misc':

query_dict = {

"query": {

"bool": {

"must": [

{"match": {"name": searchInput["name"]}},

{"match": {"attachment.content": searchInput["query"]}}

]

}

}

}

else:

query_dict = {"query":

{"match":

{"attachment.content": searchInput["query"]}

}

}

res = self.client.search(index=self.index_name, body=query_dict)

docs = []

for r in res["hits"]["hits"]:

docs.append(Document(page_content=r["_source"]["attachment"]["content"])) # 这句话是自己改动的,原版在上面一句

return docs

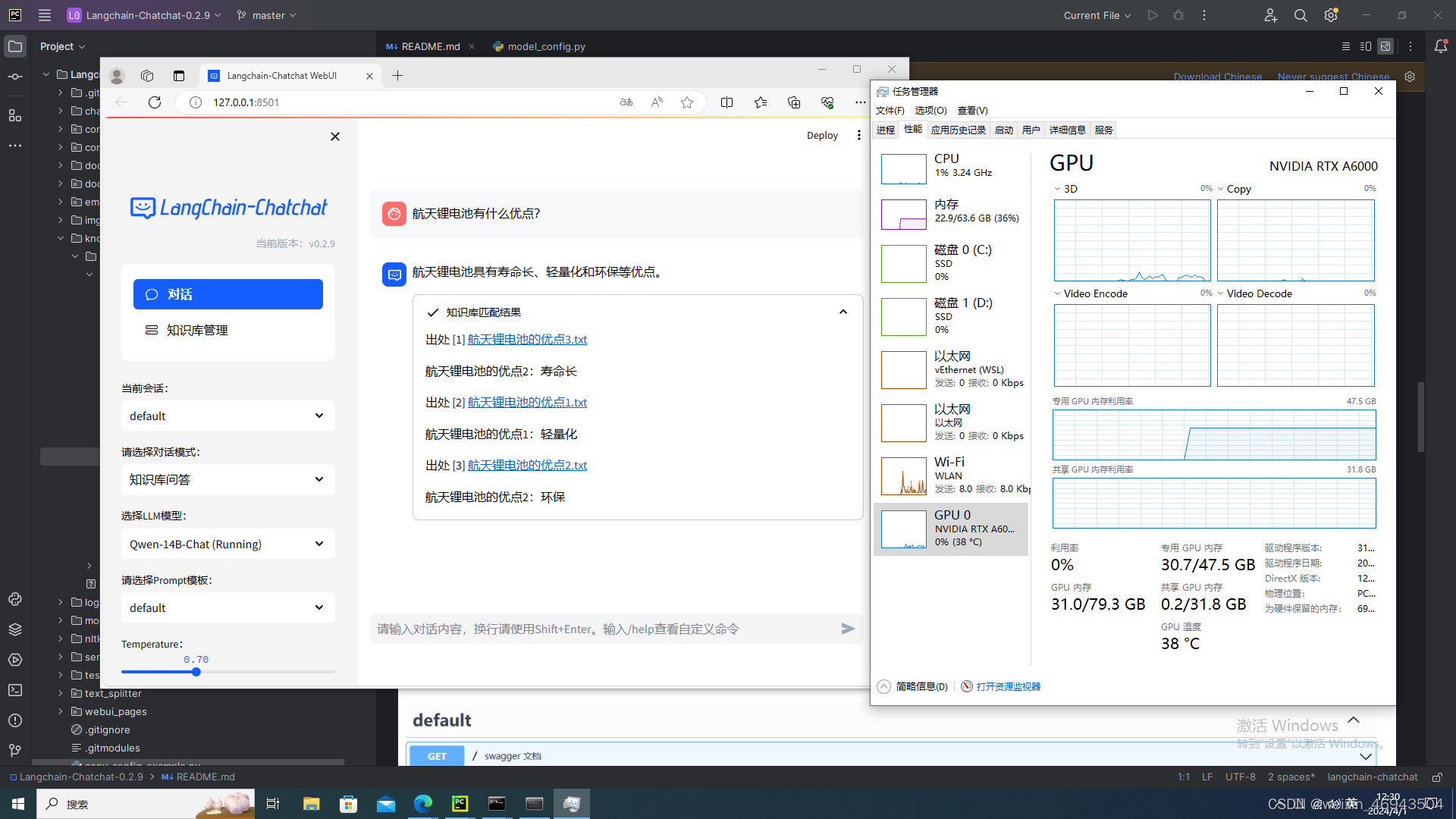

用langchain-chatchat的知识库

- 在这里,直接调用lanchain-chatchat的方法来获得模型、读取知识库。

- 添加了一个意图判断的过程,让大模型判断用户是想要与知识库对话、想要总结、想要自由对话。

接口代码

from langchain.chains.summarize import load_summarize_chain

from langchain.memory import ConversationBufferMemory

from langchain_core.output_parsers import StrOutputParser

from langchain_core.messages import SystemMessage

from langchain_core.prompts import ChatPromptTemplate, HumanMessagePromptTemplate, PromptTemplate

from server.knowledge_base.kb_doc_api import search_docs

from server.utils import get_ChatOpenAI

from langchain.chains import LLMChain

# from myQwen import CustomLLM

# model = CustomLLM()

model = get_ChatOpenAI(

model_name="Qwen-14B-Chat",

temperature=0.7)

# 创建一个用于存储历史对话的容器

memory = ConversationBufferMemory(return_messages=True, output_key="answer", input_key="question")

def chat_bot(question):

# 判断用户是否指定了文件名

file_name = get_file_name(question)

print("file_name是:", file_name)

if file_name != '':

# 用户指定了文件名

docs = search_docs(query="", # 读取全部文件

knowledge_base_name="samples",

file_name=file_name)

if len(docs) == 0:

return "找不到文件,请检查是否上传该文件,或者文件名是否正确(包含文件后缀名)"

else:

docs = search_docs(query=question, # 筛选文件

knowledge_base_name="samples",

top_k=5,

score_threshold=1.7,

file_name="")

# 获取用户意图:1表示总结对话,2表示检索对话,3表示自由对话

intention = get_intention(question)

print("intention是:", intention)

if int(intention) == 1 and file_name != "":

ans = summarize_text(question, docs)

elif int(intention) == 2:

ans = rag_QA(question, docs)

else:

chat_prompt = PromptTemplate.from_template(

"""

之前的对话历史:{history}

回答问题:{question}

""")

history = memory.load_memory_variables({})["history"]

chat_chain = LLMChain(prompt=chat_prompt, llm=model)

ans = chat_chain._call({"question": question, "history": history})["text"]

memory.save_context({"question": question}, {"answer": ans})

return ans

def get_intention(question):

"""根据用户输入获得用户意图"""

print("正在判断意图……")

intention_prompt = ChatPromptTemplate.from_messages([

SystemMessage(

content=(

"你是一个判断用户意图的助手,用户可能有3种意图,你的回答只能是[1,2,3]中的一个。\n"

"(1)总结对话:用户希望你帮助他总结一个文档\n"

"(2)检索对话:用户希望你根据知识库中已有的知识回答问题\n"

"(3)自由对话:用户希望和你进行对话\n"

"请你判断用户的意图,如果是”总结对话“,输出1;如果是”检索对话“,输出2;如果是”自由对话“,输出3。\n"

)

),

HumanMessagePromptTemplate.from_template("{question}")

])

intention_chain = (intention_prompt | model | StrOutputParser())

return intention_chain.invoke({"question": question})

# 从问题中抽取文件名

def get_file_name(question: str) -> str:

"""

从用户输入中,抽取出用户提到的文件

"""

start = question.find("《")

end = question.rfind("》")

if start == -1 or end == -1:

return ""

return question[start + 1:end]

# 总结文档

def summarize_text(question, docs):

"""

总结

"""

print("正在总结……")

question_prompt = PromptTemplate.from_template(

"""请按照用户要求总结文档:{question}

”需要总结的内容如下所示“

"------------\n"

"{text}\n"

"------------\n"

总结:""")

refine_prompt = PromptTemplate.from_template("你的任务是生成最终摘要\n"

"我们提供了一个现有摘要: {existing_answer}\n"

"现在有机会完善现有摘要(仅在需要时),下面有更多可用信息\n"

"------------\n"

"{text}\n"

"------------\n"

"在新信息的补充下,提炼原始摘要。如果信息没有用处,则返回原始摘要。")

# sum_chain = (load_summarize_chain(

# llm=model,

# chain_type="refine",

# question_prompt=question_prompt,

# refine_prompt=refine_prompt,

# return_intermediate_steps=False,

# input_key="input_documents",

# output_key="output_text",

# ) | StrOutputParser())

sum_chain = (load_summarize_chain(

llm=model,

chain_type="refine",

question_prompt=question_prompt,

refine_prompt=refine_prompt,

return_intermediate_steps=False,

input_key="input_documents",

output_key="text",

))

result = sum_chain._call({"input_documents": docs, "question": question})

print("总结的结果是:", result)

return result["text"]

def rag_QA(question, docs):

print("正在检索回答……")

if len(docs) == 0: # 如果没有找到相关文档

prompt_template = """

'这是之前的对话历史:'

'{history}\n'

'请你回答我的问题:\n'

'{question}\n\n'"""

else:

prompt_template = """

"<指令>根据已知信息,简洁和专业的来回答问题。如果无法从中得到答案,请说 “根据已知信息无法回答该问题”,"

"不允许在答案中添加编造成分,答案请使用中文。 </指令>\n"

"<对话历史>{history}</对话历史>"

"<已知信息>{context}</已知信息>\n"

"<问题>{question}</问题>\n"

"""

chat_prompt = PromptTemplate.from_template(prompt_template)

chain = LLMChain(prompt=chat_prompt, llm=model)

history = memory.load_memory_variables({})["history"]

context = "\n".join([doc.page_content for doc in docs])

result = chain._call({"history": history, "question": question, "context": context})

print("检索问答的结果:", result)

return result["text"]

页面

import gradio as gr

import time

import os

from myInterface_with_localQwen import chat_bot

from utils import fileToBase64Splits, save_uploadFile

with gr.Blocks() as demo:

with gr.Row():

# with gr.Column(scale=1, variant='panel'): # 上传文件组件

# inputs = gr.components.File(label="上传文件")

# file_submit_btn = gr.Button('上传', variant='primary')

# # clear_file = gr.ClearButton([inputs]) 清除上传的文件

with gr.Column(scale=5): # 对话框组件

chatbot = gr.Chatbot()

msg = gr.Textbox()

clear = gr.ClearButton([msg, chatbot])

def respond(question, chat_history):

""" 与大模型对话 """

print("————————————————————正在检索问答————————————————————")

bot_message = chat_bot(question)

chat_history.append((question, bot_message))

time.sleep(2)

return "", chat_history

msg.submit(respond, [msg, chatbot], [msg, chatbot])

if __name__ == "__main__":

demo.launch()

接下来探索方向

- 总结为什么慢?多一些日志输出,看看到底因为什么这么慢?

- 部署一下34B那个模型,看看换成大一点的模型,回答效果会不会好一点

- 部署langchain-chatchat,直接用现成的一套,看它能力怎么样?

4bit的Qwen使用

推理时gpu占用约13G

仅需11G即可运行模型

34B模型的使用

- 34B模型是:https://github.com/01-ai/Yi?tab=readme-ov-file#quick-start—pip

这个我暂时不看了(老师说给梁占林)

部署langchain-chatchat

0.2.10版本有点bug,使用0.2.9版本。

- 测试文本:空间锂电池的优点,分到几个文件中分别上传。问答结果如下:

1013

1013

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?