1. Data

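Only part of the data is included here. In some of the experiment files the date has the form 1998-XX-XX, and the code is adjusted for that case later on. The rows below are shown with spaces between fields; the mappers split on commas, so the actual input file is comma-separated.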

13 987 1/10/1998 3 999 1 1232.16

13 1660 1/10/1998 3 999 1 1232.16

13 1762 1/10/1998 3 999 1 1232.16

13 1843 1/10/1998 3 999 1 1232.16

13 1948 1/10/1998 3 999 1 1232.16

13 2273 1/10/1998 3 999 1 1232.16

13 2380 1/10/1998 3 999 1 1232.16

13 2683 1/10/1998 3 999 1 1232.16

13 2865 1/10/1998 3 999 1 1232.16

13 4663 1/10/1998 3 999 1 1232.16

13 5203 1/10/1998 3 999 1 1232.16

13 5321 1/10/1998 3 999 1 1232.16

13 5590 1/10/1998 3 999 1 1232.16

13 6277 1/10/1998 3 999 1 1232.16

13 6859 1/10/1998 3 999 1 1232.16

13 8540 1/10/1998 3 999 1 1232.16

13 9076 1/10/1998 3 999 1 1232.16

13 12099 1/10/1998 3 999 1 1232.16

13 35834 1/10/1998 3 999 1 1232.16

13 524 1/20/1998 2 999 1 1205.99

13 188 1/20/1998 3 999 1 1232.16

13 361 1/20/1998 3 999 1 1232.16

13 531 1/20/1998 3 999 1 1232.16

13 659 1/20/1998 3 999 1 1232.16

13 848 1/20/1998 3 999 1 1232.16

13 949 1/20/1998 3 999 1 1232.16

13 1242 1/20/1998 3 999 1 1232.16

13 1291 1/20/1998 3 999 1 1232.16

13 1422 1/20/1998 3 999 1 1232.16

13 1485 1/20/1998 3 999 1 1232.16

13 1580 1/20/1998 3 999 1 1232.16

13 1943 1/20/1998 3 999 1 1232.16

13 1959 1/20/1998 3 999 1 1232.16

13 2021 1/20/1998 3 999 1 1232.16

13 2142 1/20/1998 3 999 1 1232.16

13 3014 1/20/1998 3 999 1 1232.16

13 3053 1/20/1998 3 999 1 1232.16

13 3261 1/20/1998 3 999 1 1232.16

13 3783 1/20/1998 3 999 1 1232.16

13 3947 1/20/1998 3 999 1 1232.16

13 4523 1/20/1998 3 999 1 1232.16

13 5813 1/20/1998 3 999 1 1232.16

13 6543 1/20/1998 3 999 1 1232.16

13 7076 1/20/1998 3 999 1 1232.16

13 7421 1/20/1998 3 999 1 1232.16

13 8747 1/20/1998 3 999 1 1232.16

13 8787 1/20/1998 3 999 1 1232.16

13 8836 1/20/1998 3 999 1 1232.16

13 9052 1/20/1998 3 999 1 1232.16

13 9680 1/20/1998 3 999 1 1232.16

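2. Requirements

Write a program that adds up the number of sales in the data table and computes the total sales amount for each year.

Principle

The principle is the same as in the earlier wordcount example; only the quantity being computed has changed.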
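The parallel with wordcount can be made concrete without a cluster. The following is a minimal plain-Java sketch, not the Hadoop job itself: the class name YearTotalsSketch and the method totalsByYear are hypothetical, and it assumes comma-separated rows with M/D/YYYY dates as in the sample data. Where wordcount emits (word, 1) and sums the ones, this emits (year, quantity and amount) and sums both.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class YearTotalsSketch {

    /** Returns, per year, an array where [0] is total quantity and [1] is total amount. */
    public static Map<String, double[]> totalsByYear(List<String> lines) {
        Map<String, double[]> totals = new LinkedHashMap<>();
        for (String line : lines) {
            // "Map" step: split the record and pick out the key and the values.
            String[] f = line.split(",");
            String date = f[2];                                   // assumed M/D/YYYY
            String year = date.substring(date.lastIndexOf('/') + 1);
            int quantity = Integer.parseInt(f[5]);
            double amount = Double.parseDouble(f[6]);

            // "Reduce" step: accumulate everything that shares the same key.
            double[] t = totals.computeIfAbsent(year, k -> new double[2]);
            t[0] += quantity;
            t[1] += amount;
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList(
                "13,987,1/10/1998,3,999,1,1232.16",
                "13,524,1/20/1998,2,999,1,1205.99");
        totalsByYear(lines).forEach((year, t) ->
                System.out.printf("%s quantity=%d amount=%.2f%n", year, (long) t[0], t[1]));
    }
}
```

In the real job the two steps run in separate tasks, and the framework does the grouping by key between them.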

3. Code

The pom.xml configuration was covered in an earlier article in this series.

The sold class

import org.apache.hadoop.io.Writable;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

public class sold implements Writable {

//Field names: prod_id,cust_id,time,channel_id,promo_id,quantity_sold,amount_sold

//Data types: Int,Int,Date,Int,Int,Int,float(10,2)

//Sample row: 13, 987, 1/10/1998, 3, 999, 1, 1232.16

//The fields below are declared accordingly

private int prod_id;

private int cust_id;

private String time;

private int channel_id;

private int promo_id;

private int quantity_sold;

private float amount_sold;

//Serialization: converts the Java object into a byte stream that can be sent across machines

public void write(DataOutput out) throws IOException {

out.writeInt(this.prod_id);

out.writeInt(this.cust_id);

out.writeUTF(this.time);

out.writeInt(this.channel_id);

out.writeInt(this.promo_id);

out.writeInt(this.quantity_sold);

out.writeFloat(this.amount_sold);

}

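//Deserialization: converts a byte stream received from another machine back into a Java object (fields are read in the order they were written)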

public void readFields(DataInput in) throws IOException {

this.prod_id = in.readInt();

this.cust_id = in.readInt();

this.time = in.readUTF();

this.channel_id = in.readInt();

this.promo_id = in.readInt();

this.quantity_sold = in.readInt();

this.amount_sold = in.readFloat();

}

public int getProd_id() {

return prod_id;

}

public void setProd_id(int prod_id) {

this.prod_id = prod_id;

}

public int getCust_id() {

return cust_id;

}

public void setCust_id(int cust_id) {

this.cust_id = cust_id;

}

public String getTime() {

return time;

}

public void setTime(String time) {

this.time = time;

}

public int getChannel_id() {

return channel_id;

}

public void setChannel_id(int channel_id) {

this.channel_id = channel_id;

}

public int getPromo_id() {

return promo_id;

}

public void setPromo_id(int promo_id) {

this.promo_id = promo_id;

}

public int getQuantity_sold() {

return quantity_sold;

}

public void setQuantity_sold(int quantity_sold) {

this.quantity_sold = quantity_sold;

}

public float getAmount_sold() {

return amount_sold;

}

public void setAmount_sold(float amount_sold) {

this.amount_sold = amount_sold;

}

}
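The write and readFields methods must agree on field order, which is easy to check with an in-memory round trip. The sketch below is a stand-in rather than the sold class itself: Record is a hypothetical class carrying only three of the fields, and it uses only java.io so that it runs without Hadoop on the classpath.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

public class RoundTripSketch {

    /** Hypothetical stand-in with the three fields the job actually uses. */
    public static class Record {
        public String time = "";
        public int quantitySold;
        public float amountSold;

        // Same pattern as Writable.write: fields go out in a fixed order.
        void write(DataOutput out) throws IOException {
            out.writeUTF(time);
            out.writeInt(quantitySold);
            out.writeFloat(amountSold);
        }

        // Same pattern as Writable.readFields: fields come back in that same order.
        void readFields(DataInput in) throws IOException {
            time = in.readUTF();
            quantitySold = in.readInt();
            amountSold = in.readFloat();
        }
    }

    /** Serializes the record to bytes and reads it back into a fresh object. */
    public static Record roundTrip(Record original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            Record copy = new Record();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        Record r = new Record();
        r.time = "1998";
        r.quantitySold = 1;
        r.amountSold = 1232.16f;
        Record copy = roundTrip(r);
        System.out.println(copy.time + " " + copy.quantitySold + " " + copy.amountSold);
    }
}
```

One practical consequence: writeUTF throws a NullPointerException when given null, so a mapper that leaves time unset would fail when the record is serialized.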

sold_mapper, first version

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

public class sold_mapper extends Mapper<LongWritable, Text, Text, sold> {

@Override

protected void map(LongWritable k1, Text v1, Context context) throws IOException, InterruptedException {

String data = v1.toString();

String[] words = data.split(",");

sold s = new sold();

s.setTime("1998");

s.setQuantity_sold(Integer.parseInt(words[5]));

s.setAmount_sold(Float.valueOf(words[6]));

context.write(new Text("1998"), s);

}

}
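The mapper above assumes every line has seven comma-separated fields with numeric values in the last two; a header row or a truncated line would make Integer.parseInt or the array access throw and fail the task. A possible defensive variant, sketched in plain Java with a hypothetical helper named SalesLineParser.parse, returns null for rows it cannot read so that the caller can skip them.

```java
import java.util.Arrays;

public class SalesLineParser {

    /**
     * Parses one CSV line and returns {quantity_sold, amount_sold},
     * or null when the line is not a well-formed sales record.
     */
    public static double[] parse(String line) {
        String[] f = line.split(",");
        if (f.length < 7) {
            return null;                       // too few fields: blank or truncated line
        }
        try {
            int quantity = Integer.parseInt(f[5].trim());
            double amount = Double.parseDouble(f[6].trim());
            return new double[] {quantity, amount};
        } catch (NumberFormatException e) {
            return null;                       // non-numeric values, e.g. a header row
        }
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(parse("13,987,1/10/1998,3,999,1,1232.16")));
        System.out.println(Arrays.toString(
                parse("prod_id,cust_id,time,channel_id,promo_id,quantity_sold,amount_sold")));
    }
}
```

Inside map, a null result would simply mean returning without calling context.write for that line.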

For CSV files whose dates have the form 1998-XX-XX, the mapper class needs a small change.

sold_mapper, second version

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;

// Named sold_mapper and typed on sold so that it can replace the first version
// without any change to sold_main.
public class sold_mapper extends Mapper<LongWritable, Text, Text, sold> {

    @Override
    protected void map(LongWritable k1, Text v1, Context context)
            throws IOException, InterruptedException {
        // Fields: prod_id,cust_id,time,channel_id,promo_id,quantity_sold,amount_sold
        // Sample: 13,987,1998-01-10,3,999,1,1232.16
        String data = v1.toString();
        String[] words = data.split(",");

        // StringUtils.substringAfter(str, sep) returns everything after the first sep,
        // e.g. substringAfter("dskeabcedeh", "e") gives "abcedeh".
        // t1 = 987,1998-01-10,3,999,1,1232.16
        String t1 = StringUtils.substringAfter(data, ",");
        // t2 = 1998-01-10,3,999,1,1232.16
        String t2 = StringUtils.substringAfter(t1, ",");
        // Split on "-" so that the year comes first:
        // words2[0] = 1998, words2[1] = 01, words2[2] = 10,3,999,1,1232.16
        String[] words2 = t2.split("-");

        sold s = new sold();
        s.setTime(words[2]);                             // full date, taken from words[]
        s.setQuantity_sold(Integer.parseInt(words[5]));
        s.setAmount_sold(Float.valueOf(words[6]));
        context.write(new Text(words2[0]), s);           // words2[0], the year, is the key k2
    }
}
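Both date layouts mentioned in this article can be handled by one small helper instead of two mapper versions. This is only a sketch: YearExtractor.yearOf is a hypothetical name, and it assumes slash-separated dates are M/D/YYYY (as in the sample rows) and dash-separated dates are YYYY-MM-DD.

```java
public class YearExtractor {

    /** Returns the four-digit year from either "1/10/1998" or "1998-01-10". */
    public static String yearOf(String date) {
        String d = date.trim();
        int dash = d.indexOf('-');
        if (dash > 0) {
            return d.substring(0, dash);            // YYYY-MM-DD: year is the first part
        }
        int slash = d.lastIndexOf('/');
        if (slash >= 0) {
            return d.substring(slash + 1);          // M/D/YYYY: year is the last part
        }
        throw new IllegalArgumentException("Unrecognized date format: " + date);
    }

    public static void main(String[] args) {
        System.out.println(yearOf("1/10/1998"));
        System.out.println(yearOf("1998-01-10"));
    }
}
```

A mapper could then build its key as new Text(YearExtractor.yearOf(words[2])) regardless of which file it is reading.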

The sold_reducer class

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

public class sold_reducer extends Reducer<Text, sold, Text, Text> {

    @Override
    protected void reduce(Text k3, Iterable<sold> v3, Context context)
            throws IOException, InterruptedException {
        int sum1 = 0;       // total quantity sold for this year
        double sum2 = 0;    // total amount sold; double keeps precision over many rows
        for (sold s : v3) {
            sum1 = sum1 + s.getQuantity_sold();
            sum2 = sum2 + s.getAmount_sold();
        }
        String end = "quantity sold: " + sum1 + ", total sales: " + String.format("%.2f", sum2);
        context.write(k3, new Text(end));
    }
}
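Amounts stored as float carry only about seven significant digits, so the type of the accumulator used in reduce matters once the input grows beyond a small sample. The following sketch is an illustration under assumptions, not a measurement from this job: AccumulatorSketch is a hypothetical class, and the row count of one million is arbitrary. It adds the same float value repeatedly, once into a float and once into a double.

```java
public class AccumulatorSketch {

    /** Adds value to a float accumulator count times. */
    public static float sumAsFloat(float value, int count) {
        float sum = 0f;
        for (int i = 0; i < count; i++) {
            sum += value;          // each addition is rounded to float precision
        }
        return sum;
    }

    /** Adds value to a double accumulator count times. */
    public static double sumAsDouble(float value, int count) {
        double sum = 0.0;
        for (int i = 0; i < count; i++) {
            sum += value;          // value is widened to double before the addition
        }
        return sum;
    }

    public static void main(String[] args) {
        float amount = 1232.16f;
        int rows = 1_000_000;      // arbitrary row count for the illustration
        System.out.println("float accumulator:  " + sumAsFloat(amount, rows));
        System.out.println("double accumulator: " + sumAsDouble(amount, rows));
    }
}
```

With the few dozen rows shown in section 1 the difference is negligible; it only becomes visible on much larger inputs.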

The sold_main class

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class sold_main {

public static void main(String[] args) throws Exception {

//1. Create a job and set its entry point (the main class)

Job job = Job.getInstance(new Configuration());

job.setJarByClass(sold_main.class);

//2. Set the job's mapper and its output types <k2 v2>

job.setMapperClass(sold_mapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(sold.class);

//3. Set the job's reducer and its output types <k4 v4>

job.setReducerClass(sold_reducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(Text.class);

//4. Set the job's input and output paths

FileInputFormat.setInputPaths(job, new Path(args[0]));

FileOutputFormat.setOutputPath(job, new Path(args[1]));

//5. Run the job

job.waitForCompletion(true);

}

}

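4. Packaging the JAR

Right-click the project and choose Open Module Settings.

Go to Artifacts → JAR → From modules with dependencies…

Select the main class (sold_main in this project).

From the main menu, choose Build → Build Artifacts → Build.

An out directory then appears in the project tree; one of its subdirectories contains mypro.jar, which is the packaged file.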

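5. Uploading the packaged jar file to HDFS

hdfs dfs -put FULL_LOCAL_PATH_OF_JAR_ON_VM TARGET_PATH_ON_HDFS

The input file is uploaded the same way. Note that hadoop jar reads the jar from the local file system, so the jar itself only needs to be present on the node where the command is run.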

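6. Running the jar on the Hadoop cluster

hadoop jar PROJECT_NAME.jar MAIN_CLASS INPUT_PATH OUTPUT_DIR

e.g.: hadoop jar sold.jar sold_main /input/sales.csv /output

The output directory must not already exist, otherwise the job fails when it is submitted.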

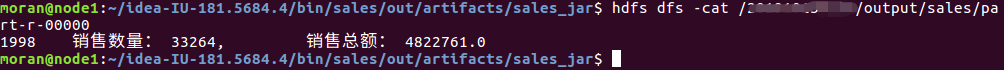

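7. Demo

After packaging the jar, enter the commands in the terminal.

Check that the output matches the requirements of the exercise.

Because my file only contains data for 1998, the output shows the quantity sold and the total sales amount for that year only.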

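8. Summary

wordcount already shows the overall MapReduce flow; later exercises only change the map and reduce functions. Keep in mind that the number of MapTasks is determined by the input splits. The split size defaults to the size of one block, and changing the split size is how the number of MapTasks is controlled. The number of ReduceTasks, by contrast, is not derived from the keys: it is configured on the job (for example with job.setNumReduceTasks, default 1), and the key of each intermediate record emitted by map decides which ReduceTask receives it.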
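The arithmetic behind those task counts is short enough to write down. The sketch below restates it in plain Java under stated assumptions: TaskCountSketch and its method names are hypothetical, splitSize follows the rule used by Hadoop's FileInputFormat (max(minSize, min(maxSize, blockSize))), partitionFor follows the rule of the default HashPartitioner, and approxSplits ignores the small slack Hadoop allows on the last split, so it can overcount by one for files just above a multiple of the split size. It also hashes a String, whereas the real job hashes a Text key, so the concrete partition numbers may differ.

```java
public class TaskCountSketch {

    /** Split size rule: max(minSize, min(maxSize, blockSize)). */
    public static long splitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    /** Approximate number of splits (and hence MapTasks) for one file. */
    public static long approxSplits(long fileSize, long splitSize) {
        return (fileSize + splitSize - 1) / splitSize;   // ceiling division
    }

    /** Which ReduceTask a key goes to, given how many ReduceTasks the job has. */
    public static int partitionFor(Object key, int numReduceTasks) {
        // Masking with Integer.MAX_VALUE clears the sign bit so the result is never negative.
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024L;
        long size = splitSize(128 * mb, 1L, Long.MAX_VALUE);
        System.out.println("split size (bytes): " + size);
        System.out.println("splits for a 300 MB file: " + approxSplits(300 * mb, size));
        System.out.println("partition of key 1998 with 1 reducer: " + partitionFor("1998", 1));
        System.out.println("partition of key 1998 with 4 reducers: " + partitionFor("1998", 4));
    }
}
```

With a single ReduceTask every key lands in partition 0, which is why this job produces one output file no matter how many years the data covers.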
