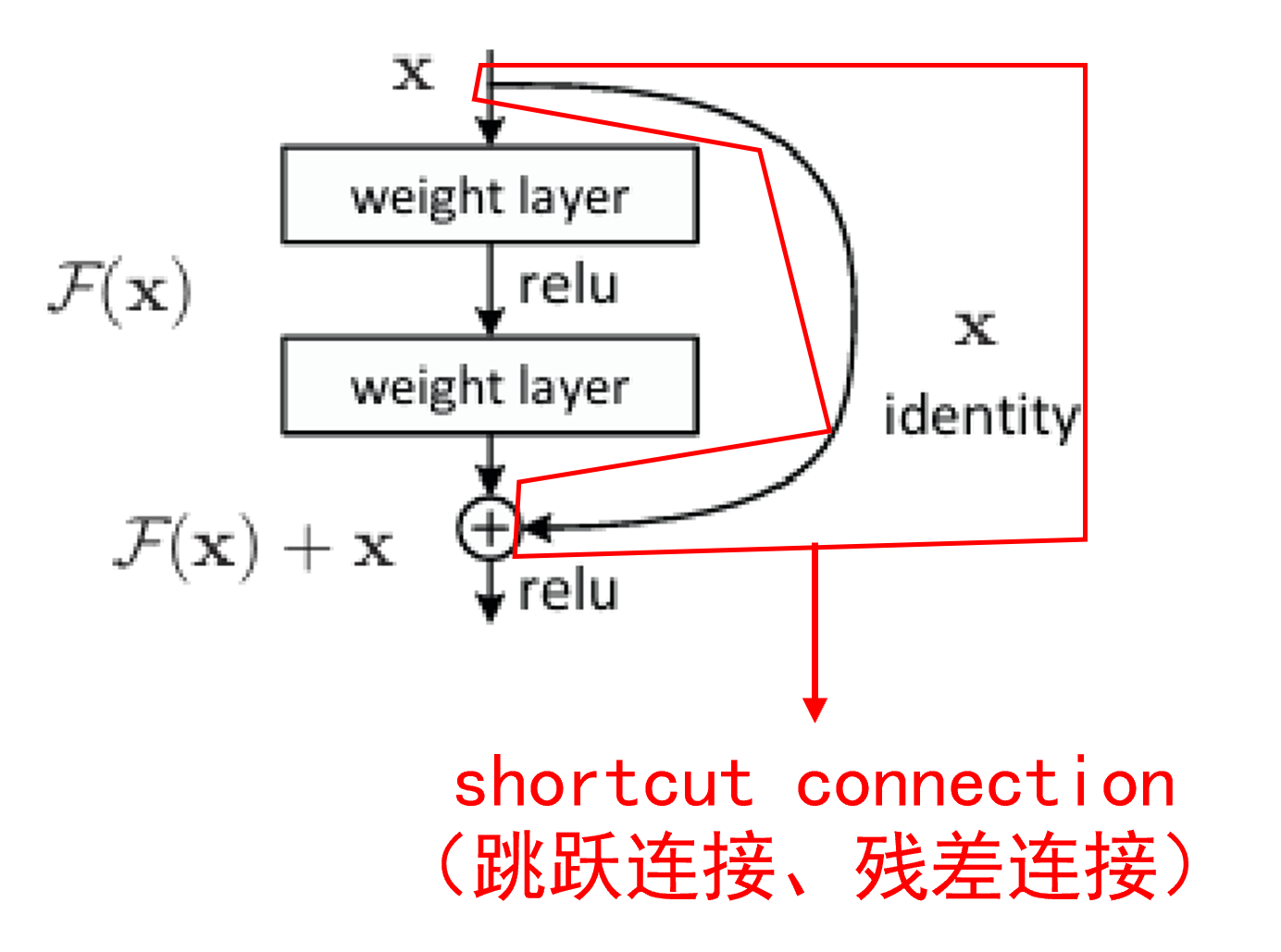

I. Residual Blocks

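- A residual block consists of convolutional layers, ReLU activations, a shortcut connection (also called a skip connection or residual connection), and an element-wise addition.

- When a residual block downsamples (H and W shrink, C grows), the shortcut is a convolutional layer with kernel size 1 and stride 2. In all other cases it is usually an Identity layer.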
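A quick way to see why a 1x1, stride-2 convolution on the shortcut lines up with the main branch is to apply the standard convolution output-size formula to both paths. The sketch below is plain Python (no PyTorch required); `conv_out_size` is a hypothetical helper name, and the 3x3, padding-1 main-branch convolution is taken from the block code in this post.

```python
def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


for h in (56, 57):  # one even and one odd input size
    main = conv_out_size(h, kernel=3, stride=2, padding=1)      # main-branch 3x3 conv
    shortcut = conv_out_size(h, kernel=1, stride=2, padding=0)  # shortcut 1x1 conv
    print(h, main, shortcut)
    assert main == shortcut  # both branches can be added element-wise
```

Both branches halve the spatial size in the same way, so the addition F(x)+x is shape-compatible once the shortcut also maps to the new channel count.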

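Residual block parameters and variables:

- expansion: the ratio of the last convolutional layer's output channels to the first convolutional layer's output channels

- in_channels: the number of input channels of the residual block

- out_channels: the number of output channels of the residual block

- size: the size of the residual block, i.e. its base channel width (one of 64, 128, 256, 512)

- stride: 2 for a downsampling residual block; 1 for a regular residual block

- shortcut: an Identity layer or a convolutional layer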
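The relationship between `size`, `expansion`, and `out_channels` can be illustrated with a few lines of plain Python. This is only a sketch: `EXPANSION` and `block_out_channels` are hypothetical names, and the values 1 and 4 are the `expansion` class attributes of the two block types defined in this post.

```python
# expansion values of the two block classes in this post
EXPANSION = {"ResidualBlock": 1, "Bottleneck": 4}


def block_out_channels(block_name: str, size: int) -> int:
    """out_channels = size * expansion."""
    return size * EXPANSION[block_name]


for size in (64, 128, 256, 512):
    print(size,
          block_out_channels("ResidualBlock", size),
          block_out_channels("Bottleneck", size))
```

For the largest size, a two-layer block outputs 512 channels while a Bottleneck outputs 2048, which is why the final fully connected layer of a Bottleneck-based network needs `512 * expansion` input features.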

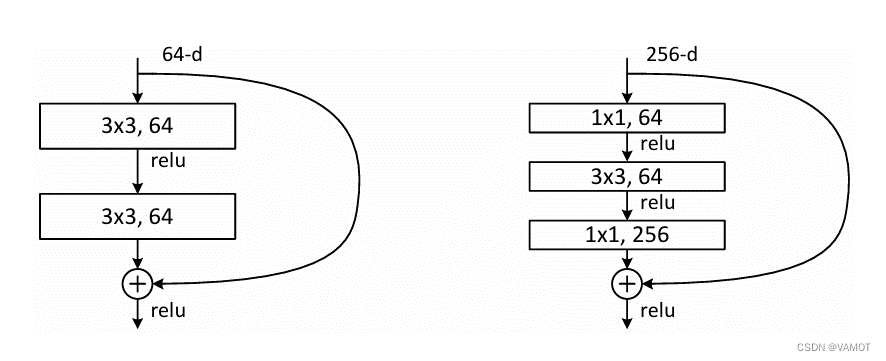

1. Two-layer residual block

    import torch.nn as nn


    class ResidualBlock(nn.Module):
        # output channels = size * expansion
        expansion: int = 1

        def __init__(self, in_channels, size, stride=1, shortcut=None):
            super().__init__()
            # first layer (carries the stride when the block downsamples)
            self.conv1 = nn.Conv2d(in_channels, size, kernel_size=3, stride=stride, padding=1)
            self.bn1 = nn.BatchNorm2d(size)
            self.relu1 = nn.ReLU()
            # second layer
            self.conv2 = nn.Conv2d(size, size, kernel_size=3, stride=1, padding=1)
            self.bn2 = nn.BatchNorm2d(size)
            # shortcut connection
            self.shortcut = nn.Identity() if shortcut is None else shortcut
            self.bn3 = nn.BatchNorm2d(size)
            self.relu3 = nn.ReLU()

        def forward(self, x):
            o = self.conv1(x)
            o = self.bn1(o)
            o = self.relu1(o)
            o = self.conv2(o)
            o = self.bn2(o)
            o = o + self.shortcut(x)  # F(x) + x
            # note: this BatchNorm after the addition is an extra step;
            # the original ResNet paper applies only ReLU here
            o = self.bn3(o)
            o = self.relu3(o)
            return o

2. Three-layer residual block (Bottleneck)

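In a downsampling Bottleneck block, the downsampling (stride 2) is performed by the 3x3 convolution, i.e. the second convolutional layer.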
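The effect of placing the stride in the 3x3 convolution can be traced on tensor shapes alone. The following is a minimal sketch in plain Python, not part of the model code: `conv_out_size` and `bottleneck_shapes` are hypothetical helpers, and the example input shape (256, 56, 56) is an assumption chosen for illustration.

```python
def conv_out_size(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def bottleneck_shapes(in_shape, size, stride, expansion=4):
    """Return the (C, H, W) shape after each convolution of a Bottleneck block."""
    _, h, w = in_shape
    out_channels = size * expansion
    # conv1: 1x1, stride 1 -> channels become `size`, H and W unchanged
    conv1 = (size, h, w)
    # conv2: 3x3, padding 1, carries the block's stride -> H and W shrink here
    h2 = conv_out_size(h, 3, stride, 1)
    w2 = conv_out_size(w, 3, stride, 1)
    conv2 = (size, h2, w2)
    # conv3: 1x1, stride 1 -> channels expand to size * expansion
    conv3 = (out_channels, h2, w2)
    # shortcut: 1x1 conv with the same stride, so it matches conv3 for the addition
    shortcut = (out_channels,
                conv_out_size(h, 1, stride, 0),
                conv_out_size(w, 1, stride, 0))
    return {"conv1": conv1, "conv2": conv2, "conv3": conv3, "shortcut": shortcut}


shapes = bottleneck_shapes((256, 56, 56), size=128, stride=2)
for name, shape in shapes.items():
    print(name, shape)
```

The first 1x1 convolution still sees the full-resolution input; only from the 3x3 convolution onward is the feature map halved, and the shortcut output matches the shape of the third convolution.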

    class Bottleneck(nn.Module):
        # output channels = size * expansion
        expansion: int = 4

        def __init__(self, in_channels, size, stride=1, shortcut: nn.Module = None):
            super().__init__()
            out_channels = size * self.expansion
            # first layer: 1x1 conv reduces the channel count to `size`
            self.conv1 = nn.Conv2d(in_channels, size, kernel_size=1)
            self.bn1 = nn.BatchNorm2d(size)
            self.relu1 = nn.ReLU()
            # second layer: 3x3 conv, carries the stride when downsampling
            self.conv2 = nn.Conv2d(size, size, kernel_size=3, stride=stride, padding=1)
            self.bn2 = nn.BatchNorm2d(size)
            self.relu2 = nn.ReLU()
            # third layer: 1x1 conv expands the channel count to size * expansion
            self.conv3 = nn.Conv2d(size, out_channels, kernel_size=1, stride=1)
            self.bn3 = nn.BatchNorm2d(out_channels)
            # shortcut connection
            self.shortcut = nn.Identity() if shortcut is None else shortcut
            self.bn4 = nn.BatchNorm2d(out_channels)
            self.relu4 = nn.ReLU()

        def forward(self, x):
            o = self.conv1(x)
            o = self.bn1(o)
            o = self.relu1(o)
            o = self.conv2(o)
            o = self.bn2(o)
            o = self.relu2(o)
            o = self.conv3(o)
            o = self.bn3(o)
            o = o + self.shortcut(x)  # F(x) + x
            o = self.bn4(o)
            o = self.relu4(o)
            return o

Shortcut for the convolutional case:

    class Shortcut(nn.Sequential):
        # 1x1 convolution followed by BatchNorm, used when the shortcut
        # must change the channel count and/or the spatial size
        def __init__(self, in_channels, out_channels, stride):
            layers = [nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride),
                      nn.BatchNorm2d(out_channels)]
            super().__init__(*layers)

II. ResNet Example: ResNet18

class ResNet18(nn.Module):

def __init__(self, class_num, pre_filter_size=7):

super(ResNet18, self).__init__()

# preprocessing layer

self.pl = nn.Sequential(

nn.Conv2d(3, 64, kernel_size=pre_filter_size, stride=2, padding=pre_filter_size // 2),

nn.BatchNorm2d(64),

nn.ReLU(),

nn.MaxPool2d(kernel_size=(3, 3), stride=2, padding=1)

)

# residual blocks

self.block1 = ResidualBlock(64, 64)

self.block2 = ResidualBlock(64, 64)

self.block3 = ResidualBlock(64, 128, 2,

Shortcut(64, 128, 2))

self.block4 = ResidualBlock(128, 128)

self.block5 = ResidualBlock(128, 256, 2,

Shortcut(128, 256, 2))

self.block6 = ResidualBlock(256, 256)

self.block7 = ResidualBlock(256, 512, 2,

Shortcut(256, 512, 2))

self.block8 = ResidualBlock(512, 512)

# fc

self.avg_pool = nn.AdaptiveAvgPool2d((1, 1))

self.fc = nn.Linear(512, class_num)

def forward(self, x):

o = self.pl(x)

o = self.block1(o)

o = self.block2(o)

o = self.block3(o)

o = self.block4(o)

o = self.block5(o)

o = self.block6(o)

o = self.block7(o)

o = self.block8(o)

o = self.avg_pool(o)

o = o.view(o.size(0), -1)

o = self.fc(o)

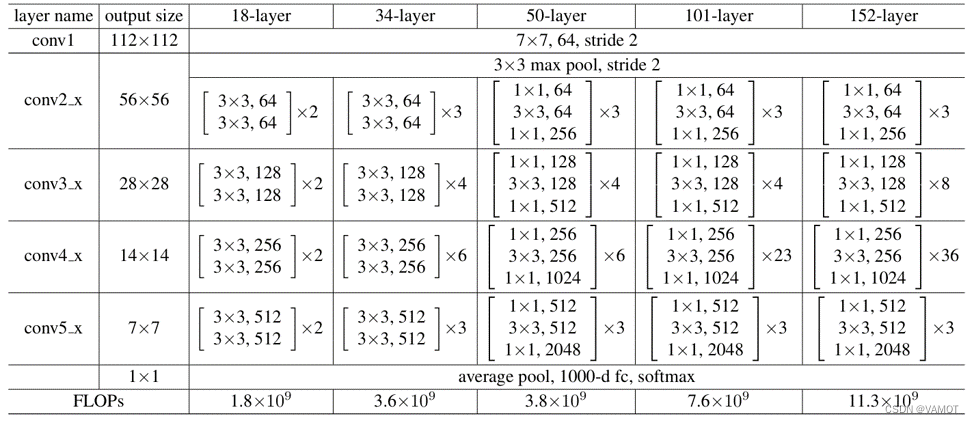

return o三、高复用性ResNet

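In the reusable ResNet, residual blocks of the same size are grouped into one Sequential layer. Different ResNet models can then be generated simply by changing the type of residual block and the number of blocks in each group.

ResNet parameters:

- class_num: the number of classes

- block: the residual block class

- blocks_num: the number of residual blocks for each size

- pre_filter_size: the kernel size of the convolution in the preprocessing layer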
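As a sanity check on how `block` and `blocks_num` determine the model, the conventional depth in a ResNet name can be recomputed from them. This is a small sketch in plain Python: `CONVS_PER_BLOCK` and `resnet_depth` are hypothetical names, and the count follows the usual convention of one stem convolution plus one fully connected layer, with shortcut convolutions not counted.

```python
# number of main-branch convolutions in each block type
CONVS_PER_BLOCK = {"ResidualBlock": 2, "Bottleneck": 3}


def resnet_depth(block_name: str, blocks_num: list) -> int:
    """Depth = stem conv + all main-branch convs + final fc layer."""
    return 1 + CONVS_PER_BLOCK[block_name] * sum(blocks_num) + 1


print(resnet_depth("ResidualBlock", [2, 2, 2, 2]))
print(resnet_depth("ResidualBlock", [3, 4, 6, 3]))
print(resnet_depth("Bottleneck", [3, 4, 6, 3]))
print(resnet_depth("Bottleneck", [3, 4, 23, 3]))
print(resnet_depth("Bottleneck", [3, 8, 36, 3]))
```

The five calls reproduce the depths 18, 34, 50, 101 and 152, which is a convenient way to check that a `blocks_num` list matches the intended variant.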

    from typing import Type, Union


    class ResNet(nn.Module):
        def __init__(self, class_num: int, block: Type[Union[ResidualBlock, Bottleneck]],
                     blocks_num: list[int], pre_filter_size: int = 7):
            super().__init__()
            assert len(blocks_num) == 4, "blocks_num should be a list with 4 elements"
            self.block = block
            # preprocessing layer
            self.pl = nn.Sequential(
                nn.Conv2d(3, 64, kernel_size=pre_filter_size, stride=2,
                          padding=pre_filter_size // 2),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=(3, 3), stride=2, padding=1)
            )
            # blocks: each group receives the previous group's output channels
            # (make_blocks is a method of this class, shown further below)
            self.blocks1 = self.make_blocks(64, 64, blocks_num[0])
            self.blocks2 = self.make_blocks(64 * self.block.expansion, 128, blocks_num[1])
            self.blocks3 = self.make_blocks(128 * self.block.expansion, 256, blocks_num[2])
            self.blocks4 = self.make_blocks(256 * self.block.expansion, 512, blocks_num[3])
            # fc
            self.avg_pool = nn.AdaptiveAvgPool2d((1, 1))
            self.fc = nn.Linear(512 * self.block.expansion, class_num)

        def forward(self, x):
            o = self.pl(x)
            o = self.blocks1(o)
            o = self.blocks2(o)
            o = self.blocks3(o)
            o = self.blocks4(o)
            o = self.avg_pool(o)
            o = o.view(o.size(0), -1)  # flatten to (batch, 512 * expansion)
            o = self.fc(o)
            return o

make_blocks parameters:

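- in_channels: the number of input channels of the residual block group

- size: the size of the residual blocks

- num: the number of residual blocks

Note: the first residual block group does not downsample. In a ResNet built from two-layer residual blocks, its first shortcut is still an Identity layer. In a ResNet built from Bottleneck blocks, the input and output channel counts differ, so its first shortcut is a convolutional layer with kernel size 1 and stride 1.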
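The rule in the note can be made concrete by listing, for each group, the channels, stride and shortcut type of its first block. The sketch below is plain Python and only mirrors the decision logic described in this post; `plan_stages` and the string labels are hypothetical, chosen for illustration.

```python
def plan_stages(expansion, sizes=(64, 128, 256, 512), stem_channels=64):
    """For each group, return (in_channels, out_channels, stride, shortcut type)
    of its first block."""
    plan = []
    in_channels = stem_channels
    for size in sizes:
        out_channels = size * expansion
        if in_channels == size:
            # only the first group: no downsampling
            stride = 1
            shortcut = "identity" if expansion == 1 else "conv1x1"
        else:
            # later groups: the first block halves H and W
            stride = 2
            shortcut = "conv1x1"
        plan.append((in_channels, out_channels, stride, shortcut))
        in_channels = out_channels  # next group consumes this group's output
    return plan


for row in plan_stages(expansion=1):
    print("two-layer ", row)
for row in plan_stages(expansion=4):
    print("bottleneck", row)
```

With `expansion=1` the first group keeps 64 channels and uses an identity shortcut; with `expansion=4` it goes from 64 to 256 channels, so a stride-1 convolutional shortcut is needed even though nothing is downsampled.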

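        # method of the ResNet class above
        def make_blocks(self, in_channels, size, num):
            out_channels = size * self.block.expansion
            if in_channels == size:
                # first group: no downsampling
                stride = 1
                if self.block.expansion == 1:
                    shortcut = nn.Identity()
                else:
                    # channel count changes, so a 1x1 conv with stride 1 is needed
                    shortcut = Shortcut(in_channels, out_channels, 1)
            else:
                # later groups: the first block downsamples
                stride = 2
                shortcut = Shortcut(in_channels, out_channels, 2)
            # build a list first so this also works where nn.Sequential.append is unavailable
            layers = [self.block(in_channels, size, stride=stride, shortcut=shortcut)]
            for _ in range(1, num):
                layers.append(self.block(out_channels, size))
            return nn.Sequential(*layers)

Usage example: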

    # lowercase names avoid shadowing the ResNet18 class defined earlier
    resnet18 = ResNet(10, ResidualBlock, [2, 2, 2, 2])
    resnet34 = ResNet(10, ResidualBlock, [3, 4, 6, 3])
    resnet50 = ResNet(10, Bottleneck, [3, 4, 6, 3])
    resnet101 = ResNet(10, Bottleneck, [3, 4, 23, 3])
    resnet152 = ResNet(10, Bottleneck, [3, 8, 36, 3])

IV. References

[1512.03385] Deep Residual Learning for Image Recognition (arxiv.org)
