import torch
import torch.nn as nn
import torch.nn.functional as F

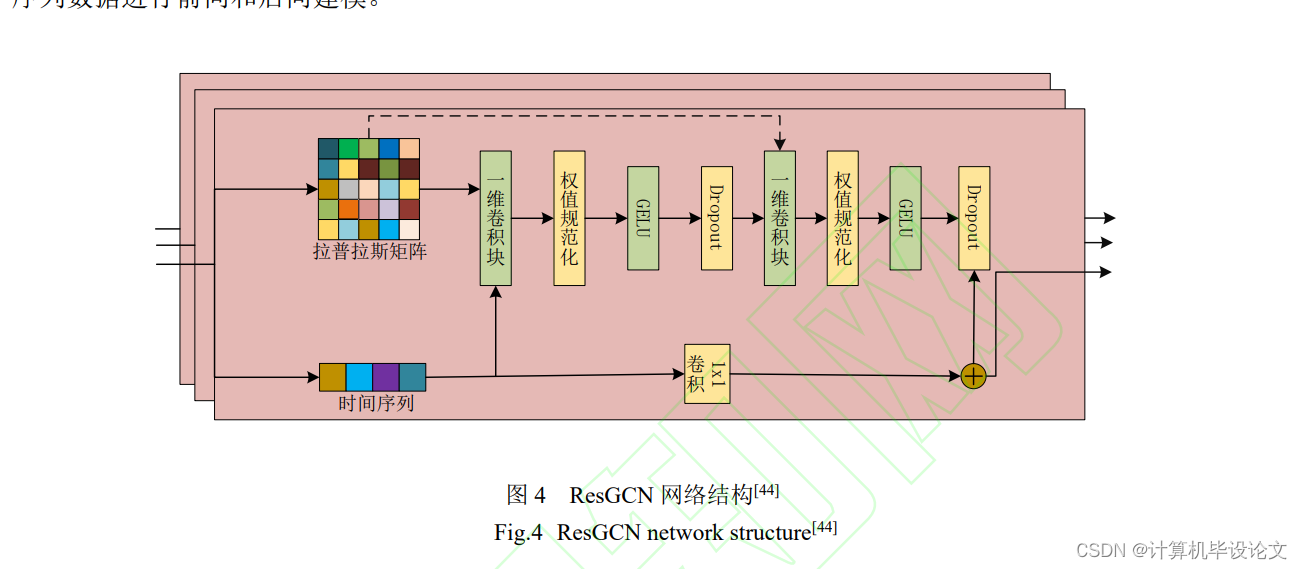

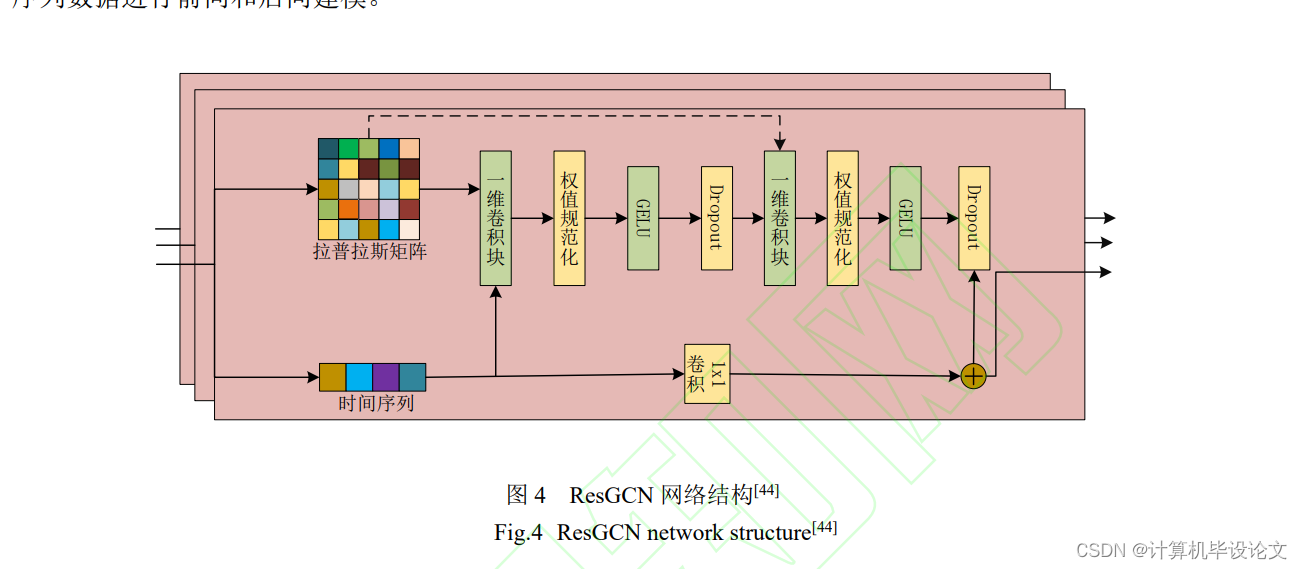

class ResGCNBlock(nn.Module):
    """Residual block built from two 1x1 Conv1d -> BatchNorm -> GELU -> Dropout stages."""

    def __init__(self, in_channels, out_channels, dropout=0.5):
        super().__init__()
        self.conv1 = nn.Conv1d(in_channels, out_channels, kernel_size=1)
        self.bn1 = nn.BatchNorm1d(out_channels)
        self.gelu = nn.GELU()
        self.dropout = nn.Dropout(dropout)
        self.conv2 = nn.Conv1d(out_channels, out_channels, kernel_size=1)
        self.bn2 = nn.BatchNorm1d(out_channels)

    def forward(self, x):
        # x has shape (batch, in_channels, length)
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = self.gelu(out)
        out = self.dropout(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = self.gelu(out)
        out = self.dropout(out)

        # The skip connection requires in_channels == out_channels,
        # otherwise the two tensors cannot be added.
        out = out + identity
        return out
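The residual addition in `ResGCNBlock` only works when `in_channels` equals `out_channels`, which holds in this example because every block maps `hidden_dim` to `hidden_dim`. As a minimal sketch of how differing widths could be handled, the block below adds a 1x1 convolution on the shortcut path. `ProjectedResBlock` is a hypothetical name and a simplified single-stage variant, not part of the original code.

```python
import torch
import torch.nn as nn


class ProjectedResBlock(nn.Module):
    """Hypothetical variant of ResGCNBlock with a projection shortcut."""

    def __init__(self, in_channels, out_channels, dropout=0.5):
        super().__init__()
        self.conv = nn.Conv1d(in_channels, out_channels, kernel_size=1)
        self.bn = nn.BatchNorm1d(out_channels)
        self.act = nn.GELU()
        self.dropout = nn.Dropout(dropout)
        # Project the shortcut with a 1x1 convolution only when the widths differ.
        if in_channels != out_channels:
            self.shortcut = nn.Conv1d(in_channels, out_channels, kernel_size=1)
        else:
            self.shortcut = nn.Identity()

    def forward(self, x):
        out = self.dropout(self.act(self.bn(self.conv(x))))
        return out + self.shortcut(x)


block = ProjectedResBlock(in_channels=5, out_channels=64)
y = block(torch.rand(16, 5, 50))
print(y.shape)  # torch.Size([16, 64, 50])
```

With equal widths the shortcut is `nn.Identity()`, so the block reduces to a plain residual connection.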

class ResGCN(nn.Module):

    def __init__(self, input_dim, hidden_dim, output_dim, num_blocks, dropout=0.5):
        super().__init__()
        self.initial_conv = nn.Conv1d(input_dim, hidden_dim, kernel_size=1)
        self.blocks = nn.ModuleList(
            [ResGCNBlock(hidden_dim, hidden_dim, dropout) for _ in range(num_blocks)]
        )
        self.final_conv = nn.Conv1d(hidden_dim, output_dim, kernel_size=1)

    def forward(self, x):
        out = self.initial_conv(x)
        for block in self.blocks:
            out = block(out)
        out = self.final_conv(out)
        return out

# Example usage
input_dim = 5    # Number of input features
hidden_dim = 64  # Hidden layer size
output_dim = 1   # Output size
num_blocks = 3   # Number of ResGCN blocks
dropout = 0.5    # Dropout rate

model = ResGCN(input_dim, hidden_dim, output_dim, num_blocks, dropout)

# Sample data with shape (batch_size, input_dim, sequence_length)
batch_size = 16
sequence_length = 50
x = torch.rand(batch_size, input_dim, sequence_length)

# Forward pass
output = model(x)
print(output.shape)  # torch.Size([16, 1, 50])

import torch.optim as optim

# Hyperparameters
learning_rate = 0.001
num_epochs = 100

# Loss and optimizer
criterion = nn.MSELoss()
optimizer = optim.Adam(model.parameters(), lr=learning_rate)

# Random targets stand in for real labels in this example. They are created
# once so that every epoch optimizes against the same values.
train_targets = torch.rand(batch_size, output_dim, sequence_length)

# Training loop
model.train()
for epoch in range(num_epochs):
    optimizer.zero_grad()
    outputs = model(x)
    loss = criterion(outputs, train_targets)
    loss.backward()
    optimizer.step()

    if (epoch + 1) % 10 == 0:
        print(f'Epoch [{epoch+1}/{num_epochs}], Loss: {loss.item():.4f}')

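from sklearn.metrics import mean_squared_error, mean_absolute_error

model.eval()
with torch.no_grad():
    predictions = model(x)

# Random targets stand in for real labels in this example
targets = torch.rand(batch_size, output_dim, sequence_length)

# scikit-learn metrics reject 3-D arrays, so flatten both tensors first
predictions = predictions.cpu().numpy().reshape(-1)
targets = targets.cpu().numpy().reshape(-1)

# Take the square root of the MSE directly instead of passing squared=False,
# which newer scikit-learn releases no longer accept
rmse = mean_squared_error(targets, predictions) ** 0.5
mae = mean_absolute_error(targets, predictions)

print(f'RMSE: {rmse:.4f}, MAE: {mae:.4f}')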
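The same two metrics can also be computed directly on tensors, which avoids the NumPy conversion and any dependence on the scikit-learn version. The following is a minimal sketch; `regression_metrics` is a hypothetical helper name, not something defined in the original code.

```python
import torch


def regression_metrics(predictions, targets):
    """Return (RMSE, MAE) as Python floats, computed over all elements."""
    diff = predictions - targets
    rmse = torch.sqrt(torch.mean(diff ** 2)).item()
    mae = torch.mean(torch.abs(diff)).item()
    return rmse, mae


# Small hand-checkable example: the absolute errors are 0, 3 and 4.
preds = torch.tensor([1.0, 5.0, 6.0])
targs = torch.tensor([1.0, 2.0, 2.0])
rmse, mae = regression_metrics(preds, targs)
print(f'RMSE: {rmse:.4f}, MAE: {mae:.4f}')  # RMSE: 2.8868, MAE: 2.3333
```

Because the reduction runs over every element, the helper accepts tensors of any matching shape, including the `(batch_size, output_dim, sequence_length)` outputs used above.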
