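I. Default anchor boxes

YOLOv5 ships with preset anchor boxes tuned for the COCO dataset. The model configuration files (*.yaml) define the anchor sizes for a 640×640 input image (taking yolov5s.yaml as an example):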

# anchors

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

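- [116,90, 156,198, 373,326] # P5/32

The anchors parameter has three rows. Each row holds 6 values, i.e. three (width, height) pairs, for 9 anchors in total, and each row applies to a different feature map:

1. The first row holds the anchors for the largest feature map (P3, stride 8).

2. The second row holds the anchors for the middle feature map (P4, stride 16).

3. The third row holds the anchors for the smallest feature map (P5, stride 32).

In object detection, small objects are usually detected on the large feature maps, because those maps retain more information about small objects. The anchors on the large feature map are therefore small, while the anchors on the small feature map are large and are used to detect large objects.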
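To make the relation between stride, feature-map size, and anchor size concrete, here is a minimal sketch. It only restates the yolov5s.yaml values shown above; the names `ANCHORS`, `IMG_SIZE`, and `describe` are hypothetical helpers for illustration, not part of YOLOv5.

```python
# Anchors from yolov5s.yaml, keyed by the stride of the feature map they belong to.
IMG_SIZE = 640
ANCHORS = {
    8: [(10, 13), (16, 30), (33, 23)],        # P3/8
    16: [(30, 61), (62, 45), (59, 119)],      # P4/16
    32: [(116, 90), (156, 198), (373, 326)],  # P5/32
}


def describe(anchors, img_size):
    """Return (stride, grid_size, anchors_in_grid_cells) for every detection level."""
    rows = []
    for stride, boxes in sorted(anchors.items()):
        grid = img_size // stride  # feature map is grid x grid cells
        in_cells = [(w / stride, h / stride) for w, h in boxes]
        rows.append((stride, grid, in_cells))
    return rows


rows = describe(ANCHORS, IMG_SIZE)
for stride, grid, in_cells in rows:
    print(f"stride {stride:>2}: {grid}x{grid} feature map, anchors in cells: {in_cells}")
```

At stride 8 the feature map is 80×80 and the anchors are only a cell or two wide, which is why that level is the one responsible for small objects.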

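II. Custom anchor boxes

1. Automatic anchor computation during training

YOLOv5 does not rely on the default anchors alone. Before training starts, it inspects the dataset labels and computes the best possible recall (BPR) of the default anchors against them. If the BPR is above 0.98, the anchors are kept as they are; otherwise, anchors that fit the dataset are recomputed.

The function that checks whether the anchors are suitable is in utils/autoanchor.py: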

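def check_anchors(dataset, model, thr=4.0, imgsz=640):

Here thr is the largest ratio allowed between a label box's width (or height) and an anchor's width (or height) for the two to count as a match. By default it is taken from the "anchor_t" value in the hyperparameter file hyp.scratch.yaml.

The core of the check is: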

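    def metric(k):  # compute metric
        r = wh[:, None] / k[None]
        x = torch.min(r, 1. / r).min(2)[0]  # ratio metric
        best = x.max(1)[0]  # best_x
        aat = (x > 1. / thr).float().sum(1).mean()  # anchors above threshold
        bpr = (best > 1. / thr).float().mean()  # best possible recall
        return bpr, aat

    bpr, aat = metric(m.anchor_grid.clone().cpu().view(-1, 2))

Two metrics here deserve an explanation:

bpr (best possible recall): the fraction of label boxes for which at least one anchor passes the threshold, i.e. whose width and height both lie within a factor of thr of that anchor.

aat (anchors above threshold): the average number of anchors per label box that pass the threshold.

bpr is the value that decides whether the anchors need to be recomputed (whether it falls below 0.98).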
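The same two metrics can be reproduced without PyTorch. The following is a minimal sketch in plain Python that mirrors the logic of the metric above; the function name `anchor_metrics` and the sample label sizes are made up for illustration and are not part of YOLOv5.

```python
def anchor_metrics(wh, anchors, thr=4.0):
    """Return (bpr, aat) for label sizes `wh` checked against `anchors`.

    wh and anchors are lists of (width, height) pairs in pixels.
    """
    matched_labels = 0   # labels with at least one anchor above the threshold
    total_above = 0      # anchors above the threshold, summed over all labels
    for w, h in wh:
        above = 0
        for aw, ah in anchors:
            rw, rh = w / aw, h / ah
            # Worst agreement over width and height, always in (0, 1].
            x = min(rw, 1 / rw, rh, 1 / rh)
            if x > 1 / thr:
                above += 1
        total_above += above
        matched_labels += above > 0
    n = len(wh)
    return matched_labels / n, total_above / n


# Hypothetical label sizes; the last one is a very elongated box.
labels = [(12, 15), (60, 50), (600, 40)]
anchors = [(10, 13), (62, 45), (373, 326)]
bpr, aat = anchor_metrics(labels, anchors, thr=4.0)
print(f"bpr={bpr:.3f}, aat={aat:.3f}")
```

In this toy case the 600×40 box matches no anchor, because its height is more than 4 times smaller than the only anchor whose width is close, so bpr drops to 2/3.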

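New anchors that fit the dataset's label boxes are computed with k-means clustering, followed by a genetic-algorithm refinement. The code is the kmean_anchors() function in utils/autoanchor.py.

The parameters of kmean_anchors() are explained briefly below (the code also carries English comments):

1. path: a yaml file holding the dataset paths and related information (for example coco128.yaml), or an already loaded dataset object (this is what YOLOv5 passes during the automatic check: the label information is read first and then processed)

2. n: the number of anchors; the default is 9

3. img_size: the image size. Label box widths and heights are scaled to img_size before the anchors are computed; the default is 640

4. thr: the largest ratio allowed between a label box's width or height and an anchor's width or height; the default is 4.0. During the automatic check, the value comes from "anchor_t" in the hyperparameter file hyp.scratch.yaml instead

5. gen: the number of generations for the genetic-algorithm refinement that follows k-means; the default is 1000

6. verbose: whether to print all intermediate results; the default is True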
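To illustrate the clustering idea behind these parameters, here is a minimal sketch of plain k-means over (width, height) pairs. It is not YOLOv5's implementation, which differs in its details and adds the genetic refinement; the function `kmeans_wh`, its deterministic initialization, and the sample boxes are assumptions made for this example.

```python
def kmeans_wh(points, n, iters=50):
    """Cluster (width, height) pairs into n anchors with plain k-means."""
    # Deterministic init: sort by area and take evenly spaced samples.
    pts = sorted(points, key=lambda p: p[0] * p[1])
    centers = [pts[(2 * i + 1) * len(pts) // (2 * n)] for i in range(n)]
    for _ in range(iters):
        clusters = [[] for _ in range(n)]
        for w, h in points:
            # Assign each box to the nearest center (squared Euclidean distance).
            idx = min(
                range(n),
                key=lambda i: (w - centers[i][0]) ** 2 + (h - centers[i][1]) ** 2,
            )
            clusters[idx].append((w, h))
        new_centers = []
        for members, old in zip(clusters, centers):
            if members:
                mean_w = sum(p[0] for p in members) / len(members)
                mean_h = sum(p[1] for p in members) / len(members)
                new_centers.append((mean_w, mean_h))
            else:
                new_centers.append(old)  # keep an empty cluster's center unchanged
        if new_centers == centers:
            break  # converged
        centers = new_centers
    # Return anchors ordered from small to large area.
    return sorted(centers, key=lambda c: c[0] * c[1])


# Hypothetical label sizes forming three obvious groups.
boxes = [(10, 12), (12, 14), (50, 60), (54, 64), (200, 180), (220, 200)]
anchors = kmeans_wh(boxes, n=3)
print(anchors)
```

Each resulting center is the mean width and height of one group of boxes, which is what makes the anchors follow the dataset's own size distribution.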

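If you do not want anchors to be computed automatically, use the --noautoanchor option, which train.py defines as:

parser.add_argument('--noautoanchor', action='store_true', help='disable autoanchor check')

2. Computing anchors manually before training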

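The case that matters is this: training with YOLOv5 gives poor results (ruling out other causes and considering only the preset anchors), yet when YOLOv5 checked the default anchors, the best possible recall was above 0.98, so no anchors were recomputed automatically. In that situation you can compute the anchors yourself. (Even if the largest aspect ratio in your dataset is below 4, the default anchors are not necessarily the best fit.) You can inspect the width and height distribution of your objects in labels.jpg; if the ratios exceed 4:1, define custom anchors, because sizes tuned for COCO do not necessarily match the boxes in your own dataset.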
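Besides looking at labels.jpg, the ratios can be tallied directly from the label files. A minimal sketch, assuming YOLO-format label lines (class, x_center, y_center, width, height, all normalized to the image size); the function `aspect_ratios` and the sample lines are hypothetical.

```python
def aspect_ratios(label_lines, img_w, img_h):
    """Return the aspect ratio (long side / short side) of every label box."""
    ratios = []
    for line in label_lines:
        parts = line.split()
        if len(parts) < 5:
            continue  # skip blank or malformed lines
        w = float(parts[3]) * img_w  # normalized width -> pixels
        h = float(parts[4]) * img_h  # normalized height -> pixels
        if w > 0 and h > 0:
            ratios.append(max(w / h, h / w))
    return ratios


# Hypothetical label lines for a 640x640 image.
lines = [
    "0 0.5 0.5 0.50 0.10",
    "1 0.3 0.3 0.20 0.20",
    "0 0.7 0.2 0.05 0.40",
]
ratios = aspect_ratios(lines, img_w=640, img_h=640)
over_4 = sum(r > 4.0 for r in ratios)
print(f"max ratio {max(ratios):.1f}, {over_4} of {len(ratios)} boxes exceed 4:1")
```

For a real dataset the same function would be applied to each label file together with the size of its image, since normalized widths and heights only give the true ratio once they are converted back to pixels.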

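First, write a small program that collects the aspect ratios of all label boxes in your training set, and check which range most of them fall in and what the largest ratio is. For example, if the largest aspect ratio in your dataset reaches 5:1 (or even 10:1), the anchors clearly need to be recomputed, because the default setting tuned for COCO assumes a maximum ratio of 4:1. Then create a new Python file, test.py, in the YOLOv5 project and compute the anchors manually: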

import utils.autoanchor as autoAC

# 对数据集重新计算 anchors

new_anchors = autoAC.kmean_anchors('./data/mydata.yaml', 9, 640, 5.0, 1000, True)

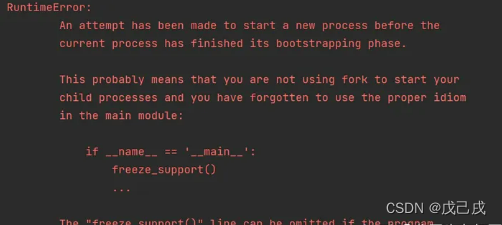

print(new_anchors)问题1:你可能会出现报错,例如下:

RuntimeError:

An attempt has been made to start a new process before the

current process has finished its bootstrapping phase.

This probably means that you are not using fork to start your

child processes and you have forgotten to use the proper idiom

in the main module:

    if __name__ == '__main__':
        freeze_support()
        ...

The "freeze_support()" line can be omitted if the program

is not going to be frozen to produce an executable.

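Cause:

When the Python interpreter runs a multiprocessing program on Windows, each child process reads the current Python file in order to set itself up.

While reading that file, the child reaches the code that creates child processes and tries to create new ones in turn, so the program would end up creating processes recursively.

Fix:

As the message suggests, move the code that creates child processes inside an if __name__ == '__main__': block. This statement checks whether the file is being run as the main program, and the code runs only in that case.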

import utils.autoanchor as autoAC

if __name__ == '__main__':
    # Recompute the anchors for the dataset
    new_anchors = autoAC.kmean_anchors('./data/pk.yaml', 9, 640, 5.0, 1000, True)
    print(new_anchors)

Running the script prints the computed anchors:

Scanning C:\Users\49626\Desktop\yolov5-master\pk\labels\train... 588 images, 8 backgrounds, 0 corrupt: 100%|██████████| 591/591 [00:08<00:00, 65.75it/s]

WARNING Cache directory C:\Users\49626\Desktop\yolov5-master\pk\labels is not writeable: [WinError 183] : 'C:\\Users\\49626\\Desktop\\yolov5-master\\pk\\labels\\train.cache.npy' -> 'C:\\Users\\49626\\Desktop\\yolov5-master\\pk\\labels\\train.cache'

AutoAnchor: WARNING Extremely small objects found: 5 of 1195 labels are <3 pixels in size

AutoAnchor: Running kmeans for 9 anchors on 1195 points...

0%| | 0/1000 [00:00<?, ?it/s]AutoAnchor: thr=0.20: 0.9808 best possible recall, 5.49 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.317/0.708-mean/best, past_thr=0.455-mean: 19,13, 50,25, 87,41, 168,30, 152,74, 260,48, 269,106, 328,158, 510,220

AutoAnchor: thr=0.20: 0.9808 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.709-mean/best, past_thr=0.455-mean: 19,13, 49,24, 86,41, 171,30, 155,74, 270,49, 269,104, 326,162, 512,215

AutoAnchor: thr=0.20: 0.9841 best possible recall, 5.47 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.710-mean/best, past_thr=0.453-mean: 18,12, 49,23, 86,43, 180,31, 256,44, 151,75, 267,98, 326,153, 505,215

AutoAnchor: thr=0.20: 0.9841 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.710-mean/best, past_thr=0.453-mean: 18,12, 50,22, 87,43, 180,31, 151,75, 263,44, 262,95, 333,156, 535,207

AutoAnchor: thr=0.20: 0.9841 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.710-mean/best, past_thr=0.454-mean: 18,11, 51,22, 92,42, 185,30, 151,74, 266,45, 260,93, 339,156, 539,207

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.48 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.711-mean/best, past_thr=0.454-mean: 17,11, 52,22, 91,41, 175,29, 151,75, 257,47, 265,92, 331,158, 539,207

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.712-mean/best, past_thr=0.455-mean: 17,11, 51,23, 91,41, 177,30, 153,74, 257,47, 267,92, 334,158, 547,206

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.45 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.712-mean/best, past_thr=0.454-mean: 17,11, 47,23, 86,40, 186,30, 145,74, 254,45, 260,88, 334,149, 517,223

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.44 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.713-mean/best, past_thr=0.453-mean: 17,11, 47,23, 85,40, 196,30, 246,45, 147,75, 263,87, 337,150, 512,222

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.45 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.314/0.713-mean/best, past_thr=0.453-mean: 17,11, 48,23, 86,40, 198,29, 246,45, 147,75, 263,88, 337,150, 510,222

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.45 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.314/0.713-mean/best, past_thr=0.453-mean: 17,11, 48,23, 85,41, 197,29, 149,72, 248,45, 269,88, 337,150, 495,227

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.714-mean/best, past_thr=0.453-mean: 17,12, 48,22, 87,39, 202,29, 149,72, 248,45, 260,88, 324,148, 489,224

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.714-mean/best, past_thr=0.453-mean: 17,12, 48,22, 87,39, 202,29, 149,72, 242,45, 264,89, 323,150, 489,226

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.45 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.714-mean/best, past_thr=0.453-mean: 17,12, 48,22, 86,40, 203,29, 149,71, 243,45, 264,90, 323,155, 484,223

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.714-mean/best, past_thr=0.454-mean: 17,12, 48,22, 86,39, 200,30, 151,72, 242,47, 260,90, 329,153, 468,230

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.715-mean/best, past_thr=0.455-mean: 17,12, 48,22, 89,38, 199,29, 233,47, 152,72, 263,91, 325,153, 491,225

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.46 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.316/0.715-mean/best, past_thr=0.455-mean: 16,12, 48,22, 89,38, 199,29, 232,47, 153,72, 262,91, 324,152, 493,225

AutoAnchor: thr=0.20: 0.9900 best possible recall, 5.48 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.317/0.715-mean/best, past_thr=0.455-mean: 17,12, 48,22, 87,39, 201,29, 153,71, 232,47, 261,92, 323,151, 495,223

AutoAnchor: thr=0.20: 0.9958 best possible recall, 5.41 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.714-mean/best, past_thr=0.454-mean: 15,12, 46,21, 89,37, 213,29, 159,72, 238,51, 261,92, 323,151, 510,216

AutoAnchor: thr=0.20: 0.9958 best possible recall, 5.42 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.314/0.715-mean/best, past_thr=0.455-mean: 15,13, 45,21, 85,38, 210,29, 166,70, 234,51, 261,86, 321,151, 518,215

AutoAnchor: thr=0.20: 0.9958 best possible recall, 5.42 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.315/0.715-mean/best, past_thr=0.455-mean: 15,13, 45,21, 85,38, 207,30, 166,71, 235,50, 263,85, 315,150, 518,212

AutoAnchor: thr=0.20: 0.9983 best possible recall, 5.35 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.714-mean/best, past_thr=0.455-mean: 13,13, 48,21, 84,38, 189,28, 261,50, 178,75, 257,75, 310,154, 518,211

AutoAnchor: thr=0.20: 0.9992 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.715-mean/best, past_thr=0.456-mean: 11,12, 47,21, 88,35, 184,26, 163,66, 261,48, 254,74, 313,153, 547,211

AutoAnchor: thr=0.20: 0.9992 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.715-mean/best, past_thr=0.457-mean: 11,12, 47,21, 88,35, 182,26, 163,66, 261,48, 254,74, 315,153, 551,210

AutoAnchor: thr=0.20: 0.9992 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.715-mean/best, past_thr=0.457-mean: 11,12, 47,21, 88,35, 182,26, 162,66, 262,48, 255,74, 316,153, 551,210

AutoAnchor: thr=0.20: 0.9992 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.716-mean/best, past_thr=0.457-mean: 11,12, 47,21, 88,35, 184,26, 162,66, 258,48, 254,75, 318,150, 552,208

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.35 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.717-mean/best, past_thr=0.456-mean: 11,12, 48,20, 88,36, 184,26, 161,64, 258,47, 247,74, 317,150, 552,209

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.717-mean/best, past_thr=0.457-mean: 11,12, 47,20, 88,36, 185,26, 161,64, 257,47, 247,74, 318,149, 552,209

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7172: 16%|█▋ | 165/1000 [00:00<00:00, 1640.33it/s]AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.31 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.309/0.719-mean/best, past_thr=0.454-mean: 10,14, 46,19, 76,42, 177,26, 160,71, 256,47, 240,76, 313,153, 571,201

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.308/0.720-mean/best, past_thr=0.452-mean: 10,14, 50,20, 78,42, 183,26, 147,75, 275,44, 249,76, 325,145, 539,201

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.31 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.308/0.721-mean/best, past_thr=0.453-mean: 10,14, 50,20, 78,41, 184,26, 147,75, 278,44, 250,76, 327,144, 545,203

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.28 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.308/0.724-mean/best, past_thr=0.456-mean: 9,14, 44,20, 76,41, 174,25, 157,64, 282,47, 250,79, 298,144, 548,210

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.28 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.309/0.726-mean/best, past_thr=0.457-mean: 9,14, 44,19, 79,41, 171,24, 157,65, 280,48, 246,81, 302,140, 565,205

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.29 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.731-mean/best, past_thr=0.459-mean: 9,15, 46,19, 79,39, 171,23, 157,64, 272,48, 252,82, 276,137, 525,209

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.30 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.731-mean/best, past_thr=0.459-mean: 9,15, 47,19, 81,40, 171,24, 154,63, 269,48, 252,83, 282,135, 537,206

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.27 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.309/0.732-mean/best, past_thr=0.458-mean: 8,15, 45,18, 81,39, 179,24, 155,64, 269,50, 259,82, 276,140, 550,202

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.30 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.732-mean/best, past_thr=0.460-mean: 8,16, 45,17, 84,36, 181,25, 143,65, 272,50, 258,76, 269,129, 551,208

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.732-mean/best, past_thr=0.460-mean: 8,16, 46,18, 82,36, 179,25, 142,63, 262,51, 261,74, 270,129, 545,214

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.733-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,36, 188,25, 143,63, 245,51, 262,74, 270,129, 516,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.733-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,37, 189,25, 145,64, 244,50, 262,74, 267,130, 510,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.733-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,37, 188,25, 144,64, 244,50, 262,74, 266,129, 511,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.734-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,37, 187,25, 145,65, 245,50, 262,74, 266,129, 511,214

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.734-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,37, 187,25, 145,65, 245,50, 262,74, 266,129, 511,214

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.734-mean/best, past_thr=0.460-mean: 9,17, 45,18, 81,37, 188,25, 145,64, 245,50, 261,75, 265,128, 511,214

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.734-mean/best, past_thr=0.459-mean: 8,16, 45,17, 80,36, 181,26, 142,68, 251,49, 260,76, 274,133, 526,204

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.735-mean/best, past_thr=0.457-mean: 8,16, 43,17, 78,36, 188,27, 142,72, 241,43, 240,85, 293,138, 511,198

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7348: 38%|███▊ | 376/1000 [00:00<00:00, 1908.21it/s]AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.735-mean/best, past_thr=0.457-mean: 8,16, 43,17, 78,36, 188,27, 236,43, 142,72, 238,85, 293,138, 509,197

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.735-mean/best, past_thr=0.458-mean: 8,16, 43,17, 78,36, 188,27, 142,72, 236,43, 237,85, 293,139, 508,196

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.735-mean/best, past_thr=0.458-mean: 8,16, 43,17, 78,36, 188,28, 143,72, 237,44, 240,85, 295,137, 508,197

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.735-mean/best, past_thr=0.457-mean: 8,16, 43,17, 78,36, 187,27, 236,43, 143,73, 242,85, 295,139, 505,197

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.735-mean/best, past_thr=0.457-mean: 8,16, 43,17, 78,37, 187,27, 143,73, 237,44, 247,84, 295,139, 500,198

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.735-mean/best, past_thr=0.457-mean: 8,16, 43,17, 78,36, 187,27, 142,73, 238,44, 248,84, 295,138, 499,198

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.31 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.311/0.736-mean/best, past_thr=0.459-mean: 8,15, 45,18, 81,37, 190,27, 232,43, 149,69, 267,86, 288,137, 471,203

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.31 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.460-mean: 8,15, 45,18, 79,37, 190,28, 231,43, 152,69, 264,86, 289,138, 469,203

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.459-mean: 8,15, 44,18, 79,37, 190,28, 231,43, 153,70, 262,86, 292,138, 466,203

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.460-mean: 8,15, 45,18, 80,37, 189,28, 226,44, 153,70, 262,86, 291,139, 470,208

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 79,36, 190,28, 224,43, 152,70, 264,86, 288,138, 467,208

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 79,36, 192,27, 225,44, 152,69, 247,84, 289,138, 475,217

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.32 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.312/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 79,36, 192,27, 225,44, 152,69, 248,84, 289,138, 476,216

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7362: 59%|█████▉ | 594/1000 [00:00<00:00, 2025.15it/s]AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 79,36, 190,27, 224,44, 152,68, 247,84, 289,137, 473,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 80,37, 189,27, 223,44, 152,68, 247,84, 288,137, 473,216

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 188,27, 224,43, 153,67, 247,83, 287,137, 475,216

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 188,27, 224,43, 153,67, 247,83, 287,137, 475,216

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 188,27, 224,43, 153,67, 247,83, 286,137, 475,216

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 80,37, 188,27, 225,43, 153,67, 248,83, 286,137, 473,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.460-mean: 8,15, 44,18, 80,37, 188,27, 224,43, 152,68, 248,83, 288,136, 476,215

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7364: 84%|████████▍ | 842/1000 [00:00<00:00, 2203.49it/s]AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.33 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.736-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 188,27, 224,43, 153,68, 249,83, 288,136, 476,215

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 187,27, 221,43, 154,67, 249,83, 285,136, 480,213

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.461-mean: 8,15, 44,18, 80,37, 186,27, 221,43, 154,67, 249,83, 285,136, 481,213

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.460-mean: 8,15, 44,18, 81,38, 187,26, 219,43, 152,67, 247,84, 282,136, 498,212

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.460-mean: 8,15, 43,18, 80,38, 190,26, 219,43, 152,68, 247,83, 283,132, 497,210

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.34 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.460-mean: 8,15, 43,18, 81,38, 191,26, 221,43, 150,68, 246,82, 284,132, 492,212

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.35 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.460-mean: 8,15, 43,18, 81,38, 191,26, 221,43, 149,67, 247,82, 284,131, 486,211

AutoAnchor: Evolving anchors with Genetic Algorithm: fitness = 0.7370: 100%|██████████| 1000/1000 [00:00<00:00, 2132.71it/s]

AutoAnchor: thr=0.20: 1.0000 best possible recall, 5.35 anchors past thr

AutoAnchor: n=9, img_size=640, metric_all=0.313/0.737-mean/best, past_thr=0.460-mean: 8,15, 43,18, 81,38, 191,26, 221,43, 149,67, 247,82, 284,131, 486,211

[[ 8.0319 15.323]

[ 42.802 17.647]

[ 81.306 37.98]

[ 191.47 26.115]

[ 220.98 42.882]

[ 149.14 66.916]

[ 247.44 82.486]

[ 283.74 131.36]

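[ 485.51 211.37]]

The 9 new anchors in the output are computed from your own dataset. Copy them, in order, into the configuration file (*.yaml) you are using (for example yolov5s.yaml), and then retrain.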
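Copying the values by hand is error-prone, so the rounding and grouping can be scripted. A minimal sketch that turns the 9 anchors printed above into the three rows of the anchors section; the helper `anchors_to_yaml` is hypothetical, and it assumes the anchors are already ordered from small to large, as they are in the output above.

```python
def anchors_to_yaml(anchors, strides=(8, 16, 32)):
    """Format (width, height) pairs as the `anchors:` block of a model yaml."""
    per_row = len(anchors) // len(strides)  # 3 anchors per detection level
    lines = ["anchors:"]
    for i, stride in enumerate(strides):
        row = anchors[i * per_row:(i + 1) * per_row]
        flat = ", ".join(f"{round(w)},{round(h)}" for w, h in row)
        lines.append(f"  - [{flat}]  # P{i + 3}/{stride}")
    return "\n".join(lines)


# The anchors printed by the run above.
new_anchors = [
    (8.0319, 15.323), (42.802, 17.647), (81.306, 37.98),
    (191.47, 26.115), (220.98, 42.882), (149.14, 66.916),
    (247.44, 82.486), (283.74, 131.36), (485.51, 211.37),
]
yaml_text = anchors_to_yaml(new_anchors)
print(yaml_text)
```

The rounded values match the last "AutoAnchor: n=9" line of the log, and the printed block can be pasted over the anchors section of the model configuration file.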
