1. A custom 2D convolution operator

import torch
import torch.nn as nn

class Conv2D(nn.Module):
    def __init__(self, kernel_size, stride=1, padding=0, weight_value=[[0., 1.], [2., 3.]]):
        super(Conv2D, self).__init__()
        # kernel_size is accepted but unused: the kernel shape comes from weight_value
        self.weight = nn.Parameter(torch.tensor(weight_value, dtype=torch.float32), requires_grad=True)
        # stride (stored, but not applied in this basic version)
        self.stride = stride
        # zero padding (stored, but not applied in this basic version)
        self.padding = padding

    def forward(self, X):
        """
        Input:
            - X: input matrix, shape=[B, M, N], where B is the number of samples
        Output:
            - output: output matrix, shape=[B, M-U+1, N-V+1]
        """
        u, v = self.weight.shape
        output = torch.zeros(X.shape[0], X.shape[1] - u + 1, X.shape[2] - v + 1)
        for i in range(output.shape[1]):
            for j in range(output.shape[2]):
                # multiply the current window by the kernel element-wise and sum
                output[:, i, j] = torch.sum(X[:, i:i + u, j:j + v] * self.weight, dim=[1, 2])
        return output

torch.manual_seed(100)
inputs = torch.tensor([[[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]])
conv2d = Conv2D(kernel_size=2)
outputs = conv2d(inputs)
print("input :{},\noutput :{}".format(inputs, outputs))

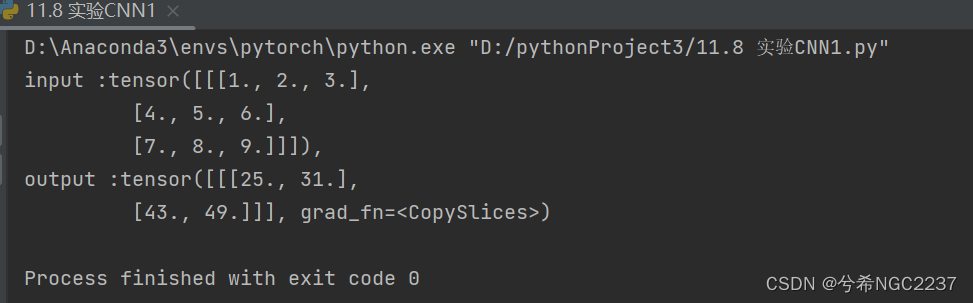

输出结果:
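As a sanity check on the example above, the same input and kernel can be passed to `torch.nn.functional.conv2d`, which also computes a cross-correlation (no kernel flip). This is a minimal sketch; the variable names `x`, `k` and `ref` are chosen here for illustration and do not appear in the original code.

```python
import torch
import torch.nn.functional as F

# Same 3x3 input and 2x2 kernel as in the example above.
x = torch.tensor([[[1., 2., 3.], [4., 5., 6.], [7., 8., 9.]]])  # [B, M, N]
k = torch.tensor([[0., 1.], [2., 3.]])                          # [U, V]

# F.conv2d expects input [B, C, H, W] and weight [out_C, in_C, U, V],
# so add singleton channel dimensions and drop the channel axis afterwards.
ref = F.conv2d(x.unsqueeze(1), k.view(1, 1, 2, 2)).squeeze(1)
print(ref)  # tensor([[[25., 31.], [43., 49.]]])
```

For instance, the top-left value is 0*1 + 1*2 + 2*4 + 3*5 = 25.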

2. A custom 2D convolution operator with stride and zero padding

import torch
import torch.nn as nn

# 2D convolution operator with stride and zero padding
class Conv2D(nn.Module):
    def __init__(self, kernel_size, stride=1, padding=0, weight_value=1.0):
        super(Conv2D, self).__init__()
        self.weight = nn.Parameter(torch.full((kernel_size, kernel_size), fill_value=weight_value, dtype=torch.float32), requires_grad=True)
        # stride
        self.stride = stride
        # zero padding
        self.padding = padding

    def forward(self, X):
        # zero padding: copy X into the centre of a larger all-zero tensor
        new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])
        new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X
        u, v = self.weight.shape
        # dimension 1 is the height, dimension 2 is the width
        output_h = (new_X.shape[1] - u) // self.stride + 1
        output_w = (new_X.shape[2] - v) // self.stride + 1
        output = torch.zeros([X.shape[0], output_h, output_w])
        for i in range(0, output.shape[1]):
            for j in range(0, output.shape[2]):
                # the window must be multiplied by the kernel; with the default all-ones
                # weight the result equals a plain sum, which hid the missing factor
                output[:, i, j] = torch.sum(
                    new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * self.weight,
                    dim=[1, 2])
        return output

inputs = torch.randn(size=[2, 8, 8])
conv2d_padding = Conv2D(kernel_size=3, padding=1)
outputs = conv2d_padding(inputs)
print("When kernel_size=3, padding=1 stride=1, input's shape: {}, output's shape: {}".format(inputs.shape, outputs.shape))

conv2d_stride = Conv2D(kernel_size=3, stride=2, padding=1)
outputs = conv2d_stride(inputs)
print("When kernel_size=3, padding=1 stride=2, input's shape: {}, output's shape: {}".format(inputs.shape, outputs.shape))

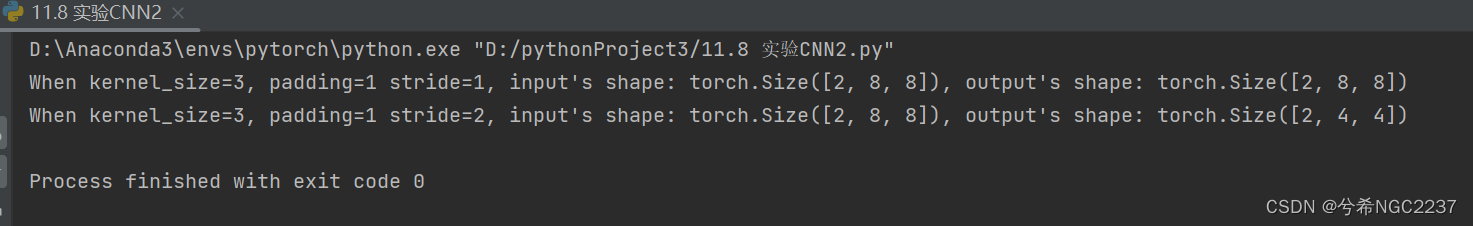

输出结果:
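The output shapes printed above follow from the size computation inside `forward`. A small sketch of that arithmetic, using a hypothetical helper `conv_output_size` (not part of the original code), reproduces the two cases for an 8x8 input:

```python
def conv_output_size(m, u, stride=1, padding=0):
    """Output length along one axis for input length m and kernel length u."""
    return (m + 2 * padding - u) // stride + 1

# kernel_size=3, padding=1, stride=1 keeps the 8x8 size
print(conv_output_size(8, 3, stride=1, padding=1))  # 8
# kernel_size=3, padding=1, stride=2 halves it
print(conv_output_size(8, 3, stride=2, padding=1))  # 4
```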

3. Image edge detection

import torch

import torch.nn as nn

import matplotlib.pyplot as plt

from PIL import Image

import numpy as np

class Conv2D(nn.Module):

def __init__(self, stride=1, padding=0, weight_value=np.array([[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]], dtype='float32').reshape((3,3))):

super(Conv2D, self).__init__()

self.weight = nn.Parameter(torch.tensor(weight_value, dtype=torch.float32), requires_grad=True)

# 步长

self.stride = stride

# 零填充

self.padding = padding

def forward(self, X):

# 零填充

new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])

new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X

u, v = self.weight.shape

output_w = (new_X.shape[1] - u) // self.stride + 1

output_h = (new_X.shape[2] - v) // self.stride + 1

output = torch.zeros([X.shape[0], output_w, output_h])

for i in range(0, output.shape[1]):

for j in range(0, output.shape[2]):

output[:, i, j] = torch.sum(

new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v]*self.weight, dim=[1, 2])

return output

# 使用卷积运算完成图像边缘检测任务

# 读取图片

img = Image.open('cat.jpg').resize((256,256)).convert('L')

img = np.array(img, dtype='float32')

img = torch.from_numpy(img.reshape((img.shape[0],img.shape[1])))

# 创建卷积算子,卷积核大小为3x3,并使用上面的设置好的数值作为卷积核权重的初始化参数

conv=Conv2D(stride=1,padding=0)

# 将读入的图片转化为float32类型的numpy.ndarray

inputs = np.array(img).astype('float32')

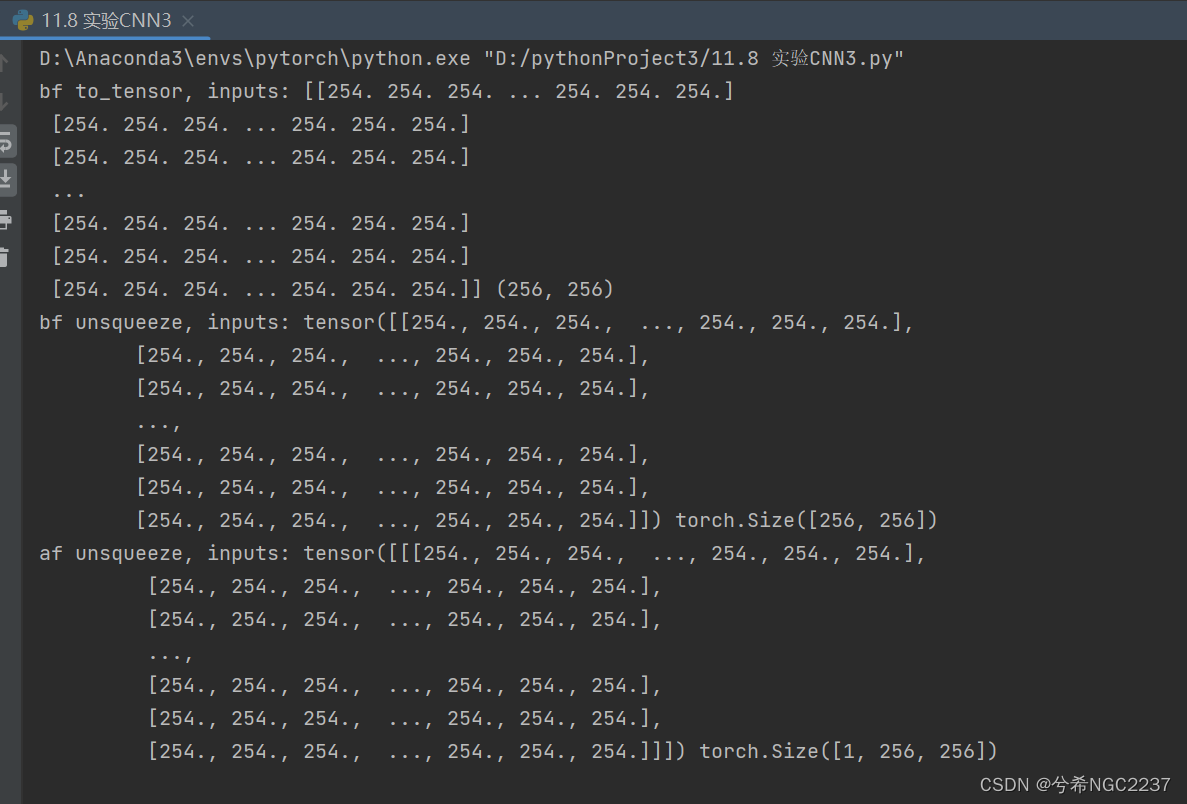

print("bf to_tensor, inputs:",inputs,inputs.shape)

# 将图片转为Tensor

inputs = torch.tensor(inputs)

print("bf unsqueeze, inputs:",inputs,inputs.shape)

inputs = torch.unsqueeze(inputs, dim=0)

print("af unsqueeze, inputs:",inputs,inputs.shape)

outputs = conv(inputs)

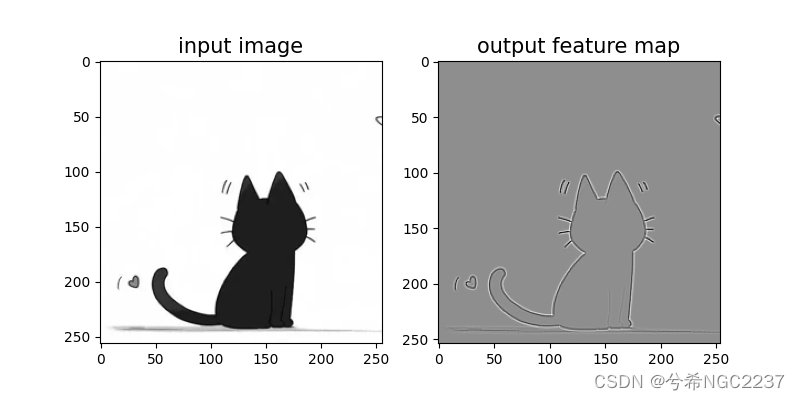

# 可视化结果

plt.figure(figsize=(8, 4))

f = plt.subplot(121)

f.set_title('input image', fontsize=15)

plt.imshow(img,cmap='gray')

f = plt.subplot(122)

f.set_title('output feature map', fontsize=15)

plt.imshow(outputs.squeeze().detach().numpy(), cmap='gray')

plt.savefig('conv-vis.pdf')

plt.show()输出结果:
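The kernel used here has entries that sum to zero, so it returns 0 on regions of constant brightness and a nonzero response only where the brightness changes. A minimal sketch on a synthetic image illustrates this; the 6x6 half-black, half-white image is an assumption made for illustration and is not the `cat.jpg` used in the post.

```python
import torch
import torch.nn.functional as F

kernel = torch.tensor([[-1., -1., -1.],
                       [-1., 8., -1.],
                       [-1., -1., -1.]])

# Hypothetical test image: left half black (0), right half white (1).
img = torch.zeros(6, 6)
img[:, 3:] = 1.0

# F.conv2d expects [B, C, H, W] input and [out_C, in_C, U, V] weight.
out = F.conv2d(img.view(1, 1, 6, 6), kernel.view(1, 1, 3, 3)).view(4, 4)
print(out)
# Every row is [0., -3., 3., 0.]: zero in the flat regions,
# nonzero only in the two columns next to the vertical edge.
```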

4. A custom convolution layer operator, verified against the torch API

import torch

import torch.nn as nn

class Conv2D(nn.Module):

def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0,

weight_attr=None, bias_attr=None):

super(Conv2D, self).__init__()

if weight_attr is None:

weight_attr = nn.init.constant_(torch.empty(out_channels, in_channels, kernel_size, kernel_size), 1.0)

if bias_attr is None:

bias_attr = nn.init.constant_(torch.empty(out_channels), 0.0)

# 创建卷积核

self.weight = nn.Parameter(weight_attr)

# 创建偏置

self.bias = nn.Parameter(bias_attr)

self.stride = stride

self.padding = padding

# 输入通道数

self.in_channels = in_channels

# 输出通道数

self.out_channels = out_channels

# 基础卷积运算

def single_forward(self, X, weight):

# 零填充

new_X = torch.zeros([X.shape[0], X.shape[1]+2*self.padding, X.shape[2]+2*self.padding])

new_X[:, self.padding:X.shape[1]+self.padding, self.padding:X.shape[2]+self.padding] = X

u, v = weight.shape

output_w = (new_X.shape[1] - u) // self.stride + 1

output_h = (new_X.shape[2] - v) // self.stride + 1

output = torch.zeros([X.shape[0], output_w, output_h])

for i in range(0, output.shape[1]):

for j in range(0, output.shape[2]):

output[:, i, j] = torch.sum(

new_X[:, self.stride*i:self.stride*i+u, self.stride*j:self.stride*j+v]*weight,

dim=[1,2])

return output

def forward(self, inputs):

"""

输入:

- inputs:输入矩阵,shape=[B, D, M, N]

- weights:P组二维卷积核,shape=[P, D, U, V]

- bias:P个偏置,shape=[P, 1]

"""

feature_maps = []

# 进行多次多输入通道卷积运算

p=0

for w, b in zip(self.weight, self.bias): # P个(w,b),每次计算一个特征图Zp

multi_outs = []

# 循环计算每个输入特征图对应的卷积结果

for i in range(self.in_channels):

single = self.single_forward(inputs[:,i,:,:], w[i])

multi_outs.append(single)

# print("Conv2D in_channels:",self.in_channels,"i:",i,"single:",single.shape)

# 将所有卷积结果相加

feature_map = torch.sum(torch.stack(multi_outs), dim=0) + b #Zp

feature_maps.append(feature_map)

# print("Conv2D out_channels:",self.out_channels, "p:",p,"feature_map:",feature_map.shape)

p+=1

# 将所有Zp进行堆叠

out = torch.stack(feature_maps, 1)

return out

inputs = torch.tensor([[[[0.0, 1.0, 2.0], [3.0, 4.0, 5.0], [6.0, 7.0, 8.0]],

[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]]])

conv2d = Conv2D(in_channels=2, out_channels=3, kernel_size=2)

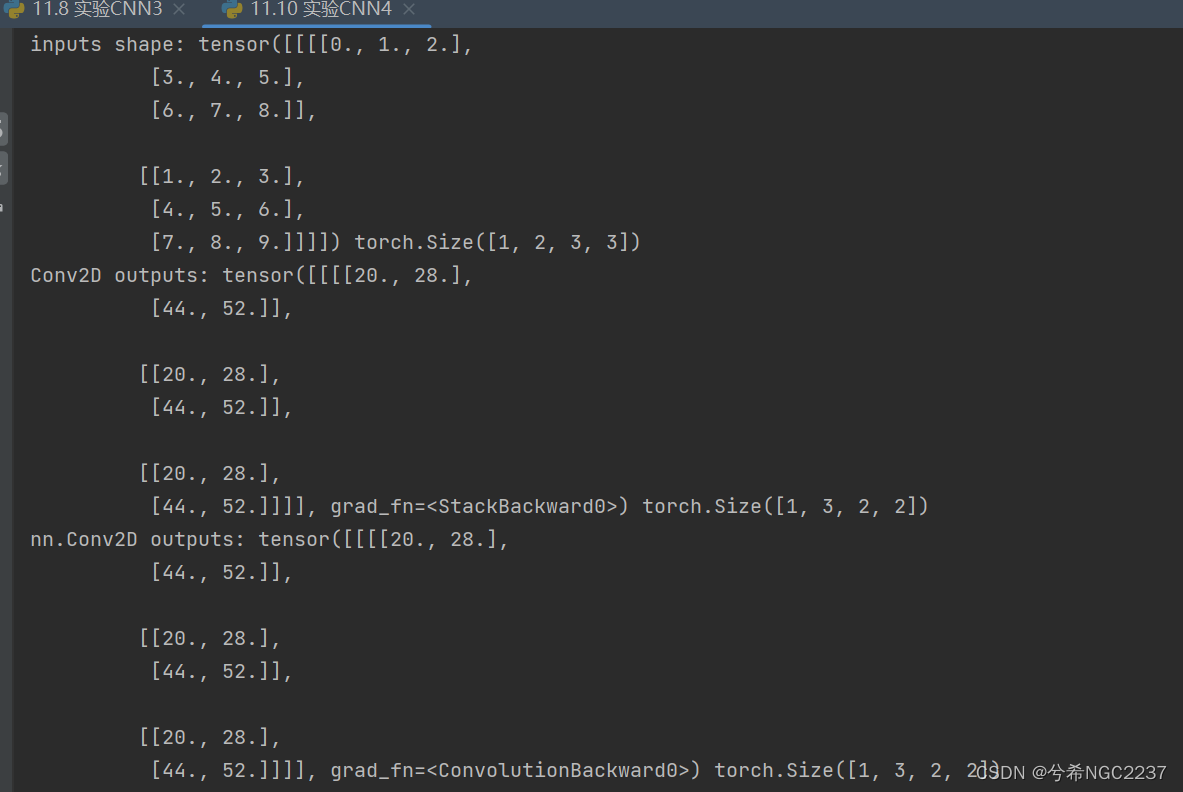

print("inputs shape:",inputs,inputs.shape)

outputs = conv2d(inputs)

# 比较与torch API运算结果

import torch.nn.init as init

conv2d_torch = nn.Conv2d(in_channels=2, out_channels=3, kernel_size=2)

# Initialize the weights and biases

init.constant_(conv2d_torch.weight, 1.0)

init.constant_(conv2d_torch.bias, 0.0)

outputs_torch = conv2d_torch(inputs)

# 自定义算子运算结果

print('Conv2D outputs:', outputs,outputs.shape)

# paddle API运算结果

print('nn.Conv2D outputs:', outputs_torch,outputs_torch.shape)

print('-------------------------------------------------------------')输出结果:

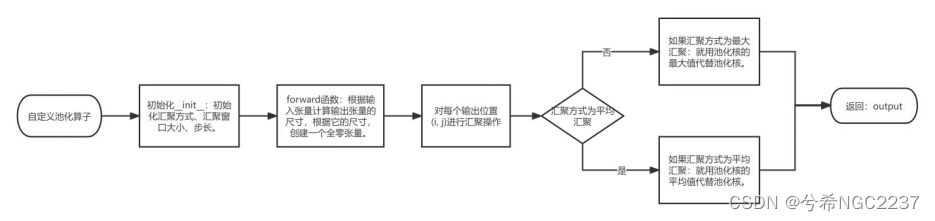

5. A custom pooling layer operator, verified against the torch API

class Pool2D(nn.Module):

def __init__(self, size=(2, 2), mode='max', stride=1):

super(Pool2D, self).__init__()

# 汇聚方式

self.mode = mode

self.h, self.w = size

self.stride = stride

def forward(self, x):

output_w = (x.shape[2] - self.w) // self.stride + 1

output_h = (x.shape[3] - self.h) // self.stride + 1

output = torch.zeros([x.shape[0], x.shape[1], output_w, output_h])

# 汇聚

for i in range(output.shape[2]):

for j in range(output.shape[3]):

# 最大汇聚

if self.mode == 'max':

output[:, :, i, j] = torch.max(

x[:, :, self.stride * i:self.stride * i + self.w,

self.stride * j:self.stride * j + self.h].reshape(x.size(0), x.size(1), -1),

dim=2

).values.reshape(x.size(0), x.size(1), 1, 1)

# 平均汇聚

elif self.mode == 'avg':

output[:, :, i, j] = torch.mean(

x[:, :, self.stride * i:self.stride * i + self.w, self.stride * j:self.stride * j + self.h],

dim=[2, 3])

return output

inputs = torch.tensor([[[[1., 2., 3., 4.], [5., 6., 7., 8.], [9., 10., 11., 12.], [13., 14., 15., 16.]]]])

pool2d = Pool2D(stride=2)

outputs = pool2d(inputs)

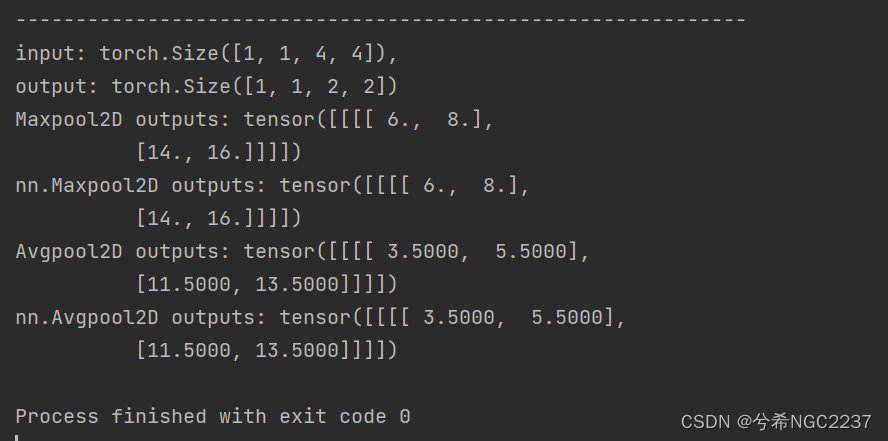

print("input: {}, \noutput: {}".format(inputs.shape, outputs.shape))

# 比较Maxpool2D与torch API运算结果

maxpool2d_torch = nn.MaxPool2d(kernel_size=(2, 2), stride=2)

outputs_paddle = maxpool2d_torch(inputs)

# 自定义算子运算结果

print('Maxpool2D outputs:', outputs)

# paddle API运算结果

print('nn.Maxpool2D outputs:', outputs_paddle)

# 比较Avgpool2D与paddle API运算结果

avgpool2d_paddle = nn.AvgPool2d(kernel_size=(2, 2), stride=2)

outputs_paddle = avgpool2d_paddle(inputs)

pool2d = Pool2D(mode='avg', stride=2)

outputs = pool2d(inputs)

# 自定义算子运算结果

print('Avgpool2D outputs:', outputs)

# paddle API运算结果

print('nn.Avgpool2D outputs:', outputs_paddle)输出结果:

6. Implement the convolution below with both the custom operator and torch.nn.Conv2d()

import torch
import torch.nn as nn

class Conv2D(nn.Module):
    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(Conv2D, self).__init__()
        # create the kernels: fixed values, shape [P=2, D=3, U=3, V=3]
        # (kernel_size is accepted but the shape comes from the values below)
        self.weight = torch.nn.Parameter(torch.tensor(
            [[[[-1, 1, 0], [0, 1, 0], [0, 1, 1]],
              [[-1, -1, 0], [0, 0, 0], [0, -1, 0]],
              [[0, 0, -1], [0, 1, 0], [1, -1, -1]]],
             [[[1, 1, -1], [-1, -1, 1], [0, -1, 1]],
              [[0, 1, 0], [-1, 0, -1], [-1, 1, 0]],
              [[-1, 0, 0], [-1, 0, 1], [-1, 0, 0]]]], dtype=torch.float32))
        # create the biases, shape [P, 1]
        self.bias = torch.nn.Parameter(torch.tensor([[1.],
                                                     [0.]]))
        self.stride = stride
        self.padding = padding
        # number of input channels
        self.in_channels = in_channels
        # number of output channels
        self.out_channels = out_channels

    # basic single-channel convolution
    def single_forward(self, X, weight):
        # zero padding
        new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding])
        new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X
        u, v = weight.shape
        # dimension 1 is the height, dimension 2 is the width
        output_h = (new_X.shape[1] - u) // self.stride + 1
        output_w = (new_X.shape[2] - v) // self.stride + 1
        output = torch.zeros([X.shape[0], output_h, output_w])
        for i in range(0, output.shape[1]):
            for j in range(0, output.shape[2]):
                output[:, i, j] = torch.sum(
                    new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * weight,
                    dim=[1, 2])
        return output

    def forward(self, inputs):
        """
        Input:
            - inputs: input tensor, shape=[B, D, M, N]
        Parameters used:
            - self.weight: P groups of 2D kernels, shape=[P, D, U, V]
            - self.bias: P biases, shape=[P, 1]
        """
        feature_maps = []
        # run one multi-input-channel convolution per output channel
        p = 0
        for w, b in zip(self.weight, self.bias):  # P pairs (w, b); each pass computes one feature map Zp
            multi_outs = []
            # convolve each input channel with its own 2D kernel
            for i in range(self.in_channels):
                single = self.single_forward(inputs[:, i, :, :], w[i])
                multi_outs.append(single)
                # print("Conv2D in_channels:",self.in_channels,"i:",i,"single:",single.shape)
            # sum the per-channel results and add the bias
            feature_map = torch.sum(torch.stack(multi_outs), dim=0) + b  # Zp
            feature_maps.append(feature_map)
            # print("Conv2D out_channels:",self.out_channels, "p:",p,"feature_map:",feature_map.shape)
            p += 1
        # stack all Zp along the channel dimension
        out = torch.stack(feature_maps, 1)
        return out

inputs = torch.tensor([[[[0, 1, 1, 0, 2],

[2, 2, 2, 2, 1],

[1, 0, 0, 2, 0],

[0, 1, 1, 0, 0],

[1, 2, 0, 0, 2]],

[[1, 0, 2, 2, 0],

[0, 0, 0, 2, 0],

[1, 2, 1, 2, 1],

[1, 0, 0, 0, 0],

[1, 2, 1, 1, 1]],

[[2, 1, 2, 0, 0],

[1, 0, 0, 1, 0],

[0, 2, 1, 0, 1],

[0, 1, 2, 2, 2],

[2, 1, 0, 0, 1]]]], dtype=torch.float32)

net1 = Conv2D(3, 2, 3, stride=2, padding=1)

outputs1 = net1(inputs)

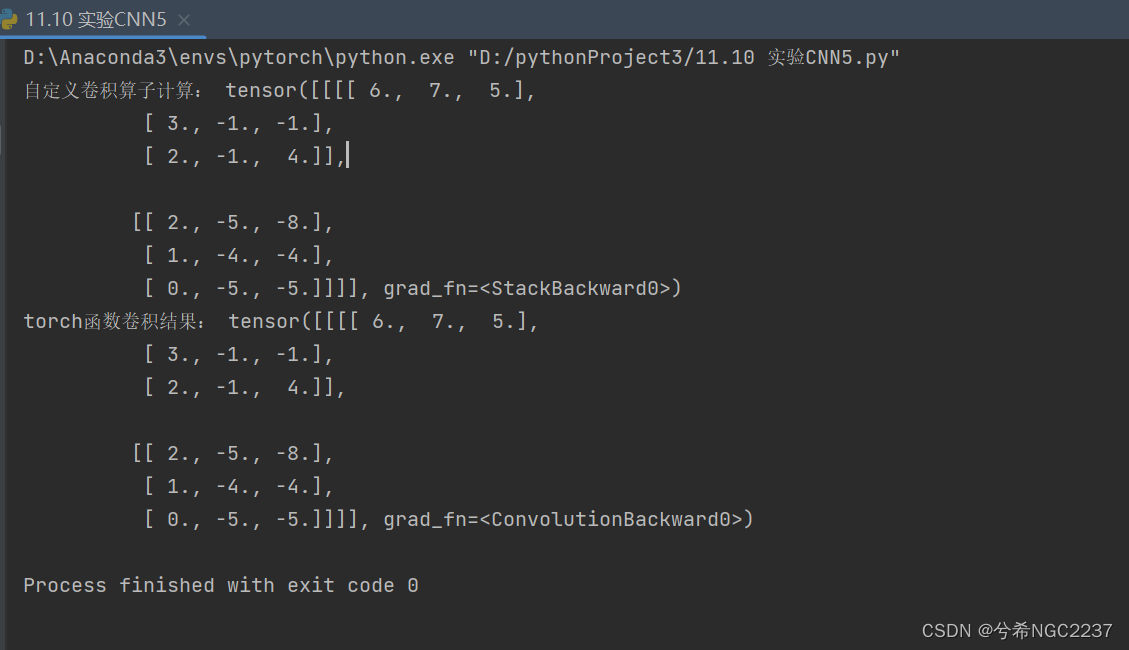

print("自定义卷积算子计算:",outputs1)

# 自定义权重

weight = nn.Parameter(torch.tensor([[[[-1, 1, 0],

[0, 1, 0],

[0, 1, 1]],

[[-1, -1, 0],

[0, 0, 0],

[0, -1, 0]],

[[0, 0, -1],

[0, 1, 0],

[1, -1, -1]]],

[[[1, 1, -1],

[-1, -1, 1],

[0, -1, 1]],

[[0, 1, 0],

[-1, 0, -1],

[-1, 1, 0]],

[[-1, 0, 0],

[-1, 0, 1],

[-1, 0, 0]]]], dtype=torch.float32))

# 创建卷积层

conv2d = nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3, stride=2, padding=1, bias=True)

# 将自定义的权重赋值给卷积层的参数

conv2d.weight = weight

# 分别为两个卷积核设置不同的偏置值

conv2d.bias = nn.Parameter(torch.tensor([1., 0.]))

# 对输入张量进行卷积操作

output = conv2d(inputs)

# 输出张量的形状和值

print("torch函数卷积结果:", output)

输出结果:

总结:

在这次实验中,主要进行了:

自定义二维卷积算子和自定义带步长和零填充的二维卷积算子,然后利用定义的卷计算子实现图像边缘检测:

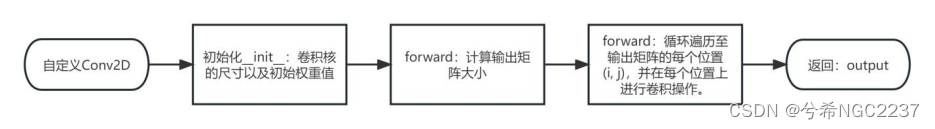

主要就是深入了解函数内部的详细运行方式:这两个的流程都如下,只是输出矩阵的计算方式不同:

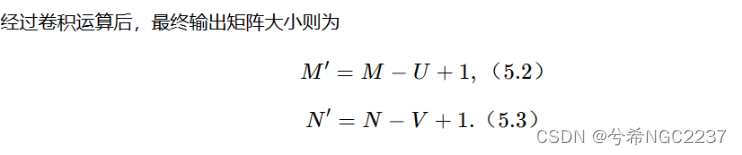

- 不带零填充的输出矩阵的计算:

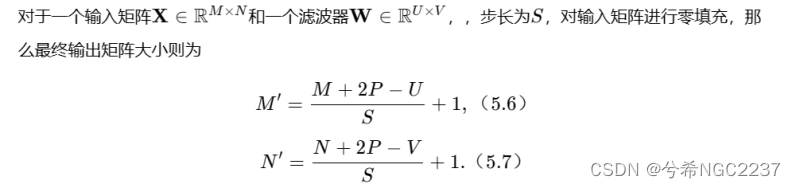

- 带零填充的输出矩阵的计算:

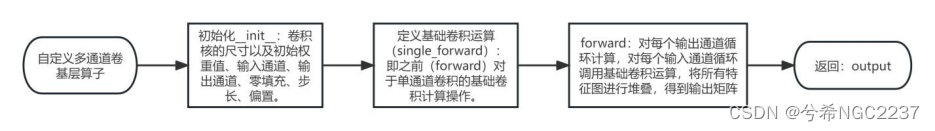
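The contents of the figures are not available as text. Assuming they correspond to the size computations in the code above, the output size for an $M \times N$ input and a $U \times V$ kernel can be written as follows, where $p$ (padding) and $s$ (stride) are symbols introduced here for illustration:

```latex
% Without zero padding, stride 1 (section 1):
M' = M - U + 1, \qquad N' = N - V + 1

% With zero padding p and stride s (sections 2 to 6):
M' = \left\lfloor \frac{M + 2p - U}{s} \right\rfloor + 1, \qquad
N' = \left\lfloor \frac{N + 2p - V}{s} \right\rfloor + 1
```

With $p = 0$ and $s = 1$ the second pair reduces to the first. For the $5 \times 5$ input in section 6 with $U = V = 3$, $p = 1$, $s = 2$, this gives $\lfloor 4/2 \rfloor + 1 = 3$, so each feature map is $3 \times 3$.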

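A custom convolution layer operator and a pooling layer operator

The convolution layer builds on the earlier computation: the 2D convolution is applied to each input channel, the per-channel results are summed and a bias is added to give one feature map, and the feature maps are then stacked along the channel dimension.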
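The per-channel loop and the final stacking can be expressed as a single matrix product. The sketch below is an alternative formulation, not the code used in the post: it uses `torch.nn.functional.unfold` to lay out every window as a column, and the function name `conv2d_unfold` is hypothetical.

```python
import torch
import torch.nn.functional as F

def conv2d_unfold(x, weight, bias=None, stride=1, padding=0):
    """Multi-channel convolution: x is [B, D, M, N], weight is [P, D, U, V]."""
    B = x.shape[0]
    P, D, U, V = weight.shape
    # Each column holds one window across all D channels: [B, D*U*V, L]
    cols = F.unfold(x, kernel_size=(U, V), stride=stride, padding=padding)
    # One row per output channel; the product sums over channels and window positions.
    out = weight.reshape(P, -1) @ cols            # [B, P, L]
    if bias is not None:
        out = out + bias.reshape(1, P, 1)
    h_out = (x.shape[2] + 2 * padding - U) // stride + 1
    w_out = (x.shape[3] + 2 * padding - V) // stride + 1
    return out.reshape(B, P, h_out, w_out)

torch.manual_seed(0)
x = torch.randn(2, 3, 5, 5)
w = torch.randn(2, 3, 3, 3)
b = torch.randn(2)
ours = conv2d_unfold(x, w, b, stride=2, padding=1)
ref = F.conv2d(x, w, b, stride=2, padding=1)
print(ours.shape, torch.allclose(ours, ref, atol=1e-5))
```

Summing over the input channels happens inside the matrix product, which is the same "sum per-channel results, then stack" step carried out for all output positions at once.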

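Over the course of the experiment, the understanding of convolution deepened step by step: from plain convolution, to convolution with stride and zero padding, to multi-channel convolution, and finally to applying convolution in practice.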
