TD3: Learning To Run With AI

This article looks at one of the most powerful state-of-the-art algorithms in Reinforcement Learning (RL): Twin Delayed Deep Deterministic Policy Gradients (TD3) (Fujimoto et al., 2018). By the end of this article you should have a solid understanding of what makes TD3 perform so well, be capable of implementing the algorithm yourself, and be able to use TD3 to train an agent to run successfully in the HalfCheetah environment.

However, before tackling TD3 you should already have a good understanding of RL and of common algorithms such as Deep Q Networks and DDPG, which TD3 builds upon. If you need to brush up on your knowledge, check out these excellent resources: DeepMind Lecture Series, Let’s make a DQN, and Spinning Up: DDPG. This article will cover the following:

- What is TD3

- Explanation of each core mechanic

- Implementation & code walkthrough

- Results & Benchmarking

The full code can be found on my GitHub. If you want to quickly follow along with the code used here, click on the icon below to be taken to a Google Colab notebook with everything ready to go.

What is TD3?

TD3 is the successor to Deep Deterministic Policy Gradient (DDPG) (Lillicrap et al., 2016). Up until recently, DDPG was one of the most used algorithms for continuous control problems such as robotics and autonomous driving. Although DDPG is capable of providing excellent results, it has its drawbacks. Like many RL algorithms, training DDPG can be unstable and heavily reliant on finding the correct hyperparameters for the current task (OpenAI Spinning Up, 2018). A common cause is the critic (value) network continuously overestimating Q values. These estimation errors build up over time and can lead to the agent falling into a local optimum or experiencing catastrophic forgetting. TD3 addresses this issue by focusing on reducing the overestimation bias seen in previous algorithms. This is done with the addition of 3 key features:

- Using a pair of critic networks (the twin part of the title)

- Delayed updates of the actor (the delayed part)

- Action noise regularisation (this part didn’t make it to the title :/ )
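
To make the overestimation problem concrete, here is a small illustration of how maximising over noisy value estimates produces an upward bias. It is a simplified, discrete-action sketch rather than anything taken from DDPG or TD3: the five actions, their true values of 0, the Gaussian noise and the function name `average_max_estimate` are all assumptions chosen for the example.

```python
import random


def average_max_estimate(true_values, noise_std=1.0, trials=10_000, seed=0):
    """Average, over many trials, of the max over noisy value estimates."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # Each estimate is the true value plus zero-mean Gaussian noise.
        noisy = [q + rng.gauss(0.0, noise_std) for q in true_values]
        total += max(noisy)
    return total / trials


# Hypothetical setup: five actions that are all truly worth 0.
true_values = [0.0] * 5
true_max = max(true_values)
average_max = average_max_estimate(true_values)

print(f"true max value:       {true_max:.2f}")
print(f"average max estimate: {average_max:.2f}")  # noticeably above the true max
```

Even though every individual estimate is unbiased, picking the largest one is biased upwards. When such a value is used as a learning target, the error can feed back into later estimates, which is the build-up described above.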

Twin Critic Networks

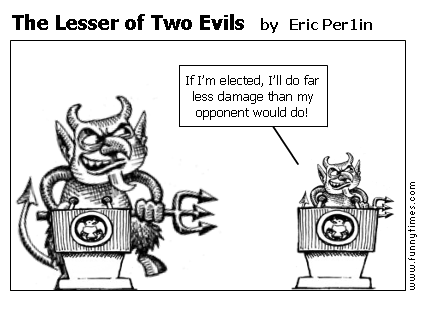

The first feature added to TD3 is the use of two critic networks. This was inspired by the technique seen in Deep Reinforcement Learning with Double Q-learning (Van Hasselt et al., 2016), which involves estimating the current Q value using a separate target value function, thus reducing the bias. However, the technique doesn’t work perfectly for actor-critic methods. This is because the policy and target networks are updated so slowly that they look very similar, which brings bias back into the picture. Instead, TD3 builds on the older formulation from Double Q-learning (Van Hasselt, 2010), which uses two separately trained value estimates. TD3 uses clipped double Q-learning, where the target is computed from the smaller of the two critic networks' estimates (the lesser of two evils, if you will).

Fig 1. The lesser of the two value estimates will cause less damage to our policy updates. image found here

This method favours underestimation of Q values. This underestimation bias is much less of a problem than overestimation: the policy is updated toward actions with high estimated value, so underestimated values tend not to be propagated through the algorithm, whereas overestimated values are. This provides a more stable approximation, thus improving the stability of the entire algorithm.
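
As a rough sketch of the clipped double Q-learning target described above, the function below works on plain Python floats so that it runs without any deep learning framework. The names `clipped_double_q_target`, `q1_next` and `q2_next`, and the default discount `gamma=0.99`, are assumptions made for this illustration and are not taken from the article's code.

```python
def clipped_double_q_target(reward, done, q1_next, q2_next, gamma=0.99):
    """TD target built from the smaller of the two target-critic estimates.

    reward:  reward received for the transition
    done:    1.0 if the episode ended on this transition, else 0.0
    q1_next: first target critic's estimate for the next state-action pair
    q2_next: second target critic's estimate for the same pair
    """
    # Clipping step: keep the more pessimistic of the two estimates.
    min_q_next = min(q1_next, q2_next)
    # Standard one-step bootstrap; no bootstrapping past a terminal state.
    return reward + gamma * (1.0 - done) * min_q_next


# Hypothetical transition: the critics disagree, so the lower value (8.0) is used.
target = clipped_double_q_target(reward=1.0, done=0.0, q1_next=10.0, q2_next=8.0)

# On a terminal transition the target is just the reward.
terminal_target = clipped_double_q_target(reward=1.0, done=1.0, q1_next=10.0, q2_next=8.0)
```

In the TD3 paper both critics are trained toward this same target, so an inflated estimate from one critic is capped by the other. A real implementation would apply the same arithmetic to batched tensors, typically with an element-wise minimum.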
