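Background

In the Binder communication flow, the binder driver lives in the kernel and acts as a relay station that routes each call between processes.

Both the framework (FW) and the native layer reach the binder driver through the ioctl system call, which sends a request to the kernel. The kernel responds by matching the cmd argument against its case branches.

Binder communication can be roughly divided into three layers: the business layer, the IPC layer, and the driver layer. The IPC layer sits in the middle and carries data between the business layer and the driver layer. Its logic lives mainly in IPCThreadState.cpp.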

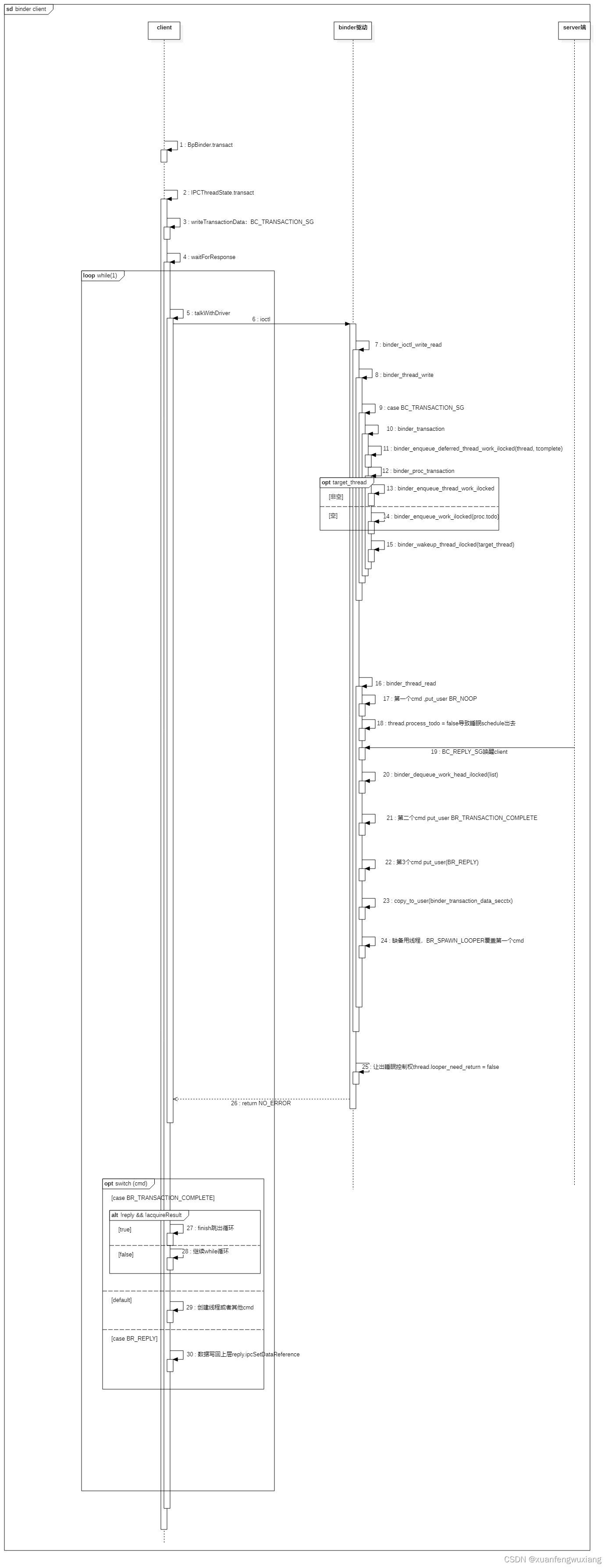

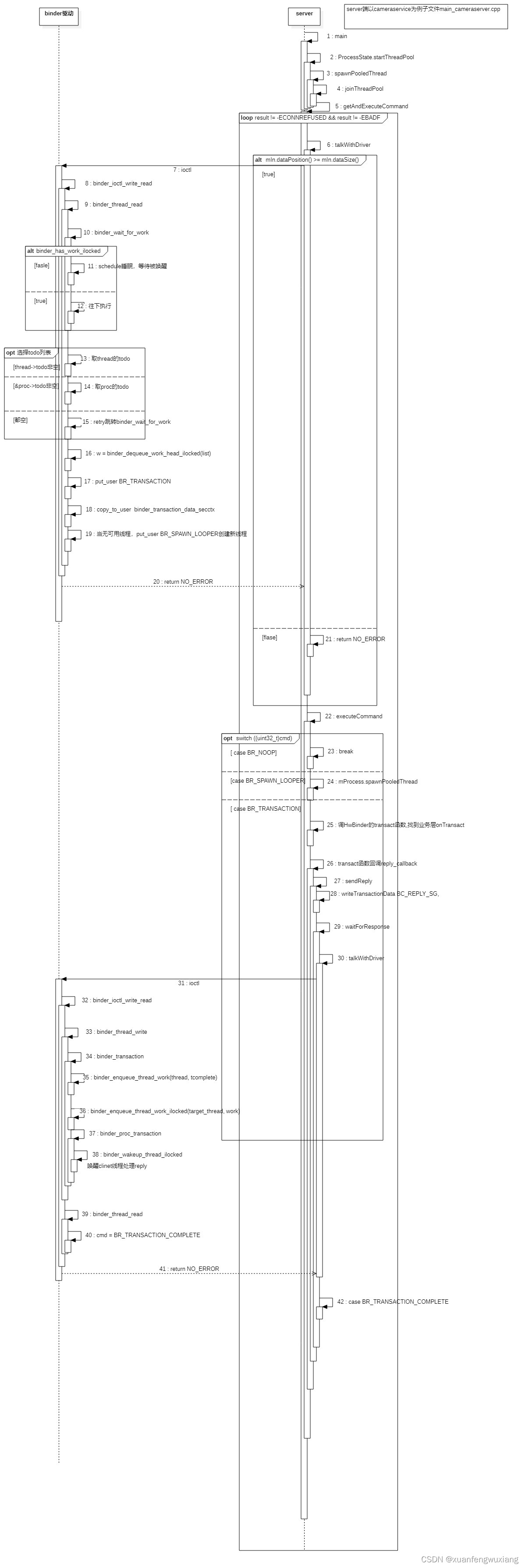

First, the overall flow in one big diagram.

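Binder driver flow: overview

When the IPC layer and the driver layer talk to each other, the cmd codes they use fall into two classes:

1. Codes starting with BC_ are request codes, sent from the IPC layer to the binder driver.
2. Codes starting with BR_ are response codes, sent from the binder driver to the IPC layer.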
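The direction rule can be sketched as a small user-space table. This is an illustration, not driver code: `toy_step`, `toy_sync_call`, and `toy_step_is_consistent` are hypothetical names, and the sequence is a simplified view of one synchronous call (BR_TRANSACTION_COMPLETE does not appear in the excerpts of this article; the driver sends it to acknowledge a write). The relative order of steps that belong to different processes is illustrative only.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* One hop across the IPC-layer/driver boundary. */
struct toy_step {
    bool from_driver;  /* true: driver -> IPC layer, false: IPC layer -> driver */
    const char *cmd;   /* symbolic name of the code */
};

/* A synchronous call as seen at the driver boundary (simplified). */
static const struct toy_step toy_sync_call[] = {
    { false, "BC_TRANSACTION" },          /* client IPC layer -> driver */
    { true,  "BR_TRANSACTION_COMPLETE" }, /* driver -> client: request accepted */
    { true,  "BR_TRANSACTION" },          /* driver -> server IPC layer */
    { false, "BC_REPLY" },                /* server IPC layer -> driver */
    { true,  "BR_TRANSACTION_COMPLETE" }, /* driver -> server: reply accepted */
    { true,  "BR_REPLY" },                /* driver -> client IPC layer */
};

static const size_t toy_sync_call_len =
    sizeof(toy_sync_call) / sizeof(toy_sync_call[0]);

/* A step is consistent when the name prefix matches the direction. */
static bool toy_step_is_consistent(const struct toy_step *s)
{
    const char *prefix = s->from_driver ? "BR_" : "BC_";
    return strncmp(s->cmd, prefix, 3) == 0;
}
```

Every entry in the table passes the check, while a BC_ code marked as coming from the driver would fail it.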

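The flow breaks down roughly into four requests: 1. the client writes a request; 2. the server reads the request; 3. the server changes a thread's looper state; 4. a server thread exits.

The code flow charts follow:

1. Client writes a request:

2. Server reads the request:

3. Server changes a thread's looper state:

4. Server thread exits:

Binder driver flow: details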

1. binder_ioctl

Description: the client starts a data write through the ioctl system call. The cmd here is BINDER_WRITE_READ.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

....................................

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

..............................

}

if (thread) // On the first call this flag affects whether the client sleeps in read; once it is cleared to false here, it no longer does.

thread->looper_need_return = false;

.......................................

}
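The dispatch pattern in binder_ioctl can be modelled in user space. The sketch below is not the driver's code: `toy_cmd`, `toy_thread`, and `toy_ioctl` are hypothetical, the command values are made up, and returning -EINVAL for an unknown cmd is an assumption of this sketch. It only shows a switch on cmd followed by clearing looper_need_return on the way out.

```c
#include <errno.h>
#include <stdbool.h>

/* Hypothetical command numbers; the real ones come from the binder UAPI header. */
enum toy_cmd { TOY_WRITE_READ = 1, TOY_SET_MAX_THREADS = 2 };

struct toy_thread {
    bool looper_need_return; /* mirrors thread->looper_need_return */
    int write_read_calls;    /* counts how often the write/read case ran */
};

/* Match cmd against the known cases, then clear the flag on every path. */
static long toy_ioctl(struct toy_thread *thread, unsigned int cmd)
{
    long ret = 0;

    switch (cmd) {
    case TOY_WRITE_READ:
        thread->write_read_calls++; /* stands in for binder_ioctl_write_read() */
        break;
    case TOY_SET_MAX_THREADS:
        break;                      /* nothing to model here */
    default:
        ret = -EINVAL;              /* unknown request */
        break;
    }

    thread->looper_need_return = false;
    return ret;
}
```

After the first call the flag is false, so it can only influence the very first read of a thread.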

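2. binder_ioctl_write_read

Description: 1. Copy the data from the upper layer into the kernel. 2. Ask the server to do work, via binder_thread_write(). 3. Check whether this thread has work of its own, via binder_thread_read().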

static int binder_ioctl_write_read(struct file *filp,unsigned int cmd, unsigned long arg,struct binder_thread *thread)

{

...............................

void __user *ubuf = (void __user *)arg;

// Layout agreed on with the native layer

struct binder_write_read bwr;

...............................

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

................................

}

if (bwr.write_size > 0) { // There is a request for the server to handle

ret = binder_thread_write(proc, thread, bwr.write_buffer, bwr.write_size, &bwr.write_consumed);

..................................

}

if (bwr.read_size > 0) { // Read the read buffer to see whether this thread has work to do

ret = binder_thread_read(proc, thread, bwr.read_buffer,bwr.read_size,&bwr.read_consumed,filp->f_flags & O_NONBLOCK);

...................................

if (!binder_worklist_empty_ilocked(&proc->todo)) // The process has pending todo work: wake one of its threads to handle it

binder_wakeup_proc_ilocked(proc);

.....................................

}

....................................

}
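The write-then-read order can be shown with a user-space model. `toy_bwr` keeps only the size and consumed fields of binder_write_read, and `toy_write_read` is a hypothetical stand-in that records which phases ran; it moves no real data.

```c
#include <stddef.h>

/* Reduced stand-in for struct binder_write_read (buffers omitted). */
struct toy_bwr {
    size_t write_size, write_consumed;
    size_t read_size, read_consumed;
};

/*
 * Run the write phase first, then the read phase, each only when its
 * size is non-zero. The phases that ran are recorded in `trace` as
 * 'W' and 'R'; `trace` must have room for three chars.
 */
static void toy_write_read(struct toy_bwr *bwr, char trace[3])
{
    size_t n = 0;

    if (bwr->write_size > 0) {
        bwr->write_consumed = bwr->write_size; /* pretend everything was parsed */
        trace[n++] = 'W';
    }
    if (bwr->read_size > 0) {
        /* nothing is delivered in this sketch, so read_consumed stays 0 */
        trace[n++] = 'R';
    }
    trace[n] = '\0';
}
```

A call with both sizes set runs both phases in that order; a call with write_size == 0 runs only the read phase, which is the shape of the server-side read described later.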

3. binder_thread_write

Description: parse the cmd (here BC_TRANSACTION) and the binder_transaction_data out of binder_buffer, then choose the next step based on them.

static int binder_thread_write(struct binder_proc *proc, struct binder_thread *thread, binder_uintptr_t binder_buffer, size_t size, binder_size_t *consumed)

{

uint32_t cmd;

......................................

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error.cmd == BR_OK) {

................................

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

..................................

switch (cmd) {

.............................

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr))) //Copy the arguments from the native layer

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY, 0);

break;

.................

}

..............

}

return 0;

}
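The parsing loop reads a 32-bit cmd, then a payload whose size depends on that cmd, and repeats until the buffer ends. A minimal user-space sketch of that pattern follows. The command values, `toy_txn_data`, and `toy_thread_write` are hypothetical, and memcpy stands in for get_user/copy_from_user.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command values, for this sketch only. */
enum { TOY_BC_TRANSACTION = 1, TOY_BC_REPLY = 2 };

/* Reduced stand-in for struct binder_transaction_data. */
struct toy_txn_data {
    uint32_t code;
    uint32_t flags;
};

/*
 * Walk a buffer laid out as [cmd][payload][cmd][payload]...
 * Stores up to `max` transaction codes in `codes` and returns how many
 * commands were parsed, or -1 if the buffer is malformed.
 */
static int toy_thread_write(const uint8_t *buffer, size_t size,
                            size_t *consumed, uint32_t *codes, size_t max)
{
    const uint8_t *ptr = buffer + *consumed;
    const uint8_t *end = buffer + size;
    int n = 0;

    while (ptr < end) {
        uint32_t cmd;

        if ((size_t)(end - ptr) < sizeof(cmd))
            return -1;
        memcpy(&cmd, ptr, sizeof(cmd));      /* get_user() in the driver */
        ptr += sizeof(cmd);

        switch (cmd) {
        case TOY_BC_TRANSACTION:
        case TOY_BC_REPLY: {
            struct toy_txn_data tr;

            if ((size_t)(end - ptr) < sizeof(tr))
                return -1;
            memcpy(&tr, ptr, sizeof(tr));    /* copy_from_user() in the driver */
            ptr += sizeof(tr);
            if ((size_t)n < max)
                codes[n] = tr.code;
            n++;
            break;
        }
        default:
            return -1;                       /* unknown command in this sketch */
        }
        *consumed = (size_t)(ptr - buffer);  /* report progress to the caller */
    }
    return n;
}
```

Updating `*consumed` after each command lets the caller see how far parsing got even if a later command fails.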

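4. binder_transaction

Description:

1. Work out target_node, target_proc, and target_thread.

2. Build the binder_transaction t and copy the user data into t->buffer. Push t onto the client thread's transaction_stack and queue a tcomplete work item on the client's thread->todo.

3. Call binder_proc_transaction with the values above.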

static void binder_transaction(struct binder_proc *proc, struct binder_thread *thread, struct binder_transaction_data *tr, int reply, binder_size_t extra_buffers_size)

{

struct binder_transaction *t;

struct binder_work *w;

................................

struct binder_proc *target_proc = NULL;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

............................

const void __user *user_buffer = (const void __user *)(uintptr_t)tr->data.ptr.buffer;

if (reply) {

............................

in_reply_to = thread->transaction_stack;

............................

thread->transaction_stack = in_reply_to->to_parent;//Very important: when the server replies, the entry recorded on the server's stack is popped

} else {

if (tr->target.handle) {

.................

if (ref) {//Resolve target_proc from the referenced node

target_node = binder_get_node_refs_for_txn(ref->node, &target_proc, &return_error);

}

..................

} else {

..........................

if (target_node)

target_node = binder_get_node_refs_for_txn(target_node, &target_proc, &return_error);

.......................

}

................

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {//Search transaction_stack for a call that the server sent to this client and that has not been replied to yet; its sender becomes target_thread, which forms a nested call

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

........

while (tmp) {

struct binder_thread *from;

from = tmp->from;

if (from && from->proc == target_proc) {

target_thread = from;

break;

}

tmp = tmp->from_parent;

}

}

}

.........................

//Build the binder_transaction

t = kzalloc(sizeof(*t), GFP_KERNEL);

.............................

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

...............

t->buffer = binder_alloc_new_buf(&target_proc->alloc, tr->data_size, tr->offsets_size, extra_buffers_size, !reply && (t->flags & TF_ONE_WAY), current->tgid);

...............

t->buffer->transaction = t;

t->buffer->target_node = target_node;

................

//Copy the data from the user buffer into the server process's buffer

for (buffer_offset = off_start_offset; buffer_offset < off_end_offset; buffer_offset += sizeof(binder_size_t)) {

if (copy_size && (user_offset > object_offset || binder_alloc_copy_user_to_buffer(&target_proc->alloc, t->buffer, user_offset, user_buffer + user_offset, copy_size))) {

...........................................

}

}

........................

if (reply) {//Reply path on the server side

.............

binder_pop_transaction_ilocked(target_thread, in_reply_to);//Very important: when the server replies, the entry recorded on the client's stack is popped

.............

} else if (!(t->flags & TF_ONE_WAY)) {//Synchronous call started by the client

.............

binder_enqueue_deferred_thread_work_ilocked(thread, tcomplete);//Put a tcomplete on the client's thread->todo, but defer its delivery: the client sleeps and waits for the reply

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;//Record this transaction on the thread's transaction_stack

return_error = binder_proc_transaction(t, target_proc, target_thread);

..........

} else {//Asynchronous (one-way) call started by the client

...............

binder_enqueue_thread_work(thread, tcomplete);//Because this work is queued, the client does not sleep in read and returns to user space right away

return_error = binder_proc_transaction(t, target_proc, NULL);

................

}

......................

}
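The push and pop of transaction_stack through from_parent and to_parent can be modelled with two tiny structs. This is a sketch with hypothetical `toy_*` names. One step is an assumption not shown in the excerpts: `toy_receive` models the server thread linking the transaction onto its own stack through to_parent when it picks the work up.

```c
#include <stddef.h>

struct toy_thread;

/* Reduced stand-in for struct binder_transaction. */
struct toy_txn {
    struct toy_thread *from;     /* sending thread (sync calls only) */
    struct toy_txn *from_parent; /* next entry on the sender's stack */
    struct toy_txn *to_parent;   /* next entry on the receiver's stack */
};

struct toy_thread {
    struct toy_txn *transaction_stack;
};

/* Client side of a synchronous call: push t onto the client's stack. */
static void toy_send(struct toy_thread *client, struct toy_txn *t)
{
    t->from = client;
    t->from_parent = client->transaction_stack;
    client->transaction_stack = t;
}

/* Assumed receive step: the server thread pushes t onto its own stack. */
static void toy_receive(struct toy_thread *server, struct toy_txn *t)
{
    t->to_parent = server->transaction_stack;
    server->transaction_stack = t;
}

/* Reply: pop the entry from the server's stack, then from the client's. */
static struct toy_thread *toy_reply(struct toy_thread *server)
{
    struct toy_txn *in_reply_to = server->transaction_stack;
    struct toy_thread *target;

    if (!in_reply_to)
        return NULL;                      /* nothing to reply to */
    server->transaction_stack = in_reply_to->to_parent;

    target = in_reply_to->from;
    if (target && target->transaction_stack == in_reply_to)
        target->transaction_stack = in_reply_to->from_parent;
    return target;                        /* thread that receives the reply */
}
```

After one send, receive, and reply round trip both stacks are empty again, which is why the two pops marked "very important" in the excerpt matter.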

5. binder_proc_transaction

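Description: deliver the transaction to the target process and try to wake one of its threads to handle it. If a target thread was specified, the binder_work goes on that thread's todo queue. If no thread can be found to handle it, the binder_work goes on the target process's todo queue.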

static int binder_proc_transaction(struct binder_transaction *t, struct binder_proc *proc, struct binder_thread *thread)

{

................................

//No target thread was specified: try to pick one.

if (!thread && !pending_async && !skip)

thread = binder_select_thread_ilocked(proc);

if (thread) {//A thread is available: queue the work on that thread's todo list.

binder_transaction_priority(thread, t, node);

binder_enqueue_thread_work_ilocked(thread, &t->work);

} else if (!pending_async) {

if (enqueue_task)//No thread is available: queue the work on the process's todo list

binder_enqueue_work_ilocked(&t->work, &proc->todo);

} else {

..................................

}

if (!pending_async)//Wake a thread to do the work

binder_wakeup_thread_ilocked(proc, thread, !oneway /* sync */);

...............................

return 0;

}
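The queue choice above reduces to a small decision function. The sketch uses hypothetical names (`toy_queue`, `toy_pick_queue`) and leaves out the `skip` and `enqueue_task` conditions from the excerpt. The pending_async branch is elided in the excerpt, so the sketch only labels it as deferred without modelling where the work goes.

```c
#include <stdbool.h>

enum toy_queue {
    TOY_THREAD_TODO, /* work goes on a specific thread's todo list */
    TOY_PROC_TODO,   /* work goes on the process-wide todo list */
    TOY_DEFERRED     /* pending async work: branch elided in the excerpt */
};

/* Decide where a transaction's work item is queued. */
static enum toy_queue toy_pick_queue(bool target_thread_given,
                                     bool idle_thread_available,
                                     bool pending_async)
{
    bool have_thread = target_thread_given;

    /* No thread named by the caller: try to select an idle one. */
    if (!have_thread && !pending_async)
        have_thread = idle_thread_available;

    if (have_thread)
        return TOY_THREAD_TODO;
    if (!pending_async)
        return TOY_PROC_TODO;
    return TOY_DEFERRED;
}
```

A named target thread always wins; the process queue is used only when no thread can take the work directly.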

1. binder_ioctl

Description: the read path, also entered through the ioctl system call. The cmd here is BINDER_WRITE_READ.

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

....................................

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

..............................

}

.......................................

}

2. binder_ioctl_write_read

Description: copy_from_user copies the arguments from the upper layer. The read logic runs when bwr.read_size > 0.

static int binder_ioctl_write_read(struct file *filp,unsigned int cmd, unsigned long arg,struct binder_thread *thread)

{

...............................

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

...............................

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

................................

}

.........................................

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,bwr.read_size, &bwr.read_consumed, filp->f_flags & O_NONBLOCK);

...................................

}

....................................

}

3. binder_thread_read

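Description: wait until there is work, take it from the thread's todo list first and the process's todo list second, write the resulting BR_TRANSACTION or BR_REPLY and its data into the user-space read buffer, and decide whether to ask user space for a new thread.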

static int binder_thread_read(struct binder_proc *proc, struct binder_thread *thread, binder_uintptr_t binder_buffer, size_t size, binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

// Put the first cmd into the native layer's read buffer. It defaults to BR_NOOP, which the upper layer does not act on.

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

.....................

//The thread has no transaction in progress and nothing on its todo list, so it can take work for the process

wait_for_proc_work = binder_available_for_proc_work_ilocked(thread);

.........................

if (non_block) {

.......................

} else {//Check for work; if there is none, go into the waiting state.

ret = binder_wait_for_work(thread, wait_for_proc_work);

}

..................................

while (1) {

uint32_t cmd;

struct binder_work *w = NULL;

......................

struct list_head *list = NULL;

struct binder_transaction *t = NULL;

......................

if (!binder_worklist_empty_ilocked(&thread->todo))

list = &thread->todo;//The thread's own todo list comes first

else if (!binder_worklist_empty_ilocked(&proc->todo) && wait_for_proc_work)

list = &proc->todo;//The process's todo list comes second

.......................

w = binder_dequeue_work_head_ilocked(list);//Take the work item off the list

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

binder_inner_proc_unlock(proc);

t = container_of(w, struct binder_transaction, work);

} break;

....................

if (t->buffer->target_node) {//There is work for the server to handle

.................

cmd = BR_TRANSACTION;

} else {//Return data to the client

............

cmd = BR_REPLY;

}

..........................

if (put_user(cmd, (uint32_t __user *)ptr)) {//Pass the cmd up to the reader (the server in this flow)

...........................

}

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, trsize)) {//Pass the data up to the reader (the server in this flow)

...........................

}

}

//Decide whether to ask for a new thread

if (proc->requested_threads == 0 && list_empty(&thread->proc->waiting_threads) && proc->requested_threads_started < proc->max_threads &&

(thread->looper & (BINDER_LOOPER_STATE_REGISTERED | BINDER_LOOPER_STATE_ENTERED)) /* the user-space code fails to */

/*spawn a new thread if we leave this out */) {

proc->requested_threads++;

.....................

if (put_user(BR_SPAWN_LOOPER, (uint32_t __user *)buffer))

return -EFAULT;

binder_stat_br(proc, thread, BR_SPAWN_LOOPER);

} else

binder_inner_proc_unlock(proc);

return 0;

}
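Two decisions in binder_thread_read can be written as pure functions: which todo list to serve, and whether to ask user space for another looper thread. The sketch uses hypothetical `toy_*` names and plain booleans in place of the driver's list checks and looper flags. It mirrors only the conditions visible in the excerpt.

```c
#include <stdbool.h>

enum toy_list { TOY_LIST_NONE, TOY_LIST_THREAD, TOY_LIST_PROC };

/* The thread's own todo list is served first, the process's list second. */
static enum toy_list toy_pick_list(bool thread_todo_empty,
                                   bool proc_todo_empty,
                                   bool wait_for_proc_work)
{
    if (!thread_todo_empty)
        return TOY_LIST_THREAD;
    if (!proc_todo_empty && wait_for_proc_work)
        return TOY_LIST_PROC;
    return TOY_LIST_NONE;
}

/* Reduced stand-in for the thread-pool counters in struct binder_proc. */
struct toy_proc {
    int requested_threads;         /* spawn requests still outstanding */
    int requested_threads_started; /* threads started on request so far */
    int max_threads;               /* limit configured by user space */
    bool has_waiting_threads;      /* true if some thread is idle */
};

/* Mirrors the BR_SPAWN_LOOPER condition from the excerpt. */
static bool toy_should_spawn(const struct toy_proc *p, bool thread_is_looper)
{
    return p->requested_threads == 0 &&
           !p->has_waiting_threads &&
           p->requested_threads_started < p->max_threads &&
           thread_is_looper;
}
```

In this model a new thread is requested only when no request is outstanding, no thread is idle, the pool is below its limit, and the current thread is itself a registered or entered looper.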

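This article explains in detail how Binder communication works in Android. It covers the client-side and server-side flows and the key functions binder_ioctl, binder_thread_write, and binder_transaction, and shows how the driver layer and the IPC layer interact through cmd codes.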
