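Preface:

This post walks through converting a YOLOv8 model into an RKNN model that runs on the RK3588. The author has tested these steps and confirmed that they work, so readers can use them as a reference.

The .pt to .rknn conversion relies on the following three repositories:

1. ultralytics_yolov8: https://github.com/airockchip/ultralytics_yolov8

2. rknn_model_zoo: https://github.com/airockchip/rknn_model_zoo

3. rknn-toolkit2: https://github.com/airockchip/rknn-toolkit2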

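1. Training the YOLOv8 model

Tip: if your changes to the YOLOv8 training code seem to have no effect, or the code fails when run directly, the usual cause is a name clash. The ultralytics folder inside the YOLOv8 project (and YOLOv10, which reuses the YOLOv8 project structure) has the same name as the third-party package installed by pip install ultralytics, so training with the yolo command in a terminal resolves to the pip-installed package by default.

Run the following command in the project root so that the installed ultralytics package points at the project's ultralytics folder (an editable install). Edits made in the project then take effect directly.

pip install -e .

This article does not cover YOLOv8 training in detail. Refer to other tutorials and train as usual.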
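To confirm which copy of ultralytics Python resolves after the editable install, one option is to look up the package location. This is a minimal sketch; `package_location` is a hypothetical helper name and not part of ultralytics.

```python
import importlib.util
from pathlib import Path
from typing import Optional


def package_location(name: str) -> Optional[Path]:
    """Return the directory a top-level package would be imported from, or None."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.origin is None:
        return None
    return Path(spec.origin).resolve().parent


location = package_location("ultralytics")
if location is None:
    print("ultralytics is not installed in this environment")
else:
    # After an editable install this should point inside the cloned project,
    # not inside site-packages.
    print("ultralytics resolves to:", location)
```

If the printed path is inside site-packages rather than the cloned project, the yolo command is still using the pip-installed copy.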

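Reminder: some authors recommend changing the activation function for training. Leaving it unchanged has little effect on the later steps. Using ReLU() improves detection speed but costs a little accuracy, so choose according to your needs.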

# default_act = nn.SiLU() # default activation

default_act = nn.ReLU()

2. Converting the .pt model to ONNX (Windows/Ubuntu)

1. Download the repository to your machine, either as a zip archive or with git.

ultralytics_yolov8: https://gitcode.com/gh_mirrors/ul/ultralytics_yolov8/overview?utm_source=csdn_github_accelerator&isLogin=1

Set up the environment:

If the project has no requirements.txt, create one yourself with the following content:

# Ultralytics requirements

# Usage: pip install -r requirements.txt

# Base ----------------------------------------

matplotlib>=3.2.2

numpy>=1.22.2 # pinned by Snyk to avoid a vulnerability

opencv-python>=4.6.0

pillow>=7.1.2

pyyaml>=5.3.1

requests>=2.23.0

scipy>=1.4.1

torch>=1.7.0

torchvision>=0.8.1

tqdm>=4.64.0

onnx==1.12

# Logging -------------------------------------

# tensorboard>=2.13.0

# dvclive>=2.12.0

# clearml

# comet

# Plotting ------------------------------------

pandas>=1.1.4

seaborn>=0.11.0

# Export --------------------------------------

# coremltools>=7.0.b1 # CoreML export

# onnx>=1.12.0 # ONNX export

# onnxsim>=0.4.1 # ONNX simplifier

# nvidia-pyindex # TensorRT export

# nvidia-tensorrt # TensorRT export

# scikit-learn==0.19.2 # CoreML quantization

# tensorflow>=2.4.1 # TF exports (-cpu, -aarch64, -macos)

# tflite-support

# tensorflowjs>=3.9.0 # TF.js export

# openvino-dev>=2023.0 # OpenVINO export

# Extras --------------------------------------

psutil # system utilization

py-cpuinfo # display CPU info

# thop>=0.1.1 # FLOPs computation

# ipython # interactive notebook

# albumentations>=1.0.3 # training augmentations

# pycocotools>=2.0.6 # COCO mAP

# roboflow

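Run:

pip install -r requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

Important: be sure to run the command below, otherwise the activation function change made in the next step will not take effect.

pip install -e .

Change the activation function:

Modify the Conv class in ultralytics/nn/modules/conv.py: change default_act = nn.SiLU() # default activation to default_act = nn.ReLU() # default activation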
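To double-check that the edit landed in the file, a small text check can be sketched as below. The regular expression and the helper name `find_default_act` are assumptions for illustration. It only looks for an uncommented line of the form `default_act = nn.<Name>()`.

```python
import re
from typing import Optional

# Matches an uncommented assignment such as:
#     default_act = nn.ReLU()  # default activation
_DEFAULT_ACT = re.compile(r"^[ \t]*default_act[ \t]*=[ \t]*nn\.(\w+)\(", re.MULTILINE)


def find_default_act(source: str) -> Optional[str]:
    """Return the activation class name assigned to default_act, or None if absent."""
    match = _DEFAULT_ACT.search(source)
    return match.group(1) if match else None


sample = """
class Conv(nn.Module):
    # default_act = nn.SiLU()  # default activation
    default_act = nn.ReLU()  # default activation
"""
print(find_default_act(sample))
```

For the real file, the same function can be applied to the text of ultralytics/nn/modules/conv.py, for example via `pathlib.Path(...).read_text()`.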

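Run the conversion:

Place the weights file you want to convert at the same level as the ultralytics folder.

Run the following command. Remember to install the onnx module first, preferably onnx==1.12: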

yolo export model=yolov8m.pt format=rknn

Note: for your own weights, pass their path: yolo export model=/your_path/best.pt format=rknn

Be sure to use format=rknn!

Result: if the log shows output shape(s) ((1, 64, 80, 80), (1, 2, 80, 80), (1, 1, 80, 80), (1, 64, 40, 40), (1, 2, 40, 40), (1, 1, 40, 40), (1, 64, 20, 20), (1, 2, 20, 20), (1, 1, 20, 20)) (148.5 MB), the model has been exported to an ONNX structure suitable for conversion to RKNN.
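The nine shapes follow a regular pattern: three detection branches (strides 8, 16, and 32 for a 640×640 input), each emitting a box tensor, a per-class score tensor, and a one-channel score-sum tensor. The sketch below reproduces the list, assuming 64 box channels (4 sides × 16 DFL bins) and a 2-class model as in the log above. `expected_output_shapes` is a hypothetical helper, not part of any of the three repositories.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int, int]


def expected_output_shapes(img_size: int = 640, num_classes: int = 2,
                           strides: Tuple[int, ...] = (8, 16, 32),
                           box_channels: int = 64) -> List[Shape]:
    """List the output shapes expected from the RKNN-style export."""
    shapes: List[Shape] = []
    for stride in strides:
        grid = img_size // stride
        shapes.append((1, box_channels, grid, grid))  # DFL box distribution
        shapes.append((1, num_classes, grid, grid))   # per-class scores
        shapes.append((1, 1, grid, grid))             # score sum
    return shapes


for shape in expected_output_shapes():
    print(shape)
```

With your own model, only the class-score channel count should differ: it equals the number of classes you trained on.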

Open the ONNX model in https://netron.app/ to inspect the network structure.

If the output nodes shown in Netron match the shapes listed above, the .pt model was converted successfully.

3. Converting the ONNX model to RKNN (Ubuntu only)

- Download rknn-toolkit2: https://github.com/airockchip/rknn-toolkit2

- Create a virtual environment:

conda create -n rknn220 python=3.9

- Go into the folder

rknn-toolkit2-2.1.0\rknn-toolkit2-2.1.0\rknn-toolkit2\packages

and run the following commands in that directory to install the RKNN environment:

pip install -r requirements_cp39-2.2.0.txt -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install rknn_toolkit2-2.2.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl

- Download rknn_model_zoo: https://github.com/airockchip/rknn_model_zoo

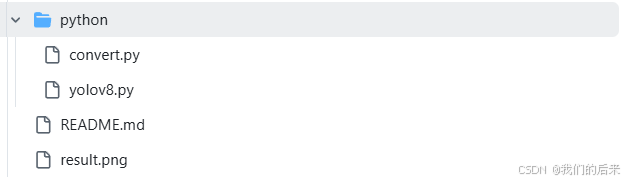

Copy the files into this environment, then go into the following directory:

rknn_model_zoo/examples/yolov8/python

Edit convert.py, changing the paths to your own:

DATASET_PATH = '/rk3588/rknpu2/examples/rknn_yolov8_demo/coco_subset_20.txt'

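DEFAULT_RKNN_PATH = '/rk3588/rknpu2/examples/rknn_yolov8_demo/best.rknn'

Finally, run the following command:

python convert.py yolov8s.onnx rk3588

If the conversion succeeds, the .rknn file is written to the path set in DEFAULT_RKNN_PATH.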
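For orientation, the core of an ONNX to RKNN conversion with rknn-toolkit2 can be sketched as below. This is a simplified sketch, not the actual convert.py. The helper names `parse_args` and `convert`, the `i8`/`fp` dtype convention, the placeholder paths, and the mean/std values are assumptions to check against the convert.py in your checkout.

```python
from typing import List, Tuple

DATASET_PATH = "./coco_subset_20.txt"  # assumed: text file listing calibration images
DEFAULT_RKNN_PATH = "./best.rknn"      # assumed output location


def parse_args(argv: List[str]) -> Tuple[str, str, bool, str]:
    """Parse: convert.py <onnx_model> <platform> [i8|fp] [output.rknn]."""
    if len(argv) < 3:
        raise SystemExit("usage: python convert.py <onnx_model> <platform> [i8|fp] [output.rknn]")
    model_path, platform = argv[1], argv[2]
    dtype = argv[3] if len(argv) > 3 else "i8"
    if dtype not in ("i8", "fp"):
        raise SystemExit(f"unsupported dtype: {dtype}")
    output_path = argv[4] if len(argv) > 4 else DEFAULT_RKNN_PATH
    return model_path, platform, dtype == "i8", output_path


def convert(model_path: str, platform: str, do_quant: bool, output_path: str) -> None:
    # Imported lazily so parse_args can be used without rknn-toolkit2 installed.
    from rknn.api import RKNN

    rknn = RKNN(verbose=False)
    try:
        # Assumed preprocessing: scale 0..255 pixel values to 0..1.
        rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]],
                    target_platform=platform)
        if rknn.load_onnx(model=model_path) != 0:
            raise RuntimeError("load_onnx failed")
        if rknn.build(do_quantization=do_quant, dataset=DATASET_PATH) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(output_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()


# With rknn-toolkit2 installed, the conversion itself would be:
#     convert(*parse_args(["convert.py", "yolov8s.onnx", "rk3588"]))
print(parse_args(["convert.py", "yolov8s.onnx", "rk3588"]))
```

Quantization (the `i8` case here) needs the calibration image list in DATASET_PATH, which is why that path has to point at a file that exists on your machine.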

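4. Testing on the RK3588:

For the RK3588 environment, see the article "RK3588 板子实现yolov5 模型转化推理" (YOLOv5 model conversion and inference on an RK3588 board), which describes in detail how to install the RKNN toolkit. YOLOv5 and YOLOv8 use the same environment.

After setting up the environment, create a new folder named yolov8_demo and place the following scripts in it:

coco_utils.py (provides the COCO_test_helper class imported by the scripts below; a copy should be available under py_utils in rknn_model_zoo)

Video inference script (reads test_demo.mp4 and writes the annotated video to res.mp4):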

import os

import cv2

from rknnlite.api import RKNNLite

import numpy as np

import time

from coco_utils import COCO_test_helper

current_path = os.path.dirname(os.path.abspath(__file__))

print(current_path )

RKNN_MODEL = current_path + "/best.rknn"

DATASET = current_path + '/test_demo.mp4'

QUANTIZE_ON = False

# CLASSES = ['person','bicycle','car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

# 'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

# 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

# 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

# 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

# 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

# 'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

# 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

# 'hair drier', 'toothbrush' ]

CLASSES = ['fire','smoke' ]

OBJ_THRESH = 0.45

NMS_THRESH = 0.45

MODEL_SIZE = (640, 640)

color_palette = np.random.uniform(0, 255, size=(len(CLASSES), 3))

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with object threshold."""
    box_confidences = box_confidences.reshape(-1)
    candidate, class_num = box_class_probs.shape

    class_max_score = np.max(box_class_probs, axis=-1)
    classes = np.argmax(box_class_probs, axis=-1)

    _class_pos = np.where(class_max_score * box_confidences >= OBJ_THRESH)
    scores = (class_max_score * box_confidences)[_class_pos]

    boxes = boxes[_class_pos]
    classes = classes[_class_pos]
    return boxes, classes, scores

def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep

def softmax(x, axis=None):
    x = x - x.max(axis=axis, keepdims=True)
    y = np.exp(x)
    return y / y.sum(axis=axis, keepdims=True)

def dfl(position):
    # Distribution Focal Loss (DFL)
    import torch
    x = torch.tensor(position)
    n, c, h, w = x.shape
    p_num = 4
    mc = c // p_num
    y = x.reshape(n, p_num, mc, h, w)
    y = y.softmax(2)
    acc_metrix = torch.tensor(range(mc)).float().reshape(1, 1, mc, 1, 1)
    y = (y * acc_metrix).sum(2)
    return y.numpy()

def box_process(position):
    grid_h, grid_w = position.shape[2:4]
    col, row = np.meshgrid(np.arange(0, grid_w), np.arange(0, grid_h))
    col = col.reshape(1, 1, grid_h, grid_w)
    row = row.reshape(1, 1, grid_h, grid_w)
    grid = np.concatenate((col, row), axis=1)
    stride = np.array([MODEL_SIZE[1] // grid_h, MODEL_SIZE[0] // grid_w]).reshape(1, 2, 1, 1)

    position = dfl(position)
    box_xy = grid + 0.5 - position[:, 0:2, :, :]
    box_xy2 = grid + 0.5 + position[:, 2:4, :, :]
    xyxy = np.concatenate((box_xy * stride, box_xy2 * stride), axis=1)

    return xyxy

def post_process(input_data):
    boxes, scores, classes_conf = [], [], []
    default_branch = 3
    pair_per_branch = len(input_data) // default_branch
    # The Python demo ignores the score_sum output
    for i in range(default_branch):
        boxes.append(box_process(input_data[pair_per_branch * i]))
        classes_conf.append(input_data[pair_per_branch * i + 1])
        scores.append(np.ones_like(input_data[pair_per_branch * i + 1][:, :1, :, :], dtype=np.float32))

    def sp_flatten(_in):
        ch = _in.shape[1]
        _in = _in.transpose(0, 2, 3, 1)
        return _in.reshape(-1, ch)

    boxes = [sp_flatten(_v) for _v in boxes]
    classes_conf = [sp_flatten(_v) for _v in classes_conf]
    scores = [sp_flatten(_v) for _v in scores]

    boxes = np.concatenate(boxes)
    classes_conf = np.concatenate(classes_conf)
    scores = np.concatenate(scores)

    # filter according to threshold
    boxes, classes, scores = filter_boxes(boxes, scores, classes_conf)

    # nms, applied per class
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        cls = classes[inds]
        s = scores[inds]
        keep = nms_boxes(b, s)

        if len(keep) != 0:
            nboxes.append(b[keep])
            nclasses.append(cls[keep])
            nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores

def draw(image, boxes, scores, classes):
    for box, score, cl in zip(boxes, scores, classes):
        # Boxes are in x1, y1, x2, y2 order
        x1, y1, x2, y2 = [int(_b) for _b in box]
        print("%s @ (%d %d %d %d) %.3f" % (CLASSES[cl], x1, y1, x2, y2, score))
        cv2.rectangle(image, (x1, y1), (x2, y2), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (x1, y1 - 6), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

if __name__ == '__main__':
    # Create the RKNNLite object
    rknn_lite = RKNNLite()

    # Load the RKNN model
    print('--> Load RKNN model')
    ret = rknn_lite.load_rknn(RKNN_MODEL)
    if ret != 0:
        print('Load RKNN model failed')
        exit(ret)
    print('done')

    # Initialize the runtime environment
    print('--> Init runtime environment')
    # run on RK356x/RK3588 with Debian OS, do not need specify target.
    ret = rknn_lite.init_runtime()
    if ret != 0:
        print('Init runtime environment failed!')
        exit(ret)
    print('done')

    co_helper = COCO_test_helper(enable_letter_box=True)
    frame_count = 0

    cap = cv2.VideoCapture(DATASET)
    # Check the input before creating the writer, so a bad path fails early
    if not cap.isOpened():
        print("Error: Could not open video.")
        exit()

    # Save the annotated video to a new file
    output_video_path = './res.mp4'
    frame_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
    frame_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))
    fps = cap.get(cv2.CAP_PROP_FPS)

    # Create the VideoWriter object that stores the new video
    fourcc = cv2.VideoWriter_fourcc(*'mp4v')  # codec
    out = cv2.VideoWriter(output_video_path, fourcc, fps, (frame_width, frame_height))

    while True:
        ret, img_src = cap.read()
        # img_src = cv2.imread(current_path + "/" + img_path.split("\n")[0])
        if not ret:
            break

        pad_color = (0, 0, 0)
        img = co_helper.letter_box(im=img_src.copy(), new_shape=(MODEL_SIZE[1], MODEL_SIZE[0]), pad_color=pad_color)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        input = np.expand_dims(img, axis=0)

        # Inference
        print('--> Running model')
        time1 = time.time()
        outputs = rknn_lite.inference(inputs=[input])
        time2 = time.time()
        print('done! Calculate time is ' + str(time2 - time1))

        boxes, classes, scores = post_process(outputs)

        img_p = img_src.copy()
        if boxes is not None:
            print(boxes)
            draw(img_p, co_helper.get_real_box(boxes), scores, classes)
        # frame_count += 1
        # cv2.imwrite(f'./res/res_{frame_count}.jpg', img_p)
        # cv2.namedWindow("full post process result", cv2.WINDOW_NORMAL)
        # cv2.resizeWindow("full post process result", 800, 600)
        # cv2.imshow("full post process result", img_p)
        # cv2.waitKeyEx(0)

        # Write every frame, with or without detections
        out.write(img_p)

    out.release()
    cap.release()
    rknn_lite.release()

test_lite.py

import os

import cv2

from rknnlite.api import RKNNLite

import numpy as np

import time

from coco_utils import COCO_test_helper

current_path = os.path.dirname(os.path.abspath(__file__))

print(current_path )

RKNN_MODEL = current_path + "/best.rknn"

DATASET = current_path + '/dataset.txt'

QUANTIZE_ON = False

# CLASSES = ['person','bicycle','car', 'motorcycle', 'airplane', 'bus', 'train', 'truck', 'boat', 'traffic light',

# 'fire hydrant', 'stop sign', 'parking meter', 'bench', 'bird', 'cat', 'dog', 'horse', 'sheep', 'cow',

# 'elephant', 'bear', 'zebra', 'giraffe', 'backpack', 'umbrella', 'handbag', 'tie', 'suitcase', 'frisbee',

# 'skis', 'snowboard', 'sports ball', 'kite', 'baseball bat', 'baseball glove', 'skateboard', 'surfboard',

# 'tennis racket', 'bottle', 'wine glass', 'cup', 'fork', 'knife', 'spoon', 'bowl', 'banana', 'apple',

# 'sandwich', 'orange', 'broccoli', 'carrot', 'hot dog', 'pizza', 'donut', 'cake', 'chair', 'couch',

# 'potted plant', 'bed', 'dining table', 'toilet', 'tv', 'laptop', 'mouse', 'remote', 'keyboard', 'cell phone',

# 'microwave', 'oven', 'toaster', 'sink', 'refrigerator', 'book', 'clock', 'vase', 'scissors', 'teddy bear',

# 'hair drier', 'toothbrush' ]

CLASSES = ['fire','smoke' ]

OBJ_THRESH = 0.45

NMS_THRESH = 0.45

MODEL_SIZE = (640, 640)

color_palette = np.random.uniform(0, 255, size=(len(CLASSES), 3))

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def filter_boxes(boxes, box_confidences, box_class_probs):
    """Filter boxes with object threshold."""
    box_confidences = box_confidences.reshape(-1)
    candidate, class_num = box_class_probs.shape

    class_max_score = np.max(box_class_probs, axis=-1)
    classes = np.argmax(box_class_probs, axis=-1)

    _class_pos = np.where(class_max_score * box_confidences >= OBJ_THRESH)
    scores = (class_max_score * box_confidences)[_class_pos]

    boxes = boxes[_class_pos]
    classes = classes[_class_pos]
    return boxes, classes, scores

def nms_boxes(boxes, scores):
    """Suppress non-maximal boxes.

    # Returns
        keep: ndarray, index of effective boxes.
    """
    x = boxes[:, 0]
    y = boxes[:, 1]
    w = boxes[:, 2] - boxes[:, 0]
    h = boxes[:, 3] - boxes[:, 1]

    areas = w * h
    order = scores.argsort()[::-1]

    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(i)

        xx1 = np.maximum(x[i], x[order[1:]])
        yy1 = np.maximum(y[i], y[order[1:]])
        xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])
        yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])

        w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)
        h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)
        inter = w1 * h1

        ovr = inter / (areas[i] + areas[order[1:]] - inter)
        inds = np.where(ovr <= NMS_THRESH)[0]
        order = order[inds + 1]
    keep = np.array(keep)
    return keep

def softmax(x, axis=None):
    x = x - x.max(axis=axis, keepdims=True)
    y = np.exp(x)
    return y / y.sum(axis=axis, keepdims=True)

def dfl(position):
    # Distribution Focal Loss (DFL)
    import torch
    x = torch.tensor(position)
    n, c, h, w = x.shape
    p_num = 4
    mc = c // p_num
    y = x.reshape(n, p_num, mc, h, w)
    y = y.softmax(2)
    acc_metrix = torch.tensor(range(mc)).float().reshape(1, 1, mc, 1, 1)
    y = (y * acc_metrix).sum(2)
    return y.numpy()

def box_process(position):
    grid_h, grid_w = position.shape[2:4]
    col, row = np.meshgrid(np.arange(0, grid_w), np.arange(0, grid_h))
    col = col.reshape(1, 1, grid_h, grid_w)
    row = row.reshape(1, 1, grid_h, grid_w)
    grid = np.concatenate((col, row), axis=1)
    stride = np.array([MODEL_SIZE[1] // grid_h, MODEL_SIZE[0] // grid_w]).reshape(1, 2, 1, 1)

    position = dfl(position)
    box_xy = grid + 0.5 - position[:, 0:2, :, :]
    box_xy2 = grid + 0.5 + position[:, 2:4, :, :]
    xyxy = np.concatenate((box_xy * stride, box_xy2 * stride), axis=1)

    return xyxy

def post_process(input_data):
    boxes, scores, classes_conf = [], [], []
    default_branch = 3
    pair_per_branch = len(input_data) // default_branch
    # The Python demo ignores the score_sum output
    for i in range(default_branch):
        boxes.append(box_process(input_data[pair_per_branch * i]))
        classes_conf.append(input_data[pair_per_branch * i + 1])
        scores.append(np.ones_like(input_data[pair_per_branch * i + 1][:, :1, :, :], dtype=np.float32))

    def sp_flatten(_in):
        ch = _in.shape[1]
        _in = _in.transpose(0, 2, 3, 1)
        return _in.reshape(-1, ch)

    boxes = [sp_flatten(_v) for _v in boxes]
    classes_conf = [sp_flatten(_v) for _v in classes_conf]
    scores = [sp_flatten(_v) for _v in scores]

    boxes = np.concatenate(boxes)
    classes_conf = np.concatenate(classes_conf)
    scores = np.concatenate(scores)

    # filter according to threshold
    boxes, classes, scores = filter_boxes(boxes, scores, classes_conf)

    # nms, applied per class
    nboxes, nclasses, nscores = [], [], []
    for c in set(classes):
        inds = np.where(classes == c)
        b = boxes[inds]
        cls = classes[inds]
        s = scores[inds]
        keep = nms_boxes(b, s)

        if len(keep) != 0:
            nboxes.append(b[keep])
            nclasses.append(cls[keep])
            nscores.append(s[keep])

    if not nclasses and not nscores:
        return None, None, None

    boxes = np.concatenate(nboxes)
    classes = np.concatenate(nclasses)
    scores = np.concatenate(nscores)

    return boxes, classes, scores

def draw(image, boxes, scores, classes):
    for box, score, cl in zip(boxes, scores, classes):
        # Boxes are in x1, y1, x2, y2 order
        x1, y1, x2, y2 = [int(_b) for _b in box]
        print("%s @ (%d %d %d %d) %.3f" % (CLASSES[cl], x1, y1, x2, y2, score))
        cv2.rectangle(image, (x1, y1), (x2, y2), (255, 0, 0), 2)
        cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),
                    (x1, y1 - 6), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

if __name__ == '__main__':
    # Create the RKNNLite object
    rknn_lite = RKNNLite()

    # Load the RKNN model
    print('--> Load RKNN model')
    ret = rknn_lite.load_rknn(RKNN_MODEL)
    if ret != 0:
        print('Load RKNN model failed')
        exit(ret)
    print('done')

    # Initialize the runtime environment
    print('--> Init runtime environment')
    # run on RK356x/RK3588 with Debian OS, do not need specify target.
    ret = rknn_lite.init_runtime()
    if ret != 0:
        print('Init runtime environment failed!')
        exit(ret)
    print('done')

    co_helper = COCO_test_helper(enable_letter_box=True)

    with open(DATASET, "r") as f:
        img_paths = f.readlines()

    for img_path in img_paths:
        img_path = img_path.strip()
        if not img_path:
            continue  # skip blank lines in dataset.txt
        img_src = cv2.imread(current_path + "/" + img_path)
        if img_src is None:
            print('Could not read image: ' + img_path)
            continue

        pad_color = (0, 0, 0)
        img = co_helper.letter_box(im=img_src.copy(), new_shape=(MODEL_SIZE[1], MODEL_SIZE[0]), pad_color=pad_color)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        input = np.expand_dims(img, axis=0)

        # Inference
        print('--> Running model')
        time1 = time.time()
        outputs = rknn_lite.inference(inputs=[input])
        time2 = time.time()
        print('done! Calculate time is ' + str(time2 - time1))

        boxes, classes, scores = post_process(outputs)

        img_p = img_src.copy()
        if boxes is not None:
            print(boxes)
            draw(img_p, co_helper.get_real_box(boxes), scores, classes)

        # Note: every image is saved under the same name, so only the last one remains
        cv2.imwrite("./res1.jpg", img_p)
        # cv2.namedWindow("full post process result", cv2.WINDOW_NORMAL)
        # cv2.resizeWindow("full post process result", 800, 600)
        # cv2.imshow("full post process result", img_p)
        # cv2.waitKeyEx(0)

    rknn_lite.release()

Update the file paths, image size, class names, and other settings in test_lite.py to match your own model.
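The dfl function in the scripts above imports torch only for a softmax and a weighted sum. If installing PyTorch on the board is inconvenient, the same computation can be sketched with NumPy alone. This is an alternative sketch rather than the author's code: `dfl_numpy` is a hypothetical name, and it assumes the box tensor has shape (n, 4 * bins, h, w) as in the scripts above.

```python
import numpy as np


def dfl_numpy(position: np.ndarray) -> np.ndarray:
    """Decode DFL box distributions into expected distances, shape (n, 4, h, w)."""
    n, c, h, w = position.shape
    p_num = 4                      # left, top, right, bottom
    mc = c // p_num                # number of bins per side
    y = position.reshape(n, p_num, mc, h, w).astype(np.float32)
    # Numerically stable softmax over the bin axis
    y = y - y.max(axis=2, keepdims=True)
    y = np.exp(y)
    y = y / y.sum(axis=2, keepdims=True)
    # Expected value: sum of bin index times probability
    bins = np.arange(mc, dtype=np.float32).reshape(1, 1, mc, 1, 1)
    return (y * bins).sum(axis=2)


# Uniform logits over 16 bins give the mean bin index for every side.
demo = dfl_numpy(np.zeros((1, 64, 2, 2), dtype=np.float32))
print(demo.shape, float(demo[0, 0, 0, 0]))
```

Swapping it in would mean calling `dfl_numpy(position)` instead of `dfl(position)` inside box_process. Compare the outputs of both versions on a real frame before relying on it.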

Run the following command:

python3 test_lite.py

The script prints each detection and saves the annotated image to ./res1.jpg.
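To make the box decoding in box_process concrete: after DFL, each grid cell holds four distances (left, top, right, bottom) in grid units, measured from the cell centre, and multiplying by the stride gives pixel coordinates in the 640×640 model input. Here is a worked sketch for a single cell. `decode_cell` is a hypothetical helper and not part of the scripts.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]


def decode_cell(col: int, row: int, stride: int, ltrb: Box) -> Box:
    """Convert per-cell distances (in grid units) to an x1, y1, x2, y2 box in pixels."""
    left, top, right, bottom = ltrb
    cx, cy = col + 0.5, row + 0.5  # cell centre in grid units
    x1 = (cx - left) * stride
    y1 = (cy - top) * stride
    x2 = (cx + right) * stride
    y2 = (cy + bottom) * stride
    return x1, y1, x2, y2


# Cell (col=10, row=5) on the 80x80 branch, which has stride 8
print(decode_cell(10, 5, 8, (1.0, 2.0, 3.0, 4.0)))  # (76.0, 28.0, 108.0, 76.0)
```

These coordinates are still in the letterboxed model input. In the scripts, co_helper.get_real_box maps them back to the original image before drawing.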
