I. Data preparation

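This post only aims to document the training process in detail, so we don't need much data. We use the Abyssinian cat class from the Oxford-IIIT Pet dataset as the example. The Oxford-IIIT Pet dataset can be downloaded here. It is small, under 800 MB, and contains only 232 images of Abyssinian cats, which look like this: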

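To train a Mask R-CNN instance segmentation model, we first need masks for the images. We create them with the annotation tool labelme. It supports Windows and Ubuntu; install it with (sudo) pip install labelme, plus its dependency: (sudo) pip install pyqt5. After installing labelme, run labelme from the command line and an annotation window opens: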

Click Save and choose where to save the annotation as a JSON file, e.g. Abyssinian_65.json.
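The saved JSON is plain text. The scripts in this post read only a few of its keys: shapes (each entry has a label and a list of [x, y] points), imagePath, and optionally imageData (the base64-encoded image). The sketch below inspects one file. summarize_labelme_json is a hypothetical helper, and the other keys in the file vary between labelme versions:

```python
import json
from collections import Counter


def summarize_labelme_json(json_text):
    """Return (image file name, {label: polygon count}, whether imageData is embedded)."""
    data = json.loads(json_text)
    # Keep only the file name; accept either '/' or '\\' as the path separator.
    image_name = (data.get('imagePath') or '').replace('\\', '/').split('/')[-1]
    # One entry per annotated polygon, grouped by its label (e.g. 'Abyssinian', 'hole').
    counts = Counter(shape['label'] for shape in data.get('shapes', []))
    return image_name, dict(counts), bool(data.get('imageData'))
```

For example, summarize_labelme_json(open('Abyssinian_65.json').read()) returns the image name, the number of polygons per label, and whether the image itself is embedded in the JSON.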

In a terminal, go to the folder containing Abyssinian_65.json and run labelme_json_to_dataset Abyssinian_65.json

This creates a folder named Abyssinian_65_json in the current directory, containing the following files:

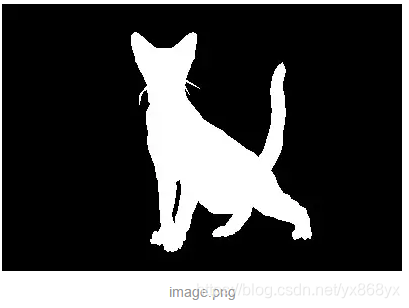

The label.png image in that folder:

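is exactly the kind of instance segmentation mask often seen in public datasets. This paragraph only illustrates labelme's complete workflow; this post does not actually need this step!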

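However, labelme has a major limitation: it can only annotate closed polygons. If an object instance contains a hole (such as the gap between the cat's legs in the second image, Abyssinian_65.jpg), the hole is counted as part of the instance, which is clearly wrong. To work around this, add an extra class, hole, while annotating (the green region in the figure above). When the annotations are used, simply remove the hole regions, for example: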
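Removing a hole amounts to a pixel-wise subtraction: keep the object's pixels and clear those covered by any hole. A minimal NumPy sketch on a made-up 6x6 grid (remove_holes is a hypothetical helper; the conversion script later in this post does the equivalent with cv2.fillPoly(mask, hole_polygons, 0)):

```python
import numpy as np


def remove_holes(object_mask, hole_masks):
    """Return a 0-1 uint8 mask with every hole region cleared."""
    result = object_mask.astype(bool)
    for hole in hole_masks:
        result &= ~hole.astype(bool)  # drop pixels that lie inside this hole
    return result.astype(np.uint8)


# Toy example: a 4x4 "cat" square with a 2x2 gap between its "legs".
cat = np.zeros((6, 6), dtype=np.uint8)
cat[1:5, 1:5] = 1
gap = np.zeros((6, 6), dtype=np.uint8)
gap[3:5, 2:4] = 1
cleaned = remove_holes(cat, [gap])
print(int(cat.sum()), int(cleaned.sum()))  # 16 12
```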

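For training, TensorFlow requires each mask to be a binary (0-1) PNG image of the same size as the original image (like the one above), and the input data must be TFRecord files. We therefore also need a helper Python script for the format conversion, which can be modeled on create_coco_tf_record.py from the official TensorFlow Object Detection API.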
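In practice, "binary (0-1) PNG" means the stored pixel values are 0 and 1 (so the file looks almost black), not 0 and 255. The conversion script below does the encoding with PIL. To make the byte layout visible without extra dependencies, this sketch writes the same kind of 8-bit grayscale PNG using only zlib, struct and NumPy. encode_mask_png is a hypothetical helper, not part of TensorFlow or labelme:

```python
import struct
import zlib

import numpy as np


def _chunk(chunk_type, data):
    # PNG chunk layout: 4-byte length, 4-byte type, payload, CRC32 over type + payload.
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack('>I', len(data)) + chunk_type + data + struct.pack('>I', crc)


def encode_mask_png(mask):
    """Encode a 2-D 0-1 mask as an 8-bit grayscale PNG; pixel values stay 0 and 1."""
    mask = np.asarray(mask, dtype=np.uint8)
    if mask.ndim != 2 or np.any(mask > 1):
        raise ValueError('expected a 2-D mask containing only 0 and 1')
    height, width = mask.shape
    # Each scanline is prefixed with filter type 0 (no filtering).
    raw = b''.join(b'\x00' + row.tobytes() for row in mask)
    # IHDR: width, height, bit depth 8, color type 0 (grayscale), default methods.
    ihdr = struct.pack('>IIBBBBB', width, height, 8, 0, 0, 0, 0)
    return (b'\x89PNG\r\n\x1a\n'
            + _chunk(b'IHDR', ihdr)
            + _chunk(b'IDAT', zlib.compress(raw))
            + _chunk(b'IEND', b''))
```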

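Before writing it, note the required input format: for each object in each image, the object's mask is a 0-1 binary image of the same size as the original image, with value 1 inside the object and 0 everywhere else (see the official TensorFlow/object_detection documentation: Run an Instance Segmentation Model/PNG Instance Segmentation Masks). In other words, the masks of all objects in an image have to be split out of that image's single annotation file. This can be done with OpenCV's cv2.fillPoly function, which fills the interior of the given polygons with a user-specified value.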
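To make "one mask per object" concrete, here is a dependency-free sketch that splits a labelme shapes list into per-instance 0-1 masks and clears the hole regions. It illustrates the idea rather than reproducing the post's code. Instead of cv2.fillPoly it uses a simple even-odd point-in-polygon test, sampled at integer pixel coordinates and half-open on the right and bottom edges, so its output can differ from cv2.fillPoly by about one pixel along polygon borders. polygon_to_mask and shapes_to_instance_masks are hypothetical names:

```python
import numpy as np


def polygon_to_mask(points, height, width):
    """Rasterize one labelme polygon ([[x, y], ...]) into a 0-1 mask (even-odd rule)."""
    pts = np.asarray(points, dtype=float)
    ys, xs = np.mgrid[0:height, 0:width]  # row (y) and column (x) of every pixel
    inside = np.zeros((height, width), dtype=bool)
    n = len(pts)
    with np.errstate(all='ignore'):  # horizontal edges divide by zero; they never count
        for i in range(n):
            x1, y1 = pts[i]
            x2, y2 = pts[(i + 1) % n]
            # Does a ray from the pixel towards +x cross the edge (x1, y1)-(x2, y2)?
            crosses = (y1 > ys) != (y2 > ys)
            x_at_y = x1 + (ys - y1) * (x2 - x1) / (y2 - y1)
            inside ^= crosses & (xs < x_at_y)
    return inside.astype(np.uint8)


def shapes_to_instance_masks(shapes, height, width, hole_label='hole'):
    """One 0-1 mask per non-hole shape, with every hole polygon cleared from it."""
    holes = [polygon_to_mask(s['points'], height, width)
             for s in shapes if s['label'] == hole_label]
    labels, masks = [], []
    for s in shapes:
        if s['label'] == hole_label:
            continue  # holes are not objects of their own
        mask = polygon_to_mask(s['points'], height, width)
        for hole in holes:
            mask[hole == 1] = 0
        labels.append(s['label'])
        masks.append(mask)
    return labels, masks
```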

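PS: labelme makes it quick to produce the JSON annotation files for a Mask R-CNN dataset, but the JSON files still have to be converted into a dataset. You can run labelme_json_to_dataset.exe (path) directly in cmd, but it converts only one JSON file at a time, which is slow.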

1. Open the labelme installation directory

Under labelme's installation directory there is a cli folder (here G:\Anaconda\Lib\site-packages\labelme\cli), which contains json_to_dataset.py.

This file contains the code that converts a JSON file into a dataset, so we only need to modify it.

2. Implementation

Copy json_to_dataset.py and change the code as follows:

```python
import argparse
import base64
import json
import os
import os.path as osp
import warnings

import PIL.Image
import yaml
from labelme import utils

# Written against the older labelme 3.x API (utils.shapes_to_label returning one
# label array, utils.draw_label); newer labelme releases changed both functions.


def main():
    parser = argparse.ArgumentParser()
    # Unlike the stock script, json_file is a *folder* of labelme JSON files.
    parser.add_argument('json_file')
    parser.add_argument('-o', '--out', default=None)
    args = parser.parse_args()

    json_dir = args.json_file
    # Per-file output folders go under --out if given, else the current directory.
    out_root = args.out if args.out is not None else os.getcwd()
    if not osp.exists(out_root):
        os.makedirs(out_root)

    for file_name in sorted(os.listdir(json_dir)):
        path = osp.join(json_dir, file_name)
        # Skip sub-folders and anything that is not a JSON file.
        if not (osp.isfile(path) and file_name.lower().endswith('.json')):
            continue
        with open(path) as f:
            data = json.load(f)

        if data.get('imageData'):
            imageData = data['imageData']
        else:
            # The image is not embedded: read the file referenced by imagePath.
            imagePath = osp.join(osp.dirname(path), data['imagePath'])
            with open(imagePath, 'rb') as f:
                imageData = f.read()
            imageData = base64.b64encode(imageData).decode('utf-8')
        img = utils.img_b64_to_arr(imageData)

        # Assign consecutive integer values to the labels, background = 0.
        label_name_to_value = {'_background_': 0}
        for shape in data['shapes']:
            label_name = shape['label']
            if label_name not in label_name_to_value:
                label_name_to_value[label_name] = len(label_name_to_value)

        # label_values must be dense
        label_values, label_names = [], []
        for ln, lv in sorted(label_name_to_value.items(), key=lambda x: x[1]):
            label_values.append(lv)
            label_names.append(ln)
        assert label_values == list(range(len(label_values)))

        lbl = utils.shapes_to_label(img.shape, data['shapes'], label_name_to_value)

        captions = ['{}: {}'.format(lv, ln)
                    for ln, lv in label_name_to_value.items()]
        lbl_viz = utils.draw_label(lbl, img, captions)

        # e.g. Abyssinian_65.json -> <out_root>/Abyssinian_65_json
        out_dir = osp.join(out_root, file_name.replace('.', '_'))
        if not osp.exists(out_dir):
            os.mkdir(out_dir)

        PIL.Image.fromarray(img).save(osp.join(out_dir, 'img.png'))
        utils.lblsave(osp.join(out_dir, 'label.png'), lbl)
        PIL.Image.fromarray(lbl_viz).save(osp.join(out_dir, 'label_viz.png'))

        with open(osp.join(out_dir, 'label_names.txt'), 'w') as f:
            for lbl_name in label_names:
                f.write(lbl_name + '\n')

        warnings.warn('info.yaml is being replaced by label_names.txt')
        info = dict(label_names=label_names)
        with open(osp.join(out_dir, 'info.yaml'), 'w') as f:
            yaml.safe_dump(info, f, default_flow_style=False)

        print('Saved to: %s' % out_dir)


if __name__ == '__main__':
    main()
```

Then replace the original json_to_dataset.py with this file.

3. Run and check the results

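In cmd, cd to the directory containing labelme_json_to_dataset (the Scripts directory of the Python installation), then run labelme_json_to_dataset.exe followed by a path. The path only needs to point to the folder holding the JSON files, not to an individual JSON file. The batch-generated output folders, one per JSON file, then appear in the current directory, i.e. under Scripts.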
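If you would rather not edit labelme's installed files, you can call the stock command once per JSON file from Python instead. This sketch assumes labelme_json_to_dataset is on PATH and accepts the -o/--out option seen in the script above. build_commands and run_commands are hypothetical helper names:

```python
import glob
import os
import subprocess


def build_commands(json_dir, out_root):
    """Build one labelme_json_to_dataset call per *.json file in json_dir."""
    commands = []
    for json_path in sorted(glob.glob(os.path.join(json_dir, '*.json'))):
        # Same folder naming as the stock tool: Abyssinian_65.json -> Abyssinian_65_json
        folder = os.path.basename(json_path).replace('.', '_')
        commands.append(['labelme_json_to_dataset', json_path,
                         '-o', os.path.join(out_root, folder)])
    return commands


def run_commands(commands, dry_run=True):
    """Print the commands (dry run) or execute them one after another."""
    for cmd in commands:
        if dry_run:
            print(' '.join(cmd))
        else:
            subprocess.run(cmd, check=True)  # stop on the first failing file
```

A dry run first (run_commands(build_commands('path/to/jsons', 'path/to/out'))) shows exactly what will be executed before anything is written.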

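Assume the mask annotations are ready. Since the smallest rectangle enclosing an object's mask is that object's bounding box, we get the object detection annotations at the same time. All that remains is to convert these annotations (the original images plus the JSON files produced by labelme) into a TFRecord file, using the following code (named create_tf_record.py, see GitHub):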

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Sun Aug 26 10:57:09 2018

@author: shirhe-lyh
"""

"""Convert raw dataset to TFRecord for object_detection.

Please note that this tool only applies to labelme's annotations (json files).

Example usage:
    python3 create_tf_record.py \
        --images_dir=your absolute path to read images \
        --annotations_json_dir=your path to annotation json files \
        --label_map_path=your path to label_map.pbtxt \
        --output_path=your path to write .record
"""

import cv2
import glob
import hashlib
import io
import json
import numpy as np
import os
import PIL.Image
import tensorflow as tf  # TensorFlow 1.x API (tf.app, tf.gfile, tf.python_io)

import read_pbtxt_file  # helper from the author's GitHub repo, provides get_label_map_dict()

flags = tf.app.flags
flags.DEFINE_string('images_dir', None, 'Path to images directory.')
flags.DEFINE_string('annotations_json_dir', 'datasets/annotations',
                    'Path to annotations directory.')
flags.DEFINE_string('label_map_path', None, 'Path to label map proto.')
flags.DEFINE_string('output_path', None, 'Path to the output tfrecord.')
FLAGS = flags.FLAGS


def int64_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[value]))


def int64_list_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=value))


def bytes_feature(value):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=[value]))


def bytes_list_feature(value):
    return tf.train.Feature(bytes_list=tf.train.BytesList(value=value))


def float_list_feature(value):
    return tf.train.Feature(float_list=tf.train.FloatList(value=value))


def create_tf_example(annotation_dict, label_map_dict=None):
    """Converts image and annotations to a tf.Example proto.

    Args:
        annotation_dict: A dictionary containing the following keys:
            ['height', 'width', 'filename', 'sha256_key', 'encoded_jpg',
             'format', 'xmins', 'xmaxs', 'ymins', 'ymaxs', 'masks',
             'class_names'].
        label_map_dict: A dictionary mapping class_names to indices.

    Returns:
        example: The converted tf.Example.

    Raises:
        ValueError: If label_map_dict is None or does not contain a class_name.
    """
    if annotation_dict is None:
        return None
    if label_map_dict is None:
        raise ValueError('`label_map_dict` is None')

    height = annotation_dict.get('height', None)
    width = annotation_dict.get('width', None)
    filename = annotation_dict.get('filename', None)
    sha256_key = annotation_dict.get('sha256_key', None)
    encoded_jpg = annotation_dict.get('encoded_jpg', None)
    image_format = annotation_dict.get('format', None)
    xmins = annotation_dict.get('xmins', None)
    xmaxs = annotation_dict.get('xmaxs', None)
    ymins = annotation_dict.get('ymins', None)
    ymaxs = annotation_dict.get('ymaxs', None)
    masks = annotation_dict.get('masks', None)
    class_names = annotation_dict.get('class_names', None)

    labels = []
    for class_name in class_names:
        # No default here, so an unknown class really raises below.
        label = label_map_dict.get(class_name)
        if label is None:
            raise ValueError('`label_map_dict` does not contain {}.'.format(
                class_name))
        labels.append(label)

    # Each instance mask is stored as a PNG-encoded 0-1 image.
    encoded_masks = []
    for mask in masks:
        pil_image = PIL.Image.fromarray(mask.astype(np.uint8))
        output_io = io.BytesIO()
        pil_image.save(output_io, format='PNG')
        encoded_masks.append(output_io.getvalue())

    feature_dict = {
        'image/height': int64_feature(height),
        'image/width': int64_feature(width),
        'image/filename': bytes_feature(filename.encode('utf8')),
        'image/source_id': bytes_feature(filename.encode('utf8')),
        'image/key/sha256': bytes_feature(sha256_key.encode('utf8')),
        'image/encoded': bytes_feature(encoded_jpg),
        'image/format': bytes_feature(image_format.encode('utf8')),
        'image/object/bbox/xmin': float_list_feature(xmins),
        'image/object/bbox/xmax': float_list_feature(xmaxs),
        'image/object/bbox/ymin': float_list_feature(ymins),
        'image/object/bbox/ymax': float_list_feature(ymaxs),
        'image/object/mask': bytes_list_feature(encoded_masks),
        'image/object/class/label': int64_list_feature(labels)}
    example = tf.train.Example(features=tf.train.Features(
        feature=feature_dict))
    return example


def _get_annotation_dict(images_dir, annotation_json_path):
    if (not os.path.exists(images_dir) or
            not os.path.exists(annotation_json_path)):
        return None

    with open(annotation_json_path, 'r') as f:
        json_text = json.load(f)
    shapes = json_text.get('shapes', None)
    if shapes is None:
        return None
    image_relative_path = json_text.get('imagePath', None)
    if image_relative_path is None:
        return None
    image_name = image_relative_path.split('/')[-1]
    image_path = os.path.join(images_dir, image_name)
    image_format = image_name.split('.')[-1].replace('jpg', 'jpeg')
    if not os.path.exists(image_path):
        return None

    with tf.gfile.GFile(image_path, 'rb') as fid:
        encoded_jpg = fid.read()
    image = cv2.imread(image_path)
    height = image.shape[0]
    width = image.shape[1]
    key = hashlib.sha256(encoded_jpg).hexdigest()

    xmins, xmaxs, ymins, ymaxs = [], [], [], []
    masks = []
    class_names = []
    hole_polygons = []
    for mark in shapes:
        class_name = mark.get('label')
        polygon = np.array(mark.get('points'), dtype=np.float64)
        # cv2.fillPoly needs int32 vertex coordinates.
        polygon_int = np.round(polygon).astype(np.int32)
        if class_name == 'hole':
            # Holes are not objects: no class name, mask or box for them.
            hole_polygons.append(polygon_int)
            continue
        class_names.append(class_name)
        mask = np.zeros(image.shape[:2], dtype=np.uint8)
        cv2.fillPoly(mask, [polygon_int], 1)
        masks.append(mask)

        # Bounding box: the smallest rectangle around the polygon, normalized.
        x = polygon[:, 0]
        y = polygon[:, 1]
        xmins.append(float(np.min(x)) / width)
        xmaxs.append(float(np.max(x)) / width)
        ymins.append(float(np.min(y)) / height)
        ymaxs.append(float(np.max(y)) / height)

    # Remove holes in each mask (cv2.fillPoly draws in place).
    if hole_polygons:
        for mask in masks:
            cv2.fillPoly(mask, hole_polygons, 0)

    annotation_dict = {'height': height,
                       'width': width,
                       'filename': image_name,
                       'sha256_key': key,
                       'encoded_jpg': encoded_jpg,
                       'format': image_format,
                       'xmins': xmins,
                       'xmaxs': xmaxs,
                       'ymins': ymins,
                       'ymaxs': ymaxs,
                       'masks': masks,
                       'class_names': class_names}
    return annotation_dict


def main(_):
    if not FLAGS.images_dir or not os.path.exists(FLAGS.images_dir):
        raise ValueError('`images_dir` does not exist.')
    if not os.path.exists(FLAGS.annotations_json_dir):
        raise ValueError('`annotations_json_dir` does not exist.')
    if not FLAGS.label_map_path or not os.path.exists(FLAGS.label_map_path):
        raise ValueError('`label_map_path` does not exist.')
    if not FLAGS.output_path:
        raise ValueError('`output_path` is required.')

    label_map = read_pbtxt_file.get_label_map_dict(FLAGS.label_map_path)

    writer = tf.python_io.TFRecordWriter(FLAGS.output_path)
    num_annotations_skipped = 0
    annotations_json_path = os.path.join(FLAGS.annotations_json_dir, '*.json')
    for i, annotation_file in enumerate(glob.glob(annotations_json_path)):
        if i % 100 == 0:
            print('On image %d' % i)
        annotation_dict = _get_annotation_dict(
            FLAGS.images_dir, annotation_file)
        if annotation_dict is None:
            num_annotations_skipped += 1
            continue
        tf_example = create_tf_example(annotation_dict, label_map)
        writer.write(tf_example.SerializeToString())
    # Close the writer so all records are flushed to disk.
    writer.close()
    print('Skipped %d annotation files.' % num_annotations_skipped)
    print('Successfully created TFRecord to {}.'.format(FLAGS.output_path))


if __name__ == '__main__':
    tf.app.run()
```
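The script imports read_pbtxt_file, a helper that is not listed in this post (it is expected to come from the author's GitHub repo). If that module is not at hand, a rough stand-in for simple label maps might look like the sketch below. parse_label_map_text is hypothetical and only understands item { id: ... name: '...' } blocks, not the full protobuf text format. The Object Detection API's own object_detection.utils.label_map_util.get_label_map_dict reads a label map file into the same kind of {name: id} dict. Either way, the names must match the class labels used in labelme:

```python
import re


def parse_label_map_text(pbtxt_text):
    """Map class names to integer ids, e.g. {'Abyssinian': 1}, from label map text."""
    label_map = {}
    # Each class is one "item { ... }" block; items are not nested.
    for body in re.findall(r'item\s*\{(.*?)\}', pbtxt_text, flags=re.DOTALL):
        id_match = re.search(r'\bid\s*:\s*(\d+)', body)
        # \bname does not match inside display_name, since '_' is a word character.
        name_match = re.search(r'\bname\s*:\s*[\'"]([^\'"]+)[\'"]', body)
        if id_match and name_match:
            label_map[name_match.group(1)] = int(id_match.group(1))
    return label_map
```

To read a file: parse_label_map_text(open(label_map_path).read()).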

II. Training the Mask R-CNN model

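Training works exactly as described in the article "Training your own object detector with TensorFlow": download a Mask R-CNN pre-trained model and configure the training parameters. Pre-trained models are available from the Mask R-CNN pre-trained model link. Note: test images must not be too large, otherwise inference fails with an error.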

III. Testing Mask R-CNN

1. Batch image test code:

```python
import os
import sys
import time

import cv2
import matplotlib
matplotlib.use('Agg')  # results are written to disk, no display needed
from matplotlib import pyplot as plt
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.GraphDef, tf.gfile, tf.Session)
from PIL import Image

# This is needed since the notebook is stored in the object_detection folder.
sys.path.append("..")
from object_detection.utils import ops as utils_ops
from utils import label_map_util
from utils import visualization_utils as vis_util

start = time.time()

# Folder containing the exported model.
MODEL_NAME = 'model_result_mask_rcnn_Abyssinian'
# Path to frozen detection graph. This is the actual model that is used for the object detection.
PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'  # model file
# List of the strings that is used to add correct label for each box.
PATH_TO_LABELS = os.path.join('data', 'Abyssinian_label_map.pbtxt')  # label map path
NUM_CLASSES = 1  # number of classes

detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


def load_image_into_numpy_array(image):
    # Force 3 channels so grayscale or RGBA test images do not break the reshape.
    image = image.convert('RGB')
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


PATH_TO_TEST_IMAGES_DIR = os.path.join(os.getcwd(), 'test_pictures_Abyssinian')  # test images
# Size, in inches, of the output images.
IMAGE_SIZE = (12, 8)
# Output folder
output_path = '/home/yuxin/tensorflow/models-master/research/object_detection/testout_mask_rcnn_Abyssinian/'
if not os.path.exists(output_path):
    os.makedirs(output_path)

# The model and label map are already loaded, so relative paths can now point to the test folder.
os.chdir(PATH_TO_TEST_IMAGES_DIR)
TEST_IMAGE_PATHS = os.listdir(PATH_TO_TEST_IMAGES_DIR)


def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in ['num_detections', 'detection_boxes', 'detection_scores',
                        'detection_classes', 'detection_masks']:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(
                        tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                # Pixels with mask probability above 0.1 count as foreground
                # (the video script below uses 0.5).
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.1), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(
                    detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict[
                'detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


for image_path in TEST_IMAGE_PATHS:
    if not image_path.lower().endswith(('.jpg', '.jpeg', '.png')):
        continue  # skip non-image files in the test folder
    # Print the name so an image that triggers an error can be identified.
    print(image_path)
    image = Image.open(image_path)
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection (draws into image_np in place).
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    plt.close()  # free the figure; otherwise one stays open per image
    # The array is RGB; cv2.imwrite expects BGR.
    image_np = cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR)
    cv2.imwrite(os.path.join(output_path, image_path), image_np)

end = time.time()
print("Execution Time: ", end - start)
```
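The reframing step in run_inference_for_single_image is needed because Mask R-CNN predicts each mask as a small fixed-size grid relative to its detection box. The NumPy sketch below shows the idea: resize the box mask to the box's size in pixels, paste it into an empty full-size image, and threshold it. It simplifies utils_ops.reframe_box_masks_to_image_masks by using nearest-neighbour resampling, whereas the TensorFlow utility interpolates. paste_box_mask is a hypothetical helper. The test values also show why a 0.1 threshold (batch script) yields larger masks than 0.5 (video script) for the same predictions:

```python
import numpy as np


def paste_box_mask(box_mask, box, image_height, image_width, threshold=0.5):
    """Paste a box-relative mask into a full-size 0-1 image mask.

    box is (ymin, xmin, ymax, xmax) in normalized [0, 1] coordinates, the same
    layout as detection_boxes.
    """
    ymin, xmin, ymax, xmax = box
    # Box corners in pixels, clipped to the image.
    top = min(max(int(round(ymin * image_height)), 0), image_height)
    bottom = min(max(int(round(ymax * image_height)), 0), image_height)
    left = min(max(int(round(xmin * image_width)), 0), image_width)
    right = min(max(int(round(xmax * image_width)), 0), image_width)
    full = np.zeros((image_height, image_width), dtype=np.uint8)
    h, w = bottom - top, right - left
    if h <= 0 or w <= 0:
        return full  # degenerate box: nothing to paste
    mh, mw = box_mask.shape
    # Nearest neighbour: map every pixel inside the box back to a cell of the small mask.
    rows = np.arange(h) * mh // h
    cols = np.arange(w) * mw // w
    resized = box_mask[rows[:, None], cols[None, :]]
    full[top:bottom, left:right] = (resized > threshold).astype(np.uint8)
    return full
```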

Error:

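ResourceExhaustedError: OOM when allocating tensor with shape[...]. In the reported shape, the first value is the batch size, the second the number of kernels in a convolution layer, the third the image height and the fourth the image width.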

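The error means memory ran out: either host memory, which can be fixed by reducing batch_size, or GPU memory. With the file name of each image printed as it was processed, it turned out that some test images were very large; after deleting them, the error no longer occurred.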
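Instead of deleting oversized images, you can also downscale them before inference. This is a minimal sketch of the size computation. It assumes a limit of 1024 pixels on the longer side, an arbitrary value you should adjust to your GPU memory. fit_within is a hypothetical helper:

```python
def fit_within(width, height, max_side=1024):
    """Return (new_width, new_height) so that the longer side is at most max_side."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # already small enough, keep the original size
    scale = max_side / float(longest)  # same factor for both sides keeps the aspect ratio
    return max(1, int(round(width * scale))), max(1, int(round(height * scale)))
```

With PIL, image = image.resize(fit_within(*image.size)) right after Image.open(image_path) would apply it. Because the boxes are drawn with normalized coordinates, they still line up with the resized image.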

2. Video detection:

```python
import os

import cv2
import matplotlib
# Matplotlib chooses Xwindows backend by default.
matplotlib.use('Agg')
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.GraphDef, tf.gfile, tf.Session)

from utils import label_map_util
from utils import visualization_utils as vis_util
from object_detection.utils import ops as utils_ops

'''
Detect objects in the camera video stream.
'''

##################### Model and label paths
# Path to frozen detection graph. This is the actual model that is used for the object detection.
PATH_TO_CKPT = 'model_result_mask_rcnn_Abyssinian' + '/frozen_inference_graph.pb'
# List of the strings that is used to add correct label for each box.
PATH_TO_LABELS = os.path.join('data', 'Abyssinian_label_map.pbtxt')
NUM_CLASSES = 1

##################### Load a (frozen) Tensorflow model into memory.
print('Loading model...')
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

##################### Loading label map
print('Loading label map...')
label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
categories = label_map_util.convert_label_map_to_categories(
    label_map, max_num_classes=NUM_CLASSES, use_display_name=True)
category_index = label_map_util.create_category_index(categories)


##################### Detection
def build_output_tensors(graph, image_height, image_width):
    """Look up the output tensors and add the mask-reframing ops once.

    Creating these ops for every frame would keep growing the graph, so they
    are built a single time for the (fixed) camera frame size.
    """
    with graph.as_default():
        all_tensor_names = {output.name
                            for op in graph.get_operations()
                            for output in op.outputs}
        tensor_dict = {}
        for key in ['num_detections', 'detection_boxes', 'detection_scores',
                    'detection_classes', 'detection_masks']:
            tensor_name = key + ':0'
            if tensor_name in all_tensor_names:
                tensor_dict[key] = graph.get_tensor_by_name(tensor_name)
        if 'detection_masks' in tensor_dict:
            # The following processing is only for single image
            detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
            detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
            # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
            real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
            detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
            detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
            detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                detection_masks, detection_boxes, image_height, image_width)
            detection_masks_reframed = tf.cast(
                tf.greater(detection_masks_reframed, 0.5), tf.uint8)
            # Follow the convention by adding back the batch dimension
            tensor_dict['detection_masks'] = tf.expand_dims(detection_masks_reframed, 0)
        image_tensor = graph.get_tensor_by_name('image_tensor:0')
    return tensor_dict, image_tensor


def run_inference(sess, tensor_dict, image_tensor, image):
    # Run inference
    output_dict = sess.run(tensor_dict,
                           feed_dict={image_tensor: np.expand_dims(image, 0)})
    # all outputs are float32 numpy arrays, so convert types as appropriate
    output_dict['num_detections'] = int(output_dict['num_detections'][0])
    output_dict['detection_classes'] = output_dict[
        'detection_classes'][0].astype(np.uint8)
    output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
    output_dict['detection_scores'] = output_dict['detection_scores'][0]
    if 'detection_masks' in output_dict:
        output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


cap = cv2.VideoCapture(0)  # open the camera
ret, frame = cap.read()
if not ret:
    raise RuntimeError('Could not read a frame from the camera.')
tensor_dict, image_tensor = build_output_tensors(
    detection_graph, frame.shape[0], frame.shape[1])

# One session for the whole stream instead of one per frame.
with tf.Session(graph=detection_graph) as sess:
    while ret:
        # OpenCV delivers BGR frames; the model expects RGB like the training images.
        image_np = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        # Actual detection.
        output_dict = run_inference(sess, tensor_dict, image_tensor, image_np)
        # Visualization of the results of a detection.
        vis_util.visualize_boxes_and_labels_on_image_array(
            image_np,
            output_dict['detection_boxes'],
            output_dict['detection_classes'],
            output_dict['detection_scores'],
            category_index,
            instance_masks=output_dict.get('detection_masks'),
            use_normalized_coordinates=True,
            line_thickness=8)
        # Back to BGR for display with OpenCV.
        display = cv2.cvtColor(image_np, cv2.COLOR_RGB2BGR)
        cv2.imshow('object detection', cv2.resize(display, (800, 600)))
        if cv2.waitKey(25) & 0xFF == ord('q'):
            break
        ret, frame = cap.read()

cap.release()
cv2.destroyAllWindows()
```

Test results:

Reference blog posts and code:

https://www.jianshu.com/p/27e4dc070761

https://github.com/Shirhe-Lyh/mask_rcnn_test

https://blog.csdn.net/gulingfengze/article/details/80137767

https://blog.csdn.net/yql_617540298/article/details/81110685

https://github.com/liqunfeifei/Deep-Learning/tree/master/Custom_Mask_RCNN
