Retrieval-Augmented Generation for Large Language Models: A Survey

大型语言模型的检索增强生成:一项调查

Yunfan , Yun Xiong , Xinyu , Kangxiang Jia , Jinliu , Yuxi , Yi Daia , Jiawei Sun , Meng Wang , and Haofen Wang

Yunfan , Yun Xiong , Xinyu , Kangxiang Jia , Jinliu , Yuxi , Yi Daia , Jiawei Sun , Meng Wang , 和 Haofen Wang Shanghai Research Institute for Intelligent Autonomous Systems, Tongji University

上海智能自主系统研究院,同济大学 Shanghai Key Laboratory of Data Science, School of Computer Science, Fudan University

上海数据科学重点实验室,复旦大学计算机科学学院 College of Design and Innovation, Tongji University

同济大学设计与创新学院

Abstract 摘要

Large Language Models (LLMs) showcase impressive capabilities but encounter challenges like hallucination, outdated knowledge, and non-transparent, untraceable reasoning processes. Retrieval-Augmented Generation (RAG) has emerged as a promising solution by incorporating knowledge from external databases. This enhances the accuracy and credibility of the generation, particularly for knowledge-intensive tasks, and allows for continuous knowledge updates and integration of domainspecific information. RAG synergistically merges LLMs’ intrinsic knowledge with the vast, dynamic repositories of external databases. This comprehensive review paper offers a detailed examination of the progression of RAG paradigms, encompassing the Naive RAG, the Advanced RAG, and the Modular RAG. It meticulously scrutinizes the tripartite foundation of RAG frameworks, which includes the retrieval, the generation and the augmentation techniques. The paper highlights the state-of-theart technologies embedded in each of these critical components, providing a profound understanding of the advancements in RAG systems. Furthermore, this paper introduces up-to-date evaluation framework and benchmark. At the end, this article delineates the challenges currently faced and points out prospective avenues for research and development

大型语言模型(LLMs)展示了令人印象深刻的能力,但面临诸如幻觉、过时知识以及不透明、不可追溯的推理过程等挑战。检索增强生成(RAG)作为一种有前景的解决方案,通过结合来自外部数据库的知识而出现。这提高了生成的准确性和可信度,特别是在知识密集型任务中,并允许持续的知识更新和领域特定信息的整合。RAG 协同地将LLMs的内在知识与外部数据库的庞大动态存储库相结合。这篇综合评审论文详细审查了 RAG 范式的发展,包括简单 RAG、先进 RAG 和模块化 RAG。它细致地审视了 RAG 框架的三重基础,包括检索、生成和增强技术。论文强调了嵌入在这些关键组件中的最先进技术,提供了对 RAG 系统进展的深刻理解。此外,本文还介绍了最新的评估框架和基准。最后,本文阐明了当前面临的挑战,并指出了未来研究和发展的潜在方向

索引词-大型语言模型,检索增强生成,自然语言处理,信息检索

I. Introduction 一. 引言

大型语言模型(LLMs)取得了显著成功,但在特定领域或知识密集型任务中仍面临重大限制[1],特别是在处理超出其训练数据或需要当前信息的查询时,常常会产生“幻觉”[2]。为克服这些挑战,检索增强生成(RAG)通过语义相似性计算从外部知识库中检索相关文档片段,从而增强LLMs。通过参考外部知识,RAG 有效减少了生成事实不准确内容的问题。其在LLMs中的集成导致了广泛的采用,使 RAG 成为推动聊天机器人发展的关键技术,并增强了LLMs在现实世界应用中的适用性。

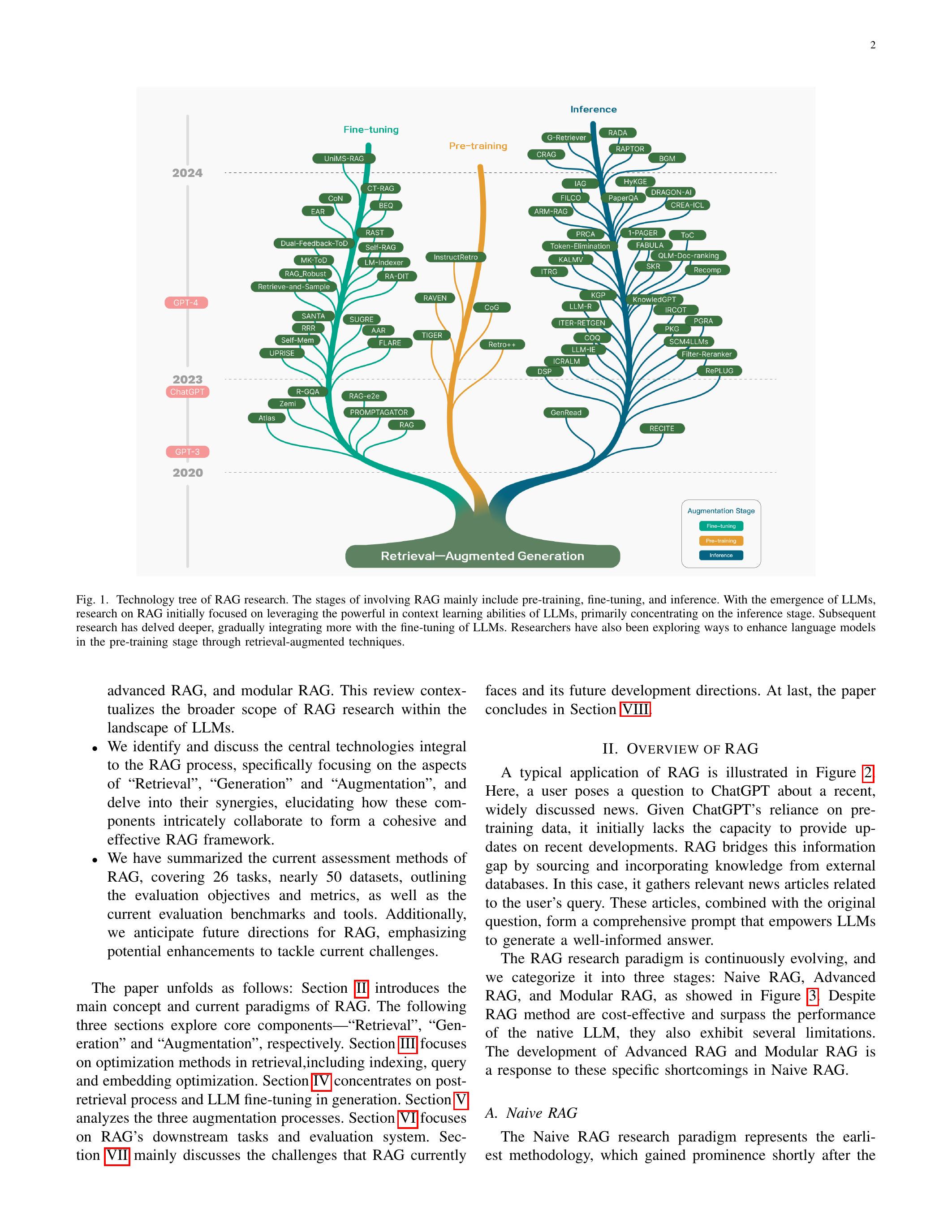

RAG 技术近年来迅速发展,相关研究的技术树如下所示

在图 1 中,RAG 在大模型时代的发展轨迹展现出几个明显的阶段特征。最初,RAG 的诞生与 Transformer 架构的兴起相吻合,重点通过预训练模型(PTM)引入额外知识来增强语言模型。这个早期阶段的特点是旨在完善预训练技术的基础性工作[3]-[5]。随后,ChatGPT 的出现[6]标志着一个关键时刻,LLM展示了强大的上下文学习(ICL)能力。RAG 研究转向在推理阶段为LLMs提供更好的信息,以回答更复杂和知识密集的任务,从而推动了 RAG 研究的快速发展。随着研究的进展,RAG 的增强不再局限于推理阶段,而是开始与LLM微调技术结合得更多。

The burgeoning field of RAG has experienced swift growth, yet it has not been accompanied by a systematic synthesis that could clarify its broader trajectory. This survey endeavors to fill this gap by mapping out the RAG process and charting its evolution and anticipated future paths, with a focus on the integration of RAG within LLMs. This paper considers both technical paradigms and research methods, summarizing three main research paradigms from over 100 RAG studies, and analyzing key technologies in the core stages of “Retrieval,” “Generation,” and “Augmentation.” On the other hand, current research tends to focus more on methods, lacking analysis and summarization of how to evaluate RAG. This paper comprehensively reviews the downstream tasks, datasets, benchmarks, and evaluation methods applicable to RAG. Overall, this paper sets out to meticulously compile and categorize the foundational technical concepts, historical progression, and the spectrum of RAG methodologies and applications that have emerged post-LLMs. It is designed to equip readers and professionals with a detailed and structured understanding of both large models and RAG. It aims to illuminate the evolution of retrieval augmentation techniques, assess the strengths and weaknesses of various approaches in their respective contexts, and speculate on upcoming trends and innovations.

RAG 这一新兴领域经历了迅速增长,但并没有伴随系统的综合分析来阐明其更广泛的发展轨迹。本调查旨在填补这一空白,通过绘制 RAG 过程并描绘其演变和预期未来路径,重点关注 RAG 在LLMs中的整合。本文考虑了技术范式和研究方法,总结了 100 多项 RAG 研究中的三种主要研究范式,并分析了“检索”、“生成”和“增强”核心阶段的关键技术。另一方面,目前的研究往往更关注方法,缺乏对如何评估 RAG 的分析和总结。本文全面回顾了适用于 RAG 的下游任务、数据集、基准和评估方法。总体而言,本文旨在细致地编纂和分类基础技术概念、历史进程以及在LLMs之后出现的 RAG 方法论和应用的广泛范围。它旨在为读者和专业人士提供对大型模型和 RAG 的详细和结构化理解。 它旨在阐明检索增强技术的发展,评估各种方法在各自背景下的优缺点,并推测即将出现的趋势和创新。

我们的贡献如下:

- In this survey, we present a thorough and systematic review of the state-of-the-art RAG methods, delineating its evolution through paradigms including naive RAG,

在本次调查中,我们对最先进的检索增强文本生成(RAG)方法进行了全面和系统的回顾,阐明了其通过包括简单 RAG 在内的范式的发展

图 1. RAG 研究的技术树。涉及 RAG 的阶段主要包括预训练、微调和推理。随着LLMs的出现,RAG 的研究最初集中在利用LLMs强大的上下文学习能力上,主要集中在推理阶段。后续研究深入探讨,逐渐与LLMs的微调整合得更多。研究人员还在探索通过检索增强技术在预训练阶段提升语言模型的方法。

advanced RAG, and modular RAG. This review contextualizes the broader scope of RAG research within the landscape of LLMs.

先进的 RAG 和模块化 RAG。本文回顾了 RAG 研究在LLMs领域的更广泛背景。

- We identify and discuss the central technologies integral to the RAG process, specifically focusing on the aspects of “Retrieval”, “Generation” and “Augmentation”, and delve into their synergies, elucidating how these components intricately collaborate to form a cohesive and effective RAG framework.

我们识别并讨论了 RAG 过程中的核心技术,特别关注“检索”、“生成”和“增强”这几个方面,并深入探讨它们的协同作用,阐明这些组件如何紧密合作形成一个连贯有效的 RAG 框架。 - We have summarized the current assessment methods of RAG, covering 26 tasks, nearly 50 datasets, outlining the evaluation objectives and metrics, as well as the current evaluation benchmarks and tools. Additionally, we anticipate future directions for RAG, emphasizing potential enhancements to tackle current challenges.

我们总结了 RAG 的当前评估方法,涵盖了 26 个任务,近 50 个数据集,概述了评估目标和指标,以及当前的评估基准和工具。此外,我们展望了 RAG 的未来方向,强调了应对当前挑战的潜在改进。

本文的结构如下:第 节介绍了 RAG 的主要概念和当前范式。接下来的三节分别探讨了核心组件——“检索”、“生成”和“增强”。第三节重点讨论了检索中的优化方法,包括索引、查询和嵌入优化。第四节集中于生成中的后检索过程和LLM微调。第五节分析了三种增强过程。第六节关注 RAG 的下游任务和评估系统。第七节主要讨论了 RAG 目前面临的挑战。

faces and its future development directions. At last, the paper concludes in Section VIII.

面临的挑战及其未来发展方向。最后,本文在第八节中总结。

II. OVERVIEW of RAG

II. RAG 概述

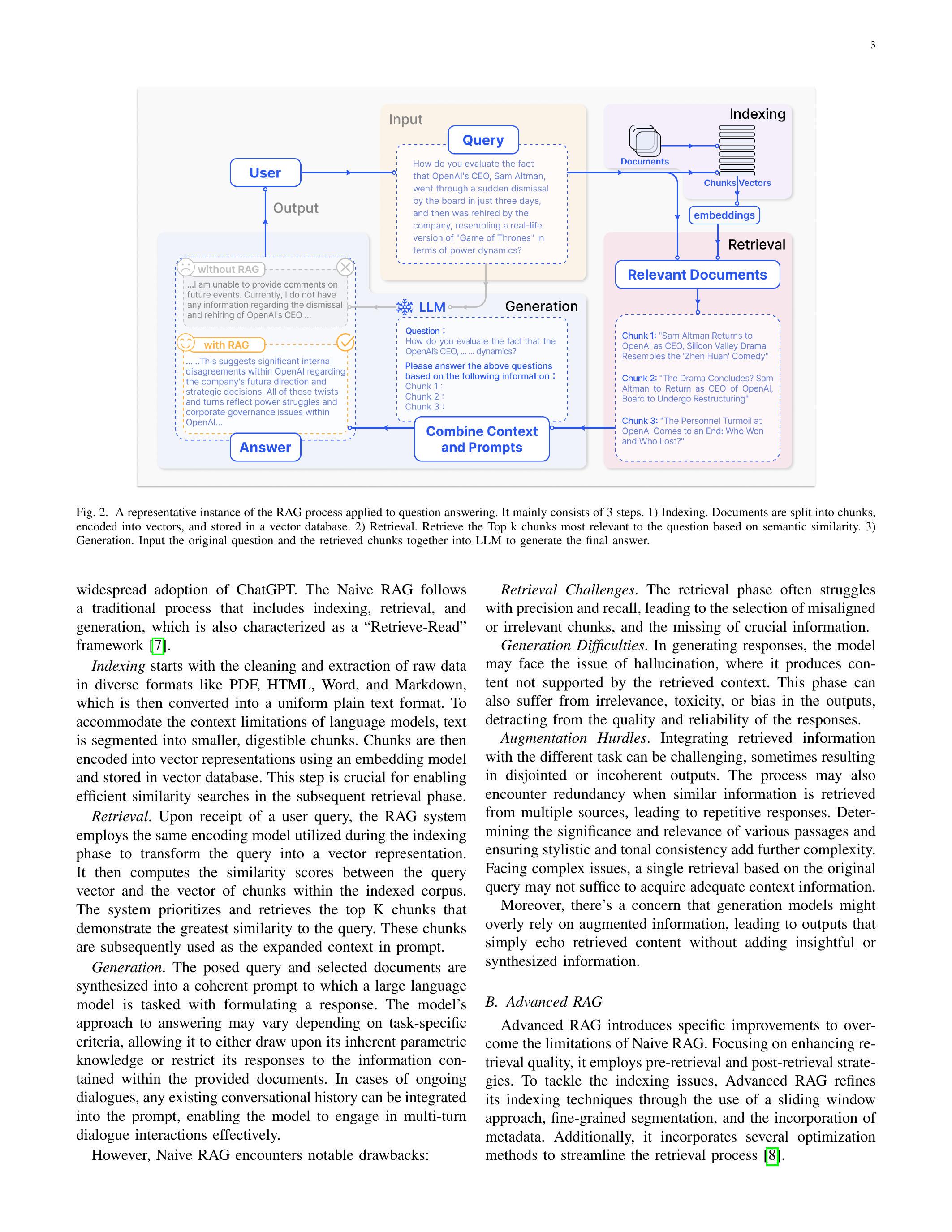

RAG 的一个典型应用如图 2 所示。在这里,用户向 ChatGPT 提出一个关于最近广泛讨论的新闻的问题。由于 ChatGPT 依赖于预训练数据,它最初无法提供关于最近发展的更新。RAG 通过从外部数据库获取和整合知识来弥补这一信息差距。在这种情况下,它收集与用户查询相关的新闻文章。这些文章与原始问题结合,形成一个全面的提示,使LLMs能够生成一个信息丰富的答案。

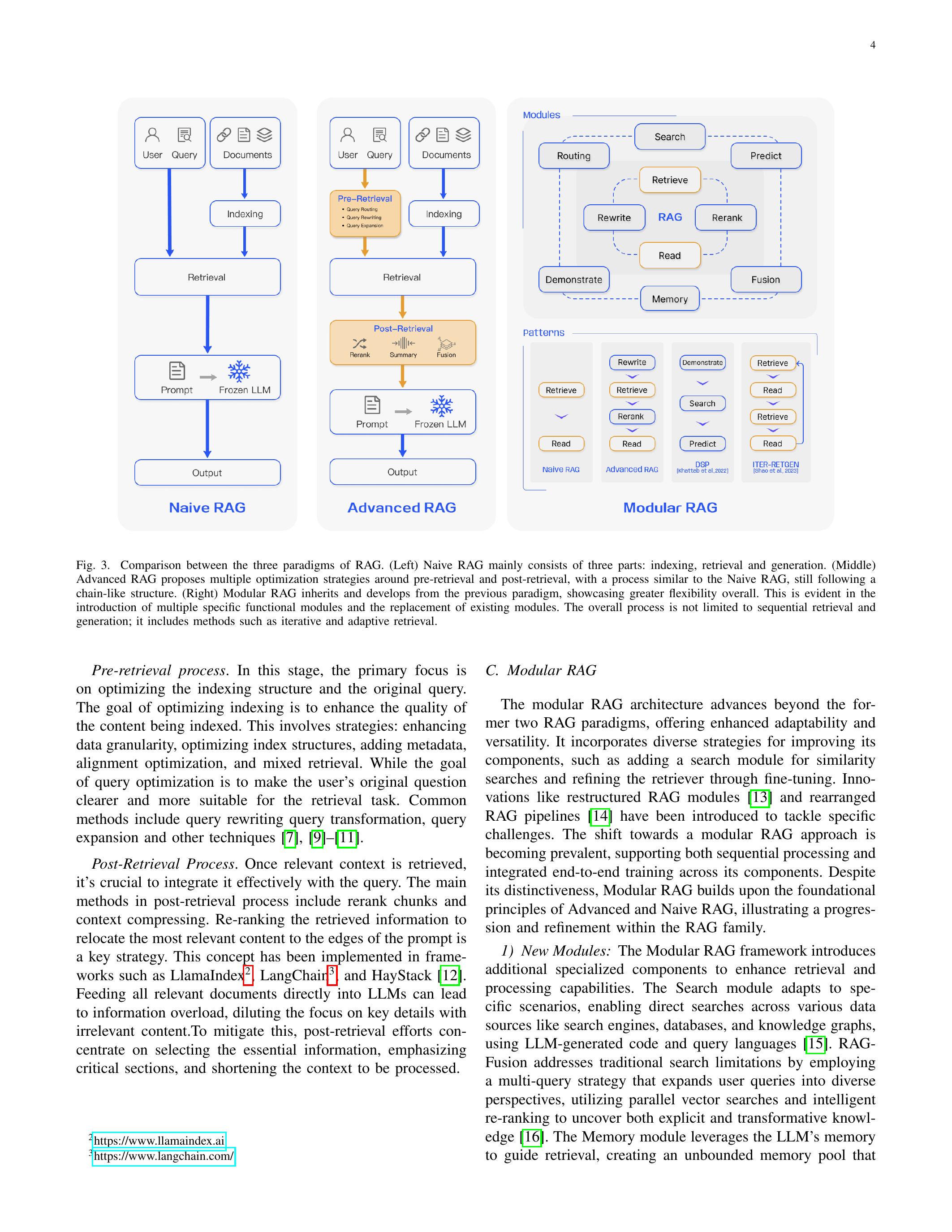

RAG 研究范式正在不断发展,我们将其分为三个阶段:初级 RAG、高级 RAG 和模块化 RAG,如图 3 所示。尽管 RAG 方法具有成本效益并且超越了原生LLM的性能,但它们也表现出一些局限性。高级 RAG 和模块化 RAG 的发展是对初级 RAG 中这些特定缺陷的回应。

A. Naive RAG A. 天真检索增强生成 (RAG)

朴素 RAG 研究范式代表了最早的方法论,随后不久便获得了广泛关注

图 2. 应用于问答的 RAG 过程的一个代表性实例。它主要包括 3 个步骤。1) 索引。文档被拆分成块,编码为向量,并存储在向量数据库中。2) 检索。根据语义相似性检索与问题最相关的前 k 个块。3) 生成。将原始问题和检索到的块一起输入LLM以生成最终答案。

widespread adoption of ChatGPT. The Naive RAG follows a traditional process that includes indexing, retrieval, and generation, which is also characterized as a “Retrieve-Read” framework [7].

ChatGPT 的广泛应用。朴素的 RAG 遵循一个传统的过程,包括索引、检索和生成,这也被称为“检索-阅读”框架[7]。

索引从清理和提取各种格式的原始数据开始,如 PDF、HTML、Word 和 Markdown,然后将其转换为统一的纯文本格式。为了适应语言模型的上下文限制,文本被分割成更小、易于消化的块。然后,使用嵌入模型将块编码为向量表示,并存储在向量数据库中。这一步对于在后续检索阶段实现高效的相似性搜索至关重要。

检索。在接收到用户查询后,RAG 系统使用在索引阶段所采用的相同编码模型将查询转换为向量表示。然后,它计算查询向量与索引语料库中各个块的向量之间的相似性得分。系统优先检索与查询最相似的前 K 个块。这些块随后被用作提示中的扩展上下文。

生成。提出的查询和选定的文档被合成成一个连贯的提示,大型语言模型的任务是制定响应。模型回答的方式可能会根据特定任务的标准而有所不同,使其能够利用其固有的参数知识,或将其响应限制在提供的文档中包含的信息。在进行对话的情况下,任何现有的对话历史都可以集成到提示中,使模型能够有效地进行多轮对话交互。

然而,简单的检索增强生成(Naive RAG)遇到了显著的缺陷:

检索挑战。检索阶段通常在精确度和召回率上存在困难,导致选择不匹配或无关的片段,并错过关键信息。

生成困难。在生成响应时,模型可能面临幻觉问题,即生成的内容未得到检索上下文的支持。这个阶段还可能遭受输出的无关性、毒性或偏见,降低响应的质量和可靠性。

增强障碍。将检索到的信息与不同任务整合可能具有挑战性,有时会导致输出不连贯或不一致。当从多个来源检索到相似信息时,过程也可能遇到冗余,导致重复的响应。确定各种段落的重要性和相关性,并确保风格和语调的一致性增加了进一步的复杂性。面对复杂问题,基于原始查询的单次检索可能不足以获取足够的上下文信息。

此外,人们担心生成模型可能过于依赖增强信息,从而导致输出仅仅重复检索到的内容,而没有添加有见地或综合的信息。

B. Advanced RAG B. 高级 RAG

高级 RAG 引入了特定的改进,以克服朴素 RAG 的局限性。它专注于提高检索质量,采用了预检索和后检索策略。为了解决索引问题,高级 RAG 通过使用滑动窗口方法、细粒度分割和元数据的结合来优化其索引技术。此外,它还结合了多种优化方法,以简化检索过程[8]。

图 3. RAG 三种范式的比较。(左) 简单 RAG 主要由三个部分组成:索引、检索和生成。(中) 高级 RAG 在预检索和后检索周围提出了多种优化策略,过程类似于简单 RAG,仍然遵循链式结构。(右) 模块化 RAG 继承并发展自前一个范式,整体展示出更大的灵活性。这在引入多个特定功能模块和替换现有模块中显而易见。整体过程不仅限于顺序检索和生成;还包括迭代和自适应检索等方法。

预检索过程。在这个阶段,主要关注的是优化索引结构和原始查询。优化索引的目标是提高被索引内容的质量。这涉及到以下策略:增强数据粒度、优化索引结构、添加元数据、对齐优化和混合检索。而查询优化的目标是使用户的原始问题更加清晰,更适合检索任务。常见的方法包括查询重写、查询转换、查询扩展和其他技术 [7],[9]-[11]。

后检索过程。一旦检索到相关上下文,将其有效地与查询整合是至关重要的。后检索过程中的主要方法包括重新排序块和上下文压缩。重新排序检索到的信息,将最相关的内容重新定位到提示的边缘是一项关键策略。这个概念已经在 LlamaIndex 、LangChair 和 HayStack [12]等框架中得到了实现。直接将所有相关文档输入到LLMs中可能会导致信息过载,使得对关键细节的关注被无关内容稀释。为了解决这个问题,后检索工作集中在选择必要的信息,强调关键部分,并缩短要处理的上下文。

C. Modular RAG C. 模块化 RAG

模块化 RAG 架构超越了前两种 RAG 范式,提供了更强的适应性和多样性。它结合了多种策略来改善其组件,例如添加用于相似性搜索的搜索模块和通过微调来优化检索器。像重构的 RAG 模块[13]和重新排列的 RAG 管道[14]这样的创新被引入以应对特定挑战。向模块化 RAG 方法的转变正变得越来越普遍,支持其组件之间的顺序处理和集成的端到端训练。尽管具有独特性,模块化 RAG 仍建立在高级和简单 RAG 的基础原则之上,展示了 RAG 家族内的进步和完善。

- New Modules: The Modular RAG framework introduces additional specialized components to enhance retrieval and processing capabilities. The Search module adapts to specific scenarios, enabling direct searches across various data sources like search engines, databases, and knowledge graphs, using LLM-generated code and query languages [15]. RAGFusion addresses traditional search limitations by employing a multi-query strategy that expands user queries into diverse perspectives, utilizing parallel vector searches and intelligent re-ranking to uncover both explicit and transformative knowledge [16]. The Memory module leverages the LLM’s memory to guide retrieval, creating an unbounded memory pool that

新模块:模块化 RAG 框架引入了额外的专用组件,以增强检索和处理能力。搜索模块适应特定场景,能够直接在各种数据源(如搜索引擎、数据库和知识图谱)中进行搜索,使用LLM生成的代码和查询语言[15]。RAGFusion 通过采用多查询策略来解决传统搜索的局限性,将用户查询扩展为多样化的视角,利用并行向量搜索和智能重新排序来发现显性和变革性知识[16]。内存模块利用LLM的内存来指导检索,创建一个无限制的内存池。

aligns the text more closely with data distribution through iterative self-enhancement [17], [18]. Routing in the RAG system navigates through diverse data sources, selecting the optimal pathway for a query, whether it involves summarization, specific database searches, or merging different information streams [19]. The Predict module aims to reduce redundancy and noise by generating context directly through the LLM, ensuring relevance and accuracy [13]. Lastly, the Task Adapter module tailors RAG to various downstream tasks, automating prompt retrieval for zero-shot inputs and creating task-specific retrievers through few-shot query generation [20], [21] .This comprehensive approach not only streamlines the retrieval process but also significantly improves the quality and relevance of the information retrieved, catering to a wide array of tasks and queries with enhanced precision and flexibility.

通过迭代自我增强,使文本与数据分布更紧密对齐[17],[18]。RAG 系统中的路由通过多样的数据源进行导航,为查询选择最佳路径,无论是涉及摘要、特定数据库搜索,还是合并不同的信息流[19]。预测模块旨在通过直接生成上下文来减少冗余和噪声,确保相关性和准确性[13]。最后,任务适配器模块将 RAG 调整为各种下游任务,自动化零样本输入的提示检索,并通过少样本查询生成创建特定任务的检索器[20],[21]。这种综合方法不仅简化了检索过程,还显著提高了检索信息的质量和相关性,满足了各种任务和查询的需求,增强了精确性和灵活性。 - New Patterns: Modular RAG offers remarkable adaptability by allowing module substitution or reconfiguration to address specific challenges. This goes beyond the fixed structures of Naive and Advanced RAG, characterized by a simple “Retrieve” and “Read” mechanism. Moreover, Modular RAG expands this flexibility by integrating new modules or adjusting interaction flow among existing ones, enhancing its applicability across different tasks.

新模式:模块化 RAG 通过允许模块替换或重新配置以应对特定挑战,提供了显著的适应性。这超越了朴素和高级 RAG 的固定结构,其特征是简单的“检索”和“阅读”机制。此外,模块化 RAG 通过集成新模块或调整现有模块之间的交互流程,进一步扩展了这种灵活性,提高了其在不同任务中的适用性。

像 Rewrite-Retrieve-Read [7]模型这样的创新利用了LLM的能力,通过重写模块和 LM 反馈机制来优化检索查询,从而更新重写模型,提高任务性能。同样,像 Generate-Read [13]的方法用LLM生成的内容替代传统检索,而 ReciteRead [22]则强调从模型权重中检索,增强模型处理知识密集型任务的能力。混合检索策略整合了关键词、语义和向量搜索,以满足多样化的查询。此外,采用子查询和假设文档嵌入(HyDE)[11]旨在通过关注生成答案与真实文档之间的嵌入相似性来提高检索相关性。

模块排列和交互的调整,例如演示-搜索-预测(DSP)[23]框架和 ITERRETGEN [14]的迭代检索-阅读-检索-阅读流程,展示了模块输出的动态使用,以增强另一个模块的功能,体现了对增强模块协同作用的深刻理解。模块化 RAG 流程的灵活编排展示了通过 FLARE [24]和 Self-RAG [25]等技术进行自适应检索的好处。这种方法超越了固定的 RAG 检索过程,通过根据不同场景评估检索的必要性。灵活架构的另一个好处是 RAG 系统可以更容易地与其他技术(如微调或强化学习)[26]集成。例如,这可以涉及对检索器进行微调以获得更好的检索结果,对生成器进行微调以获得更个性化的输出,或进行协作微调[27]。

D. RAG vs Fine-tuning

D. RAG 与微调

LLMs的增强因其日益普及而引起了相当大的关注。在优化中

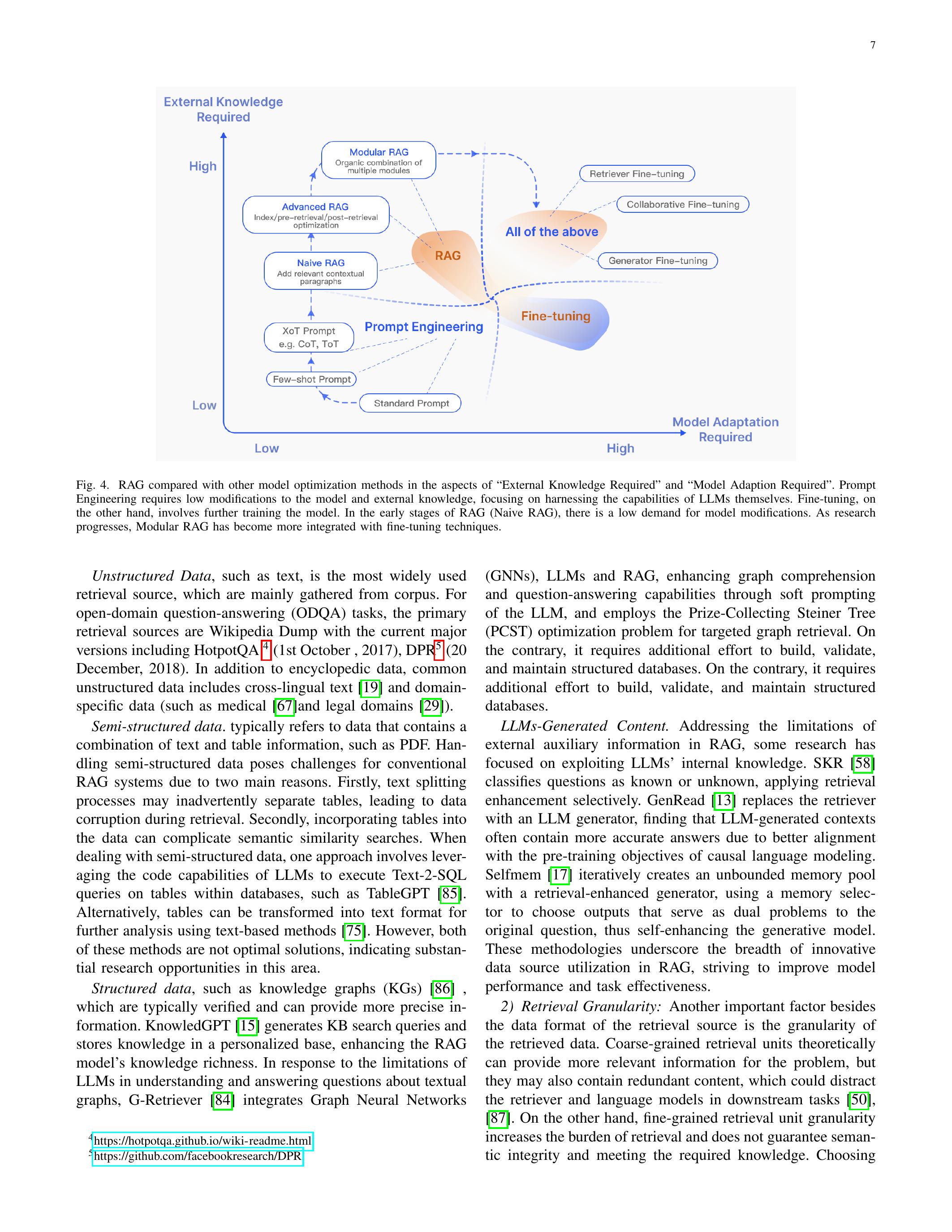

methods for LLMs, RAG is often compared with Fine-tuning (FT) and prompt engineering. Each method has distinct characteristics as illustrated in Figure 4 We used a quadrant chart to illustrate the differences among three methods in two dimensions: external knowledge requirements and model adaption requirements. Prompt engineering leverages a model’s inherent capabilities with minimum necessity for external knowledge and model adaption. RAG can be likened to providing a model with a tailored textbook for information retrieval, ideal for precise information retrieval tasks. In contrast, FT is comparable to a student internalizing knowledge over time, suitable for scenarios requiring replication of specific structures, styles, or formats.

对于LLMs的方法,RAG 通常与微调(FT)和提示工程进行比较。每种方法都有其独特的特征,如图 4 所示。我们使用象限图来说明三种方法在外部知识需求和模型适应需求两个维度上的差异。提示工程利用模型的固有能力,最小化对外部知识和模型适应的需求。RAG 可以比作为模型提供一本量身定制的教科书以进行信息检索,适合精确的信息检索任务。相比之下,FT 可以比作学生随着时间的推移内化知识,适合需要复制特定结构、风格或格式的场景。

RAG 在动态环境中表现出色,通过提供实时知识更新和有效利用外部知识源,具有很高的可解释性。然而,它伴随着更高的延迟和关于数据检索的伦理考虑。另一方面,FT 则更为静态,需要重新训练以进行更新,但能够深度定制模型的行为和风格。它在数据集准备和训练方面需要大量计算资源,虽然可以减少幻觉,但可能在处理不熟悉的数据时面临挑战。

在对不同主题的各种知识密集型任务进行多次评估时,[28] 发现虽然无监督微调显示出一些改进,但 RAG 始终优于它,无论是在训练期间遇到的现有知识还是全新的知识。此外,发现 LLMs 在通过无监督微调学习新事实信息方面存在困难。RAG 和 FT 之间的选择取决于应用上下文中对数据动态、定制和计算能力的具体需求。RAG 和 FT 不是相互排斥的,可以相互补充,在不同层面上增强模型的能力。在某些情况下,它们的结合使用可能会导致最佳性能。涉及 RAG 和 FT 的优化过程可能需要多次迭代才能达到令人满意的结果。

III. RetrieVAL III. 检索

在 RAG 的背景下,高效地从数据源中检索相关文档至关重要。涉及几个关键问题,例如检索源、检索粒度、检索的预处理以及相应嵌入模型的选择。

A. Retrieval Source A. 检索源

RAG 依赖外部知识来增强LLMs,而检索源的类型和检索单元的粒度都会影响最终的生成结果。

- Data Structure: Initially, text is s the mainstream source of retrieval. Subsequently, the retrieval source expanded to include semi-structured data (PDF) and structured data (Knowledge Graph, KG) for enhancement. In addition to retrieving from original external sources, there is also a growing trend in recent researches towards utilizing content generated by LLMs themselves for retrieval and enhancement purposes.

数据结构:最初,文本是检索的主流来源。随后,检索来源扩展到包括半结构化数据(PDF)和结构化数据(知识图谱,KG)以进行增强。除了从原始外部来源进行检索,最近的研究中也有越来越多的趋势倾向于利用LLMs自身生成的内容进行检索和增强。

SUMMARY OF RAG METHODS

RAG 方法的总结

| Method 方法 | Retrieval Source 检索源 | Retrieval Data Type 检索数据类型 |

检索粒度

| Augmentation Stage 增强阶段 | Retrieval process 检索过程 | ||

| CoG [29] | Wikipedia 维基百科 | Text 文本 | Phrase 短语 | Pre-training 预训练 | Iterative 迭代 | ||

| DenseX 30 3 | FactoidWiki | Text 文本 | Proposition 提议 | Inference 推理 | Once 一次 | ||

| EAR [31] | Dataset-base 数据集基础 | Text 文本 | Sentence 句子 | Tuning 调优 | Once 一次 | ||

| UPRISE 20. | Dataset-base 数据集基础 | Text 文本 | Sentence 句子 | Tuning 调优 | Once 一次 | ||

| RAST 32 | Dataset-base 数据集基础 | Text 文本 | Sentence 句子 | Tuning 调优 | Once 一次 | ||

| Self-Mem 17 , Self-Mem 17 | Dataset-base 数据集基础 | Text 文本 | Sentence 句子 | Tuning 调优 | Iterative 迭代 | ||

| FLARE 24 | Search Engine,Wikipedia 搜索引擎,维基百科 | Text 文本 | Sentence 句子 | Tuning 调优 | Adaptive 自适应 | ||

| PGRA 33 | Wikipedia 维基百科 | Text 文本 | Sentence 句子 | Inference 推理 | Once 一次 | ||

| FILCO 34 | Wikipedia 维基百科 | Text 文本 | Sentence 句子 | Inference 推理 | Once 一次 | ||

| RADA 35 | Dataset-base 数据集基础 | Text 文本 | Sentence 句子 | Inference 推理 | Once 一次 | ||

| Filter-rerank 36. | Synthesized dataset 合成数据集 | Text 文本 | Sentence 句子 | Inference 推理 | Once 一次 | ||

| R-GQA 37 | Dataset-base 数据集基础 | Text 文本 | Sentence Pair 句子对 | Tuning 调优 | Once 一次 | ||

| LLM-R 38 | Dataset-base 数据集基础 | Text 文本 | Sentence Pair 句子对 | Inference 推理 | Iterative 迭代 | ||

| TIGER 39 | Dataset-base 数据集基础 | Text 文本 | Item-base 项目基础 | Pre-training 预训练 | Once 一次 | ||

| LM-Indexer 40 . | Dataset-base 数据集基础 | Text 文本 | Item-base 项目基础 | Tuning 调优 | Once 一次 | ||

| BEQUE 9 9 | Dataset-base 数据集基础 | Text 文本 | Item-base 项目基础 | Tuning 调优 | Once 一次 | ||

| CT-RAG [41] | Synthesized dataset 合成数据集 | Text 文本 | Item-base 项目基础 | Tuning 调优 | Once 一次 | ||

| Atlas 42 | Wikipedia, Common Crawl 维基百科,公共爬虫 | Text 文本 | Chunk 块 | Pre-training 预训练 | Iterative 迭代 | ||

| RAVEN [43] | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Pre-training 预训练 | Once 一次 | ||

| RETRO++ 44 | Pre-training Corpus 预训练语料库 | Text 文本 | Chunk 块 | Pre-training 预训练 | Iterative 迭代 | ||

| INSTRUCTRETRO 45. | Pre-training corpus 预训练语料库 | Text 文本 | Chunk 块 | Pre-training 预训练 | Iterative 迭代 | ||

| RRR [7] | Search Engine 搜索引擎 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| RA-e2e | Dataset-base 数据集基础 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| PROMPTAGATOR 21. | BEIR | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| AAR 47 | MSMARCO,Wikipedia MSMARCO,维基百科 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| RA-DIT [27] | Common Crawl,Wikipedia Common Crawl, Wikipedia | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| RAG-Robust 48 RAG-鲁棒 48 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| RA-Long-Form 49 | Dataset-base 数据集基础 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| Con 150 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Tuning 调优 | Once 一次 | ||

| Self-RAG 25 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Tuning 调优 | Adaptive 自适应 | ||

| BGM 26. | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| CoQ [51] | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Iterative 迭代 | ||

| Token-Elimination 52 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| PaperQA 53 | Arxiv,Online Database,PubMed Arxiv,在线数据库,PubMed | Text 文本 | Chunk 块 | Inference 推理 | Iterative 迭代 | ||

| NoiseRAG 54. | FactoidWiki | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| Search Engine,Wikipedia 搜索引擎,维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | |||

| NoMIRACL 56 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| ToC 57 | Search Engine,Wikipedia 搜索引擎,维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Recursive 递归 | ||

| SKR | Dataset-base,Wikipedia 数据集基础,维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Adaptive 自适应 | ||

| ITRG 59 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Iterative 迭代 | ||

| RAG-LongContext 60] RAG-LongContext 60 | Dataset-base 数据集基础 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| ITER-RETGEN 14 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Iterative 迭代 | ||

| IRCoT [61] | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Recursive 递归 | ||

| LLM-Knowledge-Boundary 62 LLM-知识边界 62 | Wikipedia 维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| RAPTOR 63, | Dataset-base 数据集基础 | Text 文本 | Chunk 块 | Inference 推理 | Recursive 递归 | ||

| RECITE 22 | LLMs | Text 文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| ICRALM 64 | Pile,Wikipedia 堆,维基百科 | Text 文本 | Chunk 块 | Inference 推理 | Iterative 迭代 | ||

| Retrieve-and-Sample 65 | Dataset-base 数据集基础 | Text 文本 | Doc 文档 | Tuning 调优 | Once 一次 | ||

| Zemi 66 | Text 文本 | Doc 文档 | Tuning 调优 | Once 一次 | |||

| CRAG 67 | Arxiv | Text 文本 | Doc 文档 | Inference 推理 | Once 一次 | ||

| 1-PAGER 68 | Wikipedia 维基百科 | Text 文本 | Doc 文档 | Inference 推理 | Iterative 迭代 | ||

| PRCA 69 | Dataset-base 数据集基础 | Text 文本 | Doc 文档 | Inference 推理 | Once 一次 | ||

| QLM-Doc-ranking 70. QLM-Doc-ranking 70。 | Dataset-base 数据集基础 | Text 文本 | Doc 文档 | Inference 推理 | Once 一次 | ||

| Recomp 71. | Wikipedia 维基百科 | Text 文本 | Doc 文档 | Inference 推理 | Once 一次 | ||

| DSP 23 | Wikipedia 维基百科 | Text 文本 | Doc 文档 | Inference 推理 | Iterative 迭代 | ||

| RePLUG 72 | Pile 堆 | Text 文本 | Doc 文档 | Inference 推理 | Once 一次 | ||

| ARM-RAG 73. | Dataset-base 数据集基础 | Text 文本 | Doc 文档 | Inference 推理 | Iterative 迭代 | ||

| GenRead 13 | LLMs | Text 文本 | Doc 文档 | Inference 推理 | Iterative 迭代 | ||

| UniMS-RAG 774 | Dataset-base 数据集基础 | Text 文本 | Multi 多重 | Tuning 调优 | Once 一次 | ||

| CREA-ICL 19. | Dataset-base 数据集基础 | Crosslingual,Text 跨语言,文本 | Sentence 句子 | Inference 推理 | Once 一次 | ||

| PKG 75 | LLM | Tabular,Text 表格,文本 | Chunk 块 | Inference 推理 | Once 一次 | ||

| SANTA 76 | Dataset-base 数据集基础 | Code,Text 代码,文本 | Item 项目 | Pre-training 预训练 | Once 一次 | ||

| SURGE 77 | Freebase | KG | Sub-Graph 子图 | Tuning 调优 | Once 一次 | ||

| MK-ToD 78 | Dataset-base 数据集基础 | KG | Entity 实体 | Tuning 调优 | Once 一次 | ||

| Dual-Feedback-ToD 79 . | Dataset-base 数据集基础 | KG | Entity Sequence 实体序列 | Tuning 调优 | Once 一次 | ||

| KnowledGPT 15 | Dataset-base 数据集基础 | KG | Triplet 三元组 | Inference 推理 | Muti-time | ||

| FABULA 80 | Dataset-base,Graph 数据集基础,图形 | KG | Entity 实体 | Inference 推理 | Once 一次 | ||

| HyKGE 81 | CMeKG | KG | Entity 实体 | Inference 推理 | Once 一次 | ||

| KALMV 82 | Wikipedia 维基百科 | KG | Triplet 三元组 | Inference 推理 | Iterative 迭代 | ||

| RoG 183 | Freebase | KG | Triplet 三元组 | Inference 推理 | Iterative 迭代 | ||

| G-Retriever 84 | | Dataset-base 数据集基础 | TextGraph | Sub-Graph 子图 | Inference 推理 | Once 一次 |

图 4. RAG 与其他模型优化方法在“所需外部知识”和“所需模型适应性”方面的比较。提示工程对模型和外部知识的修改要求较低,专注于利用LLMs本身的能力。另一方面,微调涉及对模型的进一步训练。在 RAG 的早期阶段(简单 RAG),对模型修改的需求较低。随着研究的进展,模块化 RAG 与微调技术的集成度越来越高。

非结构化数据,如文本,是最广泛使用的检索来源,主要来自语料库。对于开放域问答(ODQA)任务,主要的检索来源是维基百科数据,当前主要版本包括 HotpotQA (2017 年 10 月 1 日)、DPR (2018 年 12 月 20 日)。除了百科全书数据,常见的非结构化数据还包括跨语言文本[19]和特定领域数据(如医学[67]和法律领域[29])。

半结构化数据通常指包含文本和表格信息组合的数据,例如 PDF。处理半结构化数据对传统的 RAG 系统提出了挑战,主要有两个原因。首先,文本拆分过程可能会无意中分离表格,导致检索过程中数据损坏。其次,将表格纳入数据中可能会使语义相似性搜索变得复杂。在处理半结构化数据时,一种方法是利用LLMs的代码能力在数据库中的表格上执行 Text-2-SQL 查询,例如 TableGPT [85]。另外,表格也可以转换为文本格式,以便使用基于文本的方法进行进一步分析[75]。然而,这两种方法都不是最佳解决方案,表明该领域存在大量研究机会。

结构化数据,如知识图谱(KGs)[86],通常经过验证,可以提供更精确的信息。KnowledGPT [15] 生成知识库搜索查询,并将知识存储在个性化的基础中,增强了 RAG 模型的知识丰富性。针对 LLMs 在理解和回答有关文本图的问题上的局限性,G-Retriever [84] 集成了图神经网络。

(GNNs),LLMs 和 RAG,通过对 LLM 的软提示增强图形理解和问答能力,并采用奖收集斯坦纳树(PCST)优化问题进行针对性图形检索。相反,它需要额外的努力来构建、验证和维护结构化数据库。相反,它需要额外的努力来构建、验证和维护结构化数据库。

LLMs-生成内容。针对 RAG 中外部辅助信息的局限性,一些研究集中于利用LLMs的内部知识。SKR 58]将问题分类为已知或未知,选择性地应用检索增强。GenRead [13]用LLM生成器替代检索器,发现LLM生成的上下文通常包含更准确的答案,因为与因果语言建模的预训练目标更好地对齐。Selfmem [17]通过检索增强生成器迭代创建一个无限制的记忆池,使用记忆选择器选择作为原始问题的双重问题的输出,从而自我增强生成模型。这些方法强调了 RAG 中创新数据源利用的广度,努力提高模型性能和任务有效性。

2) Retrieval Granularity: Another important factor besides the data format of the retrieval source is the granularity of the retrieved data. Coarse-grained retrieval units theoretically can provide more relevant information for the problem, but they may also contain redundant content, which could distract the retriever and language models in downstream tasks [50], [87]. On the other hand, fine-grained retrieval unit granularity increases the burden of retrieval and does not guarantee semantic integrity and meeting the required knowledge. Choosing

检索粒度:除了检索源的数据格式之外,另一个重要因素是检索数据的粒度。粗粒度检索单元理论上可以为问题提供更多相关信息,但它们也可能包含冗余内容,这可能会分散检索器和语言模型在下游任务中的注意力[50],[87]。另一方面,细粒度检索单元的粒度增加了检索的负担,并不能保证语义完整性和满足所需知识。选择

the appropriate retrieval granularity during inference can be a simple and effective strategy to improve the retrieval and downstream task performance of dense retrievers.

在推理过程中,适当的检索粒度可以是一种简单有效的策略,以提高密集检索器的检索和下游任务性能。

在文本中,检索粒度从细到粗,包括标记、短语、句子、命题、块、文档。其中,DenseX [30]提出了使用命题作为检索单元的概念。命题被定义为文本中的原子表达式,每个命题封装一个独特的事实片段,并以简洁、自包含的自然语言格式呈现。这种方法旨在提高检索的精确度和相关性。在知识图谱(KG)中,检索粒度包括实体、三元组和子图。检索的粒度也可以适应下游任务,例如在推荐任务中检索项目 ID [40]和句子对 [38]。详细信息见表[I

B. Indexing Optimization

B. 索引优化

在索引阶段,文档将被处理、分段,并转化为嵌入以存储在向量数据库中。索引构建的质量决定了在检索阶段是否能够获得正确的上下文。

- Chunking Strategy: The most common method is to split the document into chunks on a fixed number of tokens (e.g., 100, 256, 512) [88]. Larger chunks can capture more context, but they also generate more noise, requiring longer processing time and higher costs. While smaller chunks may not fully convey the necessary context, they do have less noise. However, chunks leads to truncation within sentences, prompting the optimization of a recursive splits and sliding window methods, enabling layered retrieval by merging globally related information across multiple retrieval processes [89]. Nevertheless, these approaches still cannot strike a balance between semantic completeness and context length. Therefore, methods like Small2Big have been proposed, where sentences (small) are used as the retrieval unit, and the preceding and following sentences are provided as (big) context to LLMs [90].

分块策略:最常见的方法是将文档按固定数量的标记(例如,100、256、512)分成块[88]。较大的块可以捕捉更多的上下文,但它们也会产生更多的噪声,导致更长的处理时间和更高的成本。虽然较小的块可能无法充分传达必要的上下文,但它们的噪声较少。然而,块会导致句子内部的截断,促使优化递归分割和滑动窗口方法,通过合并多个检索过程中的全局相关信息实现分层检索[89]。尽管如此,这些方法仍然无法在语义完整性和上下文长度之间取得平衡。因此,提出了像 Small2Big 这样的方案,其中句子(小)被用作检索单元,前后句子作为(大)上下文提供给LLMs[90]。 - Metadata Attachments: Chunks can be enriched with metadata information such as page number, file name, author,category timestamp. Subsequently, retrieval can be filtered based on this metadata, limiting the scope of the retrieval. Assigning different weights to document timestamps during retrieval can achieve time-aware RAG, ensuring the freshness of knowledge and avoiding outdated information.

元数据附件:数据块可以通过元数据信息进行丰富,例如页码、文件名、作者、类别时间戳。随后,可以根据这些元数据过滤检索,限制检索的范围。在检索过程中为文档时间戳分配不同的权重可以实现时间感知的 RAG,确保知识的新鲜度,避免过时的信息。

除了从原始文档中提取元数据外,元数据还可以被人工构建。例如,添加段落摘要,以及引入假设性问题。这种方法也被称为反向 HyDE。具体来说,使用LLM生成可以由文档回答的问题,然后在检索过程中计算原始问题与假设性问题之间的相似性,以减少问题与答案之间的语义差距。

3) Structural Index: One effective method for enhancing information retrieval is to establish a hierarchical structure for the documents. By constructing In structure, RAG system can expedite the retrieval and processing of pertinent data.

3) 结构索引:增强信息检索的一个有效方法是为文档建立层次结构。通过构建该结构,RAG 系统可以加快相关数据的检索和处理。

层次索引结构。文件以父子关系排列,块与它们相连。数据摘要存储在每个节点,帮助快速遍历数据,并协助 RAG 系统确定提取哪些块。这种方法还可以减轻由于块提取问题造成的错觉。

知识图谱索引。在构建文档的层次结构时利用知识图谱有助于保持一致性。它描绘了不同概念和实体之间的联系,显著减少了产生幻觉的可能性。另一个优势是将信息检索过程转化为LLM可以理解的指令,从而提高知识检索的准确性,并使LLM能够生成上下文连贯的响应,从而提高 RAG 系统的整体效率。为了捕捉文档内容和结构之间的逻辑关系,KGP [91]提出了一种使用知识图谱在多个文档之间建立索引的方法。该知识图谱由节点(表示文档中的段落或结构,如页面和表格)和边(指示段落之间的语义/词汇相似性或文档结构内的关系)组成,有效解决了多文档环境中的知识检索和推理问题。

C. Query Optimization C. 查询优化

Naive RAG 的主要挑战之一是它直接依赖用户的原始查询作为检索的基础。制定一个精确清晰的问题是困难的,而不明智的查询会导致检索效果不佳。有时,问题本身就很复杂,语言组织也不够清晰。另一个困难在于语言复杂性的模糊性。当处理专业词汇或具有多重含义的模糊缩写时,语言模型往往会遇到困难。例如,它们可能无法判断“LLM”是在法律上下文中指代大型语言模型还是法学硕士。

- Query Expansion: Expanding a single query into multiple queries enriches the content of the query, providing further context to address any lack of specific nuances, thereby ensuring the optimal relevance of the generated answers.

查询扩展:将单个查询扩展为多个查询丰富了查询的内容,提供了进一步的上下文以解决任何特定细微差别的缺乏,从而确保生成答案的最佳相关性。

Multi-Query. By employing prompt engineering to expand queries via LLMs, these queries can then be executed in parallel. The expansion of queries is not random, but rather meticulously designed.

多查询。通过使用提示工程来扩展查询,通过LLMs,这些查询可以并行执行。查询的扩展不是随机的,而是经过精心设计的。

Sub-Query. The process of sub-question planning represents the generation of the necessary sub-questions to contextualize and fully answer the original question when combined. This process of adding relevant context is, in principle, similar to query expansion. Specifically, a complex question can be decomposed into a series of simpler sub-questions using the least-to-most prompting method [92].

子查询。子问题规划的过程代表了生成必要的子问题,以便在结合时为原始问题提供上下文并完全回答。添加相关上下文的过程在原则上类似于查询扩展。具体而言,复杂问题可以使用从少到多的提示方法[92]分解为一系列更简单的子问题。

验证链(CoVe)。扩展查询经过LLM的验证,以达到减少幻觉的效果。经过验证的扩展查询通常表现出更高的可靠性[93]。

2) Query Transformation: The core concept is to retrieve chunks based on a transformed query instead of the user’s original query.

查询转换:核心概念是基于转换后的查询检索数据块,而不是用户的原始查询。

查询重写。原始查询并不总是最优的,尤其是在现实世界场景中。因此,我们可以提示LLM重写查询。除了使用LLM进行查询重写外,专门的小型语言模型,如 RRR(重写-检索-阅读)[7]。在淘宝中实施的查询重写方法,被称为 BEQUE [9],显著提高了长尾查询的召回效果,导致 GMV 的上升。

另一种查询转换方法是使用提示工程让LLM根据原始查询生成查询以进行后续检索。HyDE [11] 构建假设文档(假定的原始查询答案)。它侧重于从答案到答案的嵌入相似性,而不是寻求问题或查询的嵌入相似性。使用回退提示方法 [10],将原始查询抽象化以生成高层次概念问题(回退问题)。在 RAG 系统中,回退问题和原始查询都用于检索,并且两个结果都作为语言模型答案生成的基础。

3) Query Routing: Based on varying queries, routing to distinct RAG pipeline, which is suitable for a versatile RAG system designed to accommodate diverse scenarios.

3) 查询路由:根据不同的查询,路由到不同的 RAG 管道,这适合于一个旨在适应多种场景的多功能 RAG 系统。

元数据路由器/过滤器。第一步涉及从查询中提取关键词(实体),然后根据关键词和块中的元数据进行过滤,以缩小搜索范围。

语义路由器是另一种路由方法,涉及利用查询的语义信息。具体方法见语义路由器 当然,也可以采用混合路由方法,结合语义和基于元数据的方法以增强查询路由。

D. Embedding D. 嵌入

在 RAG 中,通过计算问题和文档块的嵌入之间的相似性(例如余弦相似性)来实现检索,其中嵌入模型的语义表示能力发挥着关键作用。这主要包括稀疏编码器(BM25)和密集检索器(BERT 架构预训练语言模型)。最近的研究引入了显著的嵌入模型,如 AngIE、Voyage、BGE 等,这些模型受益于多任务指令调优。Hugging Face 的 MTEB 排行榜评估了 8 个任务中的嵌入模型,涵盖了 58 个数据集。此外,C-MTEB 专注于中文能力,涵盖 6 个任务和 35 个数据集。对于“使用哪个嵌入模型”没有放之四海而皆准的答案。然而,一些特定模型更适合特定的用例。

- Mix/hybrid Retrieval : Sparse and dense embedding approaches capture different relevance features and can benefit from each other by leveraging complementary relevance information. For instance, sparse retrieval models can be used

混合检索:稀疏和密集嵌入方法捕捉不同的相关性特征,并可以通过利用互补的相关性信息相互受益。例如,可以使用稀疏检索模型

提供初始搜索结果以训练密集检索模型。此外,预训练语言模型(PLMs)可以用于学习术语权重,以增强稀疏检索。具体而言,它还表明稀疏检索模型可以增强密集检索模型的零样本检索能力,并帮助密集检索器处理包含稀有实体的查询,从而提高鲁棒性。

2) Fine-tuning Embedding Model: In instances where the context significantly deviates from pre-training corpus, particularly within highly specialized disciplines such as healthcare, legal practice, and other sectors replete with proprietary jargon, fine-tuning the embedding model on your own domain dataset becomes essential to mitigate such discrepancies.

2) 微调嵌入模型:在上下文与预训练语料库显著偏离的情况下,特别是在医疗、法律等充满专有术语的高度专业化领域,基于您自己的领域数据集微调嵌入模型变得至关重要,以减轻这种差异。

除了补充领域知识,微调的另一个目的在于对齐检索器和生成器,例如,使用LLM的结果作为微调的监督信号,这被称为 LSR(LM 监督检索器)。PROMPTAGATOR [21]利用LLM作为少量示例查询生成器来创建特定任务的检索器,解决了监督微调中的挑战,特别是在数据稀缺的领域。另一种方法,LLM-Embedder [97],利用LLMs在多个下游任务中生成奖励信号。检索器通过两种类型的监督信号进行微调:数据集的硬标签和来自LLMs的软奖励。这种双信号方法促进了更有效的微调过程,使嵌入模型适应多样的下游应用。REPLUG [72]利用检索器和LLM计算检索文档的概率分布,然后通过计算 KL 散度进行监督训练。这种简单有效的训练方法通过使用 LM 作为监督信号来增强检索模型的性能,消除了对特定交叉注意机制的需求。 此外,受到 RLHF(来自人类反馈的强化学习)的启发,通过强化学习利用基于语言模型的反馈来增强检索器。

E. Adapter E. 适配器

微调模型可能会面临挑战,例如通过 API 集成功能或解决由于本地计算资源有限而产生的限制。因此,一些方法选择引入外部适配器以帮助对齐。

为了优化LLM的多任务能力,UPRISE [20] 训练了一个轻量级的提示检索器,可以自动从预构建的提示池中检索适合给定零-shot 任务输入的提示。AAR(增强适应检索器)[47] 引入了一个通用适配器,旨在适应多个下游任务。而 PRCA [69] 则增加了一个可插拔的奖励驱动上下文适配器,以提高特定任务的性能。BGM [26] 保持检索器和LLM不变,并在两者之间训练一个桥接 Seq2Seq 模型。桥接模型旨在将检索到的信息转换为LLMs可以有效处理的格式,使其不仅能够重新排序,还能够为每个查询动态选择段落,并可能采用更高级的策略,如重复。此外,PKG

introduces an innovative method for integrating knowledge into white-box models via directive fine-tuning [75]. In this approach, the retriever module is directly substituted to generate relevant documents according to a query. This method assists in addressing the difficulties encountered during the fine-tuning process and enhances model performance.

引入了一种通过指令微调将知识整合到白盒模型中的创新方法[75]。在这种方法中,检索模块被直接替换,以根据查询生成相关文档。这种方法有助于解决微调过程中遇到的困难,并提高模型性能。

IV. Generation IV. 生成

在检索之后,直接将所有检索到的信息输入到LLM以回答问题并不是一个好的做法。接下来将从两个方面介绍调整:调整检索到的内容和调整LLM。

A. Context Curation A. 上下文策划

冗余信息可能会干扰LLM的最终生成,过长的上下文也可能导致LLM出现“迷失在中间”的问题[98]。像人类一样,LLM往往只关注长文本的开头和结尾,而忘记中间部分。因此,在 RAG 系统中,我们通常需要进一步处理检索到的内容。

- Reranking: Reranking fundamentally reorders document chunks to highlight the most pertinent results first, effectively reducing the overall document pool, severing a dual purpose in information retrieval, acting as both an enhancer and a filter, delivering refined inputs for more precise language model processing [70]. Reranking can be performed using rule-based methods that depend on predefined metrics like Diversity, Relevance, and MRR, or model-based approaches like Encoder-Decoder models from the BERT series (e.g., SpanBERT), specialized reranking models such as Cohere rerank or bge-raranker-large, and general large language models like GPT [12], [99].

重新排序:重新排序从根本上重新排列文档块,以优先突出最相关的结果,有效减少整体文档池,发挥信息检索中的双重作用,既作为增强器又作为过滤器,为更精确的语言模型处理提供精炼的输入 [70]。重新排序可以使用基于规则的方法,这些方法依赖于预定义的指标,如多样性、相关性和平均排名回报(MRR),或基于模型的方法,如 BERT 系列的编码器-解码器模型(例如,SpanBERT)、专门的重新排序模型,如 Cohere rerank 或 bge-raranker-large,以及通用的大型语言模型,如 GPT [12],[99]。 - Context Selection/Compression: A common misconception in the RAG process is the belief that retrieving as many relevant documents as possible and concatenating them to form a lengthy retrieval prompt is beneficial. However, excessive context can introduce more noise, diminishing the LLM’s perception of key information .

上下文选择/压缩:在 RAG 过程中,一个常见的误解是认为检索尽可能多的相关文档并将它们连接起来形成一个冗长的检索提示是有益的。然而,过多的上下文可能会引入更多的噪音,降低LLM对关键信息的感知。

(Long) LLMLingua [100], [101] utilize small language models (SLMs) such as GPT-2 Small or LLaMA-7B, to detect and remove unimportant tokens, transforming it into a form that is challenging for humans to comprehend but well understood by LLMs. This approach presents a direct and practical method for prompt compression, eliminating the need for additional training of LLMs while balancing language integrity and compression ratio. PRCA tackled this issue by training an information extractor [69]. Similarly, RECOMP adopts a comparable approach by training an information condenser using contrastive learning [71]. Each training data point consists of one positive sample and five negative samples, and the encoder undergoes training using contrastive loss throughout this process [102].

(长)LLMLingua [100],[101] 利用小型语言模型(SLMs),如 GPT-2 Small 或 LLaMA-7B,来检测和移除不重要的标记,将其转化为一种人类难以理解但 LLMs 理解良好的形式。这种方法提供了一种直接且实用的提示压缩方法,消除了对 LLMs 额外训练的需求,同时平衡了语言完整性和压缩比。PRCA 通过训练信息提取器 [69] 解决了这个问题。类似地,RECOMP 通过使用对比学习训练信息压缩器 [71] 采用了类似的方法。每个训练数据点由一个正样本和五个负样本组成,编码器在整个过程中使用对比损失进行训练 [102]。

除了压缩上下文,减少文档数量也有助于提高模型答案的准确性。Ma 等人[103]提出了“过滤-重排序”范式,该范式结合了LLMs和 SLMs 的优势。

在这个范式中,SLM 作为过滤器,而LLMs作为重排序代理。研究表明,指示LLMs重新排列由 SLM 识别的具有挑战性的样本可以显著改善各种信息提取(IE)任务。另一种简单有效的方法是让LLM在生成最终答案之前评估检索到的内容。这使得LLM能够通过LLM批评过滤掉相关性差的文档。例如,在 Chatlaw [104]中,LLM被提示对引用的法律条款进行自我建议,以评估其相关性。

B. LLM Fine-tuning B. LLM 微调

基于场景和数据特征的针对性微调可以在LLMs上获得更好的结果。这也是使用本地LLMs的最大优势之一。当LLMs在特定领域缺乏数据时,可以通过微调向LLM提供额外的知识。Huggingface 的微调数据也可以作为初始步骤使用。

微调的另一个好处是能够调整模型的输入和输出。例如,它可以使LLM适应特定的数据格式,并按照指示生成特定风格的响应[37]。对于涉及结构化数据的检索任务,SANTA 框架[76]实施了三方训练方案,以有效地封装结构和语义的细微差别。初始阶段专注于检索器,在此阶段利用对比学习来优化查询和文档嵌入。

通过强化学习将LLM的输出与人类或检索器的偏好对齐是一种潜在的方法。例如,手动标注最终生成的答案,然后通过强化学习提供反馈。除了与人类偏好对齐外,还可以与微调模型和检索器的偏好对齐[79]。当情况阻止访问强大的专有模型或更大参数的开源模型时,一种简单有效的方法是提炼更强大的模型(例如 GPT-4)。LLM的微调也可以与检索器的微调协调,以对齐偏好。一种典型的方法,如 RA-DIT [27],使用 KL 散度对齐检索器和生成器之间的评分函数。

V. Augmentation PROCESs in RAG

V. 在 RAG 中的增强过程

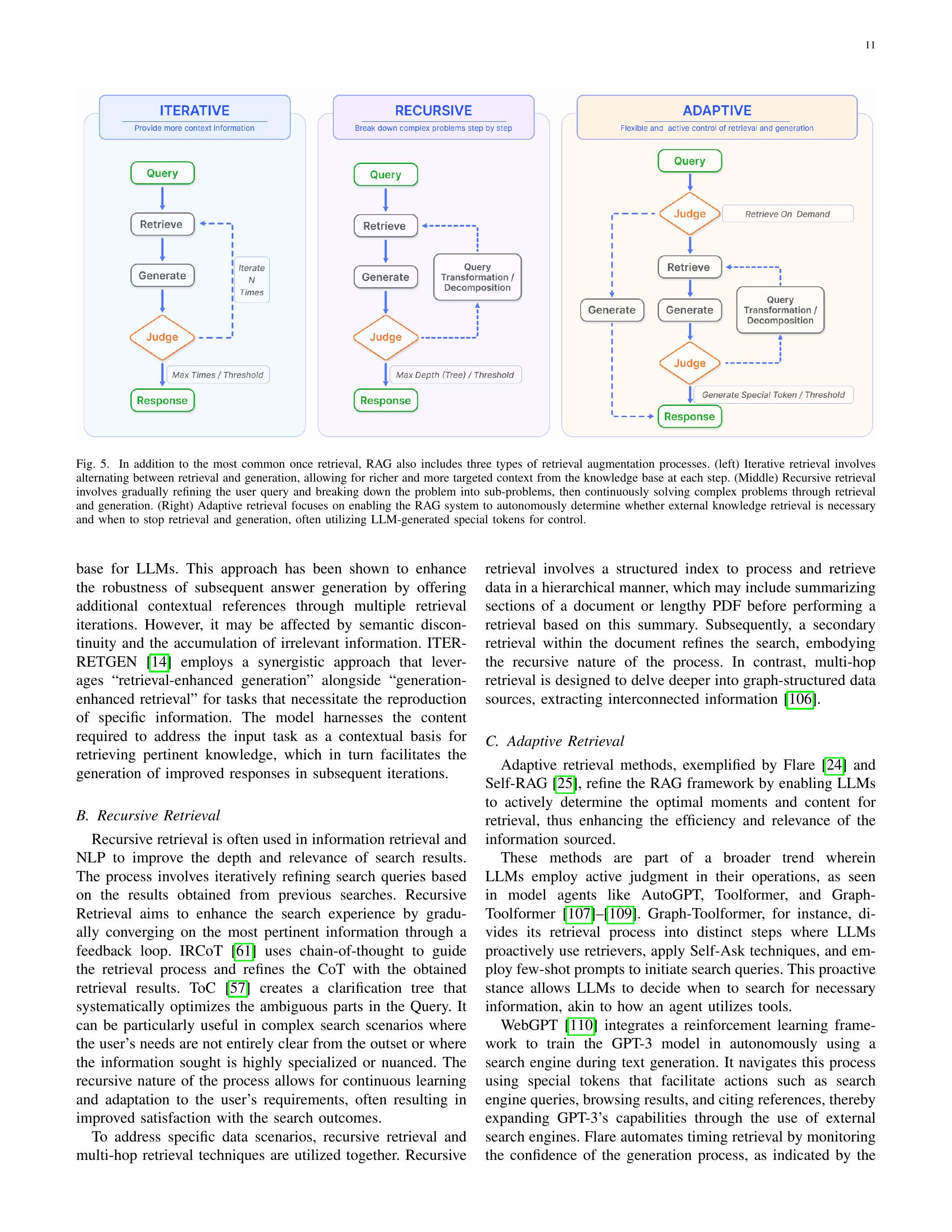

在 RAG 领域,标准做法通常涉及一次检索步骤,随后进行生成,这可能导致效率低下,有时对于需要多步推理的复杂问题来说通常是不够的,因为它提供的信息范围有限[105]。许多研究已针对这一问题优化了检索过程,我们在图 5 中对它们进行了总结。

A. Iterative Retrieval A. 迭代检索

迭代检索是一个过程,在这个过程中,知识库根据初始查询和迄今生成的文本反复进行搜索,从而提供更全面的知识

图 5。除了最常见的单次检索,RAG 还包括三种类型的检索增强过程。(左)迭代检索涉及在检索和生成之间交替进行,使每一步都能从知识库中获得更丰富和更有针对性的上下文。(中)递归检索涉及逐步细化用户查询,将问题分解为子问题,然后通过检索和生成不断解决复杂问题。(右)自适应检索侧重于使 RAG 系统能够自主判断是否需要外部知识检索以及何时停止检索和生成,通常利用LLM生成的特殊标记进行控制。

base for LLMs. This approach has been shown to enhance the robustness of subsequent answer generation by offering additional contextual references through multiple retrieval iterations. However, it may be affected by semantic discontinuity and the accumulation of irrelevant information. ITERRETGEN [14] employs a synergistic approach that leverages “retrieval-enhanced generation” alongside “generationenhanced retrieval” for tasks that necessitate the reproduction of specific information. The model harnesses the content required to address the input task as a contextual basis for retrieving pertinent knowledge, which in turn facilitates the generation of improved responses in subsequent iterations.

LLMs的基础。该方法已被证明通过多次检索迭代提供额外的上下文参考,从而增强后续答案生成的鲁棒性。然而,它可能会受到语义不连贯和无关信息积累的影响。ITERRETGEN [14] 采用了一种协同方法,利用“检索增强生成”和“生成增强检索”相结合,适用于需要重现特定信息的任务。该模型利用解决输入任务所需的内容作为检索相关知识的上下文基础,从而促进后续迭代中生成更好的响应。

B. Recursive Retrieval B. 递归检索

递归检索通常用于信息检索和自然语言处理,以提高搜索结果的深度和相关性。该过程涉及根据先前搜索获得的结果迭代地细化搜索查询。递归检索旨在通过反馈循环逐渐收敛到最相关的信息,从而增强搜索体验。IRCoT [61] 使用思维链来指导检索过程,并根据获得的检索结果细化思维链。ToC [57] 创建了一个澄清树,系统地优化查询中的模糊部分。在复杂的搜索场景中,特别是在用户的需求从一开始并不完全明确或所寻求的信息高度专业化或细致的情况下,这种方法尤其有用。该过程的递归特性允许持续学习和适应用户的需求,通常会导致对搜索结果的满意度提高。

为了应对特定的数据场景,递归检索和多跳检索技术被结合使用。递归

retrieval involves a structured index to process and retrieve data in a hierarchical manner, which may include summarizing sections of a document or lengthy PDF before performing a retrieval based on this summary. Subsequently, a secondary retrieval within the document refines the search, embodying the recursive nature of the process. In contrast, multi-hop retrieval is designed to delve deeper into graph-structured data sources, extracting interconnected information [106].

检索涉及一个结构化索引,以层次化的方式处理和检索数据,这可能包括在基于该摘要执行检索之前对文档或冗长 PDF 的部分进行总结。随后,文档内的二次检索进一步细化搜索,体现了该过程的递归特性。相比之下,多跳检索旨在深入挖掘图结构数据源,提取相互关联的信息[106]。

C. Adaptive Retrieval C. 自适应检索

自适应检索方法,如 Flare [24]和 Self-RAG [25],通过使LLMs能够主动确定检索的最佳时机和内容,从而优化 RAG 框架,提高信息来源的效率和相关性。

这些方法是一个更广泛趋势的一部分,其中LLMs在其操作中采用主动判断,正如在模型代理如 AutoGPT、Toolformer 和 GraphToolformer [107]-[109]中所见。例如,Graph-Toolformer 将其检索过程分为不同的步骤,其中LLMs主动使用检索器,应用自我提问技术,并使用少量示例提示来启动搜索查询。这种主动的态度使LLMs能够决定何时搜索必要的信息,类似于代理如何利用工具。

WebGPT [110] 集成了一个强化学习框架,以训练 GPT-3 模型在文本生成过程中自主使用搜索引擎。它通过使用特殊的标记来导航这一过程,这些标记促进了诸如搜索引擎查询、浏览结果和引用参考文献等操作,从而通过使用外部搜索引擎扩展了 GPT-3 的能力。Flare 通过监控生成过程的信心来自动化时机检索,如所示的

probability of generated terms [24]. When the probability falls below a certain threshold would activates the retrieval system to collect relevant information, thus optimizing the retrieval cycle. Self-RAG [25] introduces “reflection tokens” that allow the model to introspect its outputs. These tokens come in two varieties: “retrieve” and “critic”. The model autonomously decides when to activate retrieval, or alternatively, a predefined threshold may trigger the process. During retrieval, the generator conducts a fragment-level beam search across multiple paragraphs to derive the most coherent sequence. Critic scores are used to update the subdivision scores, with the flexibility to adjust these weights during inference, tailoring the model’s behavior. Self-RAG’s design obviates the need for additional classifiers or reliance on Natural Language Inference (NLI) models, thus streamlining the decision-making process for when to engage retrieval mechanisms and improving the model’s autonomous judgment capabilities in generating accurate responses.

生成术语的概率[24]。当概率低于某个阈值时,会激活检索系统以收集相关信息,从而优化检索周期。Self-RAG [25] 引入了“反思令牌”,允许模型自省其输出。这些令牌有两种类型:“检索”和“批评”。模型自主决定何时激活检索,或者预定义的阈值可能触发该过程。在检索过程中,生成器在多个段落中进行片段级束搜索,以推导出最连贯的序列。批评分数用于更新细分分数,具有在推理过程中调整这些权重的灵活性,从而定制模型的行为。Self-RAG 的设计消除了对额外分类器或依赖自然语言推理(NLI)模型的需求,从而简化了何时启动检索机制的决策过程,并提高了模型在生成准确响应时的自主判断能力。

VI. TASK AND EvaluAtion

VI. 任务与评估

RAG 在自然语言处理领域的快速发展和日益普及,使得 RAG 模型的评估成为LLMs社区研究的前沿。该评估的主要目标是理解和优化 RAG 模型在不同应用场景中的性能。本章将主要介绍 RAG 的主要下游任务、数据集以及如何评估 RAG 系统。

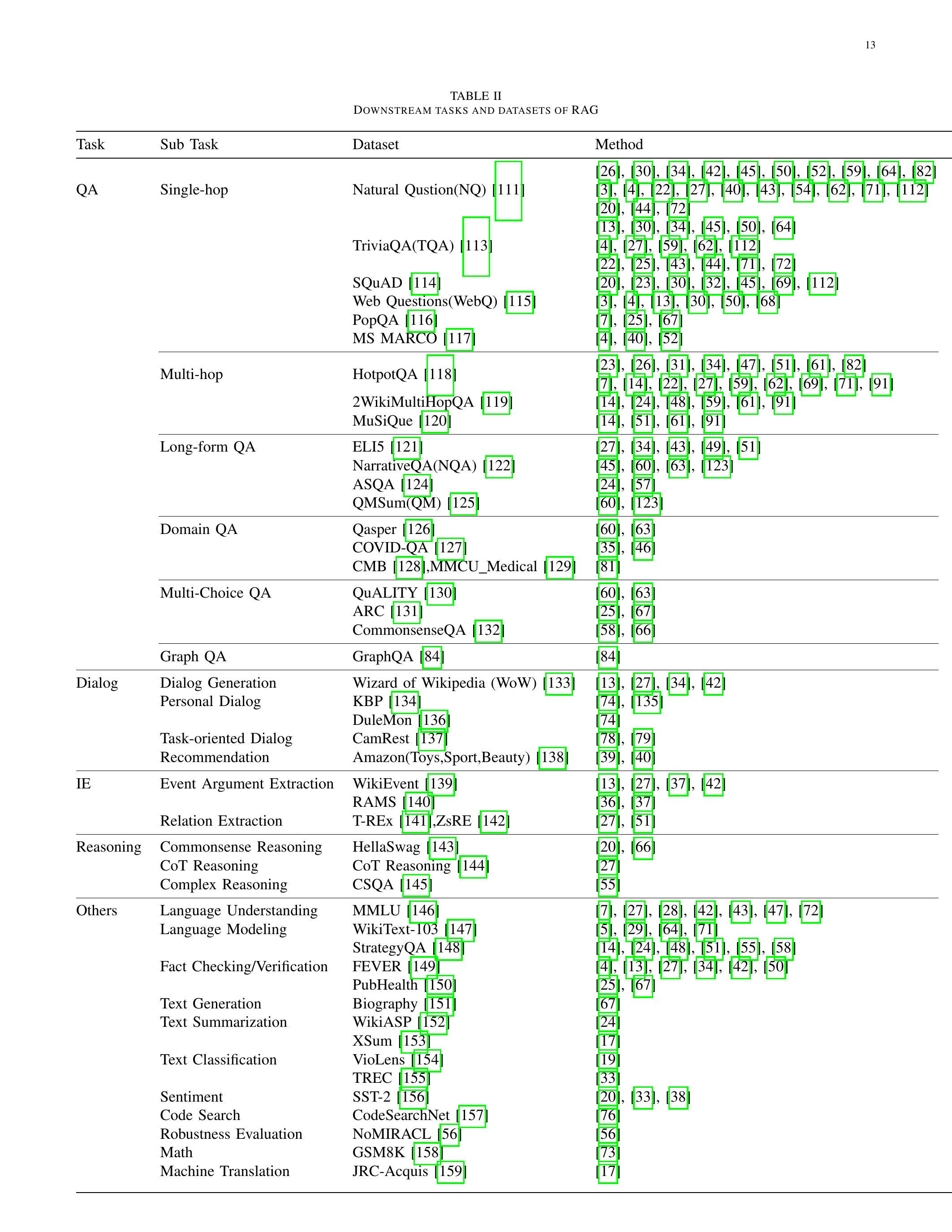

A. Downstream Task A. 下游任务

RAG 的核心任务仍然是问答(QA),包括传统的单跳/多跳问答、多项选择、特定领域问答以及适合 RAG 的长文本场景。除了问答,RAG 还在不断扩展到多个下游任务,如信息提取(IE)、对话生成、代码搜索等。RAG 的主要下游任务及其对应的数据集总结在表 III 中。

B. Evaluation Target B. 评估目标

历史上,RAG 模型的评估主要集中在它们在特定下游任务中的执行。这些评估采用适合于当前任务的既定指标。例如,问答评估可能依赖于 EM 和 F1 分数[7],[45],[59],[72],而事实核查任务通常以准确性作为主要指标[4],[14],[42]。BLEU 和 ROUGE 指标也常用于评估答案质量[26],[32],[52],[78]。像 RALLE 这样的工具,旨在自动评估 RAG 应用程序,同样基于这些特定任务的指标[160]。尽管如此,专门评估 RAG 模型独特特征的研究仍然显著不足。主要评估目标包括:

检索质量。评估检索质量对于确定检索组件所获取上下文的有效性至关重要。来自各个领域的标准指标

of search engines, recommendation systems, and information retrieval systems are employed to measure the performance of the RAG retrieval module. Metrics such as Hit Rate, MRR, and NDCG are commonly utilized for this purpose [161], [162].

搜索引擎、推荐系统和信息检索系统被用来衡量 RAG 检索模块的性能。常用的指标包括命中率、平均排名回报(MRR)和归一化折现累计增益(NDCG)[161],[162]。

生成质量。生成质量的评估集中在生成器从检索到的上下文中合成连贯且相关的答案的能力上。该评估可以根据内容的目标进行分类:未标记内容和标记内容。对于未标记内容,评估包括生成答案的真实性、相关性和无害性。相反,对于标记内容,重点在于模型生成信息的准确性。此外,检索和生成质量评估可以通过手动或自动评估方法进行 [29],[161],[163]。

C. Evaluation Aspects C. 评估方面

当代 RAG 模型的评估实践强调三个主要质量评分和四个基本能力,这些共同为 RAG 模型的两个主要目标:检索和生成的评估提供信息。

- Quality Scores: Quality scores include context relevance, answer faithfulness, and answer relevance. These quality scores evaluate the efficiency of the RAG model from different perspectives in the process of information retrieval and generation [164]-166].

质量评分:质量评分包括上下文相关性、答案可信度和答案相关性。这些质量评分从不同角度评估 RAG 模型在信息检索和生成过程中的效率[164]-166]。

上下文相关性评估检索到的上下文的准确性和特异性,确保相关性并最小化与多余内容相关的处理成本。

答案的真实性确保生成的答案与检索到的上下文保持一致,维护一致性并避免矛盾。

答案相关性要求生成的答案与提出的问题直接相关,有效地解决核心询问。

2) Required Abilities: RAG evaluation also encompasses four abilities indicative of its adaptability and efficiency: noise robustness, negative rejection, information integration, and counterfactual robustness [167], 168]. These abilities are critical for the model’s performance under various challenges and complex scenarios, impacting the quality scores.

所需能力:RAG 评估还包括四种能力,表明其适应性和效率:噪声鲁棒性、负面拒绝、信息整合和反事实鲁棒性。这些能力对于模型在各种挑战和复杂场景下的表现至关重要,影响质量评分。

噪声鲁棒性评估模型处理与问题相关但缺乏实质性信息的噪声文档的能力。

负面拒绝评估模型在检索到的文档不包含回答问题所需知识时,抑制回应的能力。

信息集成评估模型在综合多个文档信息以解决复杂问题方面的能力。

反事实鲁棒性测试模型识别和忽略文档中已知不准确性的能力,即使在被告知潜在错误信息的情况下。

上下文相关性和噪声鲁棒性对于评估检索质量非常重要,而答案的真实性、答案的相关性、负面拒绝、信息整合和反事实鲁棒性对于评估生成质量则非常重要。

DOWNSTREAM TASKS AND DATASETS OF RAG

RAG 的下游任务和数据集

SUMMARY OF METRICS APPLICABLE FOR EVALUATION ASPECTS OF RAG

适用于 RAG 评估方面的指标总结

|

上下文相关性

| Faithfulness 忠实性 |

答案相关性

|

噪声鲁棒性

|

负面拒绝

|

信息集成

|

反事实鲁棒性

| |||||||||||||

| Accuracy 准确性 | |||||||||||||||||||

| EM | |||||||||||||||||||

| Recall 回忆 | |||||||||||||||||||

| Precision 精确度 | |||||||||||||||||||

| R-Rate | |||||||||||||||||||

| Cosine Similarity 余弦相似度 | |||||||||||||||||||

| Hit Rate 命中率 | |||||||||||||||||||

| MRR | |||||||||||||||||||

| NDCG | |||||||||||||||||||

| BLEU | |||||||||||||||||||

| ROUGE/ROUGE-L |

每个评估方面的具体指标总结在表 III 中。必须认识到,这些指标源自相关工作,是传统的衡量标准,尚未代表量化 RAG 评估方面的成熟或标准化方法。虽然这里未包含,但在一些评估研究中也开发了针对 RAG 模型细微差别的定制指标。

D. Evaluation Benchmarks and Tools

D. 评估基准和工具

一系列基准测试和工具已被提出,以促进对 RAG 的评估。这些工具提供了定量指标,不仅衡量 RAG 模型的性能,还增强了对模型在各个评估方面能力的理解。著名的基准如 RGB、RECALL 和 CRUD [167]-[169]专注于评估 RAG 模型的基本能力。同时,最先进的自动化工具如 RAGAS [164]、ARES [165]和 TruLens 使用LLMs来裁定质量评分。这些工具和基准共同构成了一个系统评估 RAG 模型的强大框架,如表 IV 所总结。

VII. Discussion And Future Prospects

VII. 讨论与未来展望

尽管 RAG 技术取得了显著进展,但仍然存在一些值得深入研究的挑战。本章将主要介绍 RAG 面临的当前挑战和未来研究方向。

A. RAG vs Long Context

A. RAG 与长上下文

随着相关研究的深入,LLMs的背景不断扩展[170]-[172]。目前,LLMs可以轻松处理超过 200,000 个标记的上下文。这一能力意味着,之前依赖于 RAG 的长文档问答现在可以直接将整个文档纳入提示中。这也引发了关于在LLMs时 RAG 是否仍然必要的讨论。

不受上下文的限制。事实上,RAG 仍然发挥着不可替代的作用。一方面,向LLMs一次性提供大量上下文将显著影响其推理速度,而分块检索和按需输入可以显著提高操作效率。另一方面,基于 RAG 的生成可以快速定位LLMs的原始参考资料,以帮助用户验证生成的答案。整个检索和推理过程是可观察的,而仅依赖于长上下文的生成仍然是一个黑箱。相反,上下文的扩展为 RAG 的发展提供了新的机会,使其能够解决更复杂的问题以及需要阅读大量材料才能回答的综合性或总结性问题[49]。在超长上下文的背景下开发新的 RAG 方法是未来研究的趋势之一。

B. RAG Robustness B. RAG 鲁棒性

在检索过程中,噪声或矛盾信息的存在会对 RAG 的输出质量产生不利影响。这种情况被形象地称为“错误信息可能比没有信息更糟”。提高 RAG 对这种对抗性或反事实输入的抵抗力正在获得研究动力,并已成为一个关键性能指标[48],[50],[82]。Cuconasu 等人[54]分析了应该检索哪种类型的文档,评估文档与提示的相关性、位置以及上下文中包含的数量。研究结果表明,包含不相关的文档可能意外地将准确性提高超过 ,这与最初假设的质量降低相矛盾。这些结果强调了开发专门策略以将检索与语言生成模型结合的重要性,突显了对 RAG 的鲁棒性进行进一步研究和探索的必要性。

C. Hybrid Approaches C. 混合方法

将 RAG 与微调相结合正在成为一种领先策略。确定 RAG 与微调的最佳集成方式,无论是顺序、交替,还是通过端到端的联合训练,以及如何利用这两者的参数化。

SUMMARY OF EVALUATION FRAMEWORKS

评估框架的总结

| Evaluation Framework 评估框架 | Evaluation Targets 评估目标 | Evaluation Aspects 评估方面 | Quantitative Metrics 定量指标 | ||||||||

| Retrieval Quality Generation Quality 检索质量 生成质量 |

噪声鲁棒性 负拒绝 信息集成 反事实鲁棒性

|

准确性 EM 准确性 准确性

| |||||||||

| RECALL | Generation Quality 生成质量 | Counterfactual Robustness 反事实鲁棒性 | R-Rate (Reappearance Rate) R-Rate(重现率) | ||||||||

| RAGAS | Retrieval Quality Generation Quality 检索质量 生成质量 | Context Relevance Faithfulness Answer Relevance 上下文相关性 可信度 答案相关性 | Cosine Similarity 余弦相似度 | ||||||||

| ARES | Retrieval Quality Generation Quality 检索质量 生成质量 |

上下文相关性 可信度 答案相关性

|

准确性 准确性 准确性

| ||||||||

| TruLens | Retrieval Quality Generation Quality 检索质量 生成质量 | Context Relevance Faithfulness Answer Relevance 上下文相关性 可信度 答案相关性 | * | ||||||||

| CRUD | Retrieval Quality Generation Quality 检索质量 生成质量 | Creative Generation Knowledge-intensive QA Error Correction Summarization 创意生成 知识密集型问答 错误修正 摘要 |

代表基准, 代表工具。*表示定制的定量指标,这些指标偏离了传统指标。鼓励读者根据需要查阅相关文献以获取与这些指标相关的具体量化公式。

and non-parameterized advantages are areas ripe for exploration [27]. Another trend is to introduce SLMs with specific functionalities into RAG and fine-tuned by the results of RAG system. For example, CRAG [67] trains a lightweight retrieval evaluator to assess the overall quality of the retrieved documents for a query and triggers different knowledge retrieval actions based on confidence levels.

和非参数化优势是值得探索的领域[27]。另一个趋势是将具有特定功能的 SLM 引入 RAG,并通过 RAG 系统的结果进行微调。例如,CRAG [67]训练一个轻量级检索评估器,以评估针对查询的检索文档的整体质量,并根据置信水平触发不同的知识检索动作。

D. Scaling laws of RAG

D. RAG 的扩展法则

端到端的 RAG 模型和基于 RAG 的预训练模型仍然是当前研究者关注的重点之一[173]。这些模型的参数是关键因素之一。虽然已经为LLMs建立了规模法则[174],但其在 RAG 中的适用性仍然不确定。像 RETRO++ [44]这样的初步研究已经开始解决这个问题,但 RAG 模型的参数数量仍然落后于LLMs。逆规模法则 的可能性,即较小的模型优于较大的模型,尤其引人注目,值得进一步研究。

E. Production-Ready RAG E. 生产就绪的 RAG

RAG 的实用性和与工程要求的一致性促进了它的采用。然而,提高检索效率、改善大型知识库中的文档召回率以及确保数据安全,例如防止

LLMs的文档来源或元数据的无意披露是仍需解决的关键工程挑战[175]。

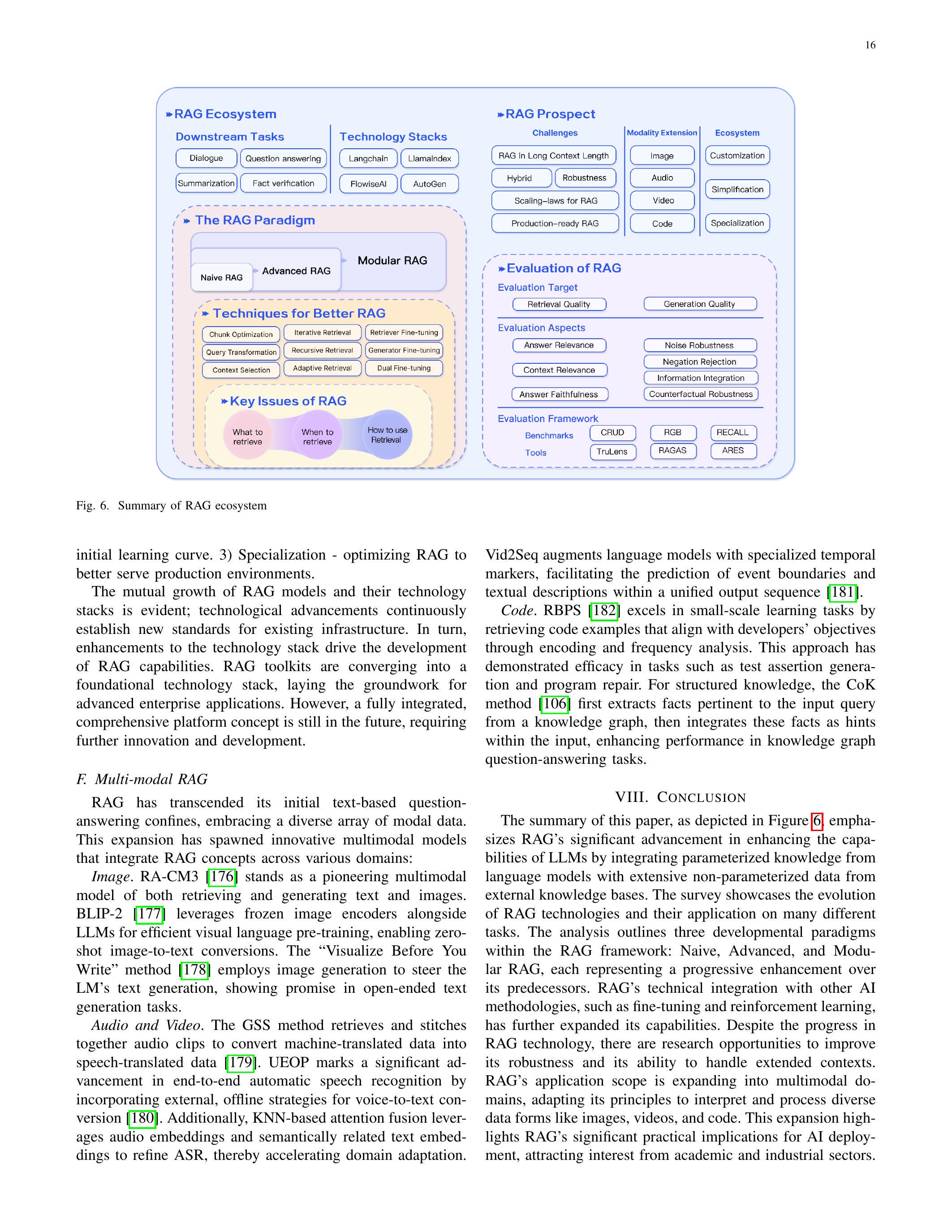

RAG 生态系统的发展受到其技术栈进展的重大影响。像 LangChain 和 LLamaIndex 这样的关键工具在 ChatGPT 出现后迅速获得了人气,提供了广泛的 RAG 相关 API,并在LLMs领域中变得不可或缺。虽然新兴的技术栈在功能上不如 LangChain 和 LLamaIndex 丰富,但通过其专业化产品脱颖而出。例如,Flowise AI 优先考虑低代码方法,使用户能够通过用户友好的拖放界面部署包括 RAG 在内的 AI 应用程序。其他技术如 HayStack、Meltano 和 Cohere Coral 也因其对该领域的独特贡献而受到关注。

除了专注于人工智能的供应商,传统软件和云服务提供商也在扩展他们的产品,以包括以 RAG 为中心的服务。Weaviate 的 Verba 旨在用于个人助手应用,而亚马逊的 Kendra 提供智能企业搜索服务,使用户能够使用内置连接器浏览各种内容库。在 RAG 技术的发展中,明显出现了不同专业化方向的趋势,例如:1)定制 - 根据特定需求量身定制 RAG。2)简化 - 使 RAG 更易于使用,以减少

图 6. RAG 生态系统的总结

initial learning curve. 3) Specialization - optimizing RAG to better serve production environments.

初始学习曲线。3)专业化 - 优化 RAG 以更好地服务于生产环境。

RAG 模型及其技术栈的相互增长显而易见;技术进步不断为现有基础设施建立新的标准。反过来,技术栈的增强推动了 RAG 能力的发展。RAG 工具包正在汇聚成一个基础技术栈,为高级企业应用奠定基础。然而,完全整合的综合平台概念仍在未来,需要进一步的创新和发展。

F. Multi-modal RAG F. 多模态 RAG

RAG 已经超越了最初基于文本的问题回答限制,拥抱了多种模态数据。这一扩展催生了创新的多模态模型,这些模型在各个领域整合了 RAG 概念:

图像。RA-CM3 [176] 是一个开创性的多模态模型,能够检索和生成文本和图像。BLIP-2 [177] 利用冻结的图像编码器和 LLMs 进行高效的视觉语言预训练,实现零样本图像到文本的转换。“写作前可视化”方法 [178] 采用图像生成来引导语言模型的文本生成,在开放式文本生成任务中显示出潜力。

音频和视频。GSS 方法检索并拼接音频片段,将机器翻译的数据转换为语音翻译的数据[179]。UEOP 在端到端自动语音识别方面标志着一个重要的进展,通过结合外部的离线策略进行语音转文本转换[180]。此外,基于 KNN 的注意力融合利用音频嵌入和语义相关的文本嵌入来优化 ASR,从而加速领域适应。

Vid2Seq 通过专门的时间标记增强语言模型,促进事件边界和文本描述在统一输出序列中的预测[181]。

代码。RBPS [182] 在小规模学习任务中表现出色,通过编码和频率分析检索与开发者目标一致的代码示例。这种方法在测试断言生成和程序修复等任务中显示了有效性。对于结构化知识,CoK 方法 [106] 首先从知识图谱中提取与输入查询相关的事实,然后将这些事实作为提示整合到输入中,从而提高知识图谱问答任务的性能。

VIII. CONCLUSION 八、结论

本文的摘要如图 6 所示,强调了 RAG 在通过将来自语言模型的参数化知识与来自外部知识库的大量非参数化数据相结合,显著提升LLMs能力方面的重要进展。该调查展示了 RAG 技术的发展及其在许多不同任务上的应用。分析概述了 RAG 框架内的三种发展范式:简单 RAG、先进 RAG 和模块化 RAG,每种范式都代表了对其前身的逐步增强。RAG 与其他人工智能方法(如微调和强化学习)的技术整合进一步扩展了其能力。尽管 RAG 技术取得了进展,但仍有研究机会可以提高其鲁棒性和处理扩展上下文的能力。RAG 的应用范围正在扩展到多模态领域,调整其原则以解释和处理图像、视频和代码等多种数据形式。这一扩展突显了 RAG 在人工智能部署中的重要实际意义,吸引了学术界和工业界的关注。

RAG 日益增长的生态系统体现在以 RAG 为中心的人工智能应用的增加以及支持工具的持续发展。随着 RAG 应用领域的扩大,需要改进评估方法,以跟上其发展的步伐。确保准确和具有代表性的性能评估对于全面捕捉 RAG 对人工智能研究和开发社区的贡献至关重要。

REFERENCES 参考文献

[1] N. Kandpal, H. Deng, A. Roberts, E. Wallace, 和 C. Raffel,“大型语言模型难以学习长尾知识,”在国际机器学习会议上。PMLR,2023,页 15696-15707。

[2] Y. Zhang, Y. Li, L. Cui, D. Cai, L. Liu, T. Fu, X. Huang, E. Zhao, Y. Zhang, Y. Chen et al., “Siren’s song in the ai ocean: A survey on hallucination in large language models,” arXiv preprint arXiv:2309.01219, 2023.

[2] Y. Zhang, Y. Li, L. Cui, D. Cai, L. Liu, T. Fu, X. Huang, E. Zhao, Y. Zhang, Y. Chen 等, “人工智能海洋中的海妖之歌:关于大型语言模型幻觉的调查,” arXiv 预印本 arXiv:2309.01219, 2023.

[3] D. Arora, A. Kini, S. R. Chowdhury, N. Natarajan, G. Sinha, and A. Sharma, “Gar-meets-rag paradigm for zero-shot information retrieval,” arXiv preprint arXiv:2310.20158, 2023.

[3] D. Arora, A. Kini, S. R. Chowdhury, N. Natarajan, G. Sinha, 和 A. Sharma, “Gar-meets-rag 范式用于零-shot 信息检索,” arXiv 预印本 arXiv:2310.20158, 2023.

[4] P. Lewis, E. Perez, A. Piktus, F. Petroni, V. Karpukhin, N. Goyal, H. Küttler, M. Lewis, W.-t. Yih, T. Rocktäschel et al., “Retrievalaugmented generation for knowledge-intensive nlp tasks,” Advances in Neural Information Processing Systems, vol. 33, pp. 9459-9474, 2020.

[4] P. Lewis, E. Perez, A. Piktus, F. Petroni, V. Karpukhin, N. Goyal, H. Küttler, M. Lewis, W.-t. Yih, T. Rocktäschel 等,“用于知识密集型自然语言处理任务的检索增强生成”,《神经信息处理系统进展》,第 33 卷,页 9459-9474,2020 年。

[5] S. Borgeaud, A. Mensch, J. Hoffmann, T. Cai, E. Rutherford, K. Millican, G. B. Van Den Driessche, J.-B. Lespiau, B. Damoc, A. Clark et al., “Improving language models by retrieving from trillions of tokens,” in International conference on machine learning. PMLR, 2022, pp. 2206-2240.

[5] S. Borgeaud, A. Mensch, J. Hoffmann, T. Cai, E. Rutherford, K. Millican, G. B. Van Den Driessche, J.-B. Lespiau, B. Damoc, A. Clark 等, “通过从万亿个标记中检索来改进语言模型,” 在国际机器学习会议. PMLR, 2022, 第 2206-2240 页.

[6] L. Ouyang, J. Wu, X. Jiang, D. Almeida, C. Wainwright, P. Mishkin, C. Zhang, S. Agarwal, K. Slama, A. Ray et al., “Training language models to follow instructions with human feedback,” Advances in neural information processing systems, vol. 35, pp. , 2022.

[6] L. Ouyang, J. Wu, X. Jiang, D. Almeida, C. Wainwright, P. Mishkin, C. Zhang, S. Agarwal, K. Slama, A. Ray 等,“训练语言模型以遵循人类反馈的指令,”神经信息处理系统进展,卷 35,页 ,2022。

[7] X. Ma, Y. Gong, P. He, H. Zhao, and N. Duan, “Query rewriting for retrieval-augmented large language models,” arXiv preprint arXiv:2305.14283, 2023.

[7] X. Ma, Y. Gong, P. He, H. Zhao, 和 N. Duan, “用于检索增强的大型语言模型的查询重写,” arXiv 预印本 arXiv:2305.14283, 2023.

[8] I. ILIN, “Advanced rag techniques: an il-

lustrated

overview,” https://pub.towardsai.net/ advanced-rag-techniques-an-illustrated-overview-04d193d8fec6 2023.

概述,” https://pub.towardsai.net/ advanced-rag-techniques-an-illustrated-overview-04d193d8fec6 2023.

[9] W. Peng, G. Li, Y. Jiang, Z. Wang, D. Ou, X. Zeng, E. Chen et al., “Large language model based long-tail query rewriting in taobao search,” arXiv preprint arXiv:2311.03758, 2023.

[9] W. Peng, G. Li, Y. Jiang, Z. Wang, D. Ou, X. Zeng, E. Chen 等, “基于大型语言模型的淘宝搜索长尾查询重写,” arXiv 预印本 arXiv:2311.03758, 2023.

[10] H. S. Zheng, S. Mishra, X. Chen, H.-T. Cheng, E. H. Chi, Q. V. Le, and D. Zhou, “Take a step back: Evoking reasoning via abstraction in large language models,” arXiv preprint arXiv:2310.06117, 2023.

[10] H. S. Zheng, S. Mishra, X. Chen, H.-T. Cheng, E. H. Chi, Q. V. Le, 和 D. Zhou, “退一步:通过抽象在大型语言模型中引发推理,” arXiv 预印本 arXiv:2310.06117, 2023.

[11] L. Gao, X. Ma, J. Lin, and J. Callan, “Precise zero-shot dense retrieval without relevance labels,” arXiv preprint arXiv:2212.10496, 2022.

[11] L. Gao, X. Ma, J. Lin, 和 J. Callan, “无相关性标签的精确零-shot 密集检索,” arXiv 预印本 arXiv:2212.10496, 2022.

[12] V. Blagojevi, “Enhancing rag pipelines in haystack: Introducing diversityranker and lostinthemiddleranker,” https://towardsdatascience.com/ enhancing-rag-pipelines-in-haystack-45f14e2bc9f5 2023.

[12] V. Blagojevi, “在 Haystack 中增强 RAG 管道:引入 DiversityRanker 和 LostInTheMiddleRanker,” https://towardsdatascience.com/ enhancing-rag-pipelines-in-haystack-45f14e2bc9f5 2023.

[13] W. Yu, D. Iter, S. Wang, Y. Xu, M. Ju, S. Sanyal, C. Zhu, M. Zeng, and M. Jiang, “Generate rather than retrieve: Large language models are strong context generators,” arXiv preprint arXiv:2209.10063, 2022.

[13] W. Yu, D. Iter, S. Wang, Y. Xu, M. Ju, S. Sanyal, C. Zhu, M. Zeng, 和 M. Jiang, “生成而非检索:大型语言模型是强大的上下文生成器,” arXiv 预印本 arXiv:2209.10063, 2022.

[14] Z. Shao, Y. Gong, Y. Shen, M. Huang, N. Duan, and W. Chen, “Enhancing retrieval-augmented large language models with iterative retrieval-generation synergy,” arXiv preprint arXiv:2305.15294, 2023.

[14] Z. Shao, Y. Gong, Y. Shen, M. Huang, N. Duan, 和 W. Chen, “通过迭代检索-生成协同增强检索增强的大型语言模型,” arXiv 预印本 arXiv:2305.15294, 2023.

[15] X. Wang, Q. Yang, Y. Qiu, J. Liang, Q. He, Z. Gu, Y. Xiao, and W. Wang, “Knowledgpt: Enhancing large language models with retrieval and storage access on knowledge bases,” arXiv preprint arXiv:2308.11761, 2023.

[15] X. Wang, Q. Yang, Y. Qiu, J. Liang, Q. He, Z. Gu, Y. Xiao, 和 W. Wang, “Knowledgpt: 通过对知识库的检索和存储访问增强大型语言模型,” arXiv 预印本 arXiv:2308.11761, 2023.

[16] A. H. Raudaschl, “Forget rag, the future is rag-fusion,” https://towardsdatascience.com/ forget-rag-the-future-is-rag-fusion-1147298d8ad1 2023.

[16] A. H. Raudaschl, “忘记 RAG,未来是 RAG 融合,” https://towardsdatascience.com/ forget-rag-the-future-is-rag-fusion-1147298d8ad1 2023.

[17] X. Cheng, D. Luo, X. Chen, L. Liu, D. Zhao, and R. Yan, “Lift yourself up: Retrieval-augmented text generation with self memory,” arXiv preprint arXiv:2305.02437, 2023.

[17] X. Cheng, D. Luo, X. Chen, L. Liu, D. Zhao, 和 R. Yan, “提升自我:带有自我记忆的检索增强文本生成,” arXiv 预印本 arXiv:2305.02437, 2023.

[18] S. Wang, Y. Xu, Y. Fang, Y. Liu, S. Sun, R. Xu, C. Zhu, and M. Zeng, “Training data is more valuable than you think: A simple and effective method by retrieving from training data,” arXiv preprint arXiv:2203.08773, 2022.

[18] S. Wang, Y. Xu, Y. Fang, Y. Liu, S. Sun, R. Xu, C. Zhu, 和 M. Zeng, “训练数据比你想的更有价值:一种通过从训练数据中检索的简单有效方法,” arXiv 预印本 arXiv:2203.08773, 2022.

[19] X. Li, E. Nie, and S. Liang, “From classification to generation: Insights into crosslingual retrieval augmented icl,” arXiv preprint arXiv:2311.06595, 2023.

[19] X. Li, E. Nie, 和 S. Liang, “从分类到生成:跨语言检索增强 ICL 的见解,” arXiv 预印本 arXiv:2311.06595, 2023.

[20] D. Cheng, S. Huang, J. Bi, Y. Zhan, J. Liu, Y. Wang, H. Sun, F. Wei, D. Deng, and Q. Zhang, “Uprise: Universal prompt retrieval for improving zero-shot evaluation,” arXiv preprint arXiv:2303.08518, 2023.

[20] D. Cheng, S. Huang, J. Bi, Y. Zhan, J. Liu, Y. Wang, H. Sun, F. Wei, D. Deng, 和 Q. Zhang, “Uprise: 通用提示检索以改善零样本评估,” arXiv 预印本 arXiv:2303.08518, 2023.

[21] Z. Dai, V. Y. Zhao, J. Ma, Y. Luan, J. Ni, J. Lu, A. Bakalov, K. Guu, K. B. Hall, and M.-W. Chang, “Promptagator: Few-shot dense retrieval from 8 examples,” arXiv preprint arXiv:2209.11755, 2022.

[21] Z. Dai, V. Y. Zhao, J. Ma, Y. Luan, J. Ni, J. Lu, A. Bakalov, K. Guu, K. B. Hall, 和 M.-W. Chang, “Promptagator: 从 8 个示例中进行少量密集检索,” arXiv 预印本 arXiv:2209.11755, 2022.

[22] Z. Sun, X. Wang, Y. Tay, Y. Yang, and D. Zhou, “Recitation-augmented language models,” arXiv preprint arXiv:2210.01296, 2022.

[22] Z. Sun, X. Wang, Y. Tay, Y. Yang, and D. Zhou, “背诵增强语言模型,” arXiv 预印本 arXiv:2210.01296, 2022.

[23] O. Khattab, K. Santhanam, X. L. Li, D. Hall, P. Liang, C. Potts, and M. Zaharia, “Demonstrate-search-predict: Composing retrieval and language models for knowledge-intensive nlp,” arXiv preprint arXiv:2212.14024, 2022.

[23] O. Khattab, K. Santhanam, X. L. Li, D. Hall, P. Liang, C. Potts, 和 M. Zaharia, “Demonstrate-search-predict: 组合检索和语言模型以进行知识密集型自然语言处理,” arXiv 预印本 arXiv:2212.14024, 2022.

[24] Z. Jiang, F. F. Xu, L. Gao, Z. Sun, Q. Liu, J. Dwivedi-Yu, Y. Yang, J. Callan, and G. Neubig, “Active retrieval augmented generation,” arXiv preprint arXiv:2305.06983, 2023.

[24] Z. Jiang, F. F. Xu, L. Gao, Z. Sun, Q. Liu, J. Dwivedi-Yu, Y. Yang, J. Callan, 和 G. Neubig, “主动检索增强生成,” arXiv 预印本 arXiv:2305.06983, 2023.

[25] A. Asai, Z. Wu, Y. Wang, A. Sil, and H. Hajishirzi, “Self-rag: Learning to retrieve, generate, and critique through self-reflection,” arXiv preprint arXiv:2310.11511, 2023.

[25] A. Asai, Z. Wu, Y. Wang, A. Sil, 和 H. Hajishirzi, “Self-rag: 通过自我反思学习检索、生成和批评,” arXiv 预印本 arXiv:2310.11511, 2023.

[26] Z. Ke, W. Kong, C. Li, M. Zhang, Q. Mei, and M. Bendersky, “Bridging the preference gap between retrievers and llms,” arXiv preprint arXiv:2401.06954, 2024.

[26] Z. Ke, W. Kong, C. Li, M. Zhang, Q. Mei, 和 M. Bendersky, “弥合检索器与 llms 之间的偏好差距,” arXiv 预印本 arXiv:2401.06954, 2024.

[27] X. V. Lin, X. Chen, M. Chen, W. Shi, M. Lomeli, R. James, P. Rodriguez, J. Kahn, G. Szilvasy, M. Lewis et al., “Ra-dit: Retrievalaugmented dual instruction tuning,” arXiv preprint arXiv:2310.01352, 2023.

[27] X. V. Lin, X. Chen, M. Chen, W. Shi, M. Lomeli, R. James, P. Rodriguez, J. Kahn, G. Szilvasy, M. Lewis 等,“Ra-dit: Retrievalaugmented dual instruction tuning,”arXiv 预印本 arXiv:2310.01352,2023。

[28] O. Ovadia, M. Brief, M. Mishaeli, and O. Elisha, “Fine-tuning or retrieval? comparing knowledge injection in llms,” arXiv preprint arXiv:2312.05934, 2023.

[28] O. Ovadia, M. Brief, M. Mishaeli, 和 O. Elisha, “微调还是检索?比较知识注入在 llms 中的效果,” arXiv 预印本 arXiv:2312.05934, 2023.

[29] T. Lan, D. Cai, Y. Wang, H. Huang, and X.-L. Mao, “Copy is all you need,” in The Eleventh International Conference on Learning Representations, 2022.

[29] T. Lan, D. Cai, Y. Wang, H. Huang, 和 X.-L. Mao, “复制就是你所需要的一切,” 在第十一届国际学习表征会议, 2022.

[30] T. Chen, H. Wang, S. Chen, W. Yu, K. Ma, X. Zhao, D. Yu, and H. Zhang, “Dense x retrieval: What retrieval granularity should we use?” arXiv preprint arXiv:2312.06648, 2023.

[30] T. Chen, H. Wang, S. Chen, W. Yu, K. Ma, X. Zhao, D. Yu, 和 H. Zhang, “密集 x 检索:我们应该使用什么检索粒度?” arXiv 预印本 arXiv:2312.06648, 2023.

[31] F. Luo and M. Surdeanu, “Divide & conquer for entailment-aware multi-hop evidence retrieval,” arXiv preprint arXiv:2311.02616, 2023.

[31] F. Luo 和 M. Surdeanu, “针对蕴含感知的多跳证据检索的分而治之,” arXiv 预印本 arXiv:2311.02616, 2023.

[32] Q. Gou, Z. Xia, B. Yu, H. Yu, F. Huang, Y. Li, and N. Cam-Tu, “Diversify question generation with retrieval-augmented style transfer,” arXiv preprint arXiv:2310.14503, 2023.

[32] Q. Gou, Z. Xia, B. Yu, H. Yu, F. Huang, Y. Li, 和 N. Cam-Tu, “通过检索增强风格转移多样化问题生成,” arXiv 预印本 arXiv:2310.14503, 2023.

[33] Z. Guo, S. Cheng, Y. Wang, P. Li, and Y. Liu, “Prompt-guided retrieval augmentation for non-knowledge-intensive tasks,” arXiv preprint arXiv:2305.17653, 2023.

[33] Z. Guo, S. Cheng, Y. Wang, P. Li, 和 Y. Liu, “针对非知识密集型任务的提示引导检索增强,” arXiv 预印本 arXiv:2305.17653, 2023.

[34] Z. Wang, J. Araki, Z. Jiang, M. R. Parvez, and G. Neubig, “Learning to filter context for retrieval-augmented generation,” arXiv preprint arXiv:2311.08377, 2023.

[34] Z. Wang, J. Araki, Z. Jiang, M. R. Parvez, 和 G. Neubig, “学习过滤上下文以进行检索增强生成,” arXiv 预印本 arXiv:2311.08377, 2023.

[35] M. Seo, J. Baek, J. Thorne, and S. J. Hwang, “Retrieval-augmented data augmentation for low-resource domain tasks,” arXiv preprint arXiv:2402.13482, 2024.

[35] M. Seo, J. Baek, J. Thorne, 和 S. J. Hwang, “用于低资源领域任务的检索增强数据增强,” arXiv 预印本 arXiv:2402.13482, 2024.

[36] Y. Ma, Y. Cao, Y. Hong, and A. Sun, “Large language model is not a good few-shot information extractor, but a good reranker for hard samples!” arXiv preprint arXiv:2303.08559, 2023.

[36] Y. Ma, Y. Cao, Y. Hong, 和 A. Sun, “大型语言模型不是一个好的少量信息提取器,但对于困难样本是一个好的重排序器!” arXiv 预印本 arXiv:2303.08559, 2023.

[37] X. Du and H. Ji, “Retrieval-augmented generative question answering for event argument extraction,” arXiv preprint arXiv:2211.07067, 2022.

[37] X. Du 和 H. Ji,“用于事件论元提取的检索增强生成式问答,”arXiv 预印本 arXiv:2211.07067,2022。

[38] L. Wang, N. Yang, and F. Wei, “Learning to retrieve in-context examples for large language models,” arXiv preprint arXiv:2307.07164, 2023.

[38] L. Wang, N. Yang, 和 F. Wei, “为大型语言模型学习检索上下文示例,” arXiv 预印本 arXiv:2307.07164, 2023.

[39] S. Rajput, N. Mehta, A. Singh, R. H. Keshavan, T. Vu, L. Heldt, L. Hong, Y. Tay, V. Q. Tran, J. Samost et al., “Recommender systems with generative retrieval,” arXiv preprint arXiv:2305.05065, 2023.

[39] S. Rajput, N. Mehta, A. Singh, R. H. Keshavan, T. Vu, L. Heldt, L. Hong, Y. Tay, V. Q. Tran, J. Samost 等,“具有生成检索的推荐系统,”arXiv 预印本 arXiv:2305.05065,2023。

[40] B. Jin, H. Zeng, G. Wang, X. Chen, T. Wei, R. Li, Z. Wang, Z. Li, Y. Li, H. Lu et al., “Language models as semantic indexers,” arXiv preprint arXiv:2310.07815, 2023.

[40] B. Jin, H. Zeng, G. Wang, X. Chen, T. Wei, R. Li, Z. Wang, Z. Li, Y. Li, H. Lu 等, “语言模型作为语义索引器,” arXiv 预印本 arXiv:2310.07815, 2023.

[41] R. Anantha, T. Bethi, D. Vodianik, and S. Chappidi, “Context tuning for retrieval augmented generation,” arXiv preprint arXiv:2312.05708, 2023.

[41] R. Anantha, T. Bethi, D. Vodianik, 和 S. Chappidi, “用于检索增强生成的上下文调优,” arXiv 预印本 arXiv:2312.05708, 2023.

[42] G. Izacard, P. Lewis, M. Lomeli, L. Hosseini, F. Petroni, T. Schick, J. Dwivedi-Yu, A. Joulin, S. Riedel, and E. Grave, “Few-shot learning with retrieval augmented language models,” arXiv preprint arXiv:2208.03299, 2022.

[42] G. Izacard, P. Lewis, M. Lomeli, L. Hosseini, F. Petroni, T. Schick, J. Dwivedi-Yu, A. Joulin, S. Riedel, 和 E. Grave, “使用检索增强语言模型的少量学习,” arXiv 预印本 arXiv:2208.03299, 2022.

[43] J. Huang, W. Ping, P. Xu, M. Shoeybi, K. C.-C. Chang, and B. Catanzaro, “Raven: In-context learning with retrieval augmented encoderdecoder language models,” arXiv preprint arXiv:2308.07922, 2023.

[43] J. Huang, W. Ping, P. Xu, M. Shoeybi, K. C.-C. Chang, 和 B. Catanzaro, “Raven: 通过检索增强的编码解码语言模型进行上下文学习,” arXiv 预印本 arXiv:2308.07922, 2023.

-

Corresponding Author.Email haofen.wang @ tongji.edu.cn

通讯作者.电子邮件 haofen.wang @ tongji.edu.cn

Resources are available at https://github.com/Tongji-KGLLM/ RAG-Survey

资源可在 https://github.com/Tongji-KGLLM/ RAG-Survey 获取

406

406

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?