yolov5 区域识别具体实现

YOLOv5(You Only Look Once version 5)是目标检测算法 YOLO(You Only Look Once)的最新版本,由美国加州大学戴维斯分校研究人员 Alexey Bochkovskiy, Chien-Yao Wang 和 Hong-Yuan Mark Liao 开发。它使用深度学习技术,结合卷积神经网络和目标检测技术,能够在实时视频中识别和定位多个目标,包括人、车、动物等。相比前几个版本,YOLOv5的性能提升了很多,速度更快,精度更高,并且支持多种架构。

在YOLOv5中,区域识别的具体实现可以通过以下步骤完成:

1.数据预处理:首先,需要对输入的图像进行预处理,将其缩放到固定的尺寸大小,并将像素值归一化到0到1的范围内。此外,还需要进行数据增强的操作,包括随机裁剪、旋转、翻转等,以增加训练数据的多样性和鲁棒性。

2.网络结构:YOLOv5采用了一种基于骨干网络的轻量级网络结构,即CSPDarknet。该网络结构由多个连续的CSP块组成,每个CSP块由一个卷积层和多个残差块组成,其中残差块内部也包含了CSP结构。这种设计可以使得模型在保证高精度的情况下,具有更高的速度和更小的模型体积。

3.损失函数:YOLOv5采用了一种新的损失函数——CIoU loss,用于优化检测框的预测结果。具体来说,CIoU loss包括目标置信度损失(objectness loss)、分类损失(classification loss)和位置损失(box regression loss),其中位置损失部分还包括了一些特殊的技巧,如GIoU、DIoU等。

4.预测与后处理:在训练好模型后,需要对测试图像进行预测,并对预测结果进行后处理,包括非极大值抑制(NMS)、置信度阈值过滤、目标尺寸还原等操作,最终得到检测结果。

以下是使用yolov5进行目标检测和区域识别的大致代码流程:

import cv2

import torch

import numpy as np

from yolov5 import detect

from PIL import Image

载入模型

model = torch.hub.load(‘ultralytics/yolov5’, ‘yolov5s’)

定义类别信息

class_names = [‘person’, ‘car’, ‘bicycle’, ‘motorcycle’, ‘truck’, ‘bus’, ‘train’]

读取图片

image = cv2.imread(‘test.jpg’)

进行目标检测

results = model(image)

提取预测结果

pred_boxes = results.xyxy[0].numpy()

pred_classes = results.pred[0].numpy()

遍历每个预测结果,进行区域识别

for i in range(len(pred_boxes)):

box = pred_boxes[i]

label = class_names[int(pred_classes[i])]

xmin, ymin, xmax, ymax = box

# 提取目标区域

roi = image[int(ymin):int(ymax), int(xmin):int(xmax)]

# 进行区域识别,以车辆为例

if label in [‘car’, ‘truck’, ‘bus’]:

# 转换为PIL格式

roi = Image.fromarray(cv2.cvtColor(roi, cv2.COLOR_BGR2RGB))

# 进行分类,这里需要自己定义车辆分类器

class_id = classify_car(roi)

# 输出结果

print(‘Found a {} with class id {} in region ({}, {}, {}, {})’.format(label, class_id, xmin, ymin, xmax, ymax))

其中, classify_car() 函数需要自己定义。可以使用机器学习或深度学习的方法对车辆进行分类。常用的方法包括传统的SVM、KNN和最近比较火的CNN模型等

## 以下根据yolov5原detect.py文件做的更改

对需要识别的区域添加mask蒙版,完整代码如下:

```python

import argparse

import base64

import os

import platform

import sys

import datetime

import time

from pathlib import Path

import numpy as np

import pymysql as ml

import torch

from models.experimental import attempt_load

from utils.datasets import letterbox

FILE = Path(__file__).resolve()

ROOT = FILE.parents[0] # YOLOv5 root directory

if str(ROOT) not in sys.path:

sys.path.append(str(ROOT)) # add ROOT to PATH

ROOT = Path(os.path.relpath(ROOT, Path.cwd())) # relative

from models.common import DetectMultiBackend

from utils.dataloaders import IMG_FORMATS, VID_FORMATS, LoadImages, LoadScreenshots, LoadStreams

from utils.general import (LOGGER, Profile, check_file, check_img_size, check_imshow, check_requirements, colorstr, cv2,

increment_path, non_max_suppression, print_args, scale_boxes, strip_optimizer, xyxy2xywh,

set_logging, apply_classifier)

from utils.plots import Annotator, colors, save_one_box

from utils.torch_utils import select_device, smart_inference_mode

@smart_inference_mode()

def run(

weights=ROOT / 'yolov5s.pt', # model path or triton URL

source=ROOT / 'data/images', # file/dir/URL/glob/screen/0(webcam)

data=ROOT / 'data/coco128.yaml', # dataset.yaml path

imgsz=(480, 640), # inference size (height, width)

conf_thres=0.45, # confidence threshold

iou_thres=0.65, # NMS IOU threshold

max_det=1000, # maximum detections per image

device='', # cuda device, i.e. 0 or 0,1,2,3 or cpu

view_img=False, # show results

save_txt=False, # save results to *.txt

save_conf=False, # save confidences in --save-txt labels

save_crop=False, # save cropped prediction boxes

nosave=False, # do not save images/videos

classes=None, # filter by class: --class 0, or --class 0 2 3

agnostic_nms=False, # class-agnostic NMS

augment=False, # augmented inference

visualize=False, # visualize features

update=False, # update all models

project=ROOT / 'runs/detect', # save results to project/name

name='exp', # save results to project/name

exist_ok=False, # existing project/name ok, do not increment

line_thickness=3, # bounding box thickness (pixels)

hide_labels=False, # hide labels

hide_conf=False, # hide confidences

half=False, # use FP16 half-precision inference

dnn=False, # use OpenCV DNN for ONNX inference

vid_stride=1, # video frame-rate stride

):

source = str(source)

save_img = not nosave and not source.endswith('.txt') # save inference images

is_file = Path(source).suffix[1:] in (IMG_FORMATS + VID_FORMATS)

is_url = source.lower().startswith(('rtsp://', 'rtmp://', 'http://', 'https://'))

webcam = source.isnumeric() or source.endswith('.streams') or (is_url and not is_file)

screenshot = source.lower().startswith('screen')

if is_url and is_file:

source = check_file(source) # download

# Directories

save_dir = increment_path(Path(project) / name, exist_ok=exist_ok) # increment run

(save_dir / 'labels' if save_txt else save_dir).mkdir(parents=True, exist_ok=True) # make dir

# Load model

device = select_device(device)

model = DetectMultiBackend(weights, device=device, dnn=dnn, data=data, fp16=half)

stride, names, pt = model.stride, model.names, model.pt

imgsz = check_img_size(imgsz, s=stride) # check image size

# Dataloader

bs = 1 # batch_size

# 判断是摄像机来源还是图片

is_camera = False

if webcam:

is_camera = True

view_img = check_imshow(warn=True)

dataset = LoadStreams(source, img_size=imgsz, stride=stride, auto=pt, vid_stride=vid_stride)

bs = len(dataset)

elif screenshot:

dataset = LoadScreenshots(source, img_size=imgsz, stride=stride, auto=pt)

else:

dataset = LoadImages(source, img_size=imgsz, stride=stride, auto=pt, vid_stride=vid_stride)

vid_path, vid_writer = [None] * bs, [None] * bs

# Run inference

model.warmup(imgsz=(1 if pt or model.triton else bs, 3, *imgsz)) # warmup

seen, windows, dt = 0, [], (Profile(), Profile(), Profile())

for path, im, im0s, vid_cap, s in dataset:

# =====================画mask蒙版============================================================

# mask for certain region

# 1,2,3,4 分别对应左上,右上,右下,左下四个点

hl1 = 4.8 / 10 # 监测区域高度距离图片顶部比例(左上)

hl2 = 4.6 / 10 # 监测区域高度距离图片顶部比例(右上)

hl3 = 9.9 / 10 # 监测区域高度距离图片顶部比例(右下)

hl4 = 9.9 / 10 # 监测区域高度距离图片顶部比例(左下)

hl5 = 6.9 / 10 # 监测区域高度距离图片顶部比例(左下)

wl1 = 5.4 / 10 # 监测区域高度距离图片左部比例(上水平距左)

wl2 = 9.95 / 10 # 监测区域高度距离图片左部比例(上水平距右)

wl3 = 9.9 / 10 # 监测区域高度距离图片左部比例(下水平距右)

wl4 = 4.8 / 10 # 监测区域高度距离图片左部比例(下水平距左)

wl5 = 3.8 / 10 # 监测区域高度距离图片左部比例(下水平距左)

if webcam:

for b in range(0, im.shape[0]):

mask = np.zeros([im[b].shape[1], im[b].shape[2]], dtype=np.uint8)

# mask[round(img[b].shape[1] * hl1):img[b].shape[1], round(img[b].shape[2] * wl1):img[b].shape[2]] = 255

pts = np.array([[int(im[b].shape[2] * wl1), int(im[b].shape[1] * hl1)], # pts1

[int(im[b].shape[2] * wl2), int(im[b].shape[1] * hl2)], # pts2

[int(im[b].shape[2] * wl3), int(im[b].shape[1] * hl3)], # pts3

[int(im[b].shape[2] * wl4), int(im[b].shape[1] * hl4)],

[int(im[b].shape[2] * wl5), int(im[b].shape[1] * hl5)]

], np.int32)

mask = cv2.fillPoly(mask, [pts], (255, 255, 255))

imgc = im[b].transpose((1, 2, 0))

imgc = cv2.add(imgc, np.zeros(np.shape(imgc), dtype=np.uint8), mask=mask)

# cv2.imshow('1',imgc)

im[b] = imgc.transpose((2, 0, 1))

else:

mask = np.zeros([im.shape[1], im.shape[2]], dtype=np.uint8)

pts = np.array([[int(im.shape[2] * wl1), int(im.shape[1] * hl1)], # pts1

[int(im.shape[2] * wl2), int(im.shape[1] * hl2)], # pts2

[int(im.shape[2] * wl3), int(im.shape[1] * hl3)], # pts3

[int(im.shape[2] * wl4), int(im.shape[1] * hl4)],

[int(im.shape[2] * wl5), int(im.shape[1] * hl5)]

], np.int32)

mask = cv2.fillPoly(mask, [pts], (255, 255, 255))

img = im.transpose((1, 2, 0))

img = cv2.add(img, np.zeros(np.shape(img), dtype=np.uint8), mask=mask)

img = img.transpose((2, 0, 1))

# =====================画mask蒙版============================================================

with dt[0]:

im = torch.from_numpy(im).to(model.device)

im = im.half() if model.fp16 else im.float() # uint8 to fp16/32

im /= 255 # 0 - 255 to 0.0 - 1.0

if len(im.shape) == 3:

im = im[None] # expand for batch dim

# Inference

with dt[1]:

visualize = increment_path(save_dir / Path(path).stem, mkdir=True) if visualize else False

pred = model(im, augment=augment, visualize=visualize)

# NMS

with dt[2]:

pred = non_max_suppression(pred, conf_thres, iou_thres, classes, agnostic_nms, max_det=max_det)

# 置信度 为设置阈值

confidence = conf_thres

# Second-stage classifier (optional)

# pred = utils.general.apply_classifier(pred, classifier_model, im, im0s)

# Process predictions

for i, det in enumerate(pred): # per image

seen += 1

if webcam: # batch_size >= 1

p, im0, frame = path[i], im0s[i].copy(), dataset.count

s += f'{i}: '

device_id = f'{i}' # 设备列表index

device_name = f'{p}'

device_ip = device_name.split("/")[2].split("_")[-1]

# print(device_ip)

# =====================显示mask蒙版============================================================

pts = np.array([[int(im0.shape[1] * wl1), int(im0.shape[0] * hl1)], # pts1

[int(im0.shape[1] * wl2), int(im0.shape[0] * hl2)], # pts2

[int(im0.shape[1] * wl3), int(im0.shape[0] * hl3)], # pts3

[int(im0.shape[1] * wl4), int(im0.shape[0] * hl4)],

[int(im0.shape[1] * wl5), int(im0.shape[0] * hl5)]

], np.int32) # pts4

# pts = pts.reshape((-1, 1, 2))

zeros = np.zeros((im0.shape), dtype=np.uint8)

mask = cv2.fillPoly(zeros, [pts], color=(0, 165, 255))

im0 = cv2.addWeighted(im0, 1, mask, 0.2, 0)

cv2.polylines(im0, [pts], True, (255, 255, 0), 3)

# =====================显示mask蒙版============================================================

else:

p, im0, frame = path, im0s.copy(), getattr(dataset, 'frame', 0)

# =====================显示mask蒙版============================================================

cv2.putText(im0, "Detection_Region", (int(im0.shape[1] * wl1 - 5), int(im0.shape[0] * hl1 - 5)),

cv2.FONT_HERSHEY_SIMPLEX,

1.0, (255, 255, 0), 2, cv2.LINE_AA)

pts = np.array([[int(im0.shape[1] * wl1), int(im0.shape[0] * hl1)], # pts1

[int(im0.shape[1] * wl2), int(im0.shape[0] * hl2)], # pts2

[int(im0.shape[1] * wl3), int(im0.shape[0] * hl3)], # pts3

[int(im0.shape[1] * wl4), int(im0.shape[0] * hl4)],

[int(im0.shape[1] * wl5), int(im0.shape[0] * hl5)]

], np.int32) # pts4

zeros = np.zeros((im0.shape), dtype=np.uint8)

mask = cv2.fillPoly(zeros, [pts], color=(0, 165, 255))

im0 = cv2.addWeighted(im0, 1, mask, 0.2, 0)

cv2.polylines(im0, [pts], True, (255, 255, 0), 3)

# =====================显示mask蒙版============================================================

p = Path(p) # to Path

save_path = str(save_dir / p.name) # im.jpg

txt_path = str(save_dir / 'labels' / p.stem) + ('' if dataset.mode == 'image' else f'_{frame}') # im.txt

####################################保存实时检测图片路径##########################################

pic_dir = str(save_dir) + '/pic'

if not os.path.exists(pic_dir):

os.makedirs(pic_dir)

pic_path = pic_dir + '\\' + str(p.stem) + ('' if dataset.mode == 'image' else f'_{frame}')

##############################################################################################

s += '%gx%g ' % im.shape[2:] # print string

img_size = '%gx%g ' % im.shape[2:] # 图片尺寸

count = 0

class_name = ''

pic_str = ''

gn = torch.tensor(im0.shape)[[1, 0, 1, 0]] # normalization gain whwh

imc = im0.copy() if save_crop else im0 # for save_crop

annotator = Annotator(im0, line_width=line_thickness, example=str(names))

if len(det):

# Rescale boxes from img_size to im0 size

det[:, :4] = scale_boxes(im.shape[2:], det[:, :4], im0.shape).round()

# Print results

for c in det[:, 5].unique():

n = (det[:, 5] == c).sum() # detections per class

count = f"{n}"

class_name = 'invade'

s += f"{n} {names[int(c)]}{'s' * (n > 1)}, " # add to string

# Write results

for *xyxy, conf, cls in reversed(det):

if save_txt: # Write to

xywh = (xyxy2xywh(torch.tensor(xyxy).view(1, 4)) / gn).view(-1).tolist() # normalized xywh

line = (cls, *xywh, conf) if save_conf else (cls, *xywh) # label format

with open(f'{txt_path}.txt', 'a') as f:

f.write(names[int(cls)] + '\t')

f.write(('%g ' * len(line)).rstrip() % line + '\n')

if save_img or save_crop or view_img: # Add bbox to image

c = int(cls) # integer class

label = None if hide_labels else (names[c] if hide_conf else f'{names[c]} {conf:.2f}')

annotator.box_label(xyxy, label, color=colors(c, True))

##############################只保存含目标的实时检测图片#################################

if is_camera:

pic = (int(xyxy[0].item()) + int(xyxy[2].item())) / 2

if pic != 0:

cv2.imwrite(pic_path + f'{p.stem}.jpg', im0)

else:

im1 = cv2.imread('no.jpg', 1)

cv2.imwrite(pic_path + f'{p.stem}.jpg', im1)

#####################################################################################

if save_crop:

save_one_box(xyxy, imc, file=save_dir / 'crops' / names[c] / f'{p.stem}.jpg', BGR=True)

# Stream results

im0 = annotator.result()

if view_img:

if platform.system() == 'Linux' and p not in windows:

windows.append(p)

cv2.namedWindow(str(p), cv2.WINDOW_NORMAL | cv2.WINDOW_KEEPRATIO) # allow window resize (Linux)

cv2.resizeWindow(str(p), im0.shape[1], im0.shape[0])

# cv2.imshow(str(p), im0)

cv2.waitKey(1) # 1 millisecond

# Save results (image with detections)

if save_img:

if dataset.mode == 'image':

cv2.imwrite(save_path, im0)

else: # 'video' or 'stream'

if vid_path[i] != save_path: # new video

vid_path[i] = save_path

if isinstance(vid_writer[i], cv2.VideoWriter):

vid_writer[i].release() # release previous video writer

if vid_cap: # video

fps = vid_cap.get(cv2.CAP_PROP_FPS)

w = int(vid_cap.get(cv2.CAP_PROP_FRAME_WIDTH))

h = int(vid_cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

else: # stream

fps, w, h = 30, im0.shape[1], im0.shape[0]

save_path = str(Path(save_path).with_suffix('.mp4')) # force *.mp4 suffix on results videos

vid_writer[i] = cv2.VideoWriter(save_path, cv2.VideoWriter_fourcc(*'mp4v'), fps, (w, h))

vid_writer[i].write(im0)

time.sleep(3)

# Print time (inference-only)

LOGGER.info(f"{s}{'' if len(det) else '(no detections), '}{dt[1].dt * 1E3:.1f}ms")

# Print results

t = tuple(x.t / seen * 1E3 for x in dt) # speeds per image

LOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {(1, 3, *imgsz)}' % t)

if save_txt or save_img:

s = f"\n{len(list(save_dir.glob('labels/*.txt')))} labels saved to {save_dir / 'labels'}" if save_txt else ''

LOGGER.info(f"Results saved to {colorstr('bold', save_dir)}{s}")

if update:

strip_optimizer(weights[0]) # update model (to fix SourceChangeWarning)

def parse_opt():

parser = argparse.ArgumentParser()

parser.add_argument('--weights', nargs='+', type=str, default='weights/best.pt', help='model path or triton URL')

parser.add_argument('--source', type=str, default='file.streams', help='file/dir/URL/glob/screen/0(webcam)')

parser.add_argument('--data', type=str, default=ROOT / 'data/hat.yaml', help='(optional) dataset.yaml path')

parser.add_argument('--imgsz', '--img', '--img-size', nargs='+', type=int, default=[640], help='inference size h,w')

parser.add_argument('--conf-thres', type=float, default=0.8, help='confidence threshold')

parser.add_argument('--iou-thres', type=float, default=0.45, help='NMS IoU threshold')

parser.add_argument('--max-det', type=int, default=1000, help='maximum detections per image')

parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')

parser.add_argument('--view-img', action='store_true', help='show results')

parser.add_argument('--save-txt', action='store_true', help='save results to *.txt')

parser.add_argument('--save-conf', action='store_true', default=True, help='save confidences in --save-txt labels')

parser.add_argument('--save-crop', action='store_true', help='save cropped prediction boxes')

parser.add_argument('--nosave', action='store_true', help='do not save images/videos')

parser.add_argument('--classes', nargs='+', type=int, help='filter by class: --classes 0, or --classes 0 2 3')

parser.add_argument('--agnostic-nms', action='store_true', help='class-agnostic NMS')

parser.add_argument('--augment', action='store_true', help='augmented inference')

parser.add_argument('--visualize', action='store_true', help='visualize features')

parser.add_argument('--update', action='store_true', help='update all models')

parser.add_argument('--project', default=ROOT / 'runs/detect', help='save results to project/name')

parser.add_argument('--name', default='exp', help='save results to project/name')

parser.add_argument('--exist-ok', action='store_true', help='existing project/name ok, do not increment')

parser.add_argument('--line-thickness', default=3, type=int, help='bounding box thickness (pixels)')

parser.add_argument('--hide-labels', default=False, action='store_true', help='hide labels')

parser.add_argument('--hide-conf', default=False, action='store_true', help='hide confidences')

parser.add_argument('--half', action='store_true', help='use FP16 half-precision inference')

parser.add_argument('--dnn', action='store_true', help='use OpenCV DNN for ONNX inference')

parser.add_argument('--vid-stride', type=int, default=1, help='video frame-rate stride')

opt = parser.parse_args()

opt.imgsz *= 2 if len(opt.imgsz) == 1 else 1 # expand

print_args(vars(opt))

return opt

def main(opt):

check_requirements(exclude=('tensorboard', 'thop'))

run(**vars(opt))

if __name__ == '__main__':

opt = parse_opt()

main(opt)

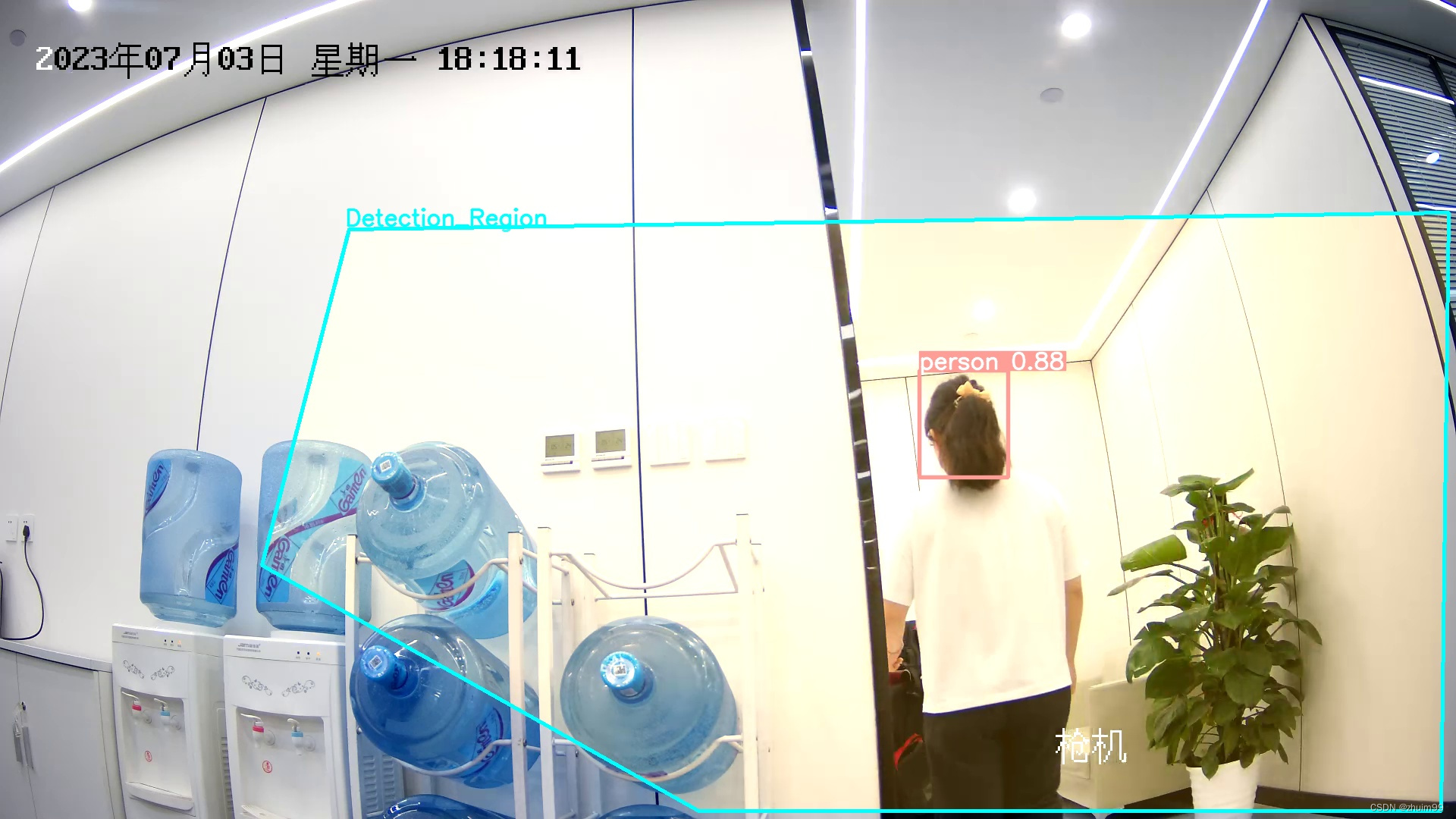

效果展示

1943

1943

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?