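Learning objectives:

- Debug the HMN project code

- Survey CVPR papers related to video understanding

Learning content:

- HMN project code: save the caption predictions and the corresponding ground truth to local files.

- HMN project code: find the id of each video and save the id-to-caption mapping to local files.

- Survey six CVPR papers on image/video captioning, including HMN and SwinBERT, which I have already presented.

Study period: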

- 10.31 ~ 11.5

Study notes:

HMN project code: saving the caption predictions and the corresponding ground truth locally

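The HMN project only prints the model's scores on the evaluation data. To see how the generated predictions differ from the original videos and the ground truth, it helps to output more information. This includes the id of each video, the model's prediction for that video, and the ground-truth captions.

With that information:

- Comparing each prediction with its references shows which captions are inaccurate.
- The video id of each inaccurate caption leads back to the original video in the dataset.
- Watching that video allows a manual analysis of the factors that tend to hurt the predictions.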

First, look at the code in HMN's `eval.py`:

```python
from collections import OrderedDict

# Assumed: the four scorers follow the pycocoevalcap interface; the HMN repo
# may import them from its own module instead.
from pycocoevalcap.bleu.bleu import Bleu
from pycocoevalcap.cider.cider import Cider
from pycocoevalcap.meteor.meteor import Meteor
from pycocoevalcap.rouge.rouge import Rouge


def language_eval(sample_seqs, groundtruth_seqs):
    assert len(sample_seqs) == len(groundtruth_seqs), 'length of sampled seqs is different from that of groundtruth seqs!'

    # index -> list of reference captions / one-element list holding the predicted caption
    references, predictions = OrderedDict(), OrderedDict()
    for i in range(len(groundtruth_seqs)):
        references[i] = [groundtruth_seqs[i][j] for j in range(len(groundtruth_seqs[i]))]
    for i in range(len(sample_seqs)):
        predictions[i] = [sample_seqs[i]]

    predictions = {i: predictions[i] for i in range(len(sample_seqs))}
    references = {i: references[i] for i in range(len(groundtruth_seqs))}

    avg_bleu_score, bleu_score = Bleu(4).compute_score(references, predictions)
    print('avg_bleu_score == ', avg_bleu_score)
    avg_cider_score, cider_score = Cider().compute_score(references, predictions)
    print('avg_cider_score == ', avg_cider_score)
    avg_meteor_score, meteor_score = Meteor().compute_score(references, predictions)
    print('avg_meteor_score == ', avg_meteor_score)
    avg_rouge_score, rouge_score = Rouge().compute_score(references, predictions)
    print('avg_rouge_score == ', avg_rouge_score)

    return {'BLEU': avg_bleu_score, 'CIDEr': avg_cider_score, 'METEOR': avg_meteor_score, 'ROUGE': avg_rouge_score}
```
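The sketch below shows the two dicts that `language_eval` hands to the scorers. It uses sample 0, which is discussed later in these notes. The helper name `to_scorer_format` is hypothetical. The single reference caption is only for illustration; the real dataset has several references per video.

```python
from typing import Dict, List


def to_scorer_format(sample_seqs: List[str], groundtruth_seqs: List[List[str]]):
    """Mirror the dict layout built inside language_eval (hypothetical helper)."""
    assert len(sample_seqs) == len(groundtruth_seqs)
    # position in the list -> all reference captions for that sample
    references: Dict[int, List[str]] = {i: list(refs) for i, refs in enumerate(groundtruth_seqs)}
    # position in the list -> a one-element list holding the predicted caption
    predictions: Dict[int, List[str]] = {i: [seq] for i, seq in enumerate(sample_seqs)}
    return references, predictions


refs, preds = to_scorer_format(["a person is flying a plane"],
                               [["a man flying a paper airplane"]])
print(refs)   # {0: ['a man flying a paper airplane']}
print(preds)  # {0: ['a person is flying a plane']}
```

The keys are positions in the list, not video ids. This is why the video id later has to be passed through separately.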

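HMN runs an evaluation after every epoch to assess the current model. For this, `eval.py` computes four metrics: BLEU, CIDEr, METEOR and ROUGE. All four need the model's predictions and the corresponding ground truth as input. Writing out each epoch's predictions and ground truth is therefore straightforward: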

```python
# Requires OrderedDict and the Bleu/Cider/Meteor/Rouge scorers, imported as in eval.py.

def language_eval(sample_seqs, groundtruth_seqs):
    assert len(sample_seqs) == len(groundtruth_seqs), 'length of sampled seqs is different from that of groundtruth seqs!'

    references, predictions = OrderedDict(), OrderedDict()
    for i in range(len(groundtruth_seqs)):
        references[i] = [groundtruth_seqs[i][j] for j in range(len(groundtruth_seqs[i]))]
    for i in range(len(sample_seqs)):
        predictions[i] = [sample_seqs[i]]

    predictions = {i: predictions[i] for i in range(len(sample_seqs))}
    references = {i: references[i] for i in range(len(groundtruth_seqs))}

    # Next, save the predictions and ground truth: each evaluation appends
    # one str(dict) line followed by a separator line of asterisks.
    print("saving data to txt files....")
    with open('result_predictions.txt', 'a') as f1:
        f1.write(str(predictions) + '\n')
        f1.write('************************************\n')
    with open('result_references.txt', 'a') as f2:
        f2.write(str(references) + '\n')
        f2.write('************************************\n')
    print("data saved successfully!")

    avg_bleu_score, bleu_score = Bleu(4).compute_score(references, predictions)
    print('avg_bleu_score == ', avg_bleu_score)
    avg_cider_score, cider_score = Cider().compute_score(references, predictions)
    print('avg_cider_score == ', avg_cider_score)
    avg_meteor_score, meteor_score = Meteor().compute_score(references, predictions)
    print('avg_meteor_score == ', avg_meteor_score)
    avg_rouge_score, rouge_score = Rouge().compute_score(references, predictions)
    print('avg_rouge_score == ', avg_rouge_score)

    return {'BLEU': avg_bleu_score, 'CIDEr': avg_cider_score, 'METEOR': avg_meteor_score, 'ROUGE': avg_rouge_score}
```
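The dicts are written with `str()`, so they can be parsed back with `ast.literal_eval` from the standard library. The sketch below assumes the layout produced by the saving code: one dict per line, followed by a line of asterisks, appended once per evaluation. `load_eval_dumps` is a hypothetical helper name.

```python
import ast
from typing import Dict, List


def load_eval_dumps(path: str) -> List[Dict[int, List[str]]]:
    """Parse a result_*.txt file: one str(dict) per evaluation, separated by '*' lines."""
    dumps = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if not line or set(line) == {"*"}:
                continue  # skip blank lines and the asterisk separator lines
            dumps.append(ast.literal_eval(line))  # safely evaluates the dict literal
    return dumps


# The last entry belongs to the most recent evaluation (e.g. the final epoch):
# predictions = load_eval_dumps("result_predictions.txt")[-1]
# references = load_eval_dumps("result_references.txt")[-1]
```

Because the files are opened in append mode, every epoch adds another entry. Taking `[-1]` selects the latest one.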

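I have deployed the project on a server, so the results are saved in the project directory there. Two txt files are generated. They can be viewed with Xftp 7 under the server path `/anaconda3/envs/HMN_/HMN`: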

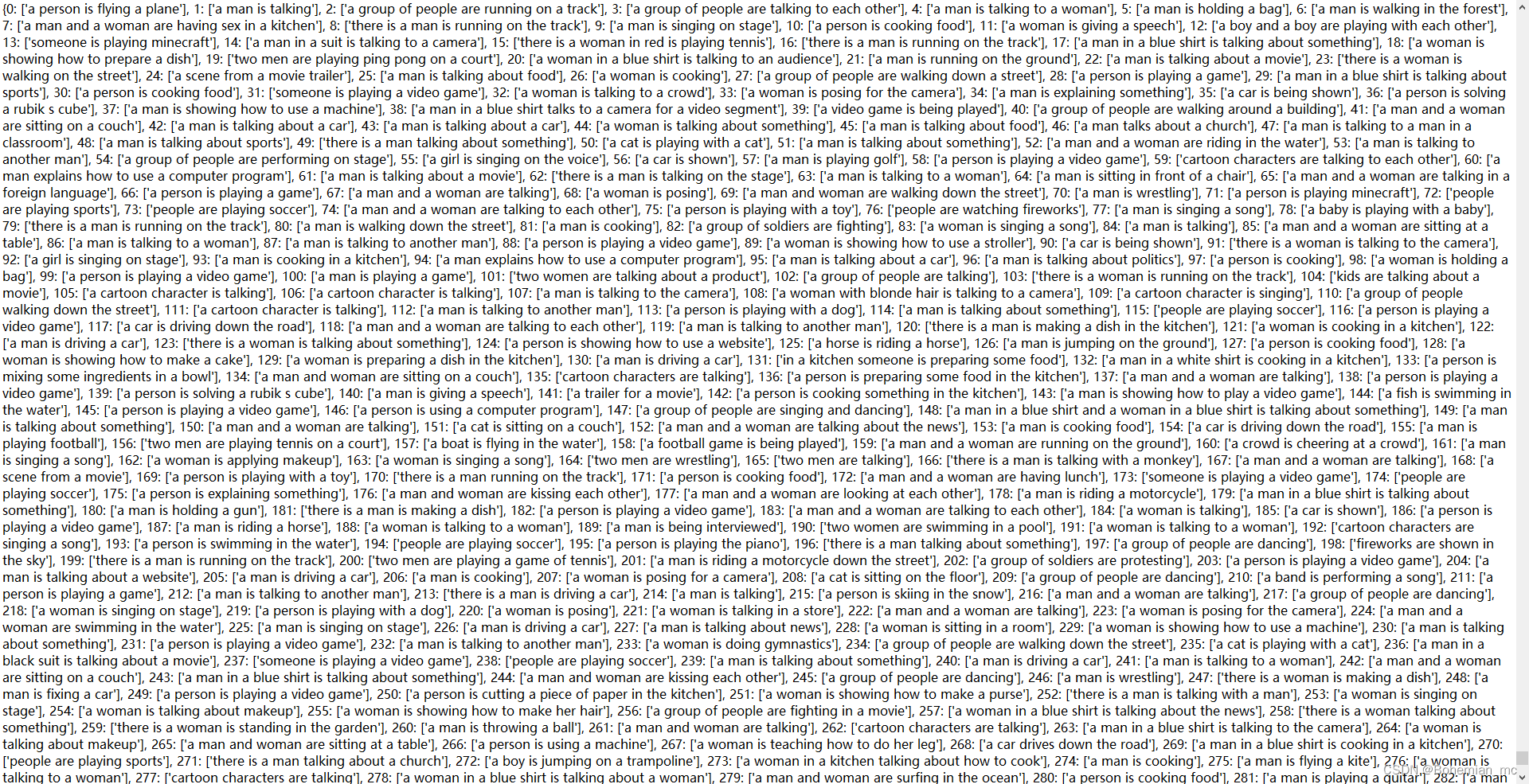

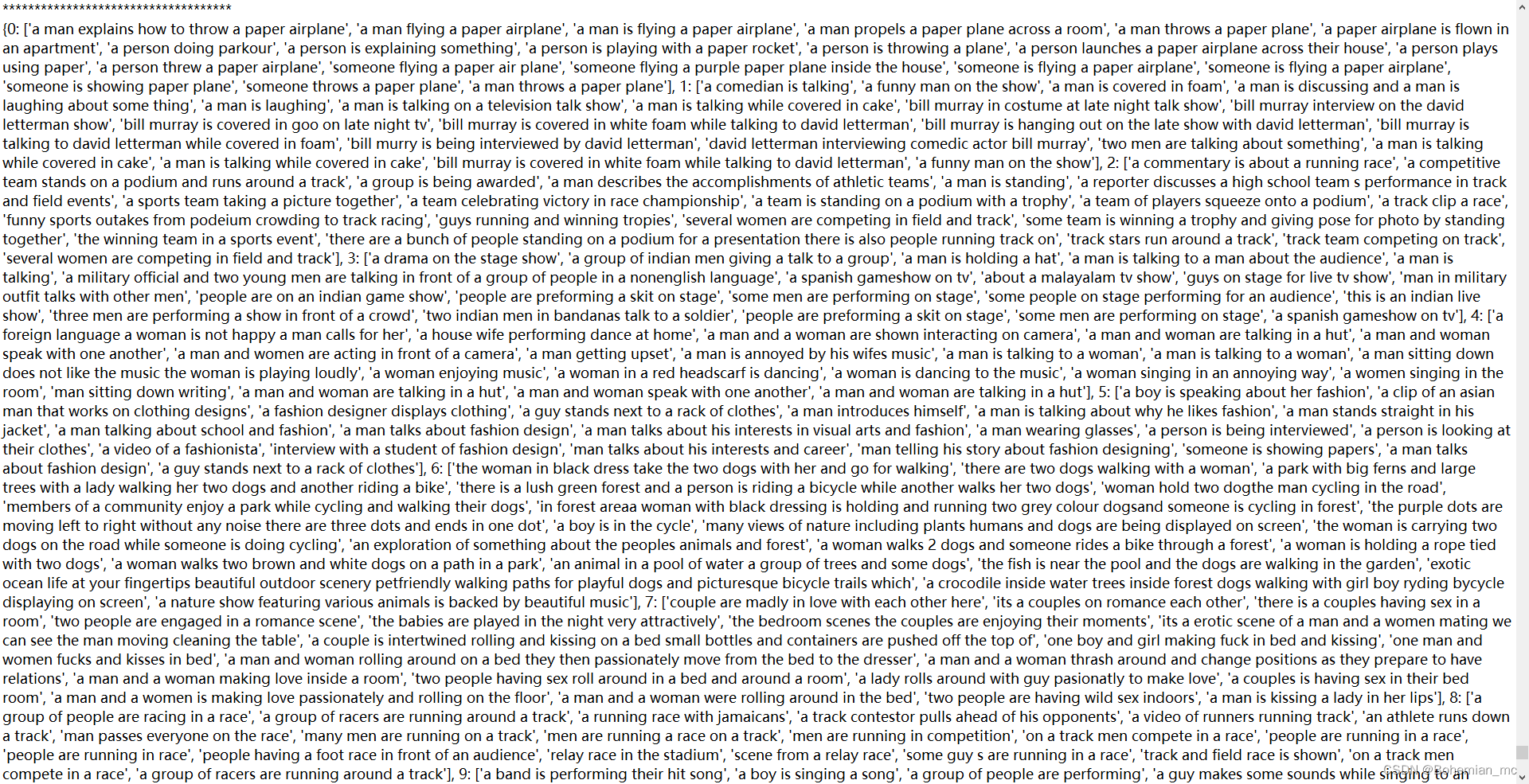

Opening the two txt files shows the saved content:

- result_predictions.txt

- result_references.txt

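Take the result saved under index 0 as an example:

- Prediction: a person is flying a plane

- Ground truth: a man flying a paper airplane

The predicted caption is already very close to the reference, although it misses that the plane is a paper one. Many other test cases look similar, which suggests that the model performs well overall.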

HMN project code: finding each video's id and saving the id-to-caption mapping locally

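Printing the predictions and ground truth shows whether the final results meet expectations. For poorly predicted cases, however, comparing with the ground truth alone reveals few details.

A practical approach is to trace such cases back to their videos in the dataset:

1. Use the video instead of the caption as the reference.
2. Analyse manually why the prediction is poor.
3. Aggregate these findings to look for ways to improve prediction accuracy.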
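Choosing which videos to watch first can be automated by scoring each sample on its own. The sketch below assumes a dict `vid -> (prediction, references)` is already available.

As a stand-in metric it uses a simple unigram F1 against the best-matching reference. This is not BLEU or CIDEr. The pycocoevalcap-style scorers called in `language_eval` already return per-sample scores as their second value (`bleu_score`, `cider_score`, ...), and those could be used instead. The function names and the `demo` data are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def unigram_f1(hyp: str, ref: str) -> float:
    """Token-level F1 between two captions (a simple stand-in metric)."""
    h, r = hyp.split(), ref.split()
    overlap = sum((Counter(h) & Counter(r)).values())  # clipped token matches
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(h), overlap / len(r)
    return 2 * precision * recall / (precision + recall)


def worst_predictions(results: Dict[str, Tuple[str, List[str]]], k: int = 10):
    """Return the k (vid, score) pairs with the lowest best-reference score."""
    scored = [(max(unigram_f1(pred, ref) for ref in refs), vid)
              for vid, (pred, refs) in results.items()]
    scored.sort()  # ascending: worst predictions first
    return [(vid, score) for score, vid in scored[:k]]


# Made-up demo input; only the first pair comes from the example in these notes.
demo = {
    "video0": ("a person is flying a plane", ["a man flying a paper airplane"]),
    "video1": ("a man is cooking", ["a woman is singing on stage"]),
}
print(worst_predictions(demo, k=1))
```

The returned video ids are the ones worth looking up in the dataset first.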

First, look at the part of HMN that collects the predictions and ground truth:

```python
import pickle

# decode_idx, TotalConfigs and language_eval come from the HMN project itself.


def eval_fn(model, loader, device, idx2word, save_on_disk, cfgs: TotalConfigs, vid2groundtruth) -> dict:
    model.eval()
    if save_on_disk:
        result_dict = {}
    predictions, gts = [], []

    for i, (feature2ds, feature3ds, object_feats, object_masks,
            vp_semantics, caption_semantics, numberic_caps, masks,
            captions, nouns_dict_list, vids, vocab_ids, vocab_probs, fillmasks) in enumerate(loader):
        feature2ds = feature2ds.to(device)
        feature3ds = feature3ds.to(device)
        object_feats = object_feats.to(device)
        object_masks = object_masks.to(device)
        vp_semantics = vp_semantics.to(device)
        caption_semantics = caption_semantics.to(device)
        numberic_caps = numberic_caps.to(device)
        masks = masks.to(device)

        pred, seq_probabilities = model.sample(object_feats, object_masks, feature2ds, feature3ds)
        pred = pred.cpu().numpy()
        batch_pred = [decode_idx(single_seq, idx2word, cfgs.dict.eos_idx) for single_seq in pred]
        predictions += batch_pred

        batch_gts = [vid2groundtruth[id] for id in vids] if save_on_disk else [item for item in captions]
        gts += batch_gts

        if save_on_disk:
            assert len(batch_pred) == len(vids), \
                'expect len(batch_pred) == len(vids), ' \
                'but got len(batch_pred) == {} and len(vids) == {}'.format(len(batch_pred), len(vids))
            for vid, pred in zip(vids, batch_pred):
                result_dict[vid] = pred

    model.train()
    score_states = language_eval(sample_seqs=predictions, groundtruth_seqs=gts)

    if save_on_disk:
        with open(cfgs.test.result_path, 'wb') as f:
            pickle.dump(result_dict, f)

    return score_states
```

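During evaluation, the predictions and their ground truth are collected in plain lists, which are passed to `language_eval` without any video ids. A dict (`result_dict`) maps `vid` to the prediction, but only when `save_on_disk` is set, and it is pickled without the ground truth. The model does not need the ids, because the metrics only compare predictions with ground truth.

To analyse the results properly, we need to dig deeper into the code. Data loading is usually implemented in a Python file or function named "dataloader", and this project is no exception: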

```python
# Method of the dataset class in HMN's data-loading code (requires `import torch`).
def __getitem__(self, idx):
    vid = self.corresponding_vid[idx] if (self.mode == 'train' and not self.save_on_disk) or self.is_total else \
        self.video_ids[idx]
    choose_idx = 0

    feature2d = self.backbone_2d_dict[vid]
    feature3d = self.backbone_3d_dict[vid]
    objects = self.objects_dict[vid]

    if (self.mode == 'train' and not self.save_on_disk) or self.is_total:
        numberic_cap, vp_semantics, caption_semantics, nouns, nouns_vec, vocab_ids, vocab_probs, fillmasks = \
            self.total_entries[idx]
    else:
        numberic_cap, vp_semantics, caption_semantics, nouns, nouns_vec = self.vid2language[vid][choose_idx][1:]
        vocab_ids, vocab_probs, fillmasks = None, None, None

    captions = self.vid2captions[vid]
    nouns_dict = {'nouns': nouns, 'vec': torch.FloatTensor(nouns_vec)}

    return torch.FloatTensor(feature2d), torch.FloatTensor(feature3d), torch.FloatTensor(objects), \
        torch.LongTensor(numberic_cap), \
        torch.FloatTensor(vp_semantics), \
        torch.FloatTensor(caption_semantics), captions, nouns_dict, vid, \
        vocab_ids, vocab_probs, fillmasks
```

Among other values, the code above returns:

- `feature2d`: shape `(sample_numb, dim2d)`

- `feature3d`: shape `(sample_numb, dim3d)`

- `objects`: shape `(sample_numb * object_num, dim_obj)` or `(object_num_per_video, dim_obj)`

- `numberic`: shape `(max_caption_len, )`

- `captions`: `List[str]`

- `vid`: `str`
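The `vid` string reaches the evaluation loop because the loader groups each field of the per-sample tuples into its own sequence per batch. The sketch below shows that grouping in pure Python, assuming a transpose along the lines of `zip(*batch)`.

HMN's real collate function clearly does more than this: the loop in `eval_fn` also receives `object_masks` and `masks`, which `__getitem__` does not return. `group_fields` is a hypothetical name.

```python
from typing import Any, List, Sequence, Tuple


def group_fields(batch: List[Sequence[Any]]) -> Tuple[List[Any], ...]:
    """Transpose a list of per-sample tuples into one list per field."""
    return tuple(list(field) for field in zip(*batch))


# Two fake samples shaped like (feature, captions, vid); real samples have 12 fields.
batch = [
    ([0.1, 0.2], ["a man flying a paper airplane"], "video0"),
    ([0.3, 0.4], ["a woman is singing"], "video1"),
]
features, captions, vids = group_fields(batch)
print(vids)  # ['video0', 'video1']
```

Because string fields are passed through rather than converted to tensors, `vids` stays aligned with the batch's predictions and can be collected next to them.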

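The returned values do include the video id (`vid`). During evaluation, however, only the prediction and ground truth of each sample are needed, so the id is not kept.

Two changes follow from this analysis:

- `vid` has to be passed down to the eval function as an extra argument. Each video's id, predicted caption and ground-truth captions can then be written to files with `json.dump`.
- Only the last epoch's video information needs to be saved. The model is tested directly after the final training epoch, and there is no separate test set in the strict sense. An `if` check therefore ensures that the saved results come from the fully trained model.

The final code is as follows (the parameter passing is omitted):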

```python
import json
import os
from collections import OrderedDict

# The Bleu/Cider/Meteor/Rouge scorers are imported as in eval.py.


def language_eval(sample_seqs, groundtruth_seqs, vids_list, is_last_epoch, path_join):
    assert len(sample_seqs) == len(groundtruth_seqs), 'length of sampled seqs is different from that of groundtruth seqs!'

    if is_last_epoch:  # only save once the epoch counter reaches the configured max_epoch
        # Output directory: <directory of path_join>/<base name of path_join without extension>
        path_dirname = os.path.join(os.path.dirname(path_join),
                                    os.path.splitext(os.path.basename(path_join))[0])
        os.makedirs(path_dirname, exist_ok=True)  # create the directory if it does not exist
        print('saving data to ...........................\n', path_dirname)

        # Save predictions, ground truth and video ids; the three lists are aligned by index.
        # Files are joined into the directory (plain string concatenation glued the names onto
        # the base name), and opened with 'w' instead of 'a+' so a re-run overwrites them
        # rather than appending a second JSON document that json.load cannot parse.
        with open(os.path.join(path_dirname, 'sample_seqs.json'), 'w') as f:
            json.dump(sample_seqs, f)
        with open(os.path.join(path_dirname, 'groundtruth_seqs.json'), 'w') as f:
            json.dump(groundtruth_seqs, f)
        with open(os.path.join(path_dirname, 'vids_list.json'), 'w') as f:
            json.dump(vids_list, f)

    references, predictions = OrderedDict(), OrderedDict()
    for i in range(len(groundtruth_seqs)):
        references[i] = [groundtruth_seqs[i][j] for j in range(len(groundtruth_seqs[i]))]
    for i in range(len(sample_seqs)):
        predictions[i] = [sample_seqs[i]]

    predictions = {i: predictions[i] for i in range(len(sample_seqs))}
    references = {i: references[i] for i in range(len(groundtruth_seqs))}

    avg_bleu_score, bleu_score = Bleu(4).compute_score(references, predictions)
    print('avg_bleu_score == ', avg_bleu_score)
    avg_cider_score, cider_score = Cider().compute_score(references, predictions)
    print('avg_cider_score == ', avg_cider_score)
    avg_meteor_score, meteor_score = Meteor().compute_score(references, predictions)
    print('avg_meteor_score == ', avg_meteor_score)
    avg_rouge_score, rouge_score = Rouge().compute_score(references, predictions)
    print('avg_rouge_score == ', avg_rouge_score)

    return {'BLEU': avg_bleu_score, 'CIDEr': avg_cider_score, 'METEOR': avg_meteor_score, 'ROUGE': avg_rouge_score}
```
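The three saved lists are aligned by index. The id-to-caption mapping this task asks for can therefore also be written as a single JSON object keyed by video id.

The sketch below assumes `vids_list`, `sample_seqs` and `groundtruth_seqs` are collected in the same order as in the code above. The function name `save_vid_caption_map` and the output file name are hypothetical.

```python
import json
import os
from typing import Dict, List, Sequence


def save_vid_caption_map(vids_list: Sequence[str], sample_seqs: Sequence[str],
                         groundtruth_seqs: Sequence[List[str]], out_path: str) -> Dict[str, dict]:
    """Write {vid: {"prediction": ..., "groundtruth": [...]}} to out_path as JSON."""
    assert len(vids_list) == len(sample_seqs) == len(groundtruth_seqs)
    # If a vid appeared twice, the later entry would overwrite the earlier one.
    mapping = {
        vid: {"prediction": pred, "groundtruth": list(gts)}
        for vid, pred, gts in zip(vids_list, sample_seqs, groundtruth_seqs)
    }
    out_dir = os.path.dirname(out_path)
    if out_dir:
        os.makedirs(out_dir, exist_ok=True)
    # 'w' so that a re-run replaces the file instead of appending a second JSON document
    with open(out_path, "w", encoding="utf-8") as f:
        json.dump(mapping, f, ensure_ascii=False, indent=2)
    return mapping
```

Inside `language_eval`, this would be called under the same `if is_last_epoch:` guard. Reading the file back takes a single `json.load`. After that, `mapping[vid]` gives both captions for the video to look up in the dataset.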

To be continued

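After the code was debugged, the process was killed while running the last epoch, so the video information we wanted was never saved. The only option is to wait for the model to run through again.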

Survey of six CVPR papers related to image/video captioning

[1] X-Trans2Cap: Cross-Modal Knowledge Transfer using Transformer for 3D Dense Captioning. Keywords: image captioning and dense captioning; knowledge distillation; Transformer; 3D vision. Links: paper.

[2] Hierarchical Modular Network for Video Captioning. Links: paper, code.

[3] Open-Domain, Content-based, Multi-modal Fact-checking of Out-of-Context Images via Online Resources. Links: paper, code.

[4] SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning. Links: paper, code.

[5] NOC-REK: Novel Object Captioning with Retrieved Vocabulary from External Knowledge. Links: paper.

[6] Quantifying Societal Bias Amplification in Image Captioning. Links: paper.
