ds_test = ds_test_.map(preprocess).batch(batch_size, drop_remainder=True).prefetch(batch_size)

train_num = ds_info.splits['train'].num_examples
test_num = ds_info.splits['test'].num_examples

class GaussianSampling(Layer):
    """Draws z = mean + std * epsilon (the reparameterization trick)."""

    def call(self, inputs):
        means, logvar = inputs
        epsilon = tf.random.normal(shape=tf.shape(means), mean=0., stddev=1.)
        samples = means + tf.exp(0.5 * logvar) * epsilon
        return samples
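To make the sampling step concrete, here is a minimal NumPy sketch of the same reparameterization, independent of Keras. The function name `gaussian_sample`, the target mean of 1.5 and the standard deviation of 0.5 are illustrative assumptions, not values from the model above.

```python
import numpy as np

def gaussian_sample(mean, logvar, rng):
    """Reparameterized draw: z = mean + exp(0.5 * logvar) * eps, eps ~ N(0, 1)."""
    eps = rng.standard_normal(size=np.shape(mean))
    return mean + np.exp(0.5 * logvar) * eps

rng = np.random.default_rng(0)
mean = np.full((100_000, 2), 1.5)               # illustrative target mean
logvar = np.full((100_000, 2), np.log(0.25))    # variance 0.25, i.e. std 0.5
z = gaussian_sample(mean, logvar, rng)

# The empirical statistics should land close to the requested mean and std.
print(z.mean(axis=0), z.std(axis=0))
```

Because the randomness enters only through `eps`, the output stays differentiable with respect to `mean` and `logvar`, which is what lets the encoder be trained by backpropagation.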

class DownConvBlock(Layer):
    count = 0  # class-level counter used to give each block a unique name

    def __init__(self, filters, kernel_size=(3,3), strides=1, padding='same'):
        super(DownConvBlock, self).__init__(name=f"DownConvBlock_{DownConvBlock.count}")
        DownConvBlock.count += 1
        self.forward = Sequential([
            Conv2D(filters, kernel_size, strides, padding),
            BatchNormalization(),
            LeakyReLU(0.2)
        ])

    def call(self, inputs):
        return self.forward(inputs)

class UpConvBlock(Layer):
    count = 0  # class-level counter used to give each block a unique name

    def __init__(self, filters, kernel_size=(3,3), padding='same'):
        super(UpConvBlock, self).__init__(name=f"UpConvBlock_{UpConvBlock.count}")
        UpConvBlock.count += 1
        self.forward = Sequential([
            Conv2D(filters, kernel_size, 1, padding),
            LeakyReLU(0.2),
            UpSampling2D((2,2))
        ])

    def call(self, inputs):
        return self.forward(inputs)

class Encoder(Layer):
    def __init__(self, z_dim, name='encoder'):
        super(Encoder, self).__init__(name=name)
        self.features_extract = Sequential([
            DownConvBlock(filters=32, kernel_size=(3,3), strides=2),
            DownConvBlock(filters=32, kernel_size=(3,3), strides=2),
            DownConvBlock(filters=64, kernel_size=(3,3), strides=2),
            DownConvBlock(filters=64, kernel_size=(3,3), strides=2),
            Flatten()
        ])
        self.dense_mean = Dense(z_dim, name='mean')
        self.dense_logvar = Dense(z_dim, name='logvar')
        self.sampler = GaussianSampling()

    def call(self, inputs):
        x = self.features_extract(inputs)
        mean = self.dense_mean(x)
        logvar = self.dense_logvar(x)
        z = self.sampler([mean, logvar])
        return z, mean, logvar

class Decoder(Layer):
    def __init__(self, z_dim, name='decoder'):
        super(Decoder, self).__init__(name=name)
        self.forward = Sequential([
            # 7*7*64 units so the output can be reshaped to (7, 7, 64)
            Dense(7*7*64, activation='relu'),
            Reshape((7,7,64)),
            UpConvBlock(filters=64, kernel_size=(3,3)),
            UpConvBlock(filters=64, kernel_size=(3,3)),
            UpConvBlock(filters=32, kernel_size=(3,3)),
            UpConvBlock(filters=32, kernel_size=(3,3)),
            Conv2D(filters=3, kernel_size=(3,3), strides=1, padding='same', activation='sigmoid')
        ])

    def call(self, inputs):
        return self.forward(inputs)
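The encoder and decoder shapes have to line up, and the arithmetic can be checked without building the model. The sketch below assumes a 112x112 input, which is the size `preprocess_attrib` resizes images to later in this post; the helper `conv_same_output` is a hypothetical name used only for illustration.

```python
import math

def conv_same_output(size, stride):
    """Spatial size after a convolution with padding='same'."""
    return math.ceil(size / stride)

# Encoder side: four stride-2 DownConvBlocks applied to a 112x112 input.
size = 112
for _ in range(4):
    size = conv_same_output(size, stride=2)
encoder_spatial = size            # 112 -> 56 -> 28 -> 14 -> 7

# Decoder side: Dense -> Reshape((7, 7, 64)) -> four 2x UpSampling2D stages.
dense_units = 7 * 7 * 64          # units needed for the reshape
decoder_spatial = 7 * 2 ** 4      # 7 -> 14 -> 28 -> 56 -> 112

print(encoder_spatial, dense_units, decoder_spatial)
```

The decoder therefore mirrors the encoder: it starts from the same 7x7x64 feature map that the encoder ends with and returns an image of the original size.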

class VAE(Model):
    def __init__(self, z_dim, name='VAE'):
        super(VAE, self).__init__(name=name)
        self.encoder = Encoder(z_dim)
        self.decoder = Decoder(z_dim)
        # Stored on each forward pass so the KL loss can read them
        self.mean = None
        self.logvar = None

    def call(self, inputs):
        z, self.mean, self.logvar = self.encoder(inputs)
        out = self.decoder(z)
        return out

if num_devices > 1:
    with strategy.scope():
        vae = VAE(z_dim=200)
else:
    vae = VAE(z_dim=200)

def vae_kl_loss(y_true, y_pred):
    # KL divergence between the encoder's Gaussian and N(0, I)
    kl_loss = -0.5 * tf.reduce_mean(1 + vae.logvar - tf.square(vae.mean) - tf.exp(vae.logvar))
    return kl_loss

def vae_rc_loss(y_true, y_pred):
    # Reconstruction loss
    rc_loss = tf.keras.losses.MSE(y_true, y_pred)
    return rc_loss

def vae_loss(y_true, y_pred):
    kl_loss = vae_kl_loss(y_true, y_pred)
    rc_loss = vae_rc_loss(y_true, y_pred)
    kl_weight_const = 0.01
    return kl_weight_const * kl_loss + rc_loss
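The KL term can be sanity-checked in plain NumPy. This is a minimal sketch of the closed-form KL divergence between a diagonal Gaussian and the standard normal; the function name `kl_to_standard_normal` and the toy inputs are assumptions for illustration. Unlike `vae_kl_loss` above, which averages over both the batch and the latent dimensions, this version sums over the latent dimensions and returns one value per sample.

```python
import numpy as np

def kl_to_standard_normal(mean, logvar):
    """Per-sample KL( N(mean, exp(logvar)) || N(0, I) ), summed over latent dims."""
    return -0.5 * np.sum(1.0 + logvar - np.square(mean) - np.exp(logvar), axis=-1)

mean = np.array([[0.0, 0.0],      # already a standard normal: KL should be 0
                 [1.0, -1.0]])    # shifted mean, unit variance
logvar = np.zeros((2, 2))
kl = kl_to_standard_normal(mean, logvar)
print(kl)
```

With unit variance the KL reduces to half the squared norm of the mean, so the second row gives 0.5 * (1 + 1) = 1.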

model_path = "vae_faces_cele_a.h5"

checkpoint = ModelCheckpoint(
    model_path,
    monitor='vae_rc_loss',
    verbose=1,
    save_best_only=True,
    mode='auto',
    save_weights_only=True
)

early = EarlyStopping(
    monitor='vae_rc_loss',
    mode='auto',
    patience=3
)

callbacks_list = [checkpoint, early]

initial_learning_rate = 1e-3
steps_per_epoch = int(np.round(train_num/batch_size))

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(
    initial_learning_rate,
    decay_steps=steps_per_epoch,
    decay_rate=0.96,
    staircase=True
)
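With `staircase=True`, `ExponentialDecay` multiplies the learning rate by `decay_rate` once every `decay_steps` steps, which here means once per epoch. The pure-Python sketch below reproduces that formula; `decay_steps=1000` is a placeholder, since the real `steps_per_epoch` depends on the dataset size and batch size.

```python
def staircase_decay(step, initial_lr=1e-3, decay_steps=1000, decay_rate=0.96):
    """Learning rate at a given step: initial_lr * decay_rate ** floor(step / decay_steps)."""
    return initial_lr * decay_rate ** (step // decay_steps)

for step in (0, 999, 1000, 2500):
    print(step, staircase_decay(step))
```

The rate stays at 1e-3 throughout the first epoch, drops to 9.6e-4 at the start of the second, and so on.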

# Note: the optimizer below uses a fixed learning rate; lr_schedule is not passed to it.
vae.compile(
    loss=[vae_loss],
    optimizer=tf.keras.optimizers.RMSprop(learning_rate=3e-3),
    metrics=[vae_kl_loss, vae_rc_loss]
)

history = vae.fit(ds_train, validation_data=ds_test, epochs=50, callbacks=callbacks_list)

images, labels = next(iter(ds_train))
vae.load_weights(model_path)
outputs = vae.predict(images)

# Display originals (top row) and reconstructions (bottom row)
grid_col = 8
grid_row = 2
f, axarr = plt.subplots(grid_row, grid_col, figsize=(grid_col*2, grid_row*2))
i = 0
for row in range(0, grid_row, 2):
    for col in range(grid_col):
        axarr[row,col].imshow(images[i])
        axarr[row,col].axis('off')
        axarr[row+1,col].imshow(outputs[i])
        axarr[row+1,col].axis('off')
        i += 1
f.tight_layout(pad=0.1, h_pad=0.2, w_pad=0.1)
plt.show()

avg_z_mean = []
avg_z_std = []
# Iterate over the dataset once; calling next(iter(ds_train)) inside the loop
# would rebuild the iterator on every step.
for images, labels in ds_train.take(steps_per_epoch):
    z, z_mean, z_logvar = vae.encoder(images)
    avg_z_mean.append(np.mean(z_mean, axis=0))
    avg_z_std.append(np.mean(np.exp(0.5*z_logvar), axis=0))
avg_z_mean = np.mean(avg_z_mean, axis=0)
avg_z_std = np.mean(avg_z_std, axis=0)

plt.plot(avg_z_mean)
plt.ylabel("Average z mean")
plt.xlabel("z dimension")

# Histogram of the first 100 latent dimensions for the last encoded batch
grid_col = 10
grid_row = 10
f, axarr = plt.subplots(grid_row, grid_col, figsize=(grid_col, 1.5*grid_row))
i = 0
for row in range(grid_row):
    for col in range(grid_col):
        axarr[row, col].hist(z[:,i], bins=20)
        axarr[row, col].axis('off')
        i += 1
# f.tight_layout(pad=0.1, h_pad=0.2, w_pad=0.1)
plt.show()

# Generate faces by decoding samples drawn from a standard normal
z_dim = 200
z_samples = np.random.normal(loc=0, scale=1, size=(25, z_dim))
images = vae.decoder(z_samples.astype(np.float32))

grid_col = 7
grid_row = 2
f, axarr = plt.subplots(grid_row, grid_col, figsize=(2*grid_col, 2*grid_row))
i = 0
for row in range(grid_row):
    for col in range(grid_col):
        axarr[row, col].imshow(images[i])
        axarr[row, col].axis('off')
        i += 1
f.tight_layout(pad=0.1, h_pad=0.2, w_pad=0.1)
plt.show()

# Sampling trick: draw z using the measured latent mean and std instead of N(0, 1)
z_samples = np.random.normal(loc=0., scale=np.mean(avg_z_std), size=(25, z_dim))
z_samples += avg_z_mean
images = vae.decoder(z_samples.astype(np.float32))

grid_col = 7
grid_row = 2
f, axarr = plt.subplots(grid_row, grid_col, figsize=(2*grid_col, 2*grid_row))
i = 0
for row in range(grid_row):
    for col in range(grid_col):
        axarr[row,col].imshow(images[i])
        axarr[row,col].axis('off')
        i += 1
f.tight_layout(pad=0.1, h_pad=0.2, w_pad=0.1)
plt.show()

(ds_train, ds_test), ds_info = tfds.load(
    'celeb_a',
    split=['train', 'test'],
    shuffle_files=True,
    with_info=True)

test_num = ds_info.splits['test'].num_examples

def preprocess_attrib(sample, attribute):
    # Return the resized, normalized image together with one boolean attribute
    image = sample['image']
    image = tf.image.resize(image, [112, 112])
    image = tf.cast(image, tf.float32) / 255.
    return image, sample['attributes'][attribute]

def extract_attrib_vector(attribute, ds):
    batch_size = 32 * num_devices
    ds = ds.map(lambda x: preprocess_attrib(x, attribute))
    ds = ds.batch(batch_size)
    steps_per_epoch = int(np.round(test_num / batch_size))
    pos_z = []
    pos_z_num = []
    neg_z = []
    neg_z_num = []
    # Iterate over the dataset once instead of rebuilding the iterator each step.
    for images, labels in ds.take(steps_per_epoch):
        z, z_mean, z_logvar = vae.encoder(images)
        z = z.numpy()
        labels = labels.numpy().astype(bool)
        step_pos_z = z[labels]
        step_neg_z = z[~labels]
        # Skip empty groups: the mean of an empty slice is NaN.
        if step_pos_z.shape[0] > 0:
            pos_z.append(np.mean(step_pos_z, axis=0))
            pos_z_num.append(step_pos_z.shape[0])
        if step_neg_z.shape[0] > 0:
            neg_z.append(np.mean(step_neg_z, axis=0))
            neg_z_num.append(step_neg_z.shape[0])
    # Weight each batch mean by its sample count to recover the overall mean
    avg_pos_z = np.average(pos_z, axis=0, weights=pos_z_num)
    avg_neg_z = np.average(neg_z, axis=0, weights=neg_z_num)
    attrib_vector = avg_pos_z - avg_neg_z
    return attrib_vector
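The count-weighted average of per-batch means is used because batches contain different numbers of positive samples. The toy NumPy sketch below, with made-up latent codes and labels, checks that this weighting reproduces the mean computed over all positive samples at once.

```python
import numpy as np

rng = np.random.default_rng(0)
z = rng.standard_normal((10, 3))   # 10 toy latent codes with 3 dimensions
labels = np.array([1, 0, 1, 1, 0, 0, 1, 0, 1, 1], dtype=bool)

batch_means, batch_counts = [], []
for start in range(0, len(z), 4):  # batches of 4, 4 and 2 samples
    zb, lb = z[start:start + 4], labels[start:start + 4]
    pos = zb[lb]
    if len(pos):                   # skip batches without positive samples
        batch_means.append(pos.mean(axis=0))
        batch_counts.append(len(pos))

weighted = np.average(batch_means, axis=0, weights=batch_counts)
direct = z[labels].mean(axis=0)
print(np.allclose(weighted, direct))
```

An unweighted mean of the batch means would over-represent batches that happen to contain only a few positive samples.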

attributes = list(ds_info.features['attributes'].keys())
attribs_vectors = {}
for attrib in attributes:
    print(attrib)
    attribs_vectors[attrib] = extract_attrib_vector(attrib, ds_test)

def explore_latent_variable(image, attrib):
    grid_col = 8
    grid_row = 1
    z_samples, _, _ = vae.encoder(tf.expand_dims(image, 0))
    f, axarr = plt.subplots(grid_row, grid_col, figsize=(2*grid_col, 2*grid_row))
    i = 0
    row = 0
    step = -3
    # First column shows the original image
    axarr[0].imshow(image)
    axarr[0].axis('off')
    # Remaining columns move z along the attribute vector, step = -3 ... 3
    for col in range(1, grid_col):
        new_z_samples = z_samples + step * attribs_vectors[attrib]
        reconstructed_image = vae.decoder(new_z_samples)
        step += 1
        axarr[col].imshow(reconstructed_image[0])
        axarr[col].axis('off')
        i += 1
    f.tight_layout(pad=0.1, h_pad=0.2, w_pad=0.1)
    plt.show()
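The traversal itself is plain vector arithmetic: each column decodes `z + step * attribute_vector` for integer steps from -3 to 3. A minimal NumPy sketch with a hypothetical helper `traverse` and toy 4-dimensional vectors (the model above uses 200 dimensions):

```python
import numpy as np

def traverse(z, direction, steps=range(-3, 4)):
    """Return one shifted latent code per step: z + step * direction."""
    return np.stack([z + s * direction for s in steps])

z = np.array([0.5, -1.0, 0.0, 2.0])          # toy latent code
direction = np.array([1.0, 0.0, 0.0, -0.5])  # toy attribute vector
path = traverse(z, direction)
print(path.shape)   # one row per step
print(path[3])      # step 0 leaves z unchanged
```

Negative steps push the code toward the "attribute absent" side and positive steps toward the "attribute present" side.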

ds_test1 = ds_test.map(preprocess).batch(100)
images, labels = next(iter(ds_test1))

# Generate face images by moving along an attribute vector
explore_latent_variable(images[34], 'Male')
explore_latent_variable(images[20], 'Eyeglasses')
explore_latent_variable(images[38], "Chubby")

import cv2

fname = ""
if fname:
    # using existing image from file
    image = cv2.imread(fname)
    image = image[:,:,::-1]  # OpenCV loads BGR; convert to RGB
    # crop
    h, w = image.shape[:2]
    min_dim = min(h, w)
    h_gap = (h-min_dim) // 2
    w_gap = (w-min_dim) // 2
    image = image[h_gap:h-h_gap, w_gap:w-w_gap, :]
    image = cv2.resize(image, (112,112))
    plt.imshow(image)
    # encode
    input_tensor = np.expand_dims(image, 0)
    input_tensor = input_tensor.astype(np.float32) / 255.
    z_samples, _, _ = vae.encoder(input_tensor)
else:
    # start with random image
    z_samples = np.random.normal(loc=0., scale=np.mean(avg_z_std), size=(1, 200))
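The center crop used for a custom image can be isolated and tested on its own. The sketch below is one possible variant, with the hypothetical name `center_crop_square`; it slices a fixed side length from the top-left offset, so the result is exactly square even when the height and width differ by an odd number of pixels.

```python
import numpy as np

def center_crop_square(image):
    """Crop the largest centered square from an (H, W, C) array."""
    h, w = image.shape[:2]
    side = min(h, w)
    top = (h - side) // 2
    left = (w - side) // 2
    return image[top:top + side, left:left + side]

wide = np.zeros((5, 8, 3), dtype=np.uint8)   # landscape toy image
tall = np.zeros((9, 4, 3), dtype=np.uint8)   # portrait toy image
print(center_crop_square(wide).shape, center_crop_square(tall).shape)
```

After cropping, resizing to 112x112 keeps the face's aspect ratio instead of stretching it.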

import ipywidgets as widgets

from ipywidgets import interact, interact_manual

@interact

def explore_latent_variable(Male = (-5,5,0.1),

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

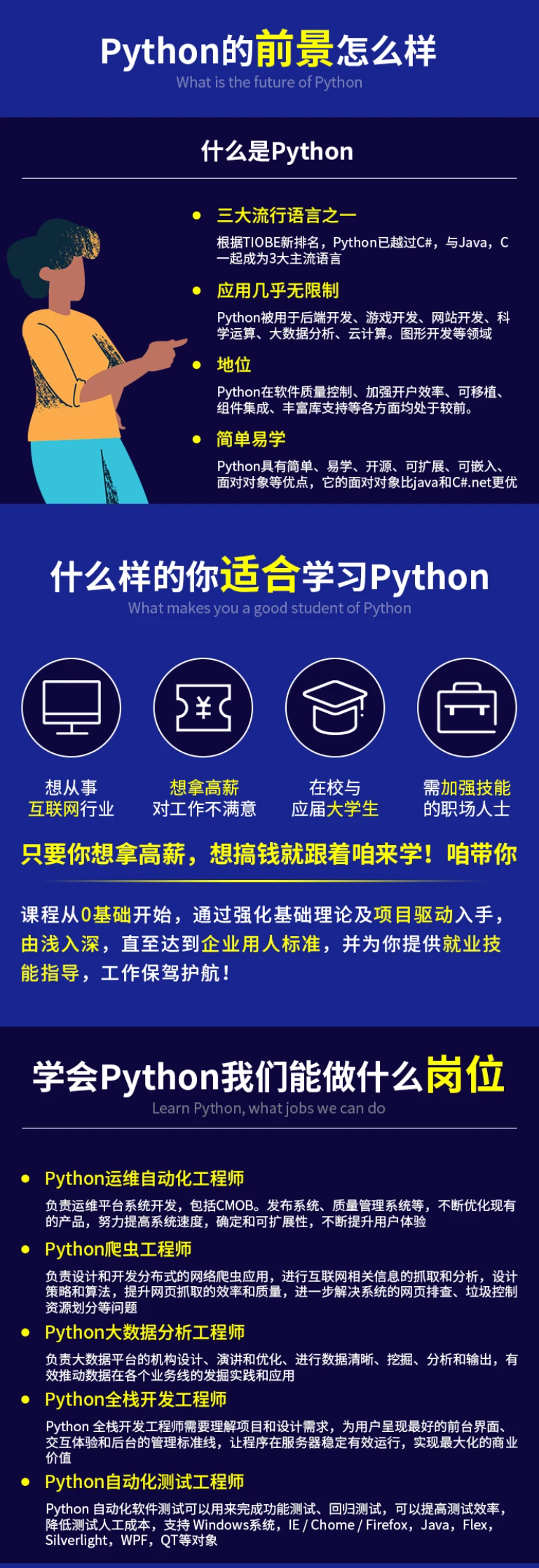

深知大多数Python工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年Python开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上前端开发知识点,真正体系化!

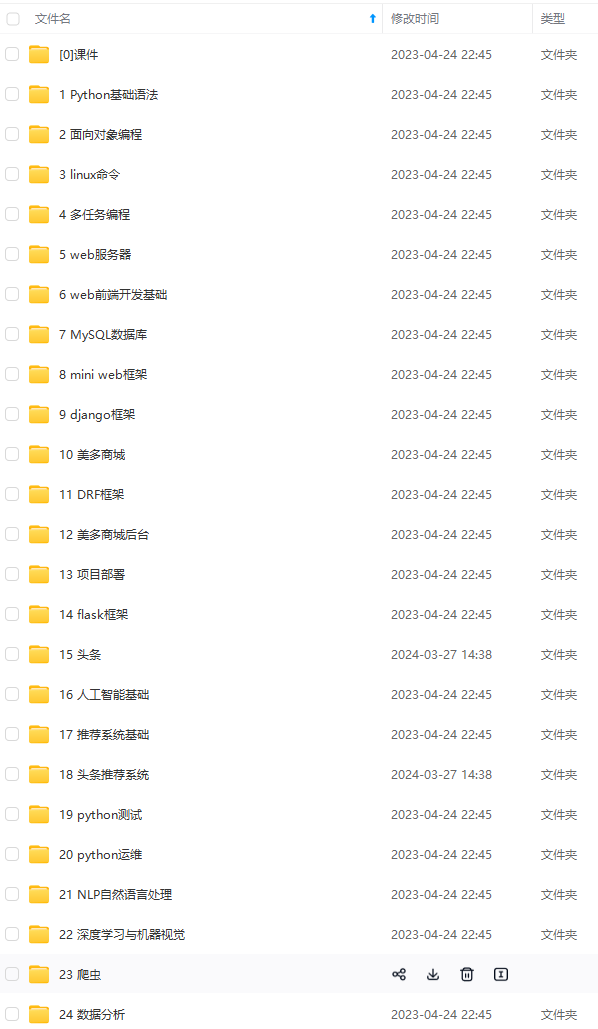

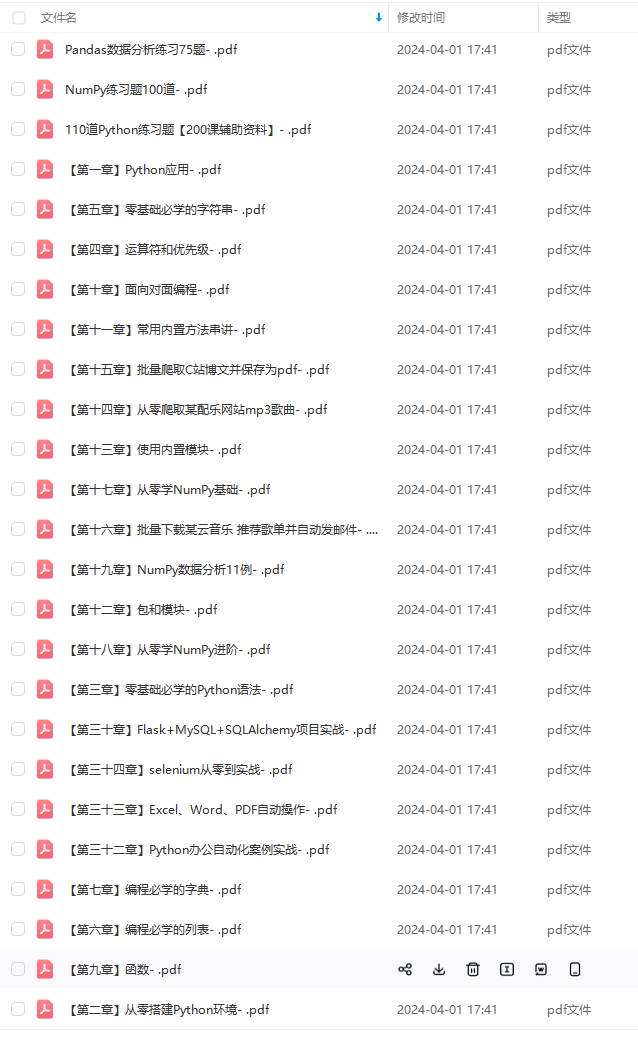

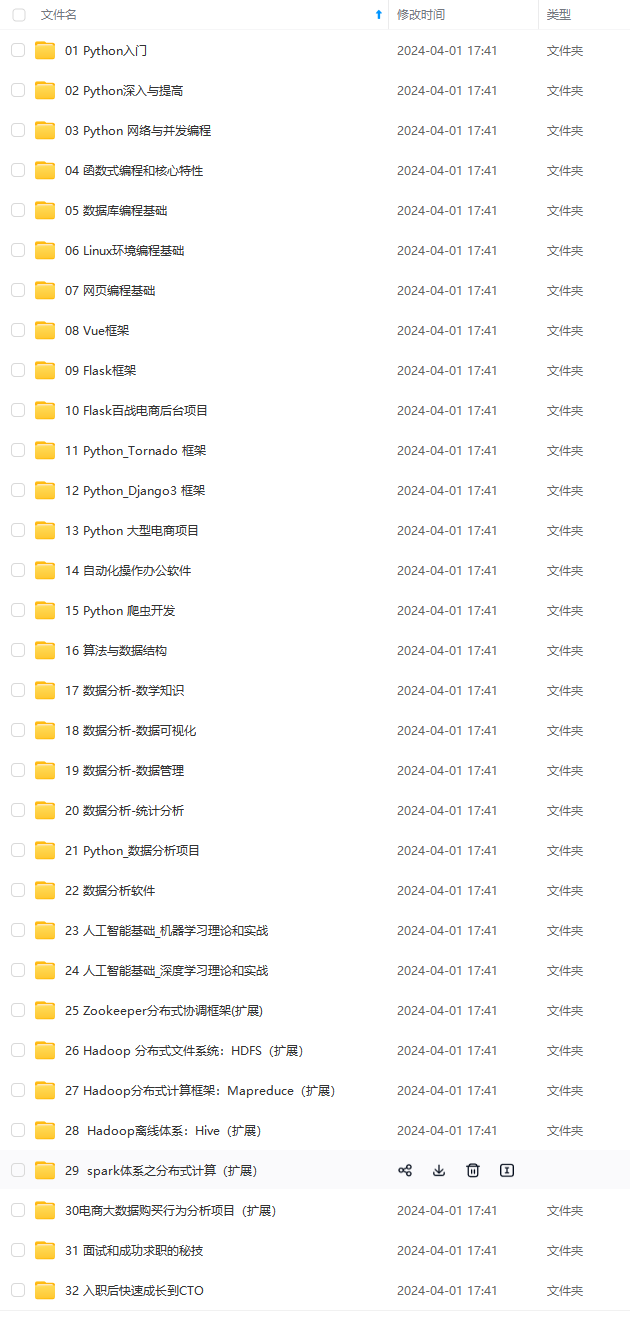

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以扫码获取!!!(备注Python)

师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!**

因此收集整理了一份《2024年Python开发全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友,同时减轻大家的负担。

[外链图片转存中…(img-T9RFPzCU-1713242953563)]

[外链图片转存中…(img-Skml08Tq-1713242953564)]

[外链图片转存中…(img-XWIYMH0W-1713242953564)]

[外链图片转存中…(img-b2B15zwC-1713242953564)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上前端开发知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以扫码获取!!!(备注Python)

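This article presents a Variational Auto-Encoder (VAE) built with Keras, covering the implementation of the encoder, decoder, and loss functions, as well as training and visualization on the CelebA dataset. It also shows how to explore and manipulate the latent space to control attributes of the generated face images.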
