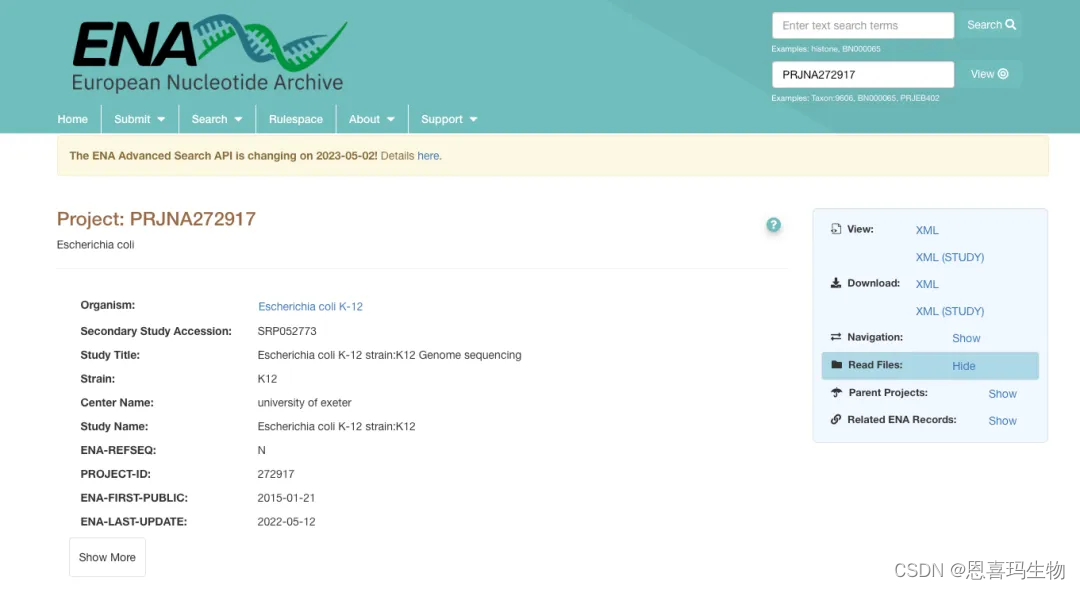

借鉴Reference中第2、3篇文章的代码。分析的数据是大肠杆菌,因为基因组小,适合拿来快速跑通整个流程

00 下载fastq数据

mkdir -p ~/Project/DNA/raw

cd ~/Project/DNA/raw

wget ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR177/003/SRR1770413/SRR1770413_1.fastq.gz

wget ftp://ftp.sra.ebi.ac.uk/vol1/fastq/SRR177/003/SRR1770413/SRR1770413_2.fastq.gz

01 filter the bad quality reads and remove adaptors

脚本

#!/bin/bash

#clean.sh

# python3.10/python2.7

# fastqc multiqc trim_galore

HOME_DIR=~/Project/DNA

FASTQ_DIR=${HOME_DIR}/raw

CLEAN_DIR=${HOME_DIR}/clean

N_JOBS=12

if [ ! -d $CLEAN_DIR ]

then

mkdir -p $CLEAN_DIR

fi

micromamba activate dna2

# 1.QC

cd $FASTQ_DIR

fastqc --thread $N_JOBS *fastq.gz && multiqc .

# 2.remove adapter

micromamba activate dna3

for FILE in `ls $FASTQ_DIR/*_1.fastq.gz`

do

SAMPLE=`basename $FILE | sed s/_1\.fastq\.gz//`

echo "Processing $SAMPLE"

F1=$FASTQ_DIR/$SAMPLE"_1.fastq.gz"

F2=$FASTQ_DIR/$SAMPLE"_2.fastq.gz"

trim_galore \

--quality 30 \

--length 50 \

--output_dir $CLEAN_DIR \

--cores $N_JOBS \

--paired \

--fastqc \

-e 0.1 \

--trim-n \

$F1 $F2

mv $CLEAN_DIR/${SAMPLE}"_1_val_1.fq.gz" \

$CLEAN_DIR/${SAMPLE}"_1.fastq.gz"

mv $CLEAN_DIR/${SAMPLE}"_2_val_2.fq.gz" \

$CLEAN_DIR/${SAMPLE}"_2.fastq.gz"

mv $CLEAN_DIR/${SAMPLE}"_1_val_1_fastqc.html" \

$CLEAN_DIR/${SAMPLE}"_1_fastqc.html"

mv $CLEAN_DIR/${SAMPLE}"_1_val_1_fastqc.zip" \

$CLEAN_DIR/${SAMPLE}"_1_fastqc.zip"

mv $CLEAN_DIR/${SAMPLE}"_2_val_2_fastqc.html" \

$CLEAN_DIR/${SAMPLE}"_2_fastqc.html"

mv $CLEAN_DIR/${SAMPLE}"_2_val_2_fastqc.zip" \

$CLEAN_DIR/${SAMPLE}"_2_fastqc.zip"

done

# 3.QC for clean

cd $CLEAN_DIR

micromamba activate dna2

multiqc .

运行

source clean.sh &> clean.sh.log &

02 align

下载参考基因组数据

curl -OJX GET "https://api.ncbi.nlm.nih.gov/datasets/v2alpha/genome/accession/GCF_000005845.2/download?include_annotation_type=GENOME_FASTA,GENOME_GFF,RNA_FASTA,CDS_FASTA,PROT_FASTA,SEQUENCE_REPORT&filename=GCF_000005845.2.zip" -H "Accept: application/zip"

unzip GCF_000005845.2.zip

cp ncbi_dataset/data/GCF_000005845.2/GCF_000005845.2_ASM584v2_genomic.fna genome/E.coli.fa

生成的文件索引

#!/bin/bash

#python2.7

HOME_DIR=~/Project/DNA

GENOME_FILE=E.coli.fa

INDEX_DIR=$HOME_DIR/genome

micromamba activate dna2

echo "----------------- BWA INDEX START-----------------"

bwa index $INDEX_DIR/$GENOME_FILE && \

echo "----------------- BWA INDEX DONE -----------------"

echo "----------------- CreateSequenceDictionary START -----------------"

gatk CreateSequenceDictionary \

--REFERENCE $INDEX_DIR/$GENOME_FILE \

--OUTPUT $INDEX_DIR/E.coli.dict && echo "** dict done **" && \

echo "----------------- CreateSequenceDictionary DONE -----------------"

samtools faidx $INDEX_DIR/$GENOME_FILE

source index.sh &> index.sh.log &

对比

-R:Read group;

ID:Read group identifier,必须唯一,一般为样本名;

SM:Sample,样本名;

LB:Library,测序文库;

PL:Platform,测序平台

#!/bin/bash

#python2.7

micromamba activate dna2

HOME_DIR=~/Project/DNA

BWA_INDEX=$HOME_DIR/genome/E.coli.fa

CLEAN_DIR=$HOME_DIR/clean

ALIGN_DIR=$HOME_DIR/align

N_JOBS=12

if [ ! -d $ALIGN_DIR ]

then

mkdir -p $ALIGN_DIR

fi

for FILE in `ls $CLEAN_DIR/*_1.fastq.gz`

do

SAMPLE=`basename $FILE | sed s/_1\.fastq\.gz//`

echo "----------------- BWA ${SAMPLE} START -----------------"

F1=$CLEAN_DIR/$SAMPLE"_1.fastq.gz"

F2=$CLEAN_DIR/$SAMPLE"_2.fastq.gz"

RG="@RG\tID:"$SAMPLE"\tSM:"$SAMPLE"\tLB:WGS\tPL:ILLUMINA"

bwa mem -t $N_JOBS -R $RG $BWA_INDEX $F1 $F2 | \

samtools sort --threads $N_JOBS -O bam -o $ALIGN_DIR/$SAMPLE".bam" - && \

echo "----------------- BWA ${SAMPLE} DONE -----------------"

echo "----------------- MarkDuplicates ${SAMPLE} START-----------------"

gatk MarkDuplicates \

--INPUT ${ALIGN_DIR}/${SAMPLE}".bam" \

--OUTPUT ${ALIGN_DIR}/${SAMPLE}".mark.bam" \

--METRICS_FILE ${ALIGN_DIR}/${SAMPLE}".mark.matrics" && \

echo "----------------- MarkDuplicates ${SAMPLE} DONE -----------------" &&

rm -f ${ALIGN_DIR}/${SAMPLE}".bam"

echo "-----------------Samtools Indexing ${SAMPLE} START -----------------"

samtools index ${ALIGN_DIR}/${SAMPLE}".mark.bam" && \

echo "----------------- Samtools Indexing ${SAMPLE} DONE -----------------"

done

source bwa.sh &> bwa.sh.log &

03 call variants

#!/bin/bash

micromamba activate dna2

HOME_DIR=~/Project/DNA

BWA_INDEX=$HOME_DIR/genome/E.coli.fa

ALIGN_DIR=$HOME_DIR/align

MUTATION=$HOME_DIR/mutation

N_JOBS=12

if [ ! -d $MUTATION ]

then

mkdir -p $MUTATION

fi

# 1 每个样本生成一个gvcf文件

for FILE in `ls ${ALIGN_DIR}/*.mark.bam`

do

SAMPLE=`basename $FILE | sed s/\.mark\.bam//`

echo $SAMPLE

gatk HaplotypeCaller \

--reference $BWA_INDEX \

--emit-ref-confidence GVCF \

--input $ALIGN_DIR/$SAMPLE".mark.bam" \

--output $MUTATION/$SAMPLE".gvcf" && echo "** gvcf done **"

done

# 2 通过gvcf检测变异

gatk GenotypeGVCFs \

--reference $BWA_INDEX \

--variant $MUTATION/$SAMPLE".gvcf" \

--output $MUTATION/final.vcf && echo "** vcf done **"

# 3 压缩 构建tabix索引

bgzip -f $MUTATION/final.vcf

tabix -p vcf $MUTATION/final.vcf.gz

# 4.过滤(硬指标)

## 使用SelectVariants,选出SNP

gatk SelectVariants \

-select-type SNP \

--variant $MUTATION/final.vcf.gz \

--output $MUTATION/final.snp.vcf.gz

## 为SNP作硬过滤

gatk VariantFiltration \

--variant $MUTATION/final.snp.vcf.gz \

--filter-expression "QD < 2.0 || MQ < 40.0 || FS > 60.0 || SOR > 3.0 || MQRankSum < -12.5 || ReadPosRankSum < -8.0" \

--filter-name "PASS" \

--output $MUTATION/final.snp.filter.vcf.gz

## 使用SelectVariants,选出Indel

gatk SelectVariants \

-select-type INDEL \

--variant $MUTATION/final.vcf.gz \

--output $MUTATION/final.indel.vcf.gz

## 为Indel作过滤

gatk VariantFiltration \

--variant $MUTATION/final.indel.vcf.gz \

--filter-expression "QD < 2.0 || FS > 200.0 || SOR > 10.0 || MQRankSum < -12.5 || ReadPosRankSum < -8.0" \

--filter-name "PASS" \

--output $MUTATION/final.indel.filter.vcf.gz

# 重新合并过滤后的SNP和Indel

gatk MergeVcfs \

--INPUT $MUTATION/final.snp.filter.vcf.gz \

--INPUT $MUTATION/final.indel.filter.vcf.gz \

--OUTPUT $MUTATION/final.filter.vcf.gz

cd $MUTATION

rm -f *snp* *indel* final.vcf* *gvcf*

source call_variant.sh &> call_variant.sh.log &

Reference

# gatk api

https://gatk.broadinstitute.org/hc/en-us/articles/13832655155099--Tool-Documentation-Index

#GATK4.0和全基因组数据分析实践

https://mp.weixin.qq.com/s/Heu-jmJeM3EQy2PmyA-gyQ

https://mp.weixin.qq.com/s/ooU7Tuh0adGNKEISELFjUA

#GATK best practices

https://mp.weixin.qq.com/s/GvuL8NlFSkQ6MVdghlEYUQ

# trim-galore

https://mp.weixin.qq.com/s/n3G_qot5mhB3ZR1gcDnV6g

# 方法分享 | 全外显子测序分析的标准流程

https://mp.weixin.qq.com/s/WOeLHXIomhNjSFXjXZ8zcg

https://mp.weixin.qq.com/s/WOeLHXIomhNjSFXjXZ8zcg

# GATK4全基因组数据分析最佳实践

https://mp.weixin.qq.com/s/r3sghKtEKq1FnjQ05WCcnA

# 第1节 测序技术

https://mp.weixin.qq.com/s/kq2i5tNZmm9GKGAx2Day-g

#第2节 FASTA和FASTQ

https://mp.weixin.qq.com/s/0h5B3T6RKTHTsZBuoZvtgQ

#第3节 数据质控

https://mp.weixin.qq.com/s/8hY0U48kiVTH6Yx4JBcAhg#

#第4节 构建WGS主流程

https://mp.weixin.qq.com/s/AT2oodvdqWgbj1gvVo1hWQ

#第5节 理解并操作BAM文件

https://mp.weixin.qq.com/s/UnMyAuUHmK7DMGo8h88oQw

# WGS(全基因组测序)/WES(全外显子组测序)/WGRS(全基因组重测序)分析与可视化教程

https://zhuanlan.zhihu.com/p/380684876

https://blog.csdn.net/bio_meimei/article/details/108236406

https://zhuanlan.zhihu.com/p/422365954

https://zhuanlan.zhihu.com/p/69726572

https://hpc.nih.gov/training/gatk_tutorial/haplotype-caller.html

1409

1409

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?