1. Gradient descent

1.1 Model to consider

Consider unconstrained, smooth convex optimization

$$\min_{x} f(x)$$

i.e., f is convex and differentiable with $\mathrm{dom}(f) = \mathbb{R}^n$.

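Gradient descent: choose an initial value $x^{(0)} \in \mathbb{R}^n$, then repeat:

$$x^{(k)} = x^{(k-1)} - t_k \nabla f\big(x^{(k-1)}\big), \quad k = 1, 2, 3, \dots$$

Stop at some point.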
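A minimal sketch of this loop in plain Python (no external libraries), assuming a fixed step size $t_k = t$. One possible way to "stop at some point" is to halt once the gradient norm falls below a tolerance `tol`; that rule, the name `gradient_descent`, and the test function behind `grad_f` are illustrative assumptions, not part of the method itself.

```python
from typing import Callable, List

Vector = List[float]


def gradient_descent(grad: Callable[[Vector], Vector], x0: Vector, t: float,
                     max_iter: int = 1000, tol: float = 1e-8) -> Vector:
    """Fixed-step gradient descent: x(k) = x(k-1) - t * grad f(x(k-1))."""
    x = list(x0)
    for _ in range(max_iter):
        g = grad(x)
        # Hypothetical stopping rule: gradient norm below tol.
        if sum(gi * gi for gi in g) ** 0.5 <= tol:
            break
        x = [xi - t * gi for xi, gi in zip(x, g)]
    return x


# Hypothetical example: f(x) = (x1 - 1)^2 + 2 * (x2 + 3)^2, minimized at (1, -3).
def grad_f(x: Vector) -> Vector:
    return [2.0 * (x[0] - 1.0), 4.0 * (x[1] + 3.0)]


x_hat = gradient_descent(grad_f, [0.0, 0.0], t=0.1)
print(x_hat)  # approximately [1.0, -3.0]
```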

1.2 Interpretation

1.2.1 Interpretation via Newton's method

Since f(x) is smooth and convex, its minimizer $x^*$ is characterized by the first-order optimality condition

$$\nabla f(x^*) = 0.$$

- If this equation is easy to solve, we can get $x^*$ directly by solving it.

- If it is difficult to solve, we can instead use a linear approximation of $\nabla f(x)$ at $x^{(0)}$:

$$\ell(x) = \nabla f(x^{(0)}) + \nabla^2 f(x^{(0)})\,(x - x^{(0)})$$

and by setting this linear approximation to zero, we get

$$x^{(\text{new})} = x^{(0)} - \big[\nabla^2 f(x^{(0)})\big]^{-1} \nabla f(x^{(0)})$$

But for many functions, computing the second derivative (the Hessian) is difficult. So we can replace $\nabla^2 f(x^{(0)})$ by $\frac{1}{t} I$:

$$x^{+} = x^{(0)} - t\,\nabla f(x^{(0)})$$

The core idea is to use a linear approximation of ∇f(x) to find a root of ∇f(x). That is exactly the Newton-Raphson method, and gradient descent is the same step with the Hessian replaced by (1/t)I. The Newton-Raphson method finds successively better approximations to the roots (or zeroes) of a real-valued function.

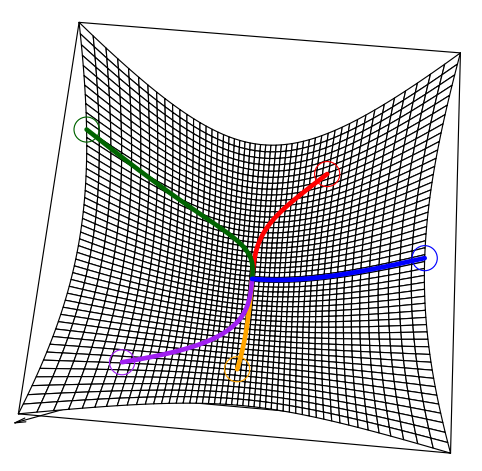
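To see the difference numerically, the sketch below takes one Newton step and 100 fixed-size gradient steps on $f(x) = \frac{1}{2}x^T A x - b^T x$. It is plain Python, and the values of `A`, `b`, the start point and t = 0.2 are arbitrary choices for illustration. For a quadratic the Hessian is exactly A, so the linear approximation of $\nabla f$ is exact and a single Newton step lands on the minimizer $A^{-1}b = (0.2, 0.4)$. The gradient step uses $\frac{1}{t}I$ in place of the Hessian and only approaches that point gradually.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]

# Illustrative quadratic f(x) = 0.5 * x^T A x - b^T x (A is symmetric positive definite).
A: Matrix = [[3.0, 1.0], [1.0, 2.0]]
b: Vector = [1.0, 1.0]


def grad(x: Vector) -> Vector:
    # grad f(x) = A x - b
    return [A[0][0] * x[0] + A[0][1] * x[1] - b[0],
            A[1][0] * x[0] + A[1][1] * x[1] - b[1]]


def solve_2x2(M: Matrix, v: Vector) -> Vector:
    # Solve M y = v for a 2x2 matrix with Cramer's rule.
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return [(v[0] * M[1][1] - M[0][1] * v[1]) / det,
            (M[0][0] * v[1] - v[0] * M[1][0]) / det]


def newton_step(x: Vector) -> Vector:
    # x_new = x - [Hessian]^{-1} grad f(x); the Hessian of this f is A.
    d = solve_2x2(A, grad(x))
    return [x[0] - d[0], x[1] - d[1]]


def gradient_step(x: Vector, t: float) -> Vector:
    # Same update with the Hessian replaced by (1/t) * I.
    g = grad(x)
    return [x[0] - t * g[0], x[1] - t * g[1]]


x0 = [5.0, -5.0]
x_newton = newton_step(x0)      # one step: the minimizer (0.2, 0.4), up to rounding
x_gd = x0
for _ in range(100):            # many small steps to get equally close
    x_gd = gradient_step(x_gd, t=0.2)
print(x_newton, x_gd)
```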

1.2.2 Interpretation via quadratic approximation of original function

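A linear approximation of $\nabla f(x)$ corresponds to a quadratic approximation of the original function f(x):

$$\hat f(x) = f(x^{(0)}) + \nabla f(x^{(0)})^T (x - x^{(0)}) + \frac{1}{2}(x - x^{(0)})^T \nabla^2 f(x^{(0)})\,(x - x^{(0)})$$

which satisfies

$$\nabla \hat f(x) = \nabla f(x^{(0)}) + \nabla^2 f(x^{(0)})\,(x - x^{(0)}) = \ell(x).$$

We can again use $\frac{1}{t} I$ to replace $\nabla^2 f(x^{(0)})$:

$$\tilde f(x) = f(x^{(0)}) + \nabla f(x^{(0)})^T (x - x^{(0)}) + \frac{1}{2t}\|x - x^{(0)}\|_2^2$$

Then we set the gradient of $\tilde f(x)$ to zero:

$$\nabla \tilde f(x) = \nabla f(x^{(0)}) + \frac{1}{t}(x - x^{(0)}) = 0,$$

and we get

$$x^{+} = x^{(0)} - t\,\nabla f(x^{(0)}).$$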
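A quick numerical check of this derivation. The sketch builds $\tilde f$ for the made-up one-dimensional function $f(x) = x^4/4 + x^2$, with $x^{(0)} = 1.5$ and t = 0.1 chosen arbitrarily. It then compares the closed-form minimizer $x^{+} = x^{(0)} - t f'(x^{(0)})$ with a brute-force grid search over the surrogate.

```python
def f(x: float) -> float:
    # Hypothetical smooth convex test function.
    return x ** 4 / 4 + x ** 2


def df(x: float) -> float:
    return x ** 3 + 2 * x


def surrogate(y: float, x0: float, t: float) -> float:
    # f~(y) = f(x0) + f'(x0) * (y - x0) + (y - x0)^2 / (2t)
    return f(x0) + df(x0) * (y - x0) + (y - x0) ** 2 / (2 * t)


x0, t = 1.5, 0.1
x_plus = x0 - t * df(x0)  # closed form: one gradient descent step

# Brute-force minimization of the surrogate on a grid of spacing 1e-4 around x0.
grid = [x0 - 2.0 + i * 1e-4 for i in range(40001)]
y_best = min(grid, key=lambda y: surrogate(y, x0, t))
print(x_plus, y_best)  # the two agree to within the grid spacing
```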

1.3 How to choose step size t(k)

If t is too large, the algorithm may diverge. If it is too small, the algorithm converges very slowly. So how do we choose a suitable t?
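A minimal illustration uses the made-up function $f(x) = \frac{L}{2}x^2$ with L = 4, where each gradient step is $x^{+} = (1 - tL)\,x$. Any $t > 2/L$ gives $|1 - tL| > 1$, so the iterates blow up. With $t = 1/L$ this particular function reaches the minimizer in one step. A tiny t barely moves.

```python
def run_fixed_step(t: float, L: float = 4.0, x0: float = 1.0, iters: int = 50) -> float:
    """Gradient descent on f(x) = (L/2) x^2 (gradient L*x); returns |x| after `iters` steps."""
    x = x0
    for _ in range(iters):
        x = x - t * L * x  # each step multiplies x by (1 - t*L)
    return abs(x)


for t in (0.6, 0.25, 0.001):  # t > 2/L, t = 1/L, t much smaller than 1/L
    print(t, run_fixed_step(t))
```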

- Fixed t: use the same step size at every iteration.

- Exact line search, which can be used if f(x) is simple enough that this one-dimensional minimization is cheap:

$$t = \arg\min_{s \ge 0} f(x - s\nabla f(x))$$

- In most cases, however, we use backtracking line search:


(i) Fix $\beta \in (0, 1)$ and $\alpha \in (0, 1/2]$ (in practice, $\alpha = 1/2$ is a common choice).

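(ii) At each iteration, start with $t = 1$, and while

$$f(x - t\nabla f(x)) > f(x) - \alpha t \|\nabla f(x)\|_2^2,$$

shrink $t = \beta t$. Otherwise, perform the gradient descent update:

$$x^{+} = x - t\nabla f(x).$$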

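From the backtracking line search condition, every accepted step satisfies

$$f(x^{+}) \le f(x) - \alpha t \|\nabla f(x)\|_2^2 < f(x) \quad \text{whenever } \nabla f(x) \ne 0,$$

so each gradient descent step strictly decreases the objective by a guaranteed amount (sufficient decrease).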
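Steps (i) and (ii) can be sketched as follows in plain Python. The test function $f(x) = 5x_1^2 + \frac{1}{2}x_2^2$ (gradient Lipschitz constant L = 10), the choice β = 0.8 and the name `backtracking_gd` are illustrative assumptions. The function returns the accepted step sizes along with the final iterate so they can be inspected.

```python
from typing import Callable, List, Tuple

Vector = List[float]


def backtracking_gd(f: Callable[[Vector], float], grad: Callable[[Vector], Vector],
                    x0: Vector, alpha: float = 0.5, beta: float = 0.8,
                    iters: int = 100) -> Tuple[Vector, List[float]]:
    """Gradient descent with backtracking line search; returns final x and accepted steps."""
    x = list(x0)
    steps: List[float] = []
    for _ in range(iters):
        g = grad(x)
        g_sq = sum(gi * gi for gi in g)  # ||grad f(x)||^2
        if g_sq == 0.0:
            break
        t = 1.0  # (ii) start every iteration from t = 1
        # Shrink t while f(x - t*g) > f(x) - alpha * t * ||g||^2.
        while f([xi - t * gi for xi, gi in zip(x, g)]) > f(x) - alpha * t * g_sq:
            t *= beta
        x = [xi - t * gi for xi, gi in zip(x, g)]  # gradient descent update
        steps.append(t)
    return x, steps


# Hypothetical test problem with minimizer (0, 0).
def f(x: Vector) -> float:
    return 5.0 * x[0] ** 2 + 0.5 * x[1] ** 2


def grad_f(x: Vector) -> Vector:
    return [10.0 * x[0], x[1]]


x_final, steps = backtracking_gd(f, grad_f, [1.0, 1.0])
print(f(x_final), min(steps))
```

Starting from t = 1 lets the search take long steps when the function allows it. The shrink factor β trades the number of function evaluations per iteration against how close the accepted t gets to the largest admissible value.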

1.4 Convergence analysis

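Theorem: [Lipschitz continuous gradient, fixed t] If f is convex and differentiable, and $\nabla f$ is Lipschitz continuous with constant $L > 0$, then gradient descent with fixed step size $t \le 1/L$ satisfies

$$f(x^{(k)}) - f^* \le \frac{\|x^{(0)} - x^*\|_2^2}{2tk}.$$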

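proof:

- From the Lipschitz property of $\nabla f(x)$, we have

$$\|\nabla f(x) - \nabla f(y)\|_2 \le L\|x - y\|_2 \quad\Longrightarrow\quad f(y) \le f(x) + \nabla f(x)^T (y - x) + \frac{L}{2}\|y - x\|_2^2$$

- From the definition of the gradient descent method, we know

$$x^{+} = x - t\nabla f(x)$$

- From the convexity of f(x), we have

$$f(x^*) \ge f(x) + \nabla f(x)^T (x^* - x)$$

which can be written as

$$f(x) \le f(x^*) + \nabla f(x)^T (x - x^*)$$

Combining these three facts, we plug $y = x^{+}$ into the first one and use $t \le 1/L$ (so $1 - Lt/2 \ge 1/2$):

$$f(x^{+}) \le f(x) - \Big(1 - \frac{Lt}{2}\Big) t \|\nabla f(x)\|_2^2 \le f(x) - \frac{t}{2}\|\nabla f(x)\|_2^2 \le f^* + \nabla f(x)^T (x - x^*) - \frac{t}{2}\|\nabla f(x)\|_2^2$$

Since $x^{+} - x^* = (x - x^*) - t\nabla f(x)$, the right-hand side equals $f^* + \frac{1}{2t}\big(\|x - x^*\|_2^2 - \|x^{+} - x^*\|_2^2\big)$. So for every iteration $i$ we have

$$f(x^{(i)}) - f^* \le \frac{1}{2t}\Big(\|x^{(i-1)} - x^*\|_2^2 - \|x^{(i)} - x^*\|_2^2\Big)$$

Summing all of these inequalities over $i = 1, \dots, k$, the right-hand side telescopes:

$$\sum_{i=1}^{k} \big(f(x^{(i)}) - f^*\big) \le \frac{1}{2t}\Big(\|x^{(0)} - x^*\|_2^2 - \|x^{(k)} - x^*\|_2^2\Big) \le \frac{\|x^{(0)} - x^*\|_2^2}{2t}$$

Then, since $f(x^{(i)})$ is nonincreasing in $i$,

$$f(x^{(k)}) - f^* \le \frac{1}{k}\sum_{i=1}^{k} \big(f(x^{(i)}) - f^*\big) \le \frac{\|x^{(0)} - x^*\|_2^2}{2tk}.$$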
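To sanity-check the O(1/k) bound on a function that is convex but not strongly convex, the sketch below runs fixed-step gradient descent on the one-dimensional Huber function. The Huber function is an illustrative choice: its gradient clip(x, −1, 1) is 1-Lipschitz (L = 1), and its minimum is $f^* = 0$ at $x^* = 0$. The starting point and t = 0.5 are also arbitrary. Each gap $f(x^{(k)}) - f^*$ is compared with $\|x^{(0)} - x^*\|_2^2 / (2tk)$.

```python
def huber(x: float) -> float:
    # Convex; minimized at x* = 0 with f* = 0; only linear (not strongly convex) for |x| > 1.
    return 0.5 * x * x if abs(x) <= 1.0 else abs(x) - 0.5


def huber_grad(x: float) -> float:
    # clip(x, -1, 1), which is 1-Lipschitz, so L = 1.
    return max(-1.0, min(1.0, x))


L = 1.0
t = 0.5 / L  # any fixed step t <= 1/L
x0 = 10.0
x = x0
gaps, bounds = [], []
for k in range(1, 51):
    x = x - t * huber_grad(x)
    gaps.append(huber(x))                          # f(x(k)) - f*, with f* = 0
    bounds.append((x0 - 0.0) ** 2 / (2 * t * k))   # ||x(0) - x*||^2 / (2tk)

print(all(g <= b for g, b in zip(gaps, bounds)))  # True
```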

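Theorem: [Lipschitz continuous gradient, backtracking] If f is convex and differentiable, and $\nabla f$ is Lipschitz continuous with constant $L > 0$, then gradient descent with backtracking line search (with $\alpha = 1/2$) satisfies

$$f(x^{(k)}) - f^* \le \frac{\|x^{(0)} - x^*\|_2^2}{2t_{\min}k},$$

where $t_{\min} = \min\{1, \beta/L\}$.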

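proof:

Everything is the same as in the fixed-t proof except for the value of the step size. From the inequality used in the previous proof, for $t_0 = 1/L$ and every $t \in (0, t_0]$, we have

$$f(x - t\nabla f(x)) \le f(x) - \Big(1 - \frac{Lt}{2}\Big) t \|\nabla f(x)\|_2^2 \le f(x) - \frac{t}{2}\|\nabla f(x)\|_2^2.$$

So with $\alpha = 1/2$, the backtracking condition is never violated once $t \le t_0 = 1/L$. Backtracking starts at $t = 1$ and stops at the first accepted value. Either that value is $t = 1$, or the previous trial $t/\beta$ was rejected, which forces $t/\beta > t_0$, i.e. $t > \beta t_0 = \beta/L$. Hence every accepted step satisfies

$$t \ge t_{\min} = \min\{1, \beta/L\}.$$

Each iteration still satisfies $f(x^{(i)}) - f^* \le \frac{1}{2t_i}\big(\|x^{(i-1)} - x^*\|_2^2 - \|x^{(i)} - x^*\|_2^2\big)$, and the bracket is nonnegative. Replacing $1/t_i$ by the larger $1/t_{\min}$ and summing as before gives the bound.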

Theorem: [Lipschitz continuous gradient and strong convexity] If f(x) is m-strongly convex and $\nabla f(x)$ is L-Lipschitz, then gradient descent with fixed step size $t \le \frac{2}{m + L}$ or with backtracking line search satisfies

$$f(x^{(k)}) - f^* \le c^k \frac{L}{2}\|x^{(0)} - x^*\|_2^2$$

with 0<c<1

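proof (fixed step size): f is m-strongly convex with an L-Lipschitz gradient, and $\nabla f(x^*) = 0$. Therefore the gradient satisfies

$$\nabla f(x)^T (x - x^*) \ge \frac{mL}{m+L}\|x - x^*\|_2^2 + \frac{1}{m+L}\|\nabla f(x)\|_2^2.$$

Then we have

$$\|x^{+} - x^*\|_2^2 = \|x - x^*\|_2^2 - 2t\nabla f(x)^T (x - x^*) + t^2\|\nabla f(x)\|_2^2 \le \Big(1 - \frac{2tmL}{m+L}\Big)\|x - x^*\|_2^2 + t\Big(t - \frac{2}{m+L}\Big)\|\nabla f(x)\|_2^2 \le c\,\|x - x^*\|_2^2,$$

where $c = 1 - \frac{2tmL}{m+L}$, and the last step uses $t \le \frac{2}{m+L}$. Iterating gives $\|x^{(k)} - x^*\|_2^2 \le c^k \|x^{(0)} - x^*\|_2^2$. Finally, the Lipschitz gradient gives a quadratic upper bound on f; with $\nabla f(x^*) = 0$ this yields

$$f(x^{(k)}) - f^* \le \frac{L}{2}\|x^{(k)} - x^*\|_2^2 \le c^k \frac{L}{2}\|x^{(0)} - x^*\|_2^2.$$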
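The geometric rate can be observed on the illustrative quadratic $f(x) = \frac{1}{2}(m x_1^2 + L x_2^2)$ with m = 1 and L = 10. The sketch uses the largest allowed fixed step $t = 2/(m+L)$. With that step the contraction factor from the proof is $c = 1 - \frac{2tmL}{m+L} = 81/121$. For this particular function each gap shrinks by exactly that factor, and every gap stays below the theorem's bound $c^k \frac{L}{2}\|x^{(0)} - x^*\|_2^2$.

```python
from typing import List

m, L = 1.0, 10.0                      # illustrative strong-convexity / Lipschitz constants
t = 2.0 / (m + L)                     # largest fixed step allowed by the theorem
c = 1.0 - 2.0 * t * m * L / (m + L)   # contraction factor from the proof


def f(x: List[float]) -> float:
    # m-strongly convex with L-Lipschitz gradient; f* = 0 at x* = (0, 0).
    return 0.5 * (m * x[0] ** 2 + L * x[1] ** 2)


def grad(x: List[float]) -> List[float]:
    return [m * x[0], L * x[1]]


x0 = [1.0, 1.0]
dist0_sq = x0[0] ** 2 + x0[1] ** 2    # ||x(0) - x*||^2
x = list(x0)
gaps, bounds = [], []
for k in range(1, 41):
    g = grad(x)
    x = [x[0] - t * g[0], x[1] - t * g[1]]
    gaps.append(f(x))                              # f(x(k)) - f*
    bounds.append(c ** k * (L / 2) * dist0_sq)     # theorem's bound

print(c)                      # 81/121, about 0.669
print(gaps[-1] / gaps[-2])    # also about 0.669: the gap shrinks geometrically
```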

1.5 Summary

- With a Lipschitz gradient, gradient descent has convergence rate O(1/k), i.e., to get $f(x^{(k)}) - f^* \le \epsilon$ we need $O(1/\epsilon)$ iterations.
- With a Lipschitz gradient and strong convexity, gradient descent converges linearly (geometrically, as $c^k$), i.e., it needs only $O(\log(1/\epsilon))$ iterations.

Reference:

http://www.stat.cmu.edu/~ryantibs/convexopt/lectures/05-grad-descent.pdf

http://www.seas.ucla.edu/~vandenbe/236C/lectures/gradient.pdf

http://www.stat.cmu.edu/~ryantibs/convexopt/scribes/05-grad-descent-scribed.pdf
